Convergence thesis between longtermism and neartermism

By weeatquince🔸 @ 2021-12-30T16:03 (+100)

Epistemic status: some vague musings.

Introduction

Here is a post I wrote very quickly a little while back on the question: would it be surprising if the best things to do as a longtermist and as a non-longtermist converged and turned out to be the same?

It takes a many weak arguments approach to considering the question. I do not make the case that longtermism and neartermism will converge only that it would not in fact be “surprising and suspicious” if they did converge [1]. Making the stronger case that they do converge would require a bit more time and empirical data gathering work and this is mostly a thought exercise so far.

Longtermism (roughly) defined here as: the moral belief that future wellbeing matters significantly and given the future might be extremely big it is extremely morally important to make sure it goes well. Neartermism is defined as not longtermism.

Top 10 weak arguments

SAME PROBLEMS

1. Neglectedness – the world is chronically short-term

We should focus on issues that are high in scale, neglected and tractable. The world is chronically short term. Like extremely so. At least anecdotally I can say that politicians I have worked with have not put much thought into anything beyond their expected 2 year tenure in office. The UK national risk register looks 2 years ahead. Here is a whole bunch of literature pointing out how politicians are short term. For some reason this even applies to politicians and society planning their own futures – as can be seen by the neglect of improving care for elderly people with mental illness (source).

Even just considering people alive today most of society is not planning for 95% of our future. This is due to both presentism bias and the nature of our modern democracies. Given high neglectedness it should not be surprising that focusing on making sure the future goes well matters a lot even if you ignore the very long-term, so it should not be surprising if longtermist suggestions of how to do good are the same as suggestions from non-longtermists.

2. The same problems are relevant for both – e.g. X-risk estimates are high

Consider Toby Ord’s estimates of existential risks. If these estimates are accurate and roughly constant over the next 100 years then your chance of dying any year from an existential catastrophe is roughly 1/600 or 0.16667% (there are some reasons to think it is higher or lower but let's call that a reasonable estimate). Let's compare to say your chance of dying from malaria at 0.00518% (source) or traffic accident if you live in the UK at 0.0026% (source and source). Your chance of dying in a global catastrophe would be over an order of magnitude higher than your chance of dying from any other big killer that can be listed.
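The arithmetic above can be checked with a rough sketch. It assumes the headline one-in-six-per-century figure from The Precipice as the input, and compares a simple linear split (which gives the 1/600 used above) with a constant annual hazard that compounds to one in six over 100 years. The function names are illustrative only, and the malaria and traffic figures are simply the ones quoted in the post.

```python
# Rough check of the annual-risk comparison. All inputs are assumptions
# taken from the post, not independently verified figures.
XRISK_PER_CENTURY = 1 / 6        # assumed: one-in-six chance over 100 years
MALARIA_ANNUAL_PCT = 0.00518     # annual chance of dying, in percent (from the post)
UK_TRAFFIC_ANNUAL_PCT = 0.0026   # annual chance of dying, in percent (from the post)


def annual_linear(total_risk: float, years: int = 100) -> float:
    """Spread the total risk evenly across the years (the post's 1/600)."""
    return total_risk / years


def annual_constant_hazard(total_risk: float, years: int = 100) -> float:
    """Annual risk p such that 1 - (1 - p) ** years equals total_risk."""
    return 1 - (1 - total_risk) ** (1 / years)


linear_pct = annual_linear(XRISK_PER_CENTURY) * 100
hazard_pct = annual_constant_hazard(XRISK_PER_CENTURY) * 100

print(f"linear split:    {linear_pct:.4f}% per year")
print(f"constant hazard: {hazard_pct:.4f}% per year")
print(f"ratio to malaria:    {linear_pct / MALARIA_ANNUAL_PCT:.0f}x")
print(f"ratio to UK traffic: {linear_pct / UK_TRAFFIC_ANNUAL_PCT:.0f}x")
```

Under either reading the annual figure comes out more than ten times the malaria figure, which is all the "over an order of magnitude" claim needs; the result is only as good as the century-level estimate fed in.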

Many non-longtermists are also interested in topics such as preventing pandemics. We are already seeing convergent problems and should not be surprised to see more such convergence.

SAME SOLUTIONS

3. The power-law rule of having impact could apply to approaches to doing good.

Our ability to have impact is heavy tailed (source), maybe power law distributed, with some ways of having impact being much more powerful than other ways of having impact. This means some ways of doing good are vastly superior to other ways of doing good. If we think this applies to the tools that we use to impact the world then we should not be surprised if there are some super-powered approaches to doing good and if the same really high impact tool is great for both the long-term and the short-term. We might a priori expect that, if examined, some tool such as becoming prime minister, promoting growth, fixing science, or something else, might be many times more impactful than other ways of doing good and that the positive effect on both the short-term and the long-term will be huge.

4. We already see the same super-powered tools suggested for both, like growth or EA community building

Tyler Cowen on the 80K podcast suggests that our top moral priority should be preserving and improving humanity’s long-term future and that the way to do that is to maximise the rate of sustainable economic growth (source). Meanwhile Hauke Hillebrandt on the EA forum argues that the best way to improve global development is to focus on economic growth (source).

EA meta – such as EA community building or practical exploratory cause prioritisation research – could be the best thing for both the near and long term. So could moral circle expansion or promoting animal welfare (before humans spread wild animal suffering across the galaxy).

We are already seeing convergent solutions and should not be surprised to see more such convergence.

5. Last dollar spending

The EA community has $bns, some of that is committed to be spent in the next few decades. When considering impact we shouldn’t think about the best place for the marginal additional $1 to be spent on existing projects but where the very last $ will go. Longtermist EAs are already suggesting they are short on projects to fund and that technical AI safety work is well funded and perhaps they should focus on carbon off-sets or generally making the world go well in the short-term. We should not be surprised if the last $ of longtermist spending is pegged to something that is broadly good in the short-term, in fact this is already happening.

EMPIRICISM & LONG-TERM PLANNING

6. Need short feedback loops so the things that are most measurable are most measurable on both

Humans are very bad at doing good. Like horrendous. Arguably most social programs don’t work (source source) and most people trying to do good are both failing to have an impact and convinced that they are having an impact. International development has spent a century trying to have any impact: trying and failing to drive economic growth, developing the tools needed to focus on things that can be changed, like health, and then taking decades and decades to reach the point where we know how to do that well.

In short the history of people trying to do good shows that if you do not instigate short feedback loops to demonstrate you are having an impact then it is maybe best to assume you are not having that much of an impact.

Given how challenging it is to set up good feedback loops and prove impact we should not be too surprised if the most impactful set of actions fall within a very small range of actions that are easy to demonstrate impact of.

For example at an extreme end maybe the only things we can be sure are positively affecting the world are actions that can be measured with RCTs or similar such studies. At a less extreme end perhaps we should be building up EA's capacity to do good by working out how to influence policy to lead to positive social outcomes and track and judge the impact of that across a range of topics, perhaps starting with things that are more measurable and easier to track (this seems to be a bit of what OpenPhil is trying to do). Perhaps if we do this we work out there are limits to what can be demonstrated to be impactful (pushing us towards more short-term focus), or perhaps we learn to demonstrate impact even in speculative areas (pushing us towards more long-term focus).

7. Long term plans naturally converge

Looking forward beyond a certain point in time has no appreciable impact on the shape of long term plans. Try it.

Try making the best plan you can accounting for all the souls in the next trillion years, but no longer. Done that? Great! Now make the best plan but only take into account the next hundred billion years, so ignoring 90% of the previously considered future. Done? Does it look any different? Likely it is exactly the same plan. Now try a billion years and a million years? How different does that look? What about the best plan for 100000 years? What about 1000 years or 100 years? At what point does it look different?

Plans that consider orders of magnitude more of the future clearly converge with one another. It is not clear how much of the future you can ignore before plans start to look different but convergence between a 100 year plan and a 1×10^12 year plan should not be surprising.

8. Long term planning involves setting 10-25 year goals, and this is significantly shorter than the lives of existing people today.

In practice long term planning rarely goes into details beyond a roughly 10-25 year time window. This is not to say that long-term planners do not care beyond that window, just that the best way to improve the world (or whatever the plan is being made about) beyond 25 years is to have a really clear vision of what a world in 25 years time looks like, such that future actors at that 25 year point are left in a very strong position to prepare for the next 25 years, and so on. Planning for the long term by making longer-term detailed plans just appears not to work very well (see here). As such, making the world in 10 years time or 25 years time as strong as possible to deal with the challenges beyond 10 or 25 years from now is likely the best way to plan for the long-term.

Now it is notable that 10-25 years is significantly shorter than just the lifespan of existing humans (70-80 years). If a neartermist only cared about existing humans, they might note that perhaps 66% of the value is beyond the point that it is easy to plan directly for. As such they might also put significant weight on long-term plans that enable future actors to make good decisions. It would not be shocking if our imaginary neartermist’s attempt to capture that 66% of human value looked very similar to a longtermist trying to capture the 99.9+% of human value they feel lies outside the length of time humans can easily directly plan for.
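One way to see where a figure like 66% could come from is the sketch below. It is only an illustration under strong assumptions that are not stated in the post: value is spread evenly across the years considered, and the person in question has a whole 70-80 year lifespan ahead of them. The function name is hypothetical.

```python
def share_beyond_horizon(total_years: float, horizon_years: float) -> float:
    """Fraction of evenly spread value that falls after the planning horizon.

    Assumes (for illustration only) that each year carries equal value.
    """
    if not 0 <= horizon_years <= total_years:
        raise ValueError("horizon must lie between 0 and total_years")
    return (total_years - horizon_years) / total_years


# Neartermist view: only the lifespan of existing people counts.
for lifespan in (70, 75, 80):
    for horizon in (10, 25):
        share = share_beyond_horizon(lifespan, horizon)
        print(f"lifespan {lifespan}, horizon {horizon}: {share:.0%} beyond the plan")

# Longtermist view: a trillion-year future with the same 25 year horizon.
print(f"{share_beyond_horizon(1e12, 25):.10%} beyond the plan")
```

With a 25 year horizon and a 75 year lifespan roughly two thirds of the value sits beyond the plan, and with a 10 year horizon the share is higher still. In both the neartermist and longtermist cases most of the value lies outside the window that can be planned for directly, which is the similarity the paragraph above points to.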

This similarity might be even stronger for individuals working in areas where planning timescales are short, like policy-making.

9. Experts and common sense suggest that it is plausible that the best thing you can do for the long term is to make the short term go well

It is not unusual to hear people say that the best thing you can do for the long term is to make the short term good. This seems a reasonable common sense view.

Even people who are trusted and considered experts within the EA community express this view. For example here Peter Singer suggests that “If we are at the hinge of history, enabling people to escape poverty and get an education is as likely to move things in the right direction as almost anything else we might do; and if we are not at that critical point, it will have been a good thing to do anyway” (source)

DOING BOTH IS BEST

10. Why not both?

There is also a case for taking a mixed strategy and doing both. Arguments for this include:

- Worldview diversification – OpenPhil makes a strong case for worldview diversification: “putting significant resources behind each worldview that we find highly plausible”. (source) This prevents diminishing marginal returns and has a range of other benefits, such as:

- Managing uncertainty – If we are highly uncertain about what has impact we should take a robust strategy and do at least some things in all domains.

- Maximise learning – exploration of how to do good has high value so we should be doing a bit of everything right now and not focus on one thing. In the future we might have a better view on how to do good than we do now.

- Epistemic modesty – We have a difference of view in the community. Given epistemic modesty (source) we should be distributing resources as per the views of our informed peers, which means distributing across cause areas.

- Moral trades – We have a difference of view in the community. We should be willing to make moral trades if we are going to maximise our comparative advantages; this means doing some of both if it is advantageous for us to do so (source)

- Appeal to experts – trusted thoughtful actors, in particular OpenPhil, support doing both

My views

Despite making the case for convergence being plausible this does still feel a bit contrived. I am sure if you put effort into it you could make a many weak arguments approach to show that nearterm and longterm approaches to doing good will diverge.

Also I think we should not expect the same answer for all people. Maybe a programmer considering the EA question might get divergence (e.g. between doing AI safety research or earning to give depending on whether they are longtermist or neartermist) but maybe a policy maker will get convergence (e.g. in both cases the person should improve institutions they have the power to influence).

In short I don’t think we yet know how to do the most good and there is a case for much more exploratory research (source, and see my view on this here). Practical exploratory research seems hugely neglected right now, and given the amount of money EA causes might have access to, it is perhaps even more of a priority.

Possible implications

I would like to see near-term EAs like GiveWell looking more at the long-term implications of the interventions they recommend and more speculative but potentially higher return interventions such as policy change or preventing disasters (I believe they have mostly put-off doing this again). I do think there are better ways of doing good than bednets from a near-term point of view if we actually start looking for them.

I would like to see long-term EAs do more work to set mid-term goals and make practical plans of things they can do to generally build a resilient world (see my views on how to do this here). I do think we need to find ways of doing good beyond AI safety from a long-term point of view and we can do that if we actually start looking for them.

I would like to see more focus on the kinds of interventions that look great across all domains: meta-science, improving institutional decision making, economic growth, (safe) research and development of biotech, moral circle expansion, etc. Honestly I would not at all be surprised if any of these areas becomes a key focus for EA in a few years.

I would like to see less of a community split between neartermist and longtermist. It may be that for some folk this is not the deciding factor on how they best go do good in the world. For example it seems odd to me that the OpenPhil neartermist team has a different skill set from the longtermist team (economists and philosophers respectively), and I am sure they could, and do, learn from each other.

I genuinely love how this community brings together people working across a diverse range of cause areas to collaborate, share resources, and focus on doing the most good and I worry about organisations splitting into longtermist and neartermist camps and think there is so much to do together.

I am excited to see where we can go from here.

Thank you to Charlotte Siegman, Adam Bales and David Thorstad for input and feedback.

FOOTNOTE

[1] The "surprising and suspicious convergence" terminology is from Beware surprising and suspicious convergence – Gregory Lewis.

There is some implication that longtermism and neartermism convergence would be surprising and suspicious on p5 of The Case for Strong Longtermism - GPI Working Paper June 2021 (2). If convergence is true (in some cases for some people) then the case for Strong Longtermism would be trivially true and as such would perhaps not be a particularly useful decision heuristic in the world today (for those cases or those people).

antimonyanthony @ 2022-01-02T04:01 (+25)

I found several of these arguments uncompelling. While you acknowledge that your approach is one of "many weak arguments," the overall case doesn't seem persuasive.

Specifically:

#1: This seems to be a non sequitur. If relatively short-term problems are also neglected, why exactly does this suggest that interventions that improve the long-term future would converge with those that improve the relatively short-term (yet not extremely short-term)? All that you've shown here is that we shouldn't be surprised if interventions that improve the relatively short term may be quite different from those that people typically prioritize.

#2: Prima facie this is fair enough. But I'd expect the tractability of effectively preventing global catastrophic and/or x-risks to not be high enough for this to be competitive with malaria nets, if one is only counting present lives.

#4: Conversely, though, we've seen that the longtermist community has identified AI as one of the most plausible levers for positive or negative impact on the long term future, and increasing economic growth will on average increase AI capabilities more than safety. Re: EA meta, if your point is that getting more people into EA increases efforts on more short- and long-term interventions, sure, but this is entirely consistent with the view that the most effective interventions to improve the long vs short term will diverge. Maybe the most effective EA meta work from a longtermist perspective is to spread longtermism specifically, not EA in broad strokes. Tensions between short-term animal advocacy and long-term (wild) animal welfare have already been identified, e.g., here and here.

#5: For those who don't have the skills for longtermist direct work and instead purely earn to give, this is fair enough, but my impression is that longtermists don't focus much on effective donations anyway. So this doesn't persuade me that longtermists with the ability to skill up in direct work that aims at the long term would just as well aim at the short term.

#6: If you grant longtermist ethics, by the complex cluelessness argument, aiming at improving the short term doesn't help you avoid this feedback loop problem. Your interventions at the short term will still have long term effects that probably dominate the short term effects, and I don't see why the feedback on short term effectiveness would help you predict the sign and magnitude of the long term effects. (Having said this, I don't think longtermists have really taken complex cluelessness for long term-aimed interventions as seriously as they should, e.g., see Michael's comment here. My critiques of your post here should not be taken as a wholesale endorsement of the most popular longtermist interventions.)

#8: I agree with the spirit of this point, that long term plans will be extremely brittle. But even if the following is true:

making the world in 10 years time or 25 years time as strong as possible to deal with the challenges beyond 10 or 25 years from now is likely the best way to plan for the long-term

I would expect "as strong as possible" to differ significantly from a near- vs longtermist perspective. Longtermists will probably want to build the sorts of capacities that are effective at achieving longtermist goals (conditional on your other arguments not providing a compelling case to the contrary), which would be different from those that non-longtermists are incentivized to build.

#9: I don't agree that this is "common sense." The exact opposite seems common sense to me - if you want to optimize X, it's common sense that you should do things aimed at improving X, not aimed at Y. This is analogous to how "charity begins at home" doesn't seem commonsensical, i.e., if the worst off people on this planet are in poor non-industrialized nations, it would be counterintuitive if the best way to help them were to help people in industrialized nations. Or if the best way to help farmed and wild animals were to help humans. (Of course there can be defeaters to common sense, but I'm addressing your argument on its own terms.)

weeatquince @ 2022-01-02T10:29 (+16)

Without going into specific details of each of your counter-arguments, your reply made me ask myself: why would it be that across a broad range of arguments I consistently find them more compelling than you do? Do we disagree on each of these points or is there some underlying crux?

I expect if there is a simple answer here it is that my intuitions are more lenient towards many of these arguments as I have found some amount of convergence to be a thing time and time again in my life to date. Maybe this would be an argument #11 and it might go like this:

#11. Having spent many years doing EA stuff, convergence keeps happening to me.

When doing UK policy work the coalition we built essentially combined long- and near-termist types. The main belief across both groups seemed to be that the world is chronically short term and if we want to prevent problems (x-risks, people falling into homelessness) we need to fix government and make it less short-term. (This looks like evidence of #1 happening in practice). This is a form of improving institutional decision making (which looks like #6).

Helping government make good risk plans, e.g. for pandemics, came very high up the list of Charity Entrepreneurship's neartermist policy interventions to focus on. It was tractable and reasonably well evidenced. Had CE believed that Toby's estimates of risks were correct it would have looked extremely cost-effective too. (This looks like #2).

People I know seem to work in longtermist orgs, where talent is needed, but donate to neartermist orgs, where money is needed. (This looks like #5).

In the EA meta and community building work I have done covering both long- and near-term causes seems advantageous. For example Charity Entrepreneurship's model (talent + ideas > new charities) is based on regularly switching cause areas. (This looks like #6.)

Etc.

It doesn’t feel like I really disagree with anything concrete that you wrote (except maybe I think you overstate the conflict between long- and near-term animal welfare folk), more that you and I have different intuitions on how much all these points push towards convergence being possible, or at least not suspicious. And maybe those intuitions, as intuitions often do, arise from different lived experiences to date. So hopefully the above captures some of my lived experiences.

Greg_Colbourn @ 2022-01-01T17:13 (+20)

X-risk as a focus for neartermism

I think it's unfortunate how x-risks are usually lumped in with longtermism, and how longtermism is talked about a lot more as a top-level EA cause area these days, and x-risk less so. This is unfortunate considering that, arguably, x-risk is very important from a short-term (or at least medium-term) perspective too.

As OP says in #2, according to our best estimates, many (most?) people's chances of dying in a global catastrophe over the next 10-25 years are higher than many (any?) other regular causes of death (car accidents, infectious disease, heart disease and cancer for those of median age or younger, etc). As Carl Shulman outlines in his common sense case for x-risk work on the 80k podcast:

If you believe that the risk of human extinction over the next century is something like one in six (as Toby Ord suggests is a reasonable figure in his book The Precipice), then it would be worth the US government spending up to $2.2 trillion to reduce that risk by just 1%, in terms of [present] American lives saved alone.

See also Martin Trouilloud's comment arguing that "a great thing you can do for the short term is to make the long term go well", and this post arguing that "the ‘far future’ is not just the far future" [due to near term transformative technology and x-risk].

I think X-risk should be reinstated as a top-level EA cause area in its own right, distinct from Longtermism. I worry that having it as a sub-level concern, under the broad heading of longtermism, will lead to people seeing it as less urgent than it is; "longtermism" giving the impression that we have plenty of time to figure things out (when we really don't, in expectation).

michaelchen @ 2022-01-03T06:41 (+7)

If you believe that the risk of human extinction over the next century is something like one in six (as Toby Ord suggests is a reasonable figure in his book The Precipice)

To be precise, Toby Ord's figure of one in six in The Precipice refers to the chance of existential catastrophe, not human extinction. Existential catastrophe also includes events such as unrecoverable collapse.

Greg_Colbourn @ 2022-04-07T09:44 (+6)

Scott Alexander argues for the focus to be put (back) on x-risk here.

weeatquince @ 2022-01-02T00:25 (+5)

Great point!

Greg_Colbourn @ 2022-01-01T17:16 (+2)

This especially considering that an all-things-considered (and IMO conservative) estimate for the advent of AGI is 10% chance in (now) 14 years! This is a huge amount of short-term risk! It should not be considered as (exclusively) part of the longtermist cause area.

MichaelDickens @ 2021-12-31T17:58 (+14)

No comment on the specific arguments given, but I like the way this post is structured: a list of weak arguments, grouped into categories, each short enough that they're easy to read quickly.

JackM @ 2021-12-31T08:48 (+14)

On needing short feedback loops - I'd be interested to hear what longtermist work/interventions you think the community is doing that doesn't achieve these feedback loops. I'd then find it easier to evaluate your point.

I'm worried that relying on short feedback loops would then mean not doing interventions to avoid existential catastrophes, because in a sense the only way to judge the effectiveness of such interventions is to observe their counterfactual impact on reducing the incidence of such catastrophes, which is pretty impossible as if one catastrophe happens we're done for.

weeatquince @ 2021-12-31T17:35 (+10)

Maybe see it as a spectrum. For example:

- V. strong need for empirical evidence – Probably no x-risk work meets this bar

- Medium need for empirical evidence – I expect the main x-risk things that could meet this bar are policy change or technical safety research, where that work can be shown to be immediately useful in non x-risk type situations (e.g. improving prediction ability, vaccine technologies, etc) as there is some feedback loop.

- Weak need for empirical evidence – I expect most x-risk stuff that currently happens meets this bar except for some speculative research without clear goals or justification (perhaps some of FHI work) or things taken on trust (perhaps choosing to fund AI research where all such research is kept private, like MIRI)

- No need for empirical evidence – All x-risk work would meet this bar

(Above I characterised it as a single bar for quality of evidence and once something passes the bar you are good to go but obviously in practice it is not that simple as you will weigh quality of evidence against other factors: scale, cost-effectiveness, etc.)

The higher you think the bar is the more likely it is that longtermist things and neartermist things will converge. At the very top they will almost certainly converge as you are stuck doing mostly things that can be justified with RCTs or similar levels of evidence. At the medium level convergence seems more likely than at the weak level.

I think the argument is that there are very good reasons to think the bar ought to be very very high, so convergence shouldn't be that unlikely.

MichaelStJules @ 2021-12-31T19:32 (+8)

The higher you think the bar is the more likely it is that longtermist things and neartermist things will converge. At the very top they will almost certainly converge as you are stuck doing mostly things that can be justified with RCTs or similar levels of evidence.

I'm not sure anything would be fully justified with RCTs or similar levels of evidence, since most effects can't be measured in practice (especially far future effects), so we're left using weaker evidence for most effects or just ignoring them.

weeatquince @ 2022-01-02T00:15 (+2)

Yes good point. In practice that bar is too high to get much done.

Linch @ 2021-12-31T20:04 (+2)

The RCTs or other high-rigor evidence that would be most exciting for long-term impact probably aren't going to be looking at the same evidence base or metrics that would be the best for short-term impact.

Linch @ 2021-12-31T20:04 (+8)

Here's my rushed, high-level take. I'll try to engage with specific subpoints later.

My views

Despite making the case for convergence being plausible this does still feel a bit contrived. I am sure if you put effort into it you could make a many weak arguments approach to show that nearterm and longterm approaches to doing good will diverge.

I feel like this is by far the most important part of this post and I think it should be highlighted more/put more upfront. The entire rest of the article felt like a concerted exercise in motivated reasoning (including the introductory framing and the word "thesis" in the title) and I almost didn't read it or bother to comment as a result; I'm glad I did read to this section however.

In short I don’t think we yet know how to do the most good and there is a case for much more exploratory research

I agree with this. As a result I was surprised at the "possible implications" section, since it presumes that the main conclusion of the post is correct.

MaxGhenis @ 2021-12-31T03:55 (+7)

I would like to see near-term EAs like GiveWell looking more at the long-term implications of the interventions they recommend and more speculative but potentially higher return interventions such as policy change

I wholeheartedly agree. Last month, GiveDirectly criticized GiveWell for ignoring externalities of cash transfers, and while I think this is sort of unfair given they also ignore externalities of malaria prevention, I genuinely don't know what kind of multiplier to put on various effective short-term interventions.

In my post, Mortality, existential risk, and universal basic income, I summarized some research connecting poverty to patience, trust, and attitudes toward global cooperation. This evidence is sparse and mostly (though not entirely) correlational, and the connection to longtermism is speculative at this point. The EA community could fill some of this knowledge gap by commissioning surveys on longtermist attitudes, and utilizing either natural experiments or RCTs to examine the effect of short-term interventions in shaping these attitudes.

MichaelStJules @ 2021-12-31T19:04 (+6)

9. Experts and common sense suggest that it is plausible that the best thing you can do for the long term is to make the short term go well

It is not unusual to hear people say that the best thing you can do for the long term is to make the short term good. This seems a reasonable common sense view.

Even people who are trusted and considered experts within the EA community express this view. For example here Peter Singer suggests that “If we are at the hinge of history, enabling people to escape poverty and get an education is as likely to move things in the right direction as almost anything else we might do; and if we are not at that critical point, it will have been a good thing to do anyway” (source)

It's unfortunate that Singer didn't expand more on this, since we're left to speculate, and my initial reaction is that this is false, and on a more careful reading, probably misleading.

- How is he imagining reducing poverty and increasing education moving things in the right direction? Does it lead to more fairly distributed influence over the future which has good effects, and/or a wider moral circle? Is he talking about compounding wealth/growth? Does it mean more people are likely to contribute to technological solutions? But what about accelerating technological risks?

- Does he think "enabling people to escape poverty and get an education" moves things in the right direction as much as almost anything else in expectation, in case we have both likelihood and "distance" to consider?

- Maybe "enabling people to escape poverty and get an education is as likely to move things in the right direction as almost anything else we might do", but "almost anything else" could leave a relatively small share of interventions that we can reliably identify as doing much better for the long term (and without also picking things that backfire overall).

- Is he just expressing skepticism that longtermist interventions actually reliably move things in the right direction at all without backfiring, e.g. due to cluelessness/deep uncertainty? I'm most sympathetic to this, but if this is what he meant, he should have said so.

Also, I don't think Singer is an expert in longtermist thinking and longtermist interventions, and I have not seen him engage a lot with longtermism. I could be wrong. Of course, that may be because he's skeptical of longtermism, possibly justifiably so.

weeatquince @ 2022-01-02T00:19 (+2)

Agree

I think the weak argument here is not: Singer has thought about this a lot and has an informed view. It is maybe something like: There is an intuition that convergence makes sense, and even smart folk (e.g. Singer) have this intuition, and intuitions are some evidence.

FWIW I don’t think that Peter Singer piece is a great piece.

Guy Raveh @ 2021-12-30T23:17 (+6)

I really like this, though I haven't had the time yet to read all the details. I do tend to feel a convergence should occur between near term and long term thinking, though perhaps for different reasons (the most important of which is that to actually get the long term right, we need to empower most or all of the population to voice their views on what "right" means - and empowering the current population is also what non-longtermists generally try to do).

I also specifically like the format and style. They are almost the same as in other posts, but somehow this is much more legible for me than many (most?) EA forum posts.

JackM @ 2021-12-31T08:42 (+4)

I would like to see more focus on the kinds of interventions that look great across all domains: meta-science, improving institutional decision making, economic growth, (safe) research and development of biotech, moral circle expansion, etc. Honestly I would not at all be surprised if any of these areas becomes a key focus for EA in a few years.

What is your argument for doing things that look good across all domains?

Arguing for doing these things seems different to your argument in point 10 where you say do things that look good from a neartermist perspective and (different?) things that look good from a longtermist perspective - implying that these are likely to be different to each other. Am I understanding you correctly? Point 10 then seems to undermine the general point you're trying to make about convergence.

Linch @ 2021-12-31T20:09 (+6)

I'm personally pretty skeptical that much of IIDM, economic growth, and meta-science is net positive, am confused about moral circle expansion (though intuitively feel the most positive about it on this list), and while I agree that "(safe) research and development of biotech is good," I suspect the word "safe" is doing a helluva lot of work here.

Also the (implicit) moral trade perspective here assumes that there exist large gains from trade from doing a bunch of investment into new cause/intervention areas that naively look decent on both NT and LT grounds; it's not clear to me (and I'd tentatively bet against) that this is a better deal than people working on the best cause/intervention areas for each.

weeatquince @ 2022-01-02T00:23 (+4)

Why are you sceptical of IIDM, meta-science, etc.? Would love to hear arguments against.

The short argument for is that insofar as making the future go well means dealing with uncertainty and things that are hard to predict, then these seem like exactly the kinds of interventions to work on (as set out here).

JackM @ 2022-01-02T13:23 (+3)

A common criticism of economic growth and scientific progress is that they entail sped-up technological development, which could mean greater x-risk. This is why many EAs prefer differential growth/progress and focusing on specific risks.

On the other hand, there are arguments that economic growth and technological development could reduce x-risk and help us achieve existential security (e.g. here), and Will MacAskill alludes to a similar argument in his recent EA Global fireside chat at around the 7 minute mark.

There seems to be disagreement amongst prominent EAs, and it's quite unclear overall.

With regards to IIDM I don't see why that wouldn't be net positive.

Linch @ 2022-01-02T19:39 (+2)

Yeah since almost all x-risk is anthropogenic, our prior for economic growth and scientific progress is very close to 50-50, and I have specific empirical (though still not very detailed) reasons to update in the negative direction (at least on the margin, as of 2022).

With regards to IIDM I don't see why that wouldn't be net positive.

I think this disentanglement by Lizka might be helpful*, especially if (like me) your empirical views about external institutions are a bit more negative than Lizka's.

*Disclaimer: I supervised her when she was writing this

weeatquince @ 2022-01-03T01:03 (+4)

Hi. Thank you so much for the link, somehow I had missed that post by Lizka. Was great reading :-)

To flag, however, I am still a bit confused. Lizka's post says "Personally, I think IIDM-style work is a very promising area for effective altruism", so I don’t understand how you go from that to IIDM being net-negative. I also don’t understand what the phrase "especially if (like me) your empirical views about external institutions are a bit more negative than Lizka's" means (like if you think institutions are generally not doing good then IIDM might be more useful, not less).

I am not trying to be critical here. I am genuinely very keen to understand the case against. I work in this space so it would be really great to find people who think this is not useful and to understand their point of view.

IanDavidMoss @ 2022-01-03T01:40 (+4)

Not to speak for Linch, but my understanding of Lizka's overall point is that IIDM-style work that is not sufficiently well-targeted could be net-negative. A lot of people think of IIDM work primarily from a tools- and techniques-based lens (think e.g. forecasting), which means that more advanced tools could be used by any institution to further its aims, no matter whether those aims are good/productive or not. (They could also be put to use to further good aims but still not result in better decisions because of other institutional dysfunctions.) This lens is in contrast to the approach that Effective Institutions Project is taking to the issue, which considers institutions on a case-by-case basis and tries to understand what interventions would cause those specific institutions to contribute more to the net good of humanity.

Linch @ 2022-01-03T05:37 (+2)

This lens is in contrast to the approach that Effective Institutions Project is taking to the issue, which considers institutions on a case-by-case basis and tries to understand what interventions would cause those specific institutions to contribute more to the net good of humanity.

I'm excited about this! Do people on the Effective Institutions Project consider these institutions from a LT lens? If so, do they mostly have a "broad tent" approach to LT impacts, or more of a "targeted/narrow theory of change" approach?

IanDavidMoss @ 2022-01-03T12:59 (+4)

Yes, we have an institutional prioritization analysis in progress that uses both neartermist and longtermist lenses explicitly and also tries to triangulate between them (in the spirit of Sam's advice that "Doing Both Is Best"). We'll be sending out a draft for review towards the end of this month and I'd be happy to include you in the distribution list if interested.

With respect to LT impact/issues, it is a broad tent approach although the theory of change to make change in an institution could be more targeted depending on the specific circumstances of that institution.

Linch @ 2022-01-03T05:29 (+2)

I appreciate the (politer than me) engagement!

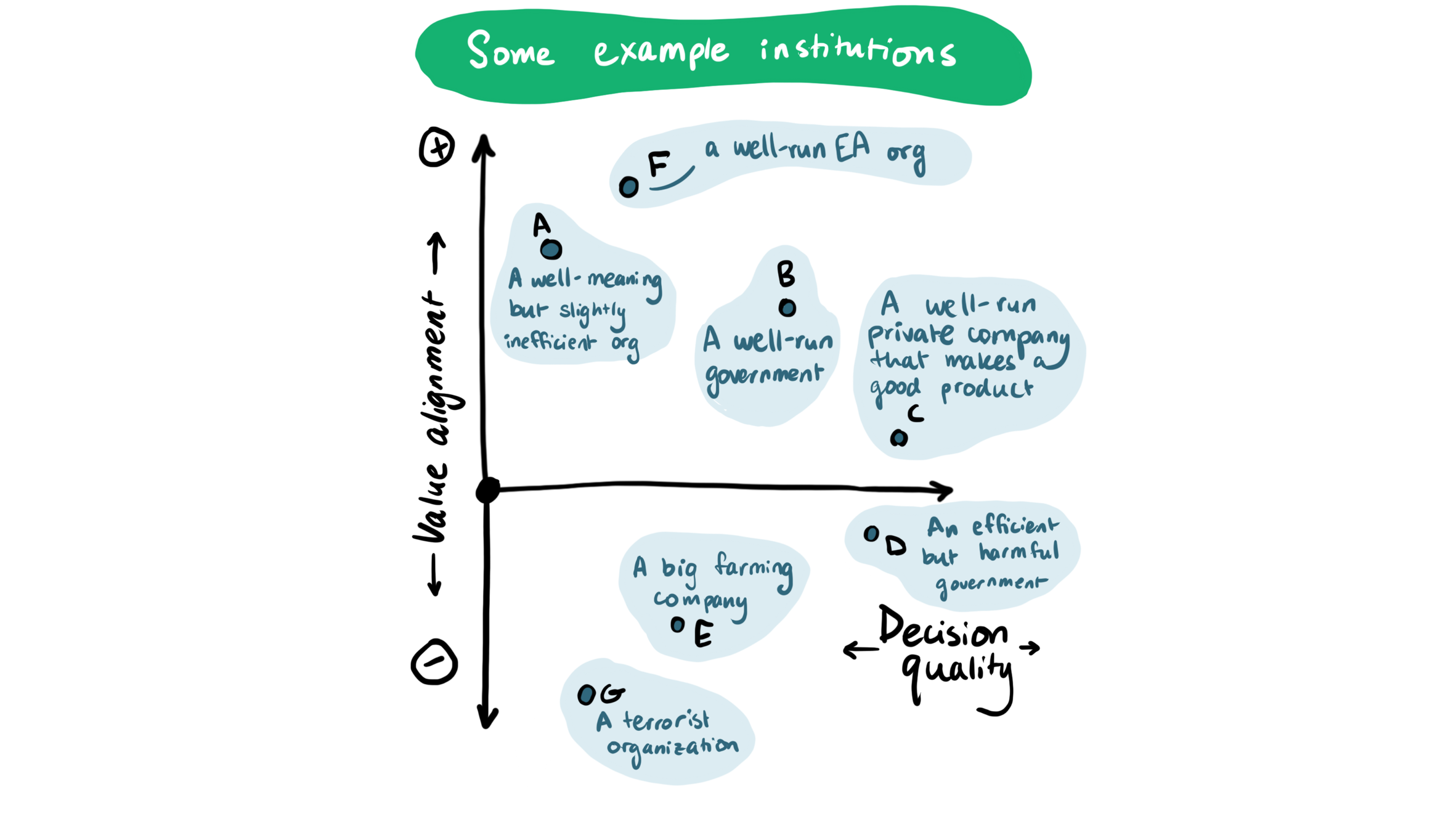

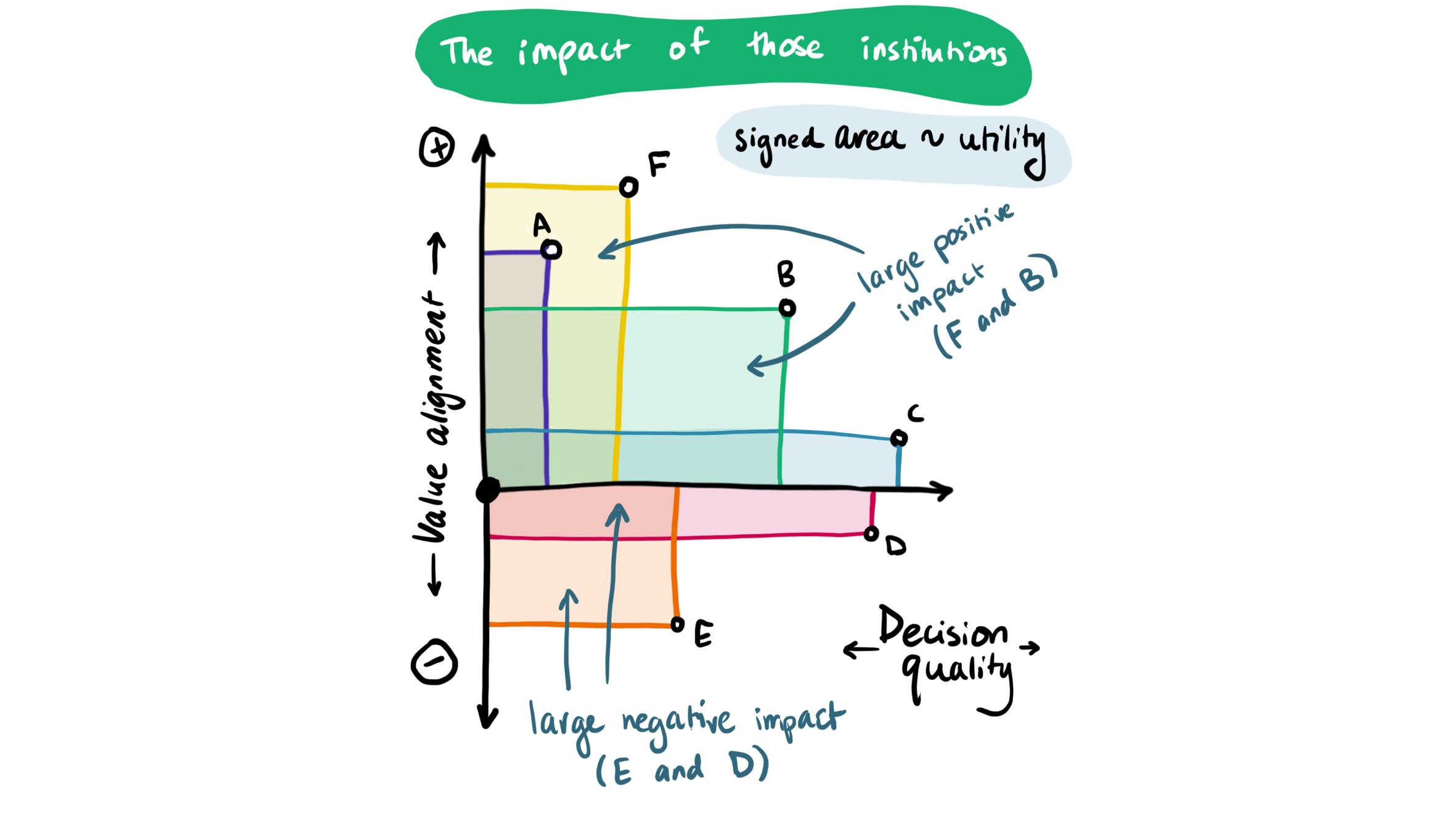

These are the key diagrams from Lizka's post:

The key simplifying assumption is one in which decision quality is orthogonal to value alignment. I don't believe this is literally true, but it is a good start. MichaelA et al.'s BIP (Benevolence, Intelligence, Power) ontology* is also helpful here.

If we think of Lizka's B in the first diagram ("a well-run government") as only weakly positive or neutral on the value alignment axis from an LT perspective, and most other dots as negative, we'd get a simplified result in which what Lizka calls "un-targeted, value-neutral IIDM" -- that is, improving decision quality of unaligned actors (which is roughly what much of EA work/grantmaking in IIDM in practice looks like, e.g. in forecasting or alternative voting) -- has broadly the same effect as improving technological progress or economic growth.

I'm more optimistic about IIDM that's either more targeted (e.g. specialized in improving the decision quality of EA institutions, or perhaps via picking a side in great power stuff) or value-aligned (e.g. having predictive setups where we predict that certain types of IIDM work differentially benefit the LT future over other goals an institution can have; I think your(?) work on "institutions for future generations" plausibly falls here).

One way to salvage these efforts' LT impact is claiming that in practice work that apparently looks like "un-targeted, value-neutral IIDM" (e.g. funding academic work in forecasting or campaigning for approval voting) is in practice pretty targeted or value-gnostic, e.g. because EAs are the only ones who care about forecasting.

A secondary reason (not covered by Lizka's post) I'm leery is that influence goes both ways, and I worry that LT people who get stuck on IIDM may (eventually) get corrupted by the epistemics or values of institutions they're trying to influence, or that of other allies. I don't think this is a dominant consideration however, and ultimately I'd reluctantly lean towards EA being too small by ourselves to save the world without at least risking this form of corruption**.

*MichaelA wrote this while he was at Convergence Analysis. He now works at RP. As an aside, I do think there's a salient bias I have where I'm more likely to read/seriously consider work by coworkers than other work of equivalent merit, unfortunately I do not currently have active plans to fix this bias.

**Aside 2: I'm worried that my word choice in this post is too strong, with phrases like "corruption" etc. I'd be interested in more neutral phrasing that conveys the same concepts.

weeatquince @ 2022-01-03T11:36 (+2)

Super thanks for the lengthy answer.

I think we are mostly on the same page.

Decision quality is orthogonal to value alignment. ... I'm more optimistic about IIDM that's either more targeted or value-aligned.

Agree. And yes to date I have focused on targeted interventions (e.g. improving government risk management functions) and value-aligning orgs (e.g. institutions for Future Generations).

[Could] claiming that in practice work that apparently looks like "un-targeted, value-neutral IIDM" (e.g. funding academic work in forecasting or campaigning for approval voting) is in practice pretty targeted or value-gnostic.

Agree. FWIW I think I would make this case about approval voting, as I believe aligning powerful actors' (elected officials') incentives with the population's incentives is a form of value-aligning. Not sure I would make this case for forecasting, but could be open to hearing others make the case.

So where if anywhere do we disagree?

I'm leery is that influence goes both ways, and I worry that LT people who get stuck on IIDM may (eventually) get corrupted by the epistemics or values of institutions they're trying to influence, or that of other allies.

Disagree. I don’t see that as a worry. I have not seen any evidence of any cases of this, and there are 100s of EA-aligned folk in the UK policy space. Where are you from? I have heard this worry so far only from people in the USA; maybe there are cultural differences or this has been happening there. Insofar as it is a risk I would assume it might be less bad for actors working outside of institutions (campaigners, lobbyists), so I do think more EA-aligned institutions in this domain could be useful.

If we think of Lizka's B in the first diagram ("a well-run government") as only weakly positive or neutral on the value alignment axis from an LT perspective

I think a well-run government is pretty positive. Maybe it depends on the government (as you say maybe there is a case for picking sides) and my experience is UK based. But, for example, my understanding is that there is some evidence that improved diplomacy practice is good for avoiding conflicts, and that mismanagement of central government functions can lead to periods of great instability (e.g. financial crises). Also, a government is a collection of many smaller institutions, and when you get into the weeds of it, it becomes easier to pick and choose the sub-institutions that matter more.

weeatquince @ 2021-12-31T17:53 (+2)

What is your argument for doing things that look good across all domains?

I think the rough argument would be

- EA is likely to start focusing on new cause areas. EA has a significant amount of money (£bn) and is unsure (especially for longtermists) how best to spend it, and one of the key ways of finding more places to give is to explore new cause areas. Also many EAs think we will likely move to new/adjacent cause areas (source). Also EA has underinvested in cause research (link).

- Cause areas that look promising for both neartermists and longtermists are a good bet. They are likely to have the things that neartermists filter for (e.g. quality of evidence) and that longtermists filter for (e.g. potential to leverage large impact in the long-run). And at minimum we should not dismiss them because we view the convergence as "surprising and suspicious".

Point 10 then seems to undermine the general point you're trying to make about convergence.

Yes, to some degree the different arguments here might undermine each other. I just listed the 10 most plausible arguments I could think of. I made no effort to make sure they didn’t contradict one another (although I think contradictions are minimal).

If you want to reconcile Point 10 with the general narrative you could say something like: As a community we should do at least some of both. So for an individual with a specific skill set the relevant question would be personal strength and choosing the cause she/he can have the most impact on (rather than longtermism or not). To be honest I am not sure I 100% agree with that but it might help a bit.

Martin Trouilloud @ 2021-12-31T00:58 (+3)

Great post. #9 is interesting because the inverse might also be true, making your idea even stronger: maybe a great thing you can do for the short term is to make the long term go well. X-risk interventions naturally overlap with maintaining societal stability, because 1) a rational global order founded in peace and mutual understanding, which relatively speaking we have today more than ever before, reduces the probability of global catastrophes; and less convincingly 2) a catastrophe that nevertheless doesn’t kill everyone would indefinitely set the remaining population back at square one for all neartermist cause areas. Maintaining the global stability we’ve enjoyed since the World Wars is a necessary precondition for the coterminous vast improvements in global health and poverty, and it seems like a great bulk of X-risk work boils down to that. Your #2 is also relevant.