Open Phil EA/LT Survey 2020: Other Findings

By Eli Rose🔸 @ 2021-09-09T01:01 (+40)

We'll be posting results from this survey to the frontpage one at a time, since a few people asked us not to share all of them at once and risk flooding the Forum. You can see the full set of results in our sequence.

This post is the last in a series on Open Phil’s 2020 survey of a subset of people doing (or interested in) longtermist priority work. See the first post for an introduction and a discussion of the high-level takeaways from this survey. Previous posts discussed the survey’s methodology, background statistics about our respondent pool, our findings on what helped and hindered our respondents re: their positive impact on the world, our findings on impact from EA groups specifically, and our findings about when and how our respondents first learned about EA/EA-adjacent ideas. This post discusses miscellaneous other findings that we thought were important.

You can read this whole sequence as a single Google Doc here, if you prefer having everything in one place. Feel free to comment either on the doc or on the Forum. Comments on the Forum are likely better for substantive discussion, while comments on the doc may be better for quick, localized questions.

Summary of Key Results

- Many of our respondents think that high-school outreach programs would have been useful for them and people like them. We asked “What do you think the best age for you to hear about EA/EA-adjacent ideas would have been?” The average response was 16, compared with an average of 20 for the age at which respondents reported actually first hearing about these ideas. Few respondents (~3%) said it would have been better for them to first hear of these ideas later than they actually did. Respondents often mentioned that hearing about EA before starting university would have been particularly helpful because they could have planned how to use their time at university better, e.g. what to major in. Similar themes came up in our respondents’ answers to several other questions (see more below).

Results

Satisfaction with the EA Community

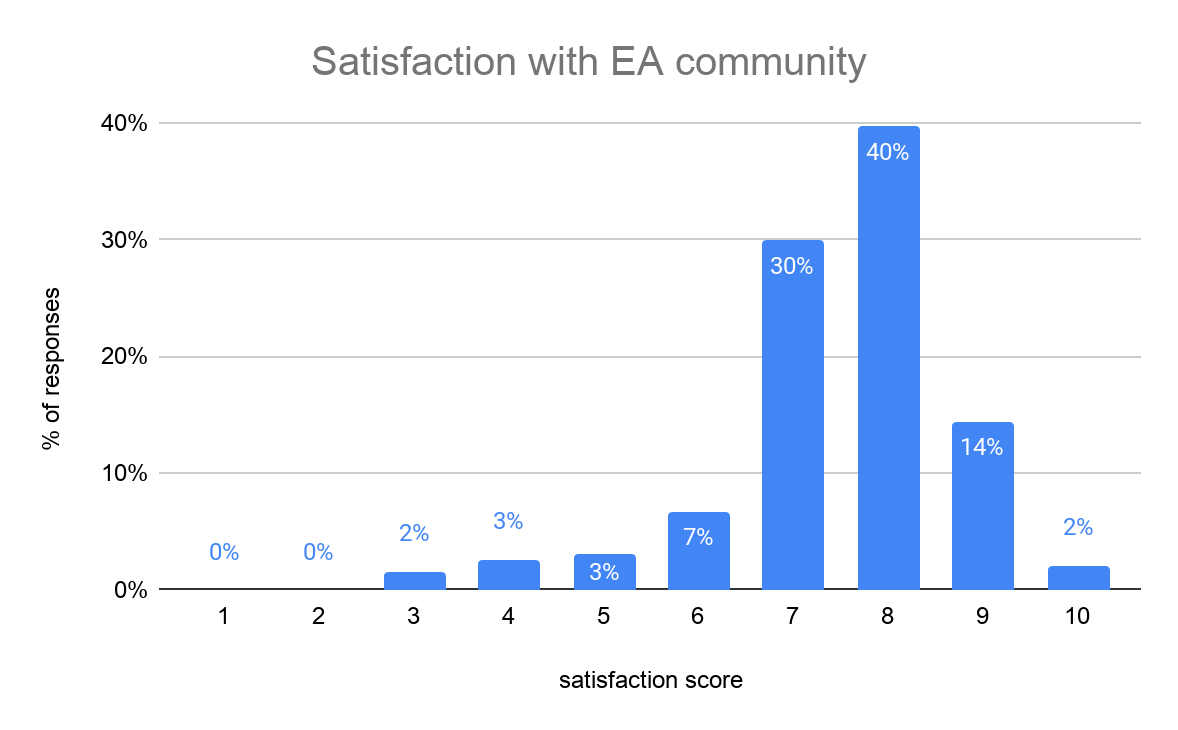

We asked about our respondents’ satisfaction with the EA community (focusing specifically on that community, as opposed to adjacent ones).

What is your overall satisfaction with the effective altruism community? (Here we mean the effective altruism community in particular, not including adjacent communities, contrary to the definition we use elsewhere in the survey. Feel free to skip if you don’t think you have much of a relationship with that community.)

The possible responses were on a numeric scale: 1 through 10. ~90% of our respondents answered this question. Here’s the distribution of responses.

The mean of this distribution is ~7.5.

Best Age for First Exposure

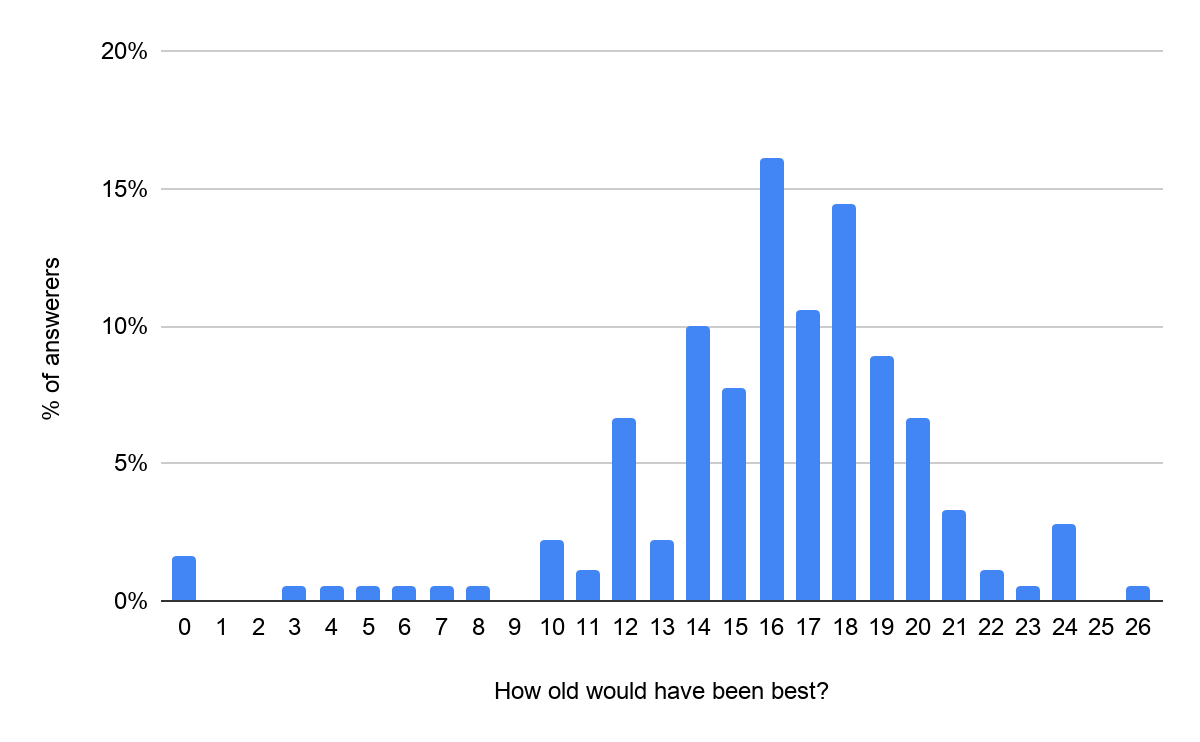

In addition to asking our respondents when they first learned about EA/EA-adjacent ideas, we also asked them what they think the best age for them to have learned about EA/EA-adjacent ideas would have been.

What do you think would have been the best age for you to hear about EA or EA-adjacent ideas, and why? (For whatever you take “best” to mean.)

82% of our respondents answered this question. The answers were free text, so I translated each one into a single number to allow a quantitative comparison. When a range was given, I took its midpoint; for example, I translated the answer “in high school” to 16, the middle of the typical age range for high-school students.

The results had the following distribution.

The mean and median are both ~16.

The small values in the left tail are real: three respondents said it would have been best for them to be introduced at age 0, and a handful of others gave various ages below 10. Six other respondents just said something like “the earlier the better” (though from context, I guessed they didn’t mean under age 10). To make this graph, I arbitrarily translated “the earlier the better” to age 12. If I instead ignore those responses, the mean shifts up slightly to 16.3 and the median becomes 16.5.
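For readers who want to see the coding rules concretely, here is a minimal Python sketch of the translation described above: a stated range becomes its midpoint, “the earlier the better” becomes an arbitrary stand-in of 12, and passing `None` instead drops those answers. This is an illustration under stated assumptions, not the script actually used for the survey; the phrase table, the sample answers, and the function names `to_age` and `summarize` are all hypothetical.

```python
from statistics import mean, median

# Hypothetical coding table: free-text phrase -> (youngest, oldest) age in the range.
# These entries are illustrative assumptions, not the survey's real coding sheet.
PHRASE_RANGES = {
    "in high school": (14, 18),
    "early university": (18, 20),
}
EARLIER_THE_BETTER = "the earlier the better"

# Hypothetical answers, invented for illustration only (not survey data).
SAMPLE_ANSWERS = ["in high school", "15", "18", "the earlier the better", "0"]


def to_age(answer, earlier_value=12):
    """Translate one free-text answer into a single age, or None to drop it."""
    text = answer.strip().lower()
    if text == EARLIER_THE_BETTER:
        # Arbitrary stand-in value; pass None to ignore these answers instead.
        return earlier_value
    if text in PHRASE_RANGES:
        low, high = PHRASE_RANGES[text]
        return (low + high) / 2  # a range becomes its midpoint
    return float(text)  # otherwise assume the answer is already a number


def summarize(answers, earlier_value=12):
    """Return (mean, median) of the translated ages, skipping dropped answers."""
    ages = [to_age(a, earlier_value) for a in answers]
    ages = [age for age in ages if age is not None]
    return mean(ages), median(ages)


if __name__ == "__main__":
    print(summarize(SAMPLE_ANSWERS))                      # "earlier" coded as 12
    print(summarize(SAMPLE_ANSWERS, earlier_value=None))  # "earlier" ignored
```

On this made-up sample, dropping the placeholder answers moves the mean from 12.2 to 12.25 and the median from 15 to 15.5. The direction matches the sensitivity check in the text, but the actual figures there come from the real responses, not from this sketch.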

Many respondents said they wished they had known about EA before university, since it would have affected their decisions about what to study. Some wished they had done their “soul-searching” before entering university, so they could have used their time there better. Some respondents who said that an age below 16 would have been ideal for them mentioned that they were already very interested in ethics and intellectual approaches to altruism at that age, and wished EA had been around for that stage of their thinking. A few of them specifically mentioned that, at that age, they had been dissatisfied with the impact of “conventionally altruistic” activities.

You might distrust this self-report evidence for several reasons. For example, you might think our respondents have a (possibly unconscious) desire to present themselves as “ideal EAs” who were always sympathetic to the ideas, or that they suffer from a “curse of knowledge”-like effect, remembering their former selves as holding beliefs more consistent with their present ones than was actually the case. For me, even after accounting for these biases, the self-reports still felt like a meaningful update about the promisingness of high-school outreach[1].

Five respondents (~3% of those who answered the question) said they wished they had been introduced at a later age than they actually were, specifically 2 to 4 years later. Three of the five were introduced at age 18 or below, so ~6% of respondents who were introduced at age 18 or below said they wished they had been introduced later (though this is based on very few data points).

Respondents’ Thoughts on Outreach

We asked our respondents directly how to attract people like them. In an attempt to get answers that were grounded in direct experience, we used a framing where we asked about “people very, very similar to you.”

Imagine that there’s a pool of other people who are very, very similar to you (with similar backgrounds, skills, interests, and personalities) who haven’t yet engaged with EA/EA-adjacent ideas. What advice would you give a person or organization who hoped to get more of those people to become involved in working on top-priority causes? If Open Phil were interested in making grants with the goal of getting more of those people involved, what advice would you give to us?

126/217 = ~58% of our respondents answered this question (it was in the optional section). My first takeaway from these responses was that they were very diverse; there’s no one intervention that everyone is screaming for. Some themes that jumped out at me:

- 10% of answers mentioned starting outreach younger, most mentioning high school.

- Additionally, some people who had attended SPARC or ESPR said they thought these programs had been effective for them and would recommend them to very similar people.

- 9% of answers had a strong element of “for people very similar to me, exposure should be enough, so just promoting the ideas widely would suffice.”

- 7% of answers mentioned providing prestigious ways to get involved.

- 8% of answers included something about improving or widening the reach of local and student groups.

Later in the survey, we also asked a multiple-choice question on “outreach efforts applicable to you personally,” which described each of several hypothetical outreach efforts in a paragraph and asked respondents to select the ones they thought would have been especially useful to them. The text of the question is below (it’s quite long).

Below are descriptions of several interventions/programs that have been proposed. After you read through the descriptions, we’ll ask you which, if any, seem like they would be or in the past would have been very useful to you personally, in terms of substantially increasing your expected lifetime positive impact, if implemented reasonably-but-not-implausibly well, and what versions, if any, would have been especially useful.

Structured education on EA and EA-adjacent ideas: I.e. programs to systematically teach people about EA, longtermism, and/or related ideas, as well as associated research techniques. This could include the technical/empirical aspects of these areas (e.g. things like “arguments about why transformative AI might happen soon” and “results of research on human biases that might affect career choice”) as well as philosophical (e.g. “introduction to population ethics and how that affects estimates of the moral value of the far future” and “arguments for and against digital minds being morally relevant”). The programs could be either short (a 4-day workshop) or long (a semester-long “course”), online or in-person, focused on relatively introductory or more advanced content, and focused on either broader or more specific content.

Outreach — summer program for high-schoolers: A summer program for high-schoolers to meet other high-schoolers interested in EA, longtermism, and/or related ideas, as well as learn more about the topics. The focus would be on deepening engagement for people who are already somewhat engaged with the ideas, through a variety of activities (e.g. possibly including socializing, learning, doing research, talking about what it’s like to pursue university studies in relevant areas, etc.).

Outreach — summer program for university students: A summer program for university students to meet other university students interested in EA, longtermism, and/or related ideas, as well as learn more about the topics. The focus would be on deepening engagement for people who are already somewhat-engaged with the ideas, through a variety of activities (e.g. socializing, learning, doing research, talking about what it’s like to pursue careers in relevant areas, etc.).

Outreach — more online content targeted at people in their teens or early twenties: This might include blogs, articles, advertising, or videos, aimed at increasing the odds people become engaged earlier, and that more people become engaged in total (rather than helping already-engaged people deepen their engagement).

Social events: A greater variety and frequency of opportunities to interact socially with other people pursuing longtermist priority work, for example at meetups, events, parties, or one-on-one, with the aim of making it easier to make friends with others pursuing those aims, and increasing the social interconnectedness of the community.

Meetings with a mentor: People who are newer to the relevant communities and ideas get assigned to be mentored by people with more experience via a matching process aimed at facilitating helpful mentorship relationships, and increasing the odds that the newer people get a chance to benefit from the support, advice, and connections that others might have. The mentor would not be deeply involved in the newer person’s projects, but would be available to meet regularly to provide support and advice.

Scholarships/early financial support: Efforts to provide people who seek to work on projects in priority cause areas with more/earlier financial support. This could include funding for students (merit- or need-based), or for people who are working independently, trying to start new projects, or think supplementary financial support in addition to income they earn doing their projects would be useful.

Dedicated resources for starting new projects: This category includes various forms of support dedicated specifically to people trying to start new projects, orgs, and other initiatives. Specific examples include (a) access to common resources needed for starting new projects/orgs like legal advice, fiscal sponsorship, etc. (b) sets of ideas from more experienced people in relevant areas about what projects/orgs seem most useful, (c) a point person for questions about unilateralism and ways projects could potentially be harmful, for the purpose of avoiding doing harm and showing the rest of the community one is sensitive to these harms, (d) social resources like a dedicated discussion space for people trying to start new projects, access to lists of potential co-founders and early employees, etc.

Intensive coaching/self-help/reasoning skills: In contrast to the “structured education” option, this one focuses on helping people learn and practice meta-skills related to thinking and emotion, like thinking about the opportunity cost of one’s choices, recognizing and addressing motivated reasoning, and generally increasing self-efficacy/agency. The focus would be on learning these skills in order to increase positive impact on the world. This could also include more dedicated support for brainstorming ways to address obstacles, reflect on personal fit, and trying out strategies to increase productivity. CFAR workshops and EA coaching are examples of efforts in this category.

Other: [as described by you]

Which of these seem like they would have been very useful to you personally?

- Structured education

- High school outreach

- University outreach

- Online outreach to young people

- Social events

- Mentorship

- Scholarships/early financial support

- Resources for new projects

- Coaching/self-help

- Other [as described by you]

- None of the above

And a follow-up question:

Of the ones which seem like they would have been very useful, which versions of them seem most helpful?

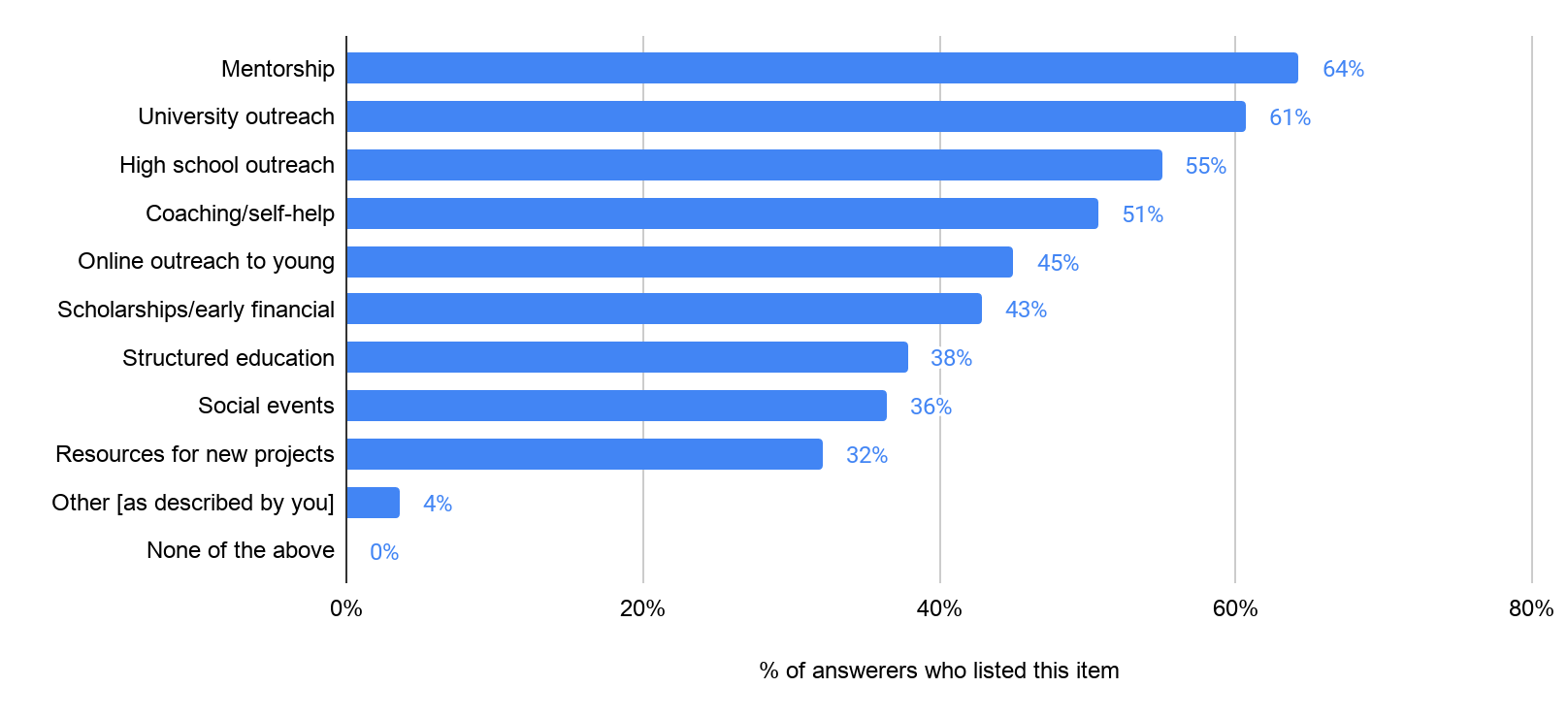

Selecting multiple options was allowed. 64% of our respondents selected one or more options for this question (it was in the optional section). Here is the distribution of results: