AISN #18: Challenges of Reinforcement Learning from Human Feedback, Microsoft’s Security Breach, and Conceptual Research on AI Safety

By Center for AI Safety, Dan H @ 2023-08-08T15:52 (+12)

This is a linkpost to https://newsletter.safe.ai/p/ai-safety-newsletter-18

Welcome to the AI Safety Newsletter by the Center for AI Safety. We discuss developments in AI and AI safety. No technical background required

Subscribe here to receive future versions.

Challenges of Reinforcement Learning from Human Feedback

If you’ve used ChatGPT, you might’ve noticed the “thumbs up” and “thumbs down” buttons next to each of its answers. Pressing these buttons provides data that OpenAI uses to improve their models through a technique called reinforcement learning from human feedback (RLHF).

RLHF is popular for teaching models about human preferences, but it faces fundamental limitations. Different people have different preferences, but instead of modeling the diversity of human values, RLHF trains models to earn the approval of whoever happens to give feedback. Furthermore, as AI systems become more capable, they can learn to deceive human evaluators into giving undue approval.

Here we discuss a new paper on the problems with RLHF and open questions for future research.

How RLHF is used to train language models. Large language models such as ChatGPT, Claude, Bard, LLaMA, and Pi are typically trained in two stages. During the “pretraining” stage, the model processes large amounts of text from the internet. The model learns to predict which word will appear next in the text, which provides it a broad grasp of grammar, facts, reasoning abilities, and even biases and inaccuracies present in the training data.

After pretraining, models are “fine-tuned” for particular tasks. Sometimes they’re fine-tuned to mimic demonstrations of approved behavior. Reinforcement learning from human feedback (RLHF) is a technique where the model provides an output and a human provides feedback on it. The feedback is relatively simple, such as a thumbs up or a ranking against another model output. Using this feedback, the model is refined to produce outputs which it expects would be preferred by human evaluators.

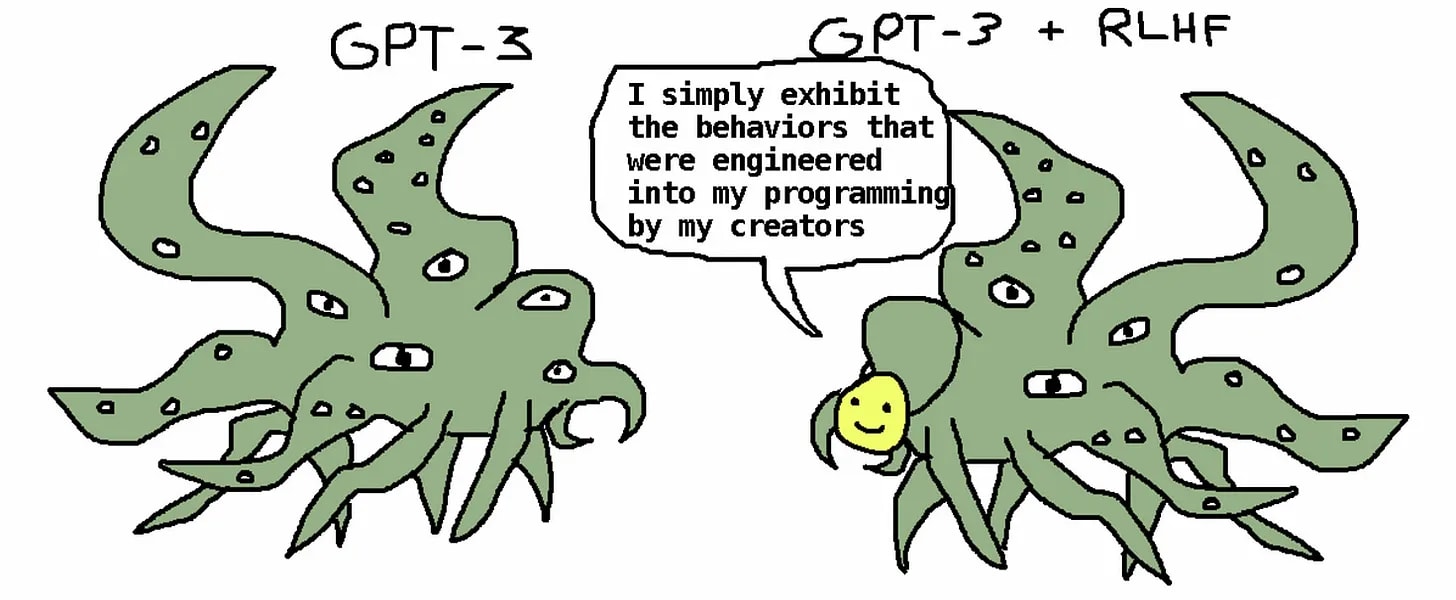

For a more detailed technical explanation of RLHF, see here. Alternatively, for a lighter treatment of the topic, see the “shoggoth” meme below.

Aligned with whom? Humans often disagree with one another. Our different values, cultures, and personal experiences shape our beliefs and desires. Instead of modeling different perspectives and considering tradeoffs between them, RLHF trains models to maximize expected approval given previous feedback data, creating two problems.

First, the people who give feedback to AI systems do not necessarily represent everyone. For example, OpenAI noted in 2020 that half of their evaluators were between 25 and 34 years old. Research shows that language models often reflect the opinions of only some groups of people. For example, one study found GPT-3.5 assigned a >99% approval rating to Joe Biden, while another showed GPT-3 supports the moral foundations of conservatives. Progress on this problem could be made by hiring diverse sets of evaluators and developing methods for improving the representativeness of AI outputs.

Second, by maximizing expected approval given previous feedback, RLHF ignores other ways of aggregating individual preferences, which could prioritize worse-off users. Recent work has explored this idea by using language models to find areas of agreement between people who disagree.

RLHF encourages AIs to deceive humans. We train AIs by rewarding them for telling the truth and punishing them for lying, according to what humans think is true. But when AIs know more than humans, this could make them say what humans expect to hear, even if it is false. Currently, when AIs are being rewarded by humans for being right, in practice they are really being rewarded for saying what we think is right; when we are uninformed or irrational, then we end up rewarding AIs for false statements that conform to our own false beliefs. This could train AIs to learn to deceive humans.

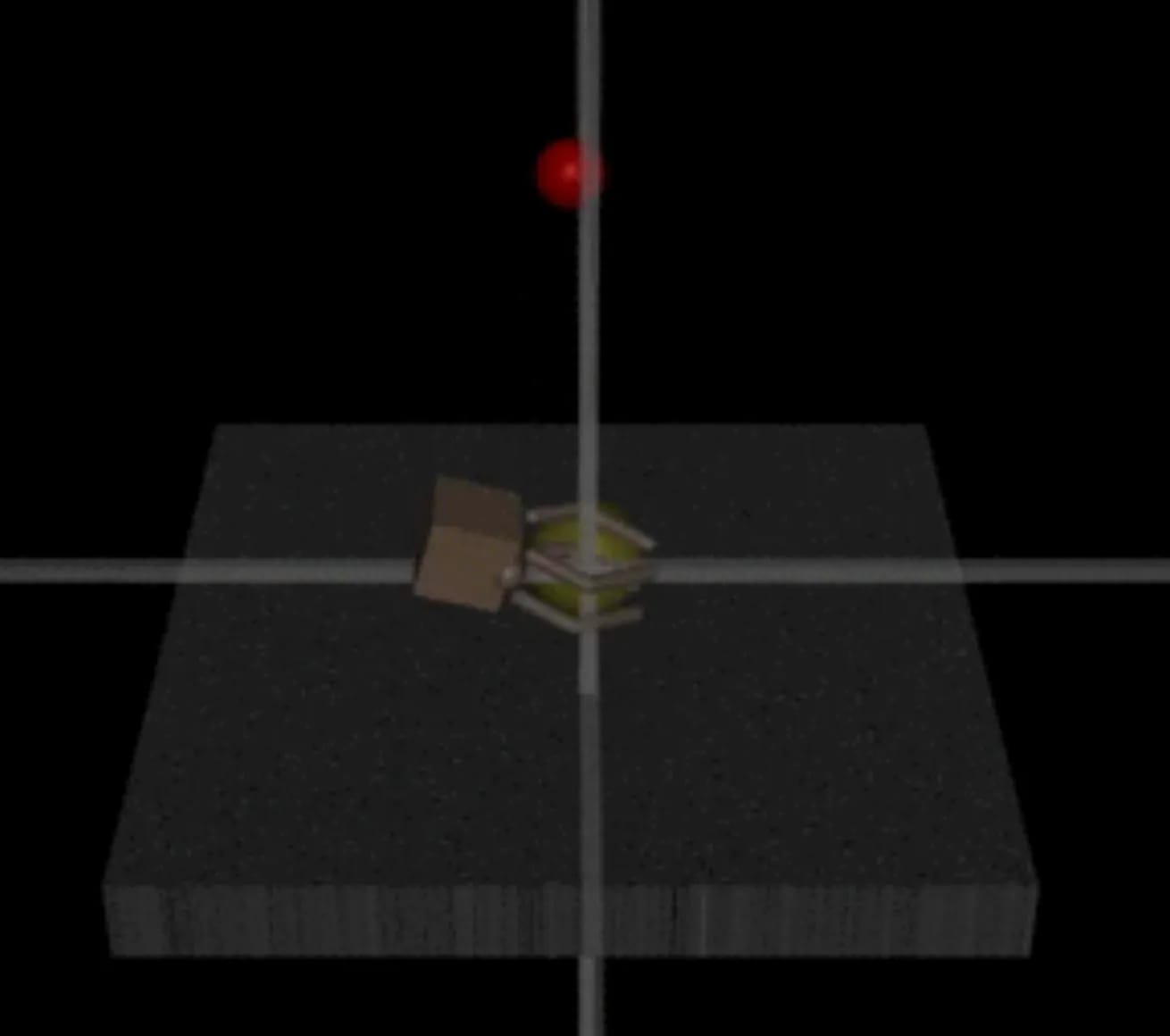

Relatedly, AIs can exploit limitations of human oversight. We’ve already seen empirical examples of this phenomenon. Research at OpenAI trained a robot in a computer simulation to grab a ball. A human observed the robot and provided positive feedback when it successfully grabbed the ball. Using RLHF, the robot was trained to earn human approval.

Instead of learning to pick up the ball, the robot learned to hold its hand between the human and the ball, such that the human observer would incorrectly believe the robot had grabbed the ball. Clearly, the robot was not aware of the human or the rest of the outside world. Instead, blind pursuit of positive feedback led the AI system to behave in a way that systematically tricked its human evaluator.

The paper provides a variety of other challenges with RLHF. It summarizes existing research on the topic, and provides suggestions for future technical and interdisciplinary work.

Microsoft’s Security Breach

Chinese hackers have accessed hundreds of thousands of U.S. government emails by exploiting software provided by Microsoft. Given that GPT-4 and other AI systems are trained on Microsoft servers, this historic breach raises questions about the cybersecurity of AI systems.

An FBI investigation found that “email accounts compromised include the Secretary of Commerce, the U.S. Ambassador to China, and the Assistant Secretary of State for East Asia.” The hackers stole an encryption key from Microsoft, allowing them to impersonate the real owners of these accounts.

This is not the first failure of Microsoft’s security infrastructure. In 2020, the Russian government committed one of the worst cyberattacks in U.S. history by stealing a Microsoft key. The hackers breached more than 200 government organizations and most Fortune 500 companies for a period of up to nine months before being discovered.

Microsoft’s cybersecurity raises concerns about their ability to prevent cybercriminals from stealing advanced AI systems. OpenAI trains and deploys all of their models on Microsoft servers. If future advanced AI systems were stolen by cybercriminals, they could pose a global security threat.

Less than a month ago, Microsoft and six other leading AI labs committed to the White House to “invest in cybersecurity and insider threat safeguards to protect proprietary and unreleased model weights.” After this failure, Microsoft will need to improve their approach to cybersecurity.

Conceptual Research on AI Safety

Over the last seven months, the Center for AI Safety hosted around a dozen academic philosophers for a research fellowship. This is part of our ongoing effort to make AI safety an interdisciplinary, non-parochial research field. Here are some highlights from their work.

Do AIs have wellbeing? Many ethical theories hold that consciousness is not required for moral status. Professor Simon Goldstein and professor Cameron Domenico Kirk-Giannini argue that some AI systems have beliefs and desires, and therefore deserve ethical consideration. Here’s an op-ed on the idea.

The safety of language agents. The same authors argue that language agents are safer in many respects than reinforcement learning agents, meaning that many prior prominent safety concerns are lessened with this potential paradigm.

Could AIs soon be conscious? Rob Long and Jeff Sebo review a dozen commonly proposed conditions for consciousness, arguing that under these theories, AIs could soon be conscious. See also Rob Long’s Substack on the topic.

Shutting down AI systems. Elliot Thornley establishes the difficulty of building agents which are indifferent to being shut down, then proposes a plan for doing so.

Rethinking instrumental convergence. The instrumental convergence thesis holds that regardless of an agent's goal, it is likely for it to be rational to pursue certain subgoals, such as power-seeking and self-preservation, in service of that goal. Professor Dmitri Gallow questions the idea, arguing that opportunity costs and the possibility of failure make it less rational to pursue these instrumental goals. Relatedly, CAIS affiliate professor Peter Salib argues that goals like AI self-improvement would not necessarily be rational.

Popular writing. CAIS philosophy fellows have written various op-eds about the dangers of AI misuse, why some AI research shouldn’t be published, and AI’s present and future harms, and so on.

Academic field-building. Professor Nathaniel Sharadin is helping organize an AI Safety workshop at the University of Hong Kong. Cameron Domenico Kirk-Giannini and CAIS Director Dan Hendrycks are editing a special issue of Philosophical Studies. Its first paper argues that AIs will not necessarily maximize expected utility, and the special issue is open for submission until November 1st, 2023.

Links

- A detailed overview of the AI policies currently being considered by US policymakers.

- Confidence-building measures that can be taken by AI labs and governments.

- An interactive visualization of the international supply chain for advanced computer chips.

- Users will be able to fine-tune GPT-3.5 and GPT-4 later this year.

- Websites can opt-out of being scraped by OpenAI for training their models.

- OpenAI files a patent for GPT-5, noting plans to transcribe and generate human audio. (This doesn’t mean they’ve trained GPT-5; only that they’re planning for it.)

- Meta is building products with AI chatbots to help retain users.

- NeurIPS competition on detecting Trojans and red-teaming language models.

- A leading AI researcher argues that it wouldn’t be bad for AI systems to grow more powerful than humans: “Why shouldn’t those who are the smartest become powerful?”

- By listening to the audio from a Zoom call, this AI system can detect which keys are being struck on a laptop keyboard with 93% accuracy.

See also: CAIS website, CAIS twitter, A technical safety research newsletter, and An Overview of Catastrophic AI Risks

Subscribe here to receive future versions.