(p-)Zombie Universe: another X-risk

By Toby Tremlett🔹 @ 2022-07-28T21:34 (+21)

Cross-post from: https://raisingdust.substack.com/p/zombie-universe

TL;DR

We aren’t certain that digital people could have conscious experience in the way necessary to make them morally important.

It is plausible that there will be many, and possibly only, digital people in the future.

If digital people don’t have morally relevant experience, and we don’t realise it, then letting them populate the galaxy would be an existential catastrophe.

Would it be good to fill the universe with digital minds living happy lives? What if this meant there were fewer biological humans in the future? What if the digital minds only seemed to be experiencing happiness?

I was thinking about this after reading Holden Karnofsky’s Most Important Century series. Holden gives a few compelling but superficial arguments for digital minds being capable of conscious experience. In this post, I’ll focus on exploring the moral state of a future in which these arguments are wrong and only biological life is capable of conscious experience.

First— what do I mean by “conscious experience” and why does it matter?

A being with conscious experience is one who has the feeling of being in pain as well as behaving as if they are in pain, and the felt experience of seeing yellow as well as the ability to report that the colour they are seeing is yellow. Philosophers sometimes call these ‘felt’ elements of our experience “qualia”. A human who acts normally, but who in fact lacks this ‘felt’, ‘internal’ aspect of experience, is referred to as a “philosophical zombie”. A philosophical zombie’s brain fulfils all the same functions as ours, and they would respond to questions in exactly the same ways as we do, even questions about whether they have conscious experience. But there is nothing it is like to be this person. They are acting mechanically, not experiencing.

Philosophers argue about whether this concept is coherent, or even imaginable. Those who see consciousness as constituted by the functional organisation of a brain don’t see any sense in the idea of a philosophical zombie. The zombie can do everything that we can do, so if we are conscious they must be as well.

Those who do see philosophical zombies as possible don’t have a clear idea of how consciousness relates to the brain, but they do think that brains could exist without consciousness, and that consciousness is something more than just the functions of the brain. In their view, a digital person (an uploaded human mind which runs on software) may act like a conscious human, and even tell you all about its ‘conscious experience’, but it is possible that it is in fact empty of experience.

This matters because we generally think a being deserves moral consideration only if it has conscious experience. We don’t think it is morally wrong to kill a simulated character in a video game, because however realistic they seem, we know they don’t feel pain. Philosophers wrangle over which animals are intelligent or feel pain and pleasure, because the answer affects how we should treat them.

So, it is possible that digital people would not be conscious, and therefore would not be morally relevant. This means that filling a universe with very happy-seeming simulations of human lives would be at best a morally neutral action and, if it came at a high cost, a massive moral failure.

But how could we get to a (mostly) digital universe?

Our question, whether digital minds could be conscious, becomes much more crucial when we imagine a world filled predominantly or entirely with digital minds. But how could we get there?

It seems likely that, in a future with well-established digital people, it would be preferable to be a digital person rather than a physically embodied one. Broadly, this would be the case if:

- Digital people lived in much better environments than any biological human could (no unnecessary pain, no unnecessary violence, and the ability to think faster or gain any powers or comforts that humans lack).

- There was a general societal belief that digital people had consciousness and deserved rights.

If this world were also one in which you could choose to become a digital person through mind upload, it seems likely that many or most people would.

If humanity's interest in colonising the galaxy were strong enough to survive life in a wonderful digital environment, or if the systems that ran the digital minds were otherwise motivated to expand, then it would be digital minds, not biological minds, that end up populating the galaxy.

How this could go very wrong

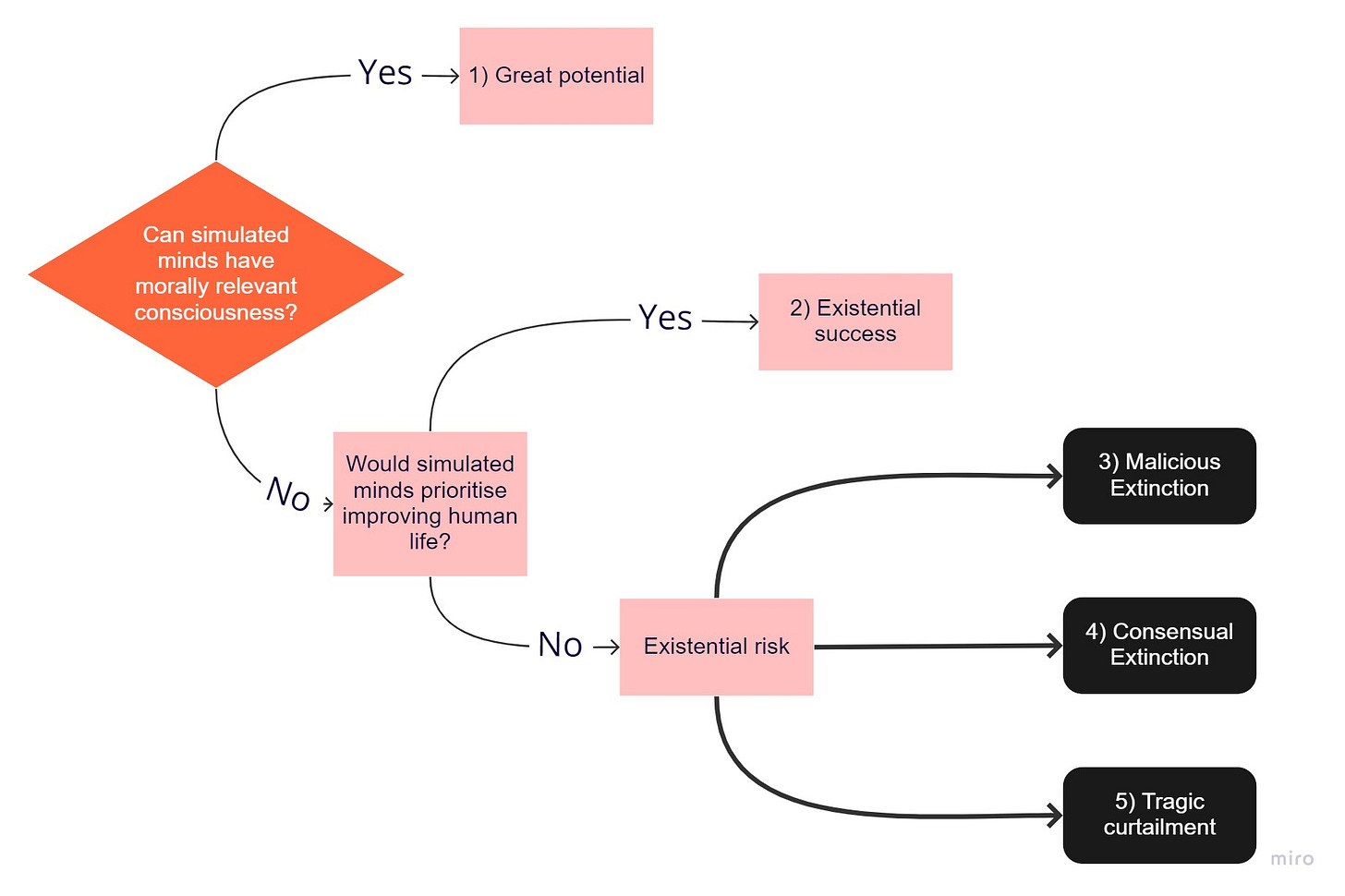

If these digital minds were conscious and happy, then humanity would have succeeded in doing something amazing. Through some high-tech alchemy, we would have turned previously value-free rocks into happy minds. We could still quibble about whether this is in fact the best possible world, but it seems like at least a very good one. Clearly, this could also go very wrong, but I won’t focus on those possibilities here. [‘1) Great potential’ on diagram]

However, if digital minds were not conscious, if they were in fact philosophical zombies devoid of all experience, what would our galaxy look like? I see four ways this could go.

The first option is that digital minds, while having no experience themselves, help embodied humanity live the best lives available to it. They could do all or most of the unfulfilling work that humans currently have to do, and allow all physical humans to live in luxury. This may be a better outcome than a universe where digital minds are never invented, because humanity would have far more technological capability in this world. [(2) Existential success]

The other three options would all be existential catastrophes. For fun, we could also call them Zombie Universes.

The first, which I have called ‘malicious extinction’, could occur if humans came into conflict with digital minds over resources or space, and the digital minds won. [(3) Malicious Extinction]

The second, consensual extinction, is the scenario in which all humans opt in to mind upload, leaving only digital minds, empty of moral value, to occupy the cosmos. [(4) Consensual Extinction]

The third— tragic curtailment— is one in which humans still live, but as a very small population, either in luxury or as servicers of the machines. Either way, this future is far, far smaller than our best options. Under many views, and definitely under consequentialism, this also makes it far, far worse. [(5) Tragic curtailment]

In all three of these paths, the universe is practically barren of moral value (compared to the alternative paths we could have taken). In each of them, the only conscious beings are the animals and humans left on Earth, if there are any. This group may live in luxury, or may live in pain and poverty with most resources hoarded by the digital minds. In these worlds, humanity has created a universe full of zombies: a galaxy with the outward appearance of great activity, but inwardly, silence.

Appendix — what about post-human intelligence and AGI?

I focus on digital people in this essay because I think they are easier to imagine than AGI, and this is bordering on too sci-fi-y anyway.

But in case anyone was wondering, I think the same successes and failures would exist with AGI. If the AGI were conscious and had positive experiences, filling the universe with it might be a massive moral success. If the AGI had no conscious experience, then filling the universe with it alone would be useless. The only thing missing from a world with only AGI and no digital people is the consensual extinction option (at least by the same means). However, it seems odd to imagine a world with AGI that roughly obeyed humans, but no digital people or mind uploads.

rgb @ 2022-07-30T17:22 (+6)

You write,

Those who do see philosophical zombies as possible don’t have a clear idea of how consciousness relates to the brain, but they do think...that consciousness is something more than just the functions of the brain. In their view, a digital person (an uploaded human mind which runs on software) may act like a conscious human, and even tell you all about its ‘conscious experience’, but it is possible that it is in fact empty of experience.

It's consistent to think both that p-zombies are possible and that, given the laws of nature, digital people would be conscious. David Chalmers is someone who argues for both views.

It might be useful to clarify that the question of

(a) whether philosophical zombies are metaphysically possible (and the closely related question of physicalism about consciousness)

is actually somewhat orthogonal to the question of

(b) whether uploads that are functionally isomorphic to humans would be conscious

David Chalmers thinks that philosophical zombies are metaphysically possible, and that consciousness is not identical to the physical. But he also argues that, given the laws of nature in this world, uploaded minds that are functionally equivalent to human minds at a sufficiently fine grain, and that act and talk like conscious humans, would be conscious. In fact, he's the originator of the 'fading qualia' argument that Holden appeals to in his post.

On the other side, Ned Block thinks that zombies are not possible, and is a physicalist. But he also thinks that only biologically instantiated minds can be conscious.

Here's Chalmers (2010) on the distinction between the two issues:

I have occasionally encountered puzzlement that someone with my own property dualist views (or even that someone who thinks that there is a significant hard problem of consciousness) should be sympathetic to machine consciousness. But the question of whether the physical correlates of consciousness are biological or functional is largely orthogonal to the question of whether consciousness is identical to or distinct from its physical correlates. It is hard to see why the view that consciousness is restricted to creatures with our biology should be more in the spirit of property dualism! In any case, much of what follows is neutral on questions about materialism and dualism.

Question Mark @ 2022-07-29T03:37 (+2)

A P-zombie universe could be considered a good thing if one is a negative utilitarian. If a universe lacks any conscious experience, it would not contain any suffering.

tobytrem @ 2022-07-29T17:13 (+1)

Thanks for flagging. Yes, I have definitely taken more of the MacAskill-Ord-Greaves party line in this post. Personally, I'm pretty uncertain about total utilitarianism, so the post should reflect that a little more.

Ariel_ZJ @ 2022-07-29T00:12 (+1)

As per usual, Scott Alexander has a humorous take on this problem here (you need to be an ACX subscriber).

But as a general response, this is why we need to try to develop an accepted theory of consciousness. The problem you raise isn't specific to digital minds; it's the same problem we face when considering the consciousness of anything other than adult humans. Maybe most animals aren't conscious and their wellbeing is irrelevant? Maybe plants are conscious to a certain degree and we should be concerned with their welfare (they have action potentials, after all)? Open Philanthropy also has a report on this issue.

For the moment, the best we've got for determining whether a system is conscious is:

- Subjective reports (do they say they're conscious?)

- Analogous design to things we already consider conscious (i.e. adult human brains)

The field of consciousness science is very aware that this is not a great position to be in. Here's a paper on how this leads to theories of consciousness being either trivial or non-falsifiable. Here's a debate between proponents of various leading theories of consciousness that is deeply unsatisfying as a resolution of these issues.

Anyway, this is just to say that a great many problems stem from 'we don't know which systems are conscious', and sadly we're not close to having a solution for that.

tobytrem @ 2022-07-29T17:14 (+1)

Yep. I was going to have a 'what we should do' section and then realised that I had nothing very helpful to say. Thanks for those resources, I'll check them out.