JP Addison's Quick takes

By JP Addison🔸 @ 2019-08-13T01:40 (+4)

JP Addison @ 2023-09-08T12:41 (+75)

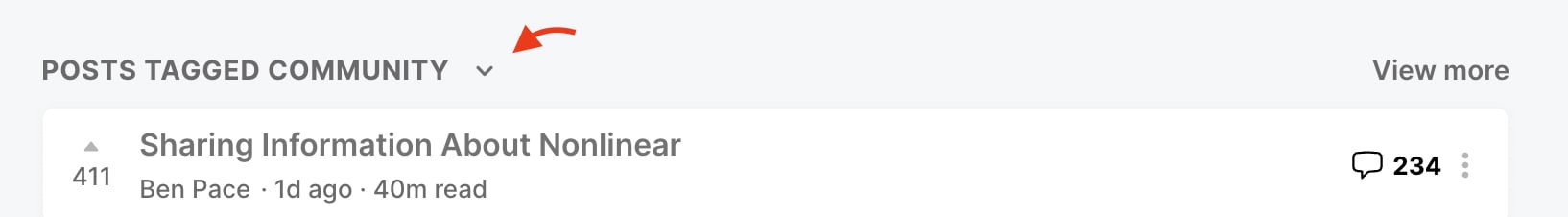

PSA: Apropos of nothing, did you know you can hide the community section?

(You can get rid of it entirely in your settings as well.)

Vaidehi Agarwalla @ 2023-09-08T14:38 (+16)

Is there a way to snooze the community tab or snooze / hide certain posts? I would use this feature.

Lorenzo Buonanno @ 2023-09-08T15:38 (+8)

Is there a way to do this for community quick takes? Most of the quick takes at this moment seem to be about the community.

MathiasKB @ 2023-09-08T14:29 (+6)

Thanks, you just bought me days of productivity

jpaddison @ 2019-09-06T18:51 (+55)

Appreciation post for Saulius

I realized recently that the same author that made the corporate commitments post and the misleading cost effectiveness post also made all three of these excellent posts on neglected animal welfare concerns that I remembered reading.

Fish used as live bait by recreational fishermen

Rodents farmed for pet snake food

35-150 billion fish are raised in captivity to be released into the wild every year

For the first he got this notable comment from OpenPhil's Lewis Bollard. Honorable mention goes to this post, which I also remembered, doing good epistemic work fact-checking a commonly cited comparison.

saulius @ 2019-09-09T11:25 (+21)

Also, I feel that as the author, I get more credit than is due, it’s more of a team effort. Other staff members of Rethink Charity review my posts, help me to select topics, and make sure that I have to worry about nothing else but writing. And in some cases posts get a lot of input from other people. E.g., Kieran Greig was the one who pointed out the problem of fish stocking to me and then he gave extensive feedback on the post. My CEE of corporate campaigns benefited tremendously from talking with many experts on the subject who generously shared their knowledge and ideas.

saulius @ 2019-09-08T22:44 (+17)

Thanks JP! I feel I should point out that it's now basically my full-time job to write for the EA Forum, which is why there are quite a lot of posts by me :)

JP Addison @ 2020-10-24T11:10 (+42)

Offer of help with hands-free input

If you're experiencing wrist pain, you might want to take a break from typing. But the prospect of not being able to interact with the world might be holding you back. I want to help you try voice input. It's been helpful for me to go from being scared about my career and impact to being confident that I can still be productive without my hands. (In fact this post is brought to you by nothing but my voice.) Right now I think you're the best fit if you:

- Have ever written code, even a small amount, or otherwise feel comfortable editing a config file

- Are willing to give it a few days

- Have a quiet room where you can talk to your computer

Make sure you have a professional microphone — order this mic if not, which should arrive the next day.

Then you can book a call with me. Make sure your mic will arrive by the time the call is scheduled.

EdoArad @ 2020-10-24T18:45 (+2)

😍

Mojmir_Stehlik @ 2020-12-02T21:52 (+1)

hey! What's a programme that you're using?

JP Addison @ 2020-12-03T07:29 (+2)

Talon Voice

JP Addison @ 2022-08-31T15:52 (+34)

The Forum went down this morning, in what I believe is the longest outage we've ever had. A bot was violating our robots.txt and performing a miniature (unintentional) DoS attack on us. This was perfectly timed with an upgrade to our infrastructure that made it hard to diagnose the problem. We eventually figured out what was going on, blocked the bot, and learned some lessons along the way. My apologies on behalf of the Forum team :(

JP Addison @ 2024-04-05T20:20 (+26)

Here’s a puzzle I’ve thought about a few times recently:

The impact of an activity ($I$) is due to two factors, $X$ and $Y$. Those factors combine multiplicatively to produce impact ($I = X \cdot Y$). Examples include:

- The funding of an organization and the people working at the org

- A manager of a team who acts as a lever on the work of their reports

- The EA Forum acts as a lever on top of the efforts of the authors

- A product manager joins a team of engineers

Let’s assume in all of these scenarios that you are only one of the players in the situation, and you can only control your own actions.

From a counterfactual analysis, if you can increase your contribution by 10%, then you increase the impact by 10%, end of story.

From a Shapley Value perspective, it’s a bit more complicated, but we can start with a prior that you split your impact evenly with the other players.

Both these perspectives have a lot going for them! The counterfactual analysis has important correspondences to reality. If you do 10% better at your job the world gets better. Shapley Values prevent the scenario where the multiplicative impact causes the involved agents to collectively contribute too much.
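To make the two perspectives concrete, here is a minimal Python sketch of a two-player multiplicative game. It assumes (an illustration, not something the post specifies) that impact is the product of the two players' factors and is zero if either player is missing; the factor values 10 and 5 are arbitrary. Shapley values are computed exactly by averaging each player's marginal contribution over every ordering of the players.

```python
from itertools import permutations
from math import prod


def shapley_values(players, value):
    """Exact Shapley values: average marginal contribution over all player orderings."""
    totals = {p: 0.0 for p in players}
    orderings = list(permutations(players))
    for order in orderings:
        coalition = frozenset()
        for p in order:
            joined = coalition | {p}
            totals[p] += value(joined) - value(coalition)
            coalition = joined
    return {p: total / len(orderings) for p, total in totals.items()}


def multiplicative_game(factors):
    """Impact is the product of all factors, or 0 if any player is absent (assumption)."""
    def value(coalition):
        return prod(factors.values()) if set(coalition) == set(factors) else 0.0
    return value


before = {"you": 10.0, "them": 5.0}
after = {"you": 11.0, "them": 5.0}  # you improve your own factor by 10%

shap_before = shapley_values(list(before), multiplicative_game(before))
shap_after = shapley_values(list(after), multiplicative_game(after))

counterfactual_gain = prod(after.values()) - prod(before.values())
shapley_gain = shap_after["you"] - shap_before["you"]

print(shap_before)                       # {'you': 25.0, 'them': 25.0}
print(counterfactual_gain, shapley_gain)  # 5.0 2.5
```

Under these toy assumptions the 10% improvement raises total impact by the full 5.0 (the counterfactual view), while your Shapley share rises by only 2.5, because the surplus is split evenly between the two players.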

I notice myself feeling relatively more philosophically comfortable running with the Shapley Value analysis in the scenario where I feel aligned with the other players in the game. And the downsides of the Shapley Value approach might shrink if I actually ran the math (Fake edit: I ran a really hacky guess as to how I’d calculate this using this calculator and it wasn’t that helpful).

But I don’t feel 100% bought-in to the Shapley Value approach, and think there’s value in paying attention to the counterfactuals. My unprincipled compromise approach would be to take some weighted geometric mean and call it a day.
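One possible reading of the "weighted geometric mean" compromise is sketched below in Python. The weight `w` on the counterfactual estimate is a hypothetical free parameter, and the inputs (a counterfactual credit of 5.0 and a Shapley credit of 2.5) are made-up illustrative numbers, not figures from the post.

```python
from math import exp, log


def weighted_geometric_mean(counterfactual, shapley, w):
    """Blend two positive credit estimates as counterfactual**w * shapley**(1 - w)."""
    if not 0.0 <= w <= 1.0:
        raise ValueError("w must be between 0 and 1")
    if counterfactual <= 0 or shapley <= 0:
        raise ValueError("a geometric mean needs positive inputs")
    # Computed in log space, which is equivalent to the product of powers.
    return exp(w * log(counterfactual) + (1.0 - w) * log(shapley))


# Illustrative numbers only: counterfactual credit 5.0, Shapley credit 2.5.
for w in (0.0, 0.5, 1.0):
    print(w, round(weighted_geometric_mean(5.0, 2.5, w), 3))
```

Setting `w = 0` recovers the Shapley figure, `w = 1` the counterfactual one, and `w = 0.5` lands at about 3.54. One design limitation: a geometric mean is undefined for zero or negative credit, which matters if some actors' credit can be negative.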

Interested in comments.

Zach Stein-Perlman @ 2024-04-06T05:22 (+13)

I'm annoyed at vague "value" questions. If you ask a specific question the puzzle dissolves. What should you do to make the world go better? Maximize world-EV, or equivalently maximize your counterfactual value (not in the maximally-naive way — take into account how "your actions" affect "others' actions"). How should we distribute a fixed amount of credit or a prize between contributors? Something more Shapley-flavored, although this isn't really the question that Shapley answers (and that question is almost never relevant, in my possibly controversial opinion).

Happy to talk about well-specified questions. Annoyed at questions like "should I use counterfactuals here" that don't answer the obvious reply, "use them FOR WHAT?"

I don’t feel 100% bought-in to the Shapley Value approach, and think there’s value in paying attention to the counterfactuals. My unprincipled compromise approach would be to take some weighted geometric mean and call it a day.

FOR WHAT?

Let’s assume in all of these scenarios that you are only one of the players in the situation, and you can only control your own actions.

If this is your specification (implicitly / further specification: you're an altruist trying to maximize total value, deciding how to trade off between increasing X and doing good in other ways) then there is a correct answer — maximize counterfactual value (this is equivalent to maximizing total value, or argmaxing total value over your possible actions), not your personal Shapley value or anything else. (Just like in all other scenarios. Multiplicative-ness is irrelevant. Maximizing counterfactual value is always the answer to questions about what action to take.)

Owen Cotton-Barratt @ 2024-04-09T15:39 (+6)

I think you're right to be more uncomfortable with the counterfactual analysis in cases where you're aligned with the other players in the game. Cribbing from a comment I've made on this topic before on the forum:

I think that counterfactual analysis is the right approach to take on the first point if/when you have full information about what's going on. But in practice you essentially never have proper information on what everyone else's counterfactuals would look like according to different actions you could take.

If everyone thinks in terms of something like "approximate shares of moral credit", then this can help in coordinating to avoid situations where a lot of people work on a project because it seems worth it on marginal impact, but it would have been better if they'd all done something different. Doing this properly might mean impact markets (where the "market" part works as a mechanism for distributing cognition, so that each market participant is responsible for thinking through their own alternative options, and feeding that information into the system via their willingness to do work for different amounts of pay), but I think that you can get some rough approximation to the benefits of impact markets without actual markets by having people do the things they would have done with markets -- and in this context, that means paying attention to the share of credit different parties would get.

Shapley values are one way to divide up that credit. They have some theoretical appeal, but that appeal is basically as an answer to "what would a fair division of credit be, which divides the surplus compared to outside options?". And they're extremely complex to calculate, so in practice I'd recommend against even trying. Instead just think of it as an approximate bargaining solution between the parties, and use some other approximation to bargaining solutions. I think Austin's practice of looking to the business world for guidance is a reasonable approach here.

(If there's nobody whom you're plausibly coordinating with then I think trying to do a rough counterfactual analysis is reasonable, but that doesn't feel true of any of your examples.)

Austin @ 2024-04-05T23:01 (+5)

So, as a self-professed mechanism geek, I feel like the Shapley Value stuff should be my cup of tea, but I must confess I've never wrapped my head around it. I've read Nuno's post and played with the calculator, but still have little intuitive sense of how these things work even with toy examples, and definitely no idea on how they can be applied in real-world settings.

I think delineating impact assignment for shared projects is important, though I generally look to the business world for inspiration on the most battle-tested versions of impact assignment (equity, commissions, advertising fees, etc). Startup/tech company equity & compensation, for example, at least provides a clear answer to "how much does the employer value your work". The answer is suboptimal in many ways (eg my guess is startups by default assign too much equity to the founders), but at least it provides a simple starting point; better to make up numbers and all that.

MichaelStJules @ 2024-04-06T06:20 (+4)

Rationally and ideally, you should just maximize the expected value of your actions, taking into account your potential influence on others and their costs, including opportunity costs. This just follows assuming expected utility theory axioms. It doesn’t matter that there are other agents; you can just capture them as part of your outcomes under consideration.

When you're assigning credit across other actors whose impacts aren't roughly independent, including for estimating their cost-effectiveness for funding, Shapley values (or something similar) can be useful. You want assigned credit to sum to 100% to avoid double counting or undercounting. (Credit for some actors can even be negative, though.)

But if you were going to calculate Shapley values anyway, which means estimating a bunch of subgroup counterfactuals that didn't or wouldn't happen, you may be able to just directly estimate how to best allocate resources instead. You could skip credit assignments (EDIT especially ex ante credit assignments, or when future work will be similar to past work in effects).

Zach Stein-Perlman @ 2024-04-06T16:52 (+2)

(I endorse this.)

JP Addison @ 2024-04-15T15:09 (+2)

FYI thanks for all the helpful comments here — I promptly got covid and haven't had a chance to respond 😅

RyanCarey @ 2024-04-10T11:56 (+2)

I think you can get closer to dissolving this problem by considering why you're assigning credit. Often, we're assigning some kind of finite financial rewards.

Imagine that a group of n people have all jointly created $1 of value in the world, and that if any one of them did not participate, there would be $0 of value. Clearly, we can't give $1 to all of them, because then we would be paying $n to reward an event that only created $1 of value, which is inefficient. If, however, only the first guy (i=1) is an "agent" that responds to incentives, while the others (2<=i<=n) are "environment" whose behaviour is unresponsive to incentives, then it is fine to give the first guy a reward of $1.

This is how you can ground the idea that agents who cooperate should share their praise (something like a Shapley Value approach), whereas rival agents who don't buy into your reward scheme should be left out of the Shapley calculation.
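Ryan's example can be checked numerically with a short Python sketch. It models the setup as a "unanimity" game ($1 of value if all n people take part, $0 otherwise), with n = 4 chosen arbitrarily. Naive counterfactual credit gives each person the full $1, so credits sum to $n, while exact Shapley values split the $1 evenly.

```python
from itertools import permutations


def unanimity_value(coalition, n):
    """$1 of value only if all n participants are present, otherwise $0."""
    return 1.0 if len(coalition) == n else 0.0


def shapley_values(n):
    """Exact Shapley values by averaging marginal contributions over all orderings."""
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        coalition = set()
        for i in order:
            before = unanimity_value(coalition, n)
            coalition.add(i)
            totals[i] += unanimity_value(coalition, n) - before
    return [t / len(orderings) for t in totals]


def counterfactual_credits(n):
    """Credit each person with the value lost if they alone dropped out."""
    everyone = set(range(n))
    return [unanimity_value(everyone, n) - unanimity_value(everyone - {i}, n) for i in range(n)]


n = 4
print(sum(counterfactual_credits(n)))  # 4.0: paying everyone their counterfactual costs $n
print(shapley_values(n))               # [0.25, 0.25, 0.25, 0.25]: the $1 is split evenly
```

Only the player who completes the group adds value in any given ordering, and each player is last in the same share of orderings, which is why the split comes out to exactly 1/n each.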

Owen Cotton-Barratt @ 2024-04-09T15:24 (+2)

I'll give general takes in another comment, but I just wanted to call out how I think that at least for some of your examples the assumptions are unrealistic (and this can make the puzzle sound worse than it is).

Take the case of "The funding of an organization and the people working at the org". In this case the factors must combine in a sub-multiplicative way rather than a multiplicative way, since it's clear that if you double the funding and double the people working at the org you should approximately double the output (rather than quadruple it). I think that Cobb-Douglas production functions are often a useful modelling tool here.
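A minimal Python sketch of the contrast, assuming a Cobb-Douglas form `output = scale * funding**alpha * people**(1 - alpha)` whose exponents sum to 1 (constant returns to scale). The value `alpha = 0.5` and the input levels are arbitrary illustrations, not estimates for any real org.

```python
def multiplicative(funding, people):
    """Pure product: doubling both inputs quadruples output."""
    return funding * people


def cobb_douglas(funding, people, alpha=0.5, scale=1.0):
    """Cobb-Douglas with exponents alpha and 1 - alpha (constant returns to scale)."""
    return scale * funding ** alpha * people ** (1.0 - alpha)


base = (100.0, 10.0)     # arbitrary funding and headcount units
doubled = (200.0, 20.0)

print(multiplicative(*doubled) / multiplicative(*base))        # 4.0
print(round(cobb_douglas(*doubled) / cobb_douglas(*base), 9))  # 2.0
```

Because the exponents sum to 1, scaling both inputs by a factor k scales output by k, whereas the pure product scales it by k squared. Exponents summing to less or more than 1 would model decreasing or increasing returns instead.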

In the case of managers or the Forum I suspect that it's also not quite multiplicative -- but a bit closer to it. In any case I do think that after accounting for this there's still a puzzle about how to evaluate it.

JP Addison @ 2024-04-24T13:42 (+24)

With the US presidential election coming up this year, some of y’all will probably want to discuss it.[1] I think it’s a good time to restate our politics policy. tl;dr Partisan politics content is allowed, but will be restricted to the Personal Blog category. On-topic policy discussions are still eligible as frontpage material.

- ^

Or the expected UK elections.

JP Addison @ 2022-07-21T20:22 (+24)

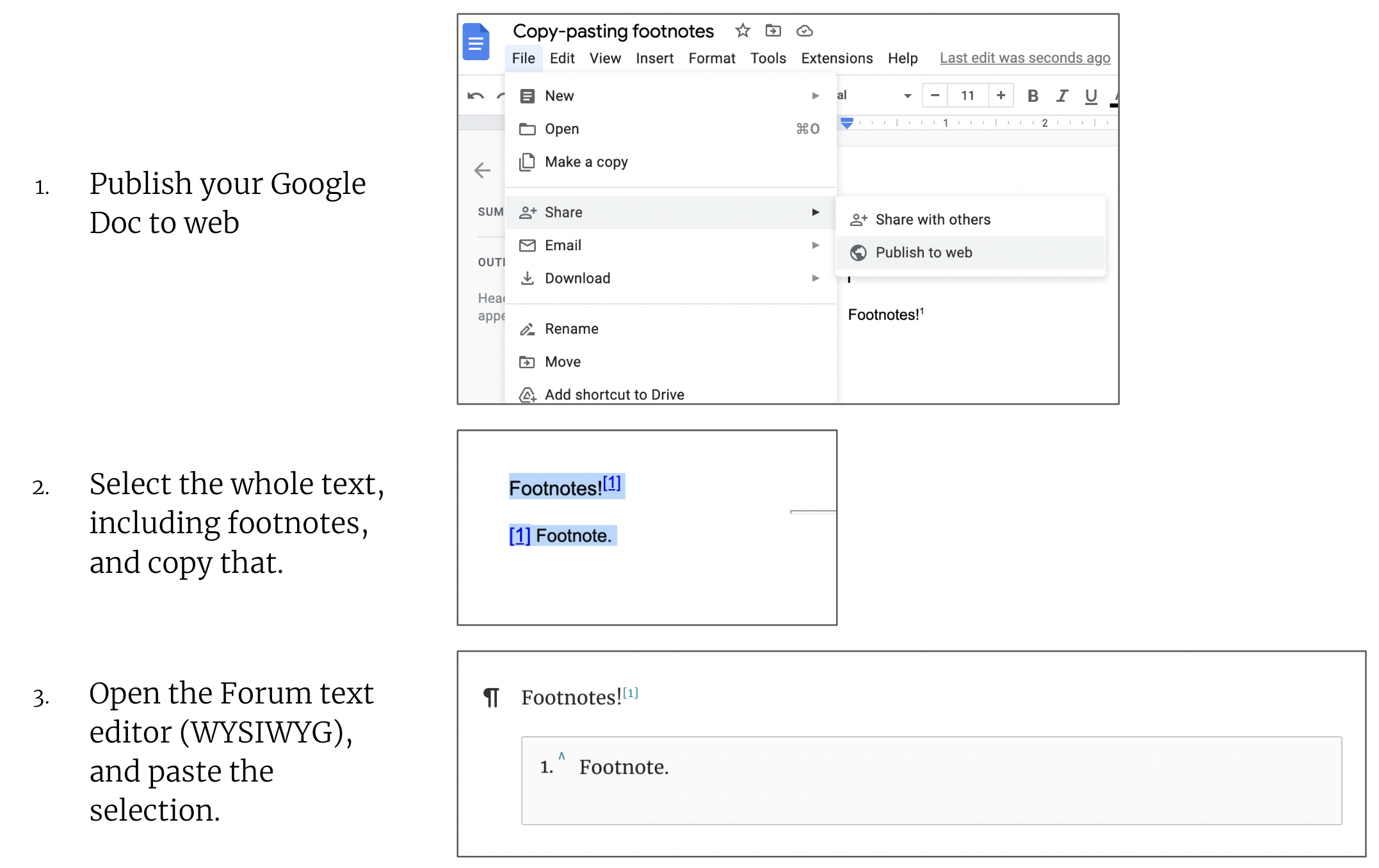

If you're copy-pasting from a Google Doc, you can now copy-paste footnotes into the WYSIWYG editor in the following way (which allowed us to get around the impossibility of selecting text + footnotes in a Google Doc):

- Publish your Google Doc to web

- You can do this by clicking on File > Share > Publish to web

- Then approve the pop-up asking you to confirm (hit "Publish")

- Then open the link that you'll be given; this is now the published-to-web version of your document.

- Select the whole text, including footnotes, and copy that. (If you'd like, you can now unpublish the document.)

- Open the Forum text editor (WYSIWYG), and paste the selection.

We'll announce this in a top-level post as part of a broader feature announcement soon, but I wanted to post this on my shortform to get this into the minds of some of the authors of the Forum.

Thanks to Jonathan Mustin for writing the code that made this work.

jpaddison @ 2019-11-07T20:29 (+23)

The new Forum turns 1 year old today.

🎵Happy Birthday to us 🎶

JP Addison @ 2022-09-09T19:35 (+21)

We now have Google-Docs-style collaborative editing. Thanks a bunch to Jim Babcock of LessWrong for developing this feature.

Miranda_Zhang @ 2022-09-14T15:38 (+2)

woah! I haven't tried it yet but this is really exciting! the technical changes to the Forum have seemed impressive to me so far. I also just noticed that the hover drop-down on the username is more expanded, which is visually unappealing but probably more useful.

JP Addison @ 2021-09-05T10:40 (+21)

How hard should one work?

Some thoughts on optimal allocation for people who are selfless but nevertheless human.

Baseline: 40 hours a week.

Tiny brain: Work more, get more done.

Normal brain: Working more doesn’t really make you more productive, focus on working less to avoid burnout.

Bigger brain: Burnout’s not really caused by overwork. Furthermore, when you work more you spend more time thinking about your work, crowding out other distractions that take away your limited attention.

Galaxy brain: Most EA work is creative work that benefits from:

- Real obsession, which means you can’t force yourself to do it.

- Fresh perspective, which can turn thinking about something all the time into a liability.

- Excellent prioritization and execution on the most important parts. If you try to do either of those while tired, you can really fuck it up and lose most of the value.

Here are some other considerations that I think are important:

- If you work hard you contribute to a culture of working hard, which could be helpful for attracting the most impactful people, who are more likely than average in my experience to be hardworking.

- Many people will have individual-specific reasons not to work hard. Some people have mental health issues that empirically seem to get worse if they work too hard, or they would get migraines or similar. Others will just find that they know themselves well enough to know when they should call it quits, for reasons captured elsewhere in this doc or not. This usually makes me very reluctant to call someone else out for not working hard enough.

A word on selflessness — I’m analyzing this from the perspective of someone trying to be purely selfless. I think it’s a useful frame. But I also think most people should make the decision about how much they work from the perspective of someone with the actual goals they have. It is a whole nother much more complicated blog post to flesh that out.

Finally, I want to say that although this post makes it seem like I’m coming down on the side of working less hard, I do overall think the question is complicated, and I definitely don’t know what the right answer is. This is mostly me writing in response to my own thinking, and to a conversation I recently had with a friend. My feeling from reading the Forum’s discussions about it is that the conversation rarely gets past the normal brain take, plausibly because it seems like a bad look to argue the case for working harder. If I were writing to try to shift the state of public discussion, I would probably argue the bigger brain take more. But this is shortform, so it’s written for me.

Ben_West @ 2021-09-06T17:10 (+7)

Thanks for writing this up – I'm really interested in answers to this and have signed up for notifications to comments on this post because I want to see what others say.

I find it hard to talk about "working harder" in the abstract, but if I think of interventions that would make the average EA work more hours I think of things like: surrounding themselves by people who work hard, customizing light sources to keep their energy going throughout the day, removing distractions from their environment, exercising and regulating sleep well, etc. I would guess that these interventions would make the average EA more productive, not less.

(nb: there are also "hard work" interventions that seem more dubious to me, e.g. "feel bad about yourself for not having worked enough" or "abuse stimulants".)

One specific point: I'm not sure I agree regarding the benefits of "fresh perspective". It can sometimes happen that I come back from vacation and realize a clever solution that I missed, but usually me having lost context on a project makes my performance worse, not better.

JP Addison @ 2021-09-06T20:07 (+5)

Maybe you’re suspicious of this claim, but I think if you convinced me that JP working more hours was good on the margin, I could do some things to make it happen, like making one Saturday a month a workday. That wouldn’t involve doing broadly useful life improvements.

On “fresh perspective”, I’m not actually that confident in the claim and don’t really want to defend it. I agree I usually take a while after a long vacation to get context back, which especially matters in programming. But I think (?) some of my best product ideas come after being away for a while.

Also you could imagine that the real benefit of being away for a while is not that you’re not thinking about work, but rather that you might’ve met different people and had different experiences, which might give you a different perspective.

Ben_West @ 2021-09-08T10:47 (+4)

I see. My model is something like: working uses up some mental resource, and that resource being diminished presents as "it's hard for you to work more hours without some sort of lifestyle change." If you can work more hours without a lifestyle change, that seems to me like evidence your mental resources aren't diminished, and therefore I would predict you to be more productive if you worked more hours.

As you say, the most productive form of work might not be programming, but instead talking to random users etc.

nonn @ 2021-09-05T15:27 (+7)

For the sake of argument, I'm suspicious of some of the galaxy takes.

Excellent prioritization and execution on the most important parts. If you try to do either of those while tired, you can really fuck it up and lose most of the value

I think relatively few people advocate working to the point of sacrificing sleep; prominent hard-work advocate (& kinda jerk) Rabois strongly pushes for sleeping enough & getting enough exercise.

Beyond that, it's not obvious working less hard results in better prioritization or execution. A naive look at the intellectual world might suggest the opposite afaict, but selection effects make this hard. I think having spent more time trying hard to prioritize, or trying to learn about how to do prioritization/execution well is more likely to work. I'd count "reading/training up on how to do good prioritization" as work

Fresh perspective, which can turn thinking about something all the time into a liability

Agree re: the value of fresh perspective, but idk if the evidence actually supports that working less hard results in fresh perspective. It's entirely plausible to me that what is actually needed is explicit time to take a step back (e.g. Richard Hamming Fridays) to reorient your perspective. (Also, imo good sleep + exercise functions as a better "fresh perspective" than most daily versions of "working less hard", like chilling at home.)

TBH, I wonder if working on very different projects to reset your assumptions about the previous one, or reading books/histories of other important projects, is a better way of gaining fresh perspective, because it's actually forcing you into a different frame of mind. I'd also distinguish vacations from "only working 9-5", which is routine enough that idk if it'd produce particularly fresh perspective.

Real obsession, which means you can’t force yourself to do it

Real obsession definitely seems great, but absent that I still think the above points apply. For most prominent people, I think they aren't obsessed with ~most of the work they're doing (it's too widely varied), but they are obsessed with making the project happen. E.g. Elon says he'd prefer to be an engineer, but has to do all this business stuff to make the project happen.

Also idk how real obsession develops, but it seems more likely to result from stuffing your brain full of stuff related to the project & emptying it of unrelated stuff or especially entertainment, than from relaxing.

Of course, I don't follow my own advice. But that's mostly because I'm weak willed or selfish, not because I don't believe working more would be more optimal

JP Addison @ 2021-09-06T06:43 (+4)

This is a good response.

Khorton @ 2021-09-05T17:46 (+2)

One thing that hasn't been mentioned here is vacation time and sabbaticals, which would presumably be very useful for a fresh perspective!

nonn @ 2021-09-06T15:07 (+1)

Yeah I agree that's pretty plausible. That's what I was trying to make an allowance for with "I'd also distinguish vacations from...", but worth mentioning more explicitly.

Khorton @ 2021-09-06T17:09 (+2)

Sorry I missed that! My bad

JP Addison @ 2021-09-05T10:40 (+4)

A few notes on organizational culture: My feeling is some organizations should work really hard, and have an all-consuming, startup-y culture. Other organizations should try a more relaxed approach, where high-quality work is definitely valued, but the workspace is more like Google’s, and more tolerant of 35-hour weeks. That doesn’t mean that these other organizations aren’t going to have people working hard, just that the atmosphere doesn’t demand it, in the way the startup-y org would. The culture of these organizations can be gentler, and be a place where people can show off hobbies they’d be embarrassed about in other organizations.

These organizations (call them Type B) can attract and retain staff who for whatever reason would be worse fits at the startup-y orgs. Perhaps they’re the primary caregiver to their child or have physical or mental health issues. I know many incredibly talented people like that and I’m glad there are some organizations for them.

JP Addison @ 2023-04-07T21:32 (+20)

I'd like to try my hand at summarizing / paraphrasing Matthew Barnett's interesting twitter thread on the FLI letter.[1]

The tl;dr is that trying to ban AI progress will increase the hardware overhang, and risk the ban getting lifted all of a sudden in a way that causes a dangerous jump in capabilities.

Background reading: this summary will rely on an understanding of hardware overhangs (second link), which are a somewhat slippery concept, and one I wish I understood at a deeper level.

***

Barnett Against Model Scaling Bans

Effectiveness of regulation and the counterfactual

It is hard to prevent AI progress. There's a large monetary incentive to make progress in AI, and companies can make algorithmic progress on smaller models. "Larger experiments don't appear vastly more informative than medium sized experiments."[2] The current proposals on the table only ban the largest runs.

Your only other option is draconian regulation, which will be hard to do well and will have unpredictable and bad effects.

Conversely, by default, Matthew is optimistic about companies putting lots of effort into alignment. It's economically incentivized. And we can see this happening: OpenAI has put more effort into aligning its models over time, and GPT-4 seems more aligned than GPT-2.

But maybe some delay on the margin will have good effects anyway? Not necessarily:

Overhang

Matthew's arguments above about algorithmic progress still occurring imply that AI progress will occur during a ban.[3] Given that, the amount of AI power that can be wrung out of humanity's hardware stock will be higher at the end of the ban than at the start. What are the consequences of that? Nothing good, says Matthew:

First, we need to account for the sudden jump in capabilities when the ban is relaxed. Companies will suddenly train up to the economically incentivized levels, leading to a discontinuous jump in capabilities. Discontinuous jumps in capabilities are more dangerous than incremental improvements. This is the core of the argument, according to my read.

Maybe we can continue the ban indefinitely? Sounds extremely risky to Matthew. Matthew is worried that we will try, and then the overhang will get worse and worse until for some reason (global power conflict?) regulators decide to relax the ban, and a more massive discontinuous jump occurs.

As an additional consideration, there will now be more competitive pressure, as the regulation has evened the playing field by holding back the largest labs, leading to more incentive to cut corners in safety.
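The overhang argument can be illustrated with a deliberately crude toy model in Python. Every modelling choice here is a hypothetical assumption for illustration, not Matthew's model: effective capability is algorithmic efficiency times training compute, algorithmic efficiency keeps growing 1.5x per year through a ban, training compute would otherwise grow 2x per year, a ban freezes training compute at its level in the year the ban starts, and when the ban lifts, labs immediately train at the level they would have reached without it.

```python
def effective_compute(years, ban=None, algo_growth=1.5, compute_growth=2.0):
    """Toy path of effective compute = algorithmic efficiency * training compute.

    `ban` is an optional (start, end) pair of years during which training compute
    is frozen at its start-year level. Algorithmic progress continues regardless.
    """
    path = []
    for t in range(years):
        algo = algo_growth ** t
        if ban is not None and ban[0] <= t < ban[1]:
            compute = compute_growth ** ban[0]  # capped at the pre-ban level
        else:
            compute = compute_growth ** t  # train up to the unconstrained level
        path.append(algo * compute)
    return path


def largest_jump(path):
    """Biggest year-over-year multiplicative increase in the path."""
    return max(later / earlier for earlier, later in zip(path, path[1:]))


no_ban = effective_compute(8)
with_ban = effective_compute(8, ban=(2, 5))

print(largest_jump(no_ban))    # 3.0: steady growth every year
print(largest_jump(with_ban))  # 12.0: the jump in the year the ban lifts
```

In this toy setup the capped years grow only 1.5x, and the year the cap lifts jumps 12x (1.5x from algorithms times 2^3 of deferred compute growth) before rejoining the no-ban path. Whether real labs would jump straight to the unconstrained level is exactly the kind of assumption the toy model takes as given rather than tests.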

Fixed budget of delays

[Caveat lector: It seems likely to me that I'm not grokking Matthew here, this section is rougher and more likely to mislead you]: Matthew claims that there's only so much budget of delays that humanity has. He argues that humanity should spend that budget later, when AI is closer to being an existential threat, rather than now.

Matthew considers the argument that we will wait too long to spend our budget and reach AGI before we do, but rejects that because he believes it will be obvious when AI is getting dangerous.

***

I consider the arguments presented here to be overall quite strong. I may come back and write up a response, but this is basically just a passthrough of Matthew, if I've done it right. Which brings me to:

Disclaimer: I say "Matthew is worried", and other such statements. I have not run this by Matthew. I am writing this based on my understanding of his Twitter thread; see the original for the ground truth of what he actually said.

- ^

Thanks to Lizka for pointing me to it.

- ^

Seems like a controversial claim, I'd be curious for someone more knowledgeable than I to weigh in.

It's not obvious that this is crux-y for me though. See next bullet point.

- ^

Matthew does not mention the ongoing march of compute progress. I don't understand why. It seems to me to make his argument stronger. Compute progress will lead to overhang just as surely as algorithmic progress, and seems about as hard to stop.

Matthew_Barnett @ 2023-04-10T04:21 (+6)

Thanks. I think that your summary is great and touched on basically all the major points that I meant to address in my original Twitter thread. I just have one clarification.

[Caveat lector: It seems likely to me that I'm not grokking Matthew here, this section is rougher and more likely to mislead you]: Matthew claims that there's only so much budget of delays that humanity has. He argues that humanity should spend that budget later, when AI is closer to being an existential threat, rather than now.

I want to note that this argument was not spelled out very well, so I can understand why it might be confusing. I didn't mean to make a strong claim about whether we have a fixed budget of delays; only that it's possible. In fact, there seems to be a reason why we wouldn't have a fixed budget, since delaying now might help us delay later.

Nonetheless, delaying now means that we get more overhang, which means that we might not be able to "stop" and move through later stages as slowly as we're moving through our current stage of AI development. Even if the moratorium is lifted slowly, I think we'd still get less incremental progress later than if we had never had the moratorium to begin with, although justifying this claim would take a while, and likely requires a mathematical model of the situation. But this is essentially just a repetition of the point about overhangs stated in a different way, rather than a separate strong claim that we only get a fixed budget of delays.

JP Addison @ 2022-07-15T15:50 (+16)

Note: due to the presence of spammers submitting an unusual volume of spam to the EA Topics Wiki, we've disabled creation and editing of topics for all users with <10 karma.

Khorton @ 2022-07-15T15:53 (+10)

Thank you!

JP Addison @ 2022-03-11T22:07 (+15)

I've just released the first step towards having EA Forum tags become places where you can learn more about a topic after reading a post. Posting about it because it's a pretty noticeable change:

The tag at the top of the post makes it easier to notice that there's a category to this post. (It's the most relevant core tag to the post.) We had heard feedback from new users that they didn't know tags were a thing.

Once you get to the tag page, there's now a header image. More changes to give the tag pages more structure are coming.

Yonatan Cale @ 2022-03-13T17:56 (+4)

Maybe worth trying adding a (tiny) title over the tags, such as "see more posts about:"

Or adding the tags again at the bottom of the post with a similar title.

Anyway +1 to this, and I'm a big fan of the "tags" feature!

JP Addison🔸 @ 2025-09-11T20:33 (+14)

Quick ask: I’m working on an AI tool that takes your resume/LinkedIn and shows you the most relevant opportunities from the 80k Job Board, then lets you discuss them with an AI.

I'd like to talk to 5-10 people for quick user interviews (30 mins). You might be interested if you:

- Are job hunting or career planning

- Want to help me build a tool for others trying to have an impact with their career

Drop your details here if interested.

Vaidehi Agarwalla 🔸 @ 2025-09-11T23:36 (+2)

If this tool could be used by orgs I think it would be super useful. E.g. analyzing the HIP talent directory or inbound applications against a job you're recruiting for. Any chance you could make the prompts/tool available to orgs as well?

JP Addison🔸 @ 2025-09-11T23:53 (+2)

That seems like the reverse, right? Given candidates, find me the best ones? So you'd want different prompts, though potentially some of the same logic carries over. In any case with the primitive nature of my prompts, and the complexity of the approach, I would probably advise someone to start from scratch. I'm generally a fan of open source though, and I could imagine releasing it.

JP Addison @ 2022-03-03T13:39 (+14)

Consider time discounting in your cost-benefit calculations of avoiding tail risks

[Epistemic status: a bit unendorsed, written quickly]

For many questions of tail risks (covid, nuclear war risk) I've seen EAs[1] doing a bunch of modeling of how likely the tail risk is, and what the impact will be, maybe spending 10s of hours on it. And then when it comes time to compare that tail risk vs short term tradeoffs, they do some incredibly naive multiplication, like life expectancy * risk of death.

Maybe that's fine if you view yourself as a pure hedonist with zero discounting, but that's a hell of a mix. I at least care significantly about impact in my life, and the impact part of my life has heavy instrumental discounting. If I'm working on growing the EA community, which is growing exponentially at ~20% per year, then I should discount my future productivity at 20% per year.

[This section especially might be wrong] So altruistically, I should be weighing my future at some multiple of my productivity for a year, and that multiple (not gonna show my work) turns out to be about 5.

Maybe you disagree with this! Good! But now this important part of the cost-benefit calculation is getting the attention it deserves, rather than being a complete afterthought.
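To make the arithmetic concrete, here is a minimal Python sketch. It assumes "discount at 20% per year" means each future year counts for 80% of the year before; other conventions (e.g. dividing by 1.2 each year) give a slightly different limit. The 50-year horizon and the 0.1% risk of death are hypothetical, illustrative numbers. Under this assumption the weight converges to 1/0.2 = 5 years of current productivity, which is one way to arrive at the factor of about 5; it is not necessarily how the post computed it.

```python
def discounted_weight(rate: float, horizon_years: int) -> float:
    """Total weight of future productivity, in units of 'this year's productivity'.

    Assumes each year counts for (1 - rate) of the year before, so year t
    gets weight (1 - rate) ** t, summed over t = 0 .. horizon_years - 1.
    """
    keep = 1.0 - rate
    return sum(keep ** t for t in range(horizon_years))


def naive_weight(horizon_years: int) -> float:
    """The 'life expectancy * risk' style weight: every future year counts fully."""
    return float(horizon_years)


def expected_cost(p_death: float, weight: float) -> float:
    """Expected loss, in years of current productivity, from a risk of death p_death."""
    return p_death * weight


if __name__ == "__main__":
    horizon = 50  # hypothetical remaining productive years
    rate = 0.20   # ~20%/year community growth rate from the text
    p = 0.001     # hypothetical 0.1% risk of death, purely illustrative
    naive = naive_weight(horizon)
    discounted = discounted_weight(rate, horizon)
    print(f"naive weight:      {naive:.1f} years, expected cost {expected_cost(p, naive):.4f}")
    print(f"discounted weight: {discounted:.2f} years, expected cost {expected_cost(p, discounted):.4f}")
    print(f"infinite-horizon limit 1/rate = {1 / rate:.1f}")
```

With these assumptions the naive calculation weights the risk roughly ten times more heavily than the discounted one, which is the gap the post is pointing at.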

- ^

Myself included.

JP Addison @ 2022-03-03T13:48 (+2)

Another interesting way this could diverge from the naive case is not by including discounting, but by considering how much more / less impactful you would be if you were one of the survivors of a nuclear war. I lean in the direction of thinking I would be less impactful, but maybe one would be more. This consideration doesn't apply to long covid, but seems to dominate the nuclear war considerations in my view.

jpaddison @ 2019-09-06T06:24 (+14)

Posting this on shortform rather than as a comment because I feel like it's more personal musings than a contribution to the audience of the original post —

Things I'm confused about after reading Will's post, Are we living at the most influential time in history?:

What should my prior be about the likelihood of being at the hinge of history? I feel really interested in this question, but haven't even fully read the comments on the subject. TODO.

How much evidence do I have for the Yudkowsky-Bostrom framework? I'd like to get better at comparing the strength of an argument to the power of a study.

Suppose I think that this argument holds. Then it seems like I can make claims about AI occurring because I've thought about the prior that I have a lot of influence. I keep going back and forth about whether this is a valid move. I think it just is, but I assign some credence that I'd reject it if I thought more about it.

What should my estimate of the likelihood that we're at the HoH be if I'm 90% confident in the arguments presented in the post?

jpaddison @ 2019-08-13T01:40 (+14)

This first shortform comment on the EA Forum will be both a seed for the page and a description.

Shortform is an experimental feature brought in from LessWrong to allow posters a place to put quickly written thoughts down, with less pressure to make it to the length / quality of a full post.

jpaddison @ 2019-10-10T11:40 (+13)

Thus starts the most embarrassing post-mortem I've ever written.

The EA Forum went down for 5 minutes today. My sincere apologies to anyone whose Forum activity was interrupted.

I was first alerted by Pingdom, which I am very glad we set up. I immediately knew what was wrong. I had just hit "Stop" on the (long unused and just archived) CEA Staff Forum, which we built as a test of the technology. Except I actually hit stop on the EA Forum itself. I turned it back on and it took a long minute or two, but was soon back up.

...

Lessons learned:

* I've seen sites that, after you press the big red button that says "Delete", make you enter the name of the service / repository / etc. you want to delete. I like those, but did not think of porting the habit to sites without that feature. I think I should install a TAP that whenever I hit a big red button, I confirm the name of the service I am stopping.

* The speed of the fix leaned heavily on the fact that Pingdom was set up. But it doesn't catch everything. In case it doesn't catch something, I just changed it so that anyone can email me with "urgent" in the subject line and I will get notified on my phone, even if it is on silent. My email is jp at organizationwebsite.
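The "type the name to confirm" pattern from the first lesson can be sketched in a few lines. This is a minimal Python illustration, not the hosting provider's actual interface: `confirm_and_stop`, the `stop_service` callback, and the service names are all hypothetical.

```python
from typing import Callable


def confirm_and_stop(service_name: str, typed_name: str,
                     stop_service: Callable[[str], None]) -> bool:
    """Stop service_name only if the operator retyped its exact name.

    Returns True if the stop was issued, False if it was refused.
    """
    if typed_name.strip() != service_name:
        # Mismatch: refuse, so a click on the wrong row can't stop the wrong site.
        return False
    stop_service(service_name)
    return True


if __name__ == "__main__":
    stopped: list[str] = []
    # Clicked "Stop" on the production row while meaning to stop the archived one:
    print(confirm_and_stop("ea-forum", "cea-staff-forum", stopped.append))
    # Typed name matches the row actually being stopped:
    print(confirm_and_stop("cea-staff-forum", "cea-staff-forum", stopped.append))
    print(stopped)
```

The point of the design is that the confirmation is checked against the thing about to be stopped, not against what the operator believes they clicked, so a mis-click gets caught.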

jpaddison @ 2019-08-24T14:54 (+13)

On the incentives of climate science

Alright, the title sounds super conspiratorial, but I hope the content is just boring. Epistemic status: speculating, somewhat confident in the dynamic existing.

Climate science as published by the IPCC tends to

1) Be pretty rigorous

2) Not spend much effort on the tail risks

I have a model that they do this because of their incentives for what they're trying to accomplish.

They're in a politicized field, where the methodology is combed over and mistakes are harshly criticized. Also, they want to show enough damage from climate change to make it clear that it's a good idea to institute policies reducing greenhouse gas emissions.

Thus they only need to show some significant damage, not a global catastrophic one. And they want to maintain as much rigor as possible to prevent the discovery of mistakes, and it's easier to be rigorous about things that are likely than about tail risks.

Yet I think longtermist EAs should be more interested in the tail risks. If I'm right, then the questions we're most interested in are underrepresented in the literature.

JP Addison @ 2023-06-20T00:54 (+9)

Here's a thought you might have: "AI timelines are so short, it's significantly reducing the net present value[1] of {high school, college} students." I think that view is tempting based on eg (1, 2). However, I claim that there's a "play to your outs" effect here. Not in a "AGI is hard" way, but "we slow down the progress of AI capability development".

In those worlds, we get more time. And, with that more time, it sure would seem great if we could have a substantial fraction of the young workforce care about X-Risk / be thinking with EA principles. Given the success we've had historically in convincing young people of the value of these ideas, it still seems pretty promising to have some portion of our community continue to put work into doing so.

- ^

By this I mean, the lifetime impact of the student, discounted over time.

Yonatan Cale @ 2023-06-20T08:03 (+4)

Many young people (zoomers) care a lot about climate change, afaik. I think adding the hopefully-small thought of "how about going over all things that might destroy the world and prioritizing them (instead of staying with the first one you found)" might go a long way, maybe

JP Addison @ 2023-02-23T18:04 (+9)

Note from the Forum team: The Forum was intermittently down for roughly an hour and change last night/this morning, due to an issue with our domain provider.

JP Addison @ 2021-08-04T10:54 (+9)

Temporary site update: I've taken down the allPosts page. It appears we have a bot hitting the page, and it's causing the site to be slow. While I investigate, I've simply taken the page down. My apologies for the inconvenience.

JP Addison @ 2021-08-05T07:08 (+4)

This is over.

JP Addison @ 2023-05-08T12:32 (+8)

Here's an interesting tweet from a thread by Ajeya Cotra:

But I'm not aware of anyone who successfully took complex deliberate *non-obvious* action many years or decades ahead of time on the basis of speculation about how some technology would change the future.

I'm curious for this particular line to get more discussion, and would be interested in takes here.

Habryka @ 2023-05-08T21:38 (+8)

Seems wrong to me. For example, Hamilton and Jefferson on the U.S. patent system. Also debates between Tesla and Edison on DC vs. AC and various policies associated with electricity. Also people working on nuclear regulation. Also people working on the early internet. Also lots of stuff around genetic engineering, the trajectory of which seems to have been shaped by many people taking deliberate action. Also, climate change, where a bunch of people did successfully invest in solar stuff which seems like it's now paying off quite well, and certainly didn't seem that obvious.

I don't know whether any of these count as "non-obvious". I feel like the "obvious" is doing all the work here, or something.

JP Addison @ 2023-05-08T21:52 (+3)

I agree obvious is probably doing a lot of work, and is pretty open to interpretation. I still think it's an interesting question!

JP Addison @ 2023-05-08T21:51 (+3)

I will personally venmo[1] anyone $10 per good link they put in to supply background reading for those examples.

Please try to put effort into your links, I reserve the right to read your link and capriciously decide that I don't like it enough to pay out. Offer valid for one link per historical example with more available at my option.

[1]: Or transferwise I guess.

Howie_Lempel @ 2023-05-10T15:20 (+4)

[Only a weak recommendation.] I last looked at this >5 years ago and never read the whole thing. But FYI that Katja Grace wrote a case study on the Asilomar Conference on Recombinant DNA, which established a bunch of voluntary guidelines that have been influential in biotech. Includes analogy to AI safety. (No need to pay me.) https://intelligence.org/files/TheAsilomarConference.pdf

NunoSempere @ 2023-05-10T21:16 (+2)

Maybe some @ https://teddit.nunosempere.com/r/AskReddit/comments/12rk46t/there_is_a_greek_proverb_a_society_grows_great/?sort=top

zchuang @ 2023-05-10T18:36 (+1)

I feel like non-obvious is very load bearing here because my first thought is Joseph Bazalgette and the London Sewer system.

JP Addison @ 2023-05-11T21:57 (+6)

A tax, not a ban

In which JP tries to learn about AI governance by writing up a take. Take tl;dr: Overhang concerns and desire to avoid catchup effects seem super real. But that need not imply speeding ahead towards our doom. Why not try to slow everything down uniformly? — Please tell me why I’m wrong.

After the FLI letter, the debate in EA has coalesced into “6 month pause” vs “shut it all down” vs some complicated shrug. I’m broadly sympathetic to concerns (1, 2) that a moratorium might make the hardware overhang worse, or make competitive dynamics worse.

To put it provocatively (at least around these parts), it seems like there’s something to the OpenAI “Planning for AGI and beyond” justification for their behavior. I think that sudden discontinuous jumps are bad.

Ok, but it remains true that OpenAI has burned a whole bunch of timelines, and that’s bad. It seems to me that speeding up algorithmic improvements is incredibly dubious. And the large economic incentives they’ve created for AI chips seem really bad.[1]

So, how do we balance these things? Proposal: ““we”” reduce the economic incentive to speed ahead with AI. If we’re successful, we could slow down hardware progress, and algorithmic progress, for OpenAI, and all its competitors.

How would this work? This could be the weak point of my analysis, but you could put a tax on “AI products”. This would be terrible and distortionary, but it would probably be effective at slashing the most centrally AGI companies. You can also put a tax on GPUs.

Note: a way to think about this is a Pigouvian tax.

Counterarguments

China. Yep. This is a counterargument against a moratorium as well. I think I’m willing to bite this bullet.

Something like: we really need the cooperation of chip companies. If we tax their chips they’ll sell to China immediately. This is the major reason why I’m more optimistic about taxing “AI products” than GPUs, even though GPUs would be easier to tax.

IDK, JP, a moratorium would be easier to coordinate around, is more likely to actually happen, and isn’t that bad. We should put our wood behind that arrow. Seems plausible, but I really don’t think this is a long term solution, and I tentatively think the tax thing is.

***

Again, will be very appreciative of different takes, etc here.

See also: Notes on Potential Future AI Tax Policy. This post was written before reading that one. Sadly that post spends too much time in the weeds arguing against a specific implementation which Zvi apparently really doesn’t like, and not enough time discussing overall dynamics, IMO.

Zach Stein-Perlman @ 2023-05-11T23:01 (+2)

- I agree increasing the cost of compute or decreasing the benefits of compute would slow dangerous AI.

- I claim taxing AI products isn't great because I think the labs that might make world-ending models aren't super sensitive to revenue-- I wouldn't expect a tax to change their behavior much. (Epistemic status: weak sense; stating an empirical crux.)

- Relatedly, taxing AI products might differentially slow safe cool stuff like robots and autonomous vehicles and image generation. Ideally we'd only target LLMs or something.

- Clarification: I think "hardware overhang" in this context means "amount labs could quickly increase training compute (because they were artificially restrained for a while by regulation but can quickly catch up to where they would have been)"? There is no standard definition, and the AI Impacts definition you linked to seems inappropriate here (and almost always useless-- it was a useful concept before the "training is expensive; running is cheap" era).

jpaddison @ 2019-10-01T14:54 (+5)

We're planning Q4 goals for the Forum.

Do you use the Forum? (Probably, considering.) Do you have feelings about the Forum?

If you send me a PM, one of the CEA staffers running the Forum (myself or Aaron) will set up a call where you can tell us all the things you think we should do.

Stefan_Schubert @ 2019-10-07T11:52 (+20)

I'm wondering about the possibility to up-vote one's own posts and comments. I find that a bit of an odd system. My guess would be that someone up-voting their own post is a much weaker signal of quality than someone up-voting someone else's post.

Also, it feels a bit entitled/boastful to give a strong up-vote to one's own posts and comments. I'm therefore reluctant to vote on my own work.

Hence, I'd suggest that one shouldn't be able to vote on one's own posts and comments.

jpaddison @ 2019-10-08T10:50 (+3)

By default your comments are posted with a regular upvote on them, and your posts with a strong upvote on them. The fact that it's the default seems to me to lower my concern about boastfulness. Although I do think it's possible the Forum shouldn't let you change away from those defaults. When I observed someone strong-upvoting their comments on LW, I found it really crass.

As to why not change the default, I do think that you by default endorse your comments and posts. This provides useful info to people, because if you're a user with strong upvote power, your posts and comments enter more highly rated. This provides a small signal to new users about who the Forum has decided to trust. And it makes it less likely that you'll see a dispiriting "0" next to your comment. OTOH, we don't count self-votes for the purposes of calculating user karma, so maybe by consistency we shouldn't show it.

Pablo_Stafforini @ 2019-10-08T11:24 (+5)

Although I do think it's possible the Forum shouldn't let you change away from those defaults.

I am in favor of these defaults and also in favor of disallowing people from changing them. I know of two people on LW who have admitted to strong-upvoting their comments, and my sense is that this behavior isn't that uncommon (to give a concrete estimate: I'd guess about 10% of active users do this on a regular basis). Moreover, some of the people who may be initially disinclined to upvote themselves might start to do so if they suspect others are, both because the perception that a type of behavior is normal makes people more willing to engage in it, and because the norm to exercise restraint in using the upvote option may seem unfair when others are believed to not be abiding by it. This dynamic may eventually cause a much larger fraction of users to regularly self-upvote.

So I think these are pretty strong reasons for disallowing that option. And I don't see any strong reasons for the opposite view.

Stefan_Schubert @ 2019-10-08T11:23 (+3)

I guess there are two different issues:

1) Should comments and posts by default start out with positive karma, or should it be 0?

2) Should it be possible for the author to change the default level of karma their post/comment starts out with?

This yields at least four combinations:

a) Zero initial karma, and that's unchangeable.

b) Zero initial karma by default, but you could give up-votes (including strong up-votes) to your own posts, if you wanted to.

c) A default positive karma (which is a function of your total level of karma), which can't be changed.

d) A default positive karma, which can be increased (strong up-vote) or decreased (remove the default up-vote). (This is the system we have now.)

My comments only pertained to 2), whether you should be able to change the default level of karma - e.g. to give strong up-votes to your own posts and comments. On that, you found it "crass" when someone did that. You also made this comment:

This provides useful info to people, because if you're a user with strong upvote power, your posts and comments enter more highly rated. This provides a small signal to new users about who the Forum has decided to trust. And it makes it less likely that you'll see a dispiriting "0" next to your comment.

This rather seems to relate to 1).

As stated, I don't think one should be able to change the default level of karma. This would rule out b) and d), and leave a) and c). I have a less strong view on how to decide between those two systems, but probably support a).

jpaddison @ 2019-10-08T12:05 (+1)

I agree with you and Pablo that I'd rather see it unchangeable. My prioritization basically hinges on how common it is. If Pablo's right and it's 10%, that seems concerning. I've asked the LW team.

Habryka @ 2019-10-08T17:13 (+3)

Making it unchangeable also seems reasonable to me, or at least making it so that you can no longer strong-upvote your own comments.

Strong-upvoting your own posts seems reasonable to me (and is also the current default behavior)

RyanCarey @ 2022-04-14T18:41 (+11)

I think strong upvoting yourself should either be the default (opt-out), or impossible. It shouldn't be opt-in, because this rewards self-promotion.

Charles He @ 2022-04-14T19:10 (+4)

Did you know you can see strong self upvotes for all users?

You can see this at the user level (how much they do this in total) and I’m 80% sure you can see this at the comment/post level for each user.

This might mitigate your concerns and the related activity might finally produce a report where I am ranked #1.

Khorton @ 2022-04-14T19:22 (+4)

How can this list be viewed?

Charles He @ 2022-04-14T19:33 (+2)

There is a 70% chance someone else will do this and explain how, making the next paragraph irrelevant:

Moving slightly slowly because this takes actual work to ensure quality (or I’m just being annoyingly coy), if someone makes a $200 counterfactual donation to an EA charity specified by me (that meets the qualifications as a 501c3 charity in an EA cause area and donated to by senior EA grantmakers), I will produce this report and send it to you (after I get back from a major conference that is going on in the next 7 days).

Khorton @ 2022-04-14T19:57 (+2)

Oh, I thought you meant this was already available online - my mistake!

Khorton @ 2019-10-07T22:06 (+9)

Please fix the EA forum search engine and/or make it easier to find forum posts through Google.

Pablo_Stafforini @ 2019-10-08T09:57 (+8)

On the whole, I really like the search engine. But one small bug you may want to fix is that occasionally the wrong results appear under 'Users'. For example, if you type 'Will MacAskill', the three results that show up are posts where the name 'Will MacAskill' appears in the title, rather than the user Will MacAskill.

EDIT: Mmh, this appears to happen because a trackback to Luke Muehlhauser's post, 'Will MacAskill on Normative Uncertainty', is being categorized as the name of a user. So, not a bug with the search engine as such, but still something that the EA Forum tech team may want to fix.

jpaddison @ 2019-10-08T10:27 (+3)

Oh the joys of a long legacy of weird code. I've deleted those accounts, although I'm sad to report that our search engine is not smart enough to figure out that "Will MacAskill" should return "William_MacAskill"

SamDeere @ 2019-10-08T12:13 (+1)

Is there a way to give Algolia additional information from the user's profile so that it can fuzzy search it?

jpaddison @ 2019-10-08T13:00 (+1)

We could probably add a nickname field that we set manually.

Habryka @ 2019-10-08T17:11 (+2)

Yeah, you can add lots of additional fields. It also has like 100 options for changing the algorithm (including things like changing the importance of spelling errors in search, and its eagerness to correct them), so playing around with that might make sense.

jpaddison @ 2019-10-10T18:35 (+5)

With a configuration change, the search engine now understands that karma is important in ranking posts and comments. (It unfortunately doesn't have access to karma for users.)

Khorton @ 2019-10-10T23:26 (+4)

This doesn't fix the example I put forward, but it does make the search function more understandable and less frustrating. Thanks!

Habryka @ 2019-10-10T21:03 (+2)

Oh, interesting. LessWrong always had that, and I never even thought about that maybe being a configuration difference between the two sites.

Habryka @ 2019-10-07T22:30 (+2)

Curious what the problem with the current search engine is? Agree that it's important to be able to find forum posts via Google, which is currently an EA Forum-specific issue, but improvements to the search likely also affect LessWrong, so I am interested in getting more detail on that.

Khorton @ 2019-10-07T22:55 (+8)

Posts are not listed in order of relevance. You need to know exact words from the post you're searching for in order to find it - preferably exact words from the title.

For example, if I wanted to find your post from four days ago on long term future grants and typed in "grants", your post wouldn't appear, because your post uses the word "grant" in the title instead.

Ben Pace @ 2019-10-07T23:09 (+2)

For example, if I wanted to find your post from four days ago on long term future grants and typed in "grants", your post wouldn't appear, because your post uses the word "grant" in the title instead.

FYI, this was a very helpful concrete example.

On reflection your reasoning is false though - it's not because the post uses the word 'grant'. If I search 'grant' I get almost identical results, certainly the first 6 are the same. If I search 'ltf grants' I get the right thing even though neither 'ltf' nor 'grants' is in the title. I also think that it's not like there aren't a lot of other posts you could be searching for with the word 'grant' - it isn't just random other posts, there are *many* posts within ~2x karma that have that word in the title.

Still, I share a vague sense that something about search is not quite right, though I can't put my finger on it.

Habryka @ 2019-10-07T23:01 (+2)

(Edit: This was written before Khorton edited a concrete example into their comment)

Interesting. I haven't had many issues with the search. I mostly just wanted it to have more options that I can tweak (like restricting it to a specific time period and author). If you know of any site (that isn't a major search engine provider) that has search that does better here, I would be curious to look into what technology they use (we use Algolia, which seems to be one of the most popular search providers out there, and people seem to generally be happy with it). It might also be an issue of configuration.

jpaddison @ 2019-10-08T10:34 (+1)

Speaking to the Google search results: it's pretty hard to just rise up the Google rankings. We've done the basic advice: made sure the crawled page contains the post titles and keywords, and made sure Google finds the mobile view satisfactory. It's likely there's more we can do, but it's not straightforward. Complicating matters is that during the great spampocalypse in May, we were hit with a punitive action from Google, because we were polluting their ranking algorithm with spam links. You may remember a time when there were no results linking to posts at all. We fixed it, but it's possible (and I'd guess likely) that we're still getting dinged for that. Unfortunately, Google gives us no way of knowing.

jpaddison @ 2019-10-08T11:24 (+7)

NB: We're now done planning Q4. Suggestions are still valuable, but consider holding off on further comments for a bit, we have a final draft of a post that's about to give a lot more context. Of course, if you've got a useful comment you'd otherwise forget about, I don't mind continuing to answer.

Khorton @ 2019-10-01T17:07 (+3)

I clicked on 'go to Permalink' for this post, because I was going to send it to a friend, but I don't think it did anything.

What I actually wanted to do was find a link to just this post (not the whole shortform) that wasn't going to change.

jpaddison @ 2019-10-02T09:10 (+3)

What happens when you do that is that now your url bar in your browser points to this post, with a fancy standalone version of the comment above the post. Unfortunately, because the post doesn't actually change, you aren't navigating to a new page and your scroll stays where it is. It's a new feature from LessWrong, I've filed a bug report with them.

HaukeHillebrandt @ 2019-10-07T11:43 (+2)

I'd be interested in seeing views/ hits counters on every post and general data on traffic.

Also quadratic voting for upvotes.

jpaddison @ 2019-10-08T11:03 (+1)

Also quadratic voting for upvotes.

This is an interesting question. It would certainly prevent a bunch of bad behavior and force people to be more intentional in their voting. Here are, I think, the main reasons we / LW have talked about it but not implemented it:

a) Some people just read way more of the Forum than others. Should their votes have less weight because they must be spread over many comments?

b) I don't want users to have to think about conserving their voting resources. If they like something, I want them to vote and move on. Karma is fun, but the purpose of the site is the content.
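For readers unfamiliar with the mechanism: under quadratic voting, putting v votes on one item costs v² credits from a fixed budget, so concentrating votes gets expensive quickly. Below is a minimal Python sketch, assuming a hypothetical per-user credit budget; neither the Forum nor LessWrong implements this, and `QuadraticVoter` is an illustrative name.

```python
class QuadraticVoter:
    """One user's votes under quadratic voting with a fixed credit budget."""

    def __init__(self, budget: int):
        self.budget = budget
        self.votes: dict[str, int] = {}  # item id -> vote strength (negative = downvote)

    @staticmethod
    def cost(votes: int) -> int:
        # Quadratic cost: 1 vote costs 1 credit, 2 cost 4, 3 cost 9, ...
        return votes * votes

    def spent(self) -> int:
        return sum(self.cost(v) for v in self.votes.values())

    def vote(self, item: str, votes: int) -> bool:
        """Set this user's vote strength on item; refuse if it would exceed the budget."""
        previous = self.votes.get(item, 0)
        new_total = self.spent() - self.cost(previous) + self.cost(votes)
        if new_total > self.budget:
            return False
        self.votes[item] = votes
        return True


if __name__ == "__main__":
    voter = QuadraticVoter(budget=10)
    print(voter.vote("post-a", 3))     # costs 9 credits
    print(voter.vote("comment-b", 1))  # costs 1 more, budget now fully spent
    print(voter.vote("comment-c", 1))  # refused: would exceed the budget
```

The sketch makes both concerns visible: a heavy reader who votes on many comments hits the budget sooner (a), and every vote becomes a decision about what else you can no longer vote on (b).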

jpaddison @ 2019-10-08T10:59 (+1)

I'd be interested in seeing views/ hits counters on every post and general data on traffic.

We could a) put that data at the start of every post or b) put it under a menu option under the ... menu. I think (a) wouldn't provide enough value to balance the cost of busying the UI, which is currently very sparse and the more valuable for it. I don't expect (b) would be used much. I don't have the data to back this up (yet! I really want to be able to easily check all of these) but I guess most people don't click on those menu buttons very often.

HaukeHillebrandt @ 2019-10-07T16:56 (+1)

Mandatory field 200 characters summarizing the blogpost.

Mandatory keywords box.

Better Google Docs integration.

jpaddison @ 2019-10-08T11:11 (+2)

Mandatory keywords box.

See an upcoming post for how I feel about tagging.

jpaddison @ 2019-10-08T11:17 (+1)

Better Google Docs integration

My guess is that it'll be hard to beat copy-and-paste. Copying and pasting preserves styling reasonably well and is a pretty simple C-c, C-v. It works fairly well right now, with the main complaints (images, tables) being limitations of our current editor. I'm optimistic that a forthcoming upgrade to use CKEditor will improve the situation a lot.

Lukas_Finnveden @ 2019-10-11T10:06 (+1)

It works fairly well right now, with the main complaints (images, tables) being limitations of our current editor.

Copying images from public Gdocs to the non-markdown editor works fine.

jpaddison @ 2019-10-08T11:10 (+1)

Mandatory field 200 characters summarizing the blogpost

This one's been requested a few times. My thought is that a well written post has a summary or hook in the first paragraph. Aaron is more optimistic though.

With this one and the keywords box, I'd tend heavily towards leaving it optional but encouraged. I want to keep posting easy, and lean towards trusting the authors to know what will work with their post.

jpaddison @ 2019-09-13T14:07 (+5)

I want to write a post saying why Aaron and I* think the Forum is valuable, which technical features currently enable it to produce that value, and what other features I’m planning on building to achieve that value. However, I've wanted to write that post for a long time and the muse of public transparency and openness (you remember that one, right?) hasn't visited.

Here's a more mundane but still informative post, about how we relate to the codebase we forked off of. I promise the space metaphor is necessary. I don't know whether to apologize for it or hype it.

———

You can think of the LessWrong codebase as a planet-sized spaceship. They're traveling through the galaxy of forum-space, and we're a smaller spacecraft following along. We spend some energy following them, but benefit from their gravitational pull.

(The real-world correlate of their gravity pulling us along is that they make features which we benefit from.)

We have less developer-power than they do (1 dev vs 2.5-3.5, depending on how you count.) So they can move faster than we can, and generally go in directions we want to go. We can go further away from the LW planet-ship (by writing our own features), but this causes their gravitational pull to be weaker and we have to spend more fuel to keep up with them (more time adapting their changes for our codebase).

I view the best strategy as making features that LW also wants (moving both ships in directions I want), and then, when necessary, making changes that only I want.

———

One implication of this is that feature requests are more likely to be implemented, and implemented quickly, if they are compelling to both the EA Forum and LessWrong. These features keep the spaceships close together, helping them burn less fuel in the process.**

** I was going to write something about how this could be a promising climate-change reduction strategy, until I remembered that carbon emissions don’t matter in outer space.

JP Addison @ 2023-04-24T10:51 (+4)

Scoreboard

Or: Let impact outcomes settle debates

When American sports players try to trash talk their opponents, the opponents will sometimes retort with the word "scoreboard" along with pointing at the screen showing that they plainly have more points. This is to say, basically, "whatever bro, I'm winning". It's a cocky response, but it's hard to argue with. I'll try to keep this post not-cocky.

I think of this sometimes in the context of the EA ecosystem. Sometimes I am astounded by how disorganized someone might be, and because of that I might be tempted to think of them as fundamentally incompetent. But if I happen to notice that they're sitting on a huge pile of impact, then I should be more humble. Something's obviously working for them. And maybe their disorganization is linked to them being so impactful. Despite this, there's an attitude I sometimes see in myself or others, which is to bring it up in a rather unhelpful or condescending way, which is especially apparent if someone is clearly doing something right.

The example of a disorganized person seems extremely clear to me. But can I still use this concept in a more difficult case? What if I have a strategic disagreement? Sometimes I might be tempted to tell someone that their strategy means their apparent impact is illusory. But I think a really valuable skill is noticing when I kinda, you know, want that to be true, but it isn't, and in fact the scoreboard shows just the opposite.

JP Addison @ 2022-04-27T21:42 (+4)

For a long time the EA Forum has struggled with what to call its tag / wiki thing. Our interface had several references to "this wiki-tag". As part of our work to improve the tagging feature, we've now renamed it to Topics.

JP Addison @ 2021-12-31T12:06 (+4)

I should write up an update about the Decade Review. Do you have questions about it? I can answer them here, but it will also help me inform the update.

jpaddison @ 2019-08-29T23:14 (+4)

Tip: if you want a way to view Will's AMA answers despite the long thread, you can see all his comments on his user profile.

JP Addison @ 2024-05-20T20:57 (+2)

Working questions

A mental technique I’ve been starting to use recently: “working questions.” When tackling a fuzzy concept, I’ve heard of people using “working definitions” and “working hypotheses.” Those terms help you move forward on understanding a problem without locking yourself into a frame, allowing you to focus on other parts of your investigation.

Often, it seems to me, I know I want to investigate a problem without being quite clear on what exactly I want to investigate. And the exact question I want to answer is quite important! And instead of needing to be precise about the question from the beginning, I’ve found it helpful to think about a “working question” that I’ll then refine into a more precise question as I move forward.

An example: “something about the EA Forum’s brand/reputation” -> “What do potential writers think about the costs and benefits of posting on the Forum?” -> “Do writers think they will reach a substantial fraction of the people they want to reach, if they post on the EA Forum?”

Mo Putera @ 2024-05-21T04:08 (+3)

This sounds similar to what David Chapman wrote about in How to think real good; he's mostly talking about solving technical STEM-y research problems, but I think the takeaways apply more broadly:

Many of the heuristics I collected for “How to think real good” were about how to take an unstructured, vague problem domain and get it to the point where formal methods become applicable.

Formal methods all require a formal specification of the problem. For example, before you can apply Bayesian methods, you have to specify what all the hypotheses are, what sorts of events constitute “evidence,” how you can recognize one of those events, and (in a decision theoretic framework) what the possible actions are. Bayesianism takes these as given, and has nothing to say about how you choose them. Once you have chosen them, applying the Bayesian framework is trivial. (It’s just arithmetic, for godssakes!)

Finding a good formulation for a problem is often most of the work of solving it. [...]

Before applying any technical method, you have to already have a pretty good idea of what the form of the answer will be.

Part of a “pretty good idea” is a vocabulary for describing relevant factors. Any situation can be described in infinitely many ways. For example, my thinking right now could be described as an elementary particle configuration, as molecules in motion, as neurons firing, as sentences, as part of a conversation, as primate signaling behavior, as a point in world intellectual history, and so on.

Choosing a good vocabulary, at the right level of description, is usually key to understanding.

A good vocabulary has to do two things. Let’s make them anvils:

1. A successful problem formulation has to make the distinctions that are used in the problem solution.

So it mustn’t categorize together things that are relevantly different. Trying to find an explanation of manic depression stated only in terms of emotions is unlikely to work, because emotions, though relevant, are “too big” as categories. “Sadness” is probably a complex phenomenon with many different aspects that get collapsed together in that word.

2. A successful problem formulation has to make the problem small enough that it’s easy to solve.

Trying to find an explanation of manic depression in terms of brain state vectors in which each element is the membrane potential of an individual neuron probably won’t work. That description is much too complicated. It makes billions of distinctions that are almost certainly irrelevant. It doesn’t collapse the state space enough; the categories are too small and therefore too numerous.

It’s important to understand that problem formulations are never right or wrong.

Truth does not apply to problem formulations; what matters is usefulness.

In fact,

All problem formulations are “false,” because they abstract away details of reality.

[...]

There’s an obvious difficulty here: if you don’t know the solution to a problem, how do you know whether your vocabulary makes the distinctions it needs? The answer is: you can’t be sure; but there are many heuristics that make finding a good formulation more likely. Here are two very general ones:

Work through several specific examples before trying to solve the general case. Looking at specific real-world details often gives an intuitive sense for what the relevant distinctions are.

Problem formulation and problem solution are mutually-recursive processes.

You need to go back and forth between trying to formulate the problem and trying to solve it. A “waterfall” approach, in which you take the formulation as set in stone and just try to solve it, is rarely effective.

(sorry for the overly long quote, concision is a work in progress for me...)

jpaddison @ 2020-01-30T22:13 (+2)

We've been experiencing intermittent outages recently. We've tried fixes for several possible causes, but none has resolved the problem yet, so we're still working on it. If you see an error saying:

"503 Service Unavailable: No healthy endpoints to handle the request. [...]"

Try refreshing, or waiting 30 seconds and then refreshing; they're very transient errors.

Our apologies for the disruption.

jpaddison @ 2020-02-07T20:42 (+3)

It appears we've fixed this.