IAPS: Mapping Technical Safety Research at AI Companies

By Zach Stein-Perlman @ 2024-10-24T20:30

This is a linkpost to https://www.iaps.ai/research/mapping-technical-safety-research-at-ai-companies

As artificial intelligence (AI) systems become more advanced, concerns about large-scale risks from misuse or accidents have grown. This report analyzes the technical research into safe AI development being conducted by three leading AI companies: Anthropic, Google DeepMind, and OpenAI.

We define “safe AI development” as developing AI systems that are unlikely to pose large-scale misuse or accident risks. This encompasses a range of technical approaches aimed at ensuring AI systems behave as intended and do not cause unintended harm, even as they are made more capable and autonomous.

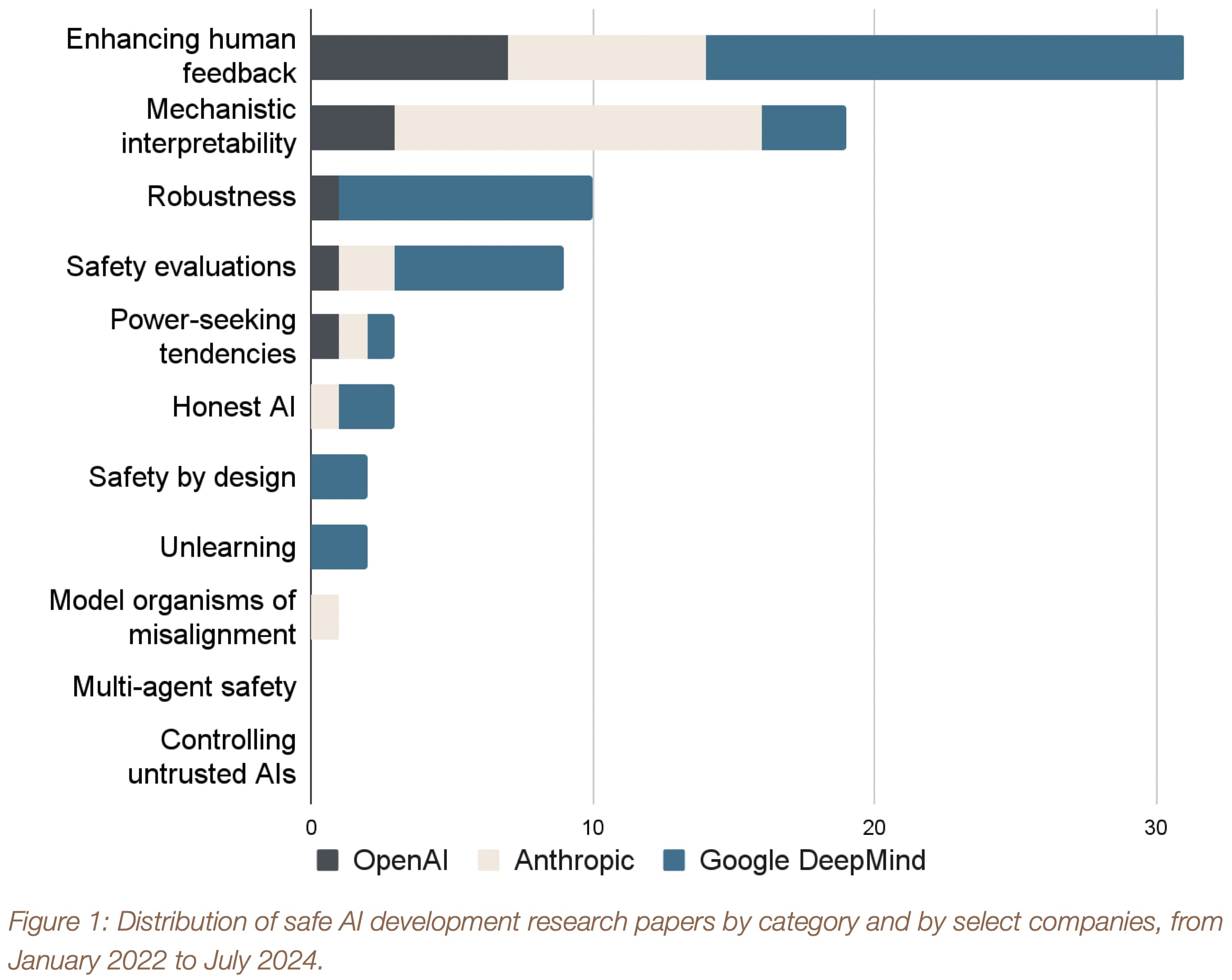

We analyzed all papers published by the three companies from January 2022 to July 2024 that were relevant to safe AI development, and categorized the 80 included papers into nine safety approaches. Additionally, we noted two categories representing nascent approaches explored by academia and civil society, but not currently represented in any research papers by these leading AI companies. Our analysis reveals where corporate attention is concentrated and where potential gaps lie.

Some AI research may stay unpublished for good reasons, such as to avoid informing adversaries about the details of safety and security techniques they would need to overcome to misuse AI systems. Therefore, we also considered the incentives that AI companies have to research each approach, regardless of how much work they have published on the topic. In particular, we considered reputational effects, regulatory burdens, and the extent to which the approaches could be used to make the company's AI systems more useful.

We identified three categories where there are currently few or no papers and where we do not expect AI companies to become much more incentivized to pursue this research in the future: model organisms of misalignment, multi-agent safety, and safety by design. Our findings suggest that these approaches may be slow to progress without funding or efforts from government, civil society, philanthropists, or academia.

One reason to be interested in this kind of work is as a precursor to measuring the value of different labs' safety publications. Right now the state-of-the-art technique for that is to count the papers (and maybe elicit vibes from friends). But counting papers is crude: it ignores how good the papers are, and it sometimes misses valuable research artifacts that aren't papers.

See more of IAPS's research. My favorite piece of IAPS research is still Deployment Corrections from a year ago.

Update: see my related List of AI safety papers from companies, 2023–2024.