Applying to MATS: What the Program Is Like, and Who It’s For

By rajlego @ 2026-01-17T00:25 (+15)

Application deadline: Three days remaining! MATS Summer 2026 applications close this Sunday, January 18, 2026 AoE. We've shortened the application this year. Most people finish in 1–2 hours, and we'll get back to applicants about first-stage results by the end of January. Visit our website for details: matsprogram.org/apply.

TL;DR: This post is a follow-up to our shorter announcement that MATS Summer 2026 applications are open. It's intended for people who are considering applying and want a clearer sense of what the program is actually like, how mentorship and research support work in practice, and whether MATS is likely to be a good fit.

What MATS is trying to do

MATS aims to find and train talented individuals for what we see as one of the world's most urgent and talent-constrained problems: reducing risks from unaligned AI. We believe ambitious people from a wide range of backgrounds can meaningfully contribute to this work. Our program provides the mentorship, funding, training, and community to make that happen.

Since late 2021, MATS has supported over 500 researchers working with more than 100 mentors from organizations like Anthropic, Google DeepMind, OpenAI, UK AISI, GovAI, METR, Apollo, RAND, AI Futures Project, Redwood Research, and more. Fellows have collectively co-authored 160+ research papers, with 7,800+ citations and an organizational h-index of 40.

Fellows have contributed to research agendas like:

- Sparse autoencoders for AI interpretability

- Activation/representation engineering

- Emergent misalignment

- Evaluating situational awareness

- Inoculation prompting

- Developmental interpretability

- Computational mechanics

- Gradient routing

Approximately 80% of alumni now work directly in AI safety/security, and around 10% have gone on to co-found AI safety organizations or research teams. These 30+ initiatives include Apollo Research, Atla AI, Timaeus, Simplex, Leap Labs, Theorem Labs, Workshop Labs, and Watertight AI.

What fellows receive

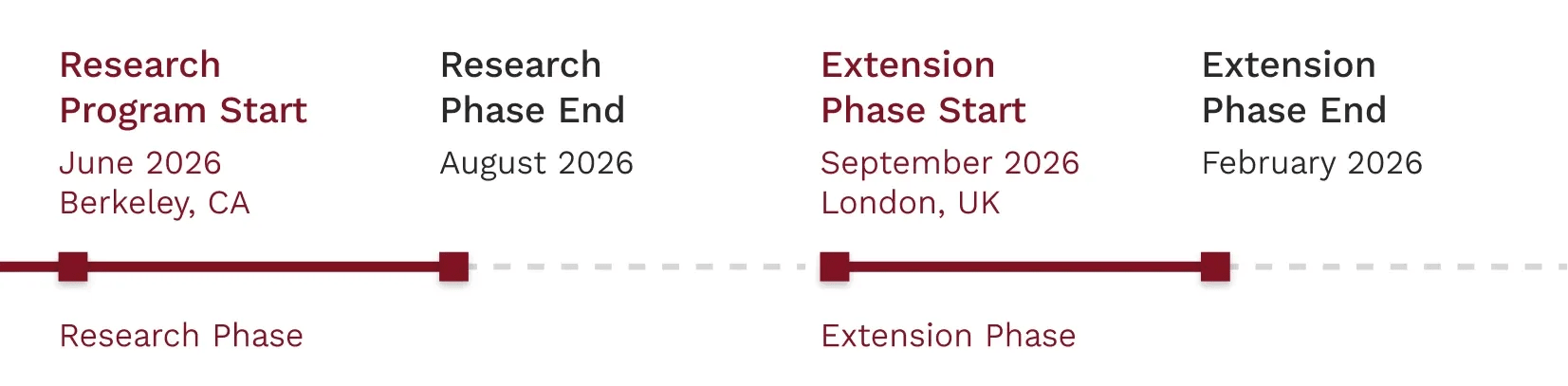

The initial program runs for 12 weeks (June–August 2026) in Berkeley, London, or remotely, depending on stream. Fellows receive:

- Mentorship from world-class researchers and a dedicated research manager

- $15,000 stipend and $12,000 compute budget

- Housing in a private room, catered meals, and travel to and from the program covered

- Office space and the community that comes from working alongside other fellows

- Seminars, workshops, and networking events with the broader AI safety community

- The opportunity to continue for 6-12 additional months with ongoing stipend, compute, mentorship, and research support through the extension

What the 12-week research phase is like in practice

Most fellows work on an independent research project, typically scoped in collaboration with their mentor during the early weeks. During this period, fellows are usually:

- getting oriented to the MATS environment,

- refining or rethinking an initial project idea,

- learning how their mentor prefers to work,

- and calibrating what "good progress" looks like in an open-ended research setting.

As the program progresses, fellows iterate on a research plan, check in regularly with mentors and research managers, and gradually sharpen their questions and methods.

Fellows complete two milestones during the 12-week phase. In the past, the first has been a Project Proposal or Research Plan. The second is a Poster Presentation at the MATS Research Symposium, attended by members of the AI safety community.

How mentorship and research management work

Mentorship at MATS is intentionally varied. Mentors differ in:

- how directive they are,

- how often they meet,

- whether they focus more on high-level framing or low-level technical details.

Every fellow also works with our dedicated research management team. Research managers (RMs) work with the fellows and mentors in a stream to support their program goals. Often this involves providing regular check-ins, helping unblock stalled projects, offering feedback on research plans, and supporting fellows in translating ideas into concrete progress.

There is, however, a lot of flexibility in what an RM can do in their role. This includes offering productivity coaching, career advice, application help, conference submission assistance, publication guidance, and much more!

Fellows consistently rate the experience highly, with an average score of 9.4/10 for our latest program (median of 10/10).

Community at MATS

The 12-week phase provides fellows with a community of peers who share an office, meals, and housing. Working in a community grants fellows easy access to future collaborators, a deeper understanding of other research agendas, and a social network in the AI safety community. Fellows also receive support from full-time Community Managers.

Each week includes social events (e.g., parties, game nights, movie nights, and hikes). Weekly lightning talks give fellows an opportunity to share their research interests in an informal setting. Outside of work, fellows organize road trips, city visits, weekend meals, and more.

The 6-12 month extension phase

At the conclusion of the 12-week research phase, fellows can apply to continue their research in a fully funded 6-12 month extension. Approximately 75% of fellows continue into the extension. Weighted by scholar-time, ~60% of the MATS experience is the extension phase (12-month extensions shift this even further).
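For readers who want to see where a figure like ~60% can come from, here is a rough back-of-the-envelope sketch. It is not MATS's own calculation. It assumes every fellow completes the 12-week phase, 75% continue, all continuing fellows take an extension of the same length, and 6 and 12 months are approximated as 26 and 52 weeks. The function name `extension_share` is purely illustrative.

```python
def extension_share(continue_rate: float,
                    extension_weeks: float,
                    main_weeks: float = 12.0) -> float:
    """Fraction of total scholar-time spent in the extension phase.

    Assumptions (not from MATS): every fellow completes the main phase,
    and every continuing fellow takes an extension of the same length.
    """
    # Average weeks per admitted fellow spent in each phase.
    main_time = main_weeks
    extension_time = continue_rate * extension_weeks
    return extension_time / (main_time + extension_time)


# 75% continuation rate, 6-month extension approximated as 26 weeks.
six_month = extension_share(0.75, 26)
# Same continuation rate, 12-month extension approximated as 52 weeks.
twelve_month = extension_share(0.75, 52)

print(f"6-month extensions:  {six_month:.0%}")    # 62%
print(f"12-month extensions: {twelve_month:.0%}")  # 76%
```

Under these assumptions, 6-month extensions alone put the extension phase at roughly 60% of total scholar-time, and longer extensions push the share higher, which is consistent with the figures quoted above.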

Acceptance decisions are based on mentor endorsement and double-blind review of the 12-week program milestones. By this phase, fellows are expected to pursue their research with high autonomy.

Who should apply

MATS welcomes talented applicants that traditional pipelines may overlook. We're looking for:

- Technical researchers (ML/AI background helpful but not required)

- People who can demonstrate strong reasoning and research potential

- Those interested in alignment, interpretability, security, or governance

- Policy professionals with strong writing ability, understanding of governmental processes, and technical literacy to engage with AI concepts

- Individuals with experience in government, think tanks, or policy orgs; domain expertise in national security, cybersecurity, US-China relations, biosecurity, or nuclear policy a plus

Our ideal applicant has:

- An understanding of the AI safety research landscape equivalent to having completed BlueDot Impact’s Technical AI Safety or AI Governance courses.

- Previous experience with technical research (e.g., ML, CS, math, physics, neuroscience), generally at a postgraduate level; OR previous policy research experience or a background conducive to AI governance

- Strong motivation to pursue a career in AI safety research

Even if you do not meet all of these criteria, we encourage you to apply. Several past fellows applied without strong expectations and were accepted.

All nationalities are eligible to participate, and roughly 50% of MATS fellows are international.

Who tends to thrive at MATS

There's no single "MATS profile," but fellows who thrive tend to share a few characteristics:

- Comfort with ambiguity and open-ended problems

- Strong reasoning skills, even outside their primary domain

- Willingness to revise or abandon initial ideas

- Ability to work independently while seeking feedback strategically

Prior ML experience is helpful but not required. Many successful fellows come from mathematics, physics, policy research, security studies, or other analytical fields.

Who might not find MATS a good fit

You may want to reconsider applying if you're primarily looking for:

- a structured curriculum with clear assignments,

- an academic credential or resume signal,

- or tightly scoped tasks with well-defined answers.

We think it’s a feature and not a bug that some very capable people decide MATS isn’t for them.

How to apply

General Application (December 16th to January 18th)

Applicants fill out a general application, which should take 1-2 hours. Applications are due by January 18th AoE.

Additional Evaluations (Late January through March)

Applicants who advance in the application process go through additional evaluations, including reference checks, coding tests, work tests, and interviews. Which evaluations you undergo depends on the mentors and streams you apply to.

Admissions Decisions (Mid March through Early April)

Selected applicants are notified of their acceptance and anticipated mentor later in the application cycle.

If you have any questions about the program or application process, contact us at applications@matsprogram.org.

Closing

If you're considering applying to MATS Summer 2026, we hope this post helps you decide whether the program is a good fit for you. Full details about the program structure, timelines, and application process are on the MATS website.

We're happy to answer questions in the comments!

Acknowledgments: Claude was used for limited editorial support (e.g., wording suggestions, structural feedback).

SummaryBot @ 2026-01-19T22:58 (+2)

Executive summary: This post explains what the MATS Summer 2026 program is like in practice and argues that it is a good fit for self-directed researchers motivated to work on reducing risks from unaligned AI, while being a poor fit for those seeking structured coursework or credentials.

Key points:

- The author states that MATS aims to identify and train talented people to work on reducing risks from unaligned AI by providing mentorship, funding, and community rather than a formal curriculum.

- Since 2021, MATS has supported over 500 researchers with 100+ mentors, resulting in 160+ papers, 7,800+ citations, and roughly 80% of alumni working directly in AI safety or security.

- Fellows receive a 12-week fully funded research phase with mentorship, a $15,000 stipend, a $12,000 compute budget, housing, and access to seminars and the AI safety community.

- Most fellows work on independent research projects with flexible mentorship styles and support from dedicated research managers, culminating in milestones like a research plan and poster presentation.

- Around 75% of fellows continue into a 6–12 month extension phase, which accounts for roughly 60% of total scholar-time and expects high research autonomy.

- The program is best suited to applicants comfortable with ambiguity, independent research, and revising ideas, and is not intended for those seeking structured assignments, credentials, or tightly defined tasks.

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.