Thoughts on this $16.7M "AI safety" grant?

By defun 🔸 @ 2024-07-16T09:16 (+61)

UPDATE: read Peter Slattery's answer.

Open Philanthropy has recommended a total of $16.7M to MIT to support research led by Neil Thompson on modeling the trends and impacts of AI and computing.

2020 - MIT — AI Trends and Impacts Research - $550,688

2022 - MIT — AI Trends and Impacts Research - $13,277,348

2023 - MIT — AI Trends and Impacts Research - $2,911,324

I've read most of their research, and I don't understand why Open Philanthropy thinks this is a good use of their money.

Thompson's Google Scholar here.

Thompson's most cited paper "The Computational Limits of Deep Learning" (2020)

@gwern pointed out some flaws on Reddit.

Thompson's latest paper "A Model for Estimating the Economic Costs of Computer Vision Systems that use Deep Learning" (2024)

This paper has many limitations (as acknowledged by the author), and from an x-risks point of view, it seems irrelevant.

What do you think about Open Philanthropy recommending a total of $16.7M for this work?

PeterSlattery @ 2024-07-17T17:26 (+42)

Disclosure: I have past, present, and potential future affiliations with MIT FutureTech. These views are my own.

Thank you for this post. I think it would be helpful for readers if you explained the context a little more clearly; I think the post is a little misleading at the moment.

These were not “AI Safety” grants; they were for “modeling the trends and impacts of AI and computing”, which is what Neil/the lab does. Obviously that is important for AI Safety/x-risk reduction, but it is not just about AI Safety/x-risk reduction and is somewhat upstream of it.

Importantly, the awarded grants were to be disbursed over several years for an academic institution, so much of the work which was funded may not have started or been published. Critiquing old or unrelated papers doesn't accurately reflect the grant's impact.

You claim to have read 'most of their research' but only cite two papers, neither funded by Open Philanthropy. This doesn't accurately represent the lab's work.

Your criticisms of the papers lack depth, e.g., 'This paper has many limitations (as acknowledged by the author)' without explaining why this is problematic. Why are so many people citing that 2020 paper, if it is not useful? Do you do research in this area, or are you assuming that you know what is useful/good research here? (genuine question - I honestly don't know).

By asking readers to evaluate '$16.7M for this work', you imply that the work you've presented was what was funded, which is not the case.

Could you please update your post to address these issues and provide a more accurate representation of the grants and the lab's work?

Now, to answer your question, I personally think the work being done by the lab deserves significant funding. Some reasons:

- I think modeling the trends and impacts of AI and computing is very important and that it is valuable for OP to be able to fund very rigorous work to reduce their related uncertainties.

- I think that it is very valuable to have respected researchers and institutions producing rigorous and credible work; I think that the impact of research scales superlinearly based on the credibility and rigor of the researchers.

- The lab is growing very rapidly and attracting a lot of funding and interest from many sources.

- The work is widely cited in policy documents, including, for instance, the 2024 Economic Report of the President.

- The work is widely covered in the media.

- Neil seems to be well respected by those who know him. I joined the lab after I spoke to a range of people I respect about their experiences working with him. Everyone I spoke with was very positive about Neil and the importance of his work. My experiences at the lab have reinforced my perspective.

- Many of the new and ongoing projects (which I cannot discuss) seem quite neglected and important (e.g., they respond to requests from funders and I don't know of other research on them). I expect they will be very valuable once they are released.

- The lab is interdisciplinary and has a very broad, balanced and integrative approach to AI trends and impacts. Neil has a broad background and knowledge across many domains. This is reflected by how the lab functions; we hire and engage with people across many areas of the AI landscape, from people working on hardware and algorithms, to those working directly on AI risk reduction and evaluation. For instance, see the wide range of attendees at our AI Scaling Workshop (and the agenda). This seems rare and valuable (especially in a place like MIT CSAIL).

Geoffrey Miller @ 2024-07-17T17:33 (+6)

Peter -- This is a valuable comment; thanks for adding a lot more detail about this lab.

defun @ 2024-07-17T18:13 (+3)

Thanks a lot for giving more context. I really appreciate it.

> These were not “AI Safety” grants

These grants come from Open Philanthropy's focus area "Potential Risks from Advanced AI". I think it's fair to say they are "AI Safety" grants.

> Importantly, the awarded grants were to be disbursed over several years for an academic institution, so much of the work which was funded may not have started or been published. Critiquing old or unrelated papers doesn't accurately reflect the grant's impact.

Fair point. I agree old papers might not accurately reflect the grant's impact, but they correlate.

> Your criticisms of the papers lack depth ... Do you do research in this area, ...

I totally agree. That's why I shared this post as a question. I'm not an expert in the area and I wanted an expert to give me context.

> Could you please update your post to address these issues and provide a more accurate representation of the grants and the lab's work?

I added an update linking to your answer.

Overall, I'm concerned about Open Philanthropy's granting. I have nothing against Thompson or his lab's work.

peterbarnett @ 2024-07-16T18:29 (+37)

I think it's worth noting that the two papers linked (which I agree are flawed and not that useful from an x-risk viewpoint) don't acknowledge OpenPhil funding, and so maybe the OpenPhil funding is going towards other projects within the lab.

I think that Neil Thompson has some work which is pretty awesome from an x-risk perspective (often in collaboration with people from Epoch):

- Algorithmic progress in language models

- Economic impacts of AI-augmented R&D

- The growing influence of industry in AI research

From skimming his Google Scholar, a bunch of other stuff seems broadly useful as well.

In general, research forecasting AI progress and economic impacts seems great, and even better if it's from someone academically legible like Neil Thompson.

defun @ 2024-07-17T16:25 (+5)

This paper is from Epoch. Thompson is a "Guest author".

I think this paper and this article are interesting but I'd like to know why you think they are "pretty awesome from an x-risk perspective".

Epoch AI has received much less funding from Open Philanthropy ($9.1M), yet they are producing world-class work that is widely read, used, and shared.

PeterSlattery @ 2024-07-17T17:35 (+7)

This seems misleading. Some of the authors are from Epoch, but there are authors from two other universities on the paper.

Also, where does it say that he is a guest author? Neil is a research advisor for Epoch and my understanding is that he provides valuable input on a lot of their work.

defun @ 2024-07-17T17:52 (+3)

Sorry, I should have attached this in my previous message.

> where does it say that he is a guest author?

Here.

PeterSlattery @ 2024-07-17T18:07 (+11)

Thanks. My impression is that they are using 'Guest author' on their blog post to distinguish authors who work for Epoch from those who are external. As far as I can tell, that usage implies nothing about the contribution of the authors to the paper.

Rebecca @ 2024-07-18T06:00 (+2)

Yeah, this seems like the most straightforward interpretation.

calebp @ 2024-07-17T19:10 (+4)

> I think it's worth noting that the two papers linked (which I agree are flawed and not that useful from an x-risk viewpoint)

I haven't read the papers but I am surprised that you don't think they are useful from an x-risk perspective. The second paper "A Model for Estimating the Economic Costs of Computer Vision Systems that use Deep Learning" seems highly relevant to forecasting AI progress which imo is one of the most useful AIS interventions.

The OP's claim

> This paper has many limitations (as acknowledged by the author), and from an x-risks point of view, it seems irrelevant.

seems overstated, and I'd guess that many people working on AI safety would disagree with them.

defun @ 2024-07-17T19:39 (+5)

Hi calebp.

If you have time to read the papers, let me know if you think they are actually useful.

Zach Stein-Perlman @ 2024-07-16T21:07 (+4)

Setting aside whether Neil's work is useful, presumably almost all of the grant is for his lab. I failed to find info on his lab.

peterbarnett @ 2024-07-16T21:19 (+5)

I would guess grants made to Neil's lab are referring to the MIT FutureTech group, which he's the director of. FutureTech says on its website that it has received grants from OpenPhil and the OpenPhil website doesn't seem to mention a grant to FutureTech anywhere, so I assume the OpenPhil FutureTech grant was the grant made to Neil's lab.

aogara @ 2024-07-21T19:09 (+25)

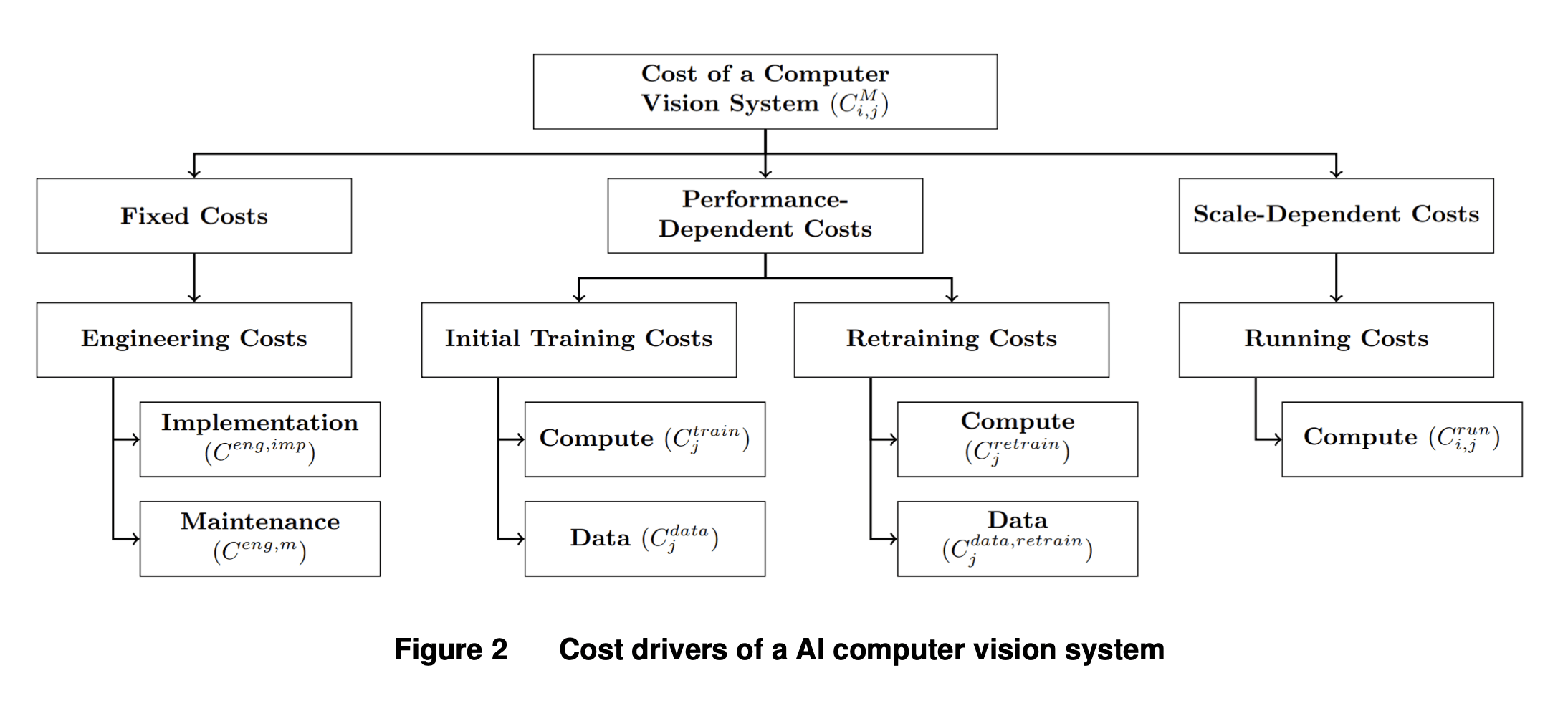

I really liked MIT FutureTech's recent paper, "Beyond AI Exposure: Which Tasks are Cost-Effective to Automate with Computer Vision?" I think it's among the 10 best economics of AI papers I've read from the last few years. It proposes an economic model of the circumstances under which companies would automate human labor with AI.

Previously, some of the most cited papers on the potential impacts of AI automation used an almost embarrassingly simple methodology: surveys. They take a list of jobs or tasks, and survey people about whether they think AI could someday automate that job or task. That's it. For validation, they might cross reference different people's estimates. Their conclusion would be something like "according to our survey, people think AI could automate X% of jobs." This methodology has been employed by some of the highest profile papers on the potential economic impact of AI, including this paper in Science and this paper with >10K citations.

(Other papers ignore the micro-level of individual tasks and firms, and instead model the macroeconomic adoption of AI. For example, Tom Davidson, Epoch, Phil Trammell, Anton Korinek, William Nordhaus, and Chad Jones have done research where they suppose that it's cost-effective for AI to automate a certain fraction of tasks. This macro-level modeling is also valuable, but by ignoring the choices of individual firms to automate individual tasks, they assume away a lot of real world complexity.)

The MIT FutureTech paper significantly improves upon the survey method by creating a mathematical model of what it would cost for a firm to automate a task with AI. The basic premise is that a firm will automate human labor with AI if the human labor is more expensive than AI automation would be. To estimate the cost of AI automation, they break down the costs of automation into the following parts:

Then they estimate distributions for each of these parameters, and come up with an overall distribution for the cost of AI automation. They compare the distribution of AI automation costs to the distribution of human wages in tasks that could be automated, and thereby estimate which tasks it would be cost-effective to automate. This allows them to make conclusions like "X% of tasks would be cost-effective to automate."
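To make the comparison concrete, here is a rough Monte Carlo sketch of the general idea: draw an AI automation cost and a human wage cost for each task, then count how often automation is cheaper. This is only an illustration of the approach as described above, not the paper's model. The cost components (`fixed_cost`, `running_cost`, `lifetime_years`), the lognormal distributions, and every number in it are hypothetical placeholders I made up for the example.

```python
import random


def should_automate(annual_ai_cost: float, annual_wage_cost: float) -> bool:
    """Basic premise: automate when human labor costs more than the AI system."""
    return annual_wage_cost > annual_ai_cost


def simulate_automation_share(n_tasks: int = 10_000,
                              wage_scale: float = 1.0,
                              seed: int = 0) -> float:
    """Estimate the share of tasks that would be cost-effective to automate.

    All distributions and parameters below are hypothetical placeholders,
    not values or cost categories taken from the paper.
    """
    rng = random.Random(seed)
    lifetime_years = 5  # assumed years over which the build cost is spread
    automated = 0
    for _ in range(n_tasks):
        # Hypothetical one-off cost of building the system (USD).
        fixed_cost = rng.lognormvariate(11.0, 1.0)
        # Hypothetical yearly cost of running and maintaining it (USD).
        running_cost = rng.lognormvariate(9.0, 0.8)
        annual_ai_cost = fixed_cost / lifetime_years + running_cost
        # Hypothetical yearly wages a firm pays for the task (USD).
        annual_wage_cost = wage_scale * rng.lognormvariate(10.0, 1.0)
        if should_automate(annual_ai_cost, annual_wage_cost):
            automated += 1
    return automated / n_tasks


if __name__ == "__main__":
    share = simulate_automation_share()
    print(f"Share of tasks cost-effective to automate: {share:.1%}")
```

The output depends entirely on the made-up distributions, so the printed percentage means nothing by itself. The point is the structure: a per-task cost comparison aggregated into an "X% of tasks" statement, which can be re-run as cost or wage assumptions change (for example via `wage_scale`).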

There's a lot of detail to the paper, and there are plenty of reasonable critiques one could make of it. I don't mean to endorse the other sections or the bottom-line conclusions. But I think this is clearly the state of the art methodology for estimating firm-level adoption of AI automation, and I would be excited to see future work that refines this model or applies it to other domains.

More broadly, I've found lots of Neil Thompson's research informative, and I think FutureTech is one of the best groups working on the economics of AI. I am surprised at the size of the grant, as I'd tend to think economics research is pretty cheap to fund, but I don't know the circumstances here.

(Disclosure: Last summer I applied for an internship at MIT FutureTech.)

defun @ 2024-07-22T10:36 (+3)

Thanks for the comment @aogara <3. I agree this paper seems very good from an academic point of view.

My main question: how does this research help in preventing existential risks from AI?

Other questions:

- What are the practical implications of this paper?

- What insights does this model provide regarding text-based task automation using LLMs?

- Looking into one of the main computer vision tasks: self-driving cars. What insights does their model provide? (Tesla is probably ~3 years away from self-driving cars and this won't require any hardware update, so no cost)

aogara @ 2024-07-22T16:36 (+4)

Mainly I think this paper will help inform people about the potential economic implications of AI development. These implications are important for people to understand because they contribute to AI x-risks. For example, explosive economic growth could lead to many new scientific innovations in a short period of time, with incredible upside but also serious risks, and perhaps warranting more centralized control over AI during that critical period. Another example would be automation: if most economic productivity comes from AI systems rather than human labor or other forms of capital, this will dramatically change the global balance of power and contribute to many existential risks.

defun @ 2024-07-22T17:14 (+3)

Thanks again for the comment.

You think that the primary value of the paper is in its help with forecasting, right?

In that case, do you think it would be fair to ask expert forecasters if this paper is useful or not?

aogara @ 2024-07-22T21:40 (+9)

I think this kind of research will help inform people about the economic impacts of AI, but I don't think the primary benefits will be for forecasters per se. Instead, I'd expect policymakers, academics, journalists, investors, and other groups of people who value academic prestige and working within established disciplines to be the main groups that would learn from research like this.

I don't think most expert AI forecasters would really value this paper. They're generally already highly informed about AI progress, and might have read relatively niche research on the topic, like Ajeya Cotra and Tom Davidson's work at OpenPhil. The methodology in this paper might seem obvious to them ("of course firms will automate when it's cost effective!"), and its conclusions wouldn't be strong or comprehensive enough to change their views.

It's more plausible that future work building on this paper would inform forecasters. As you mentioned above, this work is only about computer vision systems, so it would be useful to see the methodology applied to LLMs and other kinds of AI. This paper has a relatively limited dataset, so it'd be good to see this methodology applied to more empirical evidence. Right now, I think most AI forecasters rely on either macro-level models like Davidson or simple intuitions like "we'll get explosive growth when we have automated remote workers." This line of research could eventually lead to a much more detailed economic model of AI automation, which I could imagine becoming a key source of information for forecasters.

But expert forecasters are only one group of people whose expectations about the future matter. I'd expect this research to be more valuable for other kinds of people whose opinions about AI development also matter, such as:

- Economists (Korinek, Trammell, Brynjolfsson, Chad Jones, Daniel Rock)

- Policymakers (Researchers at policy think tanks and staffers in political institutions who spend a large share of their time thinking about AI)

- Other educated people who influence public debates, such as journalists or investors

Media coverage of this paper suggests it may be influential among those audiences.

core_admiral @ 2024-10-25T04:45 (+1)

Thanks for writing this, I really appreciate your insight. If or whenever it wouldn't cost you too much time, I think a list of the others among your 10 best economics of AI papers from the past few years could be a useful compilation for people.

Zach Stein-Perlman @ 2024-07-16T14:02 (+16)

...huh, I usually disagree with posts like this, but I'm quite surprised by the 2022 and 2023 grants.

defun @ 2024-07-16T14:10 (+1)

Agree. OP's hits-based giving approach might justify the 2020 grant, but not the 2022 and 2023 grants.