AI safety starter pack

By mariushobbhahn @ 2022-03-28T16:05 (+131)

There are a ton of good resources for AI safety out there. However, conversations with people trying to get into the field revealed that many of them don't know these materials exist. Therefore, I want to provide a very basic overview of how to start. My claim is that a person who invests ~2-3 hours a week to study the recommended material will have a good understanding of the AI safety landscape within one year (if you are already familiar with ML, you can get there in ~3 months, e.g. by doing the AGI safety fundamentals fellowship). This post is primarily designed for people who want to get into technical AI safety. While it might still be helpful for AI governance, it is not specifically geared towards it.

I want to thank Jaime Sevilla, Alex Lawsen, Richard Ngo, JJ Hepburn, Tom Lieberum, Remmelt Ellen and Rohin Shah for their feedback.

I intend to update this post regularly. If you have any feedback, please reach out.

Updates:

- Charlie wrote a long guide on careers in AI alignment. It's really really good!

- Gabriel wrote a concrete guide to level up AI safety engineering. I enjoyed it a lot.

Motivation & mental bottlenecks

When I look back on my own (still very young) journey in AI safety, the biggest bottlenecks were not the availability of resources or a lack of funding. My biggest bottlenecks were my own psychological barriers. For example, I had vague thoughts like “AI safety is so important, I’m not up for the task”, “If I dive into AI safety, I’m doing it properly: fully focused with a clear mind” (which meant I always delayed it) or “AI safety is so abstract, I don’t know where to start”. To be clear, I never explicitly and rationally held these beliefs. They were mostly subconscious but nevertheless kept me from starting earlier.

I think there are a few simple insights that make joining the field much easier.

- AI safety is still in its infancy: If you believe that AI safety might be one of the biggest or even the biggest problem of humanity, then the “correct” number of people working on it is at least 1000x larger than it currently is. The position for which you are a good fit might not exist yet, but it probably will in the future, possibly of your own creation.

- AI safety is multi-disciplinary: AI safety doesn’t just require technical people. It needs social scientists, it needs community builders to grow the field, it needs people in governance, it needs people thinking about the bigger picture, and many more. You don’t need to be good at math or coding to be able to contribute meaningfully.

- You can contribute relatively quickly: The field is young and there are a ton of low-hanging fruit waiting to be picked. There are organizations like the AI Safety Camp that facilitate these projects, but you can usually find small online projects throughout the year.

Lastly, there are some simple tips that make the start of your journey easier and more fun:

- Find others & get help: Working with others is more fun, you learn faster, and it creates positive accountability. I can generally recommend it, and the AI safety community is usually very friendly and helpful as long as you are well-intentioned. If you realize that you are emotionally bottlenecked, ask others about their experiences and let them help you; you are not the only one struggling. To find a community more easily, check out the resources of AI Safety Support, such as the AI safety Slack (see below).

- Build habits: In general (not only for AI safety), building habits is a good way to get into a field. You could start by setting a daily alarm that reminds you to check the Alignment Forum, the EA Forum or LessWrong. You don’t even have to read an article; just skimming the headlines is enough to build up the habit.

- Don’t overload yourself: You don’t need to read everything all the time and it’s completely fine to ignore the latest trend. In many ways, this document is intended to provide a feeling of “If I do some of this, I’ll be just fine”.

- Choose your speed: some people need to take it slow, others want to dive in and not think about anything else. If you want to speedrun this document, just do it. If you want to take it slow, that's also fine.

Resources

The first five resources are sorted roughly by how much background knowledge they require; the rest are harder to order.

- Brian Christian’s The Alignment Problem (book): beginner-friendly and very good to get a basic overview of what alignment is and why we need it.

- AI safety from first principles by Richard Ngo: Good dive into AI safety without requiring much background knowledge.

- The Alignment newsletter by Rohin Shah: can contain technical jargon but provides a good sense of “what’s going on in AI safety”.

- The AGI safety fundamentals fellowship designed by Richard Ngo: It covers the most important topics in AI safety and I really liked it. It requires a bit of background knowledge but not much. If you just missed the yearly run, consider doing it outside of the official program with others. If you do just one thing on this list, it should be this program.

- Rob Miles’ YouTube channel: Rob claims that the videos require no background knowledge, but some probably do. The videos are really good.

- The Alignment Forum (especially the recommended sequences on the landing page), EA Forum and LessWrong: Don’t read all articles. Skim the headlines and read those that sound interesting to you. If none sound interesting, come back another time. You can ask more experienced people to send you recommendations whenever they stumble across an article they like. Forum posts can be very technical at times, so I would recommend this more to people who already have a basic understanding of ML or AI safety.

- Use the AI Safety Support resources: They offer a newsletter, 1-on-1 career coaching, a list of resources, a health coach and feedback events. Furthermore, you can read JJ’s posts on “Getting started independently in AI safety” and “The application is not the applicant”.

- Listen to podcasts: I can recommend 80K podcast episodes #107 and #108 (with Chris Olah), #92 (with Brian Christian), #90 (with Ajeya Cotra) and #62 and #44 (with Paul Christiano). A bit of background knowledge helps but is not necessary. There is also the AXRP podcast which is more technical (start with the episode with Paul Christiano).

- The AI Safety Camp: Every year, a new batch of AI safety enthusiasts works on multiple practical AI safety problems. Some background knowledge is helpful or required, so make sure you have a decent understanding of the field before applying.

- The ML safety community: They have an intro to ML safety, a resources page, multiple competitions, the ML safety scholars program and much more. They are a young organization and I expect them to create more great resources over the coming years.

- Various Slack channels or WhatsApp/Telegram/Signal groups: If you want to connect with other AI safety enthusiasts, ask around and see if you can join. These groups often share resources or read papers together (not mandatory).

- Write posts on the forums (AF/EA/LW): Posts don’t have to be spectacular---a good summary of a relevant paper is already valuable. Writing in groups can be a lot of fun. I wrote a 5-minute guide on writing better blog posts. John Wentworth’s post on “Getting into independent AI safety research” contains a lot of valuable knowledge, not only for writing blog posts.

- In case you want to contribute as an engineer or research engineer, you should check out “AI Safety Needs Great Engineers” and the 80K podcast episode with Catherine Olsson and Daniel Ziegler.

- Have a 1:1 careers conversation with 80K. They can talk to you at an early stage, when you're trying to work out whether to explore AI safety at all, or later on, when you've already tried out a few things. They're probably most useful early on, since getting help sooner might let you resolve some of your uncertainties faster. If you are uncertain whether you are a good fit, just apply and they will help you out. They are really nice and friendly, so don’t be afraid of applying.

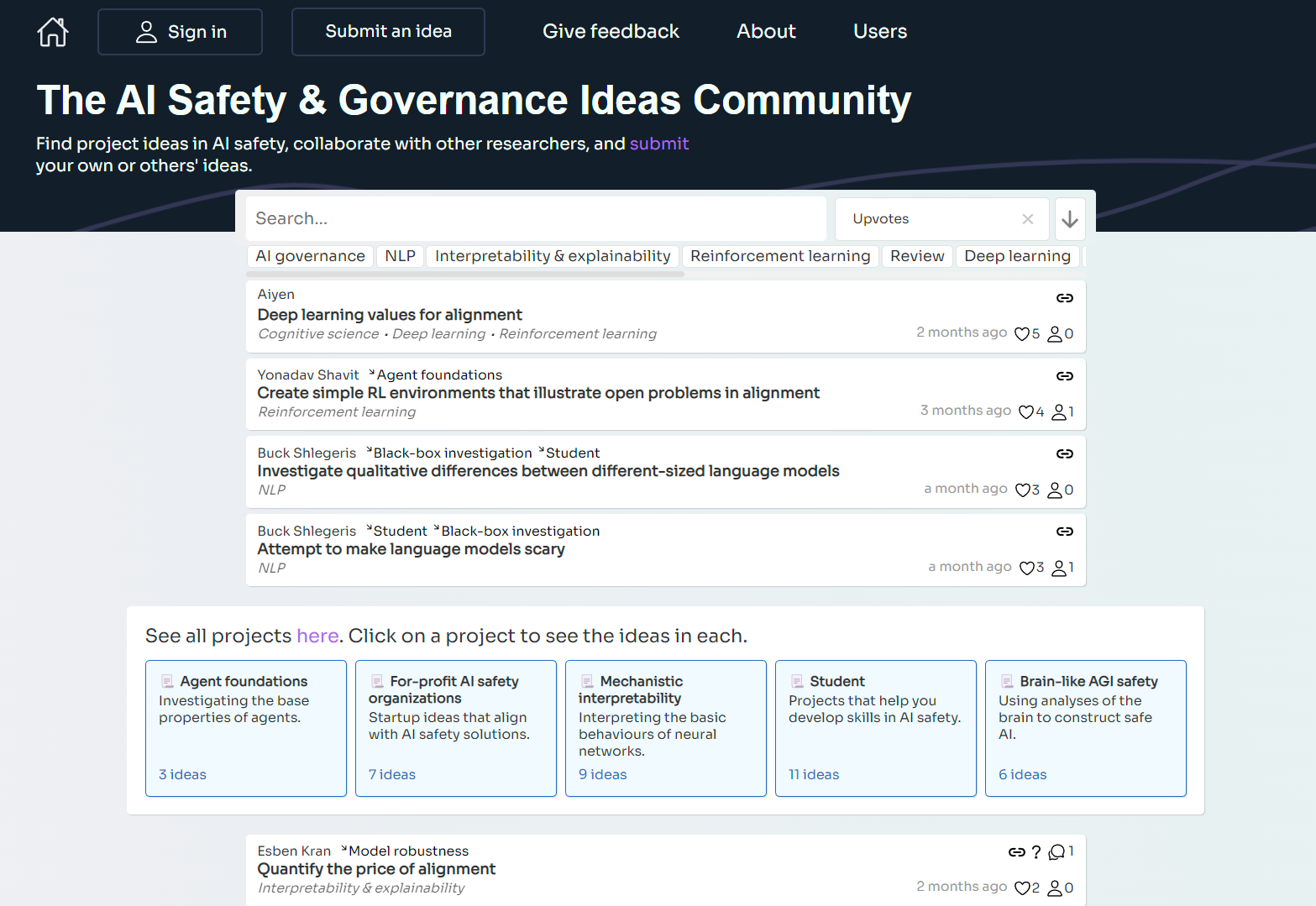

- Check out the projects on AI safety ideas. It's basically a project board for AI safety projects. Many ideas don't require much background, so you can contribute with relatively little prior knowledge.

Funding

If you want to contribute more seriously to a project, there is a good chance you can get funded for it, and you should have a low bar for applying. Even a rejection is helpful because it provides feedback for improvement. Which funding fits you depends a bit on your background, current career stage and exact topic, but it’s important to know that the opportunities exist (copied from Richard Ngo’s Careers in AI safety):

- Open Philanthropy undergraduate scholarship

- Open Philanthropy early-career funding

- Long-Term Future Fund

- FTX Future Fund (discontinued after the collapse of FTX)

- Survival and Flourishing Fund

- The Center on Long-Term Risk Fund (CLR Fund)

- Future of Life grants

- Open Philanthropy AI Ph.D. scholarship

I hope this little overview helps. Let me know if you benefited from it or if you have suggestions for improvement. If you have more questions on AI safety, feel free to reach out or hit me up at any EA event I attend. I can’t promise that I’ll always find the time, but I’ll try my best.

If you want to be informed about new posts, you can follow me on Twitter.

L Purcell @ 2022-07-01T03:35 (+9)

Thanks mariushobbhahn, very useful. You summarised all my own hesitations.

I have bookmarked a number of resources, signed up for a newsletter, and have more clarity over what my next steps should be (which includes seeking more help).

Thanks for taking the time to put this together. I definitely benefitted.

mariushobbhahn @ 2022-07-01T06:39 (+2)

Great. Thanks for sharing. I hope it increases accountability and motivation!

Yonatan Cale @ 2022-03-28T16:34 (+8)

Thank you, this is very useful!

I expect to refer lots of people to this post.

jskatt @ 2022-08-11T04:05 (+3)

This list is great. I recommend adding the new Intro to ML Safety course and the ML Safety Scholars Program. Or maybe everyone is supposed to read Charlie's post for the most up-to-date resources? It's worth clarifying.

mariushobbhahn @ 2022-08-11T07:51 (+2)

Added it. Thanks for pointing it out :)

michaelchen @ 2022-03-30T13:24 (+3)

you can usually find small online projects throughout the year

Where?

Callum McDougall @ 2022-06-15T11:35 (+9)

Update on the project board thing - I'm assuming that was referring to this website, which looks really awesome!

https://aisafetyideas.com/

mariushobbhahn @ 2022-06-15T13:44 (+8)

Nice. It looks pretty good indeed! I'll submit something in the near future.

Charles He @ 2022-06-17T00:21 (+2)

Wow, the site mentioned looks fantastic

(I don't know anything about AI safety or longtermism), but just to repeat the discussion above, this site looks great.

- It has a PWA feel (if you click and interact, the response is instant, like a native app on an iPhone, not a website with a click delay).

- The content seems really good.

There are probably other considerations (founder effects, seeding of content), but this implementation seems like a great pattern for community brainstorming for other causes.

Imagine getting THL staff and other EAs going on a site for animal welfare or global health.

mariushobbhahn @ 2022-03-31T11:39 (+1)

There is no official place yet. Some people might be working on a project board. See comments in my other post: https://forum.effectivealtruism.org/posts/srzs5smvt5FvhfFS5/there-should-be-an-ai-safety-project-board

Until then, I suggest you join the slack I linked in the post and ask if anyone is currently searching. Additionally, if you are at any of the EAGs and other conferences, I recommend asking around.

Until we have something more official, projects will likely only be accessible through these informal channels.

Rahela @ 2022-04-04T07:19 (+2)

Thanks mariushobbhahn this is helpful. I just signed up to the newsletter.

AkshatN @ 2023-10-10T17:05 (+1)

The AGI safety fundamentals fellowship link is broken, and the amount of googling I've done to find the actual page suggests that the website is actually down.

Is there any way something can be done about this?

Lorenzo Buonanno @ 2023-10-10T17:57 (+2)

Maybe it's this? https://aisafetyfundamentals.com/