Statement on Pluralism in Existential Risk Studies

By GideonF @ 2023-08-16T14:29 (+28)

This is a copy of the English version of a statement released yesterday by a group of academics, which can be seen at https://www.existentialriskstudies.org/statement/. The Spanish translation, by Mónica A. Ulloa Ruiz, will be put on the forum soon.

This statement was drawn up by a group of researchers from a variety of institutions who attended the FHI and CSER Workshop on Pluralisms in Existential Risk Studies from 11th-14th May 2023. It conveys our conviction that the community concerned with existential risk needs to be pluralistic, containing a diversity of methods, approaches and perspectives that can foster difference and disagreement in a constructive manner. We recognise that the field has not yet achieved this necessary pluralism, and we commit to bringing it about. A list of researchers who support the statement is included at the end. In the spirit of pluralism, whilst not all of us signatories necessarily endorse every point in the statement, we stand sufficiently behind its thrust that we think it is well worth making.

We believe it is important that the community concerned with existential risk is well adapted to its purposes, and that pluralism is necessary for this community to achieve various epistemic, ethical and pragmatic goals that each of us hold to be important.

Existential risk, as a concept, is defined in a multitude of ways in our community. Some in this group take it to be ‘risks of human extinction, societal collapse and other events associated with these’[1]; others’ conceptions involve ‘risk of permanent loss of humanity’s potential’[2] or the risk of the loss of large amounts of the expected value of the future[3]. Some see it as an inseparable part of the broader class of risks to the existence of individuals, communities or specific 'worlds'[4]. While differing definitions of existential risk can and do lead to relevant divergence, it also seems that we (the signatories) regularly have enough shared interest in working together and learning from each other (even through our disagreements) that being part of the same, pluralistic community appears to be mutually beneficial. This needn’t mean we will come to the same conclusions, or always agree; rather, it suggests that we would all benefit from the existence of a healthy, pluralistic intellectual ecosystem concerned with issues around the plurality of conceptions of existential risk.

We disagree, or take different approaches, on a lot. We differ on the methods we think best for studying existential risk[5]. We differ on the epistemic aims of such research[6]. We differ on what should be accepted as evidence[7] and how this evidence ought to be interpreted[8], evaluated[9] and aggregated[10]. We differ on what core assumptions should underpin our visions of existential risk[11] and how to research them[12]. We differ on our visions of the future. We differ as to what is ethically important or acceptable[13]. We differ on our politics and how this relates to our thinking and practice with regard to existential risk. We have different methodologies, epistemologies and ethics.

These differences do not emerge from nowhere; they are both a feature of intellectual differences between schools of thought and research cultures, and a feature of deeper global structural factors such as inequality and deep-rooted cultural difference[14]. Indeed, one of the reasons these deep disagreements are often obscured is that the same global structures of inequality also lead to many important voices that express such different perspectives being absent from the field. Thus, any movement towards pluralism ought to acknowledge these structural forces and the role they have played in the development of the field and in the production of existential risk thus far. Preventing the reproduction of these structures will be essential to broadening the conversation around existential risk; why such broadening is important for reducing existential risk is discussed below.

Differences are a strength. We think our community can be better by each of our own lights through a plurality of approaches, even those about which we disagree amongst ourselves. Further, we suggest that the power to confer support for different approaches should be distributed among the community rather than allocated by a few actors and funders, as no single individual can adequately manifest the epistemic and ethical diversity we deem necessary. This position is justified by the following reasons.

Firstly, we recognise the high level of uncertainty[15] involved in studying a large class of existential risks. Much of our reasoning is based on novel ideas and unexplored models of the world, often with sparse or heavily contested evidence to support the assumptions that fundamentally underpin them[16]. Whilst it is possible to make evidence-based claims, the evidence seems so sparse that no decisive case can be made for a single approach. Given the sparse evidence available, it may be equally reasonable to base enquiry on a variety of different assumptions. As such, it is prudent to adopt a pluralistic approach, allowing us to ‘hedge our bets’ and develop a variety of lines of inquiry. Moreover, under such uncertainty scientific creativity is important[17], and creativity thrives better in pluralistic communities than in homogeneous ones. We are still so uncertain about which questions to ask, let alone the correct methods and assumptions to underpin the process of answering them, that taking many approaches appears to us the correct course of action.

Secondly, disagreement can be constructive, so a pluralistic community can allow us to make discoveries or develop ideas none of us individually would have reached. This is not to suggest that these new ideas come from convergence towards a singular truth, but rather that the process of disagreement, and the exposure to new concepts, can play a key role in the epistemic process. Creating an intellectual ecosystem that encourages cross-fertilization through encounter and disagreement may help to clarify useful courses of action for mitigating existential risk, whilst avoiding premature convergence; indeed, convergence on a single course of action may be imprudent given our epistemic situation[18].

Thirdly, having a pluralistic community can be instrumentally useful, allowing us to understand how to reduce existential risk from several perspectives and to build coalitions to combat risk. Much of existential risk reduction is political[19], so diverse and broad-based coalitions can give us a useful political basis for action. Many different types of existential risk may have similar political causes[20], and a pluralistic community may open up new avenues for collaboration with those with whom we each have common cause[21]. This is not to say this will always happen (indeed, an important aspect of pluralism is its openness to a proliferation of possibilities), but having a pluralistic community will allow for fruitful collaboration when unified political aims are present. Thus, where conditions allow, the pluralistic community would be the site for incompletely theorised agreements on necessary political action.

Finally, there are also reasons to want a community where a plurality of normative ideas are represented. While many of us are deeply concerned with the idea of building futures consistent with each of our best ethical theories, we all have at least some degree of ethical uncertainty about which views are best. It may be that different ethical theorists are all “climbing the same mountain”, or it may be that they are deeply and irreconcilably divided. However, none of us are able to answer this question with certainty (indeed, many of us think it is unanswerable). Nevertheless, existential risk, and how we should respond to it, is deeply bound up with our normative beliefs about what would be good and bad[22]. Highlighting, collaborating with or respecting people of different ethical viewpoints is therefore vital to working together within a pluralistic community. Indeed, having a variety of ethical perspectives heard and empowered may be vital to the achievement of justice within existential risk, an ethical priority that for many of us is crucial.

We want to acknowledge, draw benefit from, and support the existing pluralism of the community, and to actively cultivate a greater plurality of perspectives, methodologies, worldviews, and values. In the academic community, this will mean exploring and encouraging disciplines that are often neglected in studying existential risk, such as science and technology studies (STS), political science, peace and conflict studies, archaeology, anthropology and sociology. It may mean utilising existing methods from a wider range of disciplines and attempting to innovate our own methods. It also means genuine respect for and inclusion of those people who are outside of groups typically considered ‘experts’. This will also likely require more care in the publication practices of those engaged in research, including greater consideration of, and conversation about, the appropriate role of different fora in the epistemic process (including peer-reviewed publications) than has been the case to date. A greater focus on creating a research culture that actively promotes creativity and diversity of evidence-based thinking will be key.

Moreover, all of this entails greater geographic, socio-economic, cultural, gender, racial and ability diversity, both in terms of those who may have interest in being a part of the community, and those whom the community may learn from. In particular, providing opportunities for those outside the traditional centres of the community concerned with existential risk (the USA and Northern Europe) to engage as part of, or in dialogue with, the community we are creating is vitally important. This diversification and increased inclusion would only be beneficial if undertaken with mutual respect and equality, rather than by leveraging existing power imbalances to allow a particular perspective to dominate; it cannot just be on the terms of those of us with already entrenched privilege. Dialogue and openness towards those with different ethical perspectives (particularly non-Western ethical perspectives), different definitions of existential risk, and different bases from which they start reasoning about existential risk should also be a key priority; expanding the community so that we can learn from and collaborate with those from a diverse range of perspectives is ethically and epistemically important.

We recognise that this vision will have tradeoffs. A community like this will take time, money and energy to build and maintain, which could be used for other purposes. A more diverse and pluralistic community would involve those with the most influence and authority at present ceding some power within the community, and the currently dominant viewpoints ceding some of their dominance; such a process will be difficult. Engaging with a diversity of viewpoints will compromise some of the unity that exists in the field, which will inevitably lead to more conflict than at present and reduce the ability of the field to converge rapidly on a set of similar viewpoints, including around prioritisation. Nonetheless, pushing the field in the direction of greater pluralism is worth such a cost given the benefits for the epistemic and ethical health of the community in achieving its plurality of purposes.

We thus declare our support for moving the existential risk community towards greater pluralism, and acknowledge our responsibility in trying to bring this about. We have only given a rough sketch of what pluralism must entail, and hope that the process of building such a community will help concretise what it means in practice. We don’t wish to merely respect each other at a distance, but desire more active engagement, sharing ideas and disagreement with people from across the community, and empowerment of each other to take different approaches. We don’t merely wish for the perspectives of those of us with the most power to dominate, but rather to create a culture where a genuine proliferation of evidence-based insights can occur. This requires support in many different forms from different actors in the community, which we are optimistic can happen; if we are to bring about a less endangered world, it must.

Signed by:

Gideon Futerman (University of Oxford, Coordinator and principal author of this statement)

SJ Beard (Senior Research Associate, Centre for the Study of Existential Risk, University of Cambridge)

Anders Sandberg (Senior Research Fellow, Future of Humanity Institute, University of Oxford)

Paul N Edwards (Director, Stanford Existential Risk Initiative, Stanford University)

Erica Thompson (Associate Professor, Department of Science, Technology, Engineering and Public Policy, University College London)

Thomas Meier (Director, Centre for Apocalyptic and Post Apocalyptic Studies, University of Heidelberg)

Paul Ingram (Senior Research Associate, Centre for the Study of Existential Risk, University of Cambridge)

Matthijs Maas (Senior Research Fellow, Law and AI and Head of AI Research at the Legal Priorities Project)

Florian Ulrich Jehn (Senior Researcher, Alliance to Feed the Earth in Disasters)

David Thorstad (Postdoctoral Research Fellow, Global Priorities Institute, University of Oxford)

Karim Jebari (Institute for Futures Studies)

Mónica A. Ulloa Ruiz (Policy Transfer Officer, Riesgos Catastróficos Globales)

Bill Anderson-Samways (AI Governance Researcher)

Ximena Barker Huesca (King's College London)

Lin Bowker-Lonneker (University of Oxford)

Nadia Mir-Montazeri (University of Bonn)

Phillip Spillman (University of Cambridge)

Noah Taylor (Peace Studies Researcher)

[1] Beard, S., Rowe, T. and Fox, J. (2020) ‘An analysis and evaluation of methods currently used to quantify the likelihood of existential hazards’, Futures, 115, p. 102469.

[2] Bostrom, N. (2002) ‘Existential risks: analyzing human extinction scenarios and related hazards’, Journal of Evolution and Technology, 9.

[3] Cotton-Barratt, O. and Ord, T. (2015) Existential risk and existential hope: Definitions. Available at: http://files.tobyord.com/existential-risk-and-existential-hope.pdf (Accessed: 22 June 2023).

[4] Mitchell, A. and Chaudhury, A. (2020) ‘Worlding beyond “the” “end” of “the world”: white apocalyptic visions and BIPOC futurisms’, International Relations, p. 004711782094893.

[5] Such as the different methods laid out in: Beard, S., Rowe, T. and Fox, J. (2020) ‘An analysis and evaluation of methods currently used to quantify the likelihood of existential hazards’, Futures, 115, p. 102469.

[6] Including how important generating probabilistic forecasts is, the importance of specific causal-chain threat models, the utility of speculation, and more.

[7] For example, how far individual case studies can be taken as evidence.

[8] For example, whether worries about tipping points and unknown feedbacks can be taken as evidence for existential risk or not.

[9] For example, how far we should rely on the updating of individual credences by a small number of decision-makers.

[10] For example, whether we can or should adequately differentiate between GCR and XRisk, or how likely we are to identify correct threat models and hazards in advance.

[11] For example, whether looking at sub-existential catastrophes as evidence of cascades dominating will appropriately scale to existential catastrophes.

[12] For example, whether looking at sub-existential catastrophes as evidence of cascades dominating will appropriately scale to existential catastrophes.

[13] For example, with regard to how much we agree with strong longtermism.

[14] See note [4].

[15] Currie, A. (2019) ‘Existential risk, creativity & well-adapted science’, Studies in History and Philosophy of Science, 76, pp. 39–48.

[16] Sundaram, L., Maas, M.M. and Beard, S.J. (2022) ‘Seven Questions for Existential Risk Studies’, 25 May. Available at: https://doi.org/10.2139/ssrn.4118618.

[17] See note [15].

[18] See note [15].

[19] In the sense of being involved in addressing and utilising power in public life.

[20] Cotton-Barratt, O., Daniel, M. and Sandberg, A. (2020) ‘Defence in Depth Against Human Extinction: Prevention, Response, Resilience, and Why They All Matter’, Global Policy, 11(3), pp. 271–282.

[21] This has been discussed among people working on the risks and harms of AI: Stix, C. and Maas, M.M. (2021) ‘Bridging the gap: the case for an “Incompletely Theorized Agreement” on AI policy’, AI and Ethics, 1(3), pp. 261–271.

[22] Cremer, C.Z. and Kemp, L. (2021) ‘Democratising Risk: In Search of a Methodology to Study Existential Risk’. Available at: https://papers.ssrn.com/abstract=3995225.

quinn @ 2023-08-16T17:25 (+67)

Downvoted because I'm allergic to applause-lighting pluralism: I would like whitelists and blacklists. Tell us which things are underrepresented and deserve another look, and which things are underrepresented and should remain underrepresented. Be specific.

Reminder that it's ok to feel burned out by diversity complaints not being totally honest:

But in this case your criticism is not “effective altruism should be more inclusive of different political views,” it’s “effective altruism’s political views are wrong and they should have different, correct ones,” and it is dishonest to smuggle it in as an inclusivity thing.

I'm not saying this statement/project in particular smells especially nontrustworthy in this way (I in fact think it's better than most things in the reference class!), but it's worth pointing out that my prior is pretty tuned and it would take a lot for me to get excited about this sort of thing.

John G. Halstead @ 2023-08-16T20:19 (+4)

I agree with this. All appeals to so-called 'diversity' I have seen on the forum have actually been appeals for EA to be folded into other left-wing social movements. I don't think these arguments are transparent. Folding EA into extinction rebellion, which as I understand is the main aim of heterodox CSER-type approaches in EA, is not a good way to increase diversity in the marketplace of ideas.

'Differences are a strength' implies 'let's have a lot more homophobes, Trump supporters, and people who want China to invade Taiwan'. You don't actually want this. What you actually want is diversity in the form favoured by the usual preoccupations of left-wing thought: identity diversity and more left-wing environmentalism.

Sean_o_h @ 2023-08-17T10:36 (+26)

John's comment points to another interesting tension.

CSER was indeed intended to be pluralistic and to provide space for heterodox approaches. And the general 'vibe' John gestures towards (I take it he's not intending to be fully literal here; please correct me if I'm misinterpreting, John) is certainly more present at CSER than at other Xrisk orgs. It is also a vibe that is regularly rejected as a majority position in internal CSER full-group discussions. However, some groups and lineages are much more activist and evangelical in their approach than others. Hence they crowd out other heterodoxies and create an outsized external footprint, which can further make it difficult for other heterodoxies to thrive (whether in a centre or community). The CSER-type heterodoxy John and (I suspect) much of EA is familiar with is one that much of CSER is indifferent to, or disagrees with to various degrees. Other heterodoxies are... quieter.

In creating a pluralistic ERS, some diversities (as discussed by others) will be excluded from the get-go (perhaps for good reasons; I do not offer comment on this). Of those included/tolerated, some will be far better equipped with the tools to assert themselves. Disagreements are good, but the field on which disagreements are debated is often not an even one. Figuring out how to navigate this would be one of the key challenges for the approach proposed, I would think.

Gideon Futerman @ 2023-08-17T12:44 (+15)

In response to your first point, I think one of the hopes of creating a pluralistic xrisk community is that different parts of the community actually understand what work each is doing and what perspectives each holds, rather than caricaturing or misrepresenting them (for example, I've heard people outside EA assuming all EA XRisk work is basically just what Bostrom says) or simply not knowing what others have to say. Ultimately, I think the workshop that this statement came out of did this really well, and so I hope that if there is a desire to move towards a more pluralistic community (which, perhaps judging from this forum, there isn't), we would better understand each other's perspectives and why we disagree, and gain value from this disagreement. One example here is that I think I personally have gained huge value from my discussions with John Halstead on climate, and from really trying to understand his position.

I agree on the last paragraph, and it is definitely a tension we will have to try to resolve over time. This is one of the reasons we wrote that "we suggest that the power to confer support for different approaches should be distributed among the community rather than allocated by a few actors and funders, as no single individual can adequately manifest the epistemic and ethical diversity we deem necessary", which would hopefully go some way to making sure that more forms of pluralism can assert themselves. Obviously, though, this won't be perfect, and we will have to create spaces where voices that may previously not have been heard, because they don't have all the money or aren't loud and assertive, would get heard; this will be hard, and will definitely be difficult for someone like me who is clearly quite loud and likes to get my opinion out there.

NB: I would also like to comment, and I really don't want to be antagonistic to John as I do deeply respect him, but I do think his representation of 'CSER-type heterodoxy', or at least how he's framed it with his two chief examples being me and Luke, is a misrepresentation. I know this may be arguing back too much, but given he's said I believe something I don't, I think it's important to put the record straight (I'd hope it's unintentional, although we have actually spoken a lot about my views).

Richard Ren @ 2023-08-17T12:42 (+9)

This comment seems to assume very bad faith from the original poster (e.g. "these claims smell especially untrustworthy" and "I don't think these arguments are transparent."). The claims made in these comments rest on very creative interpretations of the original post.

quinn @ 2023-08-17T16:34 (+6)

Trump supporters and homophobes are easy to rule out if you assume that the only way to be valid or useful in expectation is to go to college. Which, fine, whatever, but it does violate the spirit of the thing in a way that I'd hope is obvious.

Gideon Futerman @ 2023-08-16T21:13 (+9)

Firstly, John, before I address the (interesting and useful) substantive point you make here, I think the first paragraph is blatantly false. You accuse all these signatories and authors of not being transparent. This is a deeply disrespectful accusation of bad faith, which is clearly untrue. Please rescind this statement or I will be reporting you to the forum team for breaking forum norms.

I don't speak for every signatory, although I would urge you to look at the list of signatories; does this look to you like a group of people who want to fold EA into XR? The letter is explicitly talking about ERS as a field and not just EA; it wants to create a more pluralistic field, with EA engaging as a part of this more pluralistic and diverse field, not to replace EA with this field. It is also not about making ERS just CSER-type approaches by any means. Also, please point me to where in the letter you see any calls for things that look at all like 'Folding EA into extinction rebellion'; we are talking about a plurality of methods to study XRisk, a plurality of individuals and visions of the future to do this, and a community that best supports this, and I can't see this misreading of our letter coming from anywhere other than pattern matching to other pieces rather than actually engaging with what is said. Please correct this.

You are also simply wrong that 'Folding EA into extinction rebellion' is the 'main aim of heterodox CSER-type approaches to EA'; the point of this letter isn't to advance CSER, but I think this blatant misinformation ought to be corrected. How are 'Governing Boring Apocalypses' (https://www.sciencedirect.com/science/article/pii/S0016328717301623) or 'Classifying Global Catastrophic Risks' (https://www.sciencedirect.com/science/article/pii/S0016328717301957), two classic works of the CSER-type approach, folding EA into extinction rebellion? Even the work on climate and xrisk, such as 'Climate Endgame' or 'Climate Change's contribution to GCR', has very little to do with XR. Again, unless you can provide me with evidence for your point that is not a gross misrepresentation of a large body of work by a large number of people, please rescind and correct your statement.

Onto your more substantive point: I basically agree this is an issue with calls for diversity; ultimately the tent has to stop somewhere, and that somewhere will clearly be politically (though not necessarily party-politically) charged. I agree, I don't want racists, homophobes or ableists in the ERS community. I think negotiating what the boundaries of this community are and should be is a really difficult task, and indeed, in many ways I think you undersell it. Why shouldn't we include people who think that God will bring about the apocalypse? Indeed, focusing on a variety of methods was also a key part, so how do we constrain what methods we should use, and how do we decide what to do if two methods disagree? I think this problem is tricky, and is definitely something that will need to be iterated on, negotiated, researched and understood conceptually more. But you here throw the baby out with the bathwater; it's a deeply unsatisfying solution when we have a good reason to have pluralism of method, vision of the future, epistemology and also greater diversities of many different factors to suggest that just because it may be possible to justify the inclusion of those you don't like on this logic, then we have to throw out the entire argument full stop.

John G. Halstead @ 2023-08-17T09:00 (+10)

Sorry but I won't rescind my comment. I don't know whether it is conscious lack of transparency or not, but it is not transparent, in my opinion. This is also indicated by Quinn above, and in Larks' comment. The dialectic on these posts goes:

1. A categorical statement is made that 'diversity is a strength' or 'diversity of all kinds is always good'.

2. I or someone else presents a counterexample, e.g. noting that there are lots of homophobes, nationalists, Trump supporters etc. who are underrepresented in EA.

3. The OP concedes in the comments that diversity of some kinds is sometimes bad, or doesn't respond.

4. A new post is released some time later repeating 1.

I have made point 2 to you several times on previous posts, but in this post you again make a categorical claim that 'diversity is a strength' and that we need to move towards greater pluralism, when you actually endorse 'diversity is sometimes a strength, sometimes a weakness'. For example, in this post you say we need to take on 'non-Western perspectives', but among very popular non-Western perspectives are homophobia and the idea that China should invade Taiwan, which you immediately disavow in the comments.

But you here throw the baby out with the bathwater; it's a deeply unsatisfying solution when we have a good reason to have pluralism of method, vision of the future, epistemology and also greater diversities of many different factors to suggest that just because it may be possible to justify the inclusion of those you don't like on this logic, then we have to throw out the entire argument full stop.

I think the issue here is that it is incumbent upon you to provide criteria for how much diversity we want, otherwise your post has no substantive content because everyone already agrees that some forms of diversity are good and some are bad. The main post says/strongly gives the impression that more diversity of all kinds is always good because there is something about diversity itself that is good. In the comments, you walk back from this position.

Correct me if I am wrong, but my understanding is that diversity is being used to defend the proposition that EA should engage in non-merit-based hiring that is biased with respect to race, gender, ability, nation, and socioeconomic status.

all of this entails greater geographic, socio-economic, cultural, gender, racial and ability diversity, both in terms of those who may have interest in being a part of the community, and those whom the community may learn from.

I think this would be unfair, and strongly disagree that this would 'create a culture where a genuine proliferation of evidence-based insights can occur'. The diversity considerations you mention in the post also cannot defend it since they cannot distinguish good and bad forms of diversity.

My claim was "Folding EA into extinction rebellion, which as I understand is the main aim of heterodox CSER-type approaches in EA". I would guess that you and (e.g.) Kemp would be happy with this, for instance. CSER researchers like Dasgupta have co-authored papers with Paul Ehrlich, who I think would also endorse this vibe, so I would guess Dasgupta is at least sympathetic. I basically think what I said is broadly correct, and I don't think there is much reason for me to correct the record. I would actually be interested in some sort of statement/poll from different groups in x-risk studies about their beliefs about the world.

Gideon Futerman @ 2023-08-23T23:11 (+20)

Hi John,

Sorry to revisit this, and I understand if you don't. I must apologise if my previous comments felt a bit defensive on my part; I do feel your statements about me were untrue, but I think I now have more clarity on the perspective you've come from and some of the possible baggage brought to this conversation, and I'm truly sorry if I've been ignorant of relevant context.

I think this comment is more going to address the overall conversation between us two on here, and where I perceive it to have gone, although I may be wrong, and I am open to corrections.

Firstly, I think you have assumed this statement is essentially a product of CSER, perhaps because it has come from me, who was a visitor at CSER and has been critical of your work in a way that I know some at CSER have been. [I should say, for the record on this, I do think your work is of high quality, and I hope you've never got the impression that I don't. Perhaps some of my criticisms last year of the review process your report went through came across as poor quality (I can't remember what they were and may not stand by them today), but if so, I am sorry.] Nonetheless, I think it's really important to keep in mind that this statement is absolutely not a 'CSER' statement; I'd like to remind you of the signatories, and whilst not every signatory agrees with everything, I hope you can see why I got so defensive when you claimed that the signatories weren't being transparent and were actually attempting to just make EA another left-wing movement. I tried really hard to get a plurality of voices into this document, which is why such an accusation offended me, but ultimately I shouldn't have got defensive over this, and I must apologise.

Secondly, on that point, I think we may have been talking about different things when you said 'heterodox CSER approaches to EA.' Certainly, I think Ehrlich and much of what he has called for is deeply morally reprehensible, and the capacity for ideas like his to gain ground is a genuine danger of pluralistic xrisk, because it is harder to police which ideas are acceptable or not (similarly, I have received criticism because this letter fails to call out eugenics explicitly, another danger). Nonetheless, I think we can trust that, as a more pluralistic community develops, it would better navigate where the bounds of acceptable and unacceptable views and behaviours lie, and that this would be better than us simply stipulating them now. Maybe this is a crux on which we/the signatories and much of the comments section disagree. I think we can push for more pluralism and diversity in response to our situation whilst trusting that the more pluralistic ERS community will police how far this can go. You disagree and think we need to lay this out now, as otherwise it will either a) end up with anything goes, including views we find morally reprehensible, or b) mean EA is hijacked by the left. I think the second argument is weaker, particularly because this statement is not about EA, but about building a broader field of Existential Risk Studies, although perhaps you see this as a bit of a Trojan horse. I understand I am missing some of the historical context that makes you think it is, but I hope that the signatories list may be enough to show you that I really do mean what I say when I call for pluralism.

I also must apologise if the call for retraction of certain parts of your comment seemed uncollegiate or disrespectful to you; this was certainly not my intention. I did, however, feel that your painting of my views was incorrect, and thought you might, in light of this, be happy to change it; given you are not happy to retract, I assume you are making the argument that these are in fact my underlying beliefs (or that I am being dishonest, although I have no reason to suspect you would say this!).

I think there are a few more substantive points we disagree on, but to me this seems like the crux of the more heated discussion, and I must apologise that it got so heated.

John G. Halstead @ 2023-08-25T19:22 (+36)

Thanks for these comments and for the discussion. I do genuinely appreciate discussing things with you - I appreciate the directness and willingness to engage. I also appreciate that given how direct we both are and how rude I sometimes am/seem on here, it can create tension, and that is mainly my fault here.

I think my cruxes are:

I suppose my broader point is that EA is <1% of social movements 'trying to do social good' in some broad sense. >98% of the remainder is focused on broadly 'do what sounds good' vibes, with a left wing valence, i.e. work on climate change, rich country education, homelessness, identity politics type stuff etc. Over the years, I have seen many proposals to make EA more like the remainder, or even just make it exactly the same as the remainder, in the name of diversity or pluralism.

This strikes me as an Orwellian use of those terms. I don't think it would in any way create more pluralism or diversity to have EA shift in the direction of doing that kind of stuff. EA offers a distinctive perspective and I think it is valuable to have that in the marketplace of ideas to actually provide a challenge to what remains the overwhelmingly dominant form of thinking about 'trying to do good'.

I also view the >98% as very epistemically closed; I don't think they are a good advert for an epistemic promised land for EAs.

There is a powerful social force that I do not understand which means that every organisation that is not explicitly right wing eventually becomes left wing, and I have seen that dynamic at play repeatedly over the last 13 years, and I would view this as the latest example. EA is not focused on areas I would view as particularly left or right valenced at the moment.

I am also very opposed to efforts to make hiring decisions according to demographic considerations. I think the instrumental considerations enumerated for doing this are usually weak on closer examination, and I think the commonsense idea that people who do best on work-related hiring criteria will be best at their job is fundamentally correct, and the reasons it is fundamentally correct are obvious. The idea that implicit bias against demographic groups could be driving demographic skews in EA also strikes me as extremely implausible. It is violently at odds with my lived experience of being on hiring panels or knowing about them at other organisations, where there is a very strong explicit bias against the typical EA demographic. The idea that implicit bias could be strong enough to overcome this is not credible.

I am aware that I am setting my precious social capital alight in making these arguments (which is, I think, a lesson in itself).

Gideon Futerman @ 2023-08-17T09:24 (+4)

Once again, I think the accusation that we are not being transparent is deeply disingenuous.

If you think that saying 'diversity is a strength' is equivalent to saying 'diversity is always a strength and there are no problems with increasing diversity in any way', then I can see your concern; but I'm pretty confused why you assume this is what we mean, as it is far from the common usage of the phrase. But yes, I agree that even if our epistemic situation demands diversity, there are ways this could go wrong, and deciding 'where the tent stops' is not an easy problem. Whilst it is a very important conversation to have and to negotiate, I too often think that having this conversation in response to any call to diversify ends up doing much more harm than good.

Once again, this post is not talking about EA, and I'm not sure it's particularly advocating for 'non-merit based' practices (some signatories may agree, some may not). Examples of initiatives that could increase demographic diversity include efforts like Magnify Mentoring, doing more outreach in developing countries, funding initiatives across a broader geographic distribution, or even improving the advertising of projects and job positions. But sure, if we think increasing demographic diversity is important, we might want to have a conversation about other things that can be done.

Also, much of the diversity we speak about is pluralism of methods, core assumptions etc, which only has something to do with 'merit' if you are already judging from a very particular perspective, and this singular perspective is one of the things we are arguing against.

On your final point, you have entirely misrepresented my position, and I am shocked, given the conversations we have had, that you would come to this conclusion about my work. I'm also pretty surprised this would be your conclusion about Luke's work, which has included everything from biosecurity work for the WHO to work on AI governance and climate change, but I don't know how much of his stuff you're reading. I can safely say Luke disagrees that ERS should basically just be XR. I know far less about Dasgupta's work. Also, I really don't understand how we can be seen as fully representative of CSER-style xrisk work either. I don't quite understand how you can claim people hold beliefs, be contradicted, then fail to give evidence for your point whilst maintaining that you are right.

quinn @ 2023-08-17T10:07 (+13)

and to me is far from the common usage of the phrase

It's pretty lowest common denominator to say "you should infer that we mean the good stuff and not the bad stuff, since we all intuitively agree on commonsensical differences between good and bad". Affordable housing, degrowth, etc. Diversity doesn't have to be one of those!

David Mathers @ 2023-08-17T15:55 (+2)

Sometimes "currently we should have more of this rather than less, there will be (non-edge) cases where the cost of more is worth it" can be reasonably obvious, even if it's not obvious how much more, or what the least costly way to get more is, and for some specific proposals it's unclear whether the benefits outweigh the costs.

Gideon Futerman @ 2023-08-16T22:22 (+2)

Hi Quinn,

I think you may have strawmanned the case quite a lot here, likely unintentionally (so sorry if the strawman accusation comes off harsh). So let me clarify:

- This statement is about ERS, not about EA. We want to make a broad, thriving field which EA could, and I hope would, be a part of creating.

- I basically think this is wrong. Sure, I, for example, don't want racists in my community (and I spoke about this to John). But this is a genuine attempt to make a community with a plurality of methods, visions of the future and ways of doing things. We explicitly don't want agreement, and we say as much. If you look at the signatories, these are people who hold a whole host of different views (although it's probably more homogeneous than I would like, actually!)

- This statement was based on a workshop, and the signatories are only drawn from there. There were large amounts of disagreement at the workshop about a bunch of things, and we definitely want a space that can sustain this. Indeed, much of the point of setting up an ERS space is to facilitate active disagreement that we can learn from, rather than shut it down and replace one political view with another. So I essentially think your comment here is wrong.

quinn @ 2023-08-17T08:52 (+17)

It's not clear to me how you can exclude racists if you "explicitly don't want agreement". Presumably there are heuristics for knowing what's overton-violating and what isn't, but you need to be specific about how you can improve what people already do. I don't think the idea of "applause lights" was strawmanning at all toward the top level post, but I see your views are more detailed and careful than that in comments.

Sorry about conflation between ERS and EA, I get it can be a different stream.

I'm not entirely incurious about certain methodological, sociology-of-science and metascience opportunities to improve the x-risk community. I just need to see the specifics! For example, I am incurious about frankfurty stuff because it tends to be rather silly, but presumably lots of people are working on peer review and career clout to fix its downsides (seems like an economics puzzle to me), and I'm very curious about that.

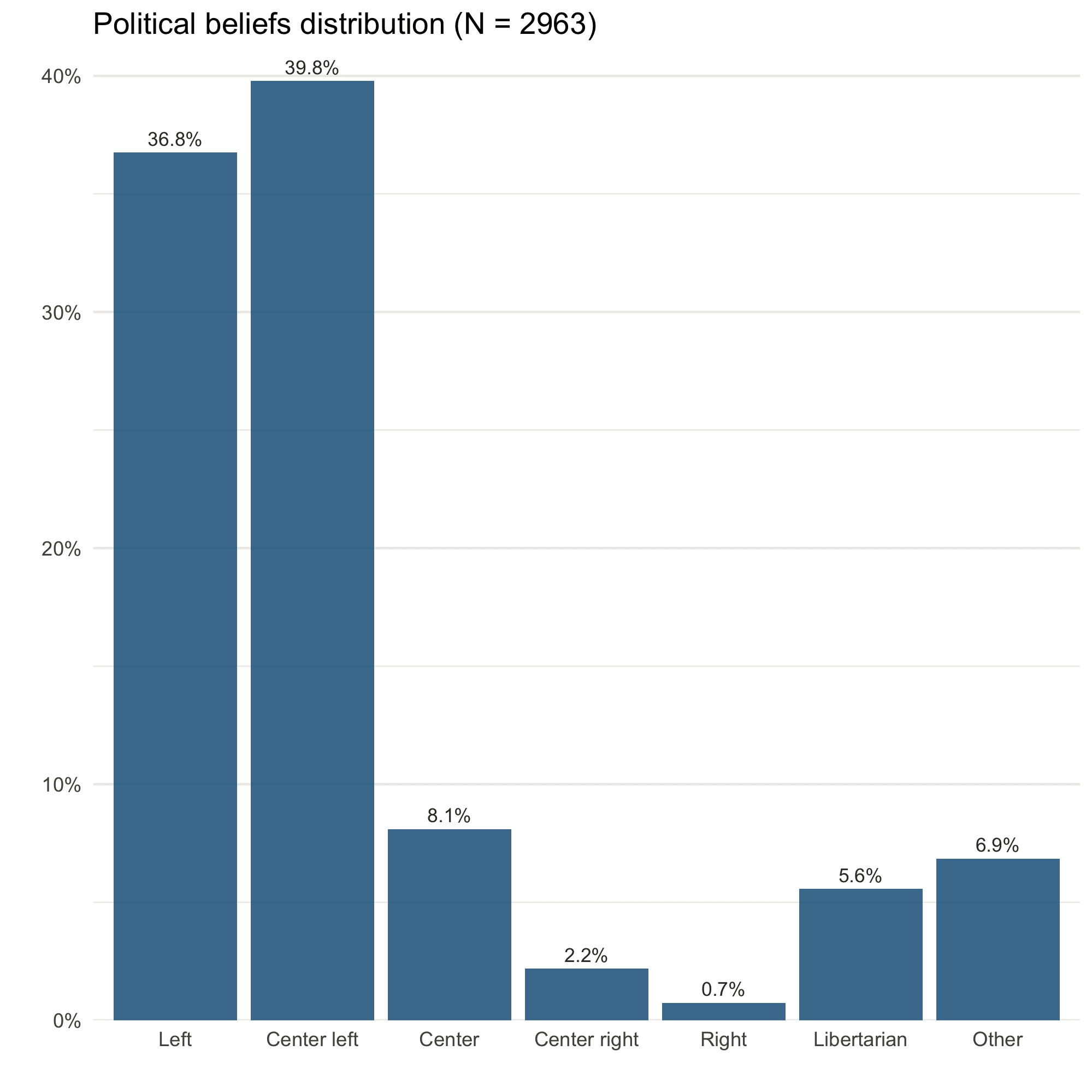

Larks @ 2023-08-16T18:57 (+46)

Thanks very much for sharing this! I definitely agree with the importance of including a broad range of political perspectives. As you know, the EA and existential risk movements are currently overwhelmingly left wing, and appear to be becoming more homogeneous over time; do you have any thoughts on what could be done to attract and include more conservatives / Republicans etc.?

Gideon Futerman @ 2023-08-16T21:50 (+7)

I think it's important not to reduce political pluralism to party politics, particularly the party politics of one country. I'm not sure a simple left-right axis can accurately conceptualise the politics of the space, and if we are to fully understand the politics of this space and how to diversify it, we really need to look more expansively.

Addressing your question directly, I don't claim to have all the answers, although I can think of places where it would definitely be useful. One potential example would be to develop a political philosophy of xrisk reduction based on Burkean ideas, which I think may be a useful addition to an 'agents of doom' agenda. I'm sure other intellectual currents in conservative thought could also be useful, particularly given how inherently conservative the idea of xrisk reduction is (eg see Mitchell and Chaudrey's paper; I actually agree this inherent conservatism is problematic), and so conservatives ought to have a lot to say about xrisk reduction.

John G. Halstead @ 2023-08-17T08:18 (+17)

In the post, you say "Much of existential risk reduction is political[19], so diverse and broad based coalitions can give us a useful political basis for action. Many different types of existential risk may have similar political causes[20], and a pluralistic community may open up new avenues for collaboration with those whom we each have common cause[21]." It does seem strange not to mention in the main post that apparently almost all EAs would probably vote Labour or Democrat, so clearly something is amiss here by your own lights.

Gideon Futerman @ 2023-08-17T08:46 (+8)

I do basically agree that we should have people who we would say are on the right (hence my suggestion), and I can see why my previous comment may have come across as dismissive (apologies). I just don't think breaking it down into party politics (e.g. Republican) or a left-right axis is necessarily that useful, given how broad the space is. (Again, please remember I'm not just talking about EA but about ERS, but I think your point still applies.)

Ben_West @ 2023-08-17T21:53 (+3)

I just wanted to say thanks for taking the question seriously, even though you don't fully endorse the frame!

quinn @ 2023-08-17T09:18 (+2)

what's the inherent conservatism of xrisk reduction, or the version that's salient to you, or to Mitchell/Chaudrey? I know of a few approaches. I guess Mitchell/Chaudrey might plausibly think the "everyone dying bad" frame solves the whiteness problem (i.e. someone like Ord throws in a bunch of extra stuff on top which you could accuse of a kind of WEIRD supremacy / insufficiently anticapitalist / etc). I remain slightly confused why "positive longtermism and negative longtermism" have not been adopted since I'm frequently aggressively reminded that it's a source of cruxes for different people.

FJehn @ 2023-09-23T06:29 (+3)

Just a thought here. I am not sure you can literally read this as EA being overwhelmingly left, as it depends a lot on your viewpoint and what you define as "left". EA exists both in the US and Europe. Policy positions that are seen as left, and especially centre-left, in the US would often be seen as centre or centre-right in Europe.

quinn @ 2023-08-16T17:28 (+15)

Credentialism good:

This will also likely require more care put into publication practices for those engaged in research, including greater consideration and conversation of the appropriate role of different fora in the epistemic process, including peer reviewed publications, than has currently been the case to date.

Credentialism bad:

It also means genuine respect for and inclusion of those people who are outside of groups typically considered ‘experts’.

Why does this work?

Gideon Futerman @ 2023-08-16T17:49 (+1)

This oversimplifies what is said. Suggesting that there are, and could be, useful roles for peer review and useful roles for expanding how respect is conferred is not the same as 'credentialism good' and 'credentialism bad'. They may simply have different epistemic and pragmatic roles.

quinn @ 2023-08-16T20:34 (+8)

Yeah, this is fair-- but it seems kinda not worth saying sans specifics. You're vaguing about the kinds of respect you see being denied to this that or the other person, for this that or the other reason, and that's making this that or the other decisions to be made suboptimally.

Gideon Futerman @ 2023-08-16T20:42 (+3)

I definitely think a weakness of the statement is the vagueness; it had to be vague to be so ecumenical.

Nonetheless, I do think it is untrue that it's not worth saying sans specifics. As I say above, 'greater conversation as to the role of peer review' does not mean 'peer review and credentialism everywhere'. Rather, it means better understanding the epistemic role it plays, which can be pretty useful, and deliberately deciding whether and how we should and could deploy peer review in the field.

Secondly, the vagueness overall does mean it says less of substance than many people I have spoken to about this would like. The hope is that this lays a stake in the ground suggesting that the epistemic status of xrisk does mean pluralism OF SOME KIND AND TO SOME EXTENT is required to make this field better. I think the argument we make in that section, and the sketches of pluralism we give, ought to be informative enough to at least start a discussion and debate about this. I've felt it's been a bit unfortunate that people seem to have focused solely on the diversity side (which is really important, and which some signatories definitely think is most important) rather than on the pluralism of approaches, methodologies, core assumptions etc. I think even this piece sets out a decently substantive vision that we ought to use new and more approaches, and construct a community that is better suited to this.

quinn @ 2023-08-16T23:25 (+3)

I agree-voted this.

John G. Halstead @ 2023-08-16T20:23 (+4)

I do think there is often a tension in what you write on this front. On the one hand, you seem to support radical democratic control of (every?) decision made by anyone anywhere. And on the other hand, you think we should all defer to experts.

Gideon Futerman @ 2023-08-16T20:35 (+1)

On the one hand, I agree there is a tension, and one that I definitely haven't figured out (although I do think this is somewhat different to my views on EA structure and organising, particularly because I am not here actually talking about EA).

I am deeply concerned by the problem that I'm really unsure it makes sense to use the term 'expert' with reference to anyone in xrisk, given the events haven't occurred, so it's really unclear what would confer the experience to make one an expert. I think my greater reticence to see expertise as something that can be had in xrisk separates me somewhat from others similar to me, like Luke Kemp, and is definitely somewhere I differ substantially from people like David Thorstad. On the other hand, I definitely think peer review can play a really important role, including in promoting the establishment of a strong methodological basis and in the creation of trusted 'techniques of futuring' which help us to construct and perform shared futures (although the relative success of AI Safety in doing this without a strong reliance on peer review definitely weakens this argument). I also think peer review guards against a strong 'Matthew Effect', explained well here (https://www.liamkofibright.com/uploads/4/8/9/8/48985425/is_peer_review_a_good_idea_.pdf), which I actually think is relatively prevalent in xrisk. However, this is about supporting greater conversation about what the role is, rather than saying such a role needs to be prominent; I think we ought to be much more deliberate about whether and how we want peer review to be present in our research culture as a community, rather than simply relying on the current status quo either in ERS or in mainstream academia.

John G. Halstead @ 2023-08-16T20:58 (+30)

I do find the emphasis on peer review and expertise hard to square with the radical democratic view, and I don't think that is a needle that can be threaded. If the majority were climate sceptics and were in favour of repealing all climate policy, it seems like you would have to be in favour of that given your radical democratic views but opposed to it because it is violently at odds with peer reviewed science.

My understanding (appreciating that I may be somewhat biased on this) is that the demand for greater expertise comes from what you and others perceive to be the lack of deference to peer reviewed science by EAs working on climate change (which I think isn't true fwiw, because the 'standard EA view' is in line with the expert consensus on climate change) and from the fact that there is not much peer reviewed work in AI and, to a lesser extent, bio (I'm sympathetic on AI).

That aside, yeah, I have somewhat conflicted and not fully worked out thoughts on peer review in EA.

- As a statement of how I view things, I would generally be more inclined to trust a report with paid expert reviewers by Open Phil, or a blogpost or report by someone like Scott Alexander, Carl Shulman or Toby Ord, than the median peer reviewed study on a topic area. I think who writes something matters a lot and explains a lot of the variation in quality, independent of the forum in which something is published.

- I generally think peer review is a bit of a mess compared to what we might hope for the epistemic foundation of modern society. Published definitely doesn't mean true. Most published research is false. Reviewers don't usually check the maths going into a paper. Political bias and seniority influences publishing decisions. The bias is worse in the most prestigious journals. Some fields are far worse than others and some should be completely ignored (eg continental philosophy, nutritional epidemiology). 'Experts' who know a lot of factual information on a topic area can systematically err because they have bad epistemics (witness the controversy about the causes of the Holocene megafauna extinction)

- That being said, I think the median peer reviewed study is usually better than the typical EA Forum or LessWrong blogpost. Given how thin the literature on AI is, the marginal value of yet another blogpost that isn't related to an established published literature seems low. In AI, the marginal value of more peer reviewed work seems high. But I also think the marginal value of more Open Phil reports with paid expert reviewers and published reviewer reports would probably be higher than that of peer review, given how flawed peer review is and how much better the incentives are for the Open Phil-type approach.

Gideon Futerman @ 2023-08-16T21:33 (+2)

Yes, in response to the first point, I basically think you've misinterpreted my position on this, John, so thanks for laying this out so clearly.

I have never (as far as I know, and if I have, I must apologise because I was wrong) accused you or other EAs of a lack of deference to peer reviewed science in the climate space. I essentially agree that you are more in line with the expert consensus on climate and xrisk than I am, and I would (and have) defended you against accusations that you don't know and utilise this literature very well (although I think you do fail to engage with the literature on climate and xrisk, but we've already had this discussion and it's very tangential to my main point). I just don't think the present peer reviewed literature does a very good job at all of addressing climate and xrisk questions (although again, I think this is tangential).

I fully agree with your second bullet point. But note, again, the statement says "more care put into publication practices for those engaged in research, including greater consideration and conversation of the appropriate role of different fora in the epistemic process, including peer reviewed publications". I interpreted this as suggesting not 'peer review is perfect and amazing' but rather that, if we are to make this community as epistemically healthy as possible, we need to consider what the appropriate role for peer reviewed publications is. For me anyway, one motivating factor behind this is the question of whether or not we ought to have an xrisk journal, or whether we ought to set up an xrisk-specific forum (like the Alignment Forum). Each of these can play different epistemic roles, and I think there is often a polarised response of 'peer review is perfect' or 'peer review is the worst'. I do think there is a strong argument against peer review in xrisk work, although I also think there are compelling arguments in favour of peer review in certain forms. Indeed, some signatories have made public defences of peer review (eg David Thorstad) and others have been critical (eg Erica Thompson's Escape from Model Land contains critiques of peer review), but I think we broadly felt that it was a discussion much more worth having in the xrisk space.

Oscar Delaney @ 2023-08-16T23:03 (+9)

Thanks Gideon and others for creating this, I'm glad it exists. I have only skimmed the other comments, so apologies if the following has already been discussed elsewhere. In my mind it is useful to separate two quite different sentiments; I'd be interested to hear whether others agree with, or find useful, this distinction:

1. We would prefer a world where demographic markers (perhaps most notably nationality) are not predictive of ability to participate in intellectual communities and knowledge-making.

2. Given the current very unequal and unjust world, we would prefer to fund/hire/support/etc people who do worse in our evaluation metrics (interviews, work trials, research proposals, etc) but are from more underrepresented backgrounds.

Both are in favour of greater pluralism and diversity. I think 1 is trivially true - of course a more equal and just world would be better (though it would be a further claim that this is something X-risk orgs should focus on). 2 I am more torn about, I think I more disagree than agree though. I think if two people, one from a very intellectually privileged background, and one less so, do equally well on our evaluation metrics, the latter person is likely to be more intelligent/creative/novel as they had a harder road to there and needed to do more for themselves. I also think though that it would be pretty bad (and often clearly illegal) for decision-makers to use demographic markers over and above evaluation metrics.

I think the "In Defense of Merit in Science" paper is relevant here, though I haven't read more than the abstract and the public discussions of it. I am glad the statement talks about tradeoffs. I think this is another important tradeoff to add: the more dimensions you are optimising over, the less well you can optimise each of them. This seems uncontroversially true. The implication of this is that if we add more demographic dimensions to optimise over this will have a cost on whatever other dimensions we may already be using (novelty of ideas, clarity of writing, mathematical ability, etc). I think for me this is the most important tradeoff.

Gideon Futerman @ 2023-08-16T23:39 (+3)

I'm not quite sure how to address most of your comment, as I think in many ways we are critiquing much of the underlying logic of how we evaluate what ought to be funded. It's essentially suggesting, not really from a place of justice at all, that the current structures fail to optimise for or assess the things that are useful for the community. And I think it may also be implicitly suggesting that centralising this power, as is currently done, is counter to these aims, and so power in the community ought to be better distributed. One response would be to change these criteria to better optimise for things that we may think are more valuable, eg novelty of ideas/approach. Another would be to randomise funding, ie every proposal that crosses a certain bar gets entered into a lottery. Ultimately, I'm (though not necessarily the other signatories) pretty comfortable making the argument for this on purely utilitarian grounds, although some people may feel a pull towards talking in terms of justice: a community that has metrics and evaluations that better encourage scientific creativity and a pluralism of ideas and approaches will be better off, and evaluation criteria that optimise for these sorts of pluralism are considerably better than the status quo.

I also worry that your focus is essentially on individual epistemics over a small range of positions, rather than on the overall questions of funding structures and where funding goes. For example, Open Phil could have decided to fund fellowships in developing countries, where I can't imagine the applicants are any less good, rather than ERA in Cambridge, but didn't. I think expanding geographic diversity may be a relatively easy win, although it definitely isn't the only criterion we could follow. (Also, as a side point, I would suggest there are good cost-effectiveness reasons to do this as well; for example, the DEGREES initiative (which Open Phil funds) now has the largest geoengineering research team in the world, all based in developing countries, with significant impact on informing policy, for a cost that I believe was less than the budget of ERA. Here, increasing geographic diversity must have played into their decision, again purely from a utilitarian and not a justice perspective.)

In response to the trade-off point, to some extent this is true, although I'm unconvinced it is that different to the trade-offs we discuss. In response to your examples, I would say that the proposals we make definitely weight 'novelty of ideas' much more heavily than the status quo does, and indeed we are making a clear trade-off between more 'novel ideas' and more depth along the current 'orthodox' research paths.

Oscar Delaney @ 2023-08-17T00:10 (+11)

I think I am a lot more on board with promoting idea pluralism (I realise I should have said this in my original comment, I was focusing there on what I found more controversial or difficult to think about well). I think science generally would go faster if funders took more risks on heterodox ideas (particularly given most research projects have far larger upside risks than downside risks, so 'hits-based' funding could work well). That's a good point re things being cheaper to run in poorer countries, so more cost-effective all else equal.

I can't imagine the applicants are any less good

At one level, yes intelligence and creativity are ~evenly distributed worldwide. But I think this gets to my earlier point about educational and other opportunities currently being very unequally distributed, so I think it would be the case (unfortunately) that applicants with access to loads of opportunities to develop their thinking and writing and research skills, disproportionately in the rich world, will be better able to contribute straight away. I think there could also be a strong case to run such fellowships elsewhere with fellows who have had fewer opportunities and are currently less capable, as this is more additional, but this seems like a notably different theory of change.

Gideon Futerman @ 2023-08-17T00:26 (+3)

So I think you are pointing to something real here, although even then I don't think it constitutes a great defence of the status quo of ERS. If we want people with pre-existing experience in the disciplines we need, we are unlikely to get the sort of demographic diversity we may want, as most disciplines are far from equal, and in an interdisciplinary space like this, where job security and mentorship are lacking, being more senior may be very beneficial. However, ERS at present also rarely includes or reaches out to people with deep expertise in these fields, so in some ways it feels like we get the worst of both worlds: we bring in relatively young and inexperienced people and yet churn out people who broadly think very similarly and have the ability to influence very similar spaces. Sure, I'm not saying juggling all this is easy, nor that it will be perfect, but this status quo seems really suboptimal.

quinn @ 2023-08-16T23:21 (+2)

Link broken, but I googled and perhaps you meant this https://iopenshell.usc.edu/pubs/pdf/JCI_Merit_final.pdf

Oscar Delaney @ 2023-08-17T00:12 (+1)

whoops, fixed. Thanks.

Alimi @ 2023-10-03T08:15 (+7)

I agree with @Gideon Futerman and others in principle, but I have yet to figure out how this can translate into concrete actions for desirable outcomes. I think the statement ought to be more global, not just focusing on existential risk studies but covering other cause areas as well. Also, pluralism might mean shifting attention to the Global South, as most of us want to contribute significantly to the conversation but are restricted by a lack of support. Also, I think a lack of pluralism results in redundant interventions and poorer use of money. Instead of spending $2200 on a coffee table or spending $50k on high schoolers, you could have funded one of my bar exams, or you could have funded me for 8 months to work on longtermist community-building in Nigeria. You might want to chip in some dollars here https://www.gofundme.com/f/help-alimi-salifou-sit-bar-part-i-ii . I aspire to be the ombudsman of future generations in Africa. So help me :)

Ben Stevenson @ 2023-08-17T10:38 (+4)

Upvoted. Pleased to see this, Gideon et al!

David_Althaus @ 2023-08-17T09:41 (+3)

Since you emphasize diversity, I wanted to ask whether (or to what extent) this paragraph is meant to include or exclude s-risks:

Existential risk, as a concept, is defined in a multitude of ways in our community. Some in this group think it to be ‘risks of human extinction, societal collapse and other events associated with these’[1], others conceptions involve ‘risk of permanent loss of humanity’s potential’[2] or the risk of the loss of large amounts of expected value of the future[3]. Some see it as an inseparable part of the broader class of risks to the existence of individuals, communities or specific 'worlds'[4].

Gideon Futerman @ 2023-08-17T09:45 (+6)

Some of those definitions definitely do, and some probably do, so yes! However, I think there is a valid question of whether studying s-risks gains much from being part of the ERS community, or whether it would be more beneficial for it to be its own thing. I'm genuinely unsure how much either side gains from its involvement (maybe a lot!). In general, the two areas commonly associated with xrisk where I'm unsure how useful it is for them to be fully within ERS (although being in conversation with it definitely helps) are pure technical alignment (when divorced from AI strategy) and maybe s-risks, but I'm pretty unsure of this take, and many signatories would probably disagree.

David_Althaus @ 2023-08-17T09:49 (+5)

Good to know, thanks!