Relationship between EA Community and AI safety

By Tom Barnes🔸 @ 2023-09-18T13:49 (+157)

Personal opinion only. Inspired by filling out the Meta coordination forum survey.

Epistemic status: Very uncertain, rough speculation. I’d be keen to see more public discussion on this question.

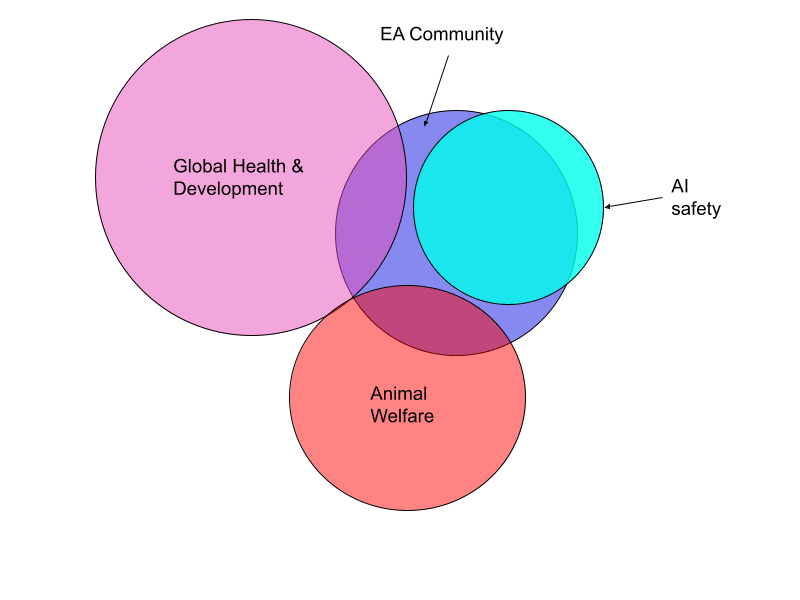

One open question about the EA community is its relationship to AI safety (see e.g. MacAskill). I think the relationship between EA and AI safety (+ GHD & animal welfare) previously looked something like this (up until 2022ish):[1]

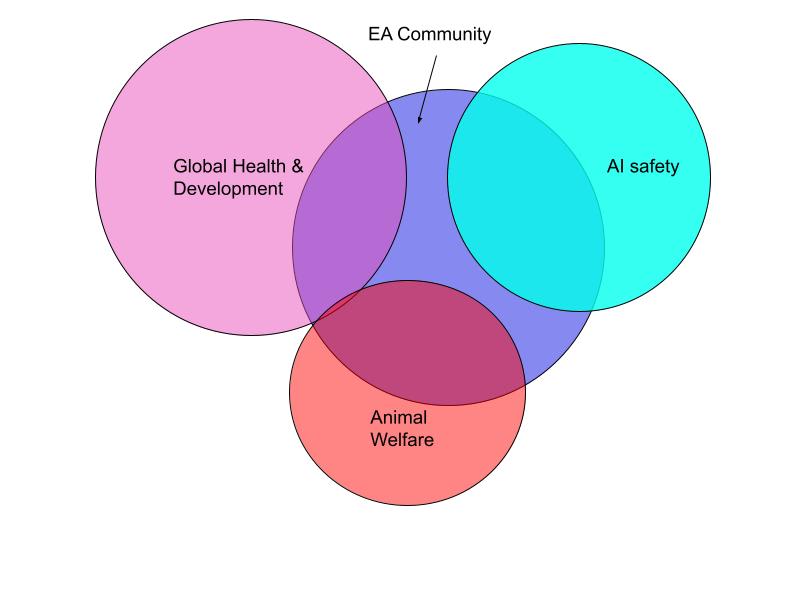

With the growth of AI safety, I think the field now looks something like this:

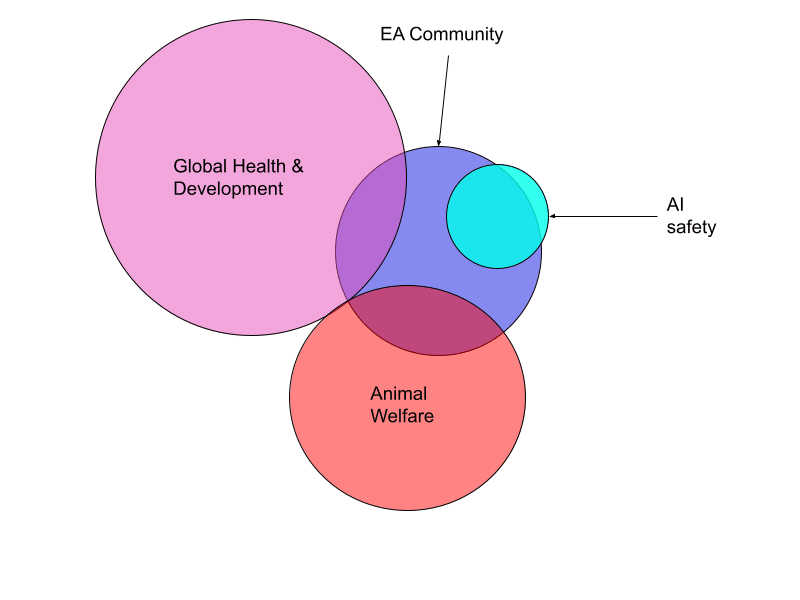

It's an open question whether the EA Community should further grow the AI safety field, or whether the EA Community should become a distinct field from AI safety. I think my preferred approach is something like: EA and AI safety grow into new fields rather than into each other:

- AI safety grows in AI/ML communities

- EA grows in other specific causes, as well as an “EA-qua-EA” movement.

As an ideal state, I could imagine the EA community having a relationship to AI safety similar to the one it currently has with animal welfare or global health and development.

However, I’m very uncertain about this, and curious to hear what other people’s takes are.

- ^

I’ve omitted non-AI longtermism, along with other fields, for simplicity. I strongly encourage not interpreting these diagrams too literally.

MichaelPlant @ 2023-09-19T09:48 (+41)

Thanks for this and great diagrams! To think about what the relationship between EA and AI safety should be, it might help to think about what EA is for in general. I see a/the purpose of EA as helping people figure out how they can do the most good - to learn about the different paths, the options, and the landscape. In that sense, EA is a bit like a university, or a market, or maybe even just a signpost: once you've learnt what you needed, or found what you want and where to go, you don't necessarily stick around: maybe you need to 'go out' in the world to do what calls you.

This explains your Venn diagram: GHD and animal welfare are causes that exist prior to, and independent of, EA. They, rather than EA, are where the action is if you prioritise those things. AI safety grew up inside EA.

I imagine AI safety will naturally form its own ecosystem independent of EA: much like, if you care about global development, you don't need to participate in the EA community, a time will come when, for AI safety, you won't need to participate in EA either.

This doesn't mean that EA becomes irrelevant, much like a university doesn't stop mattering when students graduate - or a market ceases to be useful when some people find what they want. There will be further cohorts who want to learn - and some people have to stick around to think about and highlight their options.

lilly @ 2023-09-18T18:57 (+37)

I like how you're characterizing this!

I get that the diagram is just an illustration, and isn't meant to be to scale, but the EA portion of the GHD bubble should probably be much, much smaller than is portrayed here (maybe 1%, because the GHD bubble is so much bigger than the diagram suggests). This is a really crude estimate, but EA spent $400 million on GHD in 2021, whereas IHME says that nearly $70 billion was spent on "development assistance for health" in 2021, so EA funding constitutes a tiny portion of all GHD funding.

I think this matters because GHD EAs have lots and lots of other organizations/spaces/opportunities outside of EA that they can gravitate to if EA starts to feel like it's becoming dominated by AI safety. I worry about this because I've talked to GHD EAs at EAGs, and sometimes the vibe is a bit "we're not sure this place is really for us anymore" (especially among non-biosecurity people). So I think it's worth considering: if the EA community further grows the AI safety field, is this liable to push non-AI safety people—especially GHD people, who have a lot of other places to go—out of EA? And if so, how big of a problem is that?

I assume it would be possible to analyze some data on this, for instance: are GHD EAs attending fewer EAGs? Do EAs who express interest in GHD have worse experiences at EAGs, or are they less likely to return? Has this changed over time? But I'd also be interested in hearing from others, especially GHD people, on whether the fact that there are lots of non-EA opportunities around makes them more likely to move away from EA if EA becomes increasingly focused on AI safety.

NickLaing @ 2023-09-18T19:36 (+18)

That's my big concern as well. I just applied for my first EAG, only after asking the organisers if there would be enough content/people to make it worthwhile for someone who really has something to offer only to GHD people. Their response was still pretty encouraging:

"We'd roughly estimate about 10% of attendees at Boston will be focussed on Global Health and Development. Our content specialist will be aiming to curate about 15% of the content around Global Health and Development (which refers to talks, workshops, speed meetings, and meetups)."

That would mean maybe 100-150 GHD people at the conference, which is all good from my perspective, but if it was half that I would be getting a bit shaky on whether it would be worth it or not.

DavidNash @ 2023-09-28T10:06 (+4)

Also, the $70 billion on development assistance for health doesn't include other funding that contributes to development:

- $100b+ on non health development

- $500b+ remittances

- Harder to estimate but over a trillion spent by LMICs on their own development and welfare

Gemma Paterson @ 2023-09-18T16:36 (+21)

Really appreciate those diagrams - thanks for making them! I agree and think there are serious risks from EA being taken over as a field by AI safety.

The core ideas behind EA are too young and too unknown by most of the world for them to be strangled by AI safety - even if it is the most pressing problem.

Pulling out a quote from MacAskill's comment (since a lot of people won't click):

I’ve also experienced what feels like social pressure to have particular beliefs (e.g. around non-causal decision theory, high AI x-risk estimates, other general pictures of the world), and it’s something I also don’t like about the movement. My biggest worries with my own beliefs stem around the worry that I’d have very different views if I’d found myself in a different social environment. It’s just simply very hard to successfully have a group of people who are trying to both figure out what’s correct and trying to change the world: from the perspective of someone who thinks the end of the world is imminent, someone who doesn’t agree is at best useless and at worst harmful (because they are promoting misinformation).

In local groups in particular, I can see how this issue can get aggravated: people want their local group to be successful, and it’s much easier to track success with a metric like “number of new AI safety researchers” than “number of people who have thought really deeply about the most pressing issues and have come to their own well-considered conclusions”.

One thing I’ll say is that core researchers are often (but not always) much more uncertain and pluralist than it seems from “the vibe”.

...

What should be done? I have a few thoughts, but my most major best guess is that, now that AI safety is big enough and getting so much attention, it should have its own movement, separate from EA. Currently, AI has an odd relationship to EA. Global health and development and farm animal welfare, and to some extent pandemic preparedness, had movements working on them independently of EA. In contrast, AI safety work currently overlaps much more heavily with the EA/rationalist community, because it’s more homegrown.

If AI had its own movement infrastructure, that would give EA more space to be its own thing. It could more easily be about the question “how can we do the most good?” and a portfolio of possible answers to that question, rather than one increasingly common answer — “AI”.

At the moment, I’m pretty worried that, on the current trajectory, AI safety will end up eating EA. Though I’m very worried about what the next 5-10 years will look like in AI, and though I think we should put significantly more resources into AI safety even than we have done, I still think that AI safety eating EA would be a major loss. EA qua EA, which can live and breathe on its own terms, still has huge amounts of value: if AI progress slows; if it gets so much attention that it’s no longer neglected; if it turns out the case for AI safety was wrong in important ways; and because there are other ways of adding value to the world, too. I think most people in EA, even people like Holden who are currently obsessed with near-term AI risk, would agree.

NickLaing @ 2023-09-18T18:10 (+5)

Wow, Will really articulates that well. Thanks for the quote; you're right, I wouldn't have seen it myself!

I also fear that EA people with an AI focus may focus so hard on "EA aligned" AI safety work (technical AI alignment and "inner game" policy work) that they might limit the growth of movements that could grow the AI safety community outside the AI circle (e.g. AI ethics, or AI pause activism).

This is of course highly speculative, but I think that's what we're doing right now ;).

Davidmanheim @ 2023-09-18T16:14 (+20)

I largely agree; AI safety isn't something that remains neglected, nor is it obvious that it's particularly tractable. There is a lot of room for people to be working on other things, and there's also a lot of room for people who don't embrace cause neutrality or even effectiveness to work on AI safety.

In fact, in my experience many "EA" AI safety people aren't buying in to cause neutrality or moral circle expansion, they are simply focused on a near-term existential threat. And I'm happy they are doing so - just like I'm happy that global health works on pandemic response. That doesn't make it an EA priority. (Of course, it doesn't make it not an EA priority either!)

Edit to add: I'm really interested in hearing what specifically the people who are voting disagree actually disagree with. (Especially since there are several claims here, some of which are normative and some of which are positive.)

NickLaing @ 2023-09-18T19:12 (+6)

Thanks David, I agree it's not obvious that it's tractable. Can you explain the argument for it not being neglected a bit, or point me to a link about that? I thought the number of people working on it was only in the high hundreds to low thousands?

Davidmanheim @ 2023-09-18T19:16 (+17)

I think that 6 months ago, "high hundreds" was a reasonable estimate. But there are now major governments that have explicitly said they are interested, and tons of people from various different disciplines are contributing. (The UK's taskforce and related AI groups alone must have hired a hundred people. Now add in think tanks around the world, etc.) It's still in early stages, but it's incredibly hard to argue it's going to remain neglected unless EA orgs tell people to work on it.

NickLaing @ 2023-09-18T19:31 (+6)

Thanks, interesting answer, appreciate it.

Vaipan @ 2023-09-22T07:44 (+15)

Would be nice to know what you are basing these diagrams on, other than intuition. If you are very present on the forum and mainly focused on AI, of course that is going to be your intuition. Here are the dangers I see in this intuition:

It's a self-reinforcing thing: people deep into AI or newly converted are much more likely to think that EA revolves essentially around AI, and people outside of AI might think 'Oh that's what the community is about now' and not feel like they belong here. Someone who just lurks out there and sees that the forum is now almost exclusively filled with posts on AI will now think that EA is definitely about longtermism.

Funding is also a huge signal. With OpenPhil funding essentially AI and other longtermist projects, for someone who is struggling to find a job (yes, we have a few talents who are being asked out everywhere, but that's not the case for the majority, even for highly-educated EAs), it is easy to think in an opportunistic way and switch to AI out of necessity instead of conviction; see the MacAskill quote very relevantly cited by someone in the comments.

And finally, the message given by people at the top. If CEA focuses a lot on AI career switches and thinks of other career switches as neutral, of course community builders will focus on AI people. Which means, factually, more men with a STEM background, since the ratio of men to women in STEM is still very much not in favor of women. (We have excellent women working at visible and prestigious jobs in AI, that's true, but unless we consciously try to find a concrete way of getting women to enter the field it is going to be difficult to maintain this, and this is not a priority so far.) The community might thus become even more masculine, and even more STEM (with the exception of philosophers and policy-makers, but the funds for such jobs are still scarce). I know this isn't a problem for some here, as many of the posts about diversity and their comments attest, but for those who do see the problem with narrowing down even further, the point is made. And it's just dangerous to focus on helping people switch to AI if in the end the number of jobs doesn't grow as expected.

So all the ingredients are there for EA to turn into a practically exclusively AI community, but as D. Nash said, differentiating between the two might actually be more fruitful.

Also, I'm not sure that I want to look back in five years and realize that what made the strength of EA, namely a highly diverse community in terms of interests and centers of impact, and a measurable impact in the world, has just disappeared. (I might be very wrong here, but so far measuring impact for all these new AI orgs is difficult, as we clearly lack data and it's a new field of understanding.) It's OK to be seen as nerds and elitist (because let's face it, that is how EA is seen in the mainstream) as long as we have concrete impact to show for it, but if we become an exclusively technical community that is all about ML and AI governance, it is going to be even more difficult to get traction outside of EA (and we might want to care about that, as explained in a recent post on AI advocacy).

I know I'm going against the grain here, but I like to think that this whole 'EA open to criticism' thing is not a thing of the past. And I truly think that these points need to be addressed, instead of being drowned under the new enthusiasm for AI. And if it needs to be said: I do realize how important AI is, and how impactful working on it is. I just think that it is not enough to go all-AI, and that many here tend to forget other dynamics and factors at play because of the AI takeover in the community.

DavidNash @ 2023-09-18T17:02 (+13)

I've written a bit about this here and think that they would both be better off if they were more distinct.

As AI safety has grown over the last few years there may have been missed growth opportunities from not having a larger separated identity.

I spoke to someone at EAG London 2023 who didn't realise that AI safety would get discussed at EAG until someone suggested they should go after doing an AI safety fellowship. There are probably many examples of people with an interest in emerging tech risks who would have got more involved at an earlier time if they'd been presented with those options at the beginning.

Geoffrey Miller @ 2023-09-22T17:37 (+4)

Tom - you raise some fascinating issues, and your Venn diagrams, however impressionistic they might be, are useful visualizations.

I do hope that AI safety remains an important part of EA -- not least because I think there is some important, under-explored overlap between AI safety and the other key cause areas, global health & development, and animal welfare.

For example, I'm working on an essay about animal welfare implications of AGI. Ideally, advanced AI wouldn't just be 'aligned' with human interests, but with the interests of the other 70,000 species of sentient vertebrates (and the sentient invertebrates). But very little has been written about this so far. So, AI safety has a serious anthropocentrism bias that needs challenging. The EAs who have worked on animal welfare could have a lot to say about AI safety issues in relation to other species.

Likewise, the 'e/acc' cult (which dismisses AI safety concerns, and advocates AGI development ASAP) often argues that there's a moral imperative to develop AGI, in order to promote global health and development (e.g. 'solving longevity' and 'promoting economic growth'). EA people who have worked on global health and development could contribute a lot to the debate over whether AGI is strictly necessary to promote longevity and prosperity.

So, the Venn diagrams need to overlap even more!

Christopher Chan @ 2023-09-18T20:48 (+1)

Where is catastrophic resilience from volcanic and nuclear risks, biosecurity and pandemic preparedness?

Christopher Chan @ 2023-09-18T20:52 (+1)

I saw the footnote