Development RCTs Are Good Actually

By ozymandias @ 2024-03-28T13:37 (+84)

This post was cross-posted from the substack Thing of Things with the permission of the author.

In defense of trying things out

The Economist recently published an article, “How poor Kenyans became economists’ guinea pigs,” which critiques development economists’ use of randomized controlled trials. I think it exemplifies the profoundly weird way people think about experiments.

The article says:

In 2018, an RCT run by two development economists, in partnership with the World Bank and the water authority in Nairobi, Kenya’s capital, tracked what happened when water supply was cut off to households in several slum settlements where bills hadn’t been paid. Researchers wanted to test whether landlords, who are responsible for settling the accounts, would become more likely to pay as a result, and whether residents would protest.

Hundreds of residents in slum settlements in Nairobi were left without access to clean water, in some cases for weeks or months; virtually none of them knew that they were part of an RCT. The study caused outrage among local activists and international researchers. The criticisms were twofold: first, that the researchers did not obtain explicit consent from participants for their involvement (they said that the landlord’s contracts with the water company allowed for the cut-offs); and secondly, that interventions are supposed to be beneficial. The economists involved published an ethical statement defending the trial. Their research did not make the cut-offs more likely, they explained, because they were a standard part of the water authority’s enforcement arsenal (though they acknowledged that disconnections in slums had previously been “ad hoc”). The statement did little to placate the critics.

You know what didn’t get an article in The Economist? All the times that slum dwellers in Nairobi were left without access to clean water for weeks or months without anyone studying them.

By the revealed preferences of local activists, international researchers, and The Economist, the problem isn’t that people are going without clean water, or that the water authority is shutting off people’s water—those things have been going on for decades without more than muted complaining. The ethical problem is that someone is checking whether this unthinkably vast amount of human suffering is actually accomplishing anything.

The water authority is presumably not shutting off people’s water recreationally: it’s shutting off people’s water because it thinks that will get people to pay their water bills. Therefore, the possible effects of this study are:

- The water authority continues to do the same thing it was doing all along.
- The water authority learns that shutting off water doesn’t get people to pay their bills, so it stops shutting off people’s water, and they have enough to drink.

If you step back from your instinctive ick reaction, you’ll notice that this study may well improve water access for slum dwellers in Nairobi, and certainly isn’t going to make it any worse. But people are still outraged because, I don’t know, they have a strongly felt moral opposition to random number generators.

I really don’t understand the revulsion people feel about experimenting on humans. It’s true that many scientists have done great evil in the name of science: the Tuskegee syphilis experiment, MKUltra, Nazi human experimentation, the Imperial Japanese Unit 731.[1] But the problem isn’t the experiments. It’s not somehow okay to deny people treatment for deadly diseases, force them to take drugs, or torture them if you happen to not write anything down about it.

If it’s fine to do something, then it’s fine to randomly assign people to two groups, only do it to half of them, and then compare the results. We should, of course, be mindful of how the greater good and the thirst for knowledge can tempt scientists to commit atrocities. But that doesn’t mean that we should go “ah! I see that you’re bothering to check whether the thing you’re doing works! You have opted in to Special Ethics and now you can’t cross the street unless you have signed consents from the drivers and have shown that your street-crossing benefits them.”
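The procedure in the paragraph above (split people at random, do the thing to one half, compare outcomes) is short enough to sketch. This is a minimal Python illustration with simulated, made-up outcomes, not data from any study. It assumes the intervention adds 2 units to an outcome that otherwise varies randomly, so the difference in group means should land close to 2. The function names are hypothetical.

```python
import random
from statistics import mean


def assign(participants, seed):
    """Randomly split participants into two equal-sized groups: (treatment, control)."""
    rng = random.Random(seed)  # seeded, so the split can be reproduced and audited
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def difference_in_means(outcomes, treatment, control):
    """Estimated effect: mean outcome of the treated minus mean outcome of the untreated."""
    return mean(outcomes[p] for p in treatment) - mean(outcomes[p] for p in control)


# Hypothetical simulation: 1,000 households. The outcome varies randomly around 10,
# and the intervention is assumed to add 2 units for treated households.
households = list(range(1000))
treatment, control = assign(households, seed=42)
treated = set(treatment)
noise = random.Random(7)
outcomes = {h: 10 + noise.gauss(0, 3) + (2 if h in treated else 0) for h in households}

estimate = difference_in_means(outcomes, treatment, control)
print(f"estimated effect: {estimate:.2f}")  # should be near the true effect of 2
```

Because assignment is random, the two groups differ, apart from the intervention, only by chance, which is why a plain difference in means is a fair estimate of the intervention’s effect.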

One of the people quoted in the article goes beyond questioning experimental ethics to questioning the concept of follow-up studies:

The children of the original recipients are now themselves research subjects: Miguel and several other colleagues are currently collecting data which will assess whether the health and developmental consequences of rcts might be passed down the generations. This lengthy duration is a virtue, Miguel believes: “A lot of the value in the research is in the longitudinal data set.”

Yet other researchers have questioned the ethics of continuous data collection in general. “When does the cycle of collecting data stop?” asked Joel Mumo from the Busara Centre. “What’s enough? How do you justify experimenting on people over and over again?”

Well, you see, I justify experimenting on people over and over again, because sometimes our actions have consequences long in the future, and it’s good to know what those consequences are. It stops when the experimenters no longer think they’re collecting useful information, when they can’t find people to fund the studies, or when they’ve lost enough of the participants to attrition that they can no longer meaningfully generalize about the whole sample.

Part of the reason The Economist is comfortable holding experimenters to absurdly high standards of behavior is that it’s skeptical that we need experiments at all, rather than just going on vibes:

The randomistas have a tendency to operate as if they have little prior knowledge about how the world works. But for Duflo, experiments are the only way to challenge assumptions. “Often one’s intuitions are incorrect,” she said. But do you really need an experiment to tell you whether pillows and blankets will help children fall asleep, or whether access to clean water will improve health?

It’s as if “there was no source of knowledge ever, except for RCTs,” said Lant Pritchett, a development economist. He pointed to a paper that used an RCT to conclude, in part, that girls in Afghanistan were more likely to go to community school when there was one in their village. (“I am not shitting you,” he said.)

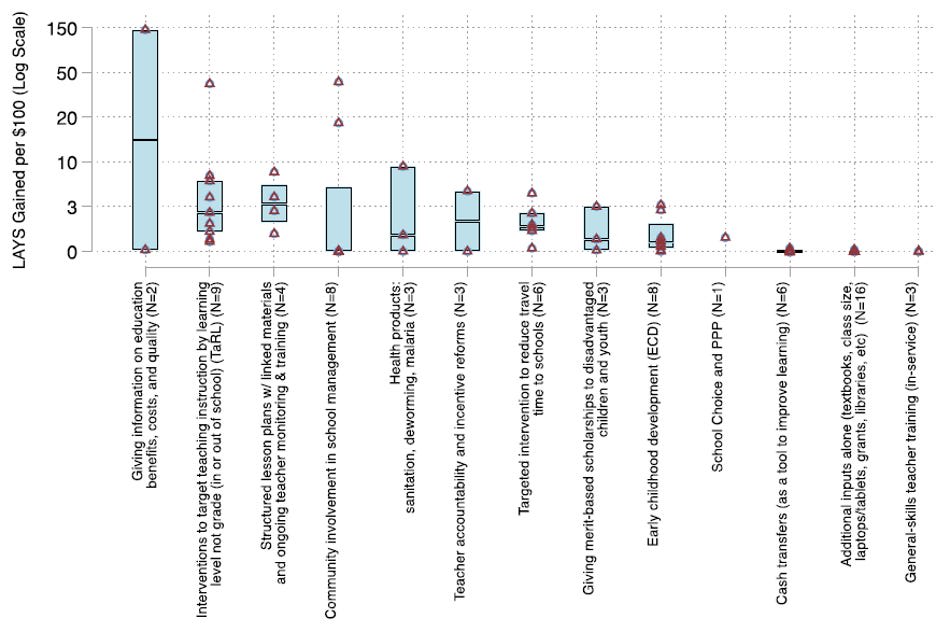

Look at this chart of the cost-effectiveness of various developing-world educational interventions:

Better incentives for teachers and structured lesson plans both seem, intuitively, like they’d improve education—and they do. It turns out that structured lesson plans are about 150% more effective than better incentives. Would you have been able to guess that ahead of time?

The purpose of an RCT about clean water or school access isn’t necessarily to establish whether there’s an effect at all: it’s to establish how big the effect is. If you run the health department or the department of education for a developing country—one that doesn’t have a lot of resources—it’s important to know not only what works but also what will let you squeeze the most health or education out of your limited budget. Afghanistan doesn’t exactly have a lot of money.

But even that’s giving The Economist too much credit. Intuitively, it seems like teacher training and new textbooks would improve children’s education. I can imagine Lant Pritchett going “I am not shitting you” about a study of whether textbooks improve learning outcomes. But it turns out the effects are indistinguishable from zero.

Similarly, it could be true that girls in certain countries aren’t meaningfully more likely to go to school if there’s one in their village—for example, if girls being educated is extremely stigmatized, or if families need girls to work to support the family. Even if girls are much more likely to go to school if there’s a school in their village, that doesn’t necessarily mean they learn anything. And even if they learn more, that doesn’t necessarily mean that they earn more money, have more ability to control their lives, or otherwise benefit. We need to check.

As my dad used to say, when you assume, you make an ass out of you and me.

In fact, some of the purported harm of RCTs is actually a reason we need to do RCTs:

Cash-transfer studies can be especially divisive. “Some of the men married a second wife, some homes broke apart, some wives left their husbands,” said Andrew Wabwire, a field manager for REMIT, a research company. As with Okela-C, rumours have spread that the money is cursed – that people would turn into snakes if they accepted it.

You know, I would not have predicted “recipients believe money will turn you into snakes” as a potential outcome of cash transfers? Isn’t it great that someone is following up with recipients to ask about these things?

And we don’t have to rely on anecdotes from Andrew Wabwire, because we’ve done randomized controlled trials. In spite of the divorces and polygamous marriages, cash transfers have a small but significant positive effect on wellbeing and mental health among recipients. Cash transfers may have a negative effect on wellbeing among people in the same village who didn’t get a cash transfer, although further analysis suggests that the effect might be a statistical artifact.[2] But this study is part of the reason that GiveDirectly switched to giving money to everyone in a village, instead of just the poorest.

To their critics, the interventions studied by RCTs are so small, and the timelines required so long, that the actual effects on poverty can seem marginal at best. Even Kremer’s and Miguel’s deworming project, which is considered one of the most successful RCTs to date, may have done little to augment overall student success. “Deworming is trivial as compared with practical educational improvement,” said Pritchett. (Miguel said this view is a caricature of RCTs that minimises their insight when aggregated.)

As far as I can tell, the deworming project being “one of the most successful RCTs to date” is just wrong. There is widespread disagreement about what we can conclude about deworming from the available evidence, with many respected academics saying that deworming has no effect on education at all. Many RCTs of other interventions have found much larger effects.

Improving education—in the developing or the developed world—is really really hard. Lots of programs that seem like they ought to work don’t. (For example.) There are a number of promising programs, such as Teaching At The Right Level, in which students are taught based on what they already know instead of having a bunch of illiterate children stare blankly at the blackboard while the teacher tries to get them to read the Ramayana. These programs have generally been identified through randomized controlled trials.

Some policymakers don’t like randomized controlled trials:

Arvind Subramanian, a former chief economic adviser to the Indian government, told me that during his time in office, rcts did not figure into his calculations. “They were never meant to analyse big macroeconomic questions – they’re about small, microeconomic interventions,” he said. “The ability of RCTs to answer any of these big questions is close to zero.” The rise of RCTs, in his view, is due to the influence of a “very incestuous club of prominent academics, philanthropy and mostly weak governments. That’s why you will see RCTs used disproportionately in sub-Saharan African contexts, where…state capacity is weak.”

Gulzar Natarajan, a leading Indian development economist who is currently secretary of finance for the state of Andhra Pradesh, has argued that RCTs merely provide “standalone technical fixes” that do not work in practice. When it comes to implementation across entire countries, the same features that initially seemed appealing about RCTs begin to look like failures. “The state’s capacity to administer and monitor, masked by the small size and presence of energetic research assistants in the field experiment, gets exposed. Logic gets torn apart when faced with practical challenges,” he argues. (Duflo points out that jpal has a long-term partnership with Tamil Nadu, an Indian state, to make policy out of findings from RCTs.)

Maybe Subramanian and Natarajan should talk to each other. Is it that RCTs are only useful when a government lacks state capacity, or is it that you can’t scale up RCTs because the government is too bad at administering and monitoring things?

It’s true that RCTs are sharply limited in what questions they can answer: you can’t randomly assign half of all countries to one central bank policy and half to another. But there are a number of questions that governments face—health, education, infrastructure—that RCTs do answer. I agree it’s weird that RCTs are so associated with economists and mostly do well at answering questions that aren’t traditionally economic; such are the consequences of economists being the only social scientists who are mathematically literate. That doesn’t mean that health, education, or infrastructure are somehow unimportant.

And it’s true that RCTs are often hard to turn into policy programs. If you scroll up to the chart I posted, you’ll see that “giving information on education costs, benefits, and quality” has widely variable cost-effectiveness. This is because it only works if parents are ignorant about the benefits of education. In places where parents already know that education is important, it does no good. As a policymaker, you have to understand not only what the RCT found but why it found that. And any program is more difficult to implement for everyone in a country than it is for a small number of people in a study. New challenges arise. Compromises have to be made for the sake of political expediency. You don’t have researchers checking that the teachers are in fact Teaching At The Right Level and haven’t gone back to teaching the grade-level curriculum.

But that doesn’t mean that empiricism is valueless. As a policymaker, you may not be able to straightforwardly implement Teaching At The Right Level as it was implemented in the RCT. But you know to ask “are our teachers teaching grade-level material even though our students are near-universally below grade level and have no hope of understanding it?” You have a toolkit of things that worked on the small scale, such as hiring tutors that specialize in teaching below-grade-level material. Regardless of whether an RCT has been conducted, you’d have to adjust things for your own context and capacities. But RCTs are your best chance of knowing whether it ever worked for anyone at all.

I’ve been quite critical of this article, but there is one thing in it I find troubling:

Officially, all researchers are required to secure informed consent from their subjects. But that doesn’t mean a subject understands what exactly they are signing up for, that they are presented with the option to decline or that the trial might go on for an indefinite period of time. “People are constantly trying to work out, ‘What is the value exchange that is going on here?’” said Kingori. “They understand that some extraction is taking place, but they can’t quite work out what the value is.”

Virtually every field worker I spoke to told me stories of locals demanding to know why they were placed in the control group, challenging the randomisation techniques or refusing to speak to the researchers who return to their villages year after year. Eric Ochieng, a research manager based at the Busia office of IPA, told me that he had been approached on the street by subjects asking why they were given nothing when others received electricity or cash. Others don’t understand why, despite opting to participate in several trials, they always seem to end up in the control group. “Sometimes they come and ask, ‘What is it with my name that the computer never selects me?’” Many remain doubtful that participants are truly selected at random. One group of recipients was so suspicious that they asked researchers to draw names out of a hat in public…

Magero told me that in the 16 years she has worked on RCTs, she had only ever encountered one man, a “learned man”, who actually understood how the studies work. “There is something good that comes out of this research, it’s just that some people can’t really understand,” she said. “They just want [to see the] direct benefit to them, they don’t want the communal benefit that is coming.”

For many reasons, it’s not realistic to hold off on RCTs in the developing world until the respondents can correctly explain why studies need a control group. (Only 60% of Americans have this concept down.) But I do think the respondents’ informed consent isn’t especially, well, informed.

Development economists should consider more carefully how to do right by their respondents. Instead of applying ethical standards developed for WEIRD people as if they make sense in every country, we should develop appropriate standards for different groups. How can we explain what the study is for in a way that actually makes sense to the respondents? Can we randomize in a way verifiable by respondents (I love the hat idea)? Can we do a phased rollout of a program instead of denying it to half the participants, and if we do how do we explain what a “phased rollout” is? Does it even make sense to try to explain randomization, or does it just make participants confused and upset? Maybe in a particular country “we’re trying to learn about your health” will actually give respondents a more accurate understanding of what’s going on than trying to explain what an RCT is. Can we pay people an amount of money or goods that makes responding to surveys feel fair to them, even if they don’t get to take advantage of the program?
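The hat works because everyone can watch the draw. One hypothetical way to keep that property while also doing a phased rollout (everyone gets the program; the lottery only decides who gets it first) is to derive the random seed from something done in public, such as dice rolled at a village meeting, and to post both the rolls and the list of names, so that anyone, or a local observer the village trusts, can rerun the assignment and check it. This is a minimal Python sketch assuming that procedure; the names, the rolls, and the `phased_rollout` function are all illustrative, not an established protocol.

```python
import random


def phased_rollout(names, public_dice_rolls, n_waves):
    """Assign every participant to a rollout wave using a seed anyone can check.

    Hypothetical procedure: the seed is built from dice rolled in public, so anyone
    holding the same list of names and the same rolls gets the same waves.
    """
    seed = "".join(str(r) for r in public_dice_rolls)
    rng = random.Random(seed)  # string seeds are deterministic across runs
    order = sorted(names)  # canonical order, so typing order of the list doesn't matter
    rng.shuffle(order)
    waves = {w: [] for w in range(1, n_waves + 1)}
    for i, name in enumerate(order):
        waves[i % n_waves + 1].append(name)  # deal names out like cards: waves stay balanced
    return waves


# Hypothetical participants and dice rolls called out at a meeting.
villagers = ["Achieng", "Baraka", "Chebet", "Daudi", "Ekai", "Faith"]
rolls = [4, 1, 6, 6, 2]
waves = phased_rollout(villagers, rolls, n_waves=2)
for wave, members in waves.items():
    print(f"wave {wave}: {', '.join(members)}")
```

The obvious limitation is that this is only verifiable to people who can run the code or who trust someone who can; a physical draw from a hat is more directly checkable.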

I think it would be well worth running focus groups to try to figure out best practices for conducting studies in a particular context.[3] As a person from the developed world, I don’t pretend to have all the answers here. But I think it’s something worth considering—as long as we’re working from the premise that empiricism is important and RCTs aren’t inherently morally suspect.

- ^

I am leaving out the Wikipedia link for Unit 731 because learning about Unit 731 will replace any of your plans for the day with staring at the wall contemplating the limitless depths of human evil. I want people to have some time to reflect about whether they want to do this.

- ^

Specifically, people in control villages were more likely to drop out than people in treatment villages, even people who didn’t get a cash transfer. The study couldn’t establish that the effect wasn’t caused by happy people in control villages being more likely to answer surveys.

- ^

I haven’t seen any studies like this but it seems probable to me that someone has done them; if you know, please send me links!

Arepo @ 2024-03-28T21:30 (+9)

The Copenhagen interpretation of ethics strikes again.

huw @ 2024-03-28T23:03 (+12)

It's wild for a news organisation that routinely witnesses and reports on tragedies without intervening (as is standard journalistic practice, for good reason) to not recognise it when someone else does it.

Arepo @ 2024-03-29T04:03 (+2)

I hadn't even thought of that! Yeah, that's some pretty impressive hypocrisy.

Karthik Tadepalli @ 2024-03-28T21:22 (+5)

Good post, more detailed thoughts later, but one nitpick:

As far as I can tell, the deworming project being “one of the most successful RCTs to date” is just wrong. There is widespread disagreement about what we can conclude about deworming from the available evidence, with many respected academics saying that deworming has no effect on education at all. Many RCTs show a much larger effect.

I don't think "most successful RCT" is supposed to mean "most effective intervention" but rather "most influential RCT"; deworming has been picked up by a bunch of NGOs and governments after Miguel and Kremer, plausibly because of that study.

(Conflict note: I know and like Ted Miguel)

Sam_Coggins @ 2024-04-03T23:36 (+4)

I think high ethical standards for RCTs in developing countries are important for:

- Trust: RCTs deliver fewer benefits, or none, if the intended research users do not trust the RCTs and the researchers who conducted them

- Social licence to operate: insensitive RCTs can 'burn bridges' that constrain future RCTs from being permitted. It's not a trivial ask for governments to permit foreign researchers to do experiments on vulnerable people in their countries, especially those with colonial histories.

- Mitigating direct harm: moral uncertainty makes this important, even if there is an envisioned greater good. Empirical uncertainty also makes mitigating direct harm important because envisioned greater good ≠ greater good.

I hold this view as someone enthusiastic about the potential benefits of RCTs, having recently contributed to one in Bihar (India).