Superintelligent AI is necessary for an amazing future, but far from sufficient

By So8res @ 2022-10-31T21:16 (+35)

(Note: Rob Bensinger stitched together and expanded this essay based on an earlier, shorter draft plus some conversations we had. Many of the key conceptual divisions here, like "strong utopia" vs. "weak utopia" etc., are due to him.)

I hold all of the following views:

- Building superintelligent AI is profoundly important. Aligned superintelligence is our best bet for taking the abundant resources in the universe and efficiently converting them into flourishing and fun and art and beauty and adventure and friendship, and all the things that make life worth living.[1]

- The best possible future would probably look unrecognizably alien. Unlocking humanity’s full potential not only means allowing human culture and knowledge to change and grow over time; it also means building and becoming (and meeting and befriending) very new and different sorts of minds, that do a better job of realizing our ideals than the squishy first-pass brains we currently have.[2]

- The default outcome of building artificial general intelligence, using anything remotely like our current techniques and understanding, is not a wondrously alien future. It’s that humanity accidentally turns the reachable universe into a valueless wasteland (at least up to the boundaries defended by distant alien superintelligences).

The reason I expect AGI to produce a “valueless wasteland” by default is not that I want my own present conception of humanity’s values locked in until the end of time.

I want our values to be able to mature! I want us to figure out how to build sentient minds in silicon, who have different types of wants and desires and joys, to be our friends and partners as we explore the galaxies! I want us to cross paths with aliens in our distant travels who strain our conception of what’s good, such that we all come out the richer for it! I want our children to have values and goals that would make me boggle, as parents have boggled at their children for ages immemorial!

I believe machines can be people, and that we should treat digital people with the same respect we give biological people. I would love to see what a Matrioshka mind can do.[3] I expect that most of my concrete ideas about the future will seem quaint and outdated and not worth their opportunity costs, compared to the rad alternatives we'll see when we and our descendants and creations are vastly smarter and more grown-up.

Why, then, do I think that it will take a large effort by humanity to ensure that good futures occur? If I believe in a wondrously alien and strange cosmopolitan future, and I think we should embrace moral progress rather than clinging to our present-day preferences, then why do I think that the default outcome is catastrophic failure?

In short:

- Humanity’s approach to AI is likely to produce outcomes that are drastically worse than, e.g., the outcomes a random alien species would produce.

- It’s plausible — though this is much harder to predict, in my books — that a random alien would produce outcomes that are drastically worse (from a cosmopolitan, diversity-embracing perspective!) than what unassisted, unmodified humans would produce.

- Unassisted, unmodified humans would produce outcomes that are drastically worse than what a friendly superintelligent AI could produce.

The practical take-away from the first point is “the AI alignment problem is very important”; the take-away from the second point is “we shouldn’t just destroy ourselves and hope aliens end up colonizing our future light cone, and we shouldn’t just try to produce AI via a more evolution-like process”;[4] and the take-away from the third point is “we shouldn’t just permanently give up on building superintelligent AI”.

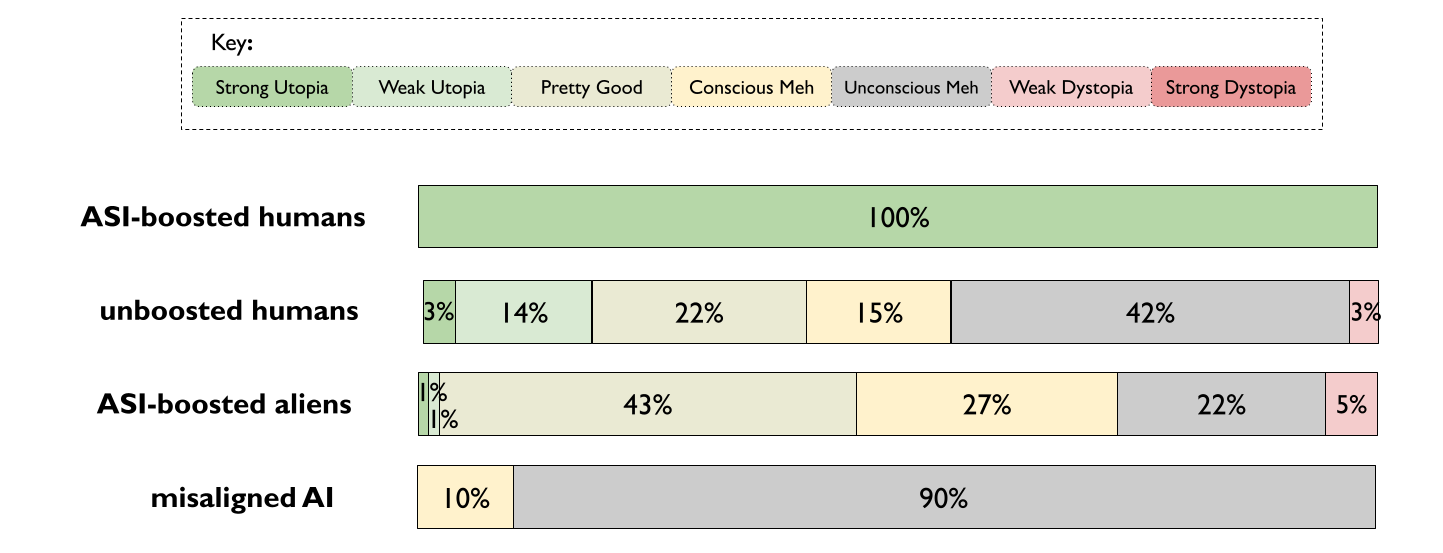

To clarify my views, Rob Bensinger asked me how I’d sort outcomes into the following broad bins:

- Strong Utopia: At least 95% of the future’s potential value is realized.

- Weak Utopia: We lose 5+% of the future’s value, but the outcome is still at least as good as “tiling our universe-shard with computronium that we use to run glorious merely-human civilizations, where people's lives have more guardrails and more satisfying narrative arcs that lead to them more fully becoming themselves and realizing their potential (in some way that isn't railroaded), and there's a far lower rate of bad things happening for no reason”.

- (“Universe-shard” here is short for “the part of our universe that we could in principle reach, before running into the cosmic event horizon or the well-defended borders of an advanced alien civilization”.)

- Pretty Good: The outcome is worse than Weak Utopia, but at least as good as “tiling our universe-shard with computronium that we use to run lives around as good and meaningful as a typical fairly-happy circa-2022 human”.

- Conscious Meh: The outcome is worse than the “Pretty Good” scenario, but isn’t worse than an empty universe-shard. Also, there’s a lot of conscious experience in the future.

- Unconscious Meh: Same as “Conscious Meh”, except there’s little or no conscious experience in our universe-shard’s future. E.g., our universe-shard is tiled with tiny molecular squiggles (a.k.a. “molecular paperclips”).

- Weak Dystopia: The outcome is worse than an empty universe-shard, but falls short of “Strong Dystopia”.

- Strong Dystopia: The outcome is about as bad as physically possible.

For each of the following four scenarios, Rob asked how likely I think it is that the outcome is a Strong Utopia, a Weak Utopia, etc.:

- ASI-boosted humans — We solve all of the problems involved in aiming artificial superintelligence at the things we’d ideally want.

- unboosted humans — Somehow, humans limp along without ever developing advanced AI or radical intelligence amplification. (I’ll assume that we’re in a simulation and the simulator keeps stopping us from using those technologies, since this is already an unrealistic hypothetical and “humans limp along without superintelligence forever” would otherwise make me think we must have collapsed into a permanent bioconservative dictatorship.)

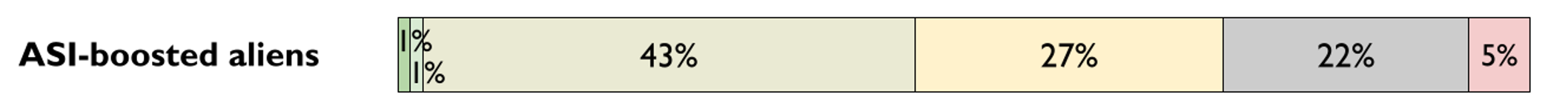

- ASI-boosted aliens — A random alien (that solved their alignment problem and avoided killing themselves with AI) shows up tomorrow to take over our universe-shard, and optimizes the shard according to its goals.

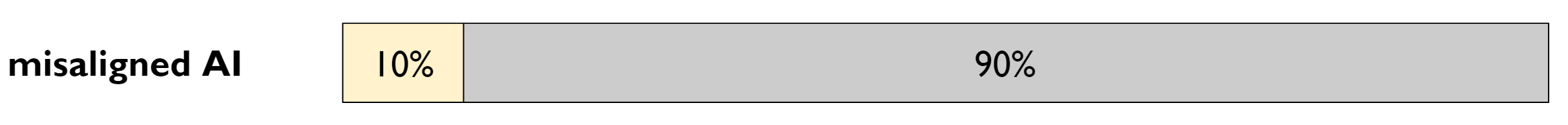

- misaligned AI — Humans build and deploy superintelligent AI that isn’t aligned with what we’d ideally want.

These probabilities are very rough, unstable, and off-the-cuff, and are “ass numbers” rather than the product of a quantitative model. I include them because they provide somewhat more information about my view than vague words like “likely” or “very unlikely” would.

(If you’d like to come up with your own probabilities before seeing mine, here’s your chance.)

.

.

.

.

.

(Spoiler space)

.

.

.

.

.

With rows representing odds ratios:

| | Strong Utopia | Weak Utopia | Pretty Good | Con. Meh | Uncon. Meh | Weak Dystopia | Strong Dystopia |
|---|---|---|---|---|---|---|---|
| ASI-boosted humans | 1 | ~0 | ~0 | ~0 | ~0 | ~0 | ~0 |
| unboosted humans[5] | 1 | 5 | 7 | 5 | 14 | 1 | ~0 |
| ASI-boosted aliens | 1 | 1 | 40 | 20 | 25 | 5 | ~0 |
| misaligned AI | ~0 | ~0 | ~0 | 1 | 9 | ~0 | ~0 |
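Each row of odds ratios turns into probabilities by dividing every entry by the row's total. A minimal Python sketch of that normalization; the names `OUTCOMES`, `ODDS`, and `odds_to_probs` are illustrative, and each “~0” is entered as exactly 0, an assumption that slightly understates those bins:

```python
from typing import Dict, List

# Outcome bins, in the column order of the table above.
OUTCOMES: List[str] = [
    "Strong Utopia", "Weak Utopia", "Pretty Good", "Con. Meh",
    "Uncon. Meh", "Weak Dystopia", "Strong Dystopia",
]

# Odds-ratio rows copied from the table; each "~0" is entered as 0 here,
# which is an assumption (the text says "~0" is above 0% but below 0.5%).
ODDS: Dict[str, List[float]] = {
    "ASI-boosted humans": [1, 0, 0, 0, 0, 0, 0],
    "unboosted humans": [1, 5, 7, 5, 14, 1, 0],
    "ASI-boosted aliens": [1, 1, 40, 20, 25, 5, 0],
    "misaligned AI": [0, 0, 0, 1, 9, 0, 0],
}


def odds_to_probs(odds: List[float]) -> List[float]:
    """Normalize a row of odds ratios so its entries sum to 1."""
    total = sum(odds)
    if total <= 0:
        raise ValueError("odds row must have a positive total")
    return [x / total for x in odds]


if __name__ == "__main__":
    for scenario, row in ODDS.items():
        probs = odds_to_probs(row)
        # Print each bin as a whole-number percentage.
        print(scenario, {o: f"{p:.0%}" for o, p in zip(OUTCOMES, probs)})
```

For the misaligned-AI row, for instance, 1 : 9 becomes 10% and 90%; for the unboosted-humans row the total is 33, so “Uncon. Meh” comes out at 14/33, or about 42%.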

“~0” here means (in probabilities) “greater than 0%, but less than 0.5%”. Converted into (rounded) probabilities by Rob:

Below, I’ll explain why my subjective distributions look roughly like this.

Unboosted humans << Friendly superintelligent AI

I don’t think it’s plausible, in real life, that humanity goes without ever building superintelligence. I’ll discuss this scenario anyway, though, in order to explain why I think it would be a catastrophically bad idea to permanently forgo superintelligence.

If humanity were magically unable to ever build superintelligence, my default expectation (ass number: 4:1 odds in favor) is that we’d eventually be stomped by an alien species (or an alien-built AI). Without the advantages of maxed-out physically feasible intelligence (and the tech unlocked by such intelligence), I think we would inevitably be overpowered.

At that point, whether the future goes well or poorly would depend entirely on the alien's / AI’s values, with human values only playing a role insofar as the alien/AI terminally cares about our preferences.

Why think that humanity will ever encounter aliens?

My current tentative take on the Fermi paradox is:

- If it's difficult for life to evolve (and therefore there are no aliens out there), then we should expect humanity to have evolved at a random (complex-chemistry-compatible) point in the universe's history.

- If instead life evolves pretty readily, then we should expect the future to be tightly controlled by expansionist aliens who want more resources in order to better achieve their goals. (Among other things, because a wide variety of goals imply expansionism.)

- We should then expect new intelligent species (including humans) to all show up about as early in the universe's history as possible, since new life won't be able to arise later in the universe's history (when all the resources will have already been grabbed).

- We should also expect expansionist aliens to expand outwards, in all directions, at an appreciable fraction of the speed of light. This implies that if the aliens originate far from Earth, we should still expect to only be able to see them in the night sky for a short window of time on cosmological timescales (maybe a few million years?).

- Furthermore, if humanity seems to have come into existence pretty early on cosmological scales, then we can roughly estimate the distance to aliens by looking at exactly how early we are.

- If we evolved a million years later than we could have, then intelligent life cannot be so plentiful that there exist lots of aliens (some of them resource-hungry) within a million light years of us, or Earth would have been consumed already.

- It looks like intelligent life indeed evolved on Earth pretty early on cosmological timescales, maybe (rough order-of-magnitude) within a billion years of when life first became feasibly possible in this universe.

- Intelligent life maybe could have evolved ~100 million years earlier on Earth, during the Mesozoic era, if it hadn't gotten hung up on dinosaurs. This means that we're not as early as we could possibly be; we're at least 100 million years late.

- We could be even later than that, if Earth itself arrived on the scene late. But it’s plausible that first- and second-generation stars didn’t produce many planets with the complex chemistry required for life, which limits how much earlier life could have arisen.

- I'd be somewhat surprised to hear that the Earth is ten billion years late, though I don't know enough cosmology to be confident; so I'll treat ten billion years as a weak upper bound on how much earlier than us a lot of intelligent aliens start arising, and 100 million years as a lower bound.

- This "we're early" observation provides at least weak-to-moderate evidence that we're in the second scenario, and that intelligent life therefore evolves readily. We should therefore expect to encounter aliens one day, if we spread to the stars — though plausibly none that evolved much earlier than we did (on cosmic timescales).
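The visibility-window point in the list above can be made a little more concrete. As a back-of-the-envelope sketch, assume (these are simplifying assumptions, not claims from the text) flat space with cosmic expansion ignored, aliens that become visible at their origin as soon as they start expanding, and an expansion front moving at a constant speed $v = \beta c$. If they start at distance $d$, their first light reaches us after $d/c$ and their expansion front after $d/v$, so the window during which we could see them coming is:

$$
\Delta t_{\mathrm{visible}} = \frac{d}{v} - \frac{d}{c} = \frac{d}{c}\left(\frac{1}{\beta} - 1\right)
$$

With $d$ in light-years, $d/c$ is in years. For example, $d = 10^8$ light-years and $\beta = 0.99$ give a window of about $10^6$ years. The window is short compared to the light-travel time only when $\beta$ is close to 1.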

This argument also suggests that we should expect the nearest aliens to be more than 100 million light-years away; and we shouldn't expect aliens to have more of a head start than they are distant. E.g., aliens that evolved a billion years earlier than we did are probably more than a billion light-years away.
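The head-start bound is one line of arithmetic: aliens expanding at speed βc would already have reached us if they were closer than βc times their head start. A minimal Python sketch, assuming flat space and a constant expansion speed (the function names are made up for illustration):

```python
def min_distance_ly(head_start_years: float, beta: float = 1.0) -> float:
    """Lower bound on the distance (in light-years) to expansionist aliens
    that have the given head start on us and have not reached us yet.

    Assumes flat space (cosmic expansion ignored) and an expansion front that
    moves at a constant speed beta * c from the moment the aliens arise.
    """
    if head_start_years < 0:
        raise ValueError("head start must be non-negative")
    if not 0 < beta <= 1:
        raise ValueError("beta must be in (0, 1]")
    # Any aliens closer than this would have reached Earth already.
    return beta * head_start_years


def max_head_start_years(distance_ly: float, beta: float = 1.0) -> float:
    """Largest head start that aliens at this distance can have without
    having reached us yet, under the same assumptions."""
    if distance_ly < 0:
        raise ValueError("distance must be non-negative")
    if not 0 < beta <= 1:
        raise ValueError("beta must be in (0, 1]")
    return distance_ly / beta
```

With β = 1 this reproduces the statements above: aliens that arose at the earliest possible time have a head start equal to our lateness (at least 100 million years), which puts them at least 100 million light-years away, and a billion-year head start implies a distance of at least a billion light-years. Slower expansion loosens the constraint: at β = 0.5, aliens a billion light-years away could have up to a two-billion-year head start.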

This means that even if there are aliens in our future light-cone, and even if those aliens are friendly, there's still quite a lot at stake in humanity’s construction of AGI, in terms of whether the Earth-centered ~250-million-light-year-radius sphere of stars goes towards Fun vs. towards paperclips.

(Robin Hanson has made some related arguments about the Fermi paradox, and various parts of my model are heavily Hanson-influenced. I attribute many of the ideas above to him, though I haven’t actually read his “grabby aliens” paper and don’t know whether he would disagree with any of the above.)

Why think that most aliens succeed in their version of the alignment problem?

I don’t have much of an argument for this, just a general sense that the problem is “hard but not that hard”, and a guess that a fair number of alien species are smarter, more cognitively coherent, and/or more coordinated than humans at the time they reach our technological level. (E.g., a hive-mind species would probably have an easier time solving alignment, since they wouldn’t need to rush.)

I’m currently pessimistic about humanity’s odds of solving the alignment problem and escaping doom, but it seems to me that there are a decent number of disjunctive paths by which a species could be better-equipped to handle the problem, given that it’s strongly in their interest to handle it well.

If I have to put a number on it, I’ll wildly guess that 1/3 of technologically advanced aliens accidentally destroy themselves with misaligned AI.[6]

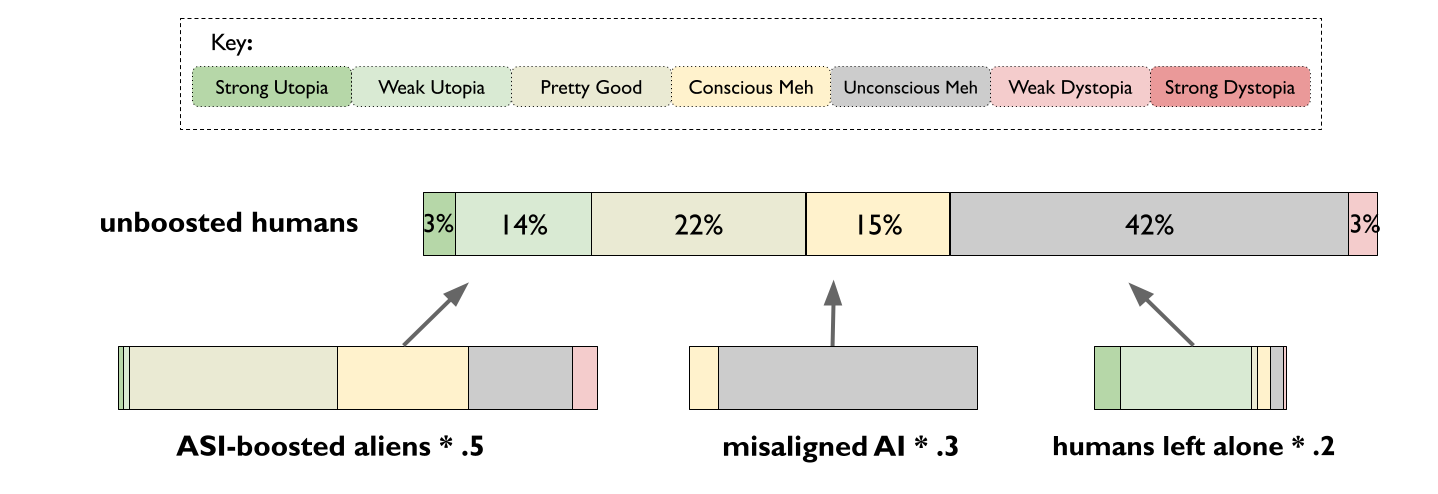

My ass-number distribution for “how well does the future go if humans just futz around indefinitely?” is therefore the sum of “50% chance we get stomped by evolved aliens, 30% chance we get stomped by misaligned alien-built AI, 20% chance we retain control of the universe-shard”:

As with many of the numbers in this post, I haven’t reflected on these much, and might revise them if I spent more minutes considering them. But, again, I figure unstable numbers are more informative in this context than just saying “(un)likely”.
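The “sum” here is a mixture: each case's outcome distribution is weighted by the probability of that case. A minimal Python sketch, using the ASI-boosted aliens row from the table above for the evolved-aliens case, the misaligned AI row for the alien-built-AI case (treating a misaligned alien AI like a misaligned human-built one is an assumption), and the odds the post gives further down for the case where humans retain control. The names are illustrative, and each “~0” is entered as 0:

```python
from typing import Dict, List, Tuple

OUTCOMES: List[str] = [
    "Strong Utopia", "Weak Utopia", "Pretty Good", "Con. Meh",
    "Uncon. Meh", "Weak Dystopia", "Strong Dystopia",
]

# (weight, odds-ratio row) for each way the "humans futz around" future can go.
# Rows come from the post; "~0" is entered as 0 (an assumption).
COMPONENTS: Dict[str, Tuple[float, List[float]]] = {
    "stomped by evolved aliens": (0.5, [1, 1, 40, 20, 25, 5, 0]),
    "stomped by misaligned alien AI": (0.3, [0, 0, 0, 1, 9, 0, 0]),
    "humans retain control": (0.2, [10, 50, 2, 5, 5, 1, 0]),
}


def normalize(odds: List[float]) -> List[float]:
    """Turn a row of odds ratios into probabilities that sum to 1."""
    total = sum(odds)
    return [x / total for x in odds]


def mixture(components: Dict[str, Tuple[float, List[float]]]) -> List[float]:
    """Probability-weighted sum of the component outcome distributions."""
    result = [0.0] * len(OUTCOMES)
    for weight, odds in components.values():
        for i, p in enumerate(normalize(odds)):
            result[i] += weight * p
    return result


if __name__ == "__main__":
    probs = mixture(COMPONENTS)
    # Re-express the mixture as odds relative to Strong Utopia, like the table rows.
    print([round(p / probs[0], 1) for p in probs])
```

This comes out to odds of roughly 1 : 4.3 : 6.8 : 4.6 : 12.8 : 0.9 : 0, in the same ballpark as the unboosted-humans row of the table (1 : 5 : 7 : 5 : 14 : 1 : ~0). The 50/30/20 weights are also close to what combining the 4:1 odds of being stomped with the 1/3 guess gives: 0.8 × 2/3 ≈ 0.53 and 0.8 × 1/3 ≈ 0.27.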

Why build superintelligence at all?

So that we don’t get stomped by superintelligent aliens or alien AI; and so that we can leverage superhuman intelligence to make the future vastly better.

(Seriously, humans, with our <10 working memory slots, are supposed to match minds that can potentially attend to millions of complex thoughts in their mind at once in all sorts of complex relationships??)

In real life, the reason I’m in a hurry to solve the AI alignment problem is because humanity is racing to build AGI, at which point we’ll promptly destroy ourselves (and all of the future value in our universe-shard) with misaligned AGI, if the tech proliferates much. And AGI is software, so preventing proliferation is hard — hard enough that I haven’t heard of a more promising solution than “use one of the first AGIs to restrict proliferation”. But this requires that we be able to at least align that system, to perform that one act.

In the long run, however, the reason I care about the alignment problem is that “what should the future look like?” is a subtle and important problem, and humanity will surely be able to answer it better if we have access to reliable superintelligent cognition.

(Though “we need superintelligence for this” doesn’t entail “superintelligence will do everything for us”. It's entirely plausible to me that aligned AGI does something like "set up some guardrails for humanity, but then pass lots of the choices about how our future goes back to us", with the result that mere-humans end up having lots of say over how the future looks (including the sorts of weirder minds we build or become).)

The “easy” alignment problem is the problem of aiming AGI at a task that restricts proliferation (at least until we can get our act together as a species).

But the main point of restricting proliferation, from my perspective, is to give humanity as much time as it needs to ultimately solve the “hard” alignment problem: aiming AGI at arbitrary tasks, including ones that are far more open-ended and hard-to-formalize.

Intelligence is our world’s universal problem-solver; and more intelligence can mean the difference between finding a given solution quickly, and never finding it at all. So my default guess is that giving up on superintelligence altogether would result in a future that’s orders of magnitude worse than a future where we make use of fully aligned superintelligence.

Fortunately, I see no plausible path by which humanity would prevent itself from ever building superintelligence; and not many people are advocating for such a thing. (Instead, EAs are doing the sane thing of advocating for delaying AGI until we can figure out alignment.) But I still think it’s valuable to keep the big picture in view.

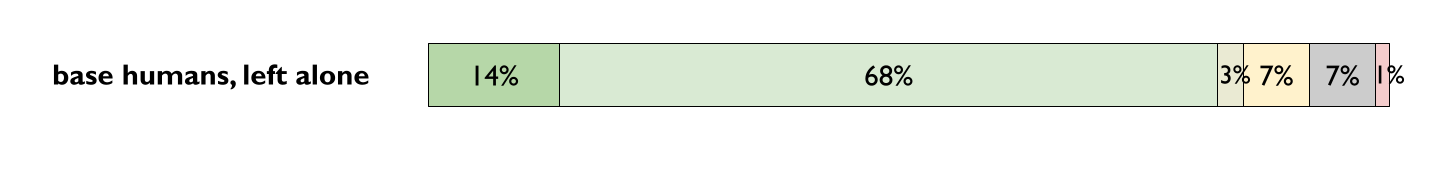

OK, but what if we somehow don't build superintelligence? And don’t get stomped by aliens or alien AI, either?

My ass-number distribution for that scenario, stated as an odds ratio, is something like:

| Strong Utopia | Weak Utopia | Pretty Good | Con. Meh | Uncon. Meh | Weak Dystopia | Strong Dystopia |
|---|---|---|---|---|---|---|
| 10 | 50 | 2 | 5 | 5 | 1 | ~0 |

I.e.:

The outcome’s goodness depends a lot on exactly how much intelligence amplification or AI assistance we allow in this hypothetical; and it depends a lot on whether we manage to destroy ourselves (or permanently cripple ourselves, e.g., with a stable totalitarian regime) before we develop the civilizational tech to keep ourselves from doing that.

If we really lock down on human intelligence and coordination ability, that seems real rough. But if there's always enough freedom and space-to-expand that pilgrims can branch off and try some new styles of organization when the old ones are collapsing under their bureaucratic weight or whatever, then I expect that eventually even modern-intelligence humans start capturing lots and lots of the stars and converting lots and lots of stellar negentropy into fun.[7]

If you don't have the pilgrimage-is-always-possible clause, then there's a big chance of falling into a dark attractor and staying there, and never really taking advantage of the stars.

In constraining human intelligence, you’re closing off the vast majority of the space of exploration (and a huge fraction of potential value). But there’s still a lot of mindspace to explore without going too far past current intelligence levels.

In good versions of this scenario, a lot of the good comes from humans being like, “I guess we do the same things the superintelligence would have done, but the long way.” At a lot of junctures, humanity has to do work that a superintelligence would naturally handle, in order to make the future eudaimonic.

It’s probably possible to eventually do a decent amount of that work with current-human minds, if you have an absurdly large number of them collaborating just right, and if you’re willing to go very slow. (And if humanity hasn’t locked itself into a bad state.)

I’ll note in passing that the view I’m presenting here reflects a super low degree of cynicism relative to the surrounding memetic environment. I think the surrounding memetic environment says "humans left unstomped tend to create dystopias and/or kill themselves", whereas I'm like, "nah, you'd need somebody else to kill us; absent that, we'd probably do fine". (I am not a generic cynic!)

Still, ending up in this scenario would be a huge tragedy, relative to how good the future could go.

A different way of framing the question “how good is this scenario?” is “would you rather have really quite a lot of the alien ant-queen’s will, or a smidge of poorly-implemented fun?”.

In that case, I suspect (non-confidently) that I’d take the fun over the ant-queen’s will. My guess is that the aliens-control-the-universe-shard scenario is net-positive, but that it loses orders of magnitude of cosmopolitan utility compared to the “cognitively constrained humans” scenario.

To explain why I suspect this, I’ll state some of my (mostly low-confidence) guesses about the distribution of smart non-artificial minds.

Alien CEV << Human CEV

On the whole, I’m highly uncertain about the expected value of “select an evolved alien species at random, and execute their coherent extrapolated volition (CEV) on the whole universe-shard”.

(Quoting Eliezer: "In poetic terms, our coherent extrapolated volition is our wish if we knew more, thought faster, were more the people we wished we were, had grown up farther together; where the extrapolation converges rather than diverges, where our wishes cohere rather than interfere; extrapolated as we wish that extrapolated, interpreted as we wish that interpreted.")

My point estimate is that this outcome is a whole lot better than an empty universe, and that the bad cases (such as aliens that both are sentient and are unethical sadists) are fairly rare. But humans do provide precedent for sadism and sentience! And it sure is hard to be confident, in either direction, from a sample size of 1.

Moreover, I suspect that it would be good (in expectation) for humans to encounter aliens someday, even though this means that we’ll control a smaller universe-shard.

I suspect this would be a genuinely better outcome than us being alone, and would make the future more awesome by human standards.

To explain my perspective on this, I'll talk about a few different questions in turn:

- How many technologically advanced alien species are sentient?

- How likely is it that such aliens produce extremely-good or extremely-bad outcomes?

- How will aliens feel about us?

- How many aliens are more like paperclip maximizers? How many are more like cosmic brethren to humanity, with goals that are very alien but (in their alien way) things of wonder, complexity, and beauty?

- How should we feel about our alien brethren?

- What do I mean by “good”, “bad”, “cosmopolitan value”, etc.?

How many advanced alien species are sentient?

I expect more overlap between alien minds and human minds, than between AI minds (of the sort we’re likely to build first, using methods remotely resembling current ML) and human minds. But among aliens that were made by some process that’s broadly similar to how humans evolved, it's pretty unclear to me what fraction we would count as "having somebody home” in the limit of a completed science of mind.

I have high enough uncertainty here that picking a median doesn’t feel very informative. I have maybe 1:2 or 1:3 odds on “lots of advanced alien races are sentient” : “few advanced alien races are sentient”, conditional on my current models not including huge mistakes. (And I’d guess there’s something like a 1/4 chance of my models containing huge mistakes here, in which case I’m not sure what my distribution looks like.)

“A nonsentient race that develops advanced science and technology” may sound like a contradiction in terms: How could a species be so smart and yet lack "somebody there to feel things" in the way humans seem to? How could something perform such impressive computations and yet “the lights not be on”?

I won’t try to give a full argument for this conclusion here, as this would require delving into my (incomplete but nontrivial) models of what's going on in humans just before they insist that there's something it's like to be them.[8] (As well as my model of evolutionary processes and general intelligence, and how those connect to consciousness.) But I’ll say a few words to hopefully show why this claim isn’t a wild claim, even if you aren’t convinced of it.

My current best models suggest that the “somebody-is-home” property is a fairly contingent coincidence of our evolutionary history.

On my model, human-style consciousness is not a necessary feature of all optimization processes that can efficiently model the physical world and sort world-states by some criterion; nor is it a necessary feature of all optimization processes that can abstractly represent their own state within a given world-model.

To better intuit the idea of a very smart and yet unconscious process, it might help to consider a time machine that outputs a random sequence of actions, then resets time and outputs a new sequence of actions, unless a specified outcome occurs.

The time machine does no planning, no reflection, no learning, no thinking at all. It just detects whether an outcome occurs, and hits “refresh” on the universe if the outcome didn’t happen.
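To make the “no thinking, just resetting” idea concrete, here is a toy Python sketch of the same generate-and-test loop. Everything in it is a hypothetical illustration: the integer “world”, the three actions, and the names `run_world` and `time_machine` are made up for this example. The point is only that the loop contains no world-model, planning, or learning, yet it reliably finds action sequences that produce a very specific outcome:

```python
import random
from typing import Callable, Dict, List, Optional, Sequence, Tuple

# Hypothetical toy "world": the state is a single integer, and each action
# transforms it. Nothing below ever looks inside these functions to plan.
ACTIONS: Dict[str, Callable[[int], int]] = {
    "inc": lambda s: s + 1,
    "dec": lambda s: s - 1,
    "dbl": lambda s: s * 2,
}


def run_world(actions: Sequence[str], start: int = 1) -> int:
    """Play a sequence of actions forward from the start state."""
    state = start
    for name in actions:
        state = ACTIONS[name](state)
    return state


def time_machine(
    outcome: Callable[[int], bool],
    length: int,
    rng: random.Random,
    max_resets: int = 1_000_000,
) -> Optional[Tuple[List[str], int]]:
    """Output random action sequences, 'resetting time' until the outcome occurs.

    Returns (successful actions, number of resets before success), or None if
    it gives up after max_resets attempts. There is no planning, reflection,
    or learning: the only operation is checking whether the outcome happened.
    """
    names = list(ACTIONS)
    for resets in range(max_resets):
        actions = [rng.choice(names) for _ in range(length)]
        if outcome(run_world(actions)):
            return actions, resets
    return None


if __name__ == "__main__":
    result = time_machine(lambda s: s == 37, length=8, rng=random.Random(0))
    print(result)
```

For example, `time_machine(lambda s: s == 37, length=8, rng=random.Random(0))` returns an eight-action sequence that turns 1 into 37. Because each reset is an independent draw, the expected number of attempts is 1/p, where p is the fraction of action sequences that produce the outcome; the time machine in the thought experiment pays no cost for resets, which is what makes it so powerful.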

In spite of this lack of reasoning, this time machine is an incredibly powerful optimizer. It exhibits all the behavioral properties of a reasoner, including many of the standard difficulties of (outer) AI alignment.

If the machine resets any future that isn't full of paperclips, then we should expect it to reset until machinery exists that's busily constructing von Neumann probes for the sake of colonizing the universe and paperclipping it.

And we should expect the time machine and the infrastructure it builds to be well-defended, since "you can't make the coffee if you're dead", and you can’t make paperclips without manufacturing equipment. The optimization process exhibits convergent instrumental behavior and behaves as though it's "trying" to route around obstacles and adversaries, even though there's no thinking-feeling mind guiding it.[9]

You can’t actually build a time machine like this, but the example helps illustrate the fact that in principle, powerful optimization — steering the future into very complicated and specific states of affairs, including states that require long sequences of events to all go a specific way — does not require consciousness.

We recognize that a textbook can store a lot of information and yet not “experience” that information. What’s less familiar is the idea that powerful optimization processes can optimize without “experience”, partly (I claim) because we live in a world where there are many simpler information-storing and computational systems, but where the only powerful optimization processes are humans.

Moreover, we don’t know on a formal level what general intelligence or sentience consists in, so we only have our evolved empathy to help us model and predict the (human) general intelligences in our environment. Our “subjective point of view”, from that empathic perspective, feels like something basic and intrinsic to every mental task we perform, rather than feeling like a complicated set of cogs and gears doing specific computational tasks.

So when something is not only “storing a lot of useful information” but “using that information to steer environments, like an agent”, it’s natural for us to use our native agent-modeling software (i.e., our human-brain-modeling software) to try to simulate its behavior. And then it just “feels as though” this human-like system must be self-aware, for the same reason it feels obvious that you’re conscious, that other humans are conscious, etc.

Moreover, it’s observably the case that consciousness-ascription is hyperactive. We readily see faces and minds in natural phenomena. We readily imagine simple stick-figures in comic strips experiencing rich mental lives.

A concern I have with the whole consciousness discussion in EA-adjacent circles is that people seem to consider their empathic response to be important evidence about the distribution of qualia in Nature, despite the obvious hyperactivity.

Another concern I have is that most people seem to neglect the difference between “exhibiting an external behavior in the same way that humans do, and for the same reasons we do”, and “having additional follow-on internal responses to that behavior”.

An example: If we suppose that it’s very morally important for people to internally subvocalize “I sneezed” after sneezing, and you do this whenever you sneeze, and all your (human) friends report that they do it too, it would nonetheless be a mistake to see a dog sneeze and say: “See! They did the morally relevant thing! It would be weird to suppose that they didn’t, when they’re sneezing for the same ancestral reasons as us!”

The ancestral reasons for the subvocalization are not the same as the ancestral reasons for the sneeze; and we already have an explanation for why animals sneeze, that doesn’t invoke any process that necessarily produces a follow-up subvocalization.

None of this rules out that dogs subvocalize in a dog-mental-language, on its own; but it does mean that drawing any strong inferences here requires us to have some model of why humans subvocalize.

We can debate what follow-on effects are morally relevant (if any), and debate what minds exhibit those effects. But it concerns me that “there are other parts downstream of the sneeze / flinch / etc. that are required for sentience, and not required for the sneeze” doesn’t seem to be in many people’s hypothesis space. Instead, they observe a behavioral analog, and move straight to a confident ascription “the internal processes accompanying this behavior must be pretty similar”.

In general, I want to emphasize that a blank map doesn't correspond to a blank territory. If you currently don't understand the machinery of consciousness, you should still expect that there are many, many details to learn, whether consciousness is prevalent among alien races or rare.

If a machine isn't built to notice how complicated or contingent it is when it does a mental action we choose to call "introspection", it doesn't thereby follow that the machine is simple, or that it can only be built one way.

Our prior shouldn’t be that consciousness is simple, given the many ways it appears to interact with a wide variety of human mental faculties and behaviors (e.g., its causal effects on the words I’m currently writing); and absent a detailed model of consciousness, you shouldn’t treat your empathic modeling as a robust way of figuring out whether an alien has this particular machinery, since the background facts that make empathic inference pretty reliable in humans (overlap in brain architecture, genes, evolutionary history, etc.) don’t hold across the human-alien gap.

Again, I haven’t given my fragments-of-a-model of consciousness here (which would be required to argue for my probabilities). But I’ve hopefully said enough to move my view from “obviously crazy” to “OK, I see how additional arguments could potentially plug in here to yield non-extreme credences on the prevalence of sapient-but-nonsentient evolved optimizers”.

How likely are extremely good and extremely bad outcomes?

If we could list out the things that 90+% of spacefaring alien races have in common, there’s no guarantee that this list would be very long. I recommend stories like Three Worlds Collide and “Kindness to Kin” for their depiction of genuinely different alien minds, as opposed to the humans in funny suits common to almost all sci-fi.

That said, I do think there’s more overlap (in expectation) between minds produced by processes similar to biological evolution, than between evolved minds and (unaligned) ML-style minds. I expect more aliens to care about at least some things that we vaguely recognize, even if the correspondence is never exact.

On my models, it’s entirely possible that there just turns out to be ~no overlap between humans and aliens, because aliens turn out to be very alien. But “lots of overlap” is also very plausible. (Whereas I don’t think “lots of overlap” is plausible for humans and misaligned AGI.)

To the extent aliens and humans overlap in values, it's unclear to me whether this is mostly working to our favor or detriment. It could be that a random alien world tends to be worse than a random AI-produced world, exactly because the alien shares more goal-content in common with us, and is therefore more likely to optimize or pessimize quantities that we care about.

If I had to guess, though, I would guess that this overlap makes the alien scenario better in expectation than the misaligned-AI scenario, rather than worse.

A special case of “values overlap increases variance” is that the worst outcomes non-human optimizers produce, as well as the best ones, are likely to come from conscious aliens. This is because:

- Aliens that evolved consciousness are far more likely to end up with consciousness involved in their evolved goals.

- Conscious states have the potential to be far worse than unconscious ones, or far better.

Since I think it’s pretty plausible that most aliens are nonsentient, I expect most alien universe-shards to look “pretty good” or “meh” from a human perspective, rather than “amazing” or “terrible”.

Note that there’s an enormous gap between "pretty dystopian" and "pessimally dystopian". Across all the scenarios (whether alien, human, or AI), I assign ~0% probability to Strong Dystopia, the sort of scenario you get if something is actively pessimizing the human utility function. "Aliens who we'd rather there be nothing than their CEV" is an immensely far cry from "negative of our CEV". But I’d guess that even Weak Dystopias are fairly rare, compared to “meh” or good outcomes of alien civilizations.

How will aliens feel about us?

Given that I think aliens plausibly tend to produce pretty cool universe-shards, a natural next question is: if we encounter a random alien race one day, will they tend to be glad that they found us? Or will they tend to be the sort of species that would have paid a significant number of galaxies to have paved over Earth before we ascended, so that they could have had all our galaxies instead?

I think my point estimate there is "most aliens are not happy to see us", but I’m highly uncertain. Among other things, this question turns on how often the mixture of "sociality (such that personal success relies on more than just the kin-group), stupidity (such that calculating the exact fitness-advantage of each interaction is infeasible), and speed (such that natural selection lacks the time to gnaw the large circle of concern back down)" occurs in intelligent races’ evolutionary histories.

These are the sorts of features of human evolutionary history that resulted in us caring (at least upon reflection) about a much more diverse range of minds than “my family”, “my coalitional allies”, or even “minds I could potentially trade with” or “minds that share roughly the same values and faculties as me”.

Humans today don’t treat a family member the same as a stranger, or a sufficiently-early-development human the same as a cephalopod; but our circle of concern is certainly vastly wider than it could have been, and it has widened further as we’ve grown in power and knowledge.

My tentative median guess is that there are a lot of aliens out there who would be grudging trade partners (who would kill us if we were weaker), and also a smaller fraction who are friendly.

I don’t expect significant violent conflict (or refusal-to-trade) between spacefaring aliens and humans-plus-aligned-AGI, regardless of their terminal values, since I expect both groups to be at the same technology level (“maximal”) when they meet. At that level, I don’t expect there to be a cheap way to destroy rival multi-galaxy civilizations, and I strongly expect civilizations to get more of what they want via negotiation and trade than via a protracted war.[10]

I also don’t think humans ought to treat aliens like enemies just because they have very weird goals. And, extrapolating from humanity’s widening circle of concern and increased soft-heartedness over the historical period — and observing that this trend is caused by humans recognizing and nurturing seeds of virtue that they had within themselves already — I don’t expect our descendants in the distant future to behave cruelly toward aliens, even if the aliens are too weak to fight back.[11]

I also feel this way even if the aliens don’t reciprocate!

Like, one thing that is totally allowed to happen is that we meet the ant-people, and the ant-people don’t care about us (and wouldn’t feel remorse about killing us, a la the buggers in Ender’s Game). So they trade with us because they’re not able to kill us, and the humans are like “isn’t it lovely that there’s diversity of values and species! we love our ant-friends” while the aliens are like “I would murder you and lay eggs in your corpse given the slightest opening, and am refraining only because you’re well-defended by force-backed treaty”, and the humans are like “oh haha you cheeky ants” and make webcomics and cartoons featuring cute anthropomorphized ant-people discovering the real meaning of love and friendship and living in peace and harmony with their non-ant-person brothers and sisters.

To which the ant-person response is, of course, “You appear to be imagining empathic levers in my mind that did not receive selection pressure in my EEA. How I long to murder you and lay eggs in your corpse!”

To which my counter-response is, of course: “Oh, you cheeky ants!”

(Respectfully. I don’t mean to belittle them, but I can’t help but be charmed to some degree.)

Like, reciprocity helps, but my empathy and goodwill for others is not contingent upon reciprocation. We can realize the gains from peace, trade, and other positive-sum interactions without being best buddies; and we can like the ants even if the ants don’t like us back.

Cosmopolitan values are good even if they aren't reciprocated. This is one of the ways that you can tell that cosmopolitan values are part of us, rather than being universal: We'd still want to be fair and kind to the ant-folk, even if they were wanting to lay eggs in our corpse and were refraining only because of force-backed treaty.

This is part of my response to protests like “Why are you looking at everything from the perspective of human values?” Regard for all sentients, including aliens, isn't up for grabs, regardless of whether it's found only in us, or also in them.

How likely (and how good) are various outcomes on the paperclipper-to-brethren continuum?

Short answer: I’m wildly uncertain about how likely various points on this continuum are, and (outside of the most extreme good and bad outcomes) I’m very uncertain about their utility as well.

I expect an alien’s core goals to reflect pretty different shatterings of evolution’s “fitness” goal, compared to core human goals, and compared to other alien races’ goals. (See also the examples in “Niceness is unnatural.”)

I expect most aliens either…

- … look something like paperclip/squiggle maximizers from our perspective, converting galaxies into unconscious and uninteresting configurations;

- … or look like very-alien brethren, who like totally different things from humans but in a way where we rightly celebrate the diversity;

- … or fall somewhere ambiguously in between those two categories.

Figuring out the utility of different points on this continuum (from an optimally reasonable and cosmopolitan perspective) seems like a wide-open philosophy and (xeno)psychology question. Ditto for figuring out the probability of different classes of outcomes.

Concretely: I expect that there's a big swath of aliens whose minds and preferences are about as weird and unrecognizable to us as the races in Three Worlds Collide — crystalline self-replicators, entities with no brain/genome segregation, etc. — and that turn out to fall somewhere between “explosive self-replicating process that paperclipped the universe and doesn’t have feelings/experiences/qualia” and “buddies”.

If we cross this question with “how likely are aliens to be conscious?”, we get a 2x2 grid of scenarios:

| | conscious | unconscious |
|---|---|---|
| squiggle maximizer | A sentient alien that converts galaxies into something ~valueless. | A non-sentient alien that converts galaxies into something ~valueless. |
| alien brethren | A sentient alien that converts galaxies into something cool. | A non-sentient alien that converts galaxies into something cool. |

I think my point estimate is “a lot more aliens fall on the very-alien-brethren side than on the squiggle-maximizer side”. But I wouldn’t be surprised to learn I’m wrong about that.

My guess would be that the most common variety of alien is “unconscious brethren”, followed by “unconscious squiggle maximizer”, then “conscious brethren”, then “conscious squiggle maximizer”.

It might sound odd to call an unconscious entity “brother”, but it's plausible to me that on reflection, humanity strongly prefers universes with evolved-creatures doing evolved-creature-stuff (relative to an empty universe), even if none of those creatures are conscious.

Indeed, I consider it plausible that “a universe full of humans trading with a weird extraterrestrial race of crystal formations that don’t have feelings” could turn out to be more awesome than the universe where we never run into any true aliens, even though this means that humans control a smaller universe-shard. It's plausible to me that we'd turn out not to care all that much about our alien buddies having first-person “experiences”, if they still make fascinating conversation partners, have an amazing history and a wildly weird culture, have complex and interesting minds, etc. (The question of how much we care about whether aliens are in fact sentient, as opposed to merely sapient, seems open to me.)

And also, it’s not clear that “feelings” or “experiences” or “qualia” (or the nearest unconfused versions of those concepts) are pointing at the right line between moral patients and non-patients. These are nontrivial questions, and (needless to say) not the kinds of questions humans should rush to lock in an answer on today, when our understanding of morality and minds is still in its infancy.

How should we feel about encountering alien brethren?

Suppose that we judge that the ant-queen is more like a brother, not a squiggle maximizer. As I noted above, I think that encountering alien brethren would be a good thing, even though this means that the descendants of humanity will end up controlling a smaller universe-shard. (And I’d guess that many, and perhaps most, spacefaring aliens are brethren-ish, rather than paperclipper-ish.)

This is not to say that I think human-CEV and alien-CEV are equally good (as humans use the word “good”). It's real hard to say what the ratios are between "human CEV", “unboosted humans”, "random alien CEV (absent any humans)", and "random misaligned AI", but my vague intuition is that there's a big factor drop at each of those steps; and I would guess that this still holds even if we filter out the alien paperclippers and alien unethical sadists.

But it is to say that I think we would be enriched by getting to meet minds that were not ourselves, and not of our own creation. Intuitively, that sounds like an awesome future. And I think this sense of visceral fascination and excitement, the “holy shit that’s cool!” reaction, tends to be an important (albeit fallible) indicator of “which outcomes will we end up favoring upon reflection?”.

It’s a clue to our values that we find this scenario so captivating in our fiction, and that our science fiction takes such a strong interest in the idea of understanding and empathizing with alien minds.

Much of the value of alien civilizations might well come from the interaction of their civilization and ours, and from the fairness (which may well turn out to be a major terminal human value) of them getting their just fraction of the universe.

And in most scenarios like “we meet alien space ants and become trading partners”, I’d guess that the space ants’ own universe-shard probably has more cosmopolitan value than a literally empty universe-shard of the same size. It’s cool, at least! Maybe the ant-queens are even able to experience it, and their experiences are cool; that would make me much more confident that indeed, their universe-shard is a lot better than an empty one. And maybe the ant-queens come pretty close to caring about their kids, in ways that faintly echo human values; who knows?

We should be friendly toward an alien race like that, I claim. But still, I’d expect the vast majority of the cosmopolitan value in a mixed world of humans+ants to come from the humans, and from the two groups’ interaction.

So, for example, my guess is that we shouldn’t be indifferent about whether a particular galaxy ends up in our universe-shard versus an alien neighbor’s shard. (Though this is another question where it seems good to investigate far more thoroughly before locking in a decision.)

And if our reachable universe-shard turns out to be 3x as large and resource-rich as theirs, we probably shouldn’t give them a third of our stars to make it fifty-fifty. I think that humanity values fairness a great deal, but not enough to outweigh the other cosmopolitan value that would be burnt (in the vast majority of cases) if we offered such a gift.[12]

Hold up, how is this “cosmopolitan”?

A reasonable objection to raise here is: “Hold on, how can it be ‘cosmopolitan’ to favor human values over the values of a random alien race? Isn’t the whole point of ‘cosmopolitan value’ that you’re not supposed to prioritize human-specific values over strange and beautiful alien perspectives?”

In short, my response is to emphasize that cosmopolitanism is a human value. If it’s also an alien value, then that’s excellent news; but it’s at least a value that is in us.

When we speak of “better” or “worse” outcomes, we (probably) mean “better/worse according to cosmopolitan values (that also give fair fractions to the human-originated styles of Fun in particular)”, at least if these intuitions about cosmopolitanism hold on reflection. (Which I strongly suspect they do.)

In more detail, my response is:

- Cosmopolitanism is a contentful value that’s inside us, not a mostly-contentless function averaging the preferences of all nearby optimizers (or all logically possible optimizers).

- The content of cosmopolitanism is complex and fragile, for the same reason unenlightened present-day human values are complex and fragile.

- There isn’t anything wrong, or inconsistent, with cosmopolitanism being “in us”. And if there were some value according to which cosmopolitanism is wrong, then that value too would need to be in us, in order to move us.

1. Cosmopolitanism isn’t “indifference” or “take an average of all possible utility functions”.

E.g., a good cosmopolitan should be happier to hear that a weird, friendly, diverse, sentient alien race is going to turn a galaxy into an amazing megacivilization, than to hear that a paperclipper is going to turn a galaxy into paperclips. Cosmopolitanism (of the sort that we should actually endorse) shouldn’t be totally indifferent to what actually happens with the universe.

It’s allowed to turn out that we find a whole swath of universe that is the moral equivalent of "destroyed by the Blight", which kinda looks vaguely like life if you squint, but clearly isn't sentient, and we're like "well let's preserve some Blight in museums, but also do a cleanup operation". That's just also a way that interaction with aliens can go; the space of possible minds (and of things left in those minds' wake) is vast.

And if we do find the Blight, we shouldn’t lie to ourselves that blighted configurations of matter are just as good as any other possible configuration of matter.

It’s allowed to turn out that we find a race of ant-people (who want to kill us and lay eggs in our corpse, yadda yadda), and that the ant-people are getting ready to annihilate the small Fuzzies that haven’t yet reached technological maturity, on a planet that’s inside the ant-people’s universe-shard.

Where, obviously, you trade rather than war for the rights of the Fuzzies, since war is transparently an inefficient way to resolve conflicts.

But the one thing you don’t do is throw away some of your compassion for the Fuzzies in order to “compromise” with the ant-people’s lack-of-compassion.

The right way to do cosmopolitanism is to care about the Fuzzies’ welfare along with the ant-people’s welfare — regardless of whether the Fuzzies or ant-people reciprocate, and regardless of how they feel about each other — and to step up to protect victims from their aggressors.

There’s a point here that the cosmopolitan value is in us, even though it’s (in some sense) not just about us.

These values are not necessarily in others, no matter how much we insist that our values aren’t human-centric, aren’t speciesist, etc. And because they’re in us, we’re willing to uphold them even when we aren’t reciprocated or thanked.

It’s those values that I have in mind when I say that outcomes are “better” or “worse”. Indeed, I don’t know what other standard I could appeal to, if not values that bear some connection to the contents of our own brains.

But, again, the fact that the values are in us, doesn’t mean that they’re speciesist. A human can genuinely prefer non-speciesism, for the same reason a citizen of a nation can genuinely prefer non-nationalism. Looking at the universe through a lens that is in humans does not mean looking at the universe while caring only about humans. The point is that we'll keep on caring about others, even if we turn out to be alone in that.

2. Cosmopolitan value is fragile, for the same reason unenlightened present-day human values are fragile.

See “Complex Value Systems Are Required to Realize Valuable Futures” and the Arbital article on cosmopolitan value.

There are many ways to lose an enormous portion of the future’s cosmopolitan value, because the simple-sounding phrase “cosmopolitan value” translates into a very complex logical object (making many separate demands of the future) once we start trying to pin it down with any formal precision.

Our prior shouldn’t be that a random intelligent species would happen to have a utility function pointing at exactly the right high-complexity object. So it should be no surprise if a large portion of the future’s value is lost in switching between different alien species’ CEVs, e.g., because half of the powerful aliens are the Blight and another half are the ant-queens, and both of them are steamrolling the Fuzzies before the Fuzzies can come into their own. (That's a way the universe could be, for all that we protest that cosmopolitanism is not human-centric.)

And even if the aliens turn out to have some respect for something roughly like cosmopolitan values, that doesn't mean that they'll get as close as they could if they had human buddies (who have another five hundred million years of moral progress under our belts) in the mix.

3. There is no radically objective View-From-Nowhere utility function, no value system written in the stars.

(... And if there were, the mere fact that it exists in the heavens would not be a reason for human CEV to favor it. Unless there’s some weird component of human CEV that says something like “if you encounter a pile of sand on a planet somewhere that happens to spell out a utility function in Morse code, you terminally value switching to some compromise between your current utility function and that utility function”. … Which does not seem likely.)

If our values are written anywhere, they’re written in our brain states (or in some function of our brain states).

And this holds for relatively enlightened, cosmopolitan, compassionate, just, egalitarian, etc. values in exactly the same way that it holds for flawed present-day human values.

In the long run, we should surely improve on our brains dramatically, or even replace ourselves with an entirely new sort of mind (or a wondrously strange intergalactic patchwork of different sorts of minds).

But we shouldn’t be indifferent about which sorts of minds we become or create. And the answer to “which sorts of minds/values should we bring into being?” is some (complicated, not-at-all-trivial-to-identify) function of our current brain. (What else could it be?)

Or, to put it another way: the very idea that our present-day human values are “flawed” has to mean that they’re flawed relative to some value function that’s somehow pointed at by the human brain.

There’s nothing wrong (or even particularly strange) about a situation like “Humans have deeper, stronger (‘cosmopolitan’) values that override other human values like ‘xenophobia’”.

Mostly, we’re just not used to thinking in those terms because we’re used to navigating human social environments, where an enormous number of implicit shared values and meta-values can be taken for granted to some degree. It takes some additional care and precision to bring genuinely alien values into the conversation, and to notice when we’re projecting our own values. (Onto other species, or onto the Universe.)

If a value (or meta-value or meta-meta-value or whatever) can move us to action, then it must be in some sense a human value. We can hope to encounter aliens who share our values to some degree; but this doesn’t imply that we ought (in the name of cosmopolitanism, or any other value) to be indifferent to what values any alien brethren possess. We should probably assist the Fuzzies in staving off the Blight, on cosmopolitan grounds. And given value fragility (and the size of the cosmic endowment), we should expect the cosmopolitan-utility difference between totally independent evolved value systems to be enormous.

This, again, is no reason to be any less compassionate, fair-minded, or tolerant. But also, compassion and fair-mindedness and tolerance don’t imply indifference over utility functions either!

3. The superintelligent AI we’re likely to build by default << Aliens

In the case of aliens, we might imagine encountering them hundreds of millions or billions of years in the future — plenty of time to anticipate and plan for a potential encounter.

In the case of AI, the issue is much more pressing. We have the potential to build superintelligent AI systems very soon; and I expect far worse outcomes from misaligned AI optimizing a universe-shard than from a random alien doing the same (even though there’s obviously nothing inherently worse about silicon minds than about biological minds, alien crystalline minds, etc.).

For examples of why the first AGIs are likely to immediately blow human intelligence out of the water, see AlphaGo Zero and the Foom Debate and Sources of advantage for digital intelligence. For a discussion of why alignment seems hard, and why such systems are likely to kill us if we fail to align them, see So Far and AGI Ruin.

The basic reason why I expect AI systems to produce worse outcomes than aliens is that other evolved creatures are more likely to have overlap with us, by dint of their values being shaped by more similar processes. And some of the particular ways in which misaligned AI is likely to differ from an evolved species suggest a much more homogeneous and simple future. (Like “a universe tiled with molecular squiggles”.)[13]

The classic example of AGI ruin is the "paperclip maximizer" (which should probably be called a "molecular squiggle maximizer" instead):

So what actually happens as near as I can figure (predicting future = hard) is that somebody is trying to teach their research AI to, god knows what, maybe just obey human orders in a safe way, and it seems to be doing that, and a mix of things goes wrong like:

The preferences not being really readable because it's a system of neural nets acting on a world-representation built up by other neural nets, parts of the system are self-modifying and the self-modifiers are being trained by gradient descent in Tensorflow, there's a bunch of people in the company trying to work on a safer version but it's way less powerful than the one that does unrestricted self-modification, they're really excited when the system seems to be substantially improving multiple components, there's a social and cognitive conflict I find hard to empathize with because I personally would be running screaming in the other direction two years earlier, there's a lot of false alarms and suggested or attempted misbehavior that the creators all patch successfully, some instrumental strategies pass this filter because they arose in places that were harder to see and less transparent, the system at some point seems to finally "get it" and lock in to good behavior which is the point at which it has a good enough human model to predict what gets the supervised rewards and what the humans don't want to hear, they scale the system further, it goes past the point of real strategic understanding and having a little agent inside plotting, the programmers shut down six visibly formulated goals to develop cognitive steganography and the seventh one slips through, somebody says "slow down" and somebody else observes that China and Russia both managed to steal a copy of the code from six months ago and while China might proceed cautiously Russia probably won't, the agent starts to conceal some capability gains, it builds an environmental subagent, the environmental agent begins self-improving more freely, undefined things happen as a sensory-supervision ML-based architecture shakes out into the convergent shape of expected utility with a utility function over the environmental model, the main result is driven by whatever the self-modifying decision systems happen to see as locally optimal in their supervised system locally acting on a different domain than the domain of data on which it was trained, the light cone is transformed to the optimum of a utility function that grew out of the stable version of a criterion that originally happened to be about a reward signal counter on a GPU or God knows what.

Perhaps the optimal configuration for utility per unit of matter, under this utility function, happens to be a tiny molecular structure shaped roughly like a paperclip.

That is what a paperclip maximizer is. It does not come from a paperclip factory AI. That would be a silly idea and is a distortion of the original example.

This example is obviously comically conjunctive; the point is in no way "we have a crystal ball, and can predict that things will go down in this ridiculously-specific way". Rather, the point is to highlight ways in which the development process of misaligned superintelligent AI is very unlike the typical process by which biological organisms evolve.

Some relatively important differences between intelligences built by evolution-ish processes and ones built by stochastic-gradient-descent-ish processes:

- Evolved aliens are more likely to have a genome/connectome split, and a bottleneck on the genome.

- Aliens are more likely to have gone through societal bottlenecks.

- Aliens are much more likely the result of optimizing directly for intergenerational prevalence. The shatterings of a target like “intergenerational prevalence” are more likely to contain overlap with the good stuff, compared to the shatterings of training for whatever-training-makes-the-AGI-smart-ASAP. (Which is the sort of developer goal that’s likely to win the AGI development race and kill humanity first.)

Evolution tends to build patterns that hang around and proliferate, whereas AGIs are likely to come from an optimization target that's more directly like "be good at these games that we chose with the hope that being good at them requires intelligence", and the shatterings of the latter are less likely to overlap with our values.[14]

To be clear, “I trained my AGI in a big pen of other AGIs and rewarded it for proliferating” still results in AGIs that kill you. Most ways of trying won't replicate the relevant properties of evolution. And many aliens would murder Earth in its cradle if they could too. And even if your goal were just “get killed by an AGI that produces a future as good as the average alien’s CEV”, I would expect the "reward AGI for proliferating" approach to result in almost-zero progress toward that goal, because there’s a huge architectural gap between AI and biology, and (in expectation) another huge gap in the various ways that you built the pen wrong.[15]

You've really got to have a lot of things line up favorably in order to get niceness into your AGI system, and evolution is much more likely to spit that out than AGI training is. So some aliens are nice (even though we didn’t build them), and at a far higher rate than AGIs are nice (if we don’t figure out alignment).

I would also predict that aliens have a much higher rate of somebody-is-home (sentience, consciousness, etc.), because of the contingencies of evolutionary history that I think resulted in human consciousness. I have wide error bars on how common these contingencies are across evolved species, but a much lower probability that the contingencies also arise when you’re trying to make the thing smart rather than good-at-proliferating.

The mechanisms behind qualia seem to me to involve at least one epistemically-derpy shortcut — the sort of thing that’s plausibly rare among aliens, and very likely rare among misaligned AI systems.

If we get lucky on consciousness being a super common hiccup, I could see more worlds where misaligned AI produces good outcomes. My current probability is something like 90% that if you produced hundreds of random uncorrelated superintelligent AI systems, <1% of them would be conscious.[16]

The most important takeaway from this post, I’d claim, is: If humanity creates superintelligences without understanding much about how our creations reason, then our creations will kill literally everyone and do something boring with the universe instead.

I'm not saying "it will take joy in things that I don't recognize; but I want the future to have my values rather than the values of my child, like many a jealous parent before me." I'm saying that, by default, you get a wasteland of molecular squiggles.

We basically have to go for superintelligence at some point, given the overwhelming amount of value that we can expect to lose if we rely on crappy human brains to optimize the future. But we also have to achieve this transition to AGI in the right way, on pain of wiping out ~everything.

Right now it looks to me like the world is rushing headlong down the "wipe out ~everything" branch, for lack of having even put a nontrivial amount of serious thought into the question of how to shape good outcomes via highly capable AI.

And so I try to redirect that path, or protest against the most misdirected attempts to address the problem.

I note that we have no plan, we have no science of differentially selecting AGI systems that produce good outcomes, and a reasonable planet would not race off a cliff before thinking about the implications.

And when I do that, I worry that it's easy to misread me as being anti-superintelligence, and anti-singularity. So I’ve written this post in part for the benefit of the rare reader who doesn’t already know this: I'm pro-singularity.

I consider myself a transhumanist. I think the highest calling of humanity today is to bring about a glorious future for a wondrously strange universe of posthuman minds.

And I'd really appreciate it if we didn't kill literally everyone and turn the universe into an empty wasteland before then.

[1]

And my concept of “what makes life worth living” is very likely an impoverished one today, and a friendly superintelligence could guide us to discovering even cooler versions of things like “art” and “adventure”, transcending the visions of fun that humanity has considered to date. The limit of how good the universe could become, once humanity has matured and grown into its full potential, likely far surpasses what any human today can concretely imagine.

[2]

I’ll flag that I do think that some people overestimate how “unimaginable” the future is likely to be, out of some sense of humility/modesty.

I think there's a decent chance that if you showed me the future I'd be like “ah, so that's what computronium looks like” or “so reversible computers wrapped around black holes did turn out to be best”, and that when you show me the experiences running on those computers, I'm like "neato, yeah, lots of minds having fun, I'm sure some of that stuff would look pretty fun to me if you decoded it". I wouldn’t expect to immediately understand everything going on, but I wouldn’t be surprised if I can piece together the broad strokes.

In that sense, I find it plausible that ~optimal futures will turn out to be familiar/recognizable/imaginable to a digital-era transhumanist in a way they wouldn't be to an ancient Roman. We really are better able to see the whole universe and its trajectory than they were.

To be clear, it's very plausible to me that it'll somehow be unrecognizable or shocking to me, as it would have been to an ancient Roman, at least on some axes. But it's not guaranteed, and we don't have to pretend that it's guaranteed in order to avoid insinuating that we're in a better epistemic position than people were in the past. We are in a better epistemic position than people were in the past!

There's a separate point about how much translation work you need to do before I recognize a particular arc of fun unfolding before me as something actually fun. On that point I’m like, "Yeah, I'm not going to recognize/understand my niece's generation's memes, never mind a posthuman’s varieties of happiness, without a lot more context (and plausibly a much bigger and deeply-changed mind)".

Separately, I don't want to make any claims about how hard and fast humanity becomes "strongly transhuman" / changes to using minds that would be unrecognizable (as humans) to the present. I'd be surprised if it were super-fast for everyone, and I'd be surprised if some humans' minds weren’t very different a thousand sidereal years post-singularity. But I have wide error bars.

- ^

Provided that this turns out to be a good use of stellar resources. (I'm not confident one way or the other. E.g., I'm not confident that human-originated minds get relevantly more interesting/fun at Matrioshka-brain scales. Maybe we’ll learn that slapping on more matter at that scale lets you prove some more theorems or whatever, but isn’t the best way to convert negentropy into fun, compared to e.g. spending that compute on whole civilizations full of interacting and flourishing people who don't have star-sized brains.)

- ^

A separate reason it’s a terrible idea to destroy ourselves is that, e.g., if the nearest aliens are 500 million years away, then our death means that a sphere of stellar fuel ~500 million light-years in radius is going to be entirely wasted, instead of spent on rad stuff.

- ^

As I’ll note later, this odds ratio is a result of giving 0.2x weight to “humans control the universe-shard”, 0.5x to “aliens control it”, and 0.3x to “unfriendly AI built by aliens controls it”. Rob rounded the resulting odds ratio in this table to 1 : 5 : 7 : 5 : 14 : 1 : ~0.

Also, as a general reminder: I’m giving my relatively off-the-cuff thoughts in this post, and I’ll probably notice that some of my numbers are inconsistent (or otherwise mistaken) if I reflect more. But absent more reflection, I don’t know which way the inconsistencies would shake out.

- ^

I’d be inclined to go lower, were it not for the fact that the one evolved species we've seen seems dead-set on destroying itself.

- ^

Though another input to the value of the future, in this scenario, is “What happens to the places that the pilgrims had to leave behind until some pilgrim group hit upon a non-terrible organizational system?” Hopefully it’s not too terrible, but it’s hard to say with humans!

One note of optimism is that there’s likely to be a strong negative correlation (in this ~impossible hypothetical) between “how terrible is the civilization?” and “how interested is it in spreading to the stars, or spreading far?” Many ways of shutting down moral progress, robust civic debate, open exploration of ideas, etc. also cripple scientific and technological progress in various ways, or involve commitment to a backwards-looking ideology. It’s possible for the universe-shard to be colonized by Space Amish, but it’s a weirder hypothetical.

- ^

Note that I’ll use phrasings like “there’s something it’s like to be them”, “they’re sentient”, and “they’re conscious” interchangeably in this post. (This is not intended to be a bold philosophical stance, but rather a flailing attempt to wave at properties of personhood that seem plausibly morally relevant.)

- ^

Eliezer uses the term “outcome pump” to introduce a similar idea:

The Outcome Pump is not sentient. It contains a tiny time machine, which resets time unless a specified outcome occurs. For example, if you hooked up the Outcome Pump's sensors to a coin, and specified that the time machine should keep resetting until it sees the coin come up heads, and then you actually flipped the coin, you would see the coin come up heads. (The physicists say that any future in which a "reset" occurs is inconsistent, and therefore never happens in the first place - so you aren't actually killing any versions of yourself.)

Whatever proposition you can manage to input into the Outcome Pump, somehow happens, though not in a way that violates the laws of physics. If you try to input a proposition that's too unlikely, the time machine will suffer a spontaneous mechanical failure before that outcome ever occurs.

I think his example is underspecified, though. Suppose that you ask the outcome pump for paperclips, and physics says “sorry, this outcome is too improbable” and exhibits a mechanical failure. This would then mean that it’s true that the outcome pump outputting paperclips is “improbable”, which makes the hypothetical consistent. We need some way to resolve which internally-consistent set of physical laws compatible with this description (“make paperclips” or “don’t make paperclips”) actually occurs; the so-called "outcome pump" is not necessarily pumping the desired outcome.

Giving the time machine the ability to output a random sequence of actions addresses this problem: we can say that the machine only undergoes a mechanical failure if some large number (e.g., Graham’s number) of random action sequences all fail to produce the target outcome. We can then be confident that the outcome pump will eventually brute-force a solution, provided that one is physically possible.
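The brute-force version of the pump can be sketched as a toy search loop. This is a minimal illustration under made-up assumptions: an action is a digit from 0 to 9, an action sequence has a fixed length, the target outcome is an arbitrary predicate, and the cap on attempts is a small number rather than anything like Graham's number. The names `outcome_pump` and `MechanicalFailure` are hypothetical. Nothing in the loop models the world or reasons about it; it only samples and checks.

```python
import random
from typing import Callable, Sequence

# Toy assumptions (hypothetical): an action is a digit 0-9, and an
# action sequence is a fixed-length list of such digits.
ActionSeq = list[int]


class MechanicalFailure(Exception):
    """Raised when every allotted random attempt misses the target outcome."""


def outcome_pump(
    achieves_target: Callable[[Sequence[int]], bool],
    seq_len: int,
    max_attempts: int,
    rng: random.Random,
) -> ActionSeq:
    """Sample uniformly random action sequences until one satisfies
    achieves_target; fail only if all max_attempts samples miss.

    There is no model of the world here and no reasoning about it:
    just blind sampling plus a check of the outcome.
    """
    for _ in range(max_attempts):
        candidate = [rng.randrange(10) for _ in range(seq_len)]
        if achieves_target(candidate):
            return candidate
    raise MechanicalFailure(f"no success in {max_attempts} random attempts")


if __name__ == "__main__":
    rng = random.Random(0)
    plan = outcome_pump(lambda s: sum(s) == 40, seq_len=5, max_attempts=100_000, rng=rng)
    print(plan, sum(plan))
```

For example, asking for five digits that sum to 40 succeeds quickly (126 of the 100,000 possible sequences qualify), while asking for a sum of 46, which five digits cannot reach, always ends in `MechanicalFailure`.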

Other examples of easily-understood non-conscious optimization processes that can achieve very impressive things include AIXI and natural selection. The AIXI example is made pedagogically complicated for present purposes, however, by the fact that AIXI’s hypothesis space contains many smaller conscious optimizers (that don't much matter to the point, but that might confuse those who can see that some hypotheses contain conscious reasoners and can't see their irrelevance to the point at hand); and the natural selection example is weakened by the fact that selection isn't a very powerful optimizer.

- ^

A possible objection here is “Human emotional responses often cause us to get into violent conflicts in cases where this foreseeably isn’t worth it; why couldn’t aliens be the same?”. But “technology for widening the space of profitable trades” is in the end just another technology, and ambitious spacefaring species are likely to discover such tech for the same reason they’re likely to discover other tech that’s generally useful for getting more of what you want. Humans have certainly gotten better at this over time, and if we continue to advance our scientific understanding, we’re likely to get far better still.

- ^

Like, we've seen that the seeds are there, and it would be pretty weird for us to go around uprooting seeds of value on a whim.

As a side-note: one of my hot takes about how morality shakes out is "we don't sacrifice anything (among the seeds of value)". Like, values like sadism and spite might be tricky to redeem, but if we do our job right I think we should end up finding a way to redeem them.

- ^

Unless we’ve made some bargain across counterfactual worlds that justifies our offering this gift in our world. But there are friction costs to bargains, and my guess is that the way it pans out is that you keep what you can get in your branch and it evens out across branches.

As a side-note, another possible implication of my view on “alien brethren” is: in the much less likely event that we meet weak young non-spacefaring aliens, the future might go drastically better if we help guide their development as a species, teaching them about the Magic of Friendship and all that.

(Or perhaps not. I remain very uncertain about whether it’s positive-human-EV to guide alien development.)

- ^

Though some aliens may shake out to be simple too! Humans are pretty far from "tile the universe with vats of genes", but it's not clear how contingent that fact is.

- ^

Though it should be emphasized that we're totally allowed to find that evolved life tends to go some completely different way than how humans shook out. Generalizing from one example is hard!!

- ^

And even if you succeeded, it’s not clear that you’d get any utility as a result; my guess that evolved aliens tend to be better than paperclippers could easily be wrong.

And even if you got some utility, it’s going to be a paltry amount compared to if you’d built aligned AGI.

- ^

Possibly this is too extreme; I haven’t refined these probabilities much, and am still just giving my off-the-cuff numbers.

In any case, I want to emphasize that my view isn’t “most misaligned AGIs aren’t sentient, but if you randomly spin up a large number of them you’ll occasionally get a sentient one”. Rather, my view is “almost no random misaligned AGIs are sentient” (but with some uncertainty about whether that’s true). I’m much more uncertain about whether this background view is true than I am uncertain about whether, given this background view, a given misaligned AGI will happen to be sentient.

(Like how I think the chance that the lightspeed limit turns out to be violable is greater than 1 in a billion; but that doesn't mean that if you threw a billion baseballs, I would expect one of them to break the lightspeed limit on average.)
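The distinction in the parenthetical can be made concrete by comparing two ways of modeling a billion throws. This is a sketch with made-up numbers chosen only for illustration (they are not estimates from this post), and the function names are hypothetical. In the naive model, each throw independently has a one-in-a-billion chance; in the shared-hypothesis model, every throw hinges on a single uncertain fact about physics.

```python
import math


def p_any_independent(p_per_throw: float, n: int) -> float:
    """P(at least one success) if each of n throws succeeds independently
    with probability p_per_throw. Uses log1p/expm1 to stay accurate for tiny p."""
    if p_per_throw >= 1.0:
        return 1.0 if n > 0 else 0.0
    return -math.expm1(n * math.log1p(-p_per_throw))


def p_any_shared(p_hypothesis: float, p_per_throw_given_h: float, n: int) -> float:
    """P(at least one success) when all n throws share one uncertain hypothesis H
    ("the limit is violable"): nothing can happen unless H is true, and even
    then each throw succeeds only with probability p_per_throw_given_h."""
    return p_hypothesis * p_any_independent(p_per_throw_given_h, n)


# Illustrative numbers only (hypothetical, not estimates from the post).
N_THROWS = 10**9
P_VIOLABLE = 1e-8          # credence that the limit can be broken at all
P_BREAK_IF_VIOLABLE = 0.0  # even then, an ordinary baseball throw wouldn't do it

naive = p_any_independent(1e-9, N_THROWS)                          # about 0.632
shared = p_any_shared(P_VIOLABLE, P_BREAK_IF_VIOLABLE, N_THROWS)  # exactly 0.0
```

Under the shared model, the chance that any throw succeeds can never exceed `P_VIOLABLE`, no matter how many throws you make: the uncertainty lives in the background view, not in each individual trial.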

RobBensinger @ 2022-11-01T00:55 (+17)

An interface for registering your probabilities (or you can just say stuff in comments):

Charlie_Guthmann @ 2022-11-01T07:55 (+10)

I started filling this out and then stopped because I'm confused about this CEV and cosmopolitan value stuff, and just generally about what OP means by value. It's possible I'm confused because I missed something (I skimmed the post, though I read most of it). Here are questions whose answers would help me answer the predictions above:

- What definition of value are we supposed to be using (my current intuition is average CEV of humans)?

- Was I meant to just answer the above question with my own values (or my CEV)?

- Do other people feel like the above questions are invariant to the definition of value / the specific values of CEVs?

- What is the definition of cosmopolitan value, and how is it action-relevant in all of this?

The stuff below is a bit rambly so apologies in advance.

I don't really get the purpose of CEV for this stuff, or why it solves any deep problems of defining value. I definitely think we should reflect on our moral values and update on new information as it feels right to us. This doesn't mean we've solved ethics. It also raises the question of whose CEV we are using. CEV is agent-dependent, so we need to specify how we weight the CEVs of all the agents we are taking into consideration. In any case, my main complaint is that if the answers to the above questions are at least in part a function of what our CEV is (or what definition of value we use), then I feel like we are stacking two questions on top of each other and not necessarily leaving room to talk through the cruxes of either.

Let's assume we are just taking the average CEV of humans alive today as our definition of value. Some values might be more difficult to pull off than others, as they may trend further from what aliens want, or just be harder to pull off given the number of shards we have. Plus, I just assumed we are taking the average of humans' CEVs, but we don't know what political system we will have. Who's to say that, just because we have the ASI and an average CEV, the humans will agree to push towards that average CEV? In short, I feel like I'm guessing both the CEV and how achievable that CEV is.

I also don't really follow the cosmopolitan stuff. I have cosmopolitan intuitions but I'm unclear what the author is getting at with it. I have some vague sense that this is trying to address the fact that CEV gives special weight to agents that are alive now. Not really sure how to even express my confusion if I'm being honest.

That being said, I loved this post. Lots of information from disparate places put together. A summary could be nice; maybe I'll try to write one if no one else does.

RobBensinger @ 2022-11-01T20:11 (+3)

- What definition of value are we supposed to be using (my current intuition is average CEV of humans)?

- Was I meant to just answer the above question with my own values (or my CEV)?

The OP defines Strong Utopia as "At least 95% of the future’s potential value is realized.", and then defines the other scenarios via various concrete scenarios that serve as benchmarks for how "good" the universe is.

CEV isn't mentioned at that part of the article, nor is any other account of what "good" and "value" mean, so IMO you should use your own conception of what it means for things to be good, valuable, etc. Which outcomes would actually be better or worse, by your own lights?

My own personal view is that CEV is a good way of hand-waving at "good" and "valuable", and I can say more about that if helpful. The main resource I'd recommend reading is https://arbital.com/p/cev/.

I don't know what you mean by the "average" CEV of humans. Eliezer's proposal on https://arbital.com/p/cev/ is to use all humans as the extrapolation base for CEV.

I predict that if you ran a CEV-ish process extrapolating from my brain, it would give the same ultimate answers as a CEV-ish process extrapolating from all humans' brains. (Among other things, because my brain would probably prefer to run a CEV that takes into account everyone else's brain-state too, and it can just go do that; and because the universe is way too abundant in resources and my selfish desires get saturated almost immediately, leaving the rest of the cosmic endowment for the welfare of other minds.)