The Offense-Defense Balance Rarely Changes

By Maxwell Tabarrok @ 2023-12-09T15:22 (+82)

This is a linkpost to https://maximumprogress.substack.com/p/the-offense-defense-balance-rarely

You’ve probably seen several conversations on X go something like this:

Michael Doomer ⏸️: Advanced AI can help anyone make bioweapons

If this technology spreads, it will only take one crazy person to destroy the world!

Edward Acc ⏩: I can just ask my AI to make a vaccine

Yann LeCun: My good AI will take down your rogue AI

The disagreement here hinges on whether a technology will enable offense (bioweapons) more than defense (vaccines). Predictions of the “offense-defense balance” of future technologies, especially AI, are central in debates about techno-optimism and existential risk.

Most of these predictions rely on intuitions about how technologies like cheap biotech, drones, and digital agents would affect the ease of attacking or protecting resources. It is hard to imagine a world with AI agents searching for software vulnerabilities and autonomous drones attacking military targets without imagining a massive shift in the offense-defense balance.

But there is little historical evidence for large changes in the offense-defense balance, even in response to technological revolutions.

Consider cybersecurity. Moore’s law has taken us through a seven-order-of-magnitude reduction in the cost of compute since the 1970s. Along with the increase in raw compute power came massive changes in the form and economic uses of computer technology: encryption, the internet, e-commerce, social media, and smartphones.
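To get a feel for what "seven orders of magnitude" means per year, here is a small Python sketch. It assumes the period is about 50 years (roughly 1970 to 2020), which is an assumption rather than a figure from the post, and that the decline was a constant exponential rate.

```python
import math


def annual_cost_factor(orders_of_magnitude: float, years: float) -> float:
    """Fraction of last year's cost remaining each year, assuming a constant
    exponential decline of `orders_of_magnitude` spread over `years`."""
    return 10 ** (-orders_of_magnitude / years)


def halving_time(orders_of_magnitude: float, years: float) -> float:
    """Years for the cost to halve under the same constant-rate assumption."""
    return years * math.log10(2) / orders_of_magnitude


if __name__ == "__main__":
    # Assumed window: ~50 years for the 7 orders of magnitude cited above.
    factor = annual_cost_factor(7, 50)
    print(f"cost falls ~{1 - factor:.0%} per year")
    print(f"halving time ~{halving_time(7, 50):.2f} years")
```

Under these assumptions compute gets roughly 28% cheaper every year and halves in cost about every two years, in the same ballpark as the usual statement of Moore's law.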

The usual offense-defense balance story predicts that big changes to technologies like this should have big effects on the offense-defense balance. If you had told people in the 1970s that in 2020 terrorist groups and lone psychopaths could access, from their pockets, more computing power than IBM had ever produced up to that point, what would they have predicted about the offense-defense balance of cybersecurity?

Contrary to their likely prediction, the offense-defense balance in cybersecurity seems stable. Cyberattacks have not been snuffed out, but neither have they taken over the world. All major nations have defensive and offensive cybersecurity teams, but no one has gained a decisive advantage. Computers still sometimes get viruses or ransomware, but these haven’t grown to endanger a large share of the internet's GDP. The US military budget for cybersecurity has increased by about 4% a year from 1980 to 2020, which is faster than GDP growth but in line with GDP growth plus the growing fraction of GDP that’s on the internet.
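As a quick check on what "about 4% a year" compounds to, here is a minimal sketch assuming a constant 4% rate over the 40 years from 1980 to 2020:

```python
import math


def compound_growth(annual_rate: float, years: int) -> float:
    """Total growth multiple after `years` of constant `annual_rate` growth."""
    return (1 + annual_rate) ** years


def doubling_time(annual_rate: float) -> float:
    """Years needed to double at a constant `annual_rate`."""
    return math.log(2) / math.log(1 + annual_rate)


if __name__ == "__main__":
    print(f"growth multiple 1980-2020: {compound_growth(0.04, 40):.1f}x")
    print(f"doubling time: {doubling_time(0.04):.1f} years")
```

A steady 4% rate implies the budget grew nearly fivefold over the period, doubling roughly every 18 years.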

This stability through several previous technological revolutions raises the burden of proof for why the offense-defense balance of cybersecurity should be expected to change radically after the next one.

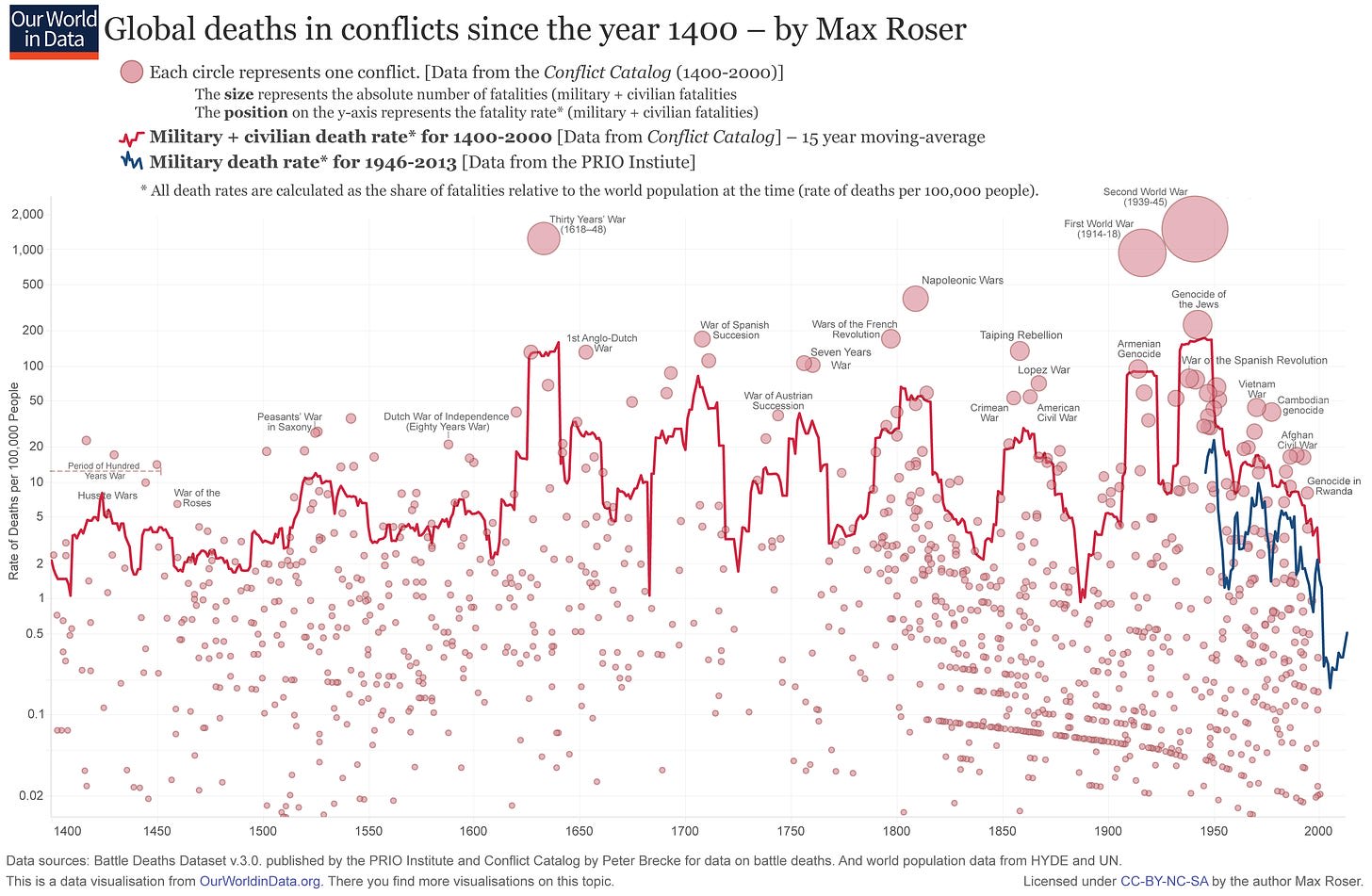

The stability of the offense-defense balance isn’t specific to cybersecurity. The graph below shows the per capita rate of death in war from 1400 to 2013. This graph contains all of humanity’s major technological revolutions. There is lots of variance from year to year but almost zero long run trend.

Does anyone have a theory of the offense-defense balance which can explain why the per-capita deaths from war should be about the same in 1640, when people were fighting with swords and horses, as in 1940, when they were fighting with airstrikes and tanks?

It is very difficult to explain the variation in this graph with variation in technology. Per-capita deaths in conflict are noisy and cyclic, while technological progress is relatively smooth and monotonic.

No previous technology has changed the frequency or cost of conflict enough to move this metric far beyond the maximum and minimum already set during 1400-1650. Again, the burden of proof is raised for why we should expect AI to be different.

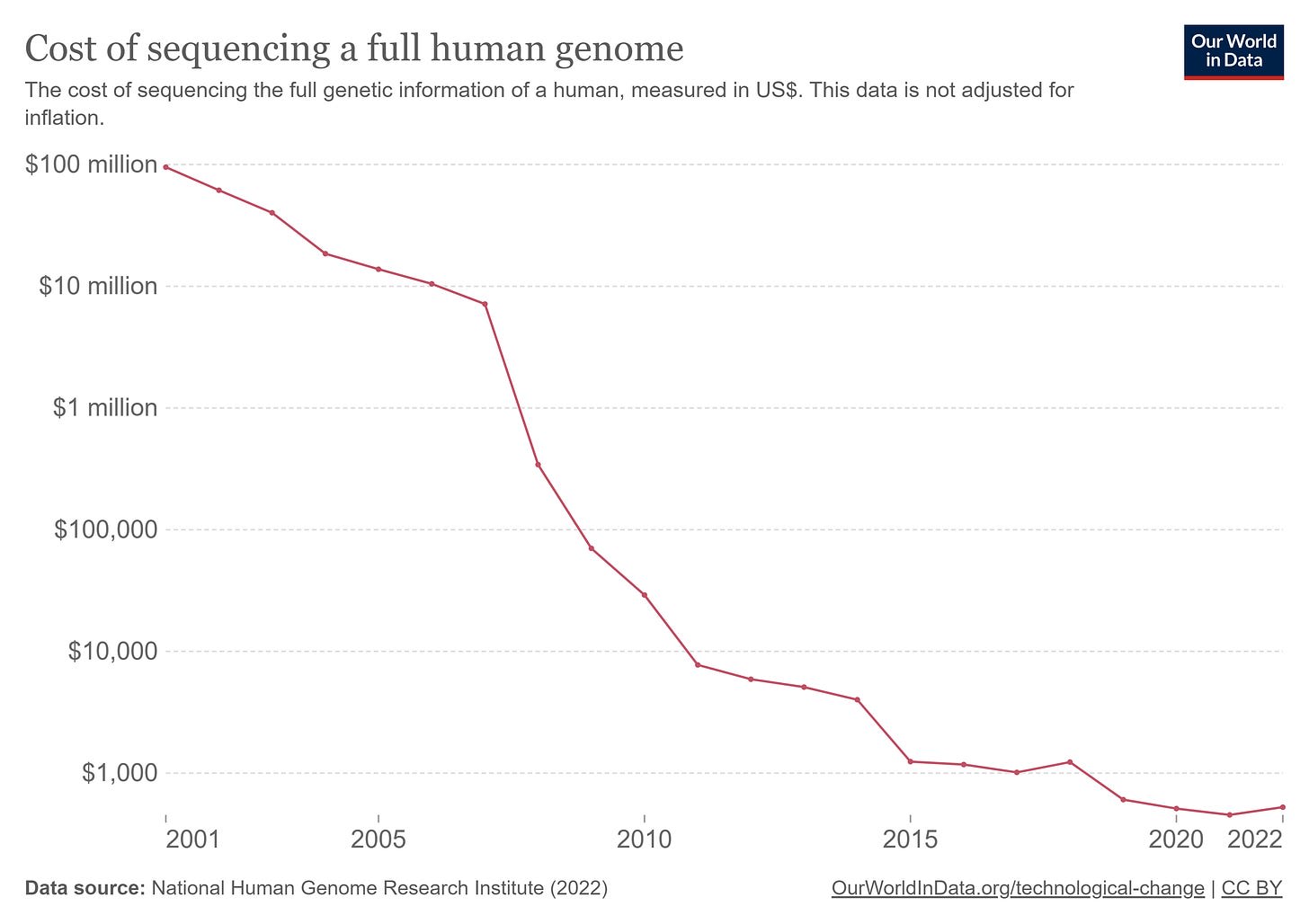

The cost to sequence a human genome has also fallen by six orders of magnitude, and dozens of big technological changes in biology have happened along with it. Yet there has been no noticeable change in the frequency or damage of biological attacks.

Possible Reasons For Stability

Why is the offense-defense balance so stable even when the technologies behind it are rapidly and radically changing? The main contribution of this post is just to support the importance of this question with empirical evidence, but here is an underdeveloped theory.

The main thing is that the clean distinction between attackers and defenders in the theory of the offense-defense balance does not exist in practice. All attackers are also defenders and vice-versa. Invader countries have to defend their conquests and hackers need to have strong information security.

So if there is some technology which makes invading easier than defending or info-sec easier than hacking, it might not change the balance of power much because each actor needs to do both. If offense and defense are complements instead of substitutes then the balance between them isn’t as important.
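One way to make the complements-versus-substitutes point concrete is a toy model. It is an illustration under my own assumptions, not the author's model: a technology multiplies offense productivity by `p`. In the "substitutes" case a pure attacker faces a pure defender, so the balance scales directly with `p`. In the "complements" case each actor's strength is the minimum of its offense and defense capability (a Leontief assumption), so every actor splits its budget between the two.

```python
def substitutes_balance(attacker_budget: float, defender_budget: float, p: float) -> float:
    """Attacker-to-defender capability ratio when one side only attacks and
    the other only defends; offense productivity p scales the ratio directly."""
    return p * attacker_budget / defender_budget


def leontief_strength(budget: float, p: float) -> float:
    """Strength of an actor that needs both offense and defense,
    assuming strength = min(offense, defense).

    Spending x on offense yields p * x offense and budget - x defense;
    the min is maximized where they are equal, i.e. x = budget / (1 + p).
    """
    x = budget / (1 + p)
    return p * x  # equals budget - x at the optimum


def complements_balance(budget_a: float, budget_b: float, p: float) -> float:
    """Relative strength of two actors who each need offense and defense."""
    return leontief_strength(budget_a, p) / leontief_strength(budget_b, p)


if __name__ == "__main__":
    for p in (1, 10, 100):
        print(
            f"p={p:>3}: substitutes={substitutes_balance(2, 1, p):6.1f}  "
            f"complements={complements_balance(2, 1, p):.2f}  "
            f"absolute strength={leontief_strength(1, p):.2f}"
        )
```

In this sketch the complements ratio stays at 2 for every `p`, while the substitutes ratio grows with `p`. Each actor's absolute strength still rises with `p`, which fits the post's claim that technology can change a lot without moving the balance much.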

What does this argument predict for the future of AI? It does not predict that the future will be very similar to today. Even though the offense-defense balance in cybersecurity is pretty similar today to what it was in the 1970s, there have been massive changes in technology and society since then. AI is clearly the defining technology of this century.

But it does predict that the big changes from AI won’t come from huge upsets to the offense-defense balance. The changes will look more like the industrial revolution and less like small terrorist groups being empowered to take down the internet or destroy entire countries.

Maybe in all of these cases there are threshold effects waiting around the corner, or AI is just completely different from all of our past technological revolutions, but that’s a claim that needs a lot of evidence to be proven. So far, the offense-defense balance seems to be very stable through large technological change, and we should expect that to continue.

Ben Stewart @ 2023-12-09T20:45 (+47)

I weakly agree with the claim that the offense/defense balance is not a useful way to project the implications of AI. However, I disagree strongly with how the post got there. Considering only cyber-security and per-capita death rate is not a sufficient basis for the claim that there is "little historical evidence for large changes in the O/D balance, even in response to technological revolutions."

There are good examples where technology greatly shifts the nature of war: castles favouring defense, before becoming negated by cannons. The machine gun and barbed wire are typically held as technologies that gave a significant defensive advantage in WWI, and were crucial in the development of trench warfare. Tanks similarly for offense. And so on. One would need to consider and reject these examples (which is certainly plausible, but needs to be actually engaged with).

I don't think the per capita mortality from war is a very useful measure of the O/D balance - it will be driven by many other confounding factors, and as you point out technologies alter the decision calculus of both sides of a conflict. Part of the problem with the O/D theory is a lack of well-defined measures (e.g.). From my impression of the literature, it also appears to fail in empirical predictions (e.g.). Cyber-security is a good example of an arena where O/D theory applies poorly (e.g.). I think this section does well to support the claim that "shifting O/D balance doesn't dominantly affect the frequency and intensity of war", but it doesn't show that "technologies don't shift the O/D balance".

So I disagree with the post in that I think technologies can systematically favour aggressive vs. defensive actions and actors, but I agree that this pattern doesn't necessarily have strong implications for the rate or intensity of large-scale conflict. However, I think there can still be implications for non-state violence, especially when the technology enables an 'asymmetric weapon': a cheap way to inflict very costly damage or demand costly defence. The prototypical example here is terrorist use of improvised explosives. This is an important channel for worries about future bioweapons.

Marcel D @ 2023-12-16T16:14 (+3)

Thank you so much for articulating a bunch of the points I was going to make!

I would probably just further drive home the last paragraph: it’s really obvious that the “number of people a lone maniac can kill in given time” (in America) has skyrocketed with the development of high fire-rate weapons (let alone knowledge of explosives). It could be true that the O/D balance for states doesn’t change (I disagree) while the O/D balance for individuals skyrockets.

Wei Dai @ 2023-12-09T19:28 (+29)

> The main thing is that the clean distinction between attackers and defenders in the theory of the offense-defense balance does not exist in practice. All attackers are also defenders and vice-versa.

I notice that this doesn't seem to apply to the scenario/conversation you started this post with. If a crazy person wants to destroy the world with an AI-created bioweapon, he's not also a defender.

Another scenario I worry about is AIs enabling value lock-in. AIs, humans, or groups with locked-in values would then have an offensive advantage in manipulating other people's values (i.e., the values of those not yet willing to lock theirs in) while not having to be defenders.

gwern @ 2023-12-11T23:47 (+16)

> If a crazy person wants to destroy the world with an AI-created bioweapon

Or, more concretely, nuclear weapons. Leaving aside regular full-scale nuclear war (which is censored from the graph for obvious reasons), this sort of graph will never show you something like Edward Teller's "backyard bomb", or a salted bomb. (Or any of the many other nuclear weapon concepts which never got developed, or were curtailed very early in deployment like neutron bombs, for historically-contingent reasons.)

There is, as far as I am aware, no serious scientific doubt that they are technically feasible: that multi-gigaton bombs could be built, or that salted bombs in relatively small quantities would render the earth uninhabitable to a substantial degree, for what are also modest expenditures as a percentage of GDP, etc. It is just that there is no practical use of these weapons by normal, non-insane people. There is no use in setting an entire continent on fire, or in long-term radioactive poisoning of the same earth on which you presumably intend to live afterwards.

But you would be greatly mistaken if you concluded from historical data that these were impossible because there is nothing in the observed distribution anywhere close to those fatality rates.

(You can't even make an argument from an Outside View of the sort that 'there have been billions of humans and none have done this yet', because nuclear bombs are still so historically new, and only a few nuclear powers were even in a position to consider whether to pursue these weapons or not - you don't have k = billions, you have k < 10, maybe. And the fact that several of those pursued weapons like neutron bombs as far as they did, and that we know about so many concepts, is not encouraging.)
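The sample-size point can be illustrated with Laplace's rule of succession, a standard estimator chosen here purely for illustration (the comment itself does not use it). After k trials with zero occurrences it estimates the chance of an occurrence on the next trial as 1/(k + 2), so the estimate is only reassuringly small when k is large. Treating each human or each nuclear power as one independent "trial" is a crude, hypothetical simplification.

```python
from fractions import Fraction


def rule_of_succession(successes: int, trials: int) -> Fraction:
    """Laplace's rule of succession: (successes + 1) / (trials + 2)."""
    return Fraction(successes + 1, trials + 2)


if __name__ == "__main__":
    # Hypothetical trial counts: "billions of humans" vs "fewer than 10 nuclear powers".
    for trials in (10**9, 9):
        estimate = rule_of_succession(0, trials)
        print(f"k={trials:>10}: estimated chance on next trial = {float(estimate):.2e}")
```

With a billion trials the estimate is around one in a billion; with nine it is about one in eleven, which is why a small k leaves the outside view uninformative.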

finm @ 2023-12-10T12:52 (+26)

Copying a comment from Substack:

If offence and defence both get faster, but all the relative speeds stay the same, I don’t see how that in itself favours offence (we get ICBMs, but the same rocketry + guidance etc tech means missile defence gets faster at the same rate). But ideas like this make sense, e.g. if there are any fixed lags in defence (like humans don’t get much faster at responding but need to be involved in defensive moves) then speed favours offence in that respect.

That is to say there could be a 'faster is different' effect, where in the AI case things might move too chaotically fast — faster than the human-friendly timescales of previous tech — to effectively defend. For instance, your model of cybersecurity might be a kind of cat-and-mouse game, where defenders are always on the back foot looking for exploits, but they patch them with a small (fixed) time lag. The lag might be insignificant historically, until the absolute lag begins to matter. Not sure I buy this though.
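The fixed-lag idea can be written as a one-line model. This is a sketch under assumptions of my own: damage accrues at a rate proportional to a shared technology speed until the defender responds, and the defender's response time is a fixed human lag plus a component that shrinks as technology speeds up. The parameter names are hypothetical.

```python
def damage_per_exploit(speed: float, fixed_lag: float, scalable_response: float) -> float:
    """Damage from one exploit before it is patched (arbitrary units).

    speed: shared technology speed-up applied to attacker and defender alike
    fixed_lag: part of the defender's response that does not speed up (e.g. human sign-off)
    scalable_response: part of the response time that shrinks as 1 / speed
    """
    response_time = fixed_lag + scalable_response / speed
    damage_rate = speed  # attacker's damage per unit time scales with speed
    return damage_rate * response_time


if __name__ == "__main__":
    for speed in (1, 10, 100):
        no_lag = damage_per_exploit(speed, fixed_lag=0.0, scalable_response=5.0)
        with_lag = damage_per_exploit(speed, fixed_lag=0.1, scalable_response=5.0)
        print(f"speed={speed:>3}: no fixed lag={no_lag:.1f}  fixed lag 0.1={with_lag:.1f}")
```

With no fixed lag, speeding everything up leaves damage per exploit unchanged; with even a small fixed lag, damage grows roughly in proportion to speed once the scalable part becomes negligible.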

A related vague theme is that more powerful tech in some sense ‘turns up the volatility/variance’. And then maybe there’s some ‘risk of ruin’ asymmetry if you could dip below a point that’s irrecoverable, but can’t rise irrecoverably above a point. Going all in on such risky bets can still be good on expected value grounds, while also making it much more likely that you get wiped out, which is the thing at stake.
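The 'risk of ruin' asymmetry can be illustrated with a small exact calculation. The bet sizes, probabilities, and ruin threshold below are hypothetical. Wealth starts at 1 and is multiplied by (1 + up) or (1 - down) each round, and any path that dips below `floor` is treated as irrecoverable.

```python
def expected_multiplier(up: float, down: float, p_up: float) -> float:
    """Expected one-round growth multiple of wealth."""
    return p_up * (1 + up) + (1 - p_up) * (1 - down)


def ruin_probability(up: float, down: float, p_up: float, rounds: int, floor: float) -> float:
    """Exact probability that wealth (starting at 1.0) ever falls below `floor`.

    Wealth after t rounds with k up-moves is (1 + up)**k * (1 - down)**(t - k),
    so survivors can be tracked by their count k of up-moves.
    """
    alive = {0: 1.0}  # k up-moves so far -> probability of surviving with that k
    ruined = 0.0
    for t in range(1, rounds + 1):
        next_alive: dict[int, float] = {}
        for k, prob in alive.items():
            for k_next, step_prob in ((k + 1, p_up), (k, 1 - p_up)):
                wealth = (1 + up) ** k_next * (1 - down) ** (t - k_next)
                if wealth < floor:
                    ruined += prob * step_prob  # absorbed: cannot recover
                else:
                    next_alive[k_next] = next_alive.get(k_next, 0.0) + prob * step_prob
        alive = next_alive
    return ruined


if __name__ == "__main__":
    bets = {"low variance": (0.10, 0.05), "high variance": (0.60, 0.50)}
    for name, (up, down) in bets.items():
        ev = expected_multiplier(up, down, 0.5)
        ruin = ruin_probability(up, down, 0.5, rounds=20, floor=0.2)
        print(f"{name}: expected multiple/round={ev:.3f}  P(ruin in 20 rounds)={ruin:.3f}")
```

In this setup the high-variance bet has the higher expected return per round, yet it carries a substantial chance of ruin, while the low-variance bet can never fall below the floor within 20 rounds.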

Also, embarrassingly, I realise I don't have a very good sense of how exactly people operationalise the 'offence-defence balance'. One way could be something like 'cost to attacker of doing $1M of damage in equilibrium', or in terms of relative spending like Garfinkel and Dafoe do ("if investments into cybersecurity and into cyberattacks both double, should we expect successful attacks to become more or less feasible"). Or maybe something about the defender spending needed, per dollar of attacker spending, to hold on to some resource (or the attacker spending needed, per dollar of defender spending, to seize it).
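The Garfinkel and Dafoe question can be phrased as a test on any attack-success function. The two success functions below are hypothetical examples chosen for illustration, not models from their work: a ratio-form (Tullock-style) contest function, which is scale-neutral, and one with a fixed defensive term `k` that does not grow with investment.

```python
from typing import Callable


def tullock_success(attack: float, defense: float, r: float = 1.0) -> float:
    """Ratio-form contest success function: attack**r / (attack**r + defense**r)."""
    return attack**r / (attack**r + defense**r)


def fixed_term_success(attack: float, defense: float, k: float = 10.0) -> float:
    """Hypothetical success function with a fixed defensive term k that does
    not grow with investment (k is an illustrative assumption)."""
    return attack / (attack + defense + k)


def doubling_effect(success_fn: Callable[[float, float], float], attack: float, defense: float) -> float:
    """Change in attack success when both investments double.
    Positive means scaling favours offence; negative favours defence."""
    return success_fn(2 * attack, 2 * defense) - success_fn(attack, defense)


if __name__ == "__main__":
    for fn in (tullock_success, fixed_term_success):
        print(f"{fn.__name__}: change after doubling = {doubling_effect(fn, 10, 10):+.3f}")
```

Under the ratio form, doubling both budgets leaves success unchanged; with a non-scaling defensive term, the same doubling makes attacks more feasible. Which shape fits a real domain is an empirical question the sketch does not answer.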

This is important because I don't currently know how to say that some technology is more or less defence-dominant than another, other than in a hand-wavy intuitive way. But in hand-wavy terms it sure seems like bioweapons are more offence-dominant than, say, fighter planes, because it's already the case that you need to spend a lot of money to prevent most of the damage someone could cause with not much money at all.

I see the AI stories — at least the ones I find most compelling — as being kinda openly idiosyncratic and unprecedented. The prior from previous new tech very much points against them, as you show. But the claim is just: yes, but we have stories about why things are different this time ¯\_(ツ)_/¯

Great post.

Marcel D @ 2023-12-16T16:37 (+5)

> If offence and defence both get faster, but all the relative speeds stay the same, I don’t see how that in itself favours offence

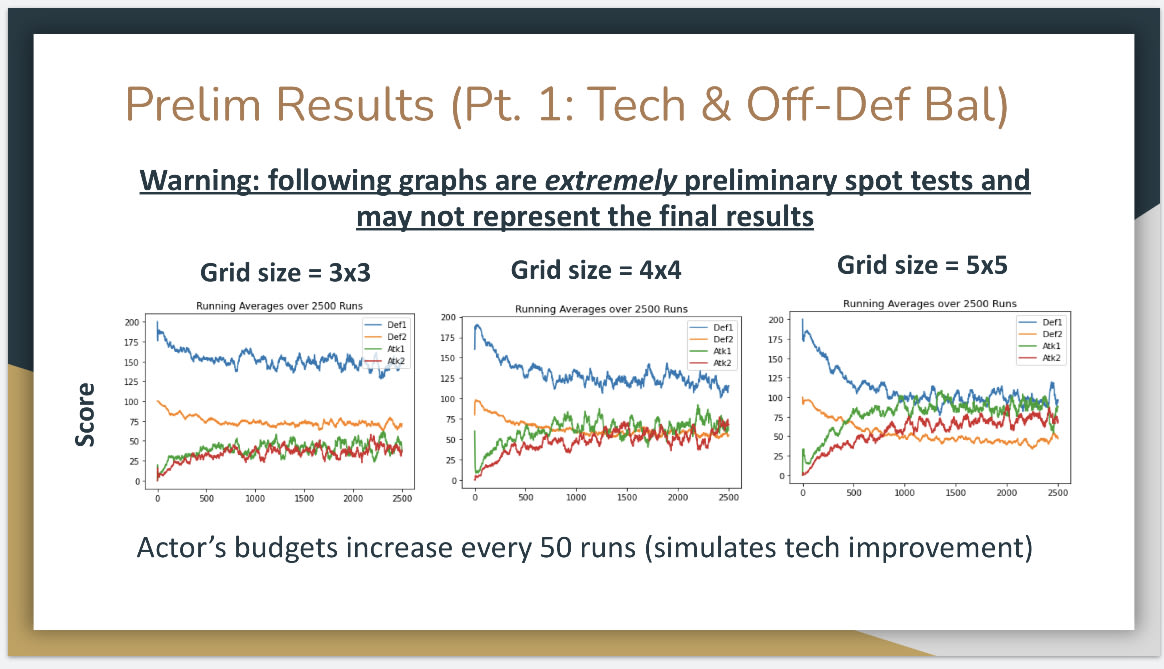

Funny you should say this: it so happens that I just submitted a final paper last night for an agent-based model which was meant to test exactly this kind of claim about the impacts of improving “technology” (AI) in cybersecurity. Granted, the model was extremely simple and incomplete, but the theoretical results explain how this could be possible.

In short, when assuming a fixed number of vulnerabilities in an attack surface, there may be many more vulnerabilities that go unnoticed while attackers’ and defenders’ budgets are very small. For example, suppose that together they can only explore 10% of the attack surface, but vulnerabilities are only in 1% of the surface. Thus, even if attacker and defender budgets increase by the same factor (e.g., 10x), this increases the likelihood that vulnerabilities are found by either the attacker or the defender.

The following results are admittedly not very reliable (I didn’t do any formal verification/validation beyond spot checks), but the point of showing these graphs is not “here are the definitive numbers” but more an illustrative “here is what the pattern of relationships between attack surface, atk/def budgets, and theft rate could look like”.

Notice how, as the attack surface increases, multiplying the attackers’ and defenders’ budgets causes more convergence. With a hypothetical 1x1 attack surface (grid) for each actor, the budget multiplication should have no effect on loss rates, because all vulnerabilities are found and it’s just a matter of who found them first, which is not affected by budget multiplication. However, with a hypothetical infinite-by-infinite grid, the multiplication of budgets strictly benefits the attacker, because the defenders will ~never check the same squares that the attacker checks.
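The two limiting cases can be reproduced with a closed-form simplification that is my own, not the agent-based model described here: each side searches a random fraction of the surface proportional to its budget, a vulnerability is exploited if only the attacker finds it, and when both find it each side is assumed equally likely to have found it first. The budget of 1.0 "cells searched" is a hypothetical unit.

```python
def loss_rate(attack_frac: float, defend_frac: float) -> float:
    """Probability a given vulnerability is exploited.

    attack_frac / defend_frac: fraction of the surface each side searches
    (capped at 1). Exploited if only the attacker finds it, or if both find
    it and the attacker happens to be first (assumed 50/50).
    """
    a = min(1.0, attack_frac)
    d = min(1.0, defend_frac)
    return a * (1 - d) + 0.5 * a * d


def budget_multiplier_effect(surface_cells: int, budget: float, multiplier: float) -> tuple[float, float]:
    """Loss rate before and after multiplying both (equal) budgets."""
    base = budget / surface_cells
    scaled = multiplier * budget / surface_cells
    return loss_rate(base, base), loss_rate(scaled, scaled)


if __name__ == "__main__":
    for cells in (1, 100, 1_000, 1_000_000):
        before, after = budget_multiplier_effect(cells, budget=1.0, multiplier=10)
        print(f"surface={cells:>9}: loss before={before:.4f}  after 10x budgets={after:.4f}")
```

On a 1-cell surface the loss rate stays at 0.5 regardless of the multiplier, while on large surfaces a 10x increase in both budgets raises the loss rate roughly tenfold, matching the two limits described above.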

(ultimately my model makes many unrealistic assumptions and may have had bugs, but this seemed like a decent intuition seed—not a true “conclusion” which can be carelessly applied elsewhere.)

finm @ 2023-12-16T18:29 (+3)

Very cool! Feel free to share your paper if you're able, I'd be curious to see.

I don't know how to interpret the image, but this makes sense:

> With a [small] attack surface (grid) for each actor, the budget multiplication should have no effect on loss rates, because all vulnerabilities are found and it’s just a matter of who found them first, which is not affected by budget multiplication. However, with a [large attack surface], the multiplication of budgets strictly benefits the attacker, because the defenders will ~never check the same squares that the attacker checks.

Marcel D @ 2023-12-16T19:55 (+3)

I probably should have been more clear, my true "final" paper actually didn't focus on this aspect of the model: the offense-defense balance was the original motivation/purpose of my cyber model, but I eventually became far more interested in using the model to test how large language models could improve agent-based modeling by controlling actors in the simulation. I have a final model writeup which explains some of the modeling choices in more detail and talks about the original offense/defense purpose in more detail.

(I could also provide the model code which is written in Python and, last I checked, runs fine, but I don't expect people would find it to be that valuable unless they really want to dig into this further, especially given that it might have bugs.)

Davidmanheim @ 2023-12-09T19:42 (+12)

> So if there is some technology which makes invading easier than defending or info-sec easier than hacking, it might not change the balance of power much because each actor needs to do both. If offense and defense are complements instead of substitutes then the balance between them isn’t as important.

This seems reasonable to explain many past data points, but it's not at all reassuring for bioweapons, which are a critical reason to be concerned about the offense-defense balance of future technologies, and one where there really is a clear asymmetry. So to the extent that the reasoning for explaining the past is correct, it seems to point to worrying more about AIxBio, rather than being reassured about it.

johnburidan @ 2023-12-12T13:46 (+7)

Forgive me if I'm thick, but isn't this equivalent to saying the elasticities of supply and demand for offense and defense rarely change over time? The price might go up or down, factor markets might change, but ratios remain stable?

This makes sense, but I worry it is not Hayekian enough. People are the ones who respond to price changes; nations are the ones who respond to price changes in the cost of defense, deterrence, and attack. In the long run, there is equilibrium. But in the short run, everyone is making adjustments all the time and the costs fluctuate wildly, as does who has the upper hand.

The Athenians had the upper hand until the Spartans figured out siege warfare; the 11th-century nobles of Aragon and Barcelona were consolidated and made more subservient to the King-count Jaume II by his monopoly on trebuchets; the Mongols had logistics advances that gave them a 60-year moat.

The question isn't whether there is an equilibrium, but how long until we get there, which equilibrium it is, and what mischief is caused in the meantime. Human action and choices determine the answers to these questions.

David Stinson @ 2023-12-18T07:56 (+2)

I was going to say something similar, based on international relations theory (realism). The optimal size of a military power unit changes over time, but equilibria can exist.

In the shorter term, though, threshold effects are possible, particularly when the optimal size grows and the number of powers shrinks. We appear to be in the midst of a consolidation cycle now, as cybersecurity and a variety of internet technologies have strong economies of scale.

Chris Said @ 2023-12-10T20:04 (+4)

Good post, but I’m not sure I agree with the implications around bioengineered viruses. While I agree we might build defenses that keep overall death rate low, biological and psychological constraints make these defenses pretty unappealing.

You mentioned that while biotech makes it easier for people to develop viruses, it also makes it easier to develop vaccines. But if the ability to create viruses becomes easy for bad actors, we might need to create *hundreds* of different vaccines for the hundreds of new viruses. Even if this works, taking hundreds of vaccines doesn’t sound appealing, and there are immunological constraints on how well broad spectrum vaccines will ever work.

In the face of hundreds of new viruses and the inability to vaccinate against all of them, we’ll need to fall back to isolation or some sort of sterilizing tech like ubiquitous UV. I don’t like isolation, and I don’t like how ubiquitous UV might create weird autoimmune issues or make us especially vulnerable to breakthrough transmissions.

christian.r @ 2023-12-12T19:28 (+3)

Thanks for this post! I'm not sure cyber is a strong example here. Given how little is known publicly about the extent and character of offensive cyber operations, I don't feel that I'm able to assess the balance of offense and defense very well.

Matthew Rendall @ 2023-12-13T20:24 (+2)

Stephen Van Evera [1] argues that for purposes of explaining the outbreak of war, what's most important is not what the objective o/d balance is (he thinks it usually favours the defence), but rather what states believe it is. If they believe it favours the offence (as VE and some other scholars argue that they did before World War I), war is more likely.

It seems as if perceptions should matter less in the case of cyberattacks. Whereas a government is unlikely to launch a major war unless it thinks either that it has good prospects of success or that it faces near-certain defeat if it doesn't, the costs of a failed cyberattack are much lower.

[1] https://direct.mit.edu/isec/article-abstract/22/4/5/11594/Offense-Defense-and-the-Causes-of-War

Sopuii @ 2023-12-11T17:34 (+1)

I would guess that deadlier weapons and advances in medicine net out. In medieval times, injuries and disease killed more soldiers than swordfighting itself.

Today, your prognosis is very good if you get an infection while deployed. But advanced weapons kill many more in a single attack.

SummaryBot @ 2023-12-11T13:40 (+1)

Executive summary: Despite intuitive predictions that major technological advances shift the offense-defense balance, historical data shows remarkable stability even across massive technological change.

Key points:

- Debates about future AI often hinge on predictions it will advantage offense or defense, but evidence contradicts this.

- Per-capita deaths from war show no long-term trend from 1400-2013 despite major tech advances.

- Cybersecurity threats have not radically changed despite exponential growth in computing since the 1970s.

- Biological attacks have not increased alongside rapid advances and cost reductions in genomics and biotech.

- Stability may stem from actors needing both offense and defense, making them complements not substitutes.

- AI will still drive massive change, but likely not via offense-defense imbalance upsets.

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.