How are resources in EA allocated across issues?

By Benjamin_Todd @ 2021-08-08T12:52 (+133)

This is a cross-post from 80,000 Hours.

How are the resources in effective altruism allocated across cause areas?

Knowing these figures, for both funding and labour, can help us spot gaps in the current allocation. In particular, I’ll suggest that broad longtermism seems like the most pressing gap right now.

This follows on from my first post, where I estimated the total amount of committed funding and people, and briefly discussed how many resources are being deployed now vs. invested for later.

These estimates are for how the situation stood in 2019. I made them in early 2020, and made a few more adjustments when I wrote this post. As with the previous post, I recommend that readers take these figures as extremely rough estimates, and I haven’t checked them with the people involved. I’d be keen to see additional and more thorough estimates.

Update Oct 2021: I mistakenly said the number of people reporting 5 for engagement was ~2300, but actually this was the figure for people reporting 4 or 5.

Allocation of funding

Here are my estimates:

| Cause Area | $ millions per year in 2019 | % |
|---|---|---|
| Global health | 185 | 44% |
| Farm animal welfare | 55 | 13% |
| Biosecurity | 41 | 10% |
| Potential risks from AI | 40 | 10% |
| Near-term U.S. policy | 32 | 8% |
| Effective altruism/rationality/cause prioritisation | 26 | 6% |
| Scientific research | 22 | 5% |
| Other global catastrophic risk (incl. climate tail risks) | 11 | 3% |
| Other long term | 1.8 | 0% |
| Other near-term work (near-term climate change, mental health) | 2 | 0% |
| Total | 416 | 100% |

What it’s based on:

- Using Open Philanthropy’s grants database, I averaged the allocation to each area over 2017–2019 and made some minor adjustments. (Open Phil often makes grants lasting three years or more, and the grants are lumpy, so it’s important to average.) At a total of ~$260 million, this accounts for the majority of the funding. (Note that I didn’t include the money spent on Open Phil’s own expenses, which might increase the meta line by around $5 million.)

- I added $80 million to global health for GiveWell using the figure in their metrics report for donations to GiveWell-recommended charities excluding Open Philanthropy. (Note that this figure seems like it’ll be significantly higher in 2020, perhaps $120 million, but I’m using the 2019 figure.)

- GiveWell says their best guess is that the figures underestimate the money they influence by around $20 million, so I added $20 million. These figures also ignore what’s spent on GiveWell’s own expenses, which could be another $5 million to meta.

- For longtermist and meta donations that aren’t Open Philanthropy, I guessed $30 million per year. This was based on roughly tallying up the medium-sized donors I know about and rounding up a bit. I then roughly allocated them across cause areas based on my impressions. This figure is especially uncertain, but seems small compared to Open Philanthropy, so I didn’t spend too long on it.

Neartermist donations outside of Open Phil and GiveWell are the most uncertain.

- I decided to exclude donors who don’t explicitly donate under the banner of effective altruism, or else we might have to include billions of dollars spent on cost-effective global health interventions, pandemic prevention, climate change etc. I excluded the Gates Foundation too, though they have said some nice things about EA. This is a very vague boundary.

- For animal welfare, about $9 million has been donated to the EA Animal Welfare Fund, compared to $11.6 million to the Long Term Future Fund and the Meta Fund (now called the Infrastructure Fund). If the total amount to longtermist and meta causes is $30 million per year, and this ratio holds more broadly, it would imply $23 million per year to EA animal welfare (excluding OP) in total. This seems plausible considering that Animal Charity Evaluators says it influenced about $11 million last year, which would be about half the total.

- From looking at GiveWell’s metrics report, I’d guess that most EA-motivated donations to global health are already tracked in GiveWell’s figures. I’d estimate there’s an additional $5 million outside them.

- I guessed $1 million per year is spent on neartermist climate change and mental health (that’s not global health or global catastrophic risks).

- Overall, all these figures could be way off, but I haven’t spent much time on them because they seem small compared to the Open Philanthropy + GiveWell donations.
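The animal welfare estimate in the bullets above is a simple ratio extrapolation. A minimal Python sketch of that arithmetic, using only the figures quoted in the bullet (the constant and function names are illustrative, not from any real tool):

```python
# Figures quoted in the post, in $ millions.
EA_ANIMAL_WELFARE_FUND = 9.0      # donated to the EA Animal Welfare Fund
LTFF_PLUS_META_FUND = 11.6        # Long Term Future Fund + Meta Fund
LONGTERMIST_META_PER_YEAR = 30.0  # guessed non-Open Phil longtermist + meta giving
ACE_INFLUENCED = 11.0             # Animal Charity Evaluators' reported influence


def scale_by_ratio(known_total: float, numerator: float, denominator: float) -> float:
    """Assume numerator:denominator holds more broadly, and scale known_total by it."""
    return known_total * numerator / denominator


animal_non_op = scale_by_ratio(LONGTERMIST_META_PER_YEAR, EA_ANIMAL_WELFARE_FUND, LTFF_PLUS_META_FUND)
ace_share = ACE_INFLUENCED / animal_non_op

print(f"Implied non-Open Phil animal welfare giving: ${animal_non_op:.0f}M per year")
print(f"ACE-influenced share of that total: {ace_share:.0%}")
```

This gives roughly $23 million per year, with ACE's $11 million making up a bit under half of it, which is the plausibility check the bullet describes.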

Some quick things to flag about the allocation:

- Global health is the biggest area for funding, but it’s not a majority.

- AI is only 10%, so it doesn't seem fair to say EA is dominated by AI.

- Likewise, only 6% is broadly ‘meta’. Even if we add in the operating budgets of Open Phil and GiveWell at about $10 million, which would bring it to 8%, we still seem to be a long way from being in a 'meta trap'.

Allocation of people

For the total number of people, see this estimate, which finds that in 2019 there were 2,300 people similar to those who answered ‘5’ (out of 5) for engagement in the EA Survey, and perhaps 6,500 similar to those who answered ‘4’ or ‘5’.

The 2019 EA Survey asked people which problem areas they’re working on. They could give multiple answers, so I normalised to 100%.
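One plausible reading of "normalised to 100%" is: count every cause ticked by every respondent, then divide each cause's count by the total number of ticks. The sketch below assumes that method (the post doesn't say whether, say, each respondent's answers were instead split equally), and the responses are hypothetical:

```python
from collections import Counter


def normalise_multi_select(responses: list[list[str]]) -> dict[str, float]:
    """Share of all selections going to each cause, so the shares sum to 100%."""
    counts = Counter(cause for answer in responses for cause in answer)
    total = sum(counts.values())
    return {cause: 100 * n / total for cause, n in counts.most_common()}


# Hypothetical survey answers: each respondent may tick several causes.
responses = [
    ["AI", "Movement building"],
    ["AI"],
    ["Animal welfare", "Global poverty"],
    ["Cause prioritisation", "AI", "Rationality"],
]

for cause, pct in normalise_multi_select(responses).items():
    print(f"{cause}: {pct:.0f}%")
```

Because each share is rounded separately, the rounded figures needn't add to exactly 100, which is presumably why the table's total reads 101.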

For those who answered ‘5’ for engagement, the breakdown was:

| Cause 5-engaged EAs are currently working in (normalised) | % |
|---|---|
| AI | 18 |
| Movement building | 15 |
| Rationality | 12 |
| Other near term (near-term climate change, mental health) | 12 |
| Cause prioritisation | 10 |
| Other global catastrophic risks | 10 |
| Animal welfare | 10 |
| Global poverty | 6 |
| Biosecurity | 4 |
| Other | 4 |
| Total | 101 |

This question wasn’t asked in the 2020 EA Survey, so these are the most up-to-date answers on this question.

If I were repeating this analysis, I’d look at the figures for ‘4-engaged’ EAs as well, since I realised this is a pretty high level of engagement. This would tilt things away from longtermism, but only a little. (I quickly checked the figures, and they were so similar that it didn't seem worth redoing all the tables.)

It's interesting how different the allocation is compared to funding. For instance, global health is only 6% compared to 44% for funding. This should be considered when asking what 'representative' content should look like.

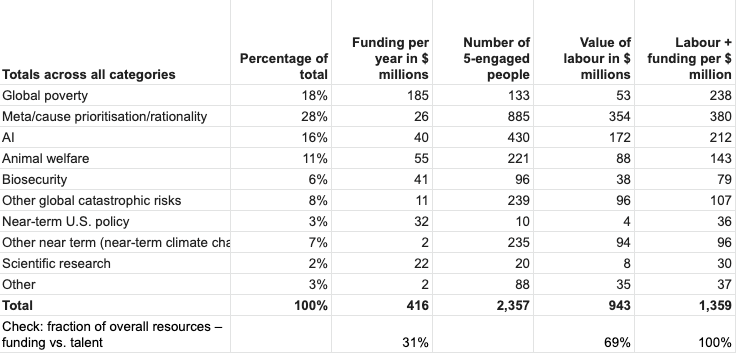

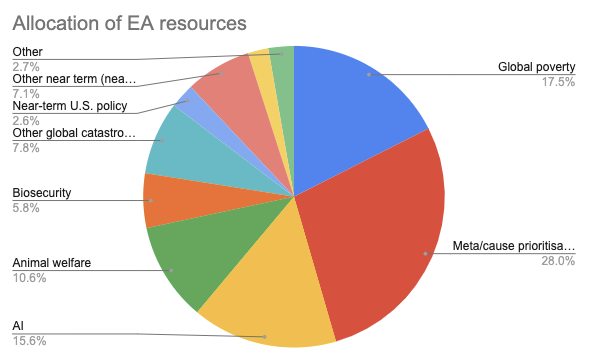

People plus funding

If we make a very rough guess that the value of each year of labour in financial terms will average $100,000 per year over a career, then we can look at the combined portfolio. (This figure could easily be off by a factor of 10.)

Different surveys used different categories, so I’ve had to make a bunch of guesses about how they line up.

| Cause area | Funding per year ($ millions) | Number of 5-engaged people | Value of labour ($ millions) | Labour + funding ($ millions) | Percentage of total |
|---|---|---|---|---|---|
| Global poverty | 185 | 133 | 13 | 198 | 30% |
| Meta/cause prioritisation/rationality | 26 | 885 | 88 | 115 | 18% |
| AI | 40 | 430 | 43 | 83 | 13% |
| Animal welfare | 55 | 221 | 22 | 77 | 12% |
| Biosecurity | 41 | 96 | 10 | 51 | 8% |
| Other global catastrophic risks | 11 | 239 | 24 | 35 | 5% |
| Near-term U.S. policy | 32 | 10 | 1 | 33 | 5% |
| Other near term (near-term climate change, mental health) | 2 | 235 | 24 | 25 | 4% |
| Scientific research | 22 | 20 | 2 | 24 | 4% |
| Other | 2 | 88 | 9 | 11 | 2% |
| Total | 416 | 2,357 | 236 | 652 | 100% |
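The combined columns follow from converting people into dollars at the assumed $100,000 per person-year (0.1 in $ millions) and adding funding. A sketch with the rows copied from the table; the flat value per person is the only parameter, and the function name is illustrative:

```python
# Rows copied from the combined table:
# (cause area, funding in $ millions per year, number of 5-engaged people).
ROWS = [
    ("Global poverty", 185, 133),
    ("Meta/cause prioritisation/rationality", 26, 885),
    ("AI", 40, 430),
    ("Animal welfare", 55, 221),
    ("Biosecurity", 41, 96),
    ("Other global catastrophic risks", 11, 239),
    ("Near-term U.S. policy", 32, 10),
    ("Other near term", 2, 235),
    ("Scientific research", 22, 20),
    ("Other", 2, 88),
]


def combined_portfolio(rows, value_per_person_m=0.1):
    """Value each person-year at a flat value_per_person_m ($ millions), add funding,
    and return ({cause: (combined $M, % of total)}, total $M)."""
    combined = {cause: funding + people * value_per_person_m for cause, funding, people in rows}
    total = sum(combined.values())
    shares = {cause: (value, 100 * value / total) for cause, value in combined.items()}
    return shares, total


portfolio, total = combined_portfolio(ROWS)
for cause, (value, share) in portfolio.items():
    print(f"{cause:<40} {value:>6.0f} {share:>4.0f}%")
print(f"{'Total':<40} {total:>6.0f}")
```

This should reproduce the table up to rounding. Changing `value_per_person_m` shows how sensitive the shares are to the $100,000 guess, which could easily be off by a factor of 10.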

It’s interesting to note that meta seems a bit people-heavy; AI is balanced; and biosecurity, global health and farm animal welfare are funding-heavy.

These figures also suggest the value of the funding is about twice the value of the people, similar to what I found for committed funds as a whole. This comparison is particularly rough guesswork, so I wouldn’t read much into it, though it does match a general picture in which there’s more funding than labour (which seems the reverse of the broader economy).

What might we learn from this?

We can look at how people guess the ideal portfolio should look, and look for differences.

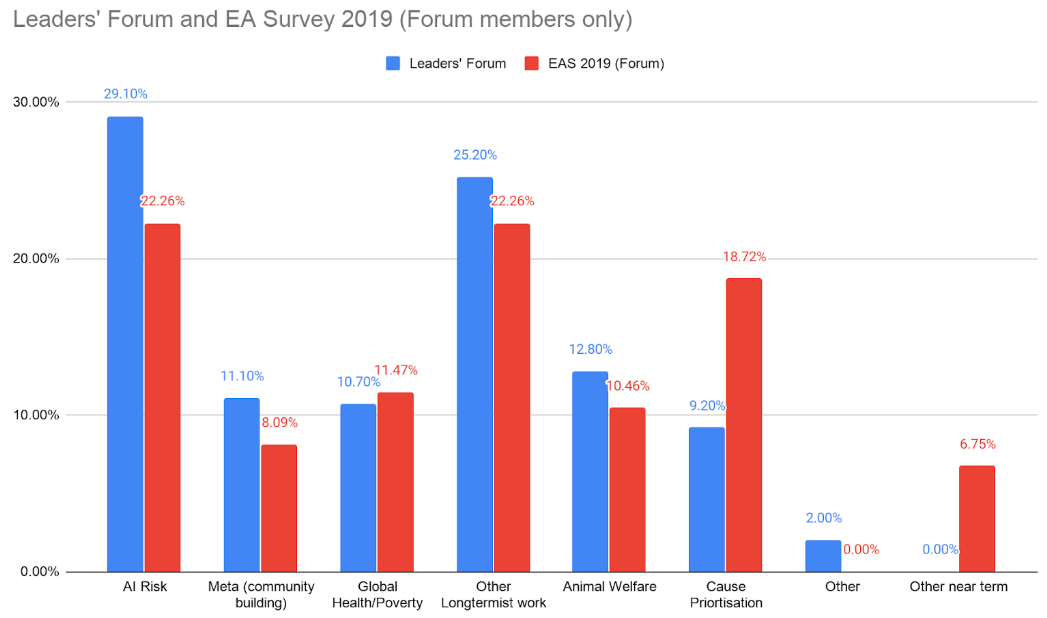

In the chart above, the blue bars show the average response of attendees of the 2019 EA Leaders Forum for what percentage of resources they thought should go to each area.

To check whether this is representative of engaged members of the broader movement, I compared it to the top cause preference of readers of the EA Forum in the 2019 EA Survey, which is shown in red. (The figures for all EAs who answered ‘4’ or ‘5’ for engagement were similar.) Note that ‘top cause preference’ is not the same as ‘ideal percentage to allocate’ but will hopefully correlate. See more about this data. You can also see cause preferences by engagement level from the 2020 EA Survey.

(Again, the categories don’t line up exactly.)

There was a similar survey from the 2020 EA Coordination Forum — an event similar to Leaders Forum, but with a narrower focus and a greater concentration of staff from longtermist organizations, which may have influenced the survey results. These results have not been officially released, but here is a summary, which I’ve compared to the current allocation (as above). Note that the results are very similar to the 2019 Leaders Forum, though I prefer them since they use more comparable categories and should be a little more up-to-date.

| Cause area | EACF 2020 ideal portfolio | Guess at current allocation (labour + money) | Difference (current minus ideal) |
|---|---|---|---|
| Global poverty | 9% | 30% | 22% |
| Meta/cause prioritisation/rationality | 23% | 17% | -6% |
| AI | 28% | 13% | -15% |
| Animal welfare | 8% | 12% | 4% |
| Biosecurity | 9% | 8% | -1% |
| Other global catastrophic risks | 4% | 5% | 2% |
| Other near term (near-term climate change, mental health) | 4% | 4% | 0% |
| Scientific research | 3% | 4% | 1% |
| Other (incl. wild animal welfare) | 4% | 2% | -2% |
| Broad longtermist | 9% | 1% | -8% |
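Both the absolute difference and the proportional gap (ideal divided by current) can be computed from the two percentage columns. A sketch; the function name is illustrative, and the table's own difference column was presumably computed from unrounded figures, so a couple of entries differ by a point from what this prints:

```python
# (cause area, EACF 2020 ideal %, current allocation %) copied from the table.
TABLE = [
    ("Global poverty", 9, 30),
    ("Meta/cause prioritisation/rationality", 23, 17),
    ("AI", 28, 13),
    ("Animal welfare", 8, 12),
    ("Biosecurity", 9, 8),
    ("Other global catastrophic risks", 4, 5),
    ("Other near term", 4, 4),
    ("Scientific research", 3, 4),
    ("Other (incl. wild animal welfare)", 4, 2),
    ("Broad longtermist", 9, 1),
]


def gaps(table):
    """Return (cause, current minus ideal in points, ideal / current),
    sorted by the size of the absolute difference."""
    rows = [(cause, current - ideal, ideal / current) for cause, ideal, current in table]
    return sorted(rows, key=lambda row: abs(row[1]), reverse=True)


for cause, diff, ratio in gaps(TABLE):
    print(f"{cause:<40} {diff:+4d} points   ideal/current = {ratio:.1f}x")
```

Sorting by absolute difference puts global poverty and AI first, while the ideal/current ratio is largest for broad longtermism (9 / 1 = 9), which is the sense in which it is the biggest gap in proportional terms.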

My own guesses at the ideal portfolio would also be roughly similar to the above. I also expect that polling EA Forum members would lead to similar results, as happened in the 2019 results above.

What jumps out at me from looking at the current allocation compared to the ideal?

1) The biggest gap in proportional terms seems like broad longtermism.

In the 2020 EA Coordination Forum survey, respondents were explicitly asked how much they thought we should allocate to “Broad longtermist work (that aims to reduce risk factors or cause other positive trajectory changes, such as improving institutional decision making)”. (See our list of potential highest priorities for more on what could be in this bucket.)

The median answer was 10%, with an interquartile range of 5% to 14%.

However, as far as I can tell, there is almost no funding for this area currently, since Open Philanthropy doesn’t fund it, and I’m not aware of any other EA donors giving more than $1 million per year.

There are some people aiming to work on improving institutional decision making and reducing great power conflict, but I estimate there are fewer than 100. (In the tables for funding and people earlier, this work would probably mostly fall under ‘rationality’ within the meta category, or otherwise within ‘Other GCRs’, so I subtracted it from there.)

This would mean that, generously, 1% of resources are being spent on broad longtermism. So, we’re maybe off the ideal by a factor of nine.

Note that the aim of grants or careers in this area would mainly be to explore whether there’s an issue that’s worth funding much more heavily, rather than scaling up an existing approach.

2) Both the EA Leaders Forum respondents and the EA Forum members in the EA survey would like to see significantly more allocated to AI safety and meta.

3) Global health seems to be where the biggest over-allocation is happening.

(It’s also the main reason neartermist issues currently receive ~50% of resources, when the survey respondents estimate ~25% would be ideal.)

For global health, this is almost all driven by funding rather than people. While global health receives about 44% of funding, only about 6% of ‘5-engaged’ EAs are working on it. In particular, GiveWell brings in lots of funders for this issue who won't fund the other issues.

I think part of what’s going on for funding is that global health is already in ‘deployment’ mode, whereas the other causes are still trying to build capacity and figure out what to support.

My hope is that other areas scale up over time, bringing the allocation to global health in line with the target, but without necessarily reducing the amount spent on it.

However, if someone today has the option to work on global health or one of the under-allocated areas, and feels unsure which is best for them, I’d say they should default away from global health.

Wrapping up:

I find it useful to look at the portfolio, but keep in mind that this ‘top-down’ approach is just one way to figure out what to do.

Comparatively, it’s probably more important to take a more ‘bottom-up’ approach that looks at specific opportunities and compares them to ‘the bar’ for funding, or assesses them using more qualitative factors.

For someone choosing a career who wants to coordinate with the EA community, the portfolio framework should play a minor role compared to other factors, such as personal fit, career capital, and other ways of evaluating which priorities are most pressing.

You might also be interested in:

- Part 1: Is effective altruism growing? An update on committed funds and people.

- What does the effective altruism community most need?

- What actually is effective altruism?

I post draft research ideas on Twitter.

Davidmanheim @ 2021-08-08T16:17 (+34)

I would suggest that part of the difference between funding and people for global health is the huge non-EA workforce that is already working on the things EAs want to optimize for - so replaceability is very high, and marginal value low, unless we think value alignment is particularly critical.

The same can't be said about AI risk, biosecurity, and other areas where the EA perspective about what to focus on differs from the perspective of most other people working in the area. (Not to mention EA meta / prioritization, where non-EAs working on it is effectively impossible.)

Benjamin_Todd @ 2021-08-08T17:22 (+12)

Good point, I agree that's a factor.

We should want funding to go into areas where there is more existing infrastructure / it's easier to measure results / there are people who already care about the issue.

Then aligned people should focus on areas that don't have those features.

It's good to see this seems to be happening to some degree!

Benjamin_Todd @ 2021-08-09T10:53 (+7)

Though, to be clear, I think this is only a moderate reason (among many other factors) in favour of donating to global health vs. say biosecurity.

Overall, my guess is that if someone is interested in donating to biosecurity but worried about the smaller existing workforce, then it would be better to:

- Fund movement building efforts to build the workforce

- Invest the money and donate later when the workforce is bigger

abrahamrowe @ 2021-08-11T23:35 (+8)

I think this is likely true for animal welfare too. For example, looking at animal welfare organizations funded by Open Phil, and thinking about my own experience working at/with groups funded by them, I'd guess that under 10% of employees at a lot of the bigger orgs (THL, GFI) engage with non-animal EA content at all, and a lot fewer than that fill out the EA survey.

vaidehi_agarwalla @ 2021-08-27T03:00 (+17)

For Meta/cause prioritisation/rationality I would downgrade the labour value estimates by ~half because:

- Apart from full-time EA staff at meta orgs, the bulk of people who would have listed this cause area are volunteer group organisers doing their organising part-time

- Unlike other causes, it is easier to work on movement building part-time, so I would guess this issue is more or less unique to meta.

- At a guess I'd say the number of equivalent full-time people is probably ~450 people (likely less)

- I would suggest downgrading the $ value from $88 million to maybe $45 million

- This would reduce the portion of meta to 12%, suggesting it's even further (11% instead of 6%) from the optimal allocation
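The recalculation in the bullets above can be checked against the combined table. A sketch that swaps meta's labour value from the table's (rounded) $88 million to the suggested $45 million and recomputes meta's share and its gap to the 23% EACF ideal; the names are illustrative:

```python
# Figures from the combined table and the EACF 2020 table in the post.
META_FUNDING_M = 26        # meta funding, $ millions per year
META_LABOUR_M = 88         # meta labour value at $100k per person-year
TOTAL_M = 652              # labour + funding across all causes
IDEAL_META_SHARE = 23      # EACF 2020 ideal portfolio, %


def meta_share_after_downgrade(new_labour_m: float) -> float:
    """Meta's % of the total after replacing its labour value with new_labour_m."""
    new_meta = META_FUNDING_M + new_labour_m
    new_total = TOTAL_M - META_LABOUR_M + new_labour_m
    return 100 * new_meta / new_total


for labour in (88, 45):
    share = meta_share_after_downgrade(labour)
    print(f"meta labour ${labour}M: share {share:.0f}%, gap to ideal {IDEAL_META_SHARE - share:.0f} points")
```

With $45 million, meta's share comes out at about 12% and the gap to the ideal at about 11 points, compared with about 17% and 6 points using the original figure.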

Benjamin_Todd @ 2021-08-27T12:30 (+6)

Seems reasonable.

Salaries are also lower than in AI.

You could make a similar argument about animal welfare, though, I think.

Juan Cambeiro @ 2021-08-08T15:21 (+16)

This is interesting, thanks. Do you plan on making funding/people allocation estimates using this methodology in the future? If so perhaps it'd be worthwhile for us to expand Metaculus forecasting on future EA resources to include questions on future funding/people allocation, with the aim of informing efforts to address the gap between ideal future portfolios and expectations for what future portfolios will actually be.

Benjamin_Todd @ 2021-08-08T17:19 (+7)

My hope is that someone with more time to do it carefully will be able to do this in the future.

Having on-going metaculus forecasts sounds great too.

MichaelPlant @ 2021-08-11T17:28 (+8)

Thanks for this! Some minor points.

I'm puzzled by what's going on in the category "Other near-term work (near-term climate change, mental health)". The two causes in parentheses are quite different, and I have no idea what other topics fall into this category. Also, it has 12% of the people but <1% of the money: how did that happen? What are those 12% of people doing?

Also, shouldn't "global health" really be "global health and development"? If it's just "global health", that leaves out the economic stuff, e.g. GiveDirectly. Further, global health should probably either include mental health or be specified as "global physical health".

Benjamin_Todd @ 2021-08-12T10:33 (+4)

Yes, sorry I was using 'global health' as a shorthand to include 'and development'.

For other near term, that category was taken from the EA survey, and I'm also unsure exactly what's in there. As David says, it seems like it's mostly mental health and climate change though.

David_Moss @ 2021-08-11T19:51 (+4)

I think the figures for highly engaged EAs working in Mental Health, drawn from EA Survey data, will be somewhat inflated by people who are working in mental health, but not in an EA-relevant sense e.g. as a psychologist. This is less of a concern for more distinctively EA cause areas of course.

Among people who, in EAS 2019, said they were currently working for an EA org, the normalised figures were only ~5% for Mental Health and ~2% for Climate Change (which, interestingly, is a bit closer to Ben's overall estimates for the resources going to those areas). Also, as Ben noted, people could select multiple causes, and although the 'normalisation' accounts for this, it doesn't change the fact that these figures might include respondents who aren't solely working on Mental Health or Climate Change, but could be generalists whose work somewhat involved considering these areas.

Jonas Vollmer @ 2021-09-19T03:26 (+6)

I'd guess that the labor should be valued at significantly more than $100k per person-year. Your calculation suggests that 64% of EA resources spent are funding and 36% are labour, but given that we're talent-constrained, I would guess that the labor should be valued at something closer to $400k/y, suggesting a split of 31%/69% between funding and talent, respectively. (Or put differently, I'd guess >20 people pursuing direct work could make >$10 million per year if they tried earning to give, and they're presumably working on things more valuable than that, so the total should be a lot higher than $200 million.)
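The 64%/36% and 31%/69% splits follow from the post's totals ($416 million of funding and 2,357 5-engaged people). A sketch that recomputes them for both per-person valuations; the names are illustrative:

```python
FUNDING_M = 416   # total funding, $ millions per year (from the post)
PEOPLE = 2357     # number of 5-engaged people (from the post)


def funding_labour_split(value_per_person_m: float) -> tuple[float, float]:
    """Return (funding share, labour share) when each person-year is worth
    value_per_person_m in $ millions."""
    labour_m = PEOPLE * value_per_person_m
    total = FUNDING_M + labour_m
    return FUNDING_M / total, labour_m / total


for value in (0.1, 0.4):
    funding, labour = funding_labour_split(value)
    print(f"${value * 1000:.0f}k per person-year: funding {funding:.0%}, labour {labour:.0%}")
```

This prints a 64%/36% split at $100k and a 31%/69% split at $400k.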

Using those figures, the overallocation to global poverty looks less severe, we're over- rather than underallocating to meta, and the other areas look roughly similar (e.g., there still is a large gap in AI).

Regarding the overallocation to meta, one caveat is that the question was multi-select, and many people who picked that might only do a relatively small amount of meta work, so perhaps we're allocating the appropriate amount.

Benjamin_Todd @ 2021-09-20T20:24 (+2)

I agree that figure is really uncertain. Another issue is that the mean is driven by the tails.

For that reason, I mostly prefer to look at funding and the percentage of people separately, rather than the combined figure - though I thought I should provide the combined figure as well.

On the specifics:

I'd guess >20 people pursuing direct work could make >$10 million per year if they tried earning to give

That seems plausible, though to be clear, the relevant reference class is the 7,000 most engaged EAs rather than the people currently doing (or about to start doing) direct work. I think that group might in expectation donate several-fold less than the narrower reference class.

Jonas Vollmer @ 2021-09-27T07:21 (+2)

Thanks, agreed!

SofiaBalderson @ 2024-01-11T12:40 (+5)

Thanks a lot for this! Do you know if there is an updated version of this?

JWS @ 2024-01-11T22:12 (+4)

This isn't a direct update (I think something along those lines would be useful) but the most up-to-date things in terms of funding might be:

- EA Funding Spreadsheet that's been floating around updated with 2023 estimates. This shows convincingly, to me, that the heart of EA funding is in global health and development for the benefit of people living now, and not in longtermist/AI risk positions[1]

- GWWC donations: between 2020 and 2022, about two-thirds of donations went to Global Health and Animal Welfare

- In terms of community opinions, I think the latest EA Survey is probably the best place to look. But as it's from 2022, and we've just come off EA's worst ever year, I think a lot will have changed in terms of community opinion, and some people will understandably have walked away.

- Reading the OP for the first time in 2024 is interesting. Taking the Leaders Forum's opinions on cause areas and using them to anchor the 'ideal' allocation between cause areas... hasn't really aged well, let's just say that.

[1] I'm not actually taking a stand on the normative question. It's just bemusing to me that so many EA critics go for the "the money used to go to bed nets, now it all goes to AI Safety Research" critique despite the evidence showing this isn't true.

MichaelStJules @ 2024-01-12T03:33 (+4)

What do you mean by the last point/that it hasn't aged well?

JWS @ 2024-01-12T10:22 (+6)

I think it's probably a topic for its own post/dialogue, but I think that over the last two years (post-FTX, its fallout, and the public beating that EA has suffered and is suffering), 'EA Leadership', broadly defined, has lost a lot of trust and the right to be deferred to. I think arguments for decentralisation/democratisation à la Cremer look stronger with each passing month. Another framing might be that, with MacAskill having to take a step back post-FTX until legal matters are more finalised (I assume, please correct me if wrong), nobody holds the EA 'mandate of heaven'.[1]

It's also especially odd to me that Ben takes >50% of resources (defined as money and people) going towards Longtermism as the lodestar to aim for, instead of asking "hmm, isn't it weird that this doesn't match EA funding patterns at all?" Revealed preferences show a very different picture of what EAs value; see the GWWC donations above or the Donation Election results. And the CURVE sequence seems to be one of the few places where we actually get concrete cost-effectiveness numbers for longtermist interventions; looking back, I'm not sure how much of the case holds up to scrutiny.[2]

I also have an emotional, personal response: in the aftermath of EA's annus horribilis, a lot of the 'EA Leadership' (which I know is a vague term) has been conspicuous by its absence, not stepping up to take responsibility or provide guidance when times got tough, and instead directing the blame toward the "EA Community" (also vaguely defined).[3] But again, that's just an emotional feeling, I don't have references for it to hand, and it definitely colours my perspective on this whole thing.

[1] This is an idea I want to explore in a post/discussion. If anyone wants to collaborate, let me know.

[2] At least from a 'this is the top impartial priority' perspective. I think from an 'exploring underrated/unknown ideas' perspective it looks very good, but that's not what the Leaders were asked in this survey.

[3] I thought I recalled a Twitter thread from Ben where he talked about being separate from the EA Community as a good thing, and said that most of his friends weren't EAs, but I couldn't find it, so maybe I just imagined it or confused it with someone else.

tylermaule @ 2021-08-11T03:15 (+3)

Thanks for writing, and I agree it would be great to see more like this in future.

It does seem like 'ideal portfolio of resources' vs 'ideal split of funds donated this year' can be quite a bit different—perhaps a question for next time?

(see here for some similar funding estimates)

Benjamin_Todd @ 2021-08-11T13:02 (+6)

Yes, I agree. Different worldviews will want to spend a different fraction of their capital each year. So the ideal allocation of capital could be pretty different from the ideal allocation of spending. This is happening to some degree where GiveWell's neartermist team are spending a larger fraction than the longtermist one.

Benjamin_Todd @ 2021-08-10T11:51 (+3)

If lots of the people working on 'other GCRs' are working on great power conflict, then the resources on broad longtermism could be higher than the 1% I suggest, but I'd expect it's still under 3%.

Miranda_Zhang @ 2021-08-08T22:24 (+3)

This is really great - would love to see more of these in the future. It also made me reconsider the way I currently allocate across EA funds/charities, mostly by shifting funds away from global health.

I do think that what David Manheim mentioned is a strong argument against shifting EA funds away from global health, but I think it makes sense to shift some of my allocation seeing that this issue is not only less neglected by non-EAs but within EA as well.

Linch @ 2021-08-08T23:03 (+15)

I think dollars are much more fungible than careers, so for most people, you should move your donations away from global health if and only if you believe that marginal donations to other charities are more cost-effective. "Neglectedness" is just a heuristic, and not a very strong one.

Miranda_Zhang @ 2021-08-09T00:19 (+1)

Hmm, that's fair - crowdedness for giving is different from career path, in that I should be thinking about the marginal impact of a dollar for the former rather than overall field neglectedness.

I think this makes me less certain about my reallocation because I believe very strongly in the cost-effectiveness of global health charities, although I'm also wary that that is not solely due to true cost-effectiveness (due to cost-effectiveness being harder to measure across cause areas) and that most people think that way - hence the funding gap.

Khorton @ 2021-08-08T22:59 (+6)

My impression is that there's a lot of funding available for stuff other than global health, but not a lot of great places to spend it at the moment. So finding a charity with a robust theory of change for improving the long-term future and donating there can be very valuable (and starting something like that would be even more valuable!), but I'm less sure about the value of taking money you would spend on bednets and donating it to the Long-Term Future Fund (or at least I'd recommend reviewing their past grants first).

Disclaimer: I have a much higher bar for funding long-termist charities than many other EAs.

Miranda_Zhang @ 2021-08-09T00:12 (+4)

This is a good point! I actually redirected the funding more towards EA Infrastructure instead of the Long-term fund - partly since my giving acts as diversifying my investments (as I'm investing time in building a career oriented towards more longtermist goals), and partly because my existing donations are much smaller relative to what I'm investing to give later on (and hopefully we have more longtermist charities then).

I really appreciate you highlighting the different implications one could draw out from funding disparities.

Tetraspace Grouping @ 2021-08-12T18:27 (+1)

What were your impressions for the amount of non-Open Philanthropy funding allocated across each longtermist cause area?