Funding circle aimed at slowing down AI - looking for participants

By Greg_Colbourn ⏸️ @ 2024-01-25T23:58 (+93)

Are you an earn-to-giver or (aspiring) philanthropist who has short AGI timelines and/or high p(doom|AGI)? Do you want to discuss donation opportunities with others who share your goal of slowing down / pausing / stopping AI development[1]? If so, I want to hear from you!

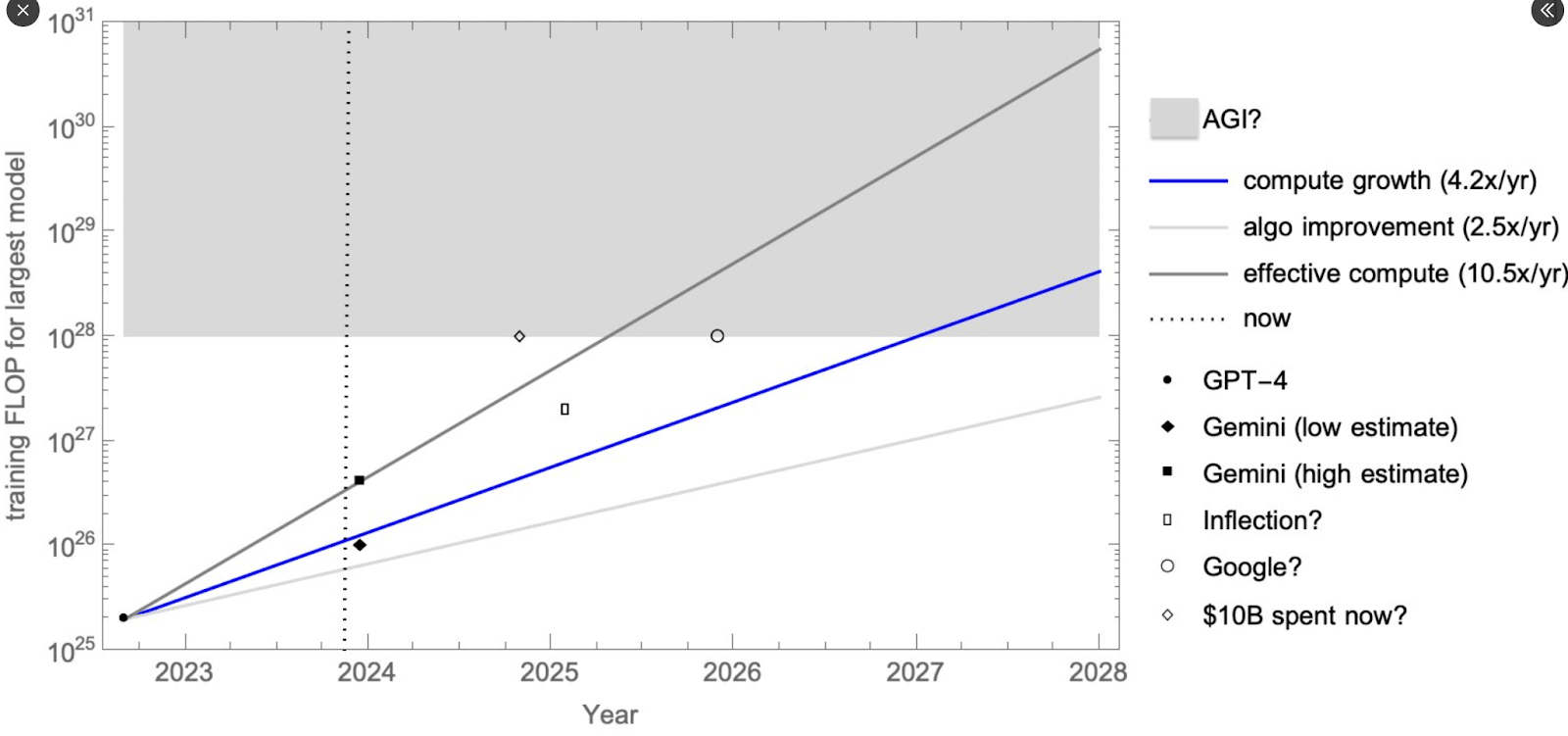

For some context, I’ve been extremely concerned about short-term AI x-risk since March 2023 (post-GPT-4). Since then, I have thought that more AI Safety research will not be enough to save us, and neither will AI Governance that isn’t focused[2] on slowing down AI or on a global moratorium on further capabilities advances. I therefore think that, on the margin, far more resources need to go into slowing down AI (there are already many dedicated funds for the wider space of AI Safety).

I posted this to an EA investing group in late April:

I'm thinking 4-6 people, each committing ~$100k(+) over 2024, would be good. The idea would be to discuss donation opportunities in the “slowing down AI” space during a monthly call (e.g. Google Meet), and have an informal text chat for the group (e.g. WhatsApp or Messenger). The aim is to foster a sense of unity of purpose[6], but with nothing too demanding or official: active, but low friction and low total time commitment. Donations would be made independently rather than from a pooled fund, but we can have some coordination to get "win-wins" based on any shared preferences of what to fund. Meta-charity Funders is a useful model.

We could maybe do something like an S-process for coordination, like what Jaan Tallinn's Survival and Flourishing Fund does[7]; it helps avoid "donor chicken" situations. Or we could do something simpler like rank the value of donating successive marginal $10k amounts to each project. Or just stick to more qualitative discussion. This is all still to be determined by the group.
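To make the simpler option concrete, here is a minimal sketch of one way to rank successive marginal $10k amounts. It is an illustration only and not the S-process: it assumes the group has already agreed on a single combined score for each $10k increment per project (for example, by averaging individual scores), and it funds the highest-scoring next increment until the budget runs out. The function name `allocate_by_marginal_value`, the project names, and all the scores are hypothetical.

```python
import heapq

TRANCHE = 10_000  # size of each funding increment in dollars, as in the post


def allocate_by_marginal_value(marginal_values, budget):
    """Greedily fund the most valuable next $10k increment.

    marginal_values maps a project name to a list of scores, where the
    k-th score is the group's (assumed, pre-agreed) value of giving that
    project its k-th $10k. Increments of a project are funded in order.
    """
    allocation = {name: 0 for name in marginal_values}
    # Max-heap (via negated scores) holding each project's next unfunded increment.
    heap = [(-scores[0], name, 0)
            for name, scores in marginal_values.items() if scores]
    heapq.heapify(heap)

    remaining = budget
    while heap and remaining >= TRANCHE:
        _, name, idx = heapq.heappop(heap)
        allocation[name] += TRANCHE
        remaining -= TRANCHE
        next_idx = idx + 1
        if next_idx < len(marginal_values[name]):
            heapq.heappush(heap, (-marginal_values[name][next_idx], name, next_idx))
    return allocation


# Hypothetical scores for two made-up projects.
example_scores = {
    "project_a": [9, 5, 1],
    "project_b": [7, 6, 2],
}
example_allocation = allocate_by_marginal_value(example_scores, budget=30_000)
print(example_allocation)
```

Because donations in the circle would be made independently, a result like this would only be a discussion aid for spotting shared preferences, not a binding allocation.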

Please join me if you can[8], or share with others you think may be interested. Feel free to DM me here or on X, book a call with me, or fill in this form.

- [1] If you oppose AI for other reasons (e.g. ethics, job loss, copyright), as long as you are looking to fund strategies that aim to show results in the short term (say within a year), then I’d be interested in you joining the circle.

- [2] I think Jaan Tallinn’s new top priorities are great!

- [3] After 2030, if we have a Stop and are still here, we can keep kicking the can down the road.

- [4] I’ve made a few more donations since that tweet.

- [5] Public examples include Holly Elmore, giving away copies of Uncontrollable, and AI-Plans.com.

- [6] Right now I feel quite isolated making donations in this space.

- [7] It's a little complicated, but here's a short description: "Everyone individually decides how much value each project creates at various funding levels. We find an allocation of funds that’s fair and maximises the funders’ expressed preferences (using a number of somewhat dubious but probably not too terrible assumptions). Funders can adjust how much money they want to distribute after seeing everyone’s evaluations, including fully pulling out." (paraphrased from Lightspeed Grants [funders and evaluators would probably be the same people in our case, but we could outsource evaluation]). See link (the footnote) for more detail.

- [8] If there is enough interest from people who want to contribute but can’t stretch to $100k, we can look at other potential structures.

Angelina Li @ 2024-01-26T15:44 (+11)

I'm pretty excited about funder circles as a structure for medium-high but not UHNW donors to scale their impact and find support. Good luck!

Angelina Li @ 2024-11-15T10:10 (+10)

@Greg_Colbourn just out of curiosity, did you end up pursuing this? I'd be interested in hearing how it went, if so — no pressure!