Critiques of EA that I want to read

By abrahamrowe @ 2022-06-19T22:22 (+464)

Note — I’m writing this in a personal capacity, and am not representing the views of my employer.

I’m interested in the EA red-teaming contest as an idea, and there are lots of interesting critiques I’d want to read. But I haven’t seen any of those written yet. I put together a big list of critiques of EA I’d be really interested in seeing come out of the contest. I personally would be interested in writing some of these, but don’t really have time to right now, so I am hoping that by sharing these, someone else will write a good version of them. I’d also welcome people to share other critiques they’d be excited to see written in the comments here!

I think that if someone wrote all of these, there are many where I wouldn’t necessarily agree with the conclusions, but I’d be really interested in the community having a discussion about each of them and I haven’t seen that discussion happen before.

If you want to write any of these, I’m happy to give brief feedback on it, or give you a bunch of bullet-points of my thoughts on them.

Critiques of EA

- Defending person-affecting views

- Person-affecting views try to capture an intuition that is something like, “something can only be bad if it is bad for some particular person or group of people.”

- This specifically interacts with an argument for reducing existential risk that presents reducing x-risk as good because then future people will be born and have good lives. If you take a person-affecting view seriously, maybe you think it is no big deal that people won’t be born, since they aren’t any particular people, and thus not being born is not bad for them.

- Likewise, some forms of person-affecting views are generally neutral toward adding additional happy people, while a non-person-affecting total utilitarian view is in favor of adding additional happy people, all other things being neutral.

- People in the EA community have critiqued person-affecting views for a variety of reasons related to the nonidentity problem

- Person-affecting views are interesting, but pretty much universally dismissed in the EA community. However, I think a lot of people find the intuitions behind person-affecting views to be really powerful. And, if there was a convincing version of a person-affecting view, it probably would change a fair amount of longtermist prioritization.

- I’d really love to see a strong defense of person-affecting views, or a formulation of a person-affecting view that tries to address critiques made of them. This seems like a fairly valuable contribution to making EA more robust. I think I’d currently bet on the community not realigning in light of this defense, but it seems worth trying to make because the intuitions powering person-affecting views are compelling.

- It’s getting harder socially to be a non-longtermist in EA.

- People seem to push back on the idea that EA is getting more longtermist by pointing at the increase in granting in the global health and development space.

- Despite this, a lot of people seem to have a sense that EA is pushing toward longtermism.

- I think that the argument that EA is becoming more longtermist is stronger than doubters give it credit for, but it might mostly have to do with social dynamics in the space.

- The intellectual leaders / community building efforts seem to be focused on longtermism.

- The “energy” of the space seems mostly focused on longtermist projects.

- People join EA interested in neartermist causes, and gradually become more interested in longtermist causes (on average)

- I think that these factors might be making it socially harder to be a non-longtermist who engages with the EA community, and that is an important and missing part of the ongoing discussion about EA community norms changing.

- EA is neglecting trying to influence non-EA organizations, and this is becoming more detrimental to impact over time.

- I’m assuming that EA is generally not missing huge opportunities for impact.

- As time goes on, theoretically many grants / decisions in the EA space ought to be becoming more effective, and closer to what the peak level of impact possible might be.

- If this is the case, changing EAs’ minds is becoming less effective, because the possible returns on changing their views are lower.

- Despite this, it seems like relatively little effort is put into changing the minds of non-EA funders, and pushing them toward EA donation opportunities, and a lot more effort is put into shaping the prioritization work of a small number of EA thinkers.

- If this is the case, it seems like a non-ideal strategy for the EA research community — the possible returns on changing the prioritization of EA thinkers are fairly small.

- The fact that everyone in EA finds the work we do interesting and/or fun should be treated with more suspicion.

- It's exciting and interesting to work on lots of EA topics. This should make us mildly suspicious about whether they are as important as we think.

- I’ve worked professionally in EA and EA-adjacent organizations since around 2016, and the entire time, I’ve found my work really really interesting.

- I know a lot of other people who find their work really really interesting.

- I’m pretty confident that what I find interesting is not that likely to overlap with what’s most important.

- It seems pretty plausible from this that I’m introducing fairly large biases into what I do because of what I find interesting, and missing a lot of opportunities for impact.

- It seems plausible that this is systematically happening across the EA space.

- Sometimes change takes a long time — EA is poorly equipped to handle that

- I’ve been involved in the EA space and adjacent communities for around 8 or so years, and throughout that time, it feels like the space has changed dramatically.

- But some really important projects probably take a long time to demonstrate progress or results.

- If the community is going to continue changing and evolving rapidly, it seems like we are not equipped to do these projects.

- There are some ways to address this (e.g. giving endowments to charities so they can operate independently for longer), but these seem underexplored in the EA space.

- Alternative models for distributing funding are probably better and are definitely under-explored in EA

- Lots of people in EA buy into the idea that groups of people make better decisions than individuals, but all our funding mechanisms are built around a small number of individuals making decisions.

- The FTX regranting program is a counterexample to this (and a good one), but still is fundamentally not that transformative, and only slightly increases the number of people making decisions about funding.

- There are lots of alternative funding models that could be explored more, and should be!

- Distribute funds across EA Funds by the number of people donating to each cause, or by people’s aggregate weighting of causes, instead of total donations (thus getting a fund distribution that represents priorities of the community instead of wealth).

- Play with projects like Donation Democracy on a larger scale.

- Trial consensus mechanisms for distributing funding with large groups of donors (likely moderating against very unusual but good grants, but improving average grant quality).

- Pursue more active grantmaking of ideas that seem promising (not really using group decision making but still a different funding approach).

- EA funder interactions with charities often make it harder to operate an EA charity than it has to be

- I’ve worked at several EA and non-EA charities, and overall, the approach to funding in the EA space is vastly better than the non-EA world. But it still isn’t ideal, and lots of problems happen.

- Sometimes funders try to play 5d chess with each other to avoid funging each other’s donations, and this results in the charity not getting enough funding.

- Sometimes funders don’t provide much clarity on the amount of time they intend to fund organizations for, which makes it harder to operate the organization long-term or plan for the future.

- Lots of EA funding mechanisms seem basically based on building relationships with funders, which makes it much harder to start a new organization in the space if you’re an outsider.

- Relatedly, it’s harder to build these relationships without knowing a large EA vocabulary, which seems bad for bringing in new people.

- These interactions seem addressable through funders basically thinking less about how other funders are acting, and also working on longer time-horizons with grants to organizations.

- RFMF doesn’t make much sense in many of the contexts it’s used in

- Room For More Funding makes a lot of sense for GiveWell-style charity evaluation. It’s answering a straightforward question like, “how much more money could this charity absorb and continue operating at X level of cost-effectiveness with this very concrete intervention.”

- But this is not how charities that don’t do concrete interventions operate, and due to some historical reasons (like GiveWell using this term), people often ask these charities about RFMF.

- Charities estimating their own “RFMF” probably mean a variety of different things, including:

- How much money they need to keep operating for another year at their current level

- How much money they could imagine spending over the next year

- How much money they could imagine spending over XX years

- How much money a reasonable strategic plan would cost over some time period

- We need a more precise language for talking about the funding needs of research or community-building organizations, so that people can interpret these figures accurately.

- Suffering-focused longtermism stuff seems weirdly sidelined

- S-risks seem like at least a medium-big deal, but seem to be treated as basically not important.

- Lots of people in the EA space seem to believe that a large portion of future minds will be digital (e.g. here is a leader in the EA space saying they think there is an ~80% chance of this).

- If this happens, it seems totally reasonable to give some credence to worlds where lots of digital minds suffer a lot.

- This possibility seems to be taken seriously by only a few organizations (e.g. Center on Long-term Risk) but basically seems like a fringe position, and doesn’t seem represented at major grantmakers and community-building organizations.

- I think this is probably because these views are somewhat associated with really strong negative utilitarianism, but they seem also very concerning for total utilitarians.

- It seems bad to have sidelined these perspectives, and s-risks probably should be explored more, or at least discussed more openly (especially from a non-negative utilitarian perspective).

- Logical consistency seems underexplored in the EA space

- A big core and unstated premise for an EA approach is that ethics should be consistent.

- I don’t think I have particularly good reasons for thinking ethics should be consistent, especially if I adopt a moral realism that seems somewhat popular in the EA space.

- It seems like there are plausible reasons for explaining why I think ethics should be consistent that don’t have much to do with morality (e.g. maybe logic is an artifact of the way human languages are structured, etc.).

- I’d be interested in someone writing a critique of the idea that ethics have to be consistent, as it seems like it underpins a lot of EA thinking.

- There is a lot of philosophical critique of moral particularism, but I think that EA cases are interesting both because of the high degree of interest in moral realism, and because EA explicitly acts on what might be only thought experiments in other contexts (like very low-likelihood, high-EV interventions).

- If the result of a critique is that consistency is actually fairly important, that seems like it would have ramifications for the community (consistency seems to be assumed to be important, but a lot of decisions don’t seem particularly consistent).

- People are pretty justified in their fears of critiquing EA leadership/community norms

- It's actually probably bad for your career to publicly critique EA leadership, or to write some critiques in response to this contest, but the community seems to want to pretend that it isn't in the framing of these invitations for critique.

- I think I have a handful of critiques I want to make about EA that I am fairly certain would negatively impact my career to voice, even though I believe they are good faith criticisms, and I think engaging with them would strengthen EA.

- I removed a handful of items from this list because I basically thought they’d be received too negatively, and that could harm me or my employer.

- I think everything I removed was fairly good faith and important as a critique.

- I'm making a list of critiques I want to see, not even critiques I necessarily believe, but still felt like I had to remove things.

- I think it is bad that I felt the need to remove anything, but I also think it was the right decision.

- I’ve heard anecdotes about people posting what seem like reasonable critiques and being asked to take them down by leadership in the EA space (for reasons like “they are making a big deal out of something not that important”).

- I think that this is a bad dynamic, and has a lot to do with the degree of power imbalances in the EA space, and how socially and intellectually tight-knit EA leadership is.

- This seems like a dynamic that should be discussed openly and critiqued.

- Grantmakers brain-drain organizations — is this good?

- My impression of the EA job market is that people consider jobs at grantmakers to be the highest status.

- The best paying jobs in EA (maybe besides some technical roles), are at grantmakers.

- This probably causes some degree of “brain-drain” where grantmakers are able to get the most talented researchers.

- This seems like it could have some negative effects on organizations, which would be bad for the community.

- Grantmakers are narrowly focused on short-term decisions ("issue this grant or not?"), rather than doing longer-term or exploratory research.

- This means the most skilled researchers are taken away from exploratory questions to short-term questions, even if the skilled researcher might prioritize the exploratory research over the short-term questions.

- Grantmakers tend to be relatively secretive / quiet about their decision-making and thinking, so the research of the best researchers in the community often isn’t shared more widely (and thus can’t be more widely adopted).

- If this dynamic is net-negative (it has good effects too, like grantmakers making better grants!), then addressing it seems pretty important.

- Effective animal advocacy mostly neglects the most important animals

- The effective animal advocacy movement has focused mostly on welfare reforms for laying hens and broiler chickens for the last several years.

- I think this is probably partially for historical reasons: the animal advocacy movement was already a bit focused on welfare reforms, and of the interventions being pursued at the time, these seemed like the most promising.

- I think that it is possible this approach has entirely missed a ton of impact for other farmed animals (especially fish and insects) and wild animals, and that prioritizing these other animals from the beginning could have been a much more effective use of that funding, even if new organizations needed to be formed, etc.

- I think in particular not working on insect farming over the last decade may come to be one of the largest regrets of the EAA community in the near future.

- This dynamic probably will continue to play out to some extent, and it seems like it could be important to address it sooner rather than later.

- Large organizations in the space seem focused only on specific strategies and specific animals, and priorities over the next few years are still on laying hens and broilers.
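
As a rough illustration of the funding-distribution idea in the list above (splitting a pool by the number of people donating to each cause rather than by total donations), here is a minimal Python sketch. The donor names, causes, amounts, and both function names are hypothetical and not drawn from EA Funds or any real system. It only shows how the two rules can diverge when one large donor dominates.

```python
from collections import defaultdict


def allocate_by_dollars(donations, pool):
    """Split `pool` in proportion to total dollars given to each cause."""
    totals = defaultdict(float)
    for _donor, cause, amount in donations:
        totals[cause] += amount
    grand_total = sum(totals.values())
    return {cause: pool * t / grand_total for cause, t in totals.items()}


def allocate_by_donor_count(donations, pool):
    """Split `pool` in proportion to the number of distinct donors per cause."""
    donors = defaultdict(set)
    for donor, cause, _amount in donations:
        donors[cause].add(donor)
    total_donors = sum(len(d) for d in donors.values())
    return {cause: pool * len(d) / total_donors for cause, d in donors.items()}


# Hypothetical data: (donor, cause, amount). Three small donors, one large one.
donations = [
    ("a", "global health", 100.0),
    ("b", "global health", 100.0),
    ("c", "global health", 100.0),
    ("d", "animal welfare", 700.0),
]
pool = 1000.0

by_dollars = allocate_by_dollars(donations, pool)
by_donors = allocate_by_donor_count(donations, pool)
print(by_dollars)
print(by_donors)
```

With this made-up data, the dollar-weighted rule sends most of the pool to the cause backed by the single large donor, while the donor-count rule sends most of it to the cause with more supporters. A donor who gives to several causes is counted once per cause here, which is one of several design choices a real mechanism would need to settle.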

Erin Braid @ 2022-06-20T01:05 (+152)

Something I personally would like to see from this contest is rigorous and thoughtful versions of leftist critiques of EA, ideally translated as much as possible into EA-speak. For example, I find "bednets are colonialism" infuriating and hard to engage with, but things like "the reference class for rich people in western countries trying to help poor people in Africa is quite bad, so we should start with a skeptical prior here" or "isolationism may not be the good-maximizing approach, but it could be the harm-minimizing approach that we should retreat to when facing cluelessness" make more sense to me and are easier to engage with.

That's an imaginary example -- I myself am not a rigorous and thoughtful leftist critic and I've exaggerated the EA-speak for fun. But I hope it points at what I'd like to see!

quinn @ 2022-06-20T02:31 (+41)

Strong upvote. I'm a former leftist and I've got a soft spot for a few unique ideas in their memeplex. I read our leftist critics whenever I can because I want them to hit the quality target I know the ideas are worth in my mind, but they never do.

If anyone reading this knows leftist critics that you think have hit a reasonable quality bar or you want to coauthor a piece for the contest where we roleplay as leftists, DM me on the forum or otherwise hit me up.

Yellow @ 2022-06-28T13:40 (+10)

I consider myself a current leftist, and I honestly don't have a big "leftist critique of ea". Effective altruism seems uncomplicatedly good according to all the ideas I have that I consider "leftist", and leftism similarly seems good according to all the ideas that I consider EA.

Effective altruists as individuals aren't always radical leftists of course, though they are pretty much all left of center. If you press me to come up with criticisms of EA, I can think of harmful statements or actions made by high profile individuals to critique, I guess, though idk if that would be useful to anyone involved. I can also say that the community as a whole doesn't particularly escape the structural problems and interpersonal prejudices found in larger society - but it's certainly not any worse than larger society. Also, EA organizations are not totally immune to power and corruption and internal politics and things like that; these things could be pointed out too. What I am saying is, effective altruists and institutions aren't immune from things like racism and sexism and stuff like that. But that's true of most people and organizations, including leftist ones. But there's nothing that un-leftist about effective altruism, the ideology.

If the whole idea is that you're impartially treating everyone equally and doing the most you can to help them then that's... almost tautologically and by definition, good, from almost all reasonable political perspectives, leftist or otherwise? I think you really gotta make some stronger and more specific claims which touch upon a leftist angle, if you want someone to refute them from a leftist angle.

TheOtherHannah @ 2022-08-31T19:23 (+9)

Hey, I've written up a post along these lines. If you're still interested: https://forum.effectivealtruism.org/posts/oD3zus6LhbhBj6z2F/red-teaming-contest-demographics-and-power-structures-in-ea

abrahamrowe @ 2022-06-20T14:58 (+24)

I definitely agree with this. Here are a bunch of ideas that are vaguely in line with this that I imagine a good critique could be generated from (not endorsing any of the ideas, but I think they could be interesting to explore):

- Welfare is multi-dimensional / using some kind of multi-dimensional analysis captures important information that a pure $/lives saved approach misses.

- Relatedly, welfare is actually really culturally dependent, so using a single metric misses important features.

- Globalism/neoliberalism are bad in the longterm for some variety of reasons (cultural loss that makes human experience less rich and that's really bad? Capitalism causes more harms than benefits in the long run? Things along those lines).

- Some change is really expensive and takes a really long time and a really indirect route to get to, but it would be good to invest in anyway even if the benefits aren't obvious immediately. (I think this is similar to what people mean when they argue for "systemic" change as an argument against EA).

I think that one issue is that lots of the left just isn't that utilitarian, so unless utilitarianism itself is up for debate, it seems hard to know how seriously people in the EA community will take lefty critiques (though I think that utilitarianism is worth debating!). E.g. "nobody's free until everyone is free" is fundamentally not a utilitarian claim.

Charles He @ 2022-06-21T19:08 (+12)

but things like "the reference class for rich people in western countries trying to help poor people in Africa is quite bad, so we should start with a skeptical prior here" or "isolationism may not be the good-maximizing approach, but it could be the harm-minimizing approach that we should retreat to when facing cluelessness"

For onlookers I want to point out that this doesn't read as leftist criticism.

This is very close (almost identical) to what classical conservatives say:

From:

I think we can relieve suffering. But relieving suffering isn't the only thing I care about. I also care about what I would call flourishing--that people should have the chance to use their skills in ways that are exhilarating and meaningful and they provide dignity.

And some of the challenges I think we face as rich Westerners is that we don't know very much about those things. We don't even know how to sustain the markets that sustain our standard of living to imply that we can solve that problem in different cultures and settings seems to be a bit of hubris.

So I don't mean to be so pessimistic. But it seems to me that some of the value and return from it are going to be grossly overstated. Because we don't have all the pieces at once.

...

I'm not convinced.... And that's the hope: that a more scientific approach, a more evidence-based approach I would call it, we could always spend our money, might lead us to be more optimistic. But it might not be true.

...

Now, I'm on your side for sure in saying that it's a small amount of money toward a big possible improvement and it's worth spending because that's your expected value. Which I find very persuasive. It's just not obvious to me we know a lot about how to do that well.

...

We don't know what the numbers are. I have no problem with giving people a fishing net, if that's what they think is best to do with it--there may be some issues there. But, you know--children, etc. I think most people love their children and they are probably more worried about feeding them than keeping them malaria-free, I guess. But I do think there is this complexity issue that relentlessly makes this challenging.

This seemed to confuse Julia Wise too, and she's really smart.

Guy Raveh @ 2022-06-21T17:44 (+10)

I think the vocabulary is not fully separable from the ideology. As the latter evolves, I'd expect changes to be required in the former.

And for what it's worth, all the versions you gave are equally intellectually challenging for me to understand. The jargon is easier for some people but harder for others, most importantly to outsiders. This also means it's unfair to expect outsiders to voice their views in insider-speak.

Ulrik Horn @ 2022-06-21T03:48 (+9)

Would you be interested in outside, non-EAs doing leftist critique? And if so, how would you convince them to participate by asking them to conform to our vocabulary? I am asking as I think that some of the best people to make a thoughtful critique of EA are placed in academia. If that is true, they would be much more interested in critiquing us if they are allowed to publish. And to publish there is a strong desire to "engage with and build on existing literature and thought in the field," meaning they want to draw on academic work on international aid, decolonialism, philanthropy, etc.

Denis Drescher @ 2022-06-20T20:38 (+9)

Maybe something along the lines of: Thinking in terms of individual geniuses, heroes, Leviathans, top charities implementing vertical health interventions, central charity evaluators, etc. might go well for a while but is a ticking time bomb because these powerful positions will attract newcomers with narcissistic traits who will usurp power of the whole system that the previous well-intentioned generation has built up.

The only remedy is to radically democratize any sort of power, make sure that the demos in question is as close as possible to everyone who is affected by the system, and build in structural and cultural safeguards against any later attempts of individuals to try to usurp absolute power over the systems.

But I think that's better characterized as a libertarian critique, left or right. I can’t think of an authoritarian-left critique. I wouldn’t pass an authoritarian-left intellectual Turing test, but I have thought of myself as libertarian socialist at one point in my life.

Charles He @ 2022-06-21T18:27 (+5)

I'm in favor of good leftist criticism and there isn't any arch subtext here:

I'm a little worried that left criticism is going to just wander into a few stale patterns:

- "Big giant revolution" whose effects rely on mass coordination.

- Activists are correct, in the sense that society can shift, if a lot of people get behind it

- But I'm skeptical of how often it actually happens

- In addition to how often, I suspect the real reasons it does happen can be very different from the common narrative, and unexpected

- If it doesn't happen, it might rationalize decades of work, noise and burn out, and crowd out real work

- The practices/actualization often seem poorly defined or unrealized

- Defund the police, which came out of odious police abuse: did this go anywhere? Were the particular asks viable in the first place?

- I expect that if you looked at MLK and the patterns that caused his success, many people would be very surprised

- A reasonable explanation is that the "founder effects", or "seating" of the causes/asks are defective—if so, it seems like they are defective because of these very essays or activists in some way

- This strategy rationalizes a lot of bad behavior and combined with poor institutions, structures and norms, you tend to see colonization/inveiglement by predators/"narcissists" and "cluster B" personality types.

- There's just bad governance in general and it leads to trashiness and repellence

- I point out this same thought is behind a lot of movements (e.g. libertarianism), as well as apps and businesses, and other things.

- Since this "giant movement/revolution" can achieve literally any outcome, shouldn't we be suspicious of those who rely on it, versus using other strategies that require resources, institutional competence and relationship building?

- "Value statements", equity or fairness

- This just is a value thing

- There's not much to be done here, if you value people on your street or country being equal or not suffering, even if they are objectively better off than the poorest people in the world

- Because there's not much to be done, a lot of arguments might boil down to using rhetoric/devices or otherwise smuggling in things, instead of being substantive

It would be very interesting to see a highly sophisticated (on multiple levels) leftist criticism of EA.

- I think there are very deep pools of thought or counter thought that could be brought out that aren't being used

Evan_Gaensbauer @ 2022-07-17T20:43 (+4)

I'm aware "bed nets are colonialism" is kind of barely a strawman of some of the shallowest criticisms of EA from the political left but is that the literal equivalent of any real criticism you've seen?

John Bridge @ 2022-06-20T13:09 (+3)

Also strong upvote. I think nearly 100% of the leftist critiques of EA I've seen are pretty crappy, but I also think it's relatively fertile ground.

For example, I suspect (with low confidence) that there is a community blindspot when it comes to the impact of racial dynamics on the tractability of different interventions, particularly in animal rights and global health.[1] I expect that this is driven by a combination of wanting to avoid controversy, a focus on easily quantifiable issues, the fact that few members of the community have a sociology or anthropology background, and (rightly) recognising that every issue can't just be boiled down to racism.

evelynciara @ 2022-06-19T23:24 (+87)

I agree that S-risks are more neglected by EA than extinction risks, and I think the explanation that many people associate S-risks with negative utilitarianism is plausible. I'm a regular utilitarian and I've reached the conclusion that S-risks are quite important and neglected, and I hope this bucks the perception of those focused on S-risks.

Alfredo_Parra @ 2022-06-20T18:12 (+23)

Two recent, related articles by Magnus Vinding that I enjoyed reading:

Denis Drescher @ 2022-06-20T20:28 (+20)

Strong upvote. My personal intuitions are suffering focused, but I’m currently convinced that I ought to do whatever evidential cooperation in large worlds (ECL) implies. I don’t know exactly what that is, but I find it eminently plausible that it’ll imply that extinction and suffering are both really, really bad, and s-risks, especially according to some of the newer, more extreme definitions, even more so.

Before ECL, my thinking was basically: “I know of dozens of plausible models of ethics. They contradict each other in many ways. But none of them is in favor of suffering. In fact, a disapproval of many forms of suffering seems to be an unusually consistent theme in all of them, more consistent than any other theme that I can identify.[1] Methods to quantify tradeoffs between the models are imprecise (e.g., moral parliaments). Hence I should, for now, focus on alleviating the forms of suffering of which this is true.”

Reducing suffering – in all the many cases where doing so is unambiguously good across a wide range of ethical systems – still strikes me as at least as robust as reducing extinction risk.

[1] Some variation on universalizability, broadly construed, may be a contender.

NunoSempere @ 2022-06-20T02:00 (+51)

I think I have a handful of critiques I want to make about EA that I am fairly certain would negatively impact my career to voice, even though I believe they are good faith criticisms, and I think engaging with them would strengthen EA.

This seems suboptimal, particularly if more people feel like that. But it does seem fixable: I'm up for receiving things like this anonymously at this link, waiting for a random period, rewording them using GPT-3, and publishing them. Not sure what proportion of that problem that would fix, though.

Gavin @ 2022-06-20T10:42 (+32)

The criticism contest has an anonymous submission form too.

Aleks_K @ 2022-06-22T20:50 (+14)

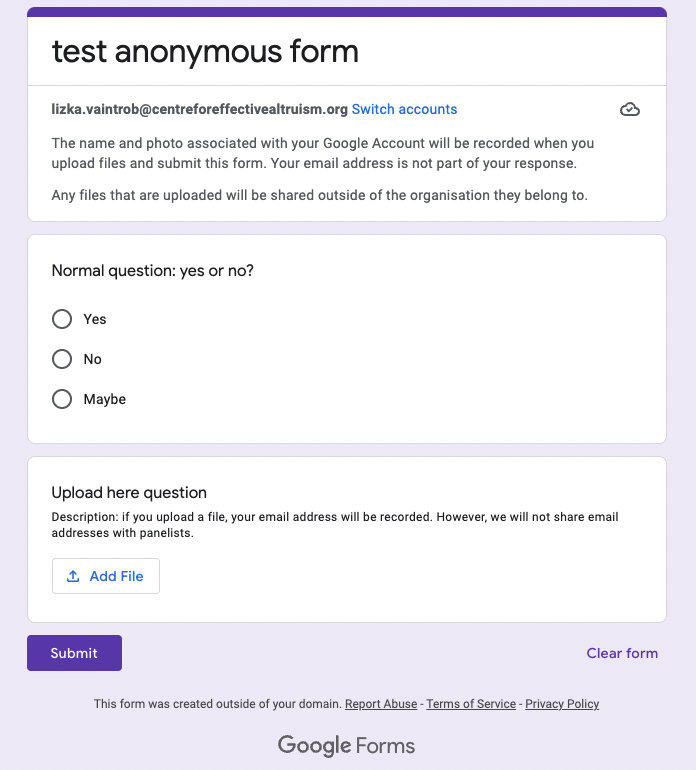

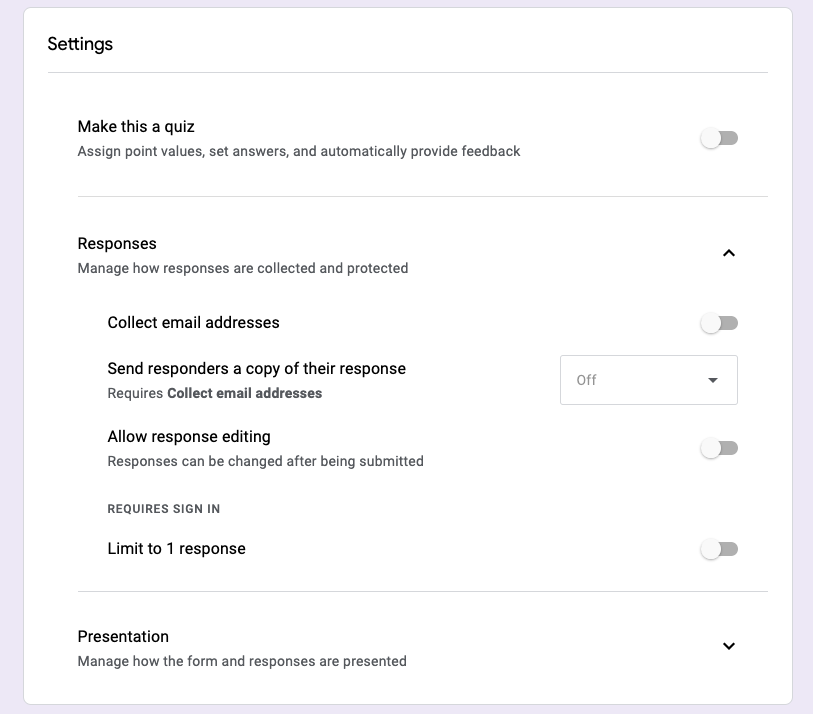

It's not anonymous, it records the name associated with your google account. (Of course you can just create a google account with a fake name, but then you can also just make an EA forum account with a fake name and post here.)

Ben_West @ 2022-06-23T15:16 (+16)

I believe this is just the confusing way that Google handles anonymous forms. It states the account you are currently using, but then has a parenthetical indicating that the information won't be shared.

Gavin @ 2022-06-23T18:49 (+2)

Think that changed after Aleks commented

Lizka @ 2022-06-23T19:50 (+7)

The issue was that we were letting people upload files as submissions. If you uploaded a file, your email or name would be shared (and we had a note explaining this in the description of the question that offered the upload option). Nearly no one was using the upload option, and if you didn't upload anything, your information wasn't shared.

Unfortunately, Google's super confusing UI says: "The name and photo associated with your Google account will be recorded when you upload files and submit this form. Your email is not part of your response," which makes it seem like the form is never anonymous. (See below.)

I removed the upload option today to reduce confusion, and hope people will just create a pseudonym or fake Google account if they want to share something that's not publicly accessible on the internet via link anonymously.

What the form looked like:

Here's what the settings for the test form look like:

Aleks_K @ 2022-06-24T21:51 (+1)

It previously said: "Your name and profile picture will be shared" (or something like that), but this seems to be fixed now.

Gavin @ 2022-06-22T23:12 (+10)

Yeah I asked em to fix this

abrahamrowe @ 2022-06-23T14:22 (+23)

Yeah, I think that some percentage of this problem is fixable, but I think one issue is that there are lots of important critiques that might be made from a place of privileged information, and filling in a form will be deanonymizing to some extent. I think this is especially true when an actor's actions diverge from stated values/goals — I think many of the most important critiques of EA that need to be made come from actions diverging from stated values/goals, so this seems hard to navigate. E.g. I think your recent criminal justice reform post is a pretty good example of the kind of critique I'm thinking of, but there are ones like it based on actions that aren't public or at least aren't written up anywhere that seem really important to have shared.

Related to this, I feel like a lot of people in EA lately have expressed a sentiment that they have general concerns like the one I outlined here, but can't point to specific situations. One explanation for this is that their concerns aren't justified, but another is that people are unwilling to talk about the specifics.

That being said, I think the anonymous submission form is really helpful, and glad it exists.

For what it's worth, I've privately been contacted more about this particular critique resonating with people than any other in this post by a large degree, which suggests to me that many people share this view.

sapphire @ 2022-06-28T06:09 (+3)

There are multiple examples of EA orgs behaving badly I can't really discuss in public. The community really does not ask for much 'openness'.

MichaelPlant @ 2022-06-23T10:32 (+49)

Someone suggested I should mention a few of the EA critiques I'm personally working on. I've only skimmed the comment so sorry if I've missed something relevant.

Three are of longtermism (and prospectively with funding support from the Forethought Foundation).

- One is based on defending person-affecting views. Here are some brief, questionably comprehensible notes for a talk I did at GPI a couple of weeks ago. Prose blog post and eventually an academic paper to follow.

- Another is on tractability/cluelessness: can we foreseeably and significantly influence the long-term? No notes yet, but I sketch the idea in another EA forum comment.

- A third is developing a theoretical justification for something like worldview diversification. If this were true, it would seem to follow we should split resources rather than go 'all-in' on any one cause. In fairness, this isn't an argument against being a longtermist, it's an argument against being only a longtermist. No notes on this yet, either, but hopefully a blog post sketching it in <2 months.

I've also got a 'red-team' of Open Philanthropy's cause prioritisation framework. That's written and should appear within a month.

On top of these, me and the team at HLI are generally doing research which starts with the assumption our cost-effectiveness analyses should directly measure the effects on people's subjective wellbeing (aka happiness) and see how that could change our priorities. Last week, we did a webinar with StrongMinds where I set out our work which found that treating depression in Africa is about 10x better than providing cash transfers (recording). More work in this vein to come too...

I also share sympathy with some of the other ones OP flags.

Jack Malde @ 2022-06-24T17:44 (+2)

I'm looking forward to reading these critiques! A few thoughts from me on the person-affecting views critique:

- Most people, myself included, find existence non-comparativism a bit bonkers. This is because most people accept that if you could create someone who you knew with certainty would live a dreadful life, you shouldn't create them, or at least that it would be better if you didn't (all other things equal). So when you say that existence non-comparativism is highly plausible, I'm not so sure that is true...

- Arguing that existence non-comparativism and the person-affecting principle (PAP) are plausible isn't enough to argue for a person-affecting view (PAV), because many people reject PAVs on account of their unpalatable conclusions (which can signal that underlying motivations for PAVs are flawed). My understanding is that the most common objection to PAVs is that they run into the non-identity problem, implying for example that there's nothing wrong with climate change and making our planet a hellscape, because this won't make lives worse for anyone in particular as climate change itself will change the identities of who comes into existence. Most people agree the non-identity problem is just that...a problem, because not caring about climate change seems a bit stupid. This acts against the plausibility of narrow person-affecting views.

- Similarly, if we know people are going to exist in the future, it just seems obvious to most that it would be a good thing, as opposed to a neutral thing, to take measures to improve the future (conditional on the fact that people will exist).

- It has been argued that moral uncertainty over population axiology pushes one towards actions endorsed by a total view even if one's credence in these theories is low. This assumes one uses an expected moral value approach to dealing with moral uncertainty. This would in turn imply that having non-trivial credence in a narrow PAV isn't really a problem for longtermists. So I think you have to do one of the following:

- Argue why this Greaves/Ord paper has flawed reasoning

- Argue that we can have zero or virtually zero credence in total views

- Argue why an expected moral value approach isn't appropriate for dealing with moral uncertainty (this is probably your best shot...)

MichaelStJules @ 2022-06-30T18:29 (+6)

Also, maximizing expected choice-worthiness with intertheoretic comparisons can lead to fanaticism focusing on quantum branching actually increasing the number of distinct moral patients (rather than aggregating over the quantum measure and effectively normalizing), and that can have important consequences. See this discussion and my comment.

Lukas_Gloor @ 2022-06-26T08:14 (+5)

Argue that we can have zero or virtually zero credence in total views

FWIW, I've comprehensively done this in my moral anti-realism sequence. In the post Moral Realism and Moral Uncertainty Are in Tension, I argue that you cannot be morally uncertain and a confident moral realist. Then, in The "Moral Uncertainty" Rabbit Hole, Fully Excavated, I explain how moral uncertainty works if it comes with metaethical uncertainty and I discuss wagers in favor of moral realism and conditions where they work and where they fail. (I posted the latter post on April 1st thinking people would find it a welcome distraction to read something serious next to all the silly posts, but it got hardly any views, sadly.) The post ends with a list of pros and cons for "good vs. bad reasons for deferring to (more) moral reflection." I'll link to that section here because it summarizes under which circumstances you can place zero or virtually zero credence in some view that other sophisticated reasoners consider appealing.

MichaelStJules @ 2022-06-26T10:24 (+2)

On 3, I actually haven't read the paper yet, so should probably do that, but I have a few objections:

- Intertheoretic comparisons seem pretty arbitrary and unjustified. Why should there be any fact of the matter about them? If you choose some values to identify across different theories, you have to rule out alternative choices.

- The kind of argument they use would probably support widespread value lexicality over a continuous total view. Consider lexical threshold total utilitarianism with multiple thresholds. For any such view (including total utilitarianism without lexical thresholds), if you add a(nother) greater threshold past the others and normalize by values closer to 0 than the new threshold, then the new view and things past the threshold will dominate the previous view and things closer to 0, respectively. I think views like maximin/leximin and maximax/leximax would dominate all forms of utilitarianism, including lexical threshold utilitarianism, because they're effectively lexical threshold utilitarianism with lexical thresholds at every welfare level.

- Unbounded utility functions, like risk-neutral expected value maximizing total utilitarianism, are vulnerable to Dutch books and money pumps, and violate the sure-thing principle, due to finite-valued lotteries with infinite or undefined expectations, like St. Petersburg lotteries. See, e.g. Paul Christiano's comment here: https://www.lesswrong.com/posts/gJxHRxnuFudzBFPuu/better-impossibility-result-for-unbounded-utilities?commentId=hrsLNxxhsXGRH9SRx So, if we think it's rationally required to avoid Dutch books or money pumps in principle, or satisfy the sure-thing principle, and finite value but infinite expected value lotteries can't be ruled out with certainty, then risk-neutral EV-maximizing total utilitarianism is ruled out.
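To make the St. Petersburg point concrete, here is a minimal sketch (an illustration added for readers, not part of the original comment). It assumes the standard textbook version of the lottery: a fair coin is flipped until the first heads, and if that happens on flip k the payoff is 2^k. The function name `truncated_expectation` and the cut-off parameter are hypothetical. Every individual payoff is finite, but each possible flip contributes exactly 1 to the expectation, so the truncated expectation grows without bound as the cut-off is raised.

```python
from fractions import Fraction


def truncated_expectation(max_flips: int) -> Fraction:
    """Expected payoff when only the first max_flips outcomes are counted.

    Outcome k (first heads on flip k) has probability 2**-k and pays 2**k,
    so it contributes exactly 1 to the sum. Outcomes beyond max_flips are
    ignored (treated as paying nothing).
    """
    return sum(
        (Fraction(1, 2**k) * 2**k for k in range(1, max_flips + 1)),
        Fraction(0),
    )


# The partial expectation equals the number of outcomes included,
# so it has no finite limit.
for n in (10, 100, 1000):
    print(n, truncated_expectation(n))
```

Exact fractions are used instead of floats so that the arithmetic is not affected by rounding for large k.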

Jack Malde @ 2022-06-26T13:00 (+2)

When it comes to comparisons of values between PAVs and total views I don't really see much of a problem as I'm not sure the comparison is actually inter-theoretic. Both PAVs and total views are additive, consequentialist views in which welfare is what has intrinsic value. It's just the case that some things count under a total view that don't under (many) PAVs i.e. the value of a new life. So accounting for both PAVs and a total view in a moral uncertainty framework doesn't seem too much of a problem to me.

What about genuine inter-theoretic comparisons e.g. between deontology and consequentialism? Here I'm less sure but generally I'm inclined to say there still isn't a big issue. Instead of choosing specific values, we can choose 'categories' of value. Consider a meteor hurtling to earth destined to wipe us all out. Under a total view we might say it would be "astronomically bad" to let the meteor wipe us out. Under a deontological view we might say it is "neutral" as we aren't actually doing anything wrong by letting the meteor wipe us out (if you have a view that invokes an act/omission distinction). So what I'm doing here is assigning categories such as "astronomically bad", "very bad", "bad", "neutral", "good" etc. to acts under different ethical views - which seems easy enough. We can then use these categories in our moral uncertainty reasoning. This doesn't seem that arbitrary to me, although I accept it may still run into issues.

MichaelStJules @ 2022-06-26T17:47 (+5)

PAVs and total views are different theories, so the comparisons are intertheoretic, by definition. Even if they agree on many rankings (in fixed population cases, say), they do so for different reasons. The value being compared is actually of a different kind, as total utilitarian value is non-comparative, but PA value is comparative.

So what I'm doing here is assigning categories such as "astronomically bad", "very bad", "bad", "neutral", "good" etc. to acts under different ethical views - which seems easy enough.

These vague categories might be useful and they do seem kind of intuitive to me, but

- "Astronomically bad" effectively references the size of an affected population and hints at aggregation, so I'm not sure it's a valid category at all for intertheoretic comparisons. Astronomically bad things are also not consistently worse than things that are not astronomically bad under all views, especially lexical views and some deontological views. You can have something which is astronomically bad on leximin (or another lexical view) due to an astronomically large (sub)population made worse off, but which is dominated by effects limited to a small (sub)population in another outcome that's not astronomically bad. Astronomically bad might still be okay to use for person-affecting utilitarianism (PAU) vs total utilitarianism, though.

- "Infinitely bad" (or "infinitely bad of a certain cardinality") could be used to a similar effect, making lexical views dominate over classical utilitarianism (unless you use lexically "amplified" versions of classical utilitarianism, too). Things can break down if we have infinitely many different lexical thresholds, though, since there might not be a common scale to put them on if the thresholds' orders are incompatible, but if we allow pairwise comparisons at least where there are only finitely many thresholds, we'd still have classical utilitarianism dominated by lexical threshold utilitarian views with finitely many lexical thresholds, and when considering them all together, this (I would guess) effectively gives us leximin, anyway.

- These kinds of intuitive vague categories aren't precise enough to fix exactly one normalization for each theory for the purpose of maximizing some kind of expected value over and across theories, and the results will be sensitive to which normalizations are chosen, which will also be basically somewhat arbitrary. If you used precise categories, you'd still have arbitrariness to deal with in assigning to categories on each view.

- Comparisons between theories A and B, theories B and C and theories A and C might not be consistent with each other, unless you find a single common scale for all three theories. This limits what kinds of categories you can use to those that are universally applicable if you want to take expected values across all theories at once. You also still need the categories and the theories to be basically roughly cardinally (ratio scale) interpretable to use expected values across theories with intertheoretic comparisons, but some theories are not cardinally interpretable at all.

- Vague categories like "very bad" that don't reference objective cardinal numbers (even imprecisely) will probably not be scope-sensitive in a way that makes the total view dominate over PAVs. On a PAV according to which death is bad, killing 50% of people would plausibly hit the highest category, or near it. The gaps between the categories won't be clear or even necessarily consistent across theories. So, I think you really need to reference cardinal numbers in these categories if you want the total view to dominate PAVs with this kind of approach.

- Expected values don't even make sense on some theories, those which are not cardinally interpretable, so it's weird to entertain such theories and therefore the possibility that expected value reasoning is wrong, and then force them into an expected value framework anyway. If you entertain the possibility of expected value reasoning being wrong at the normative level, you should probably do so for handling moral uncertainty, too.

- Some comparisons really seem to be pretty arbitrary. Consider weak negative hedonistic total utilitarianism vs classical utilitarianism, where under the weak NU view, pleasure matters 1/X times as much as suffering, or suffering matters X times more than pleasure. There are at least two possible normalizations here: a. suffering matters equally on each view, but pleasure matters X times less on weak NU view than on CU, and b. pleasure matters equally on each view, but suffering matters X times more on the weak NU view relative to pleasure on each view. When X is large enough, the vague intuitive categories probably won't work, and you need some way to resolve this problem. If you include both comparisons, then you're effectively splitting one of the views into two with different cardinal strengths. To me, this undermines intertheoretic comparisons if you have two different views which make exactly the same recommendations and for (basically) the same reasons, but have different cardinal strengths. Where do these differences in cardinal strengths come from? MacAskill, Bykvist and Ord call these "amplifications" of theories in their book, and I think suggest that they will come from some universal absolute scale common across theories (chapter 6, section VII), but they don't explain where this scale actually comes from.

- My understanding is that those who support such intertheoretic comparisons only do so in limited cases anyway and so would want to combine them with another approach where intertheoretic comparisons aren't justified. My impression is also that using intertheoretic comparisons but saying nothing when intertheoretic comparisons aren't justified is the least general/applicable approach of those typically discussed, because it requires ratio-scale comparisons. You can use variance voting with interval-scale comparisons, and you can basically always use moral parliament or "my favourite theory".

Some of the above objections are similar to those in this chapter by MacAskill, Bykvist and Ord, and the book generally.
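The weak NU vs CU normalization problem above can be sketched numerically. This is only an illustration under stated assumptions: the two options, the 50/50 credence, and X = 10 are all made-up numbers, and the function names are hypothetical. Both normalizations of weak NU rank the options identically within that view (they differ only by a factor of X), yet the expected choice-worthiness verdict across the two views flips depending on which normalization is used.

```python
def cu(pleasure: float, suffering: float) -> float:
    """Classical utilitarianism: pleasure and suffering weigh equally."""
    return pleasure - suffering


def weak_nu_a(pleasure: float, suffering: float, x: float) -> float:
    """Normalization (a): suffering counts as on CU, pleasure counts x times less."""
    return pleasure / x - suffering


def weak_nu_b(pleasure: float, suffering: float, x: float) -> float:
    """Normalization (b): pleasure counts as on CU, suffering counts x times more."""
    return pleasure - x * suffering


def expected_choiceworthiness(option, credence_cu, weak_nu, x):
    """Credence-weighted sum of the two theories' values for one option."""
    pleasure, suffering = option
    return (credence_cu * cu(pleasure, suffering)
            + (1 - credence_cu) * weak_nu(pleasure, suffering, x))


def best_option(options, credence_cu, weak_nu, x):
    """Name of the option with the highest expected choice-worthiness."""
    return max(
        options,
        key=lambda name: expected_choiceworthiness(
            options[name], credence_cu, weak_nu, x),
    )


# Hypothetical options, given as (pleasure, suffering) pairs.
OPTIONS = {"act": (100.0, 20.0), "status_quo": (0.0, 0.0)}

choice_a = best_option(OPTIONS, credence_cu=0.5, weak_nu=weak_nu_a, x=10.0)
choice_b = best_option(OPTIONS, credence_cu=0.5, weak_nu=weak_nu_b, x=10.0)
print("normalization (a):", choice_a)
print("normalization (b):", choice_b)
```

With these particular numbers, normalization (a) leaves the CU verdict in control while normalization (b) lets weak NU outweigh it, even though the credences and the underlying views are unchanged.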

Guy Raveh @ 2022-06-24T20:33 (+2)

About the non-identity problem: Arden Koehler wrote a review a while ago about a paper that attempts to solve it (and other problems) for person-affecting views. I don't remember if I read the review to the end, but the idea is interesting.

About the correct way to deal with moral uncertainty: Compare with Richard Ngo's comment on a recent thread, in a very different context.

Aaron Gertler @ 2022-06-20T01:49 (+38)

The fact that everyone in EA finds the work we do interesting and/or fun should be treated with more suspicion.

I know that "everyone" was an intentional exaggeration, but I'd be interested to see the actual baseline statistics on a question like "do you find EA content interesting, independent of its importance?"

Personally, I find "the work EA does" to be, on average... mildly interesting?

In college, even after I found EA, I was much more intellectually drawn to random topics in psychology and philosophy, as well as startup culture. When I read nonfiction books for fun, they are usually about psychology, business, gaming, or anthropology. Same goes for the Twitter feeds and blogs I follow.

From what I've seen, a lot of people in EA have outside interests they enjoy somewhat more than the things they work on (even if the latter takes up much more of their time).

*****

Also, as often happens, I think that "EA culture" here may be describing "the culture of people who spend lots of time on EA Twitter or the Forum", rather than "the culture of people who spend a lot of their time on EA work". Members of the former group seem more likely to find their work interesting and/or fun; the people who feel more like I do probably spend their free time on other interests.

abrahamrowe @ 2022-06-20T11:40 (+13)

I think I agree with everything here, though I don't think the line is exactly people who spend lots of time on EA Twitter (I can think of several people who are pretty deep into EA research and don't use Twitter/aren't avid readers of the Forum). Maybe something like, people whose primary interest is research into EA topics? But it definitely isn't everyone, or the majority of people into EA.

State_Zone @ 2022-06-23T17:41 (+12)

the culture of people who spend lots of time on EA Twitter or the Forum

there's an EA Twitter?

quinn @ 2022-06-20T02:24 (+7)

Yeah I'd be figuring out homotopy type theory and figuring out personal curiosities like pre-agriculture life or life in early cities, maybe also writing games. That's probably 15% of my list of things I'd do if it wasn't for all those pesky suffering lives or that annoying crap about the end of the world.

JulianHazell @ 2022-06-20T10:59 (+35)

EA is neglecting trying to influence non-EA organizations, and this is becoming more detrimental to impact over time.

+1 to this — it's something I've been thinking about quite a bit lately, and I'm happy you mentioned it.

I'm not convinced the EA community will be able to effectively solve the problems we're keen on tackling if we mainly rely on a (relatively) small group of people who are unusually receptive to counterintuitive ideas, especially highly technical problems like AI safety. Rather, we'll need a large coalition of people who can make progress on these sorts of challenges. All else equal, I think we've neglected the value of influencing others, even if these folks might not become highly active EAs who attend conferences or whatever.

Luke Freeman @ 2022-06-20T00:00 (+25)

Thanks for sharing! I'd also love to read some of these critiques more fleshed out! Really appreciate that you posted bullet point summaries instead of either holding off for a more developed critique or just posting a vague list without summaries 😀

Denis Drescher @ 2022-06-20T20:15 (+22)

Here are four more things that I’m somewhat skeptical of and would like someone with more time on their hands and the right brain for the topic to see whether they hold water:

- Evidential cooperation in large worlds is ridiculously underexplored considering that it might “solve ethics” as I like to epitomize it. AI safety is arguably more urgent, but maybe it can even inform that discipline in some ways. I have spent about a quarter of a year thinking about ECL, and have come away with the impression that I can almost ignore my own moral intuitions in favor of what little I think I can infer about the compromise utility function. More research is needed.

- There is a tension between (1) the rather centralized approach that the EA community has traditionally taken and that is still popular, especially outside key organizations like CEA, and the pervasive failures of planned economies historically, and between (2) the much greater success of Hayekian approaches and the coordination that is necessary to avert catastrophic coordination failures that can end our civilization. My cofounders and I have started an EA org to experiment with market mechanisms for the provision of public and common goods, so we are quite desperate for more thinking of how we and EAs in general should resolve those tensions.

- 80k and others have amassed evidence that it's best for hundreds or thousands of people to apply for each EA job, e.g., because the difference between the best and second best candidate is arguably large. I find this counterintuitive. Counterintuitive conclusions are interesting and the ones we’re likely to learn most from, but they are also more often than not wrong. In particular, my intuition is that, as a shallow heuristic, people will do more good if they focus on what is most neglected, all else equal. It seems suspicious that EA jobs should be an exception to this rule. I wonder whether it’s possible to make a case against along the lines of this argument, quantitatively trading off the expected difference between the top and the second best candidate against the risk of pushing someone (the second best candidate 0 to several hops removed) out of the EA community and into AI capabilities research (e.g., because they run out of financial runway), or simply by scrutinizing the studies that 80k’s research is based on.

- I think some EAs are getting moral cooperation wrong. I’ve very often heard about instances of this but I can’t readily cite any. A fictional example is, “We can’t attend this workshop on inclusive workplace culture because it delays our work by one hour, which will cause us to lose out on converting 10^13 galaxies into hedonium because of the expansion of space.” This is, in my opinion, what it is like to get moral cooperation a bit less wrong. Obviously, all real examples will be less exaggerated, more subtle, and more defensible too.

Denis Drescher @ 2022-06-20T20:49 (+3)

A bit of a tangent, but:

Sometimes funders try to play 5d chess with each other to avoid funging each other’s donations, and this results in the charity not getting enough funding.

That seems like it could be a defection in a moral trade, which is likely to burn gains of trade. Often you can just talk to the other funder and split 50:50 or use something awesome like the S-Process.

But I’ve been in the situation where I wanted to make a grant/donation (I was doing ETG), knew of the other donor, but couldn’t communicate with them because they were anonymous to me. Hence I resorted to a bit of proto-ECL: There are two obvious Schelling points, (1) both parties each fill half of the funding gap, or (2) both parties each put half of their pre-update budget into the funding gap. Point 2 is inferior because the other party knows, without even knowing me, that more likely than not my donation budget is much smaller than half the funding gap, and because the concept of the funding gap is subjective and unhelpful anyway. Point 1 should thus be the compromise point of which it is relatively obvious to both parties that it should be obvious to both parties. Hence I donated half my pre-update budget.

There’s probably a lot more game theory that can be done on refining this acausal moral trade strategy, but I think it’s pretty good already, probably better than the status quo without communication.
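As a rough illustration of the two candidate Schelling points described above, the sketch below computes what each donor would give under a "half the gap" rule and a "half the budget" rule. Everything here is an assumption for the sake of the example: the dollar figures, the function names, and the caps (a donor cannot give more than their budget, and nobody gives more than the whole gap) are not from the original comment. It takes no position on which rule is better.

```python
def half_gap_rule(funding_gap: float, budget: float) -> float:
    """Each donor aims to fill half the gap, capped by what they can afford."""
    return min(funding_gap / 2, budget)


def half_budget_rule(funding_gap: float, budget: float) -> float:
    """Each donor gives half their budget, but never more than the whole gap."""
    return min(budget / 2, funding_gap)


# Hypothetical numbers: one small donor, one large donor, one funding gap.
gap = 100_000.0
small_donor, large_donor = 5_000.0, 400_000.0

for rule in (half_gap_rule, half_budget_rule):
    gifts = [rule(gap, budget) for budget in (small_donor, large_donor)]
    # The charity cannot usefully absorb more than the gap itself.
    print(rule.__name__, gifts, "gap filled:", min(sum(gifts), gap))
```

The example mainly shows that the two rules diverge sharply when one donor's budget is much smaller than half the gap, which is the situation the comment describes.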

david_reinstein @ 2022-06-20T10:07 (+20)

This seems very much too strong to me:

Person-affecting views are interesting, but pretty much universally dismissed in the EA community

I consider myself part of the EA community and I do not dismiss PAV... I am very sympathetic to them. When I have presented these others have not been dismissive. They are usually at least mentioned as a potential important part of a balanced breakfast of moral uncertainty.

Some articles in the forum seem to be sticking up for PAV, by Michael St Jules and others:

Here, the author states:

Unfortunately, these views have largely been neglected in population ethics, at least in EA and plausibly in academia as well,[69] while far more attention has been devoted to person-affecting views.

(Love your post by the way)

abrahamrowe @ 2022-06-26T03:15 (+3)

Yeah that's fair - there are definitely people who take them seriously in the community. To clarify, I meant my comment as person-affecting views seem pretty widely dismissed in the EA funding community (though probably the word "universally" is too strong there too).

Ondřej Kubů @ 2022-06-29T10:20 (+15)

+1 for S-risk. I was surprised by the lack of its discussion on 80k podcast.

Lukas_Gloor @ 2022-06-26T07:56 (+15)

Defending person-affecting views

I'm working on a piece on this. (It's only a "critique of longtermism" in the weak sense that I think some longtermist claims are overstated.) If someone is working on something similar or interested in giving feedback, please DM me!

RuHats @ 2022-06-20T19:25 (+11)

I think that these factors might be making it socially harder to be a non-longtermist who engages with the EA community, and that is an important and missing part of the ongoing discussion about EA community norms changing.

This has felt very true for me!

I came across EA way back around 2011 when I was at university, pre-longtermism... EA at that point formalised a lot of my existing thinking/values and I made graduate career decisions in line with 80k advice at the time. I started getting more involved again about a year ago and was surprised to see how things had changed! I've been increasingly engaging over the past year (including starting an EA job), but have often felt a strong sense of disconnection, and have heard similar from colleagues and friends who have followed EA for a while.

How has this impacted my interactions? Well this is actually my first comment on any EA Forum post! As an example, I remember reading a post recently about 80k's updated view on climate change - it was almost entirely focused on whether it was an existential risk. That didn't seem right to me and I almost wrote a comment, but in the end I felt like I was just coming from such a different perspective that it wasn't worth it. I knew I hadn't done much longtermist reading and I felt like I'd get shot down.

Kudos to the EA criticism contest for getting me to engage with this disengagement, look more closely at my gut feeling against long-termism and work through more ideas and reading. I'm hoping I'll find something useful to share as part of the contest - currently thinking it may be along the lines of trying to more eloquently express what I think gets missed when we simplify camps into "neartermism vs longtermism". I feel like "neartermist" EA aligns with some values (fairness? reduction of inequality?) that longtermist EA may not, but also that we can do more to evaluate near-term causes (or even just less obviously evaluable longterm causes) with longterm methods/thinking.

- Still a long way to go on this, but if you think I should look at any particular forum posts or reading in this area, please let me know.

abrahamrowe @ 2022-06-26T03:23 (+4)

I think something you raise here that's really important is that there are probably fairly important tensions to explore between the worlds that having a neartermist view and longtermist view suggest we ought to be trying to build, and that tension seems underexplored in EA. E.g. an inherent tension between progress studies and x-risk reduction.

saulius @ 2022-07-14T15:17 (+10)

Perhaps some of these criticisms might be even more useful if they were framed as opportunities. For example:

- "EA is neglecting trying to influence non-EA organizations" -> "EAs could try to influence non-EA organizations more"

- "Alternative models for distributing funding are [...] under-explored in EA" -> "we should explore alternative models"

I'm not sure if this matters much but I think it puts the focus on what can be done (e.g., what projects EA entrepreneurs could start), rather than on people feeling bad about what they already did and then defending it.

abrahamrowe @ 2022-07-14T15:36 (+4)

Yeah, I agree with this entirely. I think that probably most good critiques should result in a change, so just talking about doing that change seems promising.

Jack Malde @ 2022-06-20T12:05 (+10)

And, if there was a convincing version of a person-affecting view, it probably would change a fair amount of longtermist prioritization.

This is an interesting question in itself that I would love someone to explore in more detail. I don't think it's an obviously true statement. To give a few counterpoints:

- People have justified work on x-risk only thinking about the effects an existential catastrophe would have on people alive today (see here, here and here).

- The EA longtermist movement has a significant focus on AI risks which I think stands up to a person-affecting view, given that it is a significant s-risk.

- Broad longtermist approaches such as investing for the future, global priorities research and movement building seem pretty robust to plausible person-affecting views.

I’d really love to see a strong defense of person-affecting views, or a formulation of a person-affecting view that tries to address critiques made of them.

I'd point out this attempt which was well-explained in a forum post. There is also this which I haven't really engaged with much but seems relevant. My sense is that the philosophical community has been trying to formulate a convincing person-affecting view and has, in the eyes of most EAs, failed. Maybe there is more work to be done though.

MichaelStJules @ 2022-06-20T17:47 (+6)

I think a person-affecting approach like the following is promising, and it and the others you've cited have received little attention in the EA community, perhaps in part because of their technical nature: https://globalprioritiesinstitute.org/teruji-thomas-the-asymmetry-uncertainty-and-the-long-term/

I wrote a short summary here: https://www.lesswrong.com/posts/Btqex9wYZmtPMnq9H/debating-myself-on-whether-extra-lives-lived-are-as-good-as?commentId=yidnhcNqLmSGCsoG9

Human extinction in particular is plausibly good or not very important relative to other things on asymmetric person-affecting views, especially animal-inclusive ones, so I think we would see extinction risk reduction relatively deemphasized. Of course, extinction is also plausibly very bad on these views, but the case for this is weaker without the astronomical waste argument.

AI safety's focus would probably shift significantly, too, and some of it may already be of questionable value on person-affecting views today. I'm not an expert here, though.

Broad longtermist interventions don't seem so robustly positive to me, in case the additional future capacity is used to do things that are in expectation bad or of deeply uncertain value according to person-affecting views, which is plausible if these views have relatively low representation in the future.

Jack Malde @ 2022-06-20T20:22 (+5)

Broad longtermist interventions don't seem so robustly positive to me, in case the additional future capacity is used to do things that are in expectation bad or of deeply uncertain value according to person-affecting views, which is plausible if these views have relatively low representation in the future.

Fair enough. I shouldn't really have said these broad interventions are robust to person-affecting views because that is admittedly very unclear. I do find these broad interventions to be robustly positive overall though as I think we will get closer to the 'correct' population axiology over time.

I'm admittedly unsure if a "correct" axiology even exists, but I do think that continued research can uncover potential objections to different axiologies allowing us to make a more 'informed' decision.

Jack Malde @ 2022-06-20T20:13 (+2)

AI safety's focus would probably shift significantly, too, and some of it may already be of questionable value on person-affecting views today. I'm not an expert here, though.

I've heard the claim that optimal approaches to AI safety may depend on one's ethical views, but I've never really seen a clear explanation how or why. I'd like to see a write-up of this.

Granted I'm not as read up on AI safety as many, but I've always got the impression that the AI safety problem really is "how can we make sure AI is aligned to human interests?", which seems pretty robust to any ethical view. The only argument against this that I can think of is that human interests themselves could be flawed. If humans don't care about say animals or artificial sentience, then it wouldn't be good enough to have AI aligned to human interests - we would also need to expand humanity's moral circle or ensure that those who create AGI have an expanded moral circle.

MichaelStJules @ 2022-06-20T21:55 (+6)

I would recommend CLR's and CRS's writeups for what more s-risk-focused work looks like:

https://longtermrisk.org/research-agenda

https://www.alignmentforum.org/posts/EzoCZjTdWTMgacKGS/clr-s-recent-work-on-multi-agent-systems

https://centerforreducingsuffering.org/open-research-questions/ (especially the section Agential s-risks)

abrahamrowe @ 2022-06-20T14:48 (+2)

Yeah those are fair - I guess it is slightly less clear to me that adopting a person-affecting view would impact intra-longtermist questions (though I suspect it would), but it seems more clear that person-affecting views impact prioritization between longtermist approaches and other approaches.

Some quick things I imagine this could impact on the intra-longtermist side:

- Prioritization between x-risks that cause only human extinction vs extinction of all/most life on earth (e.g. wild animals).

- EV calculations become very different in general, and probably global priorities research / movement building become higher priority than x-risk reduction? But it depends on the x-risk.

Yeah, I'm not actually sure that a really convincing person-affecting view can be articulated. But I'd be excited to see someone with a strong understanding of the literature really try.

I also would be interested in seeing someone compare the tradeoffs on non-person-affecting views vs person-affecting. E.g. person affecting views might entail X weirdness, but maybe X weirdness is better to accept than the repugnant conclusion, etc.

antimonyanthony @ 2022-06-20T20:53 (+4)

I also would be interested in seeing someone compare the tradeoffs on non-person-affecting views vs person-affecting. E.g. person affecting views might entail X weirdness, but maybe X weirdness is better to accept than the repugnant conclusion, etc.

Agreed—while I expect people's intuitions on which is "better" to differ, a comprehensive accounting of which bullets different views have to bite would be a really handy resource. By "comprehensive" I don't mean literally every possible thought experiment, of course, but something that gives a sense of the significant considerations people have thought of. Ideally these would be organized in such a way that it's easy to keep track of which cases that bite different views are relevantly similar, and there isn't double-counting.

Pablo @ 2022-06-20T15:09 (+3)

Prioritization between x-risks that cause only human extinction vs extinction of all/most life on earth (e.g. wild animals).

There are also person-neutral reasons for caring more about the extinction of all terrestrial life vs. human extinction. (Though it would be very surprising if this did much to reconcile person-affecting and person-neutral cause prioritization, since the reasons for caring in each case are so different: direct harms on sentient life, versus decreased probability that intelligent life will eventually re-evolve.)

Ren Springlea @ 2022-06-20T02:26 (+10)

I think in particular not working on insect farming over the last decade may come to be one of the largest regrets of the EAA community in the near future.

This is something that I find myself thinking about a lot. If you could wave a magic wand, what changes would you implement? I'm aware of Rethink's work to incubate the Insect Welfare Project - with that in mind, do you have any recommendations for other EAAs to help out with insect work in the meantime, even if this requires a large commitment (like starting a new org)? (I am aware of your past research on insects and that of other Rethink staff.)

Something to note - the other thing that keeps me up at night is whether the EAA movement is missing out on the impact from animal-inclusive longtermism, which is something else you've argued for and I agree with. I'm currently chatting to some people in that space about possible ways forward.

Peter Wildeford @ 2022-06-20T04:28 (+22)

Right now the thing we are most interested in is finding a strong candidate to work on the Insect Welfare Project full-time: https://careers.rethinkpriorities.org/en/jobs/50511

Donations would also be helpful. This kind of stuff can be harder to find financial support for than other things in EA. https://rethinkpriorities.org/donate

Tyner @ 2022-06-21T14:46 (+1)

>Something to note - the other thing that keeps me up at night is whether the EAA movement is missing out on the impact from animal-inclusive longtermism, which is something else you've argued for and I agree with. I'm currently chatting to some people in that space about possible ways forward.

Ren - I have also reached out to a few folks on this subject. Let's chat and see if there's some opportunity to collaborate here.

calebp @ 2022-06-20T21:56 (+9)

Thanks for writing this post, I think it raises some interesting points and I'd be interested in reading several of these critiques.

(Adding a few thoughts on some of the funding related things, but I encourage critiques of these points if someone wants to write them)

Sometimes funders try to play 5d chess with each other to avoid funging each other’s donations, and this results in the charity not getting enough funding.

I'm not aware of this happening very much, at least between EA Funds, Open Phil and FTX (but it's plausible to me that this does happen occasionally). In general I think that funders have a preference to just try and be transparent with each other and cooperate. I think occasionally this will stop organisations being funded, but I think it's pretty reasonable to not want to fund org x for project y given that they already have money for it from someone or take actions in this direction. I am aware of quite a few projects that have been funded by both Open Phil and FTX - I'm not sure whether this is much evidence against your position or is part of the 5d chess.

Sometimes funders don’t provide much clarity on the amount of time they intend to fund organizations for, which makes it harder to operate the organization long-term or plan for the future. Lots of EA funding mechanisms seem basically based on building relationships with funders, which makes it much harder to start a new organization in the space if you’re an outsider.

This is a thing I've heard a few times from grantees, and I think there is some truth to it, although most funding applications that I see are time-bounded anyway. We tend to just fund for the lifetime of specific projects, or orgs will apply for x years' worth of costs and we provide funding for that with the expectation that they will ask for more if they need it. If there are better structures that you think are easier to implement I'd be interested in hearing them; perhaps you'd prefer funding for a longer period of time conditional on meeting certain goals? I think relationships with funders can be helpful but I think it is relatively rarely the difference between people receiving funding and not receiving it within EA (although this is pretty low confidence). I can think of lots of people that we have decided against funding who have pretty good professional/personal relationships with funders. To be clear, I'm just saying that pre-existing relationships are NOT required to get funding and they do not substantially increase the chances of being funded (in my estimation).

Relatedly, it’s harder to build these relationships without knowing a large EA vocabulary, which seems bad for bringing in new people. These interactions seem addressable through funders basically thinking less about how other funders are acting, and also working on longer time-horizons with grants to organizations.

I think I disagree that the main issue is vocabulary; maybe there are cultural differences? One way in which I could imagine non-EAs struggling to get funding for good projects is if they overinflate their accomplishments or set unrealistic goals, as might be expected when applying to other funders. I'd probably think they had worse judgement than people who are more transparent about their shortcomings and strengths, or worry that they were trying to con me in other parts of the application. This seems reasonable to me though, I probably do want to encourage people to be transparent.

Re funders brain drain

I'm not super convinced by this. I do think grantmaking is impactful and I'm not sure it's particularly high status relative to working at other EA orgs (e.g. I'd be surprised if people were turning down roles at Redwood or Arc to work at OPP because of status - but maybe you have similar concerns about these orgs?). Most grantmakers have pretty small teams so it's plausibly not that big an issue anyway, although I agree that if these people weren't doing grantmaking they'd probably do useful things elsewhere.

abrahamrowe @ 2022-06-21T13:38 (+18)

Thanks for the response!

RE 5d chess - I think I've experienced this a few times at organizations I've worked with (e.g. multiple funders saying, "we think it's likely someone else will fund this, so are not/only partially funding it, though we want the entire thing funded," and then the project ends up not fully funded, and the org has to go back with a new ask/figure things out). This is the sort of interaction I'm thinking of here. It seems costly for organizations and funders. But I've got like an n=2 here, so it might just be chance (though one person at a different organization has messaged me since I posted this and said this point resonated with their experiences). I don't think this is intentional on funders' part!