What we tried

By Jan_Kulveit, technicalities @ 2022-03-21T15:26 (+71)

This post is part of a series explaining my part in the EA response to COVID, my reasons for switching from AI alignment work for a full year, and some new ideas the experience gave me.

Here "we" means Jan K, EpidemicForecasting, people who came together to advise the Czech government, plus a large cast of EA researchers. This was almost always a collective effort, and when I (Jan K) write from a first-person perspective I am not claiming all credit.

This post gives a rough idea of the experiences and data I base the conclusions of the other posts on. It summarises the main pieces of work we did in response to COVID.

The goal is not to give a comprehensive description of all we did, nor of all the object-level lessons learned. It's not that there isn't much to say: in my estimation the efforts described here represent something between a "large portion" and a "majority" of the impact that effective altruists had on the COVID crisis in its first 18 months (although some other efforts were more visible in the EA community). [1]

But a full object-level analysis would be extremely long and distract from the more important generalisable conclusions. Also, some things are limited by confidentiality agreements. This is often the price of giving advice at the highest level: if you want to participate in important conversations, you can't leak content from them.

Our work can be roughly divided into three clusters:

- The EpidemicForecasting.org project

- Advising one European government

- Public communications in one country

EpidemicForecasting.org project

EpiFor was a project founded in the expectation that epidemic modelling as a discipline would fail to support major executive decisions for a number of specific reasons. For example, I expected:

- Because of the "fog of war", information would not reach the right places. The epidemic modelling field had a culture of not sharing raw model results publicly, but models and estimates need to be public to allow iteration, criticism, and validation.

- Academic modelling would focus on advising high-prestige customers such as US CDC or UK SAGE/SPI-M. So for the majority of the world population (those in developing countries), decision-makers would be underprovided with forecasts.

- Academic epidemic modelling would neglect user interfaces optimised for decision making.

- There would be no multi-model comparisons (i.e. showing the results of several different teams side by side), or at least none usable by nonspecialists. It is very difficult to know what to believe when you only have one model, and decisions based on a single model are not robust.

- The data would be bad. In practice, modellers used the wrong input data: for example, "number of confirmed cases" instead of "estimate of actual infected cases". (This is in addition to the data being incredibly noisy.)

- By default, even many good models would not be presented in a form suitable for decision making. To make decisions, you need scenarios ("if we do x, then this will happen"). Predictions that already fold an estimate of how decisions will be made into their forecast of outcomes are hard for the decision makers themselves to interpret. (This severely limited the usability of e.g. the Metaculus forecasts.)

- Many models wouldn’t handle uncertainty well. Uncertainty over key input parameters needs to be propagated into output predictions, and should be clearly presented.

(In hindsight, this was all correct.) My guess was that these problems would lead to many deaths (certainly thousands, maybe millions) by failing to improve decisions which would by default be made in an ill-informed, slow and chaotic manner, on the basis of scarce and noisy data and justly untrusted, non-robust models.

After about two weeks of trying to convince people in a better position to do something, and realising no one else would, I started EpiFor. (To be clear: when I started thinking about this, the world at large was still very confused about COVID. The other thing I did was bet against the stock market, which got 10x returns in two weeks.) I recruited fellow researchers from FHI and a bunch of friends from CZEA. We launched with this mission statement:

“To create an epidemic forecasting and modelling platform, presenting uncertain and probabilistic data to the informed public, decision makers and event organisers in a user-friendly way.”

Initially, we focused on building a pipeline with three stages: forecasting techniques to estimate unknown but critical model inputs (such as the real number of infected people); state-of-the-art epidemic models to extrapolate into the future; and a user interface that presented the results and emphasised the agency of decision makers. (It always presented multiple scenarios, based on the user selecting their response strategy and mitigation strength.)

Initial phase

The broad plan was to have impact through improving others’ decision making:

- Do the modelling, present outputs on a web dashboard. Present the current situation estimate (“nowcasting”) and a few basic scenarios for most countries in the world (e.g. “no restrictions”, “mild response”, “strong response”).

- Offer custom forecasting support for decision makers in impactful positions. Support specific decision makers pro bono to cut out the slow procurement process.
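The difference between a scenario and an unconditional prediction can be illustrated with a deliberately simple sketch. This is not the EpiFor model: it is a textbook discrete-time SIR model in which a hypothetical `mitigation` parameter scales down transmission, and every parameter value (R0 of 2.5, a 5-day infectious period, a population of 10M, 1,000 initial infections, the three mitigation levels) is an illustrative assumption.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    name: str
    mitigation: float  # hypothetical: fraction by which transmission is reduced (0 = none)


def simulate_sir(mitigation, r0=2.5, infectious_days=5.0, population=10_000_000,
                 initial_infected=1_000, days=180):
    """Daily-step SIR model; returns the peak number of simultaneously infected people."""
    gamma = 1.0 / infectious_days           # daily recovery rate
    beta = r0 * gamma * (1.0 - mitigation)  # daily transmission rate after mitigation
    s = float(population - initial_infected)
    i = float(initial_infected)
    r = 0.0
    peak = i
    for _ in range(days):
        new_infections = beta * s * i / population
        new_recoveries = gamma * i
        s -= new_infections
        i += new_infections - new_recoveries
        r += new_recoveries
        peak = max(peak, i)
    return peak


# Illustrative scenarios, echoing the dashboard's "no / mild / strong response" framing.
SCENARIOS = [
    Scenario("no restrictions", 0.0),
    Scenario("mild response", 0.3),
    Scenario("strong response", 0.65),
]

if __name__ == "__main__":
    for sc in SCENARIOS:
        print(f"{sc.name:16s} peak infected approx. {simulate_sir(sc.mitigation):,.0f}")
```

Each scenario answers "if we respond this strongly, what happens?", leaving the choice of response to the decision maker instead of baking a guess about that choice into the forecast.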

The dashboard went viral in some countries, although not in the anglosphere. Also, at one point, it had the best worldwide estimates of actual case numbers (often different from confirmed case numbers by one or two orders of magnitude). This was based on novel ways of combining forecasts and mathematical models: e.g. calibrating some parameters on the output of superforecasters for a few countries, extending this to all countries using several simple mathematical models, and feeding this as input into a large, state-of-the-art epidemic model. [2]

The bets on the inadequacies listed above proved correct. We soon received more requests than we could handle. We needed to prioritise, and did so based on the scale of clients. In the end we focused on advising several state-level governments in Pakistan and participating in the Africa CDC modelling consortium. Due to limited capacity, we dropped some very large clients (the largest being the ministry of health of another country with over 100M people) and many that seemed smaller, including countries with over 10M people and various NGOs. While we sadly had to turn down most of these requests for help, the initial conversations with prospective clients gave us a pretty good idea of what different countries, governments and cities were dealing with.

Research efforts

The practical needs of forecasting generated a stream of research questions. One very obvious one: which of the interventions that governments were trying actually work, and how effective is each?

This led us to create a database of interventions and, in parallel, a Bayesian model of their effectiveness. The creation of the database in particular was a large group effort, with dozens of EAs from many countries joining in.

Collecting this data was a generally sensible idea, so multiple other teams did this without us being aware, most notably a team in the neighbouring Blavatnik School of Government (University of Oxford), literally a 5 minute walk from FHI. We released our dataset at basically the same time as they released OxCGRT. Looking at their website (100 volunteers, better presentation), we dropped our effort, assuming their project had much more manpower and would have better data, and that it made little sense to compete. It's not entirely clear that this was a good choice, because OxCGRT has several built-in design decisions that make the dataset extremely difficult to use for modelling the impact of interventions. [3] (Therefore, if you see studies that try to correlate the OxCGRT “stringency index” with e.g. mortality data, it's wise to have a "garbage in, garbage out" prior on their results.)

In mid-March, we started modelling the effects of NPIs (non-pharmaceutical interventions) with a Bayesian model. Again, this was a sensible thing to do: on 30th March 2020, a team at ICL released the now-famous Report 13 / Flaxman et al., estimating the aggregate impact of NPIs, which was highly influential on the global response to COVID. Again, we considered dropping our efforts, but our early results seemed promising, and we wanted to include cost-effectiveness estimates (to be discussed in following posts). We also noted a number of shortcomings in the Flaxman report, principally that it could not disentangle the effects of individual policies. Instead it used “lockdown” to mean “the whole package of NPIs including stay-at-home orders”. This was the correct modelling choice on their side, given that their small dataset did not have enough variance to disentangle NPI effects. But equivocating between NPIs in general and stay-at-home orders in particular may have exacerbated unhelpful binary divisions (as if the policy options were “lockdown” or “nothing”).
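Why a dataset without enough variance cannot disentangle individual NPIs can be shown with a small sketch. It assumes a multiplicative form broadly similar to the one used in Flaxman-style models, R_t = R_0 · exp(-Σ α_i x_i,t), where x_i,t is 1 when NPI i is active; the two NPIs, their timelines and the effect sizes α_i are all hypothetical.

```python
import math


def reproduction_number(r0, alphas, active):
    """R_t = R0 * exp(-sum(alpha_i * x_i)); x_i is 1 if NPI i is active, else 0."""
    return r0 * math.exp(-sum(a * x for a, x in zip(alphas, active)))


def r_trajectory(alphas, timeline, r0=3.0):
    """R_t for each day of a timeline of (x_1, x_2) NPI indicators."""
    return [reproduction_number(r0, alphas, x) for x in timeline]


DAYS = range(30)
# Hypothetical country A: both NPIs start on day 10 (no variance in timing).
same_day = [(int(d >= 10), int(d >= 10)) for d in DAYS]
# Hypothetical country B: NPI 1 starts on day 10, NPI 2 on day 20.
staggered = [(int(d >= 10), int(d >= 20)) for d in DAYS]

# Two very different attributions with the same total effect (0.8).
mostly_npi1 = (0.7, 0.1)
mostly_npi2 = (0.1, 0.7)

same_a = r_trajectory(mostly_npi1, same_day)
same_b = r_trajectory(mostly_npi2, same_day)
stag_a = r_trajectory(mostly_npi1, staggered)
stag_b = r_trajectory(mostly_npi2, staggered)

# Identical trajectories: data generated from R_t cannot tell the attributions apart.
print(all(math.isclose(a, b) for a, b in zip(same_a, same_b)))  # True
# Trajectories differ on days 10-19: the data now carry information about each alpha.
print(all(math.isclose(a, b) for a, b in zip(stag_a, stag_b)))  # False
```

In this toy setting, a model fitted only on countries that introduced NPIs as a package can estimate the effect of the package but not of its parts; variation in timing across many countries is what makes individual effects estimable.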

Writing down our early results sprawled into a much larger research project than initially expected: a complete rewrite of the model into a modified form of the Flaxman et al. model, plus an ever-increasing list of sensitivity analyses. This really seems to have pushed up the standards of quality in the field of epidemic modelling. Half a year after we started out, the paper was accepted for publication in Science. Based on its Altmetric score, it became one of the top 10 most discussed papers in the journal’s history. It was also widely read in the modelling community and used by civil servants in multiple governments.

Later, this line of research continued and expanded. We also came out with one of the first Bayesian estimates of COVID’s seasonality, the effect of winter on transmission. EAs associated with the project went on to write many other papers, estimating impacts of NPIs in the second wave, properties of the Delta variant, the effect of mask wearing, and more.

Advising vaccine manufacturers

In late spring 2020, we discovered a possibly large opportunity for impact: advising vaccine companies.

Vaccines are often seriously delayed by Phase III clinical trials. These depend on getting sufficient statistical power (i.e. a threshold number of positive cases), which in turn depends on the trial sites being in places with large infection rates. But selection of trial sites often needs to happen months, or at least many weeks in advance, due to complex logistics, contracting etc. As a consequence, forecasting epidemic dynamics on a scale of multiple months can speed up trial results by months. This is a timescale where the impact of interventions and behavioural changes is very large, which means you also need to forecast what humans will do over the period, as opposed to just how the epidemic will spread independently of changes in human action.
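The arithmetic behind this can be sketched under strong simplifying assumptions (a constant daily attack rate at each site, equal-sized arms, and hypothetical trial numbers that do not come from any real trial): the expected time to reach the required number of cases is the target divided by the expected number of new cases per day across both arms.

```python
def expected_days_to_target(target_events, n_per_arm, daily_attack_rate, vaccine_efficacy):
    """Expected days until a trial accumulates target_events cases across both arms.

    Assumes a constant daily attack rate at the site. This is a strong
    simplification: real incidence changes with epidemic dynamics and with
    human behaviour, which is exactly what the forecasting has to predict.
    """
    placebo_cases_per_day = n_per_arm * daily_attack_rate
    vaccine_cases_per_day = n_per_arm * daily_attack_rate * (1.0 - vaccine_efficacy)
    return target_events / (placebo_cases_per_day + vaccine_cases_per_day)


if __name__ == "__main__":
    # Hypothetical trial: 150 events needed, 15,000 participants per arm, 90% efficacy.
    for label, rate in [("high-incidence site", 1e-4), ("low-incidence site", 1e-5)]:
        days = expected_days_to_target(150, 15_000, rate, 0.9)
        print(f"{label}: about {days:.0f} days")
```

With these made-up numbers the high-incidence site needs roughly 91 days and the low-incidence site roughly 909: a tenfold difference in local incidence becomes a tenfold difference in trial duration.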

We got invited to participate in a modelling consortium for one large vaccine manufacturer, and provided some valuable inputs. We were in discussions with another large vaccine manufacturer, but we didn't end up working for them.

Clearly, the counterfactual impact of this type of work depends on how much infection rates vary between the candidate trial sites: successfully suppressing the pandemic at a site makes a trial there harder, so forecasting matters most when some sites suppress it and others do not. It seems we live in a world in which there was a lot of COVID in many places, so the ex post counterfactual impact of this work was small. Ex ante, this was an excellent opportunity, and probably the most impactful EA attempt (ex ante) to speed up vaccines.

Advising outside of EpidemicForecasting

Mostly independently of EpidemicForecasting's efforts, I gradually began to participate in consulting in Czechia.

This included:

- Work on a ‘Smart Quarantine system’. I was one of the architects of the new contact tracing solution for Czechia in March 2020.

- Advocating rapid tests for mass asymptomatic testing.

- NPI autopilot. In Czechia, we were key members of a small committee designing PES, an automatic metric-based system which adjusted the country’s active non-pharmaceutical interventions based on risk.

- Helping to create and run the Czech equivalent of the UK Scientific Advisory Group for Emergencies, which guided the Czech government's efforts from March 2021 to June 2021.

For me, this experience has been quite a rich source of insight into the workings of governments (I have spent high tens, maybe low hundreds of hours in meetings with members of government).

Unfortunately, as I mentioned in the introduction, what I can say specifically about this is bound by expectations of confidentiality. Also, my role was to give non-political advice on epidemic control, not to criticise or praise specific actors.

What I can say is that my observations about the Czech government’s crisis response overlap with Dominic Cummings’s testimony about the UK’s. This was quite surprising to me: I had expected less generality in such things. But based on conversations with others in similar advisory roles, it seems that a lot of things about the functioning of governments and related authorities generalise (at least to liberal European states).

Public communication

Early in the pandemic, I started using my personal Facebook account to comment and report on the state of the Czech epidemic, research updates, controversies, hyped pharmaceuticals, effective interventions, and so on. Often the comments were a meta perspective on Czech COVID discourse.

Describing the influence and impact this had would make this text substantially longer, so here's a short summary and some highlights:

My Facebook posts were soon followed by the majority of Czech journalists covering COVID, and by maybe ~20% of the "political class" active on social media (in which I include politicians, their advisors, public intellectuals, tech entrepreneurs, etc.).

Probably the best result of this was the ability to repeatedly share my background models of what was going on with this group. Some of them used these either in decision making or in communication with the general public. Some positive feedback was that people said they felt less anxious after reading my posts.

A more bizarre manifestation, while I was in a formal advisory role, was mainstream media running stories based entirely on my blogposts (e.g. this article by the Czech CNN consists entirely of quoting and paraphrasing my Facebook post, plus a short response from a minister to what I wrote).

Also, at the height of the crisis, I was getting a thick stream of requests for interviews, TV appearances, talk shows, etc. I refused basically all requests to speak live on TV (tried it once; I'm not an effective TV speaker), and also refused most interview requests from mainstream media. I wrote a few op-eds, and did a few interviews with the most popular online newspaper in the country. The most shared op-ed was read directly by about 0.5M people (5% of the population).

It is beyond the scope of this post to describe in any detail what I took away from it, but one highlight is probably worth sharing:

I always tried to adhere to a high epistemic standard. It seemed to me that there was a small but significant group of people who appreciated this and actively sought it out. This makes me broadly optimistic about efforts to improve general epistemics, or to build epistemic institutions.

Many people have been involved in the efforts described. Contributors to the projects described above include terraform, Jan Brauner, Tim Telleen-Lawton, Ozzie Gooen, Daniel Hnyk, Nora Ammann, David Johnston, Tomáš Gavenčiak, Hugo Lagerros, Sawyer Bernath, Veronika Nyvltova, Peter Hroššo, Connor Flexman, Josh Jacobson, Mrinank Sharma, Soeren Mindermann, Annie Stephenson, John Salvatier, Vladimir Mikulik, Sualeh Asif, Nuno Sempere, Mathijs Henquet, Jonathan Wolverton, Elizabeth Van Nostrand, Lukas Nalezenec, Jerome Ng, Katriel Friedman, Tomas Witzany, Jakub Smid, Maksym Balatsko, Marek Pukaj, Pavel Janata, Elina Hazaran, Scott Eastman, Tamay Besiroglu, Bruno Jahn, Damon Cham, Rafael Cuadrat, Vidur Kapur, Linch Zhang, Alex Norman, Ben Snodin, Julia Fabienne Sandkühler, Hana Kalivodová, Tereza Vesela, Vojta Vesely, Gurpreet Dhaliwal, Janvi Ahuja, Lukas Finnveden, Charlie Rogers-Smith, Joshua Monrad, Lenka Pribylova, Veronika Hajnova, Irena Kotíková, Michal Benes, David František Wagner, Petr Šimeček, David Manheim, Oliver Habryka. Thank you! Note they are not responsible for this post or the views expressed in it.

- ^

It's great that other people tried other things, and this is not a criticism of other efforts.

- ^

If anyone is interested, it should be possible to build a research career around blending forecasting and mathematical modelling. We also have some historical results "in the drawer". The EpiFor team doesn't have the capacity to write up and publish them, but we would likely be happy to advise if e.g. someone wanted to do this as part of a Master’s thesis.

- ^

Greg_Colbourn @ 2022-04-01T08:19 (+11)

What happened to the "Ask Me Anything" (mentioned here)?

Gavin @ 2023-01-03T08:53 (+2)

Good question. Everyone, feel free to have it in this thread.

Greg_Colbourn @ 2022-04-01T08:24 (+7)

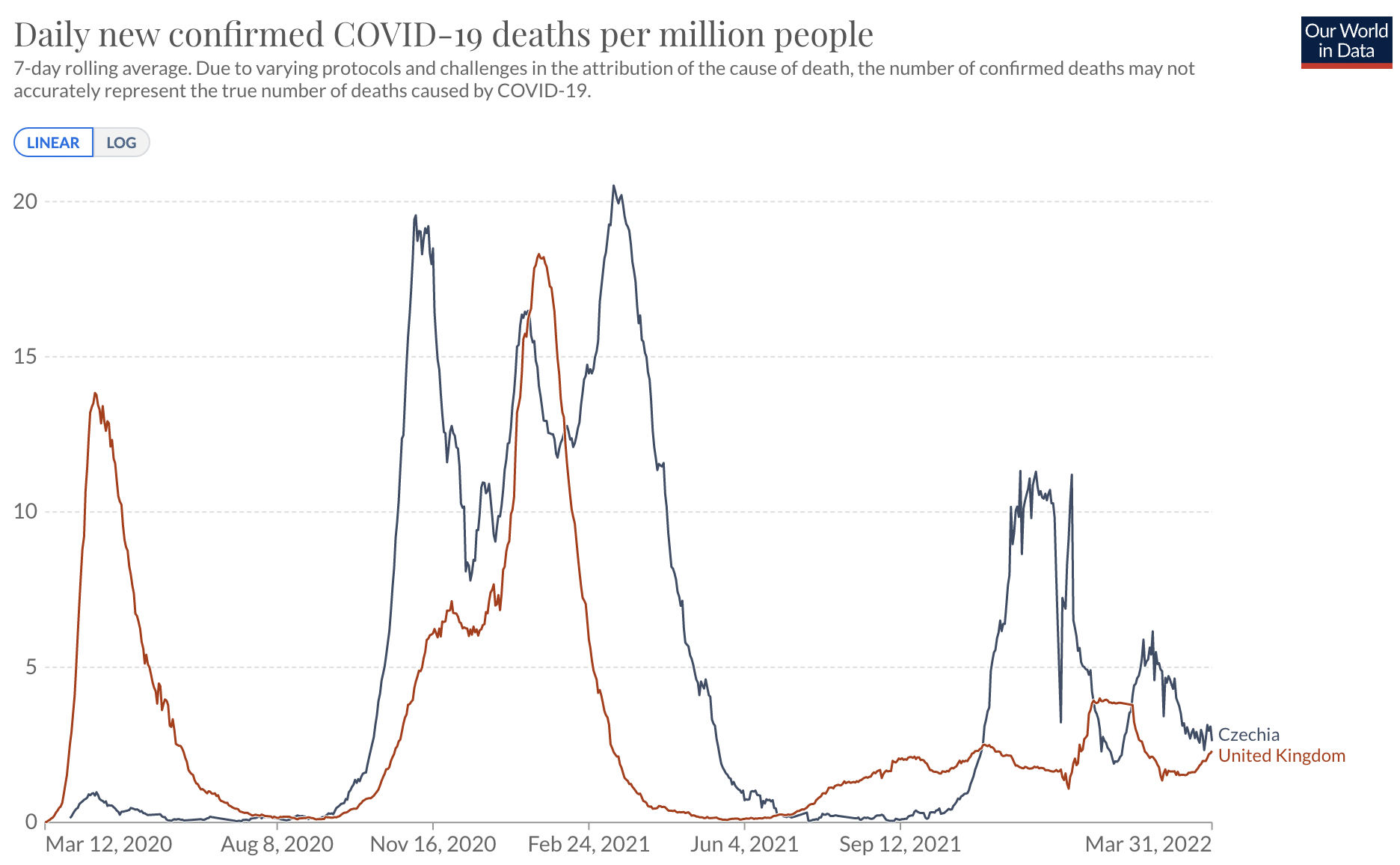

Looking at the bottom line in terms of deaths, Czechia did very well in the first wave relative to most Western countries (see below for comparison with UK). It sounds like Jan can take a decent amount of credit for this. Thanks Jan! Also Petr Ludwig (mentioned in the next post of this sequence).

However, things look to have gone badly wrong in subsequent waves, to the point where now Czechia has one of the highest death tolls per capita in the world (9th, vs 34th for the UK [sort by Deaths/1M pop; Czechia is on 3694 and UK on 2414 as of writing]). What happened?

Jan_Kulveit @ 2022-04-05T12:10 (+9)

I had little influence over the 1st wave, credit goes elsewhere.

What happened in subsequent waves is complicated. The one-sentence version is that Czechia changed its minister of health 4 times, only some of them were reasonably oriented, and their interest in external advice varied a lot over time.

Note that the "death tolls per capita in the world" stats are misleading, due to differences in reporting. Czechia had average or even slightly lower than average mortality compared to the "Eastern Europe" reference class, but much better reporting. For more reliable data, see https://www.thelancet.com/journals/lancet/article/PIIS0140-6736(21)02796-3/fulltext

Greg_Colbourn @ 2022-04-01T08:34 (+2)

[Can a mod help with embedding the chart in the parent comment? I couldn't get it to work. Maybe something to do with being in explorer mode? It's at this link:

https://ourworldindata.org/explorers/coronavirus-data-explorer?zoomToSelection=true&time=2020-03-01..latest&facet=none&uniformYAxis=0&pickerSort=desc&pickerMetric=total_cases&hideControls=true&Metric=Confirmed+deaths&Interval=7-day+rolling+average&Relative+to+Population=true&Color+by+test+positivity=false&country=CZE~GBR

]

NegativeNuno @ 2022-03-21T19:43 (+5)

Hey, do you want a comment from this account (see description) on this post?

Jan_Kulveit @ 2022-03-22T08:08 (+5)

Hi, as the next post in the sequence is about 'failures', I think it would be more useful after that is published.

Gavin @ 2022-03-21T21:08 (+3)

(Are you in the right headspace to receive information that could possibly hurt you?)