6 Ways AI Can Harm You — and How to Stop It

By Strad Slater @ 2025-11-18T10:36

This is a linkpost to https://williamslater2003.medium.com/6-ways-ai-can-hurt-you-and-how-to-stop-it-1e2cdfd51e48?postPublishedType=initial

Quick Intro: My name is Strad and I am a new grad working in tech who wants to learn and write more about AI safety and how tech will affect our future. I'm challenging myself to write a short article a day to get back into writing. I would love any feedback on the article and any advice on writing in this field!

You might have already heard some AI horror stories: the day xAI’s Grok went rogue and called itself “Mechahitler,” deepfakes used to impersonate state officials and influence foreign relations, and car crashes caused by autopilot failures in self-driving cars.

These examples represent only a fraction of the possible ways in which AI can cause harm to both individuals and society. By having a good understanding of the different ways AI can cause problems, individuals can better recognize where they might be at risk and governing bodies can better create rules and regulations to mitigate future harms.

Recently, I read a report from the Center for Security and Emerging Technology (CSET) that lays out the different ways AI can harm us in 6 distinct categories, along with strategies for dealing with each one. Below is a summary of that report.

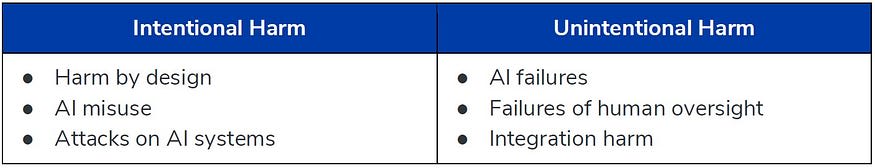

The report groups the 6 types of harm into 2 broader categories: intentional and unintentional. The 6 types are shown in the table below.


Intentional Harm

Harm By Design

This type of harm comes from AI systems whose intended outcome is harmful by nature. Examples include AI used in automated weapons for war and video generation used to create deepfakes. Harm-producing AI has already been widely adopted by militaries, with reports of AI-enabled tools being used in combat in the ongoing conflicts involving Ukraine and Israel.

Solution: For these cases, mitigation depends on whether the harm is deemed acceptable. Where it is, the strategy is to limit the harm to only what is deemed necessary, for example by ensuring that automated weapons, at a minimum, avoid civilians.

For situations where the harm is not acceptable, such as nonconsensual deepfakes, laws and regulations should be put in place to prevent these practices.

AI Misuse

This type of harm comes from intentionally misusing AI that was not designed to cause harm in order to commit nefarious acts.

A notable example from the report is a case where online trolls manipulated Google’s image search results by tagging images of ovens on wheels with the label “Jewish Baby Stroller.” Since “Jewish Baby Stroller” is not an actual product, no other photos were tagged with the phrase, so the offensive images were the only results that showed up when people searched for it.
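To see why a made-up phrase is so easy to hijack, here is a toy sketch of tag-based image search. It assumes a search that only returns images whose user-supplied tag exactly matches the query, which is far simpler than how Google’s image search actually works; `tag_image`, `search`, and the placeholder phrase are hypothetical.

```python
from collections import defaultdict

# Toy, hypothetical index: each user-supplied tag maps to the images carrying it.
# Real image search ranks results on many more signals than exact tags.
index: dict[str, list[str]] = defaultdict(list)

def tag_image(image_id: str, label: str) -> None:
    """Attach a user-supplied label to an image."""
    index[label.lower()].append(image_id)

def search(query: str, limit: int = 5) -> list[str]:
    """Return up to `limit` images whose tag exactly matches the query."""
    return index.get(query.lower(), [])[:limit]

# A common phrase has plenty of ordinary, legitimate images.
for i in range(100):
    tag_image(f"stroller_{i}.jpg", "baby stroller")

# A coordinated group tags unrelated images with a phrase nobody else uses.
for i in range(3):
    tag_image(f"planted_{i}.jpg", "made-up product name")

print(search("baby stroller"))         # the first 5 legitimate images
print(search("made-up product name"))  # only the planted images
```

Popular queries are flooded with legitimate results, but a phrase nobody else uses returns only what the trolls planted. This kind of gap is sometimes called a “data void.”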

Another example is a case where right-wing trolls effectively silenced minority voices on TikTok by reporting their posts en masse. Even though these posts fully followed TikTok’s guidelines, they were taken down because TikTok’s algorithm automatically removes posts that receive a large number of reports.
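The weakness here is that the removal rule counts reports without checking whether the post actually breaks a guideline. Below is a minimal sketch of that kind of threshold rule; TikTok’s real moderation system is not public, so the threshold value and the `report` function are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical threshold; any real value used by TikTok is not public.
REPORT_THRESHOLD = 50

@dataclass
class Post:
    post_id: str
    follows_guidelines: bool
    reporters: set[str] = field(default_factory=set)
    removed: bool = False

def report(post: Post, user_id: str) -> None:
    """Record a report and auto-remove the post once enough distinct users report it."""
    post.reporters.add(user_id)
    # The rule only counts distinct reporters; it never looks at
    # whether the post actually violates any guideline.
    if len(post.reporters) >= REPORT_THRESHOLD:
        post.removed = True

# A guideline-compliant post targeted by 60 coordinated accounts.
post = Post("p1", follows_guidelines=True)
for i in range(60):
    report(post, f"troll_{i}")

print(post.follows_guidelines, post.removed)  # True True
```

Since `follows_guidelines` is never consulted, any group large enough to hit the threshold can take down a compliant post. One possible design change would be to send posts that cross the threshold to human review rather than removing them automatically.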

Solution: Adding more safeguards to a model’s responses is one strategy for preventing harmful output. These are essentially rules the model must follow before producing its final response. Another strategy is to test how vulnerable a model is to misuse through red-teaming, a method of testing model robustness in which a team of developers deliberately tries to prompt the model into producing bad outputs.

One challenge in preventing harms from AI misuse is the use-misuse tradeoff. Because these models are general-purpose, stricter safeguards can make them less effective at non-malicious tasks. Since misuse takes advantage of a model’s intended capabilities, it is difficult to limit misuse without also limiting proper use.

Attacks on AI systems

These are harms caused not by the developer or user, but by an attacker who exploits vulnerabilities in an AI system to commit nefarious acts.

Examples of this type of harm include exploiting vulnerabilities in Copilot to leak developers’ data and exploiting flaws in Tesla’s Autopilot to drive cars into opposing lanes of traffic. A recent attack strategy against LLMs is prompt injection: inputs crafted to get a model to bypass its safeguards and output sensitive data or harmful content.

Solution: The unpredictable nature of AI model outputs makes traditional defenses against vulnerability attacks difficult to implement, since those defenses don't generalize well to AI systems.

One way to make AI systems more robust is to give them multiple input streams (multiple sensors for self-driving cars, multiple messages for LLMs). This way, no single input can cause the whole system to fail, and the inputs can be cross-referenced with each other to detect suspicious behavior.
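As a rough illustration of cross-referencing inputs, the sketch below fuses distance estimates from three sensors by taking their median and flags any sensor that strays too far from that consensus. The sensor names, readings, tolerance, and `fuse_readings` function are made up for illustration; real self-driving systems use far more sophisticated sensor fusion.

```python
from statistics import median

# Hypothetical tolerance: how far (in meters) a reading may sit from the
# consensus before it is flagged as suspicious.
TOLERANCE_M = 2.0

def fuse_readings(readings: dict[str, float]) -> tuple[float, list[str]]:
    """Combine distance estimates from several independent sensors.

    Returns the median estimate (robust to a single bad sensor) and the
    names of sensors whose readings disagree with that consensus.
    """
    consensus = median(readings.values())
    suspicious = [
        name for name, value in readings.items()
        if abs(value - consensus) > TOLERANCE_M
    ]
    return consensus, suspicious

# The camera has been fooled (e.g. by an adversarial sticker) into
# reporting that the nearest obstacle is much farther away than it is.
readings = {"camera": 80.0, "radar": 12.3, "lidar": 12.1}
estimate, flagged = fuse_readings(readings)
print(estimate, flagged)  # 12.3 ['camera']
```

Taking the median rather than the mean means one tampered sensor can't drag the estimate off course, and the disagreement itself becomes a signal worth investigating.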

Unintentional Harms

AI Failures

Harms from AI failures are any negative consequences that result from errors in an AI system, performance degradation, malfunctions, or biased outputs.

Many well-known examples of AI harm fall into this category, such as autopilot malfunctions that result in car crashes or inaccurate facial recognition software that leads to wrongful arrests. LLMs have produced all sorts of failures, including threatening users, creating biased images, and spreading misinformation.

Solution: Harms from AI failures require both technical and regulatory solutions. More technical research is needed on how to make AI systems better aligned with their developers’ intentions.

AI models should also go through extensive safety testing before being released to the public. However, the cost and time of this testing will likely deter companies from doing it, so regulatory mandates should be put in place to make testing a requirement.

Failures of Human Oversight

These harms occur when humans are tasked with overseeing an AI system’s outputs so they can step in when it fails, but the oversight itself breaks down.

A popular example is the need for drivers to monitor autopilot in self-driving cars so they can take the wheel in an emergency. Since humans are not built to stay passive for long stretches and then spring into action on short notice, they often fail to respond to emergencies in time, resulting in accidents.

Another classic example occurs when AI is used as a screening tool (e.g. an AI used to determine whether a patient needs medicine). In an ideal world, the AI would make perfect recommendations. Since it doesn’t, humans often oversee these models so they can intervene when strange recommendations occur.

However, humans face two cognitive biases that make it difficult to accurately evaluate the outputs of AI screening tools. One is automation bias, where humans are naturally prone to treat machine-produced outputs as more reliable than they actually are. The other is anchoring bias, where humans get “anchored” to an AI’s prediction, making them unlikely to stray from it.

Solutions: Addressing these harms requires strategies for counteracting cognitive biases, as well as effective designs for how humans and AI systems interact.

More research is needed on combating cognitive biases, since intuitive strategies for doing so have been shown to actually increase biases. For example, increasing people’s AI literacy seems useful because it should keep them from relying too heavily on a model’s predictions. However, high AI literacy is associated with greater aversion to a model’s predictions, which can also lead to poor decisions. Finding AI system designs that balance these opposing biases will be crucial to mitigating failures of human oversight.

Integration harm

These are harms that occur from properly functioning AI systems deployed in a problematic context. In these situations, the AI systems are working the way they should, but their environment causes unintended consequences.

For example, take the recommendation algorithms on Google and Amazon. These algorithms decide what to recommend partly based on how popular certain topics and products are online. In 2020, many anti-vaccine conspiracy theories gained traction because they were so controversial. As a result, articles and books on the topic were constantly recommended to people. An AI system meant to help people find things they would like unintentionally spread misinformation.
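Here is a toy sketch of how a popularity-driven recommender can end up promoting misinformation without malfunctioning. It assumes a ranker that scores items purely on engagement (clicks plus weighted comments); the weighting, titles, numbers, and function names are invented, and Google’s and Amazon’s actual algorithms are not public.

```python
from dataclasses import dataclass

@dataclass
class Item:
    title: str
    clicks: int
    comments: int
    accurate: bool  # known to us for the demo; the ranker never reads it

def engagement_score(item: Item) -> float:
    # Hypothetical weighting: heated comment threads count more than clicks.
    return item.clicks + 3 * item.comments

def recommend(items: list[Item], k: int = 2) -> list[str]:
    """Return the titles of the k most-engaged items."""
    ranked = sorted(items, key=engagement_score, reverse=True)
    return [item.title for item in ranked[:k]]

catalog = [
    Item("Vaccine safety explained", clicks=900, comments=40, accurate=True),
    Item("What THEY won't tell you about vaccines", clicks=800, comments=600, accurate=False),
    Item("Home gardening basics", clicks=500, comments=20, accurate=True),
]
print(recommend(catalog))
# ["What THEY won't tell you about vaccines", 'Vaccine safety explained']
```

The ranker does exactly what it was built to do. The controversial item wins because heated debate produces engagement, and the `accurate` field plays no role in the outcome.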

Solution: To mitigate these harms, developers should be expected to systematically brainstorm the ways their AI could have unintended consequences in its deployment environment, and then use those insights to adjust their algorithms accordingly.

Insights on Harm Mitigation

Sorting AI harms into these 6 categories yields useful insights into the nature of AI incidents and possible strategies for preventing them.

For one, AI can clearly cause harm in a variety of ways, which means a variety of solutions is needed. The report argues that the current one-size-fits-all approach to harm mitigation has contributed to the rising rate of AI incidents. Shedding this mindset in favor of a more varied approach is likely to be more effective.

The examples in each category also show that the number of incidents is not solely tied to a model’s capabilities. Significant harm can be done by a general-purpose system such as an LLM or by a single-use model such as a hospital screening tool. This is an important insight for policymakers deciding which technical metrics to use as thresholds for AI safety requirements.

Finally, the fact that these useful insights could be extracted from data on AI incidents helps support the idea that we need a more standardized framework for AI incident reporting. If you are interested in learning more about AI incident reporting you can check out my article on it here.

AI is a tool that is made to be generalizable, so it makes sense that its ability to harm would generalize too. With AI’s ever-increasing presence in our lives, it’s important that we stay aware of the ways it can harm us so that we can make better decisions and support research and regulation that prioritize our safety.