Alice Redfern: Moral weights in the developing world — IDinsight’s Beneficiary Preferences Project

By EA Global @ 2020-06-29T14:02 (+26)

This is a linkpost to https://www.youtube.com/watch?v=DBlJMEniJR4&list=PLwp9xeoX5p8M1U_QaFkzvJGC_3YAEpyBv&index=2

Even armed with the best intentions and evidence, donors who consider themselves to be members of the effective altruism (EA) community must make a moral choice about what “doing the most good” actually means — for example, whether it’s better to save one life or lift many people out of poverty. Alice Redfern, a manager at IDinsight, discusses the organization’s “Beneficiary Preferences Project,” which involved gathering data on the moral preferences of individuals who receive aid funding. The project could influence how governments and NGOs allocate hundreds of billions of dollars.

Below is a transcript of Alice’s talk, which we’ve lightly edited for clarity. You can also watch it on YouTube and read it on effectivealtruism.org.

The Talk

Thank you for having me. I'm really excited to be here today and to speak about this study. Buddy [Neil Buddy Shah], who is the CEO of IDinsight, actually presented a year and a half ago at EA Global: San Francisco 2018. He gave an introduction to what we were doing and shared some of the pilot-program results. Since then, we've finished piloting the project and completed a full scale-up of the same study, so today I’ll give the first presentation on the results of what we found, which is very exciting for me.

First, I'm going to try to convince you that we should capture preferences from the recipients of aid, and [explain] why, because that step is typically missed. Then, I'll dive into what we did in this study, hopefully convincing you that it is feasible to capture recipients’ moral preferences.

[From there], we'll get into the results: the really exciting bit. We’ll walk through how these preferences, and the reasoning behind them, differ from typical EA reasoning, and how this might change resource-allocation decisions across the global development sector.

Why capture aid recipients’ moral preferences?

Why are we doing this?

I think we all know that effective altruists rely on value judgments in order to determine how to do the most good. We can evaluate charities, and have made a huge amount of progress [in that regard]. We do a really good job of trying to figure out which charities do the best work.

But that only gives you part of the answer. You can evaluate a charity like AMF [the Against Malaria Foundation] and find out how many lives are saved when you hand out bednets. Or you can evaluate a charity like GiveDirectly and [determine] the impact of giving out cash transfers. But that doesn’t give you a cross-outcome comparison. At some point, someone somewhere has to make a decision about how to allocate resources across different priorities. The trade-offs you face there are at a higher level.

[For example,] if we want to do the most good, should we be saving a child's life from malaria, or should we give a household money? [Transcriber’s note: the amounts of money involved in cash transfer programs have the potential to radically improve life for multiple members of a recipient family.] Should we save a younger individual or an older individual? These are the kinds of trade-offs that an organization like GiveWell has to work through every day, and we know that these value judgments are really difficult [to make] — and that there's a lack of relevant data to actually support them.

Current practice in global development tends to fall into one of three categories:

1. People avoid trade-offs completely by not comparing across outcomes. People quite often decide they’re going to support something in the health sector, and then look for what works best within that sector. But that just passes the buck to someone else, who then has to decide how to allocate across all of the sectors.

2. People rely on their own intuition and reasons for donating. But often, the people who do this are very far away from the recipients of that aid, and don't necessarily know how those people think through trade-offs.

3. People often rely on data from high-income countries. There is data from these countries to inform value judgments. But there isn't data from the low-income countries where these charities are working. So people just extrapolate [from high- to low-income countries] and assume that will provide part of the answer.

What people do much less often — not never, because I know that there's a growing movement of people who are trying to do this — is make trade-offs that are informed by the views and the preferences of the aid recipients.

How we captured aid recipients’ moral preferences

We've been directly partnering with GiveWell since 2017, so for about two years now, to capture preferences that inform GiveWell’s moral weights. And we've been thinking about these two questions for the last two years.

First of all, is it even feasible to capture aid recipients’ preferences on these subjective value judgments? This is not an easy task, as I'm sure you can imagine. How do you even start? How do you go about it?

Let me tell you what we did. First of all, we spent a very long time piloting [the project]. I said that Buddy spoke a year and a half ago; we spent that [entire] time just piloting, trying to figure out what works, what doesn't work, and what would [yield] useful data. And we eventually settled on three main methods. None of these methods alone is perfect; I think it's really important that we have three different methods for capturing this information from different points of view.

The first thing we did was estimate the value of a statistical life. This is the measure most often used in high-income countries to put a dollar value on life. Governments such as those of the U.S. and the U.K. use it to make these really difficult trade-offs.

We also [conducted] two choice experiments, in which we asked people to think about what they want for their community. The first asked people about trade-offs between saving the lives of people of different ages. For example: Would you rather have a program that saves 100 lives of under-five-year-olds, or 500 lives of people over 40? We asked everyone which one they’d prefer and then aggregated [the results] across the whole population to get the relative value of different ages.
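To make the aggregation step concrete, here is a minimal Python sketch of one way such choices could be turned into a relative value of lives at different ages. The talk does not describe IDinsight's actual estimator, so this is an illustration only: the `AgeChoice` record, the helper names, the offer levels, and the response data are all hypothetical. For each offered number of over-40 lives, it computes the share of respondents who still prefer the under-five program and reads off the first level at which that share drops below one half.

```python
from dataclasses import dataclass


@dataclass
class AgeChoice:
    young_saved: int   # under-five lives saved by program A (hypothetical)
    old_saved: int     # over-40 lives saved by program B (hypothetical)
    chose_young: bool  # True if the respondent preferred program A


def share_choosing_young(choices, old_saved):
    """Fraction of respondents preferring the under-five program at one offer level."""
    offered = [c for c in choices if c.old_saved == old_saved]
    return sum(c.chose_young for c in offered) / len(offered)


def implied_relative_value(choices):
    """Crude population-level estimate of how many over-40 lives one under-five
    life is worth: the first offer level (ascending) at which fewer than half of
    respondents still prefer the under-five program, divided by the number of
    under-five lives offered. The median indifference point lies between the
    previous offer level and this one. Returns None if a majority never switches."""
    for old in sorted({c.old_saved for c in choices}):
        if share_choosing_young(choices, old) < 0.5:
            young = next(c.young_saved for c in choices if c.old_saved == old)
            return old / young
    return None


# Hypothetical responses: 10 respondents at each of three offer levels.
data = (
    [AgeChoice(100, 200, i < 8) for i in range(10)]
    + [AgeChoice(100, 500, i < 6) for i in range(10)]
    + [AgeChoice(100, 1000, i < 3) for i in range(10)]
)
print(implied_relative_value(data))  # 10.0
```

Reading the population-level switch point, rather than averaging individual ratios, keeps a few extreme answers from dominating the estimate; a real analysis would more likely fit a choice model.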

Our last choice experiment, which I'll go into more detail on later, did a similar thing, but looked specifically at saving lives and providing cash transfers.

We also interviewed around 2,000 typical aid recipients across Kenya and Ghana over the last eight months or so of 2019. We conducted these quantitative interviews with really low-income households across many communities. And what became increasingly important as we went on was that we also conducted qualitative interviews with individuals and groups of people to understand how they were responding to our questions and processing these difficult trade-offs.

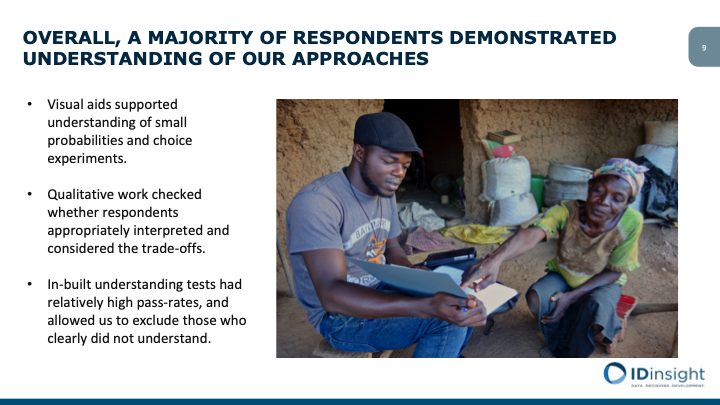

Did it work? That's a big question. My take on it is that overall, a majority of respondents demonstrated a good understanding of our approaches. I say a majority; it definitely wasn't everyone.

These were complicated questions that we asked people to engage with. But there were some things that reassured us of people’s understanding. First of all, we spent a lot of time developing visual aids to guide people through the trade-offs.

This photo is from one of the [quantitative] interviews. You can see our enumerator on the left holding the visual aids and the beneficiary pointing to her choice, which presumably is the program on the left.

We also, as I said, did qualitative work to really understand how people were interpreting our questions — if they were falling into some of the pitfalls that these questions present and misinterpreting either the questions or how to make the trade-offs.

The third and most quantitative thing we did was to build “understanding tests” into every single method. Pass rates were relatively high, ranging from 60% to 80% of our sample. We excluded those who clearly didn't understand, so our estimates come purely from the sample of people who did understand, which again increases our confidence.

Overall, I think [capturing preferences] is feasible. It hasn’t been easy; it's resource-intensive and you really do have to put a lot of effort into making sure the data is high-quality. But I do think we've captured actual preferences from these individuals.

The results

Onto the good bit, which is the results. For the rest of the presentation, I'm going to walk you through these. I'll start with a high-level look at the results, which really is as simple as I can get with them. As you can imagine, there's a lot behind them that I'm then going to try to unpack a bit.

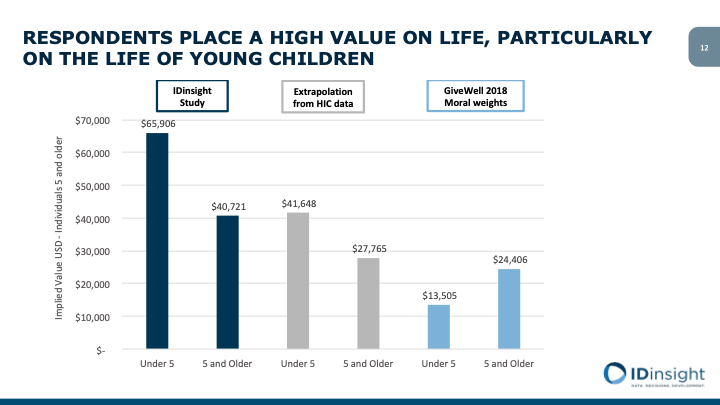

What we found at the highest level is that respondents place a really high value on life, particularly the lives of young children. On the left you'll see our results, expressed as a fairly crude dollar value per death averted, for individuals under five and for individuals aged five or older. In the middle you can see what this value “should” be for this population when predicted from high-income data; our results are quite a bit higher than that prediction.

If you look on the right, [you see these values in terms of] the median of GiveWell's 2018 moral weights. We use this as our benchmark for trying to figure out where we are and what the potential impact is for GiveWell. You'll see here that our results are substantially higher than [GiveWell’s] results from [2018].

[I’d like to] stress that these are GiveWell’s 2018 moral weights; they're currently updating them with our results in mind. We use this as a benchmark, but it doesn't necessarily represent where they are now.

But what we found was that our results are nearly five times as high as the GiveWell median moral weights for individuals under five years old. This is driven by two things. First, people place a higher value on life overall than predicted. Second, people consistently value individuals under five more highly than the other age groups we asked about.

As you saw on the previous slides, many GiveWell staff members’ rankings of age groups are flipped compared to [ours]. We see a big difference in the value of people under five, and we also see a difference for people five and older, where our value is about 1.7 times higher.

So what does this mean?

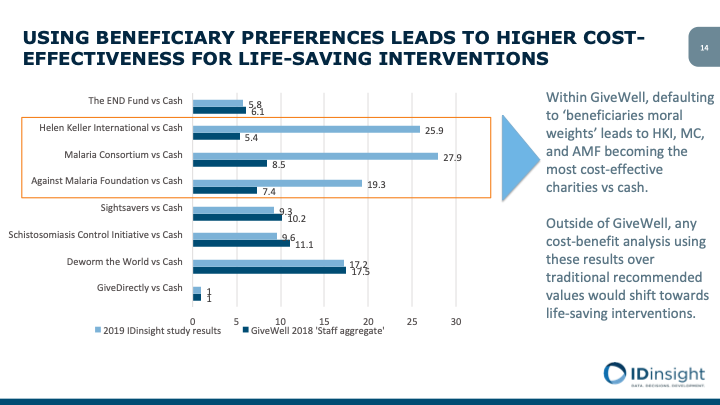

To understand the impact of this, we just took these results and put them directly into GiveWell's cost-effectiveness model. We asked, “What does that do to the cost-effectiveness of the different charities?” As you might imagine, because we saw a higher value placed on saving individuals under five, the cost-effectiveness of Helen Keller International, the Malaria Consortium, and the Against Malaria Foundation increases. The same would be true outside of GiveWell. Any cost-effectiveness or cost-benefit study that's making this trade-off and using these results would shift toward life-saving interventions.

[However,] there's a whole host of reasons why this might not be the [best approach]. It might not be perfect to just default to beneficiary preferences; you might not want to just take [those] at face value. I'll walk you through some of that [thinking] now.

What I’ve [shared] so far is the simple aggregated result, and to arrive at that, we take our three methods, come up with an average, and then compare it to GiveWell’s average. But once you really get into the data, you see that there's a lot more going on.

[Let’s look at] the details of just one of our methods: the last choice experiment I mentioned, which gets at the relative value of money and life. This [slide shows] one of the visual aids that we used.

The text is in Swahili, from our Kenya study. We asked respondents to choose between two programs. Program A, shown on the left, saves lives and gives cash transfers, but it gives substantially more cash transfers. Program B, shown on the right, saves one more life [than Program A], but it gives substantially fewer cash transfers.

We randomly changed the number of cash transfers so that people had three random choices within a range, and we looked for people switching [their choice]. If people didn't switch, we then pushed them to the extreme and said, “Okay, if you prefer cash, what if the difference is now only one cash transfer and one life? Which one would you pick?” On the flip side, if they always picked life, we pushed the number of cash transfers really high — to 1,000, to 10,000 — and asked, “What would you do then? Will you eventually switch?” We were really looking for people who have very absolute views rather than just high valuations.
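The switching procedure can be sketched as bracketing each respondent's switch point from their answers. This is a minimal illustration, not IDinsight's code: it assumes answers are recorded as hypothetical `(transfer_gap, chose_life)` pairs, where the gap is how many more cash transfers Program A offers than Program B. The offer levels and function names below are invented for the example.

```python
import math
from typing import NamedTuple


class Bracket(NamedTuple):
    lower: float  # largest gap at which the life-saving program was still chosen (0 if none)
    upper: float  # smallest gap at which the cash program was chosen (inf if never)


def switch_bracket(answers):
    """Bracket a respondent's switch point from (transfer_gap, chose_life) pairs.

    Assumes the extreme follow-up offers were asked whenever the randomized
    offers produced no switch, as described in the talk.
    """
    life_gaps = [gap for gap, chose_life in answers if chose_life]
    cash_gaps = [gap for gap, chose_life in answers if not chose_life]
    lower = max(life_gaps, default=0)
    upper = min(cash_gaps, default=math.inf)
    if lower >= upper:
        # e.g. chose cash at a gap of 20 but life at a gap of 50
        raise ValueError("inconsistent answers: life chosen at a gap where cash was already preferred")
    return Bracket(lower, upper)


def classify(bracket):
    """Label a respondent as 'always life', 'always cash', or 'switcher'."""
    if math.isinf(bracket.upper):
        return "always life"
    if bracket.lower == 0:
        return "always cash"
    return "switcher"


# Hypothetical respondents: three randomized offers, plus follow-ups where needed.
switcher = switch_bracket([(20, True), (50, False), (80, False)])
never = switch_bracket([(20, True), (50, True), (80, True), (1_000, True), (10_000, True)])
print(switcher, classify(switcher))  # Bracket(lower=20, upper=50) switcher
print(classify(never))               # always life
```

Flagging inconsistent answers, rather than silently resolving them, mirrors the role the understanding tests play in screening out respondents who did not follow the question.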

We found that high values [of a life] are driven by a large chunk of respondents who always choose life-saving interventions. Thirty-eight percent of our respondents still picked the program that saves one extra life, even when the alternative was 10,000 cash transfers of $1,000 each. That would imply a value of over $10 million for a single life, which is far above what's predicted for this population. If we were to fully incorporate this preference (and I say that because it isn't given full weight in our simple average), the value of life-saving interventions would be much higher.
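The dollar figure follows from simple arithmetic. Assuming each cash transfer is worth its face value $c$ to respondents and that value scales linearly with the number of transfers (both simplifications, not claims from the study), a choice between the two programs bounds the implied value of one life, $V_{\text{life}}$, by the gap $\Delta N$ in the number of transfers (ignoring ties):

```latex
\begin{aligned}
\text{prefers life at gap } \Delta N
  &\;\Longrightarrow\; V_{\text{life}} > \Delta N \cdot c, \\
\text{prefers life at } \Delta N_{\text{lo}}, \text{ cash at } \Delta N_{\text{hi}}
  &\;\Longrightarrow\; \Delta N_{\text{lo}}\, c < V_{\text{life}} < \Delta N_{\text{hi}}\, c, \\
\Delta N = 10{,}000,\; c = \$1{,}000
  &\;\Longrightarrow\; V_{\text{life}} > \$10{,}000{,}000 .
\end{aligned}
```

For respondents who never switch, only the lower bound is ever observed, which is why their values are effectively unbounded.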

Our question then was: What does that mean? What's happening with these respondents? Why are they expressing this view? Are they not really engaging with the question? Maybe they have a completely different way of thinking about it.

What we found was that for many of our respondents, this does represent a clear moral stance. We figured this out through our qualitative work. I've put up one quote here as an example of how clear people are on their views. This respondent in Migori County, Kenya said, “If there is one sick child in Migori County that needs treatment, it's better to give all the money to save the child, than give everyone in the country cash transfers.”

I can hear all of the EAs in the room panicking at a statement like that. It's very different from the typical utilitarian way of thinking about this trade-off, and we tried to understand the main reasons that people were choosing this.

Two big things came out. The first was that people place a really high value on even a very small [amount of] potential for a child to become someone significant in the future. We heard again and again, “We don't know which child might succeed and help this entire community. This one child might become the leader. They might become the economic force of the future.” They really place a high value on that small potential and want to preserve it; they [believe] that a young child deserves [that potential more than] people who might receive an amount of cash, which will have a smaller impact across more people.

The second thing we saw was people holding the view that life has inherent value that just cannot be compared to cash. This is almost the opposite of the utilitarian view. This is starting from a deontological point of view whereby “you just can't make this trade-off.” It starts from the moral standpoint that life is more important. A lot of people express this view. Sometimes it's grounded in religion — you do hear people quoting their religion and [discussing] the sanctity of life — and other times it's separate from religion and just a moral standpoint.

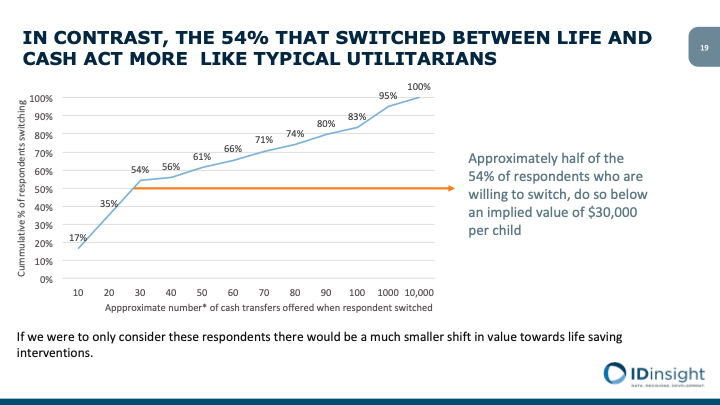

Then [the results] get interesting again. Thirty-eight percent of our respondents choose life no matter what. But what happens if we look at the 54% who switch between life and cash at some point?

If you look just at this group, you see that they act more like typical utilitarians. Approximately half of them were willing to switch at fewer than 30 cash transfers, which comes out as an implied value of about $30,000. If you remember the values from the earlier slides, that's very similar to the values already in GiveWell’s moral weights (and already seen in the literature). If we were to focus only on the people who take this more utilitarian view, the answer is actually reasonably similar to what we already thought.
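Assuming each transfer is worth its $1,000 face value, the roughly $30,000 figure is essentially the median switch point among switchers multiplied by $1,000. A minimal sketch; the switch points below are made up for illustration and are not the study's data:

```python
from statistics import median

TRANSFER_USD = 1_000  # size of one cash transfer, as described in the talk


def median_implied_value(switch_points, transfer_usd=TRANSFER_USD):
    """Median implied value of one life (USD) among respondents who switch.

    switch_points holds, for each switcher, the transfer gap at which they
    first prefer the cash program. Never-switchers are excluded by design.
    """
    return median(switch_points) * transfer_usd


# Hypothetical switch points for five switchers (illustrative only).
print(median_implied_value([10, 20, 30, 50, 200]))  # 30000
```

Using the median rather than the mean means a few switchers with very high switch points barely move the estimate, which is one reason this subgroup's value lands close to existing benchmarks.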

I think this leaves us with a very interesting question of what to do with the one-third of respondents who apply a completely different framework to this situation. An easy answer might be: “These are people who are engaging with the trade-off [those whose views align more with EAs], so maybe we should look at their value and put more weight on that.” But does that mean we're going to ignore the preferences of the one-third of respondents who really do think in a different way from the typical utilitarian framework?

I won't linger on this, but we also looked at the [qualitative data] on the people who do switch, and the reasons they give [for doing it] are very similar to those that we probably all give when we try and think through these trade-offs — and very similar to the types of reasons that our staff members give when they're trying to come up with their moral weights.

To sum up, what have we found?

On average, we found that aid recipients do place more value on life than predicted, particularly the lives of young children. What we also know is that these results can be incorporated into resource-allocation decisions straightaway. GiveWell is already working on updating their moral weights using this data. We've already been talking to other organizations that might be interested in using [our findings] as well.

The answer might be that more focus should be placed on life-saving interventions. But — and this is a very big “but” — the framework used by respondents to answer these questions is different from the typical framework that's used. I think a lot more thought is needed to really understand what that means and how to interpret these results and apply them to difficult decisions. I don't think anyone is going to take [the findings] so [literally] that they take a number and straightforwardly apply it to a decision. We need to work through all of the different ways of thinking about this [in order] to understand how best to apply [our research].

So what's next? I've just scratched the surface of the study here. We did a lot of other things. But from here, we also would love to apply a similar approach to capture preferences for more populations. I think what we've shown is that these preferences are different from what was expected, and there were substantial regional and country-level variations. The preferences weren’t uniform.

I think there's a lot of value in continuing to capture these preferences and formulate our thinking about how to incorporate the views of the recipients of charity. There also are a lot of related questions that we haven't even touched on. We focused on some really high-level trade-offs between cash and life, but there are a large number of trade-offs that decision makers have to make. We could potentially [explore those] with a similar approach.

Then, as I've mentioned, there's also a lot of work to do now to understand how best to apply these results to real-world decision-making. Any study using a cost-benefit [analysis] of interventions in low- and middle-income countries could immediately implement these results, and we'd like to work with different people to figure out how they can do that, and what the best approach is for them.

I think there's also an opportunity here for nonprofits to try to incorporate preferences into their decision-making. Again, we would love to help figure out what that could look like. And at an even higher level, we would love to see foundations and philanthropists think more about how they can incorporate preferences into their own personal resource allocation. So that's something we're thinking about right now.

Great — that [covers] everything. Thank you.

Nathan Labenz [Moderator]: Thank you very much, Alice. Great talk. We have questions beginning to come in through the Whova app.

The first one is really interesting because it contradicted my intuition on this, which was that [your research is] just brilliant. It seems super-smart and obvious in retrospect, as Toby [Ord] said in his [EA Global talk], to just ask people what they think.

But one questioner is challenging that assumption by asking, “How much do we want to trust the moral framework of the recipients? For example, if you were to ask folks in different places how they weigh, relatively speaking, the moral importance of women versus men, you might get some results that you would dismiss out of hand. How do you think about that at a high philosophical level?”

Alice: Yeah, this is really tricky. I think that essentially there has to be a limit to how far you can go with these results.

Another example that I think about often [involves] income. When you do a lot of these studies, you find, again and again, that the results are very much tied to income. And there's this really dangerous step beyond that in which you might say, “If someone has a higher income, their life is more highly valued.” Should we be prioritizing saving the lives of richer people? I don't think anyone is particularly comfortable with that.

I don't know where [you draw the] line, but I think there's a process here for figuring out what the application of these results is and how far we can go with it. It definitely can't be just defaulting to [the results], because I think that leads us to some dangerous places, as you pointed out.

Nathan: Yeah. I think you've covered this, but I didn't quite parse it. How did you end up weighting the views of the one-third of people who never switched [their opinion on the value of life] into those final numbers that you showed at the beginning?

Alice: Sure. I think I said that with the choice experiment [we ran], everyone made three choices that were randomly assigned. Then we looked to the extremes to identify the non-switchers.

To estimate [a value of life], we take the value of those three random choices. Essentially, that caps how high anyone's estimate can go: it sits at the limit of those random choices, not above them. That gives us a good estimation model and lets us come up with a single value, but it does understate how high some people's values are.

Like I said, when we incorporate it fully, the value comes out way higher — like 50 times higher — because their value is near infinite. It definitely changes the results, but I think you have to do that to have a number that you can then process and work with.

Nathan: I'm recalling that graph where you had 10 or 20 through 100, and then 1,000 or 10,000. One hundred would be the highest that anyone could be weighted even if they never switched.

Alice: Yeah, exactly. If it’s higher than 100, it gets some weight. It can go above 100 but it's capped at that point.
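One simple reading of this capping is top-coding: any respondent whose switch point lies above the randomized range, including never-switchers, is assigned the top of that range. The sketch below assumes a $1,000 transfer, a randomized maximum of 100 transfers (the moderator's recollection), and a hypothetical six-person sample. It is not IDinsight's estimation model, and its output ratio is illustrative only; it is not meant to reproduce the roughly 50-fold difference Alice mentions.

```python
from statistics import mean

TRANSFER_USD = 1_000    # size of one cash transfer, as described in the talk
RANDOM_MAX = 100        # assumed top of the randomized range (moderator's recollection)
FOLLOW_UP_MAX = 10_000  # largest follow-up gap mentioned in the talk


def implied_value(switch_gap, cap):
    """Implied value of one life in USD, top-coded at `cap` transfers.

    switch_gap is the transfer gap at which the respondent first prefers cash,
    or None if they never switch.
    """
    gap = cap if switch_gap is None else min(switch_gap, cap)
    return gap * TRANSFER_USD


# Hypothetical sample: four switchers and two never-switchers.
sample = [10, 20, 30, 50, None, None]

capped = mean(implied_value(g, RANDOM_MAX) for g in sample)
# Treating never-switchers as worth at least the largest follow-up offer
# (still only a lower bound, since their values are effectively unbounded).
uncapped = mean(implied_value(g, FOLLOW_UP_MAX) for g in sample)

print(round(capped), round(uncapped), round(uncapped / capped))
```

The design trade-off is the one Alice describes: top-coding yields a finite, workable average, at the cost of understating the values of respondents who never switch.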

Nathan: Okay, cool. Tell me about some of the other approaches that you tried. I think some of the most interesting aspects of this work are actually figuring out how to ask these questions in a way that produces meaningful results, even if they're not perfect. It sounds like there are a dozen other approaches that were tried and ultimately didn't work. Tell us a bit about that.

Alice: Sure. Yeah. It was a real process to figure out what works and which different biases changed the results in specific directions. Even this choice experiment has been through many iterations. At one point we had a very direct framing of “would you save a life, or give this many cash transfers?”

But when it's very direct — and I think this has been shown in behavioral economics elsewhere — people are inclined to never switch [their answers based on how many cash transfers are proposed] and always choose life. Whereas as soon as you make it indirect, people are willing to engage with the trade-off, and you actually get at their preferences. So it required a lot of framing edits.

We also tried some participatory budgeting exercises, which [some researchers have] tried out and used in the area [of global development]. Again, it's tricky. There's a lot of motivation to allocate resources very evenly when you're in a group setting. Also, the results are less directly applicable to the problem at hand.

I think those are two big things we tried out. We tried a lot of different “willingness to pay” [questions] that we ended up ruling out as well.

Nathan: Here’s another interesting [question from the audience] about things that maybe weren't even tried. All of these frameworks were defined by you, right? As the experimenter?

Alice: Yeah.

Nathan: Did the team try to do anything where you invited the recipients to present their own framework from scratch?

Alice: Oh, that's interesting. In a way we did. Like I said, we did a lot of individual qualitative interviews, and those were not grounded in the three methods that I presented here. They were completely separate. A big part of those interviews was just presenting recipients with the GiveWell problem and asking, “How do you work through this?”, and then getting people to walk us through [their thinking].

With some people it's very effective. Some people are very willing to engage. But as you can imagine, some people are taken aback. It’s a complicated question. We tried it in that sense.

For us, simplifying [our questions] down to a single choice [in the other research methods] is what helped make the methods work. But qualitatively, you can go into that detail.

Nathan: Awesome. Well, this is fascinating work and there's obviously a lot more work to be done in this area. Unfortunately, that's all the time we have at the moment for Q&A. So please, another round of applause for Alice Redfern. Great job. Thank you for joining us.