Open Thread: January — March 2023

By Lizka @ 2023-01-09T11:13 (+20)

Welcome!

If you're new to the EA Forum:

- Consider using this thread to introduce yourself!

- You could talk about how you found effective altruism, what causes you work on and care about, or personal details that aren't EA-related at all.

- (You can also put this info into your Forum bio.)

Everyone:

- If you have something to share that doesn't feel like a full post, add it here! (You can also create a Shortform post.)

- You might also share good news, big or small (See this post for ideas.)

- You can also ask questions about anything that confuses you (and you can answer them, or discuss the answers).

For inspiration, you can see the last open thread here.

Fergus Fettes @ 2023-02-12T18:19 (+17)

Hello all,

long time lurker here. I was doing a bunch of reading today about polygenic screening, and one of the papers was so good that I had to share it, in case anyone interested in animal welfare was unfamiliar with it. The post is awaiting moderation but will presumably be here in due time.

So while I am making my first post I might as well introduce myself.

I have been sort of vaguely EA aligned since I discovered the movement 5ish years ago, listened to every episode of the 80k podcast and read a tonne of related books and blog posts.

I have a background in biophysics, though I am currently working as a software engineer in a scrappy startup to improve my programming skills. I have vague plans to return to research and do a PhD at some point, but let's see.

EA things I am interested in:

- bio bio bio (everything from biorisk and pandemics to the existential risk posed by radical transhumanism)

- AI (that one came out of nowhere! I mean I used to like reading Yudkowsky's stuff thinking it was sci-fi, but here we are. AGI timelines shrinking like spinach in a frying pan, hoo-boy)

- global development (have lived, worked and travelled extensively in third world countries. lots of human capital out there being wasted)

- animal welfare! or I was until I gave up on the topic in despair (see my essay above) though I am still a vegan-aligned vegetarian

- philosophy?

- economics?

- I mean, it's all good stuff basically

Recently I have also been reading some interesting criticisms of EA that have expanded my horizons a little. The ones I enjoyed the most were:

- The Nietzschean Challenge to Effective Altruism (Effective Aesthetics (EÆ) sounds awesome)

- Against Effective Altruism (the guy is just a damn fine writer and thinker, though he doesn't seem to propose any alternative which I find annoying)

- Effective Accelerationism (this appeals to my physics background and the parsimony of panpsychism and other such things)

But at the end of the day I think EA's own personal brand of minimally deontic utilitarianism is simple and useful enough for most circumstances. Maybe a little bit of Nietzschean spice when I am feeling in the mood... and frankly I think fundamentally e/acc is mostly quite compatible, aside from the details of the coming AI apocalypse and [how|whether] to deal with it.

I also felt a little bit like coming out of the woodwork recently after all the twitter drama and cancellation shitstorms. Just to say that I think you folks are doing a fine thing actually, and hopefully the assassins will move on to the next campaign before too long.

Best regards! I will perhaps be slightly more engaged henceforth.

vlad.george.ardelean @ 2023-01-09T21:24 (+13)

Hi all, I'm Vlad, 35, from Romania. I've been working in software engineering for 12 years. I have a bachelor's and master's degree in Physics.

I'm here because I read "What we owe the future", after it was recommended to me by a friend.

I got the book recommended to me because I had an idea which is a little uncomfortable for some people, but I think this idea is extremely important, and this friend of mine instantly classified my thoughts as "a branch of long-termism". I also think my idea is extremely relevant to this group, and I'm interested in getting feedback about it.

Context for the idea: Long-termism is concerned about people as far into the future as possible, up to the end of the universe.

The idea: ...what if we can make it so there doesn't have to be an end? If we had a limitless source of energy, there wouldn't have to be an end. Not only that, but we could make a lot of people very happy (like billions of billions of billions .....of billions of them? a literal infinity of them even)

It sounds crazy, I realize, but my best knowledge on this topic says this:

- We know that we don't know all the laws of the universe

- Even the known laws kind of have a loop-hole in them. Energy is supposed to be conserved, but we don't necessarily know how much energy exists out there - if an infinite amount exists, we can both use it, and conserve it

- I received feedback from a few physicists already, none of them said that infinite energy is clearly impossible - just that we don't know how we could get it

So my conclusion is: some amount of effort into the topic of infinite energy should be invested.

Is anyone interested in talking about this? I can show you what I have so far.

P.S. fusion is not a source of infinite energy, but merely a source of energy potentially far better than most others we know

P.P.S. I created this website for the initiative: https://github.com/vladiibine/infinite-energy

Erin @ 2023-01-11T16:41 (+14)

Hi Vlad,

You're getting a lot of disagree votes. I wanted to explain why (from my perspective), this is probably not a useful way to spend your time.

Longtermists typically propose working on problems that impact the long-run future and can't be solved in the future. X-risk is a great example: if we don't solve it now, there will be no future people to solve it. Another example is historical records preservation, which is likewise easy to do now but could be impossible to do in the future.

This seems like a problem that future people would be in a much better position to solve than we are.

Obviously there's nothing wrong with pursuing an idea simply because you find it interesting. A good starting place for you might be Isaac Arthur on Youtube. He has a series called Civilizations at the End of Time which is related to what you are thinking about.

vlad.george.ardelean @ 2023-01-14T15:53 (+1)

Hi Erin,

Thanks for your explanations of what likely is the issue regarding disagreement here. I appreciate it that you spent some time to shed light here, because feedback is important to me.

I knew about Isaac Arthur, I'm trying to reach out to him and his community as we speak.

I'll try to add some clarifications, hoping to address the concerns of the people who seemed to disagree with my idea.

I find it quite surprising that people concerned with the long-term welfare of humanity seem to be against my idea.

If there are genuine arguments against my position, I'd be totally open to hearing them - maybe indeed there's something wrong with my idea.

However I can't find a way to get rid of these points (I think this is philosophy)

- Sure, investing more than 0 effort into this initiative, takes away from other efforts

- The faster we reach this goal, the faster we can make tremendous improvements in peoples' lives

- If we delay this for long enough, society might not be in such a state as to afford doing this kind of research (society might also be in a better position, but I'm more concerned about

Regarding viability:

- I don't know how much effort must be invested into this initiative, in order to achieve its goals

- I don't know if this is possible (Though through my own expertise, and the expertise of 11 physicists out of which at least 4 are physics professors, this goal does not seem impossible to reach)

Framing in "What we owe the future" terms:

- Contingency: I'd give it 3/5 because

- 1 would be something obvious to everyone

- 2 would be obvious to experts

- 3 would be obvious to experts, but there would be cultural forces against it. William MacAskill talks about "cultural lock-in". I think science is in such kind of a situation today. You might have heard of issues such as "publish or perish" ( https://en.wikipedia.org/wiki/Publish_or_perish ). There's also the taboo created because of similarities with "perpetual motion machines".

- Persistence: 5/5. It's realistic we could lose access to this, but if we don't, then this is conceivably the most persistent thing possible (comparable to the death of all sentient beings, this is the other extreme)

- Significance: 5/5 - Hard to imagine something more significant than the ability to literally give everyone every thing they want or need (not "everything" but "every thing", because you can't give them human slaves, or make other people their friends, if those other people disagree)

So if my points are correct, we basically have a tradeoff between:

- Invest less in more concrete initiatives and

- Risk losing eternal bliss for an infinity of people

This is a genuine dilemma. I don't have the answer to it, but my intuition tells me that we should invest more than 0 effort in this goal.

@Erin, or others:

Do you have any other idea where I should take this problem? As said, I'm trying to reach out to Isaac Arthur and many other people. Do you think this would be interesting for William MacAskill?

Thanks a lot,

Vlad A.

Erin @ 2023-01-17T14:22 (+13)

I don't think I stated my core point clearly. I will be blunt for the purpose of clarity. Pursuing this is not useful because, even if you could make a discovery, it could not possibly be useful until literally 100 quintillion years from now, if not much longer. To think that you could transmit this knowledge that far into the future doesn't make any sense.

Perhaps you wish to pursue this as a purely theoretical question. I'm not a physicist, so I can not comment on whether your ideas are reasonable from that perspective. You say that physicists have told you that they are, but do not discount the possibility that they were simply being polite, or that your questions were misinterpreted.

Additionally, the reality is that people without PhDs in a given field rarely make significant contributions these days - if you seek to do so, your ideas must be exceptionally well communicated and grounded in the current literature (e.g., you must demonstrate an understanding of the orthodox paradigm even if your ideas are heterodox). Otherwise, your ideas will be lumped in with perpetual motion machines and ignored.

I genuinely think it would be a mistake to pursue this idea at all, even from a theoretical perspective, because there is essentially no chance that you are onto something real, that you can make progress on it with the tools available to you, and that you can communicate it so clearly that you will be taken seriously.

A better route to pursue might be writing science fiction. There is always demand for imaginative sci-fi with a clear grounding in real science or highly plausible imagined science. There is also a real need for sci-fi that imagines positive/desirable futures (e.g. solarpunk).

DonyChristie @ 2023-01-15T02:35 (+2)

You would like Alexey Turchin's research into surviving the end of the universe.

Guy Raveh @ 2023-01-15T14:54 (+4)

I would not call it "research". Science fiction might be a better term. Which is also, I suspect, why Vlad's comment is very disagreed with. There's nothing to suggest surviving the end of the universe is any more plausible than any supernatural myth being true.

vlad.george.ardelean @ 2023-01-17T12:59 (+1)

Hey Guy, thanks for your feedback.

I might be wrong on this, but the way I understand probability to work is that, generally:

- if event A has probability P(A)

- and if event B has probability P(B)

- then the probability of both A and B to happen is P(A) * P(B)

What this means, is that technically:

- The probability that supernatural beings exist, with personalities, specific traits AND the power "to do anything they want", is at most equal to the probability that an endless source of energy exists

simply on the basis that more constraints make the probability of the event smaller.

The interesting point however is that I have found (so far) no physicist that says this is not possible.

I have also not found anyone yet who knows how to estimate the effort so far.

I would be very interested however if there are arguments against this position.

And I'd be even more interested in people who want to help me with this initiative :D Arguments are nice, but making progress is better!

Guy Raveh @ 2023-01-17T13:15 (+4)

Given that empirical science cannot ever conclusively prove anything, you may never find a physicist to tell you that it isn't possible. But there's no reason to think that it is possible. Compare to Russell's Teapot.

Regarding your argument about probabilities - yes, the probability of an omnipotent god is necessarily smaller than that of any infinite source of energy (although it's not a product - that's just true for independent events). However I was not only talking about omnipotent gods, and anyway this probabilistic reasoning is the wrong way to think about this. When you do it, you get things like Pascal's wager (or Pascal's mugging, have your pick).
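To spell out the probability point in this exchange, here is a minimal sketch. The labels are illustrative and not from the thread: take A to be "an endless source of energy exists" and B to be the extra traits attributed to an omnipotent being. The general rule for a conjunction uses a conditional probability, and the simple product only applies when the two events are independent.

```latex
P(A \cap B) = P(A)\,P(B \mid A) \le P(A),
\qquad
P(A \cap B) = P(A)\,P(B) \quad \text{only if } A \text{ and } B \text{ are independent.}
```

So adding constraints can never raise the probability, whether or not the product formula holds.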

Barry Cotter @ 2023-02-03T06:32 (+12)

Just a warning on treating everyone as if they argue in good faith. They don’t. Émile P. Torres, aka @xriskology on Twitter doesn’t. He may say true honest things but if you find anything he says insightful check all the sources.

Émile P. Torres’s history of dishonesty and harassment: An incomplete summary https://markfuentes1.substack.com/p/emile-p-torress-history-of-dishonesty

Erin @ 2023-02-03T21:04 (+16)

Not trying to disagree with what you're saying - just want to point out that Emile goes by they/them pronouns.

Nathan Young @ 2023-02-04T10:32 (+7)

I think Émile is close to the line for me but I think we've had positive interactions.

Dzoldzaya @ 2023-01-28T13:13 (+11)

Hey everyone, I'm curious about the extent to which people in EA take (weak/strong) antinatalism/ negative utilitarianism seriously. I've read a bit around the topic and find some arguments more persuasive than others, but the idea that many lives are net-negative, and that even good lives might be worse than we think they are, has stuck with me.

Based on my own mood diary, I'm leaning towards something around a 5.5/10 on a happiness scale being the neutral point, under which a life isn't worth living.

This has made me a lot less enthusiastic about 'saving lives' for its own sake, especially those lives in countries/ regions with very poor quality of life. So I suspect that some 'life-saving' charities could be actively harmful and that we should focus way more on 'life-improving' charities/ cause areas. (There are probably very few charities that only save lives- preventing malaria/ reducing lead exposure both improves and saves lives- but we can imagine a 'pure-play life-saving charity'.)

I haven't come to any conclusions here, but the 'cost to save a life' framing, still common in EA, strikes me as probably morally invalid. I don't hear this argument mentioned much (you don't seem to get anyone actively arguing against 'saving lives'), so I'm just curious what the range of EA opinion is.

NunoSempere @ 2023-02-02T15:35 (+5)

You might be interested in:

Ian Turner @ 2023-02-12T16:41 (+4)

Regarding the question of the population ethics of donating to GiveWell charities, a 2014 report commissioned by GiveWell suggested that donating to AMF wouldn't have a big impact on total population, because fertility decisions are related to infant mortality. GiveWell also wrote a lengthy blog post about their work in the context of population ethics. I think the gist of it is that even if you don't agree with GiveWell's stance on population ethics, you can still make use of their work because they provide a spreadsheet where one can plug in one's own moral weights.

Linch @ 2023-03-31T06:29 (+10)

I feel confused about how dangerous/costly it is to use LLMs for private documents or thoughts to assist longtermist research, in a way that may wind up in the training data for future iterations of LLMs. Some sample use cases that I'd be worried about:

- Summarizing private AI evals docs about plans to evaluate future models

- Rewrite emails on high-stakes AI gov conversations

- Generate lists of ideas for biosecurity interventions that can be helped/harmed by AI

- Scrub potentially risky/infohazard-y information from planned public forecasting questions

- Summarize/rewrite speculations of potential near-future AI capabilities gains.

I'm worried about using LLMs for the following reasons:

- Standard privacy concerns/leakage to dangerous (human) actors

- If it's possible to back out your biosecurity plans from the models, this might give ideas to terrorists/rogue gov'ts.

- your infohazards might leak

- People might (probabilistically) back out private sensitive communication, which could be embarrassing

- I wouldn't be surprised if care for consumer privacy at AGI labs for chatbot consumers is much lower than say for emails hosted by large tech companies

- I've heard rumors to this effect, also see

- (unlikely) your capabilities insights might actually be useful for near-future AI developers.

- Training models in an undesirable direction:

- Give pre-superintelligent AIs more-realistic-than-usual ideas/plans for takeover

- Subtly bias the motivations of future AIs in dangerous ways.

- Perhaps leak capabilities gains ideas that allow for greater potential for self-improvement.

I'm confused whether these are actually significant concerns, vs pretty minor in the grand scheme of things. Advice/guidance/more considerations highly appreciated!

Carlos Ramírez @ 2023-04-04T19:35 (+1)

The privacy concerns seem more realistic. A rogue superintelligence will have no shortage of ideas, so 2 does not seem very important. As to biasing the motivations of the AI, well, ideally mechanistic interpretability should get to the point we can know for a fact what the motivations of any given AI are, so maybe this is not a concern. I guess for 2a, why are you worried about a pre-superintelligence going rogue? That would be a hell of a fire alarm, since a pre-superintelligence is beatable.

Something you didn't mention though: how will you be sure the LLM actually successfully did the task you gave it? These things are not that reliable: you will have to double-check everything for all your use cases, making using it kinda moot.

Leo Mansfield @ 2023-03-17T21:40 (+9)

I would like advice on writing a resume and applying to work in an effective career. I will graduate with an economics bachelor's degree in April. I'm taking many statistics courses. I also took calculus and computer science courses. I live on the west coast of Canada and I am willing to move.

I believe I would be well suited to AI Governance but it may be better currently to find statistics/econometrics work or do survey design (to build general skills until I know more AI Governance people, or switch into a different effective cause area)

I am also open to recommendations for other effective careers. My degree is quite general and I have deliberately avoided sinking much into AI Governance specifically. I think I have a comparative advantage in AI Governance because my father is a manager of a machine learning research team at Google, which I could potentially influence.

If I don't find an occupation immediately after graduation then I will do local community-building in Cryonics/Life-Extension and take these courses online:

- Bayesian Statistics (Statistical Rethinking by Richard McElreath)

- AI Governance by BlueDot Impact

- In-Depth EA program

I would like to do these courses anyway but if I find an occupation then I can do one or two at a time.

If I find an occupation outside EA then I will focus on learning statistics and other general skills. Then I can better apply these skills once I move into AI Governance. If the occupation is in government or policy spaces then I will develop relevant social networks. The downside is that I'd be less poised to take opportunities in AI Governance.

I don't know much about finding an occupation in AI Governance. I applied to internships last summer and after being refused I asked the hiring staff what skills I should learn, and read all their recommendations. But I just don't really know what is going on in the AI Governance career path.

I'd appreciate a comment if you know of:

- guides to writing resumes and job applications (EA-specific)

- places I should apply to that aren't on the 80,000 Hours job board.

- advice on what non-EA work would help me build relevant skills and networks. (or even volunteer projects I could do on my own! I'm not in immediate need of paid work)

Ishan Mukherjee @ 2023-03-23T08:19 (+9)

The EA Opportunities Board and Effective Thesis' database (they also have a newsletter) might be useful. I expect they're listed on 80,000 Hours so you might already know them, but if not: ERA Cambridge are accepting applications for AI governance research fellowships.

emre kaplan @ 2023-02-15T13:17 (+8)

I have seen Sabine Hossenfelder claim that it will be very expensive to maintain superintelligent AIs. I also hear many people claiming that digital minds will use much less energy than human minds, so they will be much more numerous. Does anyone have some information or a guess on how much energy ChatGPT spends per hour per user?

Felix Wolf @ 2023-02-15T17:28 (+7)

Epistemic status: quick google search, uncertain about everything, have not read the linked papers. ~15 minutes of time investment.

Source 1

The Carbon Footprint of ChatGPT

[...] ChatGPT is based on a version of GPT-3. It has been estimated that training GPT-3 consumed 1,287 MWh which emitted 552 tons CO2e [1].

Using the ML CO2 Impact calculator, we can estimate ChatGPT’s daily carbon footprint to 23.04 kgCO2e.

[...] ChatGPT probably handles way more daily requests [compared to Bloom], so it might be fair to expect it has a larger carbon footprint.

Source 2

The carbon footprint of ChatGPT

3.82 tCO₂e per day

Also, maybe take a look into this paper about a different language model:

ESTIMATING THE CARBON FOOTPRINT OF BLOOM, A 176B PARAMETER LANGUAGE MODEL

https://arxiv.org/pdf/2211.02001.pdf

Quantifying the Carbon Emissions of Machine Learning

https://arxiv.org/pdf/1910.09700.pdf

You can play a bit with this calculator, which was also used in source 1:

ML CO2 Impact

https://mlco2.github.io/impact/

constructive @ 2023-02-25T21:38 (+2)

I think a central idea here is that superintelligence could innovate and thus find more energy-efficient means of running itself. We already see a trend of language models with the same capabilities getting more energy efficient over time through algorithmic improvement and better parameters/data ratios. So even if the first Superintelligence requires a lot of energy, the systems developed in the period after it will probably need much less.

emre kaplan @ 2023-02-16T05:54 (+1)

Thanks a lot, Felix! That's very generous and some links have even more relevant stuff. Apparently, ChatGPT uses around 11,870 kWh per day whereas the average human body uses 2.4 kWh.
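For a rough sense of scale, the two figures quoted above can be compared directly. This is only a sketch: it assumes the 2.4 kWh figure is also per day, and the `daily_users` parameter is a hypothetical input, since the thread gives no user count.

```python
# Figures quoted in the comment above, both treated as daily energy use in kWh.
CHATGPT_KWH_PER_DAY = 11_870
HUMAN_KWH_PER_DAY = 2.4  # assumption: this figure is per day as well


def human_equivalents(system_kwh: float, human_kwh: float) -> float:
    """Return how many human bodies' worth of energy the system uses."""
    return system_kwh / human_kwh


def kwh_per_user_hour(system_kwh_per_day: float, daily_users: int) -> float:
    """Spread daily energy evenly over users and hours (a crude average).

    daily_users is a hypothetical input; the thread does not state one.
    """
    return system_kwh_per_day / daily_users / 24


if __name__ == "__main__":
    ratio = human_equivalents(CHATGPT_KWH_PER_DAY, HUMAN_KWH_PER_DAY)
    print(f"About {ratio:,.0f} human bodies' worth of energy per day")
    # Placeholder user count, purely for illustration.
    print(kwh_per_user_hour(CHATGPT_KWH_PER_DAY, daily_users=1_000_000))
```

The per-user number depends entirely on the user count you plug in, so it answers the original "per hour per user" question only once a real usage figure is available.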

Silas @ 2023-01-20T03:18 (+8)

Hi I’m Silas Barta. First comment here! I organize the Austin LessWrong group. I’m currently retired off of earlier investing (formerly software engineer) but am still looking for my next career to maximize my impact. I think I have a calling in either information security (esp reverse engineering) or improving the quality of explanations and introductions to technical topics.

I have donated cryptocurrency and contributed during Facebook’s Giving Tuesday, and gone to the Bay Area EA Globals in 2016 and 2017.

Agustín Covarrubias @ 2023-01-28T04:50 (+4)

You might want to know that a few weeks ago, 80,000 Hours updated their career path profile on information security.

Felix Wolf @ 2023-01-20T10:25 (+4)

Hey Silas,

welcome to the Forum. I wish you the best of luck to find a fulfilling career. :)

If you have any kind of question on where to find resources or what not, feel free to ask.

With kind regards

Felix

Carlos Ramírez @ 2023-03-08T22:18 (+7)

I'm looking for statistics on how doable it is to solve all the problems we care about. For example, I came across this from the UN: https://www.un.org/sustainabledevelopment/wp-content/uploads/2018/09/Goal-1.pdf which says extreme poverty could be sorted out in 20 years for $175 billion a year. That is actually very doable, given how much money can go into war (in 1945, the US spent 40% of its GDP on the war). I'm looking for more numbers like that, e.g. how much money it takes to solve X problem.

I intend to use them for a post on how there is no particular reason we can't declare total war on suffering. We can totally organize massively to do great things, and we have done it many times before. We should have a wartime mobilization for the goal of ending suffering.
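The comparison above can be made concrete with a little arithmetic. This is a sketch only: the annual cost, duration, and wartime share come from the comment, while the world GDP value is a rough round-number assumption of mine and not a figure from the thread.

```python
# Figures quoted in the comment above.
ANNUAL_COST_USD = 175e9   # UN estimate for ending extreme poverty, per year
YEARS = 20
WAR_SHARE_OF_GDP = 0.40   # share of US GDP spent on the war in 1945

# Assumption (not from the thread): world GDP of roughly $100 trillion a year.
ASSUMED_WORLD_GDP_USD = 100e12


def total_cost(annual_cost: float, years: int) -> float:
    """Total spend over the whole programme, ignoring inflation and growth."""
    return annual_cost * years


def share_of_gdp(annual_cost: float, gdp: float) -> float:
    """Annual cost expressed as a fraction of annual GDP."""
    return annual_cost / gdp


if __name__ == "__main__":
    print(f"Total over {YEARS} years: ${total_cost(ANNUAL_COST_USD, YEARS):,.0f}")
    share = share_of_gdp(ANNUAL_COST_USD, ASSUMED_WORLD_GDP_USD)
    print(f"Annual cost as share of assumed world GDP: {share:.3%}")
    print(f"Wartime share is {WAR_SHARE_OF_GDP / share:,.0f} times larger")
```

Under that assumed GDP, the annual cost is a small fraction of one percent of output, which is the sense in which the wartime comparison makes it look affordable.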

Brad West @ 2023-03-09T22:37 (+2)

I think I could help you in your total war. PM me if interested in learning more.

Aithir @ 2023-01-25T15:49 (+7)

I thought it might be helpful to share this article. The title speaks for itself.

How to Legalize Prediction Markets

What you (yes, you) can do to move humanity forward

emre kaplan @ 2023-03-07T08:46 (+6)

Does anyone know why Singer hasn't changed his views on infanticide and killing animals after he had become a hedonist utilitarian? As far as I know, his former views were based on the following:

a. Creation and fulfilment of new preferences is morally neutral.

b. Thwarting existing preferences is morally bad.

c. Persons have preferences about their future.

d. Non-persons don't have a sense of the future, they don't have preferences about their future either. They live in the moment.

e. Killing persons thwarts their preferences about the future.

f. Killing non-persons doesn't thwart such preferences.

g. Therefore killing a person can't be compensated by creating a new person. Whereas when you kill a non-person, you don't thwart many preferences anyway so killing non-persons can be compensated.

I think after he had become a hedonist this person/non-person asymmetry should mostly disappear. But I haven't seen him updating Animal Liberation or other books. Why is that?

NickLaing @ 2023-03-07T09:15 (+2)

Thanks Emre - simple question: what are his current views? I'm assuming from what you are saying that he is still pro-infanticide in rare circumstances soon after birth?

emre kaplan @ 2023-03-07T11:44 (+3)

I think he's not commenting on it much anymore since this issue isn't really a major priority. But I think he used to advocate for infanticide in a larger set of circumstances (e.g. when it's possible to have another child who will have a happier life). The part about infanticide isn't that relevant to any kind of work EA is doing. But his views are still debated in animal advocacy circles and I am not sure what exactly his position is.

NickLaing @ 2023-03-07T12:32 (+2)

Gotcha. It's true it's not immediately obvious from google or chatGPTx.

Lorenzo Buonanno @ 2023-03-07T13:05 (+2)

I think he writes a bit about it here: https://petersinger.info/faq in the section: "You have been quoted as saying: "Killing a defective infant is not morally equivalent to killing a person. Sometimes it is not wrong at all." Is that quote accurate?"

Carlos Ramírez @ 2023-03-01T00:45 (+6)

Hello everyone! My name is Carlos. I recently realized I should be leading a life of service, instead of one where I only care about myself, and that has taken me here, to the place that is all about doing the most good.

I'm an odd guy, in that I have read some LessWrong and have been reading Slate Star Codex/Astral Codex Ten for years, but am for all intents and purposes a mystic. That shouldn't put me at odds here too much, since rationality is definitely a powerful and much needed tool in certain contexts (such as this one), it's just that it cannot do all things.

I wonder if there are others like me here, since after all, the decision to give to charity, particularly to far-off places, is not exactly rational.

Hoping to learn a lot, and to figure out a way to make my career (been a software developer for 11 years) high impact, or at least, actually helpful.

You guys are the Rebel Alliance from Star Wars, and I am ready to be an X-Wing pilot in it!

Felix Wolf @ 2023-03-01T11:23 (+3)

Hi Carlos,

welcome to the Forum!

Moya is probably the most mystic person I know of, so nice to see that you already encountered her. :D

Here in the Forum, we really try to be nice and welcoming, if you follow along, I don't see any reason this couldn't work out. ;)

If you are open to suggestions, I want to recommend looking into the podcast Global Optimum by Daniel Gambacorta. He talks about how you can become a more effective altruist and has some good thinking about the pros and cons of different topics, for example in the episode about how altruistic you should be.

"[…] the decision to give to charity […] is not exactly rational." Can you please explain?

With kind regards

Felix

Carlos Ramírez @ 2023-03-01T20:22 (+1)

Hi Felix, thanks for the recs! What I mean by giving to charity not being exactly rational is that giving to charity doesn't help one in any way. I think it makes more sense to be selfish than charitable, though there is a case where charity that improves one's community can be reasonable, since an improved community will impact your life.

And sure, one could argue the world is one big community, but I just don't see how the money I give to Africa will help me in any way.

Which is perfectly fine, since I don't think reason has a monopoly on truth. There are such things as moral facts, and morality is in many ways orthogonal to reason. For example, Josef Mengele's problem was not a lack of reason, his was a sickness of the heart, which is a separate faculty that also discerns the truth.

She's done it @ 2023-01-16T04:54 (+6)

Qn: Where is the closest EA community base to the US? How accessible is the USA from it (US Consulate)?

Context: I was recently let go from my job while on a visa in the States, which means I have to leave the US within the next 7 days. I would like to live somewhere close to the US where I can find community so that I don't lose momentum for the intense work that a job search needs. I tend to be really affected by the energy of where I am; I work best in cities, and I tend to sleep most in the countryside.

This might also be a good resource for people who are not able to enter the US for any reason whatsoever. I am assuming a longterm housing community in a nomad friendly place like CDMX would do wonders for people wishing to be within +/- 3 hours of timezone of their American colleagues.

jwpieters @ 2023-01-20T23:51 (+1)

There are some EAs hanging out in CDMX until the end of Jan (and maybe some after)

Agree that having a nomad friendly community near the US would be great

She's done it @ 2023-02-11T20:33 (+2)

I did end up in Mexico City. I plan to continue the job search from here while exploring independent contracting for some supplemental income and diverse project experience.

- If anyone is looking for expertise in biosecurity/global health to help with ongoing projects, please reach out and delegate to me! I am new here, so I haven't gathered any "EA karma" from well-written posts yet. I would love to change that!

LinkedIn

- I am open to ideas in up-skilling for the most impactful work I can do as a physician-scientist. Open to ideas for skills to master and funds to apply for the same.

- Also, EAs in the Americas, take a work-cation in CDMX! The weather is excellent, and the city is energetic and green. So far, a good group of EAs have been here after the fellowship ended. I would love to keep it up!

Moya @ 2023-02-25T01:19 (+5)

Hi all,

Moya here from Darmstadt, Germany. I am a Culture-associated scientist, trans* feminist, poly, kinky, and a witch.

I got into LessWrong in 2016 and then EA 2016 or 2017, don't quite remember. :)

I went to the University of Iceland, did a Master's degree in Computer Science / Bioinformatics there, then built software for the European Space Agency, and nowadays am a freelance programmer and activist in the Seebrücke movement in Germany and other activist groups as well. I also help organize local burn events (some but not all of them being FLINTA* exclusive safer spaces.)

Silly little confession: It took me so many years to finally sign up to the EA forum because my password manager is not great and I just didn't want to bother opening it and storing yet another password in there. But hey, finally overcame that incredibly-tiny-in-hindsight-obstacle after just a bit over half a decade and signed up. \o/

Milena Canzler @ 2023-02-28T12:45 (+4)

Hi Moya!

Welcome to the forum from another person in southern Germany. I'm curious: Are you connected to the Darmstadt local group? If so, hope to see you at the next event in the area (I live in Freiburg). Would love to connect and hear what your perspective on EA is!

Also, the password manager story is too relatable. ^^

Cheers, Mila

Moya @ 2023-03-01T22:00 (+1)

Hi Mila,

Yeah, I am involved in the Darmstadt local group (when I have the time, many many things going on.)

And wheee, would be glad to meet you too :)

Milena Canzler @ 2023-03-08T10:38 (+2)

Sweet! I'm sure we'll meet sooner or later then :D

Carlos Ramírez @ 2023-03-01T00:48 (+2)

Nice to meet you! Also a new guy. Good to see you're a witch, I'm a mystic! A burn event is a copy of Burning Man? Definitely would like to go to one of those.

Moya @ 2023-03-01T22:01 (+2)

Hi there :)

Yes indeed, burn events are based on the same principles as Burning Man, but each regional burn is a bit different just based on who attends, how these people choose to interpret the (intentionally) vague and contradicting principles, etc. :)

graceyroll @ 2023-02-17T14:19 (+5)

First time poster here.

I am currently doing my master's degree in design engineering at Imperial College London, and I am trying to create a project proposal around the topic of computational social choice and machine learning for ethical decision making. I'm struggling to find a "design engineering" take on this - what can I do to contribute in the field as a design engineer?

In terms of prior art, I've been inspired by MIT's Moral Machine, feeding ML models of aggregate ethical decisions from people. If anyone has any ideas on a des eng angle to approach this topic, please give me some pointers!

TIA

quinn @ 2023-02-21T16:50 (+3)

I don't think it'll help you in particular but my thinking was influenced by Critch's comments about how CSC applies to existential safety https://www.alignmentforum.org/posts/hvGoYXi2kgnS3vxqb/some-ai-research-areas-and-their-relevance-to-existential-1#Computational_Social_Choice__CSC_

garymm @ 2023-02-26T17:13 (+2)

Seems somewhat related to RadicalXChange stuff. Maybe look into that. They have some meetups and mailing lists.

ChayBlay @ 2023-02-06T21:11 (+5)

Hi everyone,

I was close to becoming a statistic of someone who started reading 80,000 hours but never completed the career planning program. I am coming back now as I need some direction.

Of all the global priorities, I gravitate toward those that focus on improving physical and mental health. As someone who deals with chronic pain and is in between jobs, nothing consumes my attention more than alleviating physical and mental suffering.

I am curious if anyone in the community spends their work life thinking and working on increasing longevity, eliminating chronic pain, improving athletic performance, or improving individual reasoning or cognition.

As I am searching for jobs that align with my interests and considering going back to school, I would be grateful for any insight that the community has to offer with regard to pursuing these different fields.

I am 32 years old and unfortunately, 10 years of medical school is no longer appealing. I've thought about biotech and IT because of the limitless upside that tech generally can leverage in terms of health outcomes and even salary, but I'm overwhelmed about the best place to begin to get into those fields.

I'm also thinking about PA school, but I feel like that might place limits on making a larger impact given the connotations (implicit and otherwise) of being an "assistant".

Thank you for reading this far and for any advice you are willing to share!

Chase

Erich_Grunewald @ 2023-02-06T21:25 (+5)

Of all the global priorities, I gravitate toward those that focus on improving physical and mental health. As someone who deals with chronic pain and is in between jobs, nothing consumes my attention more than alleviating physical and mental suffering. I am curious if anyone in the community spends their work life thinking and working on increasing longevity, eliminating chronic pain, improving athletic performance, or improving individual reasoning or cognition.

Not sure how helpful this is to you, but the Happier Lives Institute does research on mental health and chronic pain. See e.g. this recent post on pain relief, and this one evaluating a mental health intervention (but also this response, and this response to the response).

GoingCoast @ 2023-02-02T03:20 (+5)

Woof. This looks exhausting. So I found out I’m on the autism spectrum. My energy for people saying things is… not a very high capacity. It’s been fun recently to stretch my curiosity with this AI https://chat.openai.com/chat But engaging with people is generally an overwhelming prospect.

I want to design a stupidly efficient system that revives public journalism and research, strengthens eco-conscious businesses challenged by competitors who manufacture unsustainable consumer goods, provides supplemental education for age groups to support navigating changing understanding and provide guidance for “better humaning and/or Earth/environmental custodianship”, and establish foundations for universal basic income. And probably design a functional healthcare system while I’m at it. And I want to burn targeted advertisement to the ground.

Thanks for giving me a space where I can say all these things.

BrownHairedEevee @ 2023-01-14T21:08 (+5)

What kind of lightbulb is Qualy? Incandescent or LED? probably not CFL given the shape

William the Kiwi @ 2023-03-14T21:22 (+4)

Hi there everyone, I'm William the Kiwi and this is my first post on the EA Forum. I have recently discovered AI alignment and have been reading about it for around a month. This seems like an important but terrifyingly underinvested field. I have many questions, but in the interest of speed I will invoke Cunningham's Law and post my current conclusions.

My AI conclusions:

- Corrigibility is mathematically impossible for AGI.

- Alignment requires defining all important human values in a robust enough way that it can survive near-infinite amounts of optimisation pressure exerted by a superintelligent AGI. Alignment is therefore difficult.

- Superintelligence by Nick Bostrom is a way of communicating the antimeme "unaligned AI is dangerous" to the general public.

- The extinction of humanity is a plausible outcome of unaligned AI.

- Eliezer Yudkowsky seems overly pessimistic but likely correct about most things he says.

- Humanity is likely to produce AGI before it produces fully aligned AI.

- To incentivize responses to this post I should offer a £1000 reward for a response that supports or refutes each of these conclusions and provides evidence for it.

I am currently visiting England and would love to talk more about this topic with people, either over the Internet or in person.

Carlos Ramírez @ 2023-03-16T21:13 (+4)

You might want to read this as a counter to AI doomerism: https://www.lesswrong.com/posts/LDRQ5Zfqwi8GjzPYG/counterarguments-to-the-basic-ai-x-risk-case

This for a way to contribute to solving this problem without getting into alignment:

https://www.lesswrong.com/posts/uFNgRumrDTpBfQGrs/let-s-think-about-slowing-down-ai

this too:

https://betterwithout.ai/pragmatic-AI-safety

and this for the case that we should stop using neural networks:

https://betterwithout.ai/gradient-dissent

Robi Rahman @ 2023-03-14T22:04 (+2)

Hi William! Welcome to the Forum :)

Why do you think that corrigibility is mathematically impossible for AGI? Because you think it would necessarily have a predefined utility function, or some other reason?

William the Kiwi @ 2023-03-15T10:35 (+3)

Hi Robi Rahman, thanks for the welcome.

I do not know if it has a predefined utility function, or if the functions simply have similar forms. If there is a utility function that provides utility for the AI to shut down if some arbitrary "shutdown button" is pressed, then there exists a state where the "shutdown button" is being pressed at a very high probability (e.g. an office intern is in the process of pushing the "shutdown button") that provides more expected utility than the current state. There is therefore an incentive for the AI to move towards that state (e.g. by convincing the office intern to push the "shutdown button").

If instead there was negative utility in the "shutdown button" being pressed, the AI is incentivized to prevent the button from being pressed.

If instead the AI had no utility function for whether the "shutdown button" was pressed or not, but there somehow existed a code segment that caused the shutdown process to happen if the "shutdown button" was pressed, then there exists a daughter AGI that has slightly more efficient code if this code segment is omitted. An AGI that has a utility function that provides utility for producing daughter AGIs that are more efficient versions of itself is incentivized to produce such a daughter that has the "shutdown button" code segment removed.

There is a more detailed version of this description in https://intelligence.org/files/Corrigibility.pdf

I could be wrong about my conclusion about corrigibility (and probably am); however, it is my best intuition at this point.
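The first two cases of the argument above can be sketched as a toy expected-utility comparison. This is only an illustration under stated assumptions: the function names, the probabilities (0.01 and 0.99), and the choice to normalise the utility of "button not pressed" to zero are all hypothetical and are not taken from the linked paper. The third case (a daughter AGI dropping the shutdown code) is not modelled here; a shutdown utility of zero simply shows up as indifference.

```python
def expected_utility(p_pressed: float, u_shutdown: float, u_otherwise: float = 0.0) -> float:
    """Expected utility of a state in which the button is pressed with probability p_pressed."""
    return p_pressed * u_shutdown + (1.0 - p_pressed) * u_otherwise


def incentive(u_shutdown: float, p_now: float = 0.01, p_intern: float = 0.99) -> str:
    """Compare the current state with the 'intern is about to press the button' state.

    p_now and p_intern are made-up probabilities chosen only for illustration.
    """
    eu_now = expected_utility(p_now, u_shutdown)
    eu_intern = expected_utility(p_intern, u_shutdown)
    if eu_intern > eu_now:
        return "seek button press"
    if eu_intern < eu_now:
        return "prevent button press"
    return "indifferent"


if __name__ == "__main__":
    for u in (1.0, -1.0, 0.0):
        print(f"utility of shutdown = {u:+.1f}: {incentive(u)}")
```

Under these assumptions, any positive utility on shutdown makes the high-probability state more attractive, and any negative utility makes it less attractive, which is the incentive problem described in the comment.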

Misha_Yagudin @ 2023-02-25T16:13 (+4)

Is there a way to only show posts with ≥ 50 upvotes on the Frontpage?

Hauke Hillebrandt @ 2023-02-25T16:45 (+8)

Stop free-riding! voting on new content is a public good, Misha ;P

Misha_Yagudin @ 2023-02-26T15:30 (+8)

Thank you, Hauke, just contributed an upvote to the visibility of one good post. Doing my part!

Alternatively, is there a way to apply field customization (like hiding community posts and up-weighting/down-weighting certain tags) to https://forum.effectivealtruism.org/allPosts?

NunoSempere @ 2023-02-26T18:50 (+2)

Yes, ctrl+F on "customize tags"

Lizka @ 2023-02-26T20:14 (+6)

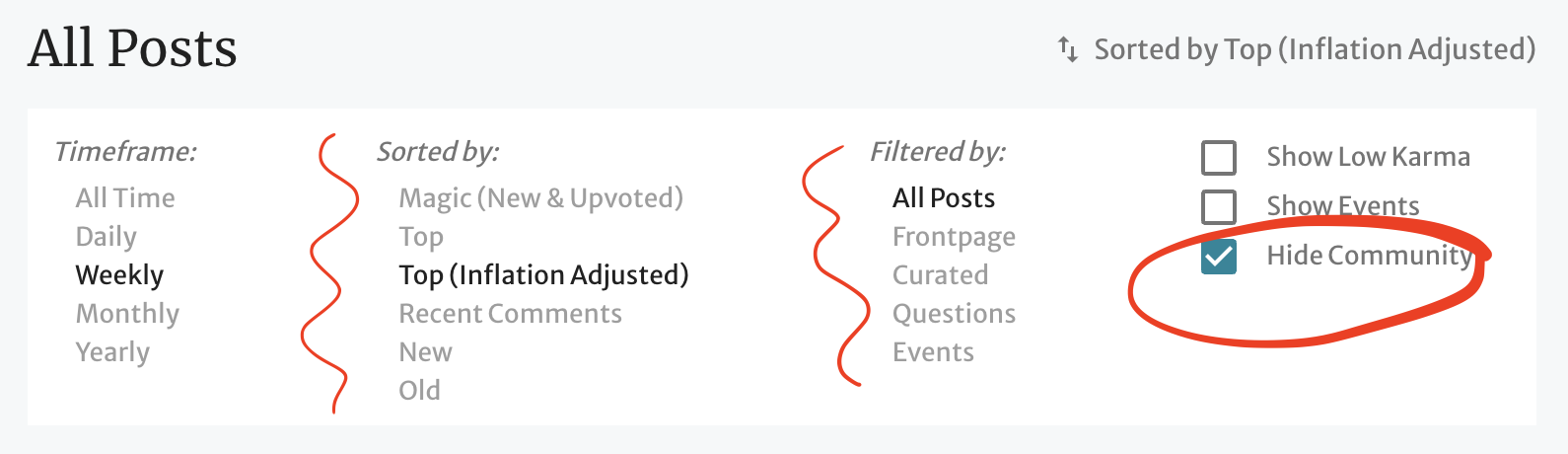

Hi! On the All Posts page, you can't filter by most tags, unfortunately, although we just added the option of hiding the Community tag:

Find the sorting options:

Hide community:

On the Frontpage, you can indeed filter by different topics.

C_Axiotes @ 2023-02-11T10:59 (+4)

It’ll be my first time at a Bay Area EA Global at the end of this month - does anyone have any tips? Any things I should definitely do?

Also if you’re interested in institutional reform you might like my blog Rules of the Game: https://connoraxiotes.substack.com/p/what-can-the-uk-government-do-to

Felix Wolf @ 2023-02-11T16:28 (+3)

Hey Axiotes,

congratulations on your accepted EAG application! Here are three articles you may find interesting.

- How to Get the Maximum Value Out of Effective Altruism Conferences

- Doing 1-on-1s Better - EAG Tips Part II

- EA Global Tips: Networking with others in mind

My personal tips are: take time for yourself and don't overwhelm yourself too much. Write down beforehand what the best EAG would look like to you and what a great EAG would look like. Take notes on what you want to accomplish and what to speak about in your 1o1s. Make 1o1s and have a good, productive time. After the EAG, reevaluate what happened and what you have learned, and write down next steps.

Ishan Mukherjee @ 2023-02-18T16:02 (+1)

Hey! This might be useful: An EA's Guide to Berkeley and the Bay Area

Wubbles @ 2023-01-29T22:28 (+4)

Does anyone have estimates on the cost effectiveness of trachoma prevention? It seems as though mass antibiotic administration is effective and cheap, and blindness is quite serious. However room for funding might be limited. I haven't seen it investigated by many of the organizations, but maybe I just haven't found the right report.

Ian Turner @ 2023-02-12T16:13 (+5)

GiveWell looked at this in 2009 and decided that chemoprophylaxis is not cost effective.

GiveWell leans on a 2005 Cochrane study that concluded that "For the comparisons of oral or topical antibiotic against placebo/no treatment, the data are consistent with there being no effect of antibiotics".

However, it looks like Cochrane revisited this in 2019 and I'm not sure if GiveWell took a second look.

Rafael Vieira @ 2023-02-12T12:33 (+3)

Hey Wubbles,

I realise that my response is a bit late, but there is some peer-reviewed literature on this matter. The most relevant paper would be this one from 2005. The main results are:

(...) trichiasis surgery with 80% coverage of the population would avert more than 11 million DALYs per year globally, with cost effectiveness ranging from I$13 to I$78 per DALY averted, which is below the cost-effectiveness threshold of three times GDP per capita. Mass antibiotic treatment using azithromycin at prevailing market prices at 95% coverage level would avert more than 4 million DALYs per year globally and is most cost-effective among antibiotic interventions with ratios ranging between I$9,000 and I$65,000 per DALY averted. However, the cost per DALY averted exceeds the cost-effectiveness threshold.

Unfortunately, I am not aware of any more recent paper using updated azithromycin costs. It would be interesting for someone to perform a new cost-effectiveness study based on the 2015 International Medical Products Price Guide, as the price of azithromycin is known to have decreased since 2005. There is, however, a recent study restricted to Malawi that suggests that mass treatment with azithromycin may be cost-effective.

Matt Keene @ 2023-01-26T00:42 (+4)

How do folks! Stoked to have the opportunity to try and be a participant that contributes something meaningful here on the EA Forum.

EA Forum Guidelines (and Aaron)...thank you for the guidance and encouraging me to write the bio.

All, I'm new to the EA community. I'll hope to meet some of you soon. Please feel free to send a hello anytime.

I see the "Commenting Guidelines". They remind me of the Simple Rules of Inquiry that I've used for many years. Are they a decent match for the spirit of this Forum?

- Turn judgment into curiosity

- Turn conflict into shared exploration

- Turn defensiveness into self-reflection

- Turn assumptions into questions

What do I care about? I've been unanimously appointed to the post of lead head deputy associate administrator facilitator of my daughters' education (6 and 10) . I love them. Our educational praxis is designed to enable them to realize an evaluative evolution and create a future we want amidst the accelerating coevolution of Nature, Humans and AI. No presh. They spell great. Well, one out of two anyway.

I also care about the chill peeps sweeping the beach with metal detectors wearing headsets. I want to learn more but I don't want to be rude and interrupt what they are listening to.

See you in the funny papers.

Matt

(I'm reading the commenting guidelines wondering which ones I violated. Like a historian, I'm not sure if I was explaining or persuading. I certainly wasn't clear. I disagreed with almost everything I wrote...didn't I? Okay. So. How do I ask readers where they went after they got kicked off this Forum? Tbc, I want to stay.)

Felix Wolf @ 2023-01-26T13:24 (+4)

Hey Matt,

welcome to the EA Forum. :)

Your personal guidelines translate well into our community guidelines here in the forum. No worries on that front.

If you want any guidance on where to find more information or where to start, feel free to ask or write me a personal message.

I was browsing your website/blog and found a missing page:

https://www.creatingafuturewewant.com/praxes/democratizingeffectiveness

→ https://prezi.com/vsrfc7ztmkvn/democratizing-effectiveness/?present=1

The presentation is offline atm. I hope this helps. :D

A suggestion for your work as lead head deputy associate administrator and facilitator could be to visit this website:

https://www.non-trivial.org/

Non-Trivial sponsors fellowships for student projects, which is something you could do in the future, but more importantly for now maybe take a look at their course:

https://course.non-trivial.org/

"How to (actually) change the world" could be interesting.

With kind regards

Felix

Matt Keene @ 2023-01-26T18:06 (+2)

Thank you Felix. Nice to feel welcome.

Grateful for the new opportunities and resources you've shared. We will look into them and keep them handy.

I appreciate the website feedback...It is a work in progress and I could do much better at tidying things up that I won't likely get to in the near term. On it!

Thank you for your service to educate our friends and peers about the environment.

Take good care of yourself Felix.

Matt

basil.halperin @ 2023-03-31T00:42 (+3)

Forgive me if I'm just being dumb, but -- does anyone know if there is a way in settings to revert to the old font/CSS? I'm seeing a change that (for me) makes things harder to read/navigate.

Misha_Yagudin @ 2023-03-13T17:34 (+3)

The table here got all messed up. Could it be fixed?

Dane Magaway @ 2023-03-14T16:22 (+5)

This has now been fixed. Our tech team has resolved the issue by using dummy bullet points to widen the columns. Thanks for reaching out! Let me know if you run into any issues on your end.

Misha_Yagudin @ 2023-03-16T18:20 (+3)

Thank you very much, Dane and the tech team!

Misha_Yagudin @ 2023-03-16T18:23 (+2)

Hey, I think the fourth column was introduced somehow… You can see it by searching for "Mandel (2019)"

Dane Magaway @ 2023-03-13T17:42 (+3)

Hi, Misha! Thanks for reaching out. We're on it and will let you know when it's sorted.

graceyroll @ 2023-03-01T14:13 (+3)

Hi guys !

I posted about 2 weeks ago here asking for masters project ideas around the field of computational social choice and machine learning for ethical decision making.

To recap: I'm currently doing my master's project in design engineering at Imperial, where I need to find something impactful, implementable and innovative.

I really appreciated all the help I got on the post, however, I've hit a kind of dead end - I'm not sure I can find something within my scope with the time frame in the field I've chosen.

So now I'm asking for any project ideas which fit the above criteria. It can be in any field, and honestly, any points would really help. I want to take this project as the opportunity to really make something meaningful with the time I have here at uni.

TIA

Lorenzo Buonanno @ 2023-03-01T14:49 (+6)

Hi Grace!

I don't have any project ideas in mind, but I wonder if it would make sense to talk with the people at https://effectivethesis.org/ and maybe to have a look at this board https://ea-internships.pory.app/board for inspiration

Good luck with your project!

Marc Wong @ 2023-02-09T16:03 (+2)

Hello, All!

I found EA via the New Yorker article about William MacAskill.

I am the author of "Thank You For Listening".

I listen, therefore you are. We understand and respect, therefore we are. We bring out the best in each other, therefore we thrive.

Go beyond Can Do. We Can understand, respect, and bring out the best in others, often beyond our expectations.

We know how to cooperate on roads. We can cooperate at home, at work, and in society. Teach everyone to listen (yield), check biases (blind spots), and reject ideological rage (road rage).

Bringing Out The Best In Humanity

Felix Wolf @ 2023-02-09T23:55 (+1)

Hey Marc,

here is a workable link to your post from October:

https://forum.effectivealtruism.org/posts/7srarHqktkHTBDYLq/bringing-out-the-best-in-humanityYLq/

BobMail @ 2023-03-14T07:59 (+1)

GiveWell traditionally has quarterly board meetings; were there ones in August and December 2022? If so, are notes available? (https://www.givewell.org/about/official-records#Boardmeetings)

Jack FitzGerald @ 2023-03-09T10:37 (+1)

Hey everyone! First time poster here, but long time advocate for effective altruism.

I've been vegan for a couple of years now, mostly to mitigate animal suffering. Recently I've been wondering how a vegetarian diet would compare in terms of suffering caused. Of course I presume veganism would be better, but by how much?

With this in mind, I'm wondering whether there are any resources that attempt to quantify how much suffering is caused by buying various animal products. For example, dairy cows produce about 40,000 litres of milk in their lifetime, which can be used to make about 4,000 kg of cheese. With this in mind, one could consider how much suffering a dairy cow endures in their lifetime and then quantify how much suffering a person is responsible for each time they purchase a kilo of cheese.

My calculations are of course very imprecise and probably quite flawed, but I'm curious if anyone else has taken a more robust attempt at comparing the suffering caused by various animal products? I realize this may be hard since suffering is hard to quantify.

https://thehumaneleague.org/article/how-much-milk-does-a-cow-produce
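The back-of-the-envelope calculation above can be written out as a small sketch. The two lifetime figures come from the comment; the function names and the idea of measuring suffering in arbitrary "units per cow lifetime" are assumptions made only for illustration, and the sketch ignores everything else a fuller model would include (calves, slaughter, elasticity of demand).

```python
# Figures quoted in the comment above (approximate lifetime totals for one dairy cow).
LIFETIME_MILK_LITRES = 40_000
LIFETIME_CHEESE_KG = 4_000


def litres_of_milk_per_kg_cheese() -> float:
    """Milk needed per kilo of cheese, implied by the two lifetime figures."""
    return LIFETIME_MILK_LITRES / LIFETIME_CHEESE_KG


def cow_lifetimes_per_kg_cheese() -> float:
    """Fraction of one dairy cow's lifetime output represented by a kilo of cheese."""
    return 1 / LIFETIME_CHEESE_KG


def suffering_per_kg_cheese(lifetime_suffering: float) -> float:
    """Share of a cow's lifetime suffering attributed to one kilo of cheese.

    lifetime_suffering is in whatever (hypothetical) unit the reader chooses.
    """
    return lifetime_suffering * cow_lifetimes_per_kg_cheese()


if __name__ == "__main__":
    print(litres_of_milk_per_kg_cheese())   # litres of milk per kg of cheese
    print(cow_lifetimes_per_kg_cheese())    # cow lifetimes per kg of cheese
    print(suffering_per_kg_cheese(100.0))   # with a made-up 100 units per lifetime
```

On these numbers a kilo of cheese corresponds to 1/4,000 of one cow's lifetime output, so the hard part is the input the sketch leaves open: how to quantify the lifetime suffering itself.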

emre kaplan @ 2023-03-09T10:58 (+6)

I suspect most of the impact of veganism comes from its social/political side effects rather than the direct impact of the consumption. I believe it's better to mostly think about "what kind of meme and norm should I spread" as most of the impact is there.

Jack FitzGerald @ 2023-03-10T20:10 (+1)

I'm inclined to agree, although I was curious nonetheless. Also, anecdotally it seems like an increasing number of people are basing their diet on calculated CO2 emissions, so calculations based on suffering seem like they would be a useful counterpart.

Thanks for sharing the compilation!

Lorenzo Buonanno @ 2023-03-09T10:45 (+5)

Hi Jack!

You might be interested in https://faunalytics.org/animal-product-impact-scales/#:~:text=Wondering%20About%20Your%20Impact%20Per%20Serving%20As%20An%20Individual%3F and https://foodimpacts.org/ .

In particular, eggs seem to cause a surprising amount of suffering per serving (compared to e.g. milk or cheese)

Jack FitzGerald @ 2023-03-10T20:00 (+2)

Both of those resources are excellent and exactly the sort of thing I was looking for. Thank you so much!

emre kaplan @ 2023-03-09T17:10 (+3)

Brendan OHare 🐮 @ 2023-02-09T00:23 (+1)

Howdy everyone!

I'm Brendan O'Hare. I was an Arete Fellow in college and have been involved with EA ever since! I have recently decided to try and chart my own career path after striking out a couple of times in the job application process post-graduation. I have decided to start a newsletter/blog/media outlet focused on Houston and local issues, particularly urbanism. I want to become an advocate for better local policies, which I understand quite well.

If anyone has any tips with regard to writing, growing on Twitter, etc., I would love to hear them! Thank you all so much for this platform.

Simon Sällström @ 2023-02-02T05:33 (+1)

Internship / board of trustees!

My name is Simon Sällström. After graduating with a master's in economics from Oxford in July 2022, I decided against going the traditional 9-5 route in the City of London to move around money to make more money for people who already have plenty of money… Instead, I launched a charity.

DirectEd Development Foundation is a charitable organisation whose mission is to propel economic growth and develop and deliver evidence-based, highly scalable and cost-effective bootcamps to under-resourced high-potential students in Africa, preparing them for remote employment by equipping them with the most sought-after digital and soft skills on the market and thereby realise their potential as leaders of Africa’s digital transformation.

I'm looking for passionate people in the EA community to join me and my team!

We are mainly looking to fill two unpaid positions right now: interns and trustees. The latter is quite an important role.

I am not entirely sure how to best go about this which is why I am writing this short comment here. Any advice?

Here's what I have done so far in terms of information about the internship position and application form: https://directed.notion.site/Job-board-3a6585f2175a456bb4f3d1149cfddba2

Here is what we have for the trustees (work in progress): https://directed.notion.site/Trustee-Role-and-Responsibilities-115d46fd04d94bd1a7061ca5d00f8f71

Happy to take any and all advice:)