AI Safety’s Talent Pipeline is Over-optimised for Researchers

By Christopher Clay @ 2025-08-30T11:02 (+113)

Thank you to all the wonderful people who've taken the time to share their thoughts with me. All opinions are my own: Will Aldred, Jonah Boucher, Deena Englander, Dewi Erwan, Bella Forristal, Patrick Gruban, William Gunn, Tobias Häberli, James Herbert, Adam Jones, Michael Kerrison, Schäfer Kleinert, Chris Leong, Cheryl Luo, Sobanan Narenthiran, Alicia Pollard, Will Saunter, Nate Simmons, Sam Smith, Chengcheng Tan, Simon Taylor, Ben West, Peter Wildeford, Jian Xin.

Executive Summary

There is broad consensus that research is not the most neglected career in AI Safety, but almost all entry programs are targeted at researchers. This creates a number of problems:

- People who are tail-case at research are unlikely to be tail-case in other careers.

- Researchers have an advantage in demonstrating ‘value alignment’ in hiring rounds.

- Young people trying to choose careers have a bias towards aiming for research.

Introduction

When I finished the Non-Trivial Fellowship, I was excited to go out and do good in the world. The impression I got from general EA resources out there was that I could progress through to the ‘next stage’ relatively easily[1]. Non-Trivial is a highly selective pre-uni fellowship, so I expected to be within the talent pool for the next steps. But I spent the next 6 months floundering; I thought and thought about cause prioritisation, I read lots of 80k and I applied to fellowship after fellowship without success.

The majority of AI Safety talent pipelines are optimised for selecting and producing researchers. But research is not the most neglected type of talent in AI Safety. I believe this is leading to people with research-specific talent being over-represented in the community because:

- Most supporting programs into AI Safety strongly select for research skills.

- Alumni of these research programs are much better able to demonstrate value alignment.

This is leading to a much smaller talent pool for non-research roles, including advocacy and running organisations. And hiring for those non-research roles is biased towards former researchers.

From the people I talked to, I got the impression that this is broadly agreed among leaders of AI Safety organisations[2]. But only a very small number of people are thinking about this, and they’re often doing so completely independently of each other!

My main goal with this post is to get more people outside of high-level AI Safety organisations to study the ecosystem itself. With limited competitive pressure on the system to force it to become more streamlined, I believe having more people actively helping the movement coordinate could magnify the impact of others.

If you are interested in working on any aspect of the AI Safety Pipeline, please consider getting in touch; I’m actively looking for collaborators.

We Need Non-Research AI Safety Talent

Epistemic status: Medium

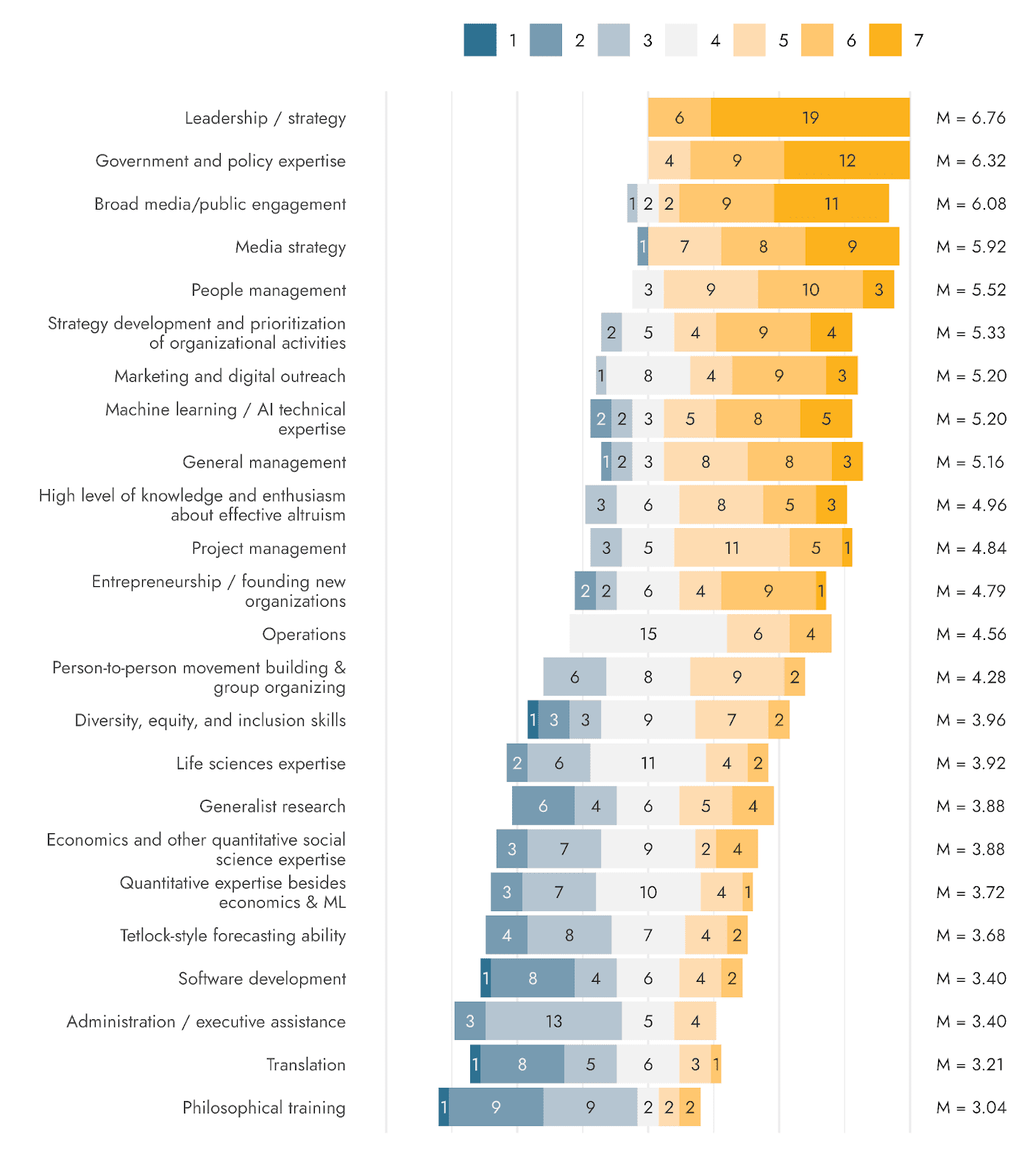

After about 30 hours of research into the area, it is clear to me that we have very little data or consensus on what’s needed in AI Safety. However, the most recent and comprehensive survey of 25 leaders in EA found that leadership, policy expertise and media engagement were the most valuable skills to recruit for. In fact, all of the top 7 most sought-after skills were related to management or communications.

We potentially need more government and policy people to engage directly with politicians, more public advocates to ensure the public is on board, more founder types to create opportunities for people to work in this field, and potentially more organisational talent to ensure that coordination between the communities happens a bit better[3].

Among the people I talked to, views on precisely which of these roles is most important to recruit for varied a lot. But there was a strong consensus that non-research roles are more important to recruit for at this time.

On a more meta level, there also appear to be gaps in generalist skills in hiring rounds and, particularly when hiring for non-research roles, difficulty in identifying which applicants are value-aligned.

I believe these gaps aren’t accidental, but a direct consequence of a research-focused pipeline.

Most Talent Pipelines are for AI Safety Research

The vast majority of training programmes within AI Safety are explicitly geared towards developing researchers. There are over 20 full-time fellowship programs out there for upskilling in AI Safety. As far as I’m aware, only 3 are for non-researchers[4].

Ironically, these non-research fellowships are the most competitive: none has an acceptance rate above 2%.

This intense focus on research-based upskilling creates a cascade of three systemic problems:

- It is a potentially unnecessary filter for people who could be top performers without research skills.

- It creates an impression that research is the main path to impact.

- It is harder for non-research people to demonstrate ‘Value Alignment’.

This Creates the Wrong Filter for Non-Research Roles

Epistemic status: Medium/Low

The focus on research presents a large, unnecessary filter for the talent pool in AI Safety.

Because research fellowships are so competitive (<5% acceptance rate), applicants must demonstrate that they are both exceptionally good at research and passionate about doing research as their career.

People who get into research fellowships are much more motivated to stay in AI Safety. This means they make up an outsized proportion of the talent pool.

Many of the people currently doing direct policy work, advocacy work and running organisations were initially selected as researchers. They had to demonstrate they were in the top 5% of researchers. This means the work they are currently doing likely draws on their secondary skill set.

I don’t think it’s unreasonable to expect a person with 94th percentile research skills, who would not make it into a research fellowship, to be 99th percentile at government or advocacy work.

The filter not only blocks people but also makes them think that research is the only way in.

This Creates a Feedback Loop of Status

Epistemic status: Medium

If the main paths you see when you enter AI Safety are research fellowships, there’s a strong incentive to work towards these ‘established markers of success’ over pursuing your own path for impact.

Note how the research fellowships get thousands of applications every year, while only a tiny fraction of that number of people are actively sending out cold emails to work in other areas.

By giving people ‘permission’ to enter the field mainly through research programmes, we’re signalling to them that research is the path of least resistance to impact. But given how competitive research is, this is unlikely to be true for most people.

Hiring managers may also have a subconscious bias towards thinking along similar lines.

Research Fellowships Create a Bias in Hiring

Epistemic status: Medium

Consider two applicants for a senior people management job:

Applicant A started out wanting to be a researcher. They did MATS before becoming an AI Safety researcher. By gaining enough research experience they were promoted to a research manager.

Applicant B always wanted to be a manager. They got an MBA from a competitive business school and worked their way into becoming a people manager in a tech company. Midway through their career they discover AI Safety and decide they want to make a career transition.

Even if Applicant B put in a significant amount of effort outside of work (e.g. by signing the 10% pledge, posting on the EA Forum or even starting an AI Safety group), in many employers’ eyes they would not look as value aligned as someone who did MATS, something which is part of a researcher’s career path anyway.

I believe that this disproportionately increases the competitive advantage of a person who did a research fellowship, even if they choose to go into a non-research role.

Conclusion

The AI Safety pipeline is over-optimised for researchers, and this is leading to gaps in the talent pool for AI Safety careers.

Most of the people I’ve talked to have agreed with me; in fact, for many of them it’s obvious. Their next question is almost always ‘what do you propose to do about this?’ rather than ‘is this actually a problem?’.

But I don’t believe this is obvious to the many people who do not look at the field from a meta level.

If you’re interested in continuing this work and would like collaborators, particularly for this Autumn or the Summer of ’26, please get in touch with me!

FAQ

- Maybe AI Safety has enough researchers, but isn’t the field still bottlenecked on extreme tail-case research talent?

- Yes, but I don’t expect more research programmes to attract people who aren’t already attracted to existing high-profile programmes (such as MATS). And whilst everyone in these programmes is in the top 5%, we’re still far from saturated with truly top talent.

- How do timelines play into this?

- I think for longer timelines, the case for large-scale restructuring is very clear. We’re missing out on potentially huge cumulative gains.

- For shorter timelines, it’s more of an open question. My intuition is that some form of shift should not take too long, and that AI Safety needs to get its act together in the coming months before transformative AI arrives.

Further Questions

I think further work could take an enormous number of directions. These are questions that came up over the course of my conversations that I think it would be valuable to answer:

- What guidance should be given to the enormous bycatch (95%) who aren’t yet suited for research or similar programs?

- Would individual mentorship instead of research programs be more cost-effective for direct policy and governance students?

- Would short, 6-hour bootcamps (for instance BlueDot's 6-hour operations bootcamp experiment) be an 80/20 solution to the signalling issues?

- Does organisational structure need to improve to accept more new hires? What role could consultants play in improving organisational structure?

Addendum: Bycatch

Epistemic status: Low. These are some personal reflections I've had whilst working on this.

With acceptance rates of <5%, the AI Safety fellowships cumulatively receive more applications every year than there are people working in AI Safety altogether. The rejected applicants have been referred to as ‘Bycatch’[5].

Perhaps selective non-research pipelines would not solve this, but it seems crazy to me that in such a neglected space, we would not be trying to incorporate as much talent as possible.

A chosen few get the privilege of working on potentially the most important problem in history.

When I raised this point, one person said to me, ‘Well there’s simply not enough space for everyone; and there’s always Earning to Give’.

When I hear the term ‘Bycatch’, I’m reminded of the famous quote often attributed to Albert Einstein:

If you judge a fish by its ability to climb a tree, it will live its whole life believing that it is stupid.

[1] For this article, I’m defining AI Safety as including both Technical AI Safety and AI Governance.

[2] A small number of people said that AI Safety still needs more extreme tail-case researchers. But in terms of numbers of researchers, very few said that this was the biggest bottleneck.

[3] Less confident about this one.

[4] Tarbell, Talos and IAPS. You could include Horizon and RAND’s programs in these buckets, but these are not explicitly focused on X-risk, nor do they identify with the AI Safety movement.

[5]

Rohin Shah @ 2025-09-02T08:12 (+27)

In fact, all of the top 7 most sought-after skills were related to management or communications.

"Leadership / strategy" and "government and policy expertise" are emphatically not management or communications. There's quite a lot of effort on building a talent pipeline for "government and policy expertise". There isn't one for "leadership / strategy" but I think that's mostly because no one knows how to do it well (broadly speaking, not just limited to EA).

If you want to view things through the lens of status (imo often a mistake), I think "leadership / strategy" is probably the highest status role in the safety community, and "government and policy expertise" is pretty high as well. I do agree that management / communications are not as high status as the chart would suggest they should be, though I suspect this is mostly due to tech folks consistently underestimating the value of these fields.

Applicant A started out wanting to be a researcher. They did MATS before becoming an AI Safety researcher. By gaining enough research experience they were promoted to a research manager.

Applicant B always wanted to be a manager. They got an MBA from a competitive business school and worked their way into becoming a people manager in a tech company. Midway through their career they discover AI Safety and decide they want to make a career transition.

If I were hiring for a manager and somehow had to choose between only these two applicants with only this information, I would choose applicant A. (Though of course the actual answer is to find better applicants and/or get more information about them.)

I can always train applicant A to be an adequate people manager (and have done so in the past). I can't train applicant B to have enough technical understanding to make good prioritization decisions.

(Relatedly, at tech companies, the people managers often have technical degrees, not MBAs.)

in many employers’ eyes they would not look as value aligned as someone who did MATS, something which is part of a researcher’s career path anyway.

I've done a lot of hiring, and I suppose I do look for "value alignment" in the sense of "are you going to have the team's mission as a priority", but in practice I have a hard time imagining how any candidate who actually was mission aligned could somehow fail to demonstrate it. My bar is not high and I care way more about other factors. (And in fact I've hired multiple people who looked less "EA-value aligned" than either applicant A or B; I can think of four off the top of my head.)

It's possible that other EA hiring cares more about this, but I'd weakly guess that this is a mostly-incorrect community-perpetuated belief.

(There is another effect which does advantage e.g. MATS -- we understand what MATS is, and what excellence at it looks like. Of the four people I thought of above, I think we plausibly would have passed over 2-3 of them in a nearby world where the person reviewing their resume didn't realize what made them stand out.)

Christopher Clay @ 2025-09-02T10:12 (+20)

Thanks for this! You've changed my mind

Kestrel🔸 @ 2025-09-04T06:48 (+1)

For what it's worth from my time as a civil servant, I agree:

You can train a technical person to have leadership skills. It's difficult, but it's doable. It involves a lot of throwing them at escalating levels of leadership opportunities (starting really really small if necessary) and making sure they get and respond to relevant feedback about their performance. This is something that can be done in the normal course of working at an organisation.

You cannot train a non-technical leader to have technical skills. 90% of the population don't have a maths A-level (or equivalent qualification), and people often find the process of learning maths inherently distressing. At least half the time the response I get when telling people I am a mathematics researcher is people bringing up their anxiety-ridden GCSE school days. There is nothing that can possibly be done to fix this from an organisation's perspective other than telling them to go and study something they hate for five years full-time in the hope they somehow stick with it, which organisations cannot support.

So from the view of a talent pipeline aiming for technical leaders in your field or community, it makes sense to recruit technical and teach leadership. There are occasionally people who jump the opposite way, and it's great to be on the lookout for them and have something they can do. But initially it's going to look very much like either the introductory outreach course in your field or the maths A-level curriculum, both of which are catered for outside of a specialised programme. And my experience is the kind of people who do MBAs don't stick with this kind of stuff because they find it hard, boring, anxiety-inducing, and not prestigious enough.

I did maths teaching for non-technical people learning technical skills at an EA org once. I teach maths at university level with great feedback, so I don't think the issue is me. They messed up the basics (as expected), but were totally unable to reflect on why they'd messed up in a way suitable for learning, and all dropped out. I'd need a serious example of such a talent pipeline actually working before I'd do such a thing again.

Chris Leong @ 2025-09-02T11:40 (+2)

in many employers’ eyes they would not look as value aligned as someone who did MATS, something which is part of a researcher’s career path anyway.

Yeah, I also found this sentence somewhat surprising. I likely care about value alignment more than you, but I expect that the main way for people to signal this is by participating in multiple activities over time rather than by engaging in any particular program. I do agree with the OP's larger point though: that it is easier for researchers to demonstrate value alignment given that there are more programs to participate in. I also acknowledge that there are circumstances where it might be valuable to be able to signal value alignment with relatively few words.

12345 @ 2025-08-30T11:44 (+8)

I strongly agree with this assessment, which is why we created a new, non-technical version of ML4Good, ML4Good governance, that is no longer targeted at wannabe researchers.

Tristan Katz @ 2025-09-02T14:26 (+1)

Oh damn, sad to see that the deadline just passed!

Chris Leong @ 2025-08-30T23:13 (+6)

Great post! I agree with your core point about a shortfall in non-researcher pipelines creating unnecessary barriers and I really appreciated how well you've articulated these issues. Excited to see any future work!

But I spent the next 6 months floundering; I thought and thought about cause prioritisation, I read lots of 80k and I applied to fellowship after fellowship without success

Were you mostly applying to the highly competitive paid fellowships? I don't exactly know what Nontrivial entails (though my impression was that it covered a few different cause areas), but I'd expect the route after that to look like the following: do an AI safety specific intro course (BlueDot or CAIS), apply to competitive paid fellowships just to check you can't get in directly, apply to the less competitive unpaid opportunities (SPAR, AI safety camp), then apply again to the more competitive paid opportunities. This path would typically include continuing their studies at university, which would also provide an additional (and typically much less competitive) path for gaining relevant research experience.

Insofar as this is what the journey more typically looks like, it's a shame that this wasn't made legible to you after Non-Trivial (then again, it is hard for programs to convey this knowledge given that there's been limited research on what a typical path into AIS looks like).

I think this is generally a reasonable path for junior folks who want to become researchers, however (as you say), we shouldn't expect those who don't want to become researchers to have to run this gauntlet.

So what should the path for non-researchers look like? I'd suggest that we probably want them to do one of the intro courses for context. After that, it likely makes sense for the default path for junior talent to be a part-time non-research project. I think SPAR and AIS Camp have offered these types of projects - but I'd love to see more programs in this space given the scalability of these kinds of programs.

Bootcamps seem like they could be valuable for more senior talent, or tail-end talent at the junior level; however, 6 hours personally feels far too short to me. I'd prefer longer bootcamps both in terms of providing more context and also in terms of completion signalling more commitment.

I expect it would likely make sense to have more paid talent development programs for non-researchers, although I don't have a good idea of what such programs should look like.

nickaraph @ 2025-09-04T13:16 (+1)

I believe SPAR is no longer less competitive

Chris Leong @ 2025-09-04T17:32 (+2)

I find that surprising.

The latest iteration has 80+ projects

But why do you say that?

Kestrel🔸 @ 2025-08-30T20:45 (+6)

pauseai.info/local-organizing

Builds political advocacy and community-building skills in AI Safety. Highly scalable.

OscarD🔸 @ 2025-09-02T11:30 (+3)

Thanks for writing! If something like this doesn't already exist, perhaps someone should start an ops fellowship where there is a centralised hiring round, and then the selected applicants are placed at different orgs to help with entry-level ops tasks. Perhaps one bottleneck here is that it is hard for research to be net negative (short of being infohazardous, or just wasting a mentor's time), but doing low-quality ops work could be pretty bad for an org. Maybe that is partly why orgs don't want to outsource ops work to junior interns? Not sure.

Christopher Clay @ 2025-09-02T13:33 (+1)

Yes, exactly, that's what I've heard: orgs are reluctant to accept inexperienced ops people. I'd love to see a way round it!

gergo @ 2025-09-05T08:37 (+2)

Great post, thanks for writing!

DavidConrad @ 2025-09-04T15:03 (+2)

Great article. There are huge numbers of people out there who have valuable skills outside of technical research, who are passionate about working in AI safety, and who don't have any idea how they can actually contribute. Talos Network and a bunch of other organisations are working on this from a governance perspective but we need more organisations dedicated to other important parts of the AI Safety value chain like non-technical founders.

Martin Percy @ 2025-09-12T10:58 (+1)

Great piece, thank you. I’m new to EA, but I have experience engaging non-technical people on AI-related issues. I see many comments on leadership, but few on broad media/public engagement. For this, much AI safety outreach still feels very dry: text-heavy webpages or complex YouTube lectures. To reach talented non-technical people in media or government, we need approaches that are more entertaining, visual, and interactive, giving people space to express their own views rather than just absorb top-down content. IMHO that is how to bring in the non-research AI safety talent this article shows is needed.