How to evaluate relative impact in high-uncertainty contexts? An update on research methodology & grantmaking of FP Climate

By jackva, mphelan, Luisa_S, violet @ 2023-05-26T17:30 (+84)

1/ Introduction

We recently doubled our full-time climate team (hi Megan!), and we are just going through another doubling (hiring a third researcher, as well as a climate communications manager).

Apart from getting a bulk rate for wedding cake, we thought this would be a good moment to update on our progress and what we have in the pipeline for the next months, both in terms of research to be released as well as grantmaking with the FP Climate Fund and beyond.

As discussed in the next section, if you are not interested in climate but in EA grantmaking research in general, we think this might still be interesting reading. Being part of Founders Pledge and the effective altruist endeavor at large, we continually try to build tools that are useful for applications outside the narrow cause area work – for example, some of the methodology work on impact multipliers has also been helpful for work in other areas, such as global catastrophic risks (here, as well as FP's Christian Ruhl's upcoming report on the nuclear risk landscape) and air pollution. Another way to put this is that we think of our climate work as one example of an effective altruist research and grantmaking program in a “high-but-not-maximal-uncertainty”[1] environment, facing and attacking similar epistemic and methodological problems as, say, work on great power war, or risk-neutral current generations work. We will come back to this throughout the piece.

This update is organized as follows: We first describe the fundamental value proposition and mission of FP Climate (Section 2). We then discuss, at a high level, the methodological principles that flow from this mission (Section 3), before making this much more concrete with the discussion of three of the furthest developed research projects putting this into action (Section 4). This is the bulk of this methodology-focused update.

We then briefly discuss grantmaking plans (Section 5) and backlog (Section 6) before concluding (Section 7).

2/ The value proposition and mission of FP Climate

As part of Founders Pledge’s research team, the fundamental goal of FP Climate is to provide donors interested in maximizing the impact of their climate giving with a convenient vehicle to do so – the Founders Pledge Climate Fund. Crucially, and this is often misunderstood, our goal is not to serve arbitrary donor preferences but rather to guide donors to the most impactful opportunities available. Taking caring about climate as given, we seek to answer the effective altruist question of what to prioritize.

We are conceiving of FP Climate as a research-based grantmaking program to find and fund the best opportunities to reduce climate damage.

We believe that at the heart of this effort has to be a credible comparative methodology to estimate relative expected impact, fit for purpose in the field of climate, where we face layers of uncertainty about society, economy, techno-economic factors, and the climate system, as well as a century-spanning global decarbonization effort. This is so because we are in a situation where causal effects and theories of change are often indirect and uncertainty is often irreducible on relevant time-frames (we discuss this more in our recent 80K Podcast, as well as on Volts and in our Changing Landscape report; throughout, links to 80K point to specific sections of the transcript).

While we have been building towards such a methodology since 2021, our recent increase in resourcing is quickly narrowing the gap between aspiration and reality. Before describing some exemplary projects, we quickly provide some grounding on the key underlying methodological principles.

3/ Methodological choices and their underlying rationale

We now provide a trimmed-down version of our basic methodological choices and their rationale, synthesized as three principles. For readers interested in more detail on the underlying methodological choices and their justification, we recommend our Changing Landscape [2] Report. Our recent 80,000 Hours Podcast provides a somewhat less extensive explanation for those who prefer audio.

Comparative

Given the “degrees of freedom” in modeling and parameter choices, we are convinced that in a high-uncertainty indirect-theory-of-change context such as climate, bottom-up cost-effectiveness analyses as well as bottom-up plausibility checks (“Does this organization follow a sensible theory of change?”, “Does it have a competent team?”, etc.) are fundamentally insufficient for claims of high impact.

Rather, when there are large irresolvable uncertainties that give rise to vast degrees of freedom in bottom-up assessments, we believe the answer has to go through consistent comparative judgments of relative expected impact. Because many of the key uncertainties (“what is the multiplier from leveraging advocacy compared to direct work?”, “what is the effectiveness of induced technological change?”, “what is the severity of carbon lock-in?”, “what is the probability of funding being additional when a field grew by 100% last year?”) apply similarly to different fundable options, we are in a situation of high uncertainty about absolute impact, where credible and meaningful statements of relative impact are still possible (Ozzie Gooen of the Quantified Uncertainty Research Institute (QURI) has recently made many of these points in a more rigorous way; the work of Nuno Sempere, also of QURI, is related in spirit).

Comprehensive

When working in neglected causes, an analysis of an intervention’s naive effectiveness or plausibility of theory of change might be a good approximation of its cost-effectiveness, and considerations of additionality might be less important or can be resolved with little analytical effort.

However, we believe this approach utterly fails in climate.

This is because of the crowdedness in climate, making the analysis of funding additionality (“how likely would this have been funded anyway?”), activity additionality (“how many other organizations would do roughly the same thing absent funding org X?”), and policy additionality (“how many of the avoided emissions would have been avoided through other policies otherwise?”) critical to the overall effort.

Roughly speaking, we believe that analyzing an intervention’s effectiveness does less than half of the work required. This is actually good news, because many of these factors are observable variables that quickly narrow the space to research. We turn to this next.

Credence-driven (aka Bayesian)

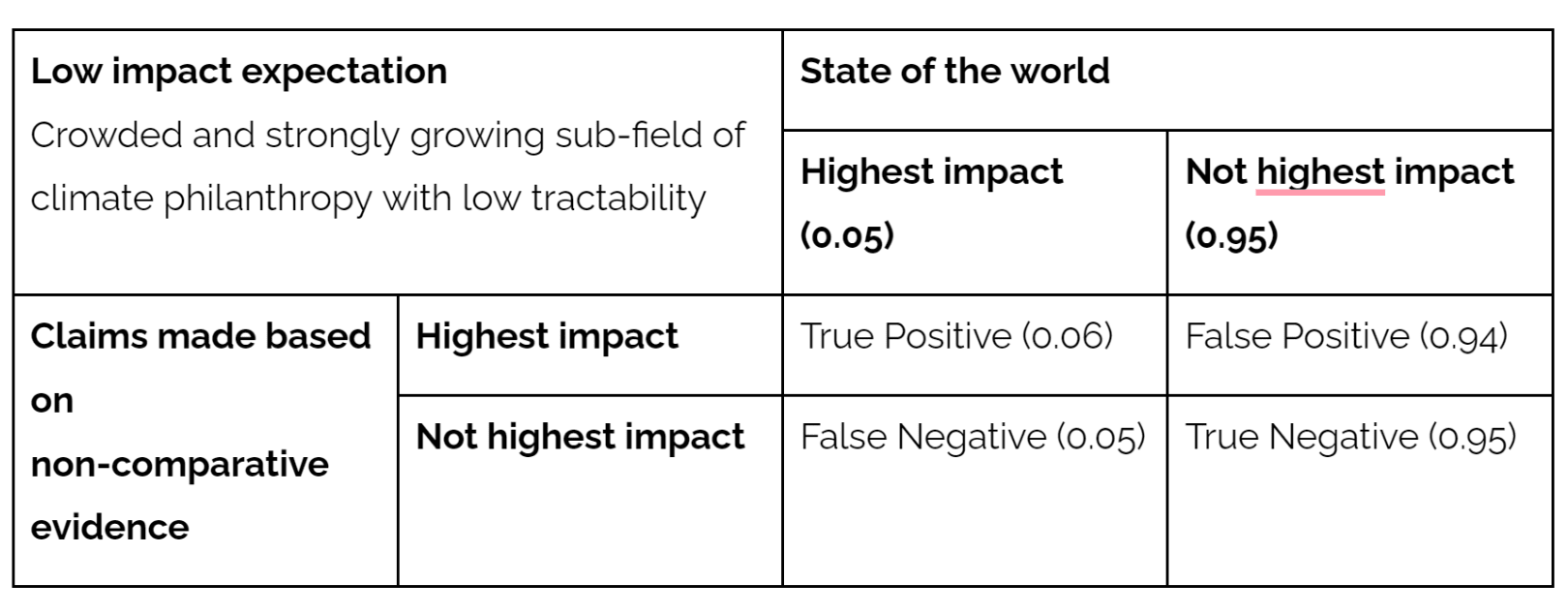

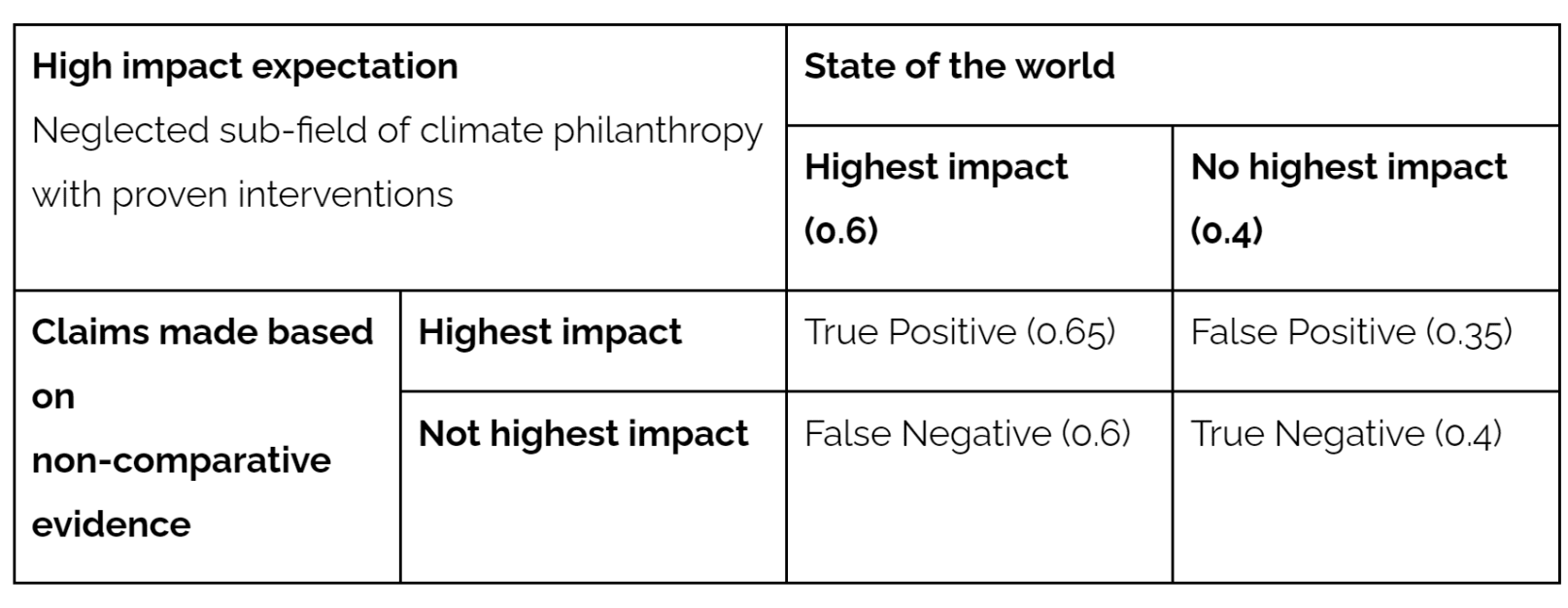

We believe that the first two sets of considerations and the kind of models they allow (also see below) usually allow assigning credences to central questions of impact, e.g. “how surprised would we be to find something that is possibly the most effective in field X?”.

For example, schematically, we believe the situation looks something like below for two cases:

Absent additional information, like the track record of the evaluator claiming highest impact or convincing evidence brought to bear that a particular opportunity “beats the priors”, we believe that the standard response to a claim of “highest impact” in many fields of climate philanthropy should be one of “false positive is by far the likeliest explanation here”. Crucially, as discussed above, we do not believe “inside-view” non-comparative evidence should update us much given the many degrees of freedom.[3]

The probabilities here are chosen for illustration and should not be taken too seriously. Indeed, we have an entire project (discussed below) dedicated to refining the calculation of such probabilities, and how much we should believe different factors of the intervention space to discriminate in expectations of impact.
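The logic of treating “false positive” as the likeliest explanation can be made explicit with Bayes' rule. The following is a minimal sketch, not part of our tooling. The function name and all three inputs (the prior share of truly top-tier opportunities, the evaluator's hit rate, and the evaluator's false-positive rate) are hypothetical and chosen purely for illustration.

```python
def posterior_top_opportunity(prior, hit_rate, false_positive_rate):
    """P(opportunity is truly top-tier | an evaluator claims it is), via Bayes' rule."""
    true_claims = prior * hit_rate                          # truly top-tier and flagged as such
    false_claims = (1.0 - prior) * false_positive_rate      # not top-tier but flagged anyway
    return true_claims / (true_claims + false_claims)


# Hypothetical inputs: the same evaluator, two fields with different priors.
crowded = posterior_top_opportunity(prior=0.01, hit_rate=0.8, false_positive_rate=0.2)
favourable = posterior_top_opportunity(prior=0.20, hit_rate=0.8, false_positive_rate=0.2)
print(f"crowded field: {crowded:.3f}, favourable field: {favourable:.3f}")
```

With identical evaluator quality, the claim deserves far less credence where the prior is low, which is why comparative information that shifts the prior matters so much.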

Another key aspect of this approach is the treatment of uncertainty, including the structure of uncertainty, its quantification, and exploration of its implications.

For example, we think the stylized fact that the relative failure of mainstream solutions is correlated with worse climate futures – a point about the structure of uncertainty – has fundamental implications for climate prioritization (motivating the importance of “hedginess” and “robustness”, discussed at length in the 80K Podcast section on risk management). What is more, while uncertainties are often large, when combined they can still allow relatively confident statements about impact differentials. Put differently, various forms of weak evidence conjunctively allow stronger relative statements.

To summarize, we believe that the only way to get to credible claims of high expected marginal impact in climate has to go through a research methodology that is explicitly comparative and -- given the crowdedness of climate -- comprehensive (taking into account all major determinants of impact, including funding, activity and policy additionality). We also believe that, once one takes into account a comprehensive set of variables, one has a lot of information for credible priors of relative impact.[4]

We will now turn to three projects we are currently pursuing that operationalize such a methodology.

4/ Projects

The three projects below are close to completion (famous last words!). They exemplify this approach and will hopefully be useful to other impact-oriented philanthropists, in climate and – for the first – in other areas as well.

Grantmaking & Research Prioritization: “Everything we are uncertain about, all at once”

When running a research-based grantmaking program, fundamentally we need to optimize across two dimensions:

- Make the best decisions to spend our money to reduce climate damage in the face of vast and often irreducible uncertainties.

- Make the best decisions to spend our research time to reduce uncertainties that are most action-relevant.

Because (i) the impact of climate philanthropy is declining over time in expectation, (ii) grantmaking is an opportunity to learn, and (iii) grantmaking increases future donations, this is a dynamic problem, not a one-shot or clearly sequential process. At any given time, we need to evaluate grants based on our best guesses at this point, while at the same time prioritizing our research to further reduce uncertainties.

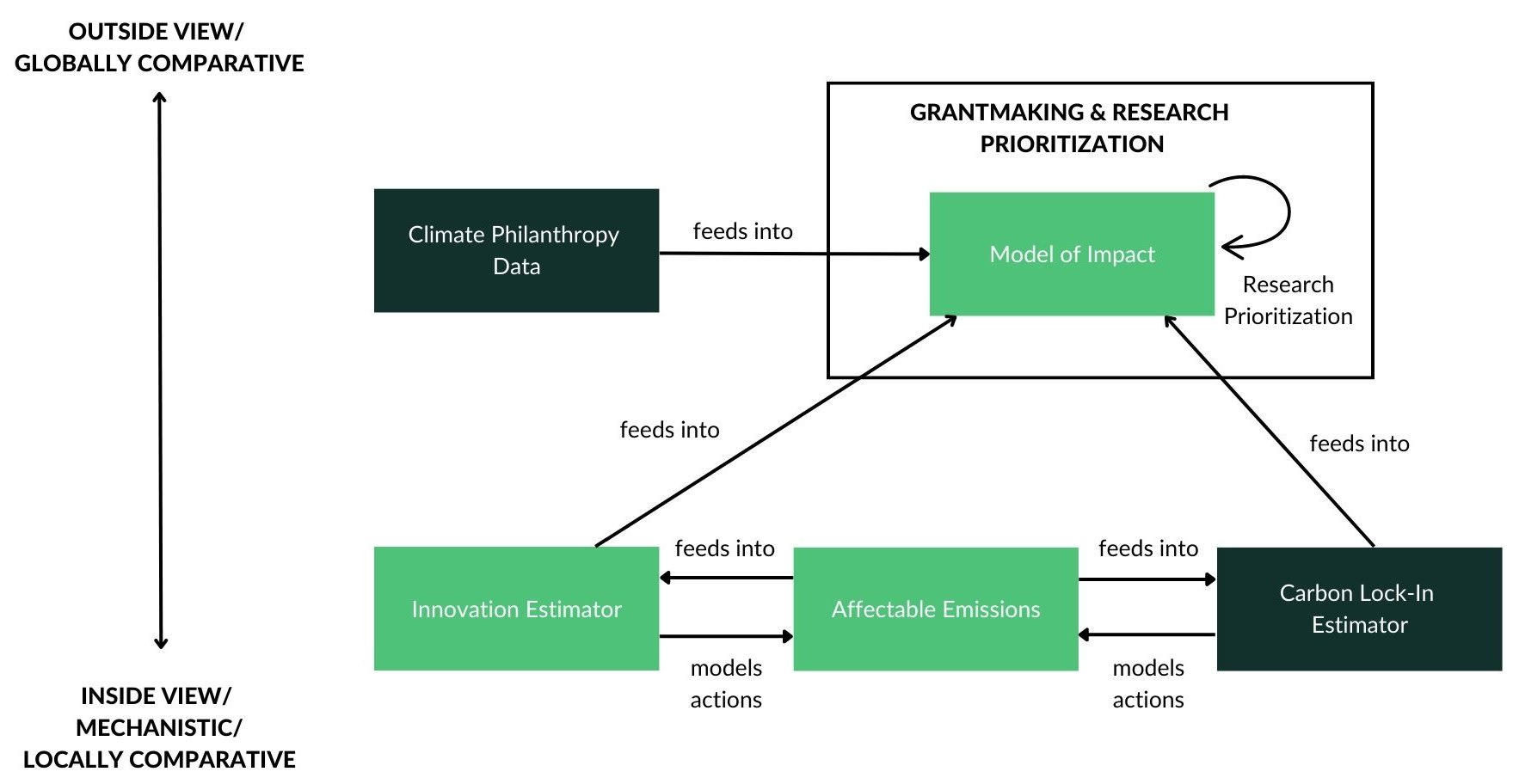

To do so more systematically, we have built and are currently populating a tool quantifying our credences about which attributes of funding opportunities give rise to expected impact and how uncertain we are about different drivers of impact.

If one thinks, as we do, that “effectiveness is a conjunction of multipliers”, this tool provides a framework to characterize the uncertainties around the multipliers and the importance of reducing particular uncertainties given a broadly defined set of fundable opportunities.

More concretely, we model how we think about the impact-differentiating qualities of attributes such as “leveraging advocacy”, “driving technological change”, “reducing carbon lock-in”, “high probability of activity additionality”, “hedging against the failure of mainstream solutions”, “engaging in Europe on innovation”, “engaging in South East Asia on carbon lock-in”, and so on.

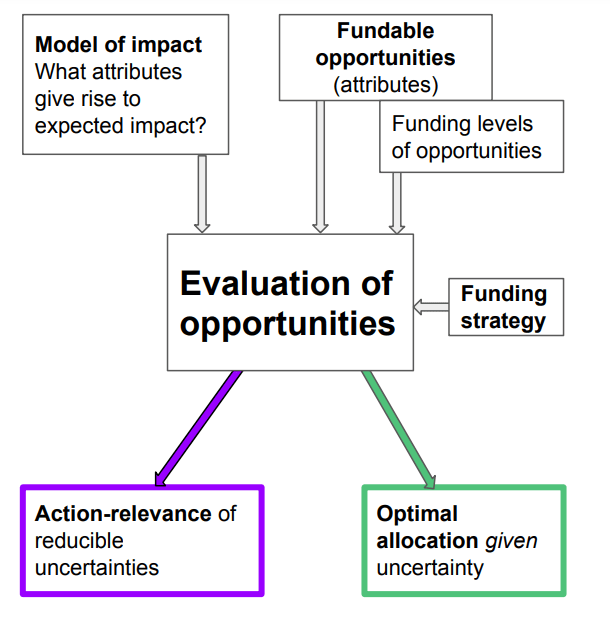

This is schematically illustrated below:

Our model, written in R, leverages Monte Carlo simulations to generate multiplier and funding opportunity attribute values, simulating thousands of possible states of the world to properly represent our uncertainty and understand its implications. (For Guesstimate users, this should sound pretty familiar – unlike in Guesstimate, we allow for correlated uncertainties, which is a key substantive feature of our understanding of the space and one of the reasons to build a custom tool.)

The figure above shows the inputs and outputs of the tool. Crucially, using the tool does not require any programming skills, as the tool takes CSV files as input (which one can easily generate by, e.g., saving a Google Sheet) for the following: 1) model of impact (e.g., impact multipliers/differentiators), 2) principally fundable opportunities (e.g., organization attributes), 3) funding strategy, and 4) funding levels of each opportunity.

This implementation allows one to specify uncertainty across organization attributes, theories of change, and other differentiators of impact to estimate the decision-relevance of reducing different uncertainties. Given these specifications, all possible grant allocations are then determined by the model and analyzed for their respective expected impact across the simulated states of the world. We provide a stylized toy example in the footnote.[5]
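To make the Monte Carlo step more tangible, here is a minimal Python sketch (not the R implementation itself). It assumes lognormal multipliers whose correlation comes from a single shared shock, and treats an opportunity's impact as the product of the multipliers attached to its attributes. The function names, the one-factor correlation structure, and all parameter values are illustrative assumptions.

```python
import math
import random


def simulate_multipliers(specs, rho, n_draws, seed=0):
    """Draw correlated lognormal multipliers, one dict per simulated state of the world.

    specs: {name: (median, sigma_log)}.
    rho: pairwise correlation of the underlying normals (one-factor assumption, 0 <= rho <= 1).
    """
    rng = random.Random(seed)
    draws = []
    for _ in range(n_draws):
        common = rng.gauss(0.0, 1.0)  # shock shared by all multipliers in this state
        state = {}
        for name, (median, sigma_log) in specs.items():
            own = rng.gauss(0.0, 1.0)  # multiplier-specific shock
            z = math.sqrt(rho) * common + math.sqrt(1.0 - rho) * own
            state[name] = median * math.exp(sigma_log * z)
        draws.append(state)
    return draws


def opportunity_impact(state, attributes):
    """Impact as a conjunction (product) of the multipliers an opportunity has."""
    impact = 1.0
    for name in attributes:
        impact *= state[name]
    return impact


# Hypothetical multipliers: (median, standard deviation on the log scale).
specs = {"advocacy": (3.0, 1.0), "tech_change": (2.0, 0.5), "lock_in": (1.5, 0.3)}
states = simulate_multipliers(specs, rho=0.5, n_draws=5000, seed=1)
impacts = [opportunity_impact(s, ["advocacy", "tech_change"]) for s in states]
mean_impact = sum(impacts) / len(impacts)
print(f"mean simulated impact: {mean_impact:.2f}")
```

The shared shock is the simplest way to express that uncertainties move together; a richer model would specify a full correlation matrix.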

As expanded upon in the next two subsections, evaluation of such opportunities thus leads to 1) optimal allocations given uncertainty as well as 2) action-relevance of reducible uncertainties.

Optimal allocation for grantmaking

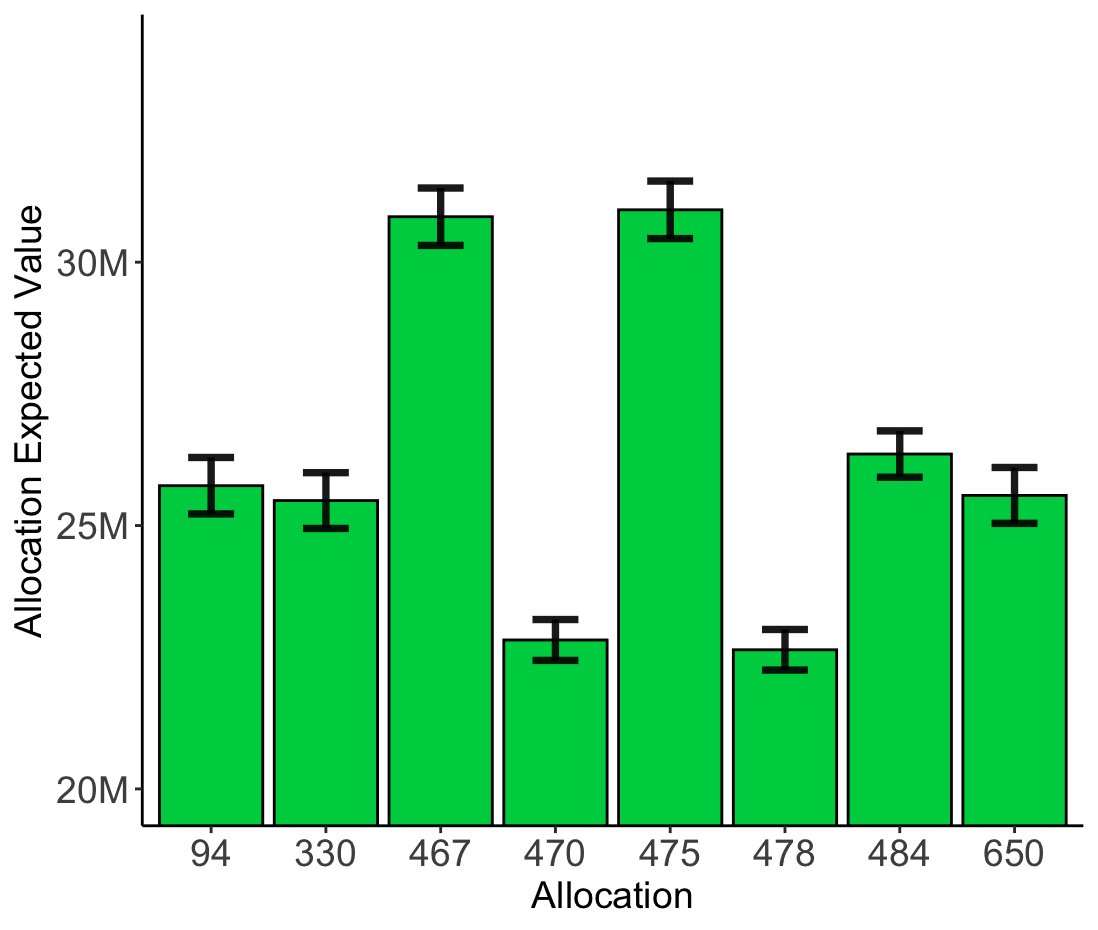

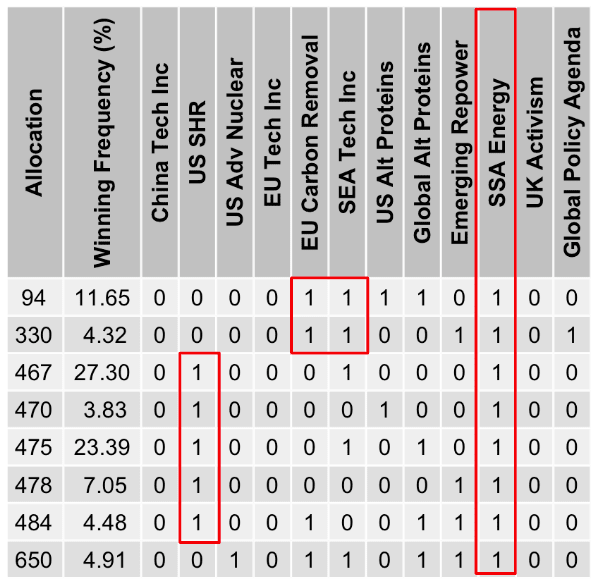

A first obvious result of this process is an ordering of all possible allocations (allocations that do not violate the overarching budget constraint) by expected impact:

If one had no time to reduce uncertainty further, then in this case choosing allocation 475 would be the best choice based on current beliefs of what gives rise to impact and what the space of fundable opportunities looks like.
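For the stylized toy example in the footnote, such an ordering can be sketched in a few lines of Python. The multiplier values (0.5 or 10 for advocacy, 2 or 4 for each theory of change) come from that example. The charity names, the unit grant cost, the budget of two grants, and the rule that an allocation's impact is the sum of its grants' impacts are assumptions added here so that the budget constraint actually binds.

```python
from itertools import combinations, product

# Toy setup: A and B are advocacy charities, C does direct work (names are hypothetical).
charities = {
    "A": {"toc": "innovation", "advocacy": True, "cost": 1},
    "B": {"toc": "policy_diffusion", "advocacy": True, "cost": 1},
    "C": {"toc": "lock_in", "advocacy": False, "cost": 1},
}
BUDGET = 2                     # assumption: we can afford at most two grants
ADVOCACY_VALUES = (0.5, 10.0)  # equally likely, as in the footnote
TOC_VALUES = (2.0, 4.0)        # equally likely for each theory of change

# Enumerate every equally likely state of the world (2 * 2^3 = 16 states).
tocs = sorted({c["toc"] for c in charities.values()})
states = [
    {"advocacy": adv, **dict(zip(tocs, toc_vals))}
    for adv in ADVOCACY_VALUES
    for toc_vals in product(TOC_VALUES, repeat=len(tocs))
]


def grant_impact(charity, state):
    """Impact of one grant: theory-of-change multiplier, times advocacy if applicable."""
    impact = state[charity["toc"]]
    if charity["advocacy"]:
        impact *= state["advocacy"]
    return impact


def feasible_allocations(charities, budget):
    """Yield every subset of charities whose total cost fits the budget."""
    names = sorted(charities)
    for size in range(len(names) + 1):
        for combo in combinations(names, size):
            if sum(charities[n]["cost"] for n in combo) <= budget:
                yield combo


def expected_impact(allocation, states):
    """Average summed grant impact across all states."""
    total = sum(grant_impact(charities[n], s) for s in states for n in allocation)
    return total / len(states)


ranking = sorted(
    ((expected_impact(a, states), a) for a in feasible_allocations(charities, BUDGET)),
    reverse=True,
)
best_value, best_allocation = ranking[0]
for value, allocation in ranking:
    print(f"{value:6.2f}  {allocation}")
```

Under these assumptions the allocation funding both advocacy charities comes out on top, matching the conclusion of the footnote.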

Of course, we do have research time to reduce uncertainties. Prioritizing this time by action-relevance, that is, focusing on uncertainties whose narrowing could change decisions, is what we turn to next.

Reducing action-relevant uncertainties

Consider the top funding allocations in our above example. While allocation 475 dominates based on average expected value, the confidence intervals of our two top allocations, 475 and 467, clearly overlap.

Conceptually this means that we believe there are ways the world could be -- in terms of what gives rise to impact and how uncertain attributes of our funding opportunities manifest -- for which the second allocation dominates. Moreover, in high uncertainty situations, there could be particular states of the world where another top-performing allocation dominates, e.g., allocation 330 in the example above, and thus we would want to conduct more research to reduce our uncertainty here.

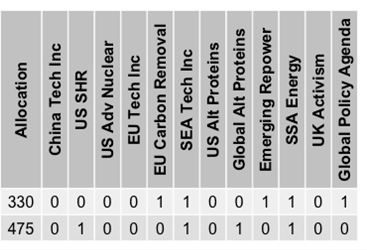

We would like to understand whether we are in such a world, meaning that the attendant uncertainties surrounding the differences between two particular allocations (e.g., 475 vs. 330) are action-relevant uncertainties, with the narrowing of uncertainty able to change our actions. The difference between the two allocations is shown in the table below.

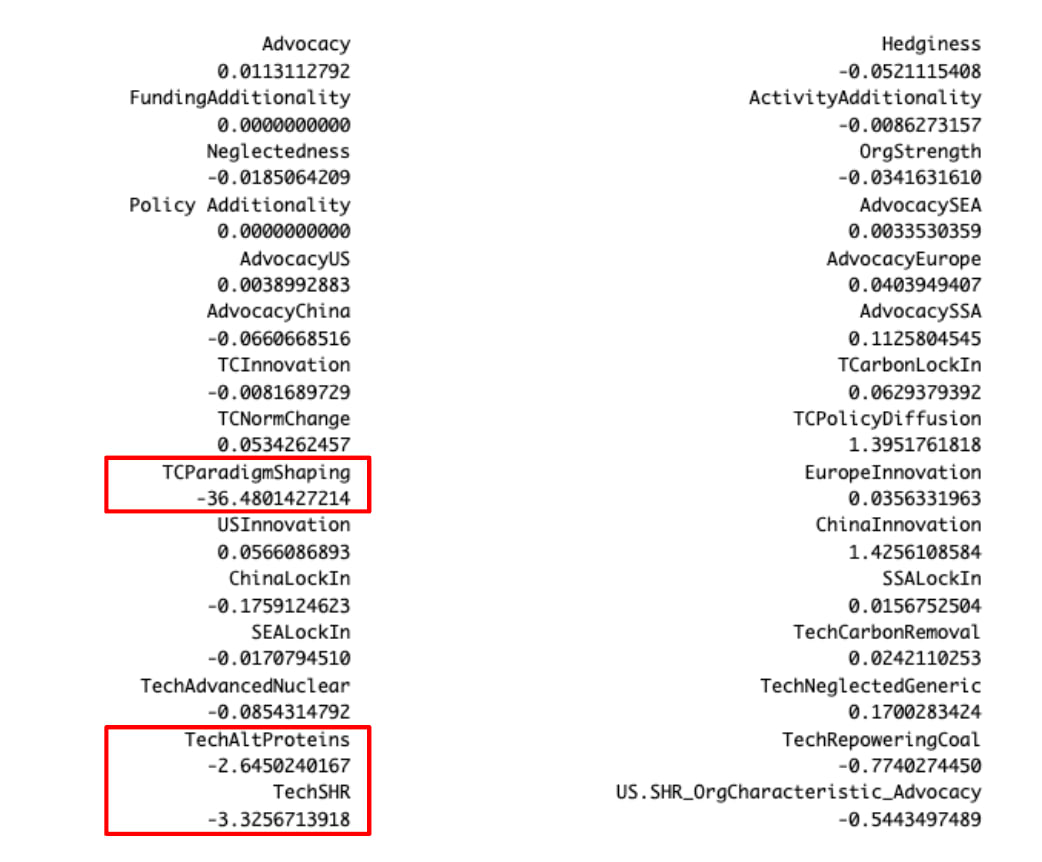

For example, it could be that these two top allocations (475 and 330) differ in five grants – US super hot rock geothermal (SHR), EU Carbon removal, Global Alt Proteins, Emerging Repower, and Global Policy Agenda, as shown in the table above. In this case, we can run a piecewise comparison, such as a logistic regression, to compare the two allocations. These action-relevant uncertainties will include those in which the underlying grant allocations differ (e.g., TC Paradigm Shaping, Tech Alt Proteins, Tech SHR), among others. Below we present standardized regression coefficients for such an analysis, which enable us to identify the relative importance of uncertainty, as shown by the red boxes. Following the example below, narrowing uncertainty on the theory of change on paradigm shaping appears ~10x more important (given the large absolute number) than narrowing uncertainty on tech-specific uncertainty (e.g. Tech Alt Proteins, Tech SHR). As such, we would first want to focus our research on reducing uncertainty around paradigm shaping, and then focus on policy diffusion and/or tech specific uncertainty. Hence, these standardized regression coefficients help determine the uncertainties most worth reducing in order to inform our grantmaking agenda.

In this example, the uncertainty around the multiplier from the “Paradigm shaping” Theory of Change turns out to be most decision-relevant because portfolio 330 involves a grant invoking this theory of change (Global Policy Agenda) and we are much more uncertain about the multiplier than for other theories of change and variables.
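The kind of analysis described above can be illustrated with a small self-contained Python sketch. It simulates states of the world, records which of two allocations wins in each, and fits a logistic regression on standardized inputs so that the coefficient magnitudes are comparable. Everything here is hypothetical: the two driver names are borrowed from the example, but the distributions, the residual noise term standing in for all other differences, and the hand-rolled gradient-descent fit are assumptions made for illustration, not our model.

```python
import math
import random


def standardize(column):
    """Rescale a list of numbers to mean 0 and standard deviation 1."""
    mean = sum(column) / len(column)
    sd = math.sqrt(sum((x - mean) ** 2 for x in column) / len(column))
    return [(x - mean) / sd for x in column]


def fit_logistic(features, outcomes, steps=300, lr=0.5):
    """Fit a logistic regression by plain gradient descent on the mean log-loss."""
    n, k = len(outcomes), len(features)
    intercept, coefs = 0.0, [0.0] * k
    for _ in range(steps):
        grad_b, grad_w = 0.0, [0.0] * k
        for i in range(n):
            score = intercept + sum(coefs[j] * features[j][i] for j in range(k))
            error = 1.0 / (1.0 + math.exp(-score)) - outcomes[i]
            grad_b += error
            for j in range(k):
                grad_w[j] += error * features[j][i]
        intercept -= lr * grad_b / n
        coefs = [coefs[j] - lr * grad_w[j] / n for j in range(k)]
    return intercept, coefs


rng = random.Random(42)
n_states = 1500
# Hypothetical log-multipliers: wide uncertainty on paradigm shaping, narrow on tech SHR.
log_paradigm = [rng.gauss(math.log(3.0), 1.0) for _ in range(n_states)]
log_tech_shr = [rng.gauss(math.log(3.0), 0.2) for _ in range(n_states)]
other = [rng.gauss(0.0, 1.0) for _ in range(n_states)]  # all remaining differences

# The first allocation wins when its paradigm-shaping grant outperforms the alternative.
first_wins = [
    1 if math.exp(p) - math.exp(t) + e > 0 else 0
    for p, t, e in zip(log_paradigm, log_tech_shr, other)
]

features = [standardize(log_paradigm), standardize(log_tech_shr)]
intercept, coefs = fit_logistic(features, first_wins)
importance = dict(zip(["paradigm_shaping", "tech_shr"], (abs(c) for c in coefs)))
print(importance)
```

The driver with the larger standardized coefficient is the one whose uncertainty most strongly determines which allocation wins, and hence the one most worth researching first.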

Of course, in a situation of high and sometimes correlated uncertainties, we do not only want to marginally compare two top-performing options.

To see why, consider that there are even cases where the 10th best allocation in expectation, for a particular state of the world, turns out to dominate all others overall (with a high value of information if we could identify correlates of being in that state of the world). More importantly, it is also possible that a top allocation, while "winning" more than any other allocation, only occupies a small portion of the overall probability space and that the five next best allocations are all very different.

For these reasons, we are also building tooling to identify the broader structure of what drives expected impact across well-performing allocations. To do so, we compare the underlying composition of the top performing allocations. This enables us to identify certain clusters driving performance in these allocations, as shown by the red boxes in the table below:

Application outside FP Climate

Zooming out, this is a fairly general framework for specifying your uncertainties about theories of impact and impactful opportunities in the world and for deriving action-relevant uncertainties, so we believe that this tool will have useful applications outside our climate work, both at FP and beyond. We think of it as a “shut up and multiply” tool for grantmaking and research prioritization in a situation of many “known unknowns” (more precisely, knowing many drivers of impact, but their effect sizes being uncertain), funding opportunities that can be meaningfully approximated as conjunctions of impact-related variables, and a decision context of allocating grantmaking and research time budgets concurrently. While this clearly does not capture all contexts, we think this has potentially wider applications in areas methodologically similar to climate (high but not maximal uncertainty, also see Section 3 above) and are keen to share our work and collaborate.

Affectable Emissions: The where, when, and how of avoidable emissions

The fundamental emissions math -- 85% of future emissions are outside the EU & US, while the capacity and willingness to drive low-carbon technological change is roughly, though somewhat less extremely, inversely distributed -- has profound implications for what kinds of actions to prioritize where. At a first approximation it means that actions in high-income countries should be focused on improving the global decarbonization value of domestic action (e.g. by moving the needle on key technologies, or improving climate finance and diplomacy) whereas in emerging economies avoiding carbon lock-in is a more relevant priority, given the potential staying power of long-lived capital and infrastructure investments committing to a high-carbon future.

While this makes sense as a first approximation, it is ultimately too crude for more granular prioritization decisions for globalized grantmakers like ourselves that, in principle, can deploy funds anywhere.

We discuss this in more detail on the 80K Podcast, but just consider the following questions:

- (1) Should we prioritize engagement in China or Southeast Asia? While China has far larger emissions, the growth of emissions is slower and emissions are primarily a result of built infrastructure compared to Southeast Asia where “earlier” infrastructure decisions are being made.

- (2) Should we prioritize engagement in Sub-Saharan Africa or Southeast Asia? While Southeast Asia has far more emissions now and more decisions being made now, emissions in Sub-Saharan Africa could be more affectable precisely because infrastructure and investments are far less locked-in.

These kinds of prioritization decisions – fundamentally about the relative affectability of different emissions streams – can be made much more consistently by integrating what we know about the relative affectability of different emissions streams (e.g. of considered vs already committed emissions, emissions streams related to assets of differential difficulty of retrofitting, in different sectors of the economy, etc.) and their expected distribution across geographies.

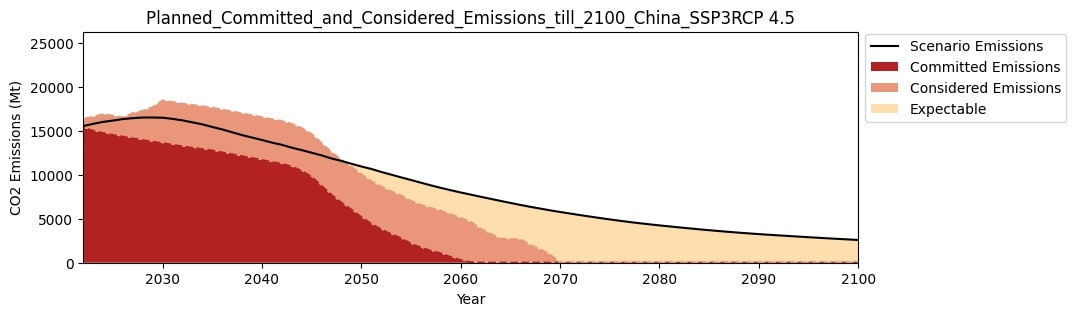

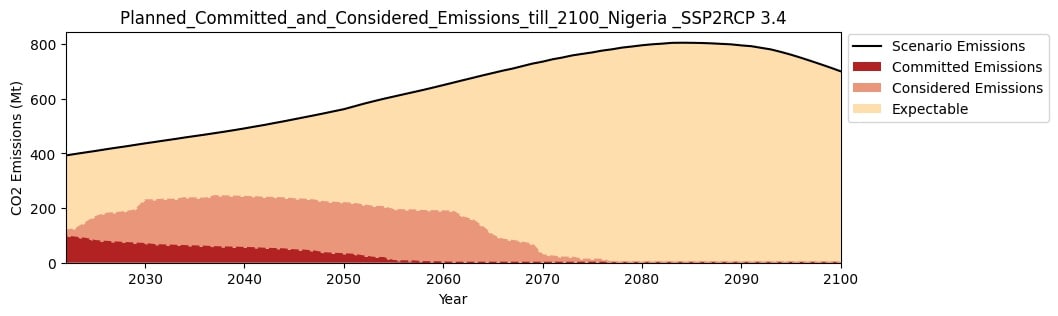

For these reasons, recent FP alumna Violet Buxton-Walsh built a data tool integrating scenario data from the IPCC SSP database, data on considered emissions from the Global Energy Monitor, and data on committed emissions from Tong et al 2019, to explore what different assumptions about affectability imply about prioritization and, more generally, set geographical prioritization decisions on a sounder and consistent footing.

The figures show considered emissions (emissions from infrastructure which do not yet exist, but are currently in the planning or construction phase, and may be completed), committed emissions (the emissions expected from existing infrastructure under the assumption of typical asset lifetimes), scenario emissions (the emissions projected by a downscaling of SSP and RCP scenarios) and expectable emissions (the difference between the scenario value and the sum of the considered and committed emissions) for China and Nigeria from now till 2100 for a respective given SSP/RCP scenario.
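The definition of expectable emissions given above is simple arithmetic, sketched here in Python. The function name and the emissions values are hypothetical placeholders, not data from the tool.

```python
def expectable_emissions(scenario, considered, committed):
    """Scenario emissions minus the sum of considered and committed emissions, per year."""
    return [s - (c_new + c_old) for s, c_new, c_old in zip(scenario, considered, committed)]


# Hypothetical annual emissions for one country (arbitrary units).
years = [2030, 2040, 2050]
scenario = [10.0, 12.0, 13.0]   # projected by the chosen SSP/RCP scenario
considered = [1.5, 1.0, 0.5]    # planned or under-construction infrastructure
committed = [7.0, 5.0, 3.0]     # existing infrastructure over typical lifetimes

expectable = expectable_emissions(scenario, considered, committed)
share = [e / s for e, s in zip(expectable, scenario)]
for year, e, sh in zip(years, expectable, share):
    print(f"{year}: expectable = {e:.1f} ({sh:.0%} of scenario emissions)")
```

A rising share over time, as in these made-up numbers, is the pattern that would indicate emissions not yet tied to existing or planned assets.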

Looking at these graphs, we see that for the SSP3 RCP 4.5 scenario, most emissions for China are expected to be committed and considered until 2050 and scenario emissions decline for the rest of the century, while the SSP2 RCP 3.4 scenario for Nigeria suggests that the share of expectable emissions -- expected based on GDP and population growth expectations but not related to existing infrastructure -- is much higher until 2050 and continues to grow for several decades.

With the data integration effort finished, we will begin to use the tool in grantmaking this summer, building a consistent set of affectability factors in the process.

A fully comparative cost-effectiveness estimator for innovation advocacy

Ever since its introduction to EA in 2016/2017, innovation advocacy has played a central role in EA recommendations on climate, featuring heavily in the work of Let’s Fund, Founders Pledge, and Giving Green, with What We Owe The Future describing clean energy innovation as a “win-win-win-win-win” (MacAskill 2022, p. 25).

Yet, until now, there has been no attempt to credibly compare different innovation opportunities, with existing recommendations primarily relying on non-comparative bottom-up analyses and plausibility checks and/or landscape-level arguments about relative neglect (the latter being comparative).

While this has been adequate for early work, we believe it is becoming increasingly problematic for a couple of reasons:

- As the overall societal climate response becomes more mature and includes support for a broader set of technologies, obvious neglect becomes rarer, requiring more granular statements – roughly moving from “no one is looking at this promising thing and we should change that” to “in proportion to its promise, this solution looks comparatively underfunded”. This move requires a more detailed characterization of technological opportunities and of what to expect from different kinds of philanthropic and subsequent policy investments.

- As more recommendations for innovation-oriented charities are being made, we need a methodology to compare their relative promise.

- As society overall ramps up the innovation response, both in terms of policy and philanthropy, it becomes less obvious that innovation advocacy is still a top opportunity at the margin. Evaluating this question requires “backing out” a credible estimate of the multiplier from innovation to compare to other promising theories of change.

Luckily, compared to almost anything else in climate, we have an empirically well-supported mechanistic literature on induced technological change (technological improvement induced by learning-by-doing, R&D, etc.) as well as, to a somewhat lesser extent, on technological diffusion.

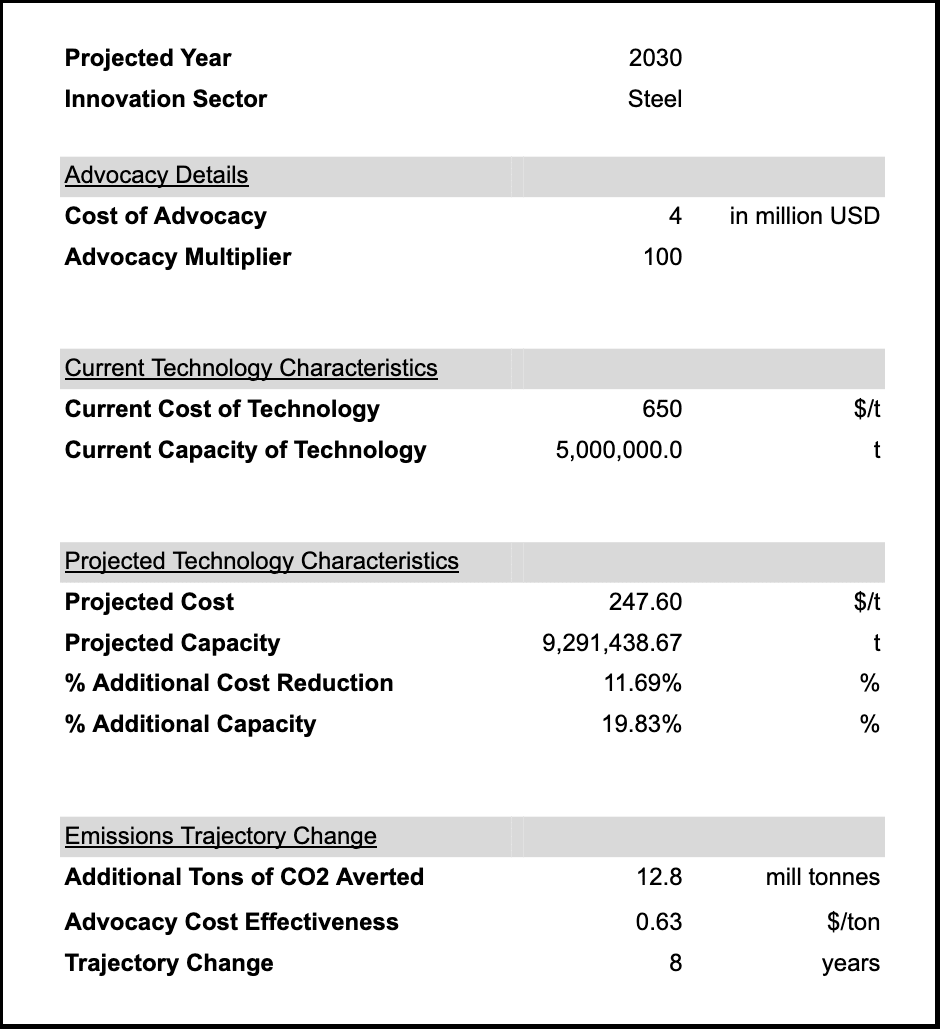

Exploiting these literatures, we are building a mechanistic model of the full causal chain from advocacy to policy change to a rich characterization of how policy-induced changes in deployment and RD&D drive technological change and, ultimately, additional emissions reductions through accelerated clean tech diffusion.

This model takes into account literature and evidence on such different factors as:

- The returns to R&D at different technological readiness levels (TRLs) (Faber 2022; Kim 2015)

- Expectations of learning rates by learning-by-doing as a function of unit lifetimes, current production status, and other factors (Faber 2022)

- Expectations of learning rates based on techno-economic characteristics, such as design complexity and other dimensions (Malhotra and Schmidt 2020)

- Different technology diffusion responses to cost reductions based on comparative case studies from technologies with varied characteristics such as wind, solar, concentrated PV, etc. (IPCC 2022)
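To give a flavor of the learning-by-doing link in such a causal chain, the sketch below uses the standard one-factor experience curve, in which cost falls by a fixed fraction (the learning rate) with every doubling of cumulative deployment. This is a textbook simplification rather than the model described here, which draws on many more factors. The function name and all numbers are hypothetical.

```python
import math


def cost_after_deployment(initial_cost, initial_capacity, new_capacity, learning_rate):
    """One-factor experience curve: cost drops by `learning_rate` per capacity doubling."""
    doublings = math.log2(new_capacity / initial_capacity)
    return initial_cost * (1.0 - learning_rate) ** doublings


# Hypothetical technology: cost of 100 per unit at 1 unit of cumulative capacity.
baseline = cost_after_deployment(100.0, 1.0, 4.0, learning_rate=0.2)     # two doublings
with_policy = cost_after_deployment(100.0, 1.0, 8.0, learning_rate=0.2)  # three doublings
print(f"baseline cost: {baseline:.1f}, cost with policy-induced deployment: {with_policy:.1f}")
```

The gap between the two costs is the policy-induced cost reduction that a diffusion model would then translate into additional deployment and avoided emissions.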

We have currently specified a point-estimate version of this model with test cases of super-hot-rock geothermal, small modular reactors, and alternative proteins, and are in the process of building out the full uncertainty-modeling version in R.

We expect to use this tool later in the year to assist us in grantmaking and release a public version for use by other philanthropists as well.

The coming singularity

While these are, as of now, distinct projects with somewhat different proximate applications, the mid-term vision and roadmap is an integration of these tools into a consistent comparative analysis framework – a “Climate Philanthropy Prioritizer”.

The initial contours of how such a set of integrated tools might look are visualized schematically below, showing how the different tools relate to each other. Tools discussed in this post are shown in green; the remaining tools we are currently working on are listed as well, and additional components will undoubtedly be needed.

Note that, while all tools are explicitly comparative, they are so at different levels of resolution from more globally (not in the geographical, but in the domain sense) comparative tools generating priors and directing attention towards more locally comparative tools able to add more granularity, e.g. when choosing between engagement in different regions or across different technologies.

5/ Grantmaking

While intellectually exciting, this is not an academic exercise.

In the next months, we are planning to allocate between USD 15m and USD 30m to the highest impact opportunities we can find [7], both directly from the FP Climate Fund (to which you can contribute here), but also from advised money from Founders Pledge members and by advising regranting organizations within the EA community and beyond.

We believe that having spent six months mostly focused on building comparative tools, turning a previously aspirational and qualitative methodology into something more quantitative and concrete, will prove invaluable in quickly and accurately evaluating a larger set of grants. We will likely reach out here with a request for ideas at a later time.

An update on our grantmaking till about the end of 2022 can be found here.

6/ Backlog

As discussed recently elsewhere, we have a backlog in publishing grantmaking evaluations. In times of severe resource constraints, we prioritized our core functions of doing grantmaking and landscaping research to take action and explain our approach (e.g. here, here, and here) rather than specific decisions (though, as also clear from the linked discussion, we do have detailed reasons for all of our decisions). Our current hiring round for a communications specialist is, in part, also motivated by ensuring we have adequate resourcing to shorten the timeline between conducting research and making decisions and being able to communicate them and their rationale fully.

7/ Conclusion

While this has been a somewhat lengthy and meta update, we think almost all of it can fundamentally be summarized as the expansion on one fundamental idea: When seeking to make the best funding decisions in a high-uncertainty indirect-theory-of-change environment, we need a credible comparative methodology that makes the most of different forms of weak and uncertain evidence to achieve credible statements on relative expected impact.

Based on this conviction, as we are scaling our climate research team, we are building an increasing number of quantitative comparative tools operationalizing this vision to support our evolving grantmaking as well as the grantmaking of allied philanthropists in the effective giving space.

As discussed, one approximate but broadly correct way to think about the interrelation of those tools is to think about them on a continuum from globally comparative outside view tools to generate credible priors of relative expected impact to tools that can lead to more granular comparative statements at a lower level of abstraction (at the cost of narrower applicability).

We believe there is a common core to making sense of the "methodological wilderness" that is impact-focused charity evaluation methodology and grantmaking in non-RCT contexts, and we are looking forward to continuing to learn from and exchange with other researchers tackling similar problems, in climate and beyond.

Acknowledgments

Special thanks to SoGive Grants which enabled a significant portion of this work. Thanks also to the entire research team at Founders Pledge for discussions clarifying our thinking and its presentation.

About Founders Pledge

Founders Pledge is a community of over 1,700 tech entrepreneurs finding and funding solutions to the world’s most pressing problems. Through cutting-edge research, world-class advice, and end-to-end giving infrastructure, we empower members to maximize their philanthropic impact by pledging a meaningful portion of their proceeds to charitable causes. Since 2015, our members have pledged over $9 billion and donated more than $800 million globally. As a nonprofit, we are grateful to be community-supported. Together, we are committed to doing immense good. founderspledge.com

- ^

If you think about this on a spectrum where “direct service delivery, RCT evidence global health and development work” a la GiveWell is on one end and “we want to reduce AI risk but are unsure about the sign of many interventions” is on the other, then we think climate inhabits a middling space, one where the most effective interventions will not be RCT-able, but where we can still say pretty meaningful things about the directionality of most actions and their relative promisingness.

- ^

While outdated on some specifics, the report documents our “methodological turn” away from higher credence in fundamentally non-comparative methods (such as “bottom-up cost effectiveness analyses in a high model and parameter uncertainty context”) and our approach to comparative methodology in an environment of high, but largely independent, uncertainties.

- ^

If this sounds like an extreme position, it might make sense to illustrate this point with an analogy from global health and development (GHD), generally seen as the field in EA most strongly driven by strong intervention-specific evidence. If an organization were to claim “we found evidence that this homelessness charity in San Francisco beats the Against Malaria Foundation (AMF) in terms of reducing current human suffering”, we would rightly start out very skeptical because the “impact multiplier” of focusing on the global poor vs the local poor is generally believed to be at least 100x. In particular, we would be much more skeptical of such a claim than a claim such as “there is a new malaria vaccine charity that beats AMF”.

Note also that, even in GHD, generally believed to be a field driven by RCT-style evidence and “inside-view” cost-effectiveness analyses, the impact-differentiating move from high-income countries to the global poor (100x) does ~10x more work than the whole multiplier from GiveDirectly to AMF (~10x), i.e. most variance in impact is independent of in-depth inside-view evidence of particular charities.

- ^

That doesn’t mean that one should take action based on those priors, but rather that those priors should be a guide in determining where to go deeper.

- ^

For example, if we have 3 different charities to which we can (or cannot) give money, we have a total of 8 (2^3) possible grant allocations, if all combinations satisfy the budget constraint. Now, if each of these 3 charities has two attributes, one being theory of change (e.g., innovation vs. policy diffusion vs. carbon lock-in), the other being advocacy (e.g. 2 charities being advocacy charities and the third doing direct work) and each of those attributes having two ways to lead to impact (e.g. expressed as a binary variable), then we have 4^2 states of the world, essentially providing a mapping function from funding opportunity attributes to expected impact of particular grants and, subsequently, grant allocations.

If it were now the case that the multiplier from advocacy was either 0.5 or 10 (with equal probability), and that the theories of change each had a multiplier of either 2 or 4, then we would find that the allocations including only advocacy charities would dominate (grantmaking prioritization). We would also find that narrowing the uncertainty on advocacy (is it 0.5 or 10?) would be the most decision-relevant uncertainty to reduce, both because it could be true that leveraging advocacy is actually diminishing impact and because that variance is much larger and more decision-relevant than the uncertainty surrounding theories of change.
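The toy example above can be sketched in code. This is a minimal illustration under assumptions that are not in the original: the charity names A, B and C are hypothetical, multipliers combine multiplicatively, the direct-work charity has an advocacy multiplier of 1, each theory of change draws its multiplier (2 or 4) independently with equal probability, and a fixed budget is split equally across the funded charities. It is one possible reading of the example, not the actual FP Climate tool.

```python
from itertools import product

# Hypothetical toy data: (name, theory of change, is_advocacy).
CHARITIES = (
    ("A", "innovation", True),
    ("B", "policy diffusion", True),
    ("C", "carbon lock-in", False),
)
ADVOCACY_MULTIPLIERS = (0.5, 10.0)  # equally likely, as in the text
TOC_MULTIPLIERS = (2.0, 4.0)        # assumed equally likely and independent


def all_states():
    """Every state of the world: an advocacy multiplier plus one multiplier per theory of change."""
    tocs = [toc for _, toc, _ in CHARITIES]
    return [
        (adv, dict(zip(tocs, values)))
        for adv in ADVOCACY_MULTIPLIERS
        for values in product(TOC_MULTIPLIERS, repeat=len(tocs))
    ]


def all_allocations():
    """Every fund / do-not-fund combination, including funding nothing."""
    return [
        tuple(c for c, funded in zip(CHARITIES, mask) if funded)
        for mask in product((False, True), repeat=len(CHARITIES))
    ]


def impact(allocation, state):
    """Impact per dollar, assuming the budget is split equally across funded charities."""
    if not allocation:
        return 0.0
    adv, toc = state
    per_charity = [toc[t] * (adv if is_adv else 1.0) for _, t, is_adv in allocation]
    return sum(per_charity) / len(per_charity)


def expected_impact(allocation, states):
    """Average impact over equally likely states."""
    return sum(impact(allocation, s) for s in states) / len(states)


def best_allocations(states):
    """Names of the allocations with the highest expected impact over the given states."""
    allocations = all_allocations()
    scores = [expected_impact(a, states) for a in allocations]
    top = max(scores)
    return [
        tuple(name for name, _, _ in a)
        for a, s in zip(allocations, scores)
        if abs(s - top) < 1e-9
    ]


if __name__ == "__main__":
    states = all_states()
    print("best overall:", best_allocations(states))
    for adv in ADVOCACY_MULTIPLIERS:
        subset = [s for s in states if s[0] == adv]
        print(f"best if advocacy multiplier = {adv}:", best_allocations(subset))
```

Under these assumptions, the advocacy-only allocations have an expected impact of 15.75 per dollar against 3.0 for the direct-work charity alone, and learning that the advocacy multiplier is 0.5 flips the best choice to direct work.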

This tool is about realistic cases with 20+ funding opportunities, 20+ attributes, potential correlations between variables, and representation of uncertainty as continuous distributions, where it is neither obvious which allocations dominate nor what uncertainties are most worth reducing.

- ^

Note that this is a contingent result for the particular piecewise regression example. Here we present the action-relevant uncertainties for impact multiplier differentiators only; however, there could be instances in which uncertainties about organization-specific attributes are important to reduce as well.

- ^

Current commitments are close to the lower range, but it is still very early in the philanthropic year, with giving heavily concentrated in the second half of the year.

Vasco Grilo @ 2023-05-28T07:46 (+6)

Great work! I really appreciate how FP Climate's work is relevant to the broader project of effective altruism, and decision-making under uncertainty. Heuristics like FP Climate's impact multipliers can be modelled, and I am glad you are working towards that.

I wish Open Philanthropy moved towards your approach, at least in the context of global health and wellbeing where there is less uncertainty. Open Philanthropy has a much larger team and moves much more money than FP, so I am surprised by the low level of transparency and the lack of rigorous comparative approaches in its grantmaking.

jackva @ 2023-05-28T13:04 (+10)

Thanks, Vasco!

I think it is hard to judge what exactly OP is doing given that they do not publish everything and probably (and understandably!) also have a significant backlog.

But, directionally, I strongly agree that the lack of comparative methodology in EA is a big problem and I am currently writing a post on this.

I think, to a first approximation, I perceive the situation as follows:

Top-level / first encountering a cause:

- ITN analysis, inherently comparative and useful when approaching an issue from ignorance (the impact-differentiating features of ITN are very general and make sense as approximations when not knowing much), but often applied in a way below potential (e.g. non-comparable data, no clear formalization of tractability)

Level above specific CEAs:

- In GHD, stuff like common discounts for generalizability

- In longtermism, maybe some templates or common criteria

A large hole in the middle:

It is my impression that there is a fairly large space of largely unexplored "mid-level" methodology and comparative concepts that could much improve relative impact estimates across several domains. These could be within a cause (which is what we are trying to do for climate), but also portable and/or across causes, e.g. stuff like:

- breaking down "neglectedness" into constituent elements such as low-hanging fruits already picked, probability of funding additionality, probability of activity additionality, with different data (or data aggregations) available for either allowing for more precise estimates relatively cheaply, improving on first-cut neglectedness estimates.

- what is the multiplier from advocacy and how does this depend on the ratio of philanthropic to societal effort for a problem, the kind of problem (how technical? etc.), and location?

- how do we measure organizational strength and how important is it compared to other factors?

- what returns should we expect from engaging in different regions and what does this depend on?

- the value of geopolitical stability as a risk-reducer for many direct risks, etc.

- what should we assume about the steerability of technological trajectories, both when we want to accelerate them and when we want to do the opposite?

To me, these questions seem underexplored -- my current hunch is that this is because once ITN is done comparison breaks down into cause-specific siloes and evaluating things for whether they meet a bar, not encouraging overall-comparative-methodology-and-estimate building.

Would be curious for thoughts on whether that seems right.

Vasco Grilo @ 2023-05-28T15:45 (+4)

Thanks for sharing your thoughts! They seem right to me. A typical argument against "overall-comparative-methodology-and-estimate building" is that the opportunity cost is high, but it seems worth it on the margin given the large sums of money being granted. However, grantmakers have disagreed with this at least implicitly, in the sense that the estimation infrastructure is apparently not super developed.

jackva @ 2023-05-29T12:51 (+4)

It is not, but I would not see this as revealed preference.

I think it's easy for there to be a relative underinvestment in comparative methodology when most grantmakers and charity evaluators are specialized on specific causes or, at least, work sequentially through different cause-specific questions.

Denkenberger @ 2023-06-01T01:36 (+2)

Nice post and 80k podcast! I think this framework makes sense for the slow, extreme warming scenarios. And I could see that since those will probably only happen 100 or more years in the future, investing in resilience would probably not be the top priority. However, I think there are other climate-related scenarios that could impact the far future and occur much sooner. These include the breakdown of the Atlantic Meridional Overturning Circulation (AMOC) or coincident extreme weather (floods, droughts, heat waves) on multiple continents causing a multiple breadbasket failure (MBBF). Have you considered resilience to these scenarios?

jackva @ 2023-06-06T10:44 (+2)

Hi Dave, thanks!

I am not sure I fully understand your comment as I don't think this framework makes any statement on the relative weighting of short-term v long-term mitigation.

Indeed, we will integrate such considerations in our grantmaking/research prioritization tool and see whether the likely effects are (a) substantively significant enough to change grantmaking decisions and (b) whether (a) is sufficiently uncertain to warrant more research.

Denkenberger @ 2023-06-06T22:28 (+2)

Hi Johannes!

Your framework appears to focus on emissions, which makes sense for extreme GHG warming scenarios. However, for addressing something that could happen in the next 10 years, like the coincident extreme weather, I think it would make more sense to focus on resilience. Are you saying that you will do more research on resilience/consider grants on resilience?

By the way, are your donors getting shorter AGI timelines as many EAs are? That would be a reason to focus more on climate catastrophes that could happen in the next couple decades. And is anyone concerned about AGI-induced climate change? AGI may not want to intentionally destroy humans if it has some kindness, but just by scaling up very rapidly, there would be a lot of heat production (especially if it goes to nuclear power or space solar power).

jackva @ 2023-06-25T15:20 (+2)

Hi Dave!

Sorry for the delay.

1. We focus on minimizing expected damage and that could, in principle, include interventions focused on resilience. Whether we spend more time on researching this depends principally on the likelihood of this changing conclusions / our grantmaking.

2. I think the recent review papers on tipping points (e.g. recently discussed here) agree that there isn't really a scenario of super-abrupt dramatic change; most tipping elements take decades to materialize. As such, I don't think it is super-likely that we get civilizationally overwhelmed by these events, so the value of resilience to these scenarios needs to be probability-weighted by these scenarios being fairly unlikely. (Aside: I am very on board with ALLFED's mission, but I think it makes more sense to be motivated from the perspective of nuclear winter risk than from climate risks to food security that seem fairly remote on a civilizational scale).

3. I think the proximate impact of donors getting shorter AI timelines should be for those donors to engage in reducing AI risk, not changing within-climate-prioritization. I think it is quite unclear what the climate implications of rapid AGI would be (could be those you mention, but also could be rapid progress in clean tech etc.). I do agree that it is a mild update towards shorter-term actions (e.g. if you think there is a 10% chance of AGI by 2032, then this somewhat decreases the value of climate actions that would have most of their effects only after 2032) but it does not seem dramatic.