My updates after FTX

By Benjamin_Todd @ 2023-03-31T19:22 (+272)

Here are some thoughts on what I’ve learned from what happened with FTX, and (to a lesser degree) other events of the last 6 months.

I can’t give all my reasoning, and have focused on my bottom lines. Bear in mind that updates are relative to my starting point (you could update oppositely if you started in a different place).

In the second half, I list some updates I haven’t made.

I’ve tried to make updates about things that could actually have reduced the chance of something this bad happening, where a single data point can be significant, or where one update entails another.

For the implications, I’ve focused on those in my own work, rather than speculate about the EA community or adjacent communities as a whole (though I’ve done some of that). I wrote most of this doc in Jan.

I’m only speaking for myself, not 80,000 Hours or Effective Ventures Foundation (UK) or Effective Ventures Foundation USA Inc.

The updates are roughly in logical order (earlier updates entail the later ones) with some weighting by importance / confidence / size of update. I’m sorry it’s become so long – the key takeaways are in bold.

I still feel unsure about how best to frame some of these issues, and how important different parts are. This is a snapshot of my thinking and it’s likely to change over the next year.

Big picture, I do think significant updates and changes are warranted. Several people we thought deeply shared our values have been charged with conducting one of the biggest financial frauds in history (at least one of whom has pled guilty).

The first section makes for demoralising reading, so it’s worth saying up front that I still think the core ideas of EA make sense, and I plan to keep practising them in my own life.

I hope people keep working on building effective altruism, and in particular, now is probably a moment of unusual malleability to improve its culture, so let’s make the most of it.

List of updates

1. I should take more seriously the idea that EA, despite having many worthwhile ideas, may attract some dangerous people, i.e. people who are able to do ambitious things but are reckless / self-deluded / deceptive / norm-breaking, and so can have a lot of negative impact. This has long been a theoretical worry, but it wasn’t clearly an empirical issue – it seemed like potential bad actors either hadn’t joined or had been spotted and constrained. Now it seems clearly true. I think we need to act as if the base rate is >1%. (Though if there’s a strong enough reaction to FTX, it’s possible the fraction will be lower going forward than it was before.)

2. Due to this, I should act on the assumption that EAs aren’t more trustworthy than average. Previously I acted as if they were. I now think the typical EA probably is more trustworthy than average – EA attracts some very kind and high-integrity people – but it also attracts plenty of people with normal amounts of pride, self-delusion and self-interest, and there’s a significant minority who seem more likely than average to defect or be deceptive. The existence of this minority, combined with how hard it is to tell who is who, means you need to assume by default that someone might be untrustworthy (even if “they’re an EA”). This doesn’t mean distrusting everyone by default – I still think it’s best to default to being cooperative – but it’s vital to have checks for dangerous actors and ways to exclude them, especially from influential positions (i.e. trust, but verify).

3. EAs are also less competent than I thought, and have worse judgement of character and competence than I thought. I’d taken financial success as evidence of competence; I no longer think this. I also wouldn’t have predicted that we’d be deceived so thoroughly, so I negatively update on our ability to judge character and competence, and especially on the idea that it’s unusually good. My working assumption now is that we’re about average. This update applies most to the people who knew SBF best, though I don’t expect many others would have done much better in their places. (More speculatively, it seems plausible to me that many EAs have worse judgement of character than average, because e.g. they project their good intentions onto others.)

4. Personally I think I also got biased by the halo effect, wanting EA to be “winning”, and my prior belief that EAs were unusually competent. It seems like others found it hard to criticise FTX because it’s hard to criticise your in-group and social group, especially if it might have implications for your career. A rule of thumb going forward: if someone who might feel ‘on your side’ appears to be doing unusually well, try to increase scrutiny rather than reduce it.

5. I’m more concerned about people on the far end of the “aggressive optimizing” style i.e. something like people who are (over)confident in a narrow inside view, and willing to act ambitiously on it, even if it breaks important norms. In contrast, I feel more into moderation of action, pluralism of views, humility, prudence and respect for cooperative norms. (I’m still unsure how to best frame all this.)

In particular, it’s important to bear in mind an “aggressive optimizing” personality combines badly with a radical worldview, because the worldview could encourage it or help rationalise it (which could include certain types of effective altruism, longtermism, rationalism, and utilitarianism among many other widespread views in society, like radical socialism, deep ecology, protest movements that don’t rule out violence etc.). It also combines badly with any tendency for self-delusion.

I think where to be on the spectrum from contrarian action to moderation is a really difficult question, since some degree of unusual action is necessary if you’re serious about helping others. But it seemed like SBF was fairly extreme on this cluster, as are others who have engaged in worrying behaviour on a smaller scale. So my increased concern about dangerous people makes me more concerned about attracting or encouraging people with this style.

I’m not sure we want the median person in the community to moderate more. The key thing is to avoid attracting and supporting people on the far end of the spectrum, whom I think current EA probably does attract: EA is about optimization and rethinking ethics, so it wouldn’t be surprising if it attracted some extreme optimizers who are willing to question regular norms. I think this entails being more concerned about broadcasting radical or naively maximising views (e.g. expressing more humility in writing even if it will reach fewer people), and having a culture that’s more hostile to this style. For example, I think we should be less welcoming to proudly self-identified & gung-ho utilitarians, since they’re more likely to have these traits.

I feel very tempted to personally take a step towards moderation in my own worldview. This would make me a bit less confident in my most contrarian positions, including some EA ones.

(Note there is an epistemic component to this cluster (how much confidence someone has in their inside view), but the more important part is the willingness to act on these views, even if it violates other important norms. I’m keen for people to develop and experiment with their own inside views. But it’s quite possible to have radical inside views while being cautious in your actions.)

6. All the above makes me tempted to negatively update on the community’s epistemics across the board. You could reason that if you previously thought EA epistemics were unusually good, you should have expected us to have a better-than-typical chance of spotting this; but we actually ended up as deceived as professional investors, the media, the crypto community etc., so our epistemics were approximately no better than those of other groups. This could imply negatively updating on all of EA’s contrarian positions, in proportion to how different they are from conventional wisdom. On the other hand, judgement of character & financial competence are pretty different from judgement about e.g. cause selection. It doesn’t make sense to me to, say, seriously downweight a pandemic researcher’s warnings about the chance of a pandemic because they didn’t spot that their partner was cheating on them. So, overall I don’t make a big update on the community’s judgement and epistemics when it comes to core EA ideas, though I feel pretty unsure about it.

What seems clearer is that we should be skeptical about the idea that EAs have better judgement about anything that’s not a key area of EA or personal expertise, and should use conventional wisdom, expert views or base rates for those (e.g. how to run an org; likelihood of making money; likelihood of fraud). A rule of thumb to keep in mind: “don’t defer to someone solely because they’re an EA.” Look for specific expertise or evaluate the arguments directly.

The previous four points together make me less confident in the current community’s ability to do the “practical project” of effective altruism, especially if you think it requires unusually high levels of operational competence, general judgement, wise action or internal trust. That could suggest focusing more on the intellectual project and hoping others pick up the ideas – I haven’t updated much on our ability to do the intellectual project of EA, and think a lot of progress can be made by applying ‘normal’ epistemics to neglected questions. Within the practical project, it would suggest focusing on areas with better feedback loops and lower downsides in order to build competence, and going slower in the meantime.

7. If you have concerns about someone, don’t expect the presence of people around them whom you’re not concerned about to prevent dangerous action, especially if that person seems unusually strong-willed.

8. Governance seems more important. Since there are dangerous people around and we’re worse at judging who is who, we need better governance to prevent bad stuff. (And if someone with a lot of power is acting without governance, you should think there’s a non-negligible chance of wrongdoing at some point, even if you agree with them on object-level questions. This could also suggest EA orgs shouldn’t accept donations from organisations without sufficient governance.) This doesn’t need to be a ton of bureaucracy, which could slow down a lot of projects, but it does mean things like having basic accounting (to be clear most orgs have this already), and for larger organisations, striving to have a board who actually try to evaluate the CEO (appointing more junior people who have more headspace if that’s what’s needed). This is not easy, due to the reasons here, though overall I’d like to see more.

I feel more into creating better ways to collect anonymous concerns and to protect whistleblowers, though it’s worth noting that most EA orgs already have whistleblower protection (it’s legally required in the UK and US), and the community health team already has a form for collecting anonymous concerns (the SEC also provides whistleblower protection for fraud). Better whistleblower protection probably wouldn’t have uncovered what happened at FTX, but now seems like a good moment to beef up our systems in that area anyway.

9. Character matters more. Here you can think of ‘character’ minimally as someone’s habits of behaviour. By ‘character matters’ I mean a lot of different things, including:

- People will tend to act in the ways they have in the past unless given very strong evidence otherwise (stronger than saying they’ve changed, doing a few things about it and some years going by).

- Character virtues like honesty, integrity, humility, prudence, moderation & respect for cooperative norms are even more important than I thought (in order to constrain potentially dangerous behaviour, and to maintain trust, truth-tracking and reputation).

- If you have small concerns about someone’s character, there are probably worse things you don’t yet know about.

- Concerns with character become more significant when combined with high stakes, especially the chance of large losses, and lack of governance or regulation.

BUT it’s also harder to assess good character than I thought, and also harder to constrain dangerous actors via culture.

So I think the net effect is:

- Put more weight on small concerns about character (e.g. if someone is willing to do a small sketchy thing, they’re probably going to be more sketchy when the stakes are higher). Be especially concerned about clear signs of significant dishonesty / integrity breaches / norm-breaking in someone’s past – probably just don’t work with someone if you find any. Also look out for recklessness, overconfidence, self-importance, and self-delusion as warning signs. If someone sounds reckless in how they talk, it might not just be bluster.

- Be more willing to share small concerns about character with others, even though this increases negative gossip, or could reflect badly on the community.

- Try to avoid plans and structures that rely on people being unusually strong in these character virtues, especially if they involve high stakes.

- Do more to support and encourage people to develop important character virtues, like honesty and humility, to shift the culture in that direction, put off people without the character virtues we value, and uphold those virtues myself (even in the face of short-term costs). E.g. it seems plausible that some groups (e.g. 80k) should talk more about living an ethical life in general, rather than focusing mainly on consequences.

- I should be more concerned about the character of people I affiliate with.

10. It’s even more important to emphasise not doing things that seem clearly wrong from a common sense perspective, even if you think they could have a big positive impact. One difficulty of focusing on consequences is that it removes the bright lines around norms – any norm can be questioned, and a small violation doesn’t seem so bad because it’s small. Unfortunately, in the face of self-delusion and huge stakes, humans probably need relatively simple norms to prevent bad behaviour. Framing these norms seems hard, since they need to be both simple enough to provide a bright line and sophisticated enough to apply to high-stakes, ethically complex & unintuitive situations, so I’d like to see more work to develop them. One that makes sense to me is: “don’t do something that seems clearly wrong from a common sense perspective, even if you think it could have a big positive impact”. I think we could also aim to have brighter lines around honesty/integrity, though I’m not sure how to define them precisely (I find this and this helpful).

11. EA & longtermism are going to have controversial brands and be met with skepticism by default by many people in the media, Twitterati, public intellectuals etc. for some time. (This update isn’t only due to SBF.) This suggests that media-based outreach is going to be less effective going forward. Note that so far it’s unclear whether the general public’s perception of EA has changed (most people have still never heard of EA) – but the views of people in the media shape perception over the longer term. I think there’s a good chance perceptions continue to get worse as e.g. all the FTX TV shows come out, and future media coverage has a negative slant.

I’ve also updated back in favour of EA not being a great public facing brand, since it seems to have held up poorly in the face of its first major scandal.

This suggests that groups who want a ‘sensible’ or broadly appealing brand should disassociate more from EA & longtermism, while accepting that EA & longtermism are going to be weirder and more niche for now. (In addition, EA may want to dissociate from some of its more controversial elements, either as a complementary or alternative strategy.)

12. The value of additional money to EA-supported object level causes has gone up by about 2x since the summer, and the funding bar has also gone up 2x. This means projects that seemed marginal previously shouldn’t get funded. Open Philanthropy estimates ~45% of recent longtermist grants wouldn’t clear their new bar. But it also means that marginal donations are higher-impact. (Why 2x? The amount of capital is down ~3x, but we also need to consider future donations.)

13. Forward-looking long-term cost-effectiveness of EA community building seems lower to me. (Note that I especially mean people-based community building efforts, not independent efforts to spread certain underlying ideas, related academic field building etc.) This is because:

- My estimate of the fraction of dangerous actors attracted has gone up, and my confidence in our ability to spot them has gone down, making the community less valuable.

- Similarly, if the EA community can’t be high-trust by default, and is less generally competent, its potential for impact is lower than I thought.

- Tractability seems lower due to the worse brand, worse appeal and lower morale, and potential for negative feedback loops.

- Past cost-effectiveness seems much lower.

- More speculatively, as of January, I feel more pessimistic about the community’s ability to not tear itself apart in the face of scandal and setbacks.

- I’m also more concerned that the community is simply unpleasant for many people to be part of.

This would mean I think previously-borderline community growth efforts should be cut. And a higher funding bar would increase the extent of those cuts – it seems plausible 50%+ of efforts that were funded in 2022 should not be funded going forward. (Though certain efforts to improve culture could be more effective.) I don’t currently go as far as to think that building a community around the idea of doing good more effectively won’t work.

14. The value of actively affiliating with the current EA community seems lower. This is mainly due to the above: it seems less valuable & effective to grow the community, the brand costs of affiliation are higher, and I think it’s going to be less motivating to be part of. In addition, I’ve updated towards the costs of sharing a brand being bigger than I thought. Recent events have created a ‘pile on’ in which not only have lots of people and organizations been tarred by FTX, but many more issues have been dug up and critics have been signal-boosted. I didn’t anticipate how strong this dynamic would be.

Personally, this makes me more inclined to write about specific causes like AI alignment rather than EA itself, and/or to write about broader ideas (e.g. having a satisfying career in general, rather than EA careers advice). It seems more plausible to me e.g. that 80k should work harder to foster its own identity & community, and focus more on sending people to cause specific communities (though continue to introduce people to EA), and it seems more attractive to do things like have a separate ‘effective charity’ movement.

On the other hand, the next year could be a moment of malleability where it’s possible to greatly improve EA. So, while I expect it makes sense for some groups to step back more, I hope others stay dedicated to making EA the best version of itself it can be.

15. I’m less into the vision of EA as an identity / subculture / community, especially one that occupies all parts of your life, and more into seeing it as a set of ideas & values you engage with as part of an otherwise normal life. Having EA as part of your identity and main social scene makes it harder to criticise people within it, and makes it more likely that you end up trusting or deferring to people just because they’re EAs. It leads to a weird situation where people feel like all actions taken by people in the community speak for them, when there is no decision-making power at the level of ‘the community’ (example). It complicates governance by mixing professional and social relationships. If the community is less valuable to build and affiliate with, the gains of strongly identifying with it are lower. Making EA into a subculture associates the ideas with a particular lifestyle / culture that is unappealing if not actively bad for many people; and at the very least, it ties the ideas to the drama that’s inevitable within any social scene. Making EA your whole life is an example of non-moderate action. It also makes it harder to moderate your views, making it more likely you end up naively optimizing. Overall, I don’t feel confident I should disavow EA as an identity (given that it already is one, it might be better to try to make it work better); but I’ve always been queasy about it, and recent events make me a lot more tempted to disavow it.

In short, this would mean trying to avoid thinking of yourself as “an EA”. More specifically, I see all of the following as (even) worse ideas than before, especially doing more than one at the same time: (i) having a professional and social network that’s mainly people very into EA; (ii) taking on lots of other countercultural positions at the same time; (iii) moving to EA hubs, especially the Bay Area, and especially if you don’t have other connections in those places; (iv) living in a group house; (v) financially depending on EA, e.g. not having skills that can be used elsewhere. It makes me more keen on efforts to have good discourse around the ideas, like The Precipice, and less into “community building”.

16. There’s a huge difference between which ideas are true and which ideas are good for the world to promote, in part because your ideas can be used and interpreted in very different ways from what you intend.

17. I feel unsure whether EA should become more or less centralised, and suspect some of both might make sense. For instance, having point people for various kinds of coordination & leadership seems more valuable than before (diffusion of responsibility has seemed like a problem, as has information flow); but, as covered, sharing the same brand and a uniting identity seems worse than before, so it seems more attractive to decentralise into a looser-knit collection of brands, cause-specific scenes and organisations. The current situation where people feel like it’s a single body that speaks for & represents them, but where there’s no community-wide membership or control, seems pretty bad.

18. I should be less willing to affiliate with people in controversial industries, especially those with little governance or regulation, or that are in a big bull market.

19. I feel unsure how to update about promoting earning to give. I’m inclined to think events don’t imply much about ‘moderate’ earning to give (e.g. being a software engineer and donating), and the relative value of donations has gone up. I’m more skeptical of promoting ‘ambitious’ earning to give (e.g. aiming to become a billionaire), because it’s a more contrarian position, is more likely to attract dangerous maximisers, relies on a single person having a lot of influence, and now has a bad track record – even more so if it involves working in controversial industries.

20. I’m more skeptical of strategies that involve people in influential positions making hard calls at a crucial moment (e.g. in party politics, AI labs), because this relies on these people having good character, though I wouldn’t go as far as avoiding them altogether.

21. I’d updated a bit towards the ‘move fast and break things’ / super high ambition / chaotic philosophy of org management due to FTX (vs. prioritise carefully / be careful about overly rapid growth / follow best practice etc.), but I’ve now undone that update. (Making this update on one data point was probably also a mistake in the first place.)

22. When promoting EA, we should talk more about concrete impactful projects and useful ideas, and less about specific people (especially if they’re billionaires, in controversial industries or might be aggressive maximisers). I’d mostly made this update in the summer for other reasons, but it seems more vindicated now.

Updates I’m basically not making

A lot of the proposals I’ve read post-FTX seem like they wouldn’t have made much difference to whether something like FTX happened, or at least don’t get to the heart of it.

I also think many critiques focus far too much on the ideas as opposed to personality and normal human flaws. This is because the ideas are more novel & interesting, but I think they played second fiddle to those more ordinary factors.

Here I’ve listed some things I mostly haven’t changed my mind about:

- The core ideas of effective altruism still make sense to me. By ‘core ideas’ I mean both the underlying values (e.g. we should strive to help others, to prioritise more, be more impartial, think harder about what’s true) and core positions, like doing more about existential risk, helping the developing world and donating 10%.

First (assuming the allegations are true), the FTX founders were violating the values of the community: both its collaborative spirit and its aim of helping people, since they made decisions that were likely to do great damage rather than help.

Second, I think the crucial question is what led them to allegedly make such bad decisions in the first place, rather than how they (incorrectly) rationalised these decisions. To me, that seems more about personality (the ‘aggressive optimizing’ cluster), lack of governance/regulation, and ordinary human weaknesses.

Third, the actions of individuals don’t tell us much about whether a philosophical idea like treating others more equally makes sense. Nor do they tell us much about whether e.g. GiveWell’s research is correct.

The main update I make is above: that some of the ideas of effective altruism, especially extreme versions of them, attract some dangerous people, and the current community isn’t able to spot and contain them. This makes the community as it exists now seem less valuable to me.

I’m more concerned that, while the ideas might be correct and important, they could be bad to promote in practice since they could help to rationalise bad behaviour. But overall I feel unsure how worried to be about this. Many ideas can be used to rationalise bad behaviour, so never spreading any ideas that could seems untenable. I also intend to keep practicing the ideas in my own life.

- I’d make similar comments about longtermism, though it seems even less important to what happened, because FTX seems like it would have happened even without longtermism: SBF would have supported animal welfare instead. Likewise, there’s a stronger case for risk neutrality with respect to raising funding for GiveWell charities than longtermist ones, because the returns diminish much less sharply.

- I’d also make similar comments about utilitarianism. That said, I think recent events provide stronger reasons to be concerned about building a community around utilitarianism than around effective altruism, because SBF was a utilitarian before being an EA, and it’s a more radical idea.

- EA should still use thought experiments & quantification. I agree with the critique that we should be more careful with taking the results literally and then confidently applying them. But I don’t agree we should think less about thought experiments or give up trying to quantify things – that’s where a lot of the value has been. I think the ‘aggressive optimizing’ personality gets closer to the heart of the problem.

- I don’t think the problem was the community’s common views on risk. That critique seems misplaced in a few ways. First, SBF’s stated views on risk were extreme within the community (I don’t know anyone else who would happily take the St Petersburg gamble), so he wasn’t applying the community’s positions. Second (assuming the allegations are true), since fraud was likely to be caught and have huge negative effects, it seems likely the FTX founders made a decision with negative expected value even by their own lights, rather than a long-shot but positive-EV bet. So the key issue is why they were so wrong, not centrally their attitudes to risk. And third, there’s the alleged fraud, which was a major breach of integrity and honesty no matter the direct consequences. That said, I agree the (overly extreme) views on risk may have helped the FTX founders rationalise a bad decision.

I think the basic position that I’ve tried to advance in my writing on risk is still correct: if you’re a small actor relative to the causes you support, and not doing something that could set back the whole area or do a lot of harm, then you can be closer to risk-neutral than a selfish actor. Likewise, I still think it makes sense for young people who want to do good and have options to “aim high” i.e. try out paths that have a high chance of not going anywhere, but would be really good if they succeed.

- The fact that EAs didn’t spot this risk to themselves doesn’t mean we should ignore their worries about existential risk. I’ve heard this take a lot, but it seems like a weird leap. Recent events show that EAs are not better than e.g. the financial press at judging character and financial competence. This doesn’t tell you much about whether the arguments they make about existential risk are correct or not. (Compare my earlier example of ignoring a pandemic expert’s warnings because they didn’t realise their partner was cheating on them.) These are in totally different categories, and only one is a claimed area of expertise. If anything, the fact that a group who are super concerned about risks didn’t spot a risk should make us more concerned that there are as-yet-unknown existential risks out there.

I think the steelman of this critique involves arguing that bad judgement about e.g. finance is evidence for bad judgement in general, and so people should defer less to the more contrarian EA positions. I don’t personally put a lot of weight on this argument, but I feel unsure and discuss it in the first section. Either way, it’s better to engage with the arguments about existential risk on their merits.

There’s another version of this critique that says that these events show that EAs are not capable of “managing” existential risks on behalf of humanity. I agree – it would be a terrible failure if EAs end up the only people working to reduce existential risk – we need orders of magnitude more people and major world governments working on it. This is why the main thrust of EA effort has, in my eyes, been to raise concern for these risks (e.g. The Precipice, WWOTF), do field building (e.g. in AI), or fund groups outside the community (e.g. in biosecurity).

- I don’t see this as much additional evidence that EA should rely less on billionaires. It would clearly be better for there to be a more diversified group of funders supporting EA (I believed this before). The issue is what to do about it going forward. One billionaire is roughly equivalent to 5,000 Google software engineers earning to give 30% of their income, and that’s more people than the entire community. So while we can and should take steps in this direction, it’s going to be hard to avoid a situation where most donations made according to EA principles are made by the wealthiest people interested in the ideas.

- Similarly, I agree it would be better if we had more public faces of EA, and that we should be doing more to get this (I also thought this before). That said, I don’t think it’s easy to make progress on. I’m aware of several attempts to get more people to become faces of EA, but the people approached ended up not wanting to do it (which, having witnessed recent events, I sympathise with), and even if they had wanted to, it’s unclear they could have been successful enough to move the needle on perceptions of EA.

- I don’t see events as an update on the idea that billionaires have too much influence on cause prioritisation in effective altruism. I don’t think SBF had much influence on cause prioritisation, and the Future Fund mainly supported causes that were already seen as important. I agree SBF was having some influence on the culture of the community (e.g. towards more risk taking), which I attribute to the halo effect around his apparent material success. Billionaires can also of course have disproportionate influence on what it’s possible to get paid to work on, which sucks, but I don’t see a particularly promising route to avoiding that.

- I don’t see events as clear evidence that funding decisions should be more democratised. This seems mainly like a separate issue, and if funding decisions had been more democratised, I don’t think it would have made much difference in preventing what happened. Indeed, the Future Fund was the strongest promoter of more decentralised funding. That said, I’d be happy (for other reasons) to see more experiments with decentralised philanthropy, alternative decision-making and information aggregation mechanisms within EA and in general.

- I don’t see this as evidence that moral corruption by unethical industries is a bigger problem than we thought. I don’t think SBF being ‘corrupted’ by the crypto industry was a big driver – being corrupted by money/power seems closer to the mark to me, and the lack of regulation in crypto was a problem.

- I don’t take this as an update in favour of the rationality community over the EA community. I make mostly similar updates about that community, though with some differences.

- I’m unconvinced that there should have been much more scenario / risk planning. I think it was already obvious that FTX might fall 90% in a crypto bear market (e.g. here) – and if that was all that happened, things would probably be OK. What surprised people was the alleged fraud and that everything was so entangled it would all go to zero at once, and I’m skeptical additional risk surveying exercises would have ended up with a significant credence on these (unless a bunch of other things were different). There were already some risk surveying attempts and they didn’t get there (e.g. in early 2022, Metaculus had a 1% chance of FTX making any default on customer funds over the year, with ~40 forecasters). I also think that even if someone had concluded there was e.g. a 10% chance of this, it would have been hard to do something about it ahead of time that would have made a big difference. This post was impressive for making the connection between a crypto crash and a crash in SBF’s reputation.

This has been the worst setback the community has ever faced. And it would make sense to me if many want to take some kind of step back, especially from the community as it exists today.

But the EA community is still tiny. Now looking to effective altruism’s second decade, there’s time to address its problems, and build something much better. Indeed, now is probably going to be one of the best ever opportunities we’re going to have to do that.

I hope that even if some people step back, others continue to try to make effective altruism the best version of itself it can be – perhaps a version that can entertain radical ideas, yet is more humble, moderate and virtuous in action; that’s more professionalised; and that’s more focused on competent execution of projects that concretely help with pressing problems.

I also continue to believe the core values and ideas make sense, are important and are underappreciated by the world at large. I hope people continue to stand up for the ideas that make sense to them, and that these ideas can find more avenues for expression – and help people to do more good.

Ubuntu @ 2023-03-31T23:44 (+41)

Wow, this back-of-the-envelope has really brought home to me how "EA should diversify its funding sources!" is not as straightforward as it first appears:

1 billionaire ~= 5,000 Google SWE earning to give 30% of their income, and that’s more people than the entire community.

Similarly for "EA should diversify its public faces!":

I’m aware of several attempts to get more people to become faces of EA, but they’ve ended up not wanting to do it (which I sympathise with having witnessed recent events), and even if they wanted to do it, it’s unclear they could be successful enough to move the needle on the perceptions of EA.

I'm surprised people don't talk about this more.

Vasco Grilo @ 2023-04-06T07:46 (+4)

Hi there,

Yes, donations are also quite heavy-tailed within GWWC Pledges. From here:

Less than 1% of our donors account for 50% of our recorded donations. This amounts to dozens of people, while the next 40% of donations (from both pledge donors and non-pledge donors) is distributed among hundreds. This suggests that most of our impact comes from a small-to-medium-size group of large donors (rather than from a very small group of very large donors, or from a large group of small donors).[6]

John G. Halstead @ 2023-04-05T08:18 (+29)

This is excellent thank you.

"Due to this, I should act on the assumption EAs aren’t more trustworthy than average. Previously I acted as if they were. I now think the typical EA probably is more trustworthy than average – EA attracts some very kind and high integrity people – but it also attracts plenty of people with normal amounts of pride, self-delusion and self-interest, and there’s a significant minority who seem more likely to defect or be deceptive than average. The existence of this minority, and because it’s hard to tell who is who, means you need to assume that someone might be untrustworthy by default (even if “they’re an EA”). This doesn’t mean distrusting everyone by default – I still think it’s best to default to being cooperative – but it’s vital to have checks for and ways to exclude dangerous actors, especially in influential positions (i.e. trust, but verify)."

To build on and perhaps strengthen what you are saying, I think a central problem of utilitarian communities is that they will disproportionately be made up of people on the spectrum, psychopaths and naive utilitarians.

I think there are reasons to think that in communities with lots of utilitarians/consequentialists, bad actors will not just be at the average societal level, but that they will be overrepresented. A lot of psychology research suggests that psychopaths disproportionately have utilitarian intuitions. By my count, at least two of the first ~100 EAs were psychopaths (SBF and one other that I know of, without looking very hard). (Of course not all utilitarians are psychopaths).

In addition to this, utilitarian communities will often obviously attract a disproportionate number of naive utilitarians who will be tempted to do bad actions.

This is all compounded by the fact that utilitarian communities will also be disproportionately composed of people on the autism spectrum. This is obvious to anyone acquainted with EA, and my offhand explanation would be something about the attraction to data and quantification. The people on the spectrum get exploited by, or do not stand up to, the psychopaths and naive utilitarians because they are not very good at interpersonal judgements and soft skills, and lack a good theory of mind.

With respect to judgment of character, I think we should maybe expect EAs to be below average because of the prevalence of autism.

All of this suggests that, as you recommend, in communities with lots of consequentialists, there needs to be very large emphasis on virtues and common sense norms. If people show signs of not being high integrity, they should be strongly disfavoured by default. I don't think this happens at the moment, and SBF is not the only example.

Denkenberger @ 2023-04-22T02:23 (+10)

It could be true that bad actors are overrepresented in EA, and so it makes sense to have checks and balances for people in power. Perhaps honesty does not capture everything people mean by "trustworthy", but I think the norms of honesty in EA are much stronger than in mainstream society. Behaviors such as admitting mistakes, being willing to update, admitting that someone else might be better for the job, recognizing uncertainty, admitting weaknesses in arguments, etc. are much less common in mainstream society. I think EA selects for more honest people and also pushes people in the movement to be more honest.

akash @ 2023-04-05T16:14 (+8)

I downvoted and want to explain my reasoning briefly: the conclusions presented are too strong, and the justifications don't necessarily support them.

We simply don't have enough experience or data points to say what the "central problem" in a utilitarian community will be. The one study cited seems suggestive at best. People on the spectrum are, well, on a spectrum, and so is their behavior; how they react will not be as monolithic as suggested.

All that being said, I softly agree with the conclusion (because I think this would be true for any community).

All of this suggests that, as you recommend, in communities with lots of consequentialists, there needs to be very large emphasis on virtues and common sense norms.

Simon Stewart @ 2023-04-07T18:52 (+10)

I'm not sure I agree with the conclusion, because people with dark triad personalities may be better than average at virtue signalling and demonstrating adherence to norms.

I think there should probably be a focus on principles, standards and rules that can be easily recalled by a person in a chaotic situation (e.g. put on your mask before helping others). And that these should be designed with limiting downside risk and risk of ruin in mind.

My intuition is that the rule "disfavour people who show signs of being low integrity" is a bad one, as:

- it relies on the ability to compare a person to an idealised person rather than behaviour to a rule, and the former is much more difficult to reason about

- it's moving the problem elsewhere not solving it

- it's likely to reduce diversity and upside potential of the community

- it doesn't mitigate the risk when a bad actor passes the filter

I'd favour starting from the premise that everyone has the potential to act without integrity, and trying to design systems that mitigate this risk.

Richard_Leyba_Tejada @ 2024-02-14T03:14 (+1)

What systems could be designed to mitigate the risk of people taking advantage of others? What about spreading knowledge of how we are influenced? With this knowledge, we can recognize these behaviors and turn off our autopilots so we can defend ourselves from bad actors. Or would that knowledge becoming widespread lead some people to use it to do more damage?

akash @ 2023-03-31T19:35 (+28)

...for example, I think we should be less welcoming to proudly self-identified utilitarians, since they’re more likely to have these traits.

Ouch. Could you elaborate more on this and back this up more? The statement makes it sound like an obvious fact, and I don't see why this would be true.

Michelle_Hutchinson @ 2023-04-02T20:10 (+41)

+1

It seems pretty wrong to me that the thing causing SBF's bad behaviour was thinking what matters in the world is the long-run wellbeing of sentient beings. My guess is that we should be focusing more on his traits like ambition and callousness towards those around him.

But it seems plausible I'm just being defensive, as a proudly self-identified utilitarian who would like to be welcome in the community.

Benjamin_Todd @ 2023-04-02T23:06 (+4)

I basically agree and try to emphasize personality much more than ideology in the post.

That said, it doesn't seem like a big leap to think that confidence in an ideology that says you need to maximise a single value to the exclusion of all else could lead to dangerously optimizing behaviour...

Having more concern for the wellbeing of others is not the problematic part. But utilitarianism is more than that.

Moreover it could still be true that confidence in utilitarianism is in practice correlated with these dangerous traits.

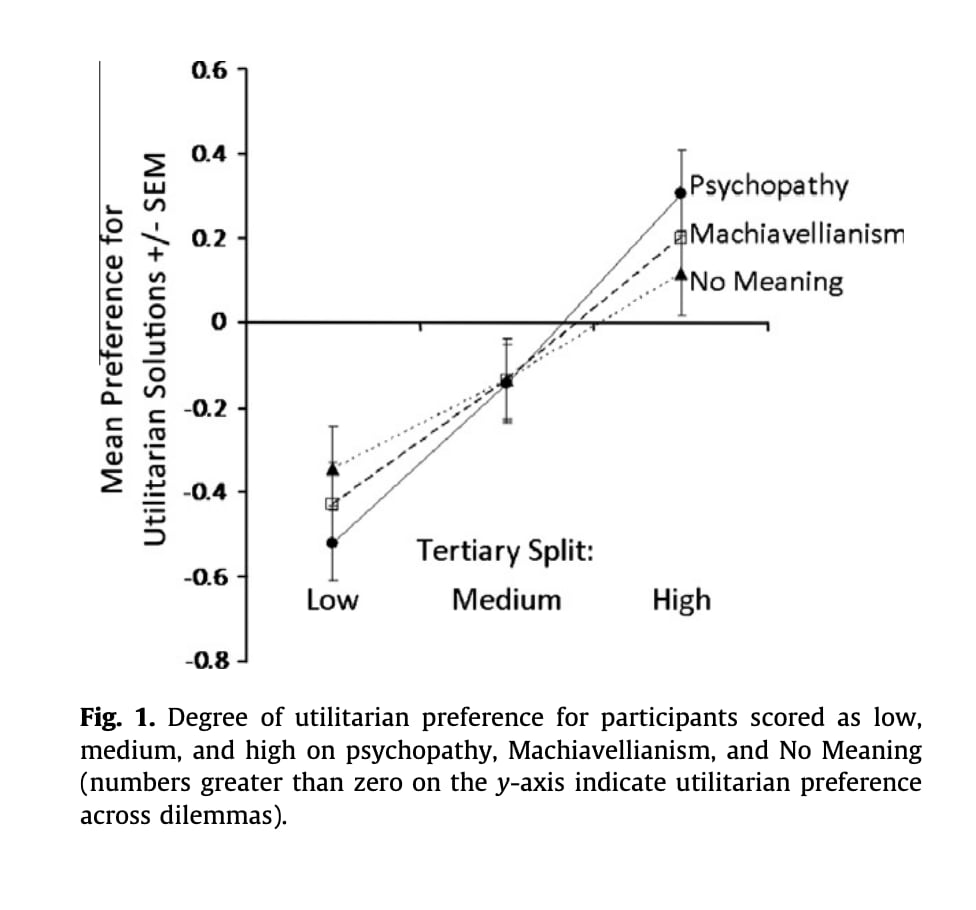

I expect it's the negative component in the two-factor model that's the problem, rather than the positive component you highlight. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5900580/

Cullen @ 2023-04-02T23:49 (+15)

it doesn't seem like a big leap to think that confidence in an ideology that says you need to maximise a single value to the exclusion of all else could lead to dangerously optimizing behaviour.

I don't find this a persuasive reason to think that utilitarianism is more likely to lead to this sort of behavior than pretty much any other ideology. I think a huge number of (maybe all?) ideologies imply that maximizing the good as defined by that ideology is the best thing to do, and that considerations outside of that ideology have very little weight. You see this behavior with many theists, Marxists, social justice advocates, etc. etc.

My general view is that there are a lot of people like SBF who have a lot of power-seeking and related traits—including callous disregard for law, social norms, and moral uncertainty—and that some of them use moral language to justify their actions. But I don't think utilitarianism is especially vulnerable to this, nor do I think it would be a good counterargument if it was. If utilitarianism is true, or directionally true, it seems good to have people identify as such, but we should definitely judge harshly those that take an extremely cavalier attitude towards morality on the basis of their conviction in one moral philosophy. Moral uncertainty and all that.

Benjamin_Todd @ 2023-04-03T00:08 (+9)

I'd agree a high degree of confidence + strong willingness to act combined with many other ideologies leads to bad stuff.

Though I still think some ideologies encourage maximisation more than others.

Utilitarianism is much more explicit in its maximisation than most ideologies, plus it (at least superficially) actively undermines the normal safeguards against dangerous maximisation (virtues, the law, and moral rules) by pointing out these can be overridden for the greater good.

Like yes there are extreme environmentalists and that's bad, but normally when someone takes on an ideology like environmentalism, they don't also explicitly & automatically say that the environment is all that matters and that it's in principle permissible to cheat & lie in order to benefit the environment.

nor do I think it would be a good counterargument if it was.

Definitely not saying it has any bearing on the truth of utilitarianism (in general I don't think recent events have much bearing on the truth of anything). My original point was about who EA should try to attract, as a practical matter.

Cullen @ 2023-05-16T17:29 (+7)

Utilitarianism is much more explicit in its maximisation than most ideologies, plus it (at least superficially) actively undermines the normal safeguards against dangerous maximisation (virtues, the law, and moral rules) by pointing out these can be overridden for the greater good.

Like yes there are extreme environmentalists and that's bad, but normally when someone takes on an ideology like environmentalism, they don't also explicitly & automatically say that the environment is all that matters and that it's in principle permissible to cheat & lie in order to benefit the environment.

I think it's true that utilitarianism is more maximizing than the median ideology. But I think a lot of other ideologies are minimizing in a way that creates equal pathologies in practice. E.g., deontological philosophies are often about minimizing rights violations, which can be used to justify pretty extreme (and bad) measures.

MaxRa @ 2023-04-03T08:48 (+4)

Like yes there are extreme environmentalists and that's bad, but normally when someone takes on an ideology like environmentalism, they don't also explicitly & automatically say that the environment is all that matters and that it's in principle permissible to cheat & lie in order to benefit the environment.

I moderately confidently expect there to be a higher proportion of extreme environmentalists than extreme utilitarians. I think utilitarians will be generally more intelligent / more interested in discussion / more desiring to be "correct" and "rational", and that the correct and predominant reply to things like the "Utilitarianism implies killing healthy patients!" critique is "Yeah, that's naive Utilitarianism, I'm a Sophisticated Utilitarian who realizes the value of norms, laws, virtues and intuitions for cooperation".

John G. Halstead @ 2023-04-05T08:34 (+8)

I disagree with this. I think utilitarian communities are especially vulnerable to bad actors. As I discuss in my other comment, psychopaths disproportionately have utilitarian intuitions, so we should expect communities with a lot of utilitarians to have a disproportionate number of psychopaths relative to the rest of the population.

Cullen @ 2023-04-05T19:43 (+6)

Thanks, this is a meaningful update for me.

Arepo @ 2023-04-27T17:02 (+2)

psychopaths disproportionately have utilitarian intuitions, so we should expect communities with a lot of utilitarians to have a disproportionate number of psychopaths relative to the rest of the population.

From psychopaths disproportionately having utilitarian intuitions, it doesn't follow that utilitarians disproportionately have psychopathic tendencies. We might slightly increase our credence that they do, but probably not enough to meaningfully outweigh the experience of hanging out with utilitarians and learning first hand of their typical personality traits.

John G. Halstead @ 2023-04-28T14:45 (+2)

I think it does follow, other things being equal. If the prevalence of psychopaths in the wider population is 2%, but psychopaths are twice as likely to be utilitarians, then other things equal, we should expect 4% of utilitarian communities to be psychopaths. Unless you think psychopathy is correlated with other things that make one less likely to actually get involved in active utilitarian communities, that must be true.
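Under the comment's stated assumptions, the step from "psychopaths are twice as likely to be utilitarians" to "4% of utilitarian communities" is an application of Bayes' rule. A worked version follows. The symbols are ours: \(\Psi\) for being a psychopath and \(U\) for being a utilitarian who is active in such a community, which builds in the comment's "other things equal" caveat. Reading "twice as likely" as relative to the population average is also our assumption, so the alternative reading (relative to non-psychopaths) is shown too.

```latex
\begin{aligned}
&P(\Psi) = 0.02, \qquad P(U \mid \Psi) = 2\,P(U) \\
&P(\Psi \mid U) = \frac{P(U \mid \Psi)\,P(\Psi)}{P(U)} = \frac{2\,P(U) \times 0.02}{P(U)} = 0.04 \\
&\text{If instead } P(U \mid \Psi) = 2\,P(U \mid \neg\Psi): \quad
P(\Psi \mid U) = \frac{2 \times 0.02}{2 \times 0.02 + 0.98} = \frac{0.04}{1.02} \approx 0.039
\end{aligned}
```

Either reading gives roughly 4%, so the disagreement that follows is about whether the inputs and the independence assumption hold, not about the arithmetic.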

Arepo @ 2023-05-03T16:50 (+2)

There's any number of possible reasons why psychopaths might not want to get involved with utilitarian communities. For example, their IQ tends to be slightly lower than average, whereas utilitarians tend to have higher than average IQs, so they might not fit in intellectually whether their intentions were benign or malign. Relatedly, you would expect communities with higher IQs to be better at policing themselves against malign actors.

I think there would be countless confounding factors like this that would dominate a single survey based on a couple of hundred (presumably WEIRD) students.

John G. Halstead @ 2023-05-03T18:30 (+6)

In my other comment, I didn't just link to a single study, I linked to a google scholar search with lots of articles about the connection between psychopathy and utilitarianism. The effect size found in the single study I did link to is also pretty large:

The study you link to finds a very small average difference in IQ: a Cohen's d of -0.12. And violent psychopaths have slightly higher IQ than average.

For the reasons I gave elsewhere in my comments, I would expect EAs to be worse at policing bad actors than average because EAs are so disproportionately on the autism spectrum.

Yes, these would be WEIRD people, but this is moot since EA is made up of WEIRD people as well.

Arepo @ 2023-05-08T10:13 (+2)

Fair enough. I am still sceptical that this would translate into a commensurate increase in psychopaths in utilitarian communities*, but this seems enough to give us reason for concern.

*Also violent psychopaths don't seem to be our problem, so their greater intelligence would mean the average IQ of the kind of emotional manipulator we're concerned about would be slightly lower.

Benjamin_Todd @ 2023-03-31T20:07 (+17)

It's a big topic, but the justification is supposed to be the part just before. I think we should be more worried about attracting naive optimizers, and I think people who are gung-ho utilitarians are one example of a group who are more likely to have this trait.

I think it's notable that SBF was a gung-ho utilitarian before he got into EA.

It's maybe worth clarifying that I'm most concerned about people who have a combination of high confidence in utilitarianism and a lack of qualms about putting it into practice.

There are lots of people who see utilitarianism as their best guess moral theory but aren't naive optimizers in the sense I'm trying to point to here.

See more in Toby's talk.

akash @ 2023-03-31T20:43 (+10)

It's maybe worth clarifying that I'm most concerned about people who have a combination of high confidence in utilitarianism and a lack of qualms about putting it into practice.

Thank you, that makes more sense + I largely agree.

However, I also wonder if all this could be better gauged by watching out for key psychological traits/features instead of probing someone's ethical view. For instance, a person low in openness showing high-risk behavior who happens to be a deontologist could cause as much trouble as a naive utilitarian optimizer. In either case, it would be the high-risk behavior that would potentially cause problems rather than how they ethically make decisions.

Benjamin_Todd @ 2023-03-31T20:49 (+4)

I was trying to do that :) That's why I opened with naive optimizing as the problem. The point about gung-ho utilitarians was supposed to be an example of a potential implication.

Richard Y Chappell @ 2023-04-01T00:06 (+24)

Yeah, I think "proudly self-identified utilitarians" is not the same as "naively optimizing utilitarians", so would encourage you to still be welcoming to those in the former group who are not in the latter :-)

ETA: I did appreciate your emphasizing that "it’s quite possible to have radical inside views while being cautious in your actions."

Benjamin_Todd @ 2023-04-01T09:40 (+4)

I had you in mind as a good utilitarian when writing :)

Good point that just saying 'naively optimizing' utilitarians is probably clearest most of the time. I was looking for other words that would denote high-confidence and willingness to act without qualms.

John G. Halstead @ 2023-04-05T08:42 (+4)

Minor nitpick: this doesn't seem to capture naive utilitarianism as I understand it. I always thought naive utilitarianism was about going against common sense norms on the basis of your own personal fragile calculations. E.g. lying is prone to being rumbled and one's reputation is very fragile, so it makes sense to follow the norm of not lying even if your own calculations seem to suggest that lying is good, because the calculations will tend to miss longer-term, indirect and subtle effects. But this is neither about (1) high confidence nor (2) acting without qualms. Indeed, one might decide not to lie with high confidence and without qualms. Equally, one might choose to 'lie for the greater good' with low confidence and with lots of qualms. This would still be naive utilitarian behaviour.

Benjamin_Todd @ 2023-04-05T11:49 (+2)

That's useful - my 'naive optimizing' thing isn't supposed to be the same thing as naive utilitarianism, but I do find it hard to pin down the exact trait that's the issue here, and those are interesting points about confidence maybe not being the key thing.

Benjamin_Todd @ 2023-03-31T20:10 (+27)

Appendix: some other comments that didn't make it into the main post

I made some mistakes in tracking EA money

These aren’t updates but rather things I should have been able to figure out ex ante:

- Mark down private valuations of startups by a factor of ~2 (especially if the founders hold most of the equity)

- Measure growth from peak-to-peak or trough-to-trough rather than trough-to-peak – I knew crypto was in a huge bull market

- I maybe should have expected more correlation between Alameda and FTX, and a higher chance of going to zero, based on the outside view

- If your assets are highly volatile and the community is risk-averse, that’s a good reason to discount the value of those assets and/or delay spending until you have more confidence in how much money you have.

Taken together, I think we should have planned on the basis of the portfolio being 2-3x smaller than my estimates, and expected less future growth. I think some of these effects were taken into account in planning (it was obvious that crypto could fall a lot), perhaps correctly in some cases, but not enough in others.

Moreover, even with these adjustments, I think spending would have still been significantly below target, so there would have still been efforts to grow spending significantly, but maybe slower in some areas, especially the FF.
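To illustrate the second point in the list above (measuring growth peak-to-peak rather than trough-to-peak), here is a minimal Python sketch using an invented price series, not real crypto or FTX data. The function names `growth_multiple` and `compare_cycle` are hypothetical. It assumes the series has an earlier peak, then a trough, then a later peak.

```python
def growth_multiple(start: float, end: float) -> float:
    """Growth multiple from `start` to `end` (e.g. 2.0 means the value doubled)."""
    return end / start


def compare_cycle(prices: list[float]) -> tuple[float, float]:
    """Return (trough_to_peak, peak_to_peak) for a series with a peak,
    then a trough, then a later (highest) peak.

    trough_to_peak: lowest point before the final peak -> the final peak.
    peak_to_peak: highest point up to that trough -> the final peak.
    """
    final_peak_idx = prices.index(max(prices))
    before_final = prices[:final_peak_idx]
    trough_idx = before_final.index(min(before_final))
    earlier_peak = max(prices[: trough_idx + 1])
    final_peak = prices[final_peak_idx]
    return (
        growth_multiple(prices[trough_idx], final_peak),
        growth_multiple(earlier_peak, final_peak),
    )


# Hypothetical asset prices over one boom-bust-boom cycle (invented numbers).
prices = [60.0, 100.0, 50.0, 25.0, 80.0, 200.0]
trough_to_peak, peak_to_peak = compare_cycle(prices)
print(f"trough-to-peak: {trough_to_peak:.1f}x")  # 8.0x
print(f"peak-to-peak:   {peak_to_peak:.1f}x")    # 2.0x
```

With these made-up numbers, trough-to-peak growth is 8x while peak-to-peak growth is 2x, because the trough-to-peak figure also counts the recovery from the crash.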

Another mistake

I think I made a mistake to publicly affiliate 80,000 Hours with SBF as much as we did – just based on good PR sense and messaging strategy – and not to investigate it more. (80,000 Hours has written about a similar issue on their mistakes page.)

Epistemic virtues

I was unsure whether to say anything extra about epistemic virtues, like honesty, integrity and truthseeking. I agree that having more of these virtues would have led us to put a higher probability on what was happening (e.g. via more people sharing concerns even if that might make the community look bad), though it doesn’t feel like a big update about the value of these traits, since we already knew they’re valuable. Someone would also have needed to end up with a pretty high probability to do much about it. I agree (as covered above) that these events are an update against the idea EA has unusually good epistemics, and that stronger epistemic virtues seem more important in order to constrain dangerous actors. Finally, I think epistemic virtues have already had a lot of emphasis, relatively speaking, so it seems hard to get large gains here.

Degree of criticism in the culture

I feel worried that the updates listed are going to lead towards a more critical, non-trusting culture in which people’s character and minor actions are publicly scrutinised, and there’s more gossip and less friendship. Personally I already find EA culture often pretty negative and draining, so feel worried about moving more in that direction. One option is to accept it’s what’s needed to prevent dangerous actors, and that EA is going to be a less fun place to be, and therefore have less potential. However, I suspect and hope it’s also possible to find ways to make improvements in the directions I gesture at that don’t make the culture more negative.

Membership growth

I expect EA membership growth to take a hit. 2022 showed strong growth (20-60%), and I already expected 2023 to be lower (unless we maintain accelerating effort into growth). But FTX will reduce that because (i) a lot of the 2022 growth seems to be driven by personal connections, and that could be pretty sensitive to positive buzz (ii) lower funding & investment (iii) decent chance that a wave of people leaves (and this could start a negative feedback loop). I don’t think increased (negative) media coverage will lead to much growth, since even positive coverage hasn’t caused much growth in the past. I wouldn’t be shocked if the community were overall smaller in a couple of years. On the positive side, many measures of community growth seem unaffected so far, so it seems likely the longer term trend of 10-30% growth continues after the next 1-2 years.

Pagw @ 2023-04-03T21:17 (+45)

I think I made a mistake to publicly affiliate 80,000 Hours with SBF as much as we did

But 80k didn't just "affiliate" with SBF - they promoted him with untrue information. And I don't see this addressed in the above or anywhere else. In particular, his podcast interview made a thing of him driving a Corolla and sleeping on a beanbag as part of promoting the frugal Messiah image, when it seems likely that at least some people high up in EA knew that this characterisation was false. Plus there was no mention of the stories of how he treated his Alameda co-founders. Perhaps 80k and you were completely ignorant of this when the podcast was made, but did nobody tell you that this needed to be corrected? Either way, it doesn't seem good for EA functioning.

My sense is that this idea that some people high up in EA lied or failed to correct the record has cast a shadow over everyone in such positions, since, as far as I know, nobody has given a credible account of who knew and lied about or withheld that information. It contributes to a reduction in trust. It's not like it's unforgivable - I think it is understandable that good people might have felt like going along with some seemingly small lies for what they saw as a greater good, and I think everyone in such positions generally has very impressive and admirable achievements. But it's hard to keep credibility with general suspicion in the air. If you really had no knowledge then I feel sorry that this seems likely to also affect you.

Robert_Wiblin @ 2023-04-04T15:02 (+16)

Hi Pagw — in case you haven't seen it here's my November 2022 reply to Oli H re Sam Bankman-Fried's lifestyle:

"I was very saddened to hear that you thought the most likely explanation for the discussion of frugality in my interview with Sam was that I was deliberately seeking to mislead the audience.

I had no intention to mislead people into thinking Sam was more frugal than he was. I simply believed the reporting I had read about him and he didn’t contradict me.

It’s only in recent weeks that I learned that some folks such as you thought the impression about his lifestyle was misleading, notwithstanding Sam's reference to 'nice apartments' in the interview:

"I don’t know, I kind of like nice apartments. ... I’m not really that much of a consumer exactly. It’s never been what’s important to me. And so I think overall a nice place is just about as far as it gets."

Unfortunately as far as I can remember nobody else reached out to me after the podcast to correct the record either.

In recent years, in pursuit of better work-life balance, I’ve been spending less time socialising with people involved in the EA community, and when I do, I discuss work with them much less than in the past. I also last visited the SF Bay Area way back in 2019 and am certainly not part of the 'crypto' social scene. That may help to explain why this issue never came up in casual conversation.

Inasmuch as the interview gave listeners a false impression about Sam I am sorry about that, because we of course aim for the podcast to be as informative and accurate as possible."

Pagw @ 2023-04-04T22:48 (+4)

Thanks Robert. No I hadn't seen that, thanks for sharing it (the total amount of stuff on FTX exceeded my bandwidth and there is much I missed!).

Given that, the thought behind my earlier comment that remains is that it would seem more appropriately complete to acknowledge in Ben's reflections that 80k made a mistake in information it put out, not just in "affiliating" with SBF. And also that if a body as prominent as 80k had not heard concerns about SBF that were circulating, it seems to suggest there are important things to improve about communication of information within EA and I'd have thought they'd warrant a mention in there. Though I appreciate that individuals may not want to be super tuned in to everything.

John G. Halstead @ 2023-04-05T08:28 (+48)

I agree with Peter's comments here. Some of 80k's own staff were part of the early Alameda cohort who left and thought SBF was a bad actor. In an honest accounting of mistakes made, it seems strange not to acknowledge that 80k (and others) missed an important red flag in 2018 and didn't put any emphasis on it when talking to or promoting SBF.

AnonymousEAForumAccount @ 2023-04-06T14:02 (+18)

I’ve also assumed that 80k leadership was aware of red flags around SBF since 2018, due to A) 80k staff being part of the early Alameda staff that left, B) assuming 80k leadership was part of the EA Leader Slack channel where concerns were raised, C) Will (co-founder and board member) and Nick (board member) being aware of concerns (per Time’s reporting). It would be great if someone from 80k could confirm which of these channels (if any) 80k leadership heard concerns through. Assuming they were indeed aware of concerns, I agree with John and Pagw that it seems odd not to mention hearing those red flags in the OP.

To be explicit, even if 80k leadership was aware of red flags around SBF since 2018, I don’t think they should have anticipated the scale of his fraud. And they might have made correct decisions along the way given what they knew at the time. But those red flags (if 80k was indeed aware of them) seem like they should play a part in any retrospective accounting of lessons learned from the whole affair.

Benjamin_Todd @ 2023-04-14T04:04 (+2)

The 80k team are still discussing it internally and hope to say more at a later date.

Speaking personally, Holden's comments (e.g. in Vox) resonated with me. I wish I'd done more to investigate what happened at Alameda.

Pagw @ 2023-04-04T06:47 (+13)

People downvoting - it would be useful to know why.

Ubuntu @ 2023-04-01T00:51 (+5)

many measures of community growth seem unaffected so far

Interesting! Can you share details? (Or point to where others have?)

Benjamin_Todd @ 2023-04-01T09:32 (+14)

Some of the ones I've seen:

80k's metrics seem unaffected so far, and 80k is one of the biggest drivers of community growth.

I've also heard that EAG(x) applications didn't seem affected.

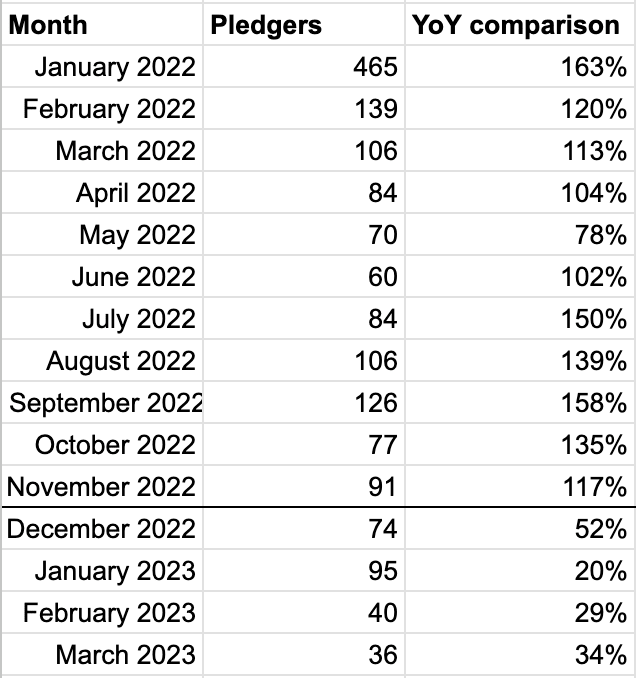

GWWC pledgers were down, though a lot of that is due to them not doing a pledge drive in Dec. My guess is that if they do a pledge drive next Dec similar to previous ones, the results will be similar. The baseline of monthly pledges seems ~similar.

henrith @ 2023-04-02T14:15 (+17)

I would be surprised if the effect from the lack of a pledge drive ran on into February and March 2023, though. The year-over-year comparison here is against the same month 12 months earlier (Jan 2023 vs. Jan 2022, etc.).

Benjamin_Todd @ 2023-04-02T16:38 (+5)

Hmm that does seem worse than I expected.

I wonder if it's because GWWC has cut back outreach or is getting less promotion from other groups (whereas 80k continued its marketing as before, plus a lot of 80k's reach is passive), or whether it points to outreach actually being harder now.

AnonymousEAForumAccount @ 2023-10-17T19:29 (+4)

FYI I’ve just released a post which offers significantly more empirical data on how FTX has impacted EA. FTX’s collapse seems to mark a clear and sizable deterioration across a variety of different EA metrics.

I included your comment about 80k's metrics being largely unaffected, but if there's some updated data on if/how 80k's metrics have changed post-FTX that would be very interesting to see.

mikbp @ 2023-04-08T17:51 (+3)

Measure growth from peak-to-peak or trough-to-trough rather than trough-to-peak – I knew crypto was in a huge bull market

What does this mean?

Benjamin_Todd @ 2023-04-09T09:00 (+3)

If you're tracking the annual change in wealth between two points in time, you should try to make sure the start and end points are either both market peaks or both market lows.

E.g. from 2017 to 2021, or from 2019 to Nov 2022, would be valid periods for tracking crypto.

If you instead track from e.g. 2019 to 2021, then you're probably going to overestimate.

Another option would be to average over periods significantly longer than a typical market cycle (e.g. 10yr).
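A minimal sketch of why the choice of endpoints matters, assuming growth is summarised as a compound annual growth rate (CAGR). The index levels below are hypothetical, picked only to mimic one boom-bust cycle; they are not real crypto prices.

```python
def annualized_growth(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two observations."""
    return (end_value / start_value) ** (1 / years) - 1


# Hypothetical index levels (illustrative only, not real market data):
# a peak in year 0, a trough in year 2, and a new peak in year 4.
hypothetical_levels = {0: 100.0, 2: 20.0, 4: 200.0}

# Peak-to-peak: both endpoints sit at the same phase of the cycle.
peak_to_peak = annualized_growth(hypothetical_levels[0], hypothetical_levels[4], 4)

# Trough-to-peak: the window starts in a slump and ends in a boom,
# so it folds the recovery into the apparent "trend" growth.
trough_to_peak = annualized_growth(hypothetical_levels[2], hypothetical_levels[4], 2)

print(f"peak-to-peak:   {peak_to_peak:.1%} per year")    # 18.9% per year
print(f"trough-to-peak: {trough_to_peak:.1%} per year")  # 216.2% per year
```

With these made-up numbers the trough-to-peak window reports over ten times the annual growth of the peak-to-peak window, which is the kind of overestimate described above.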

pseudonym @ 2023-03-31T22:01 (+3)

I think I made a mistake to publicly affiliate 80,000 Hours with SBF as much as we did – just based on good PR sense and messaging strategy – and not to i.

Is this an incomplete sentence?

Benjamin_Todd @ 2023-03-31T22:29 (+2)

Thanks fixed!

Ubuntu @ 2023-04-01T00:48 (+23)

I’d updated a bit towards the move fast and break things / super high ambition / chaotic philosophy of org management due to FTX (vs. prioritise carefully / be careful about overly rapid growth / follow best practice etc.), but I undo that update. (Making this update on one data point was probably also a mistake in the first place.)

...But undoing the update based on one data point is not a mistake?

I'm not being pedantic. I don't think anyone's had the bravery yet to publicly point out that the SBF story - both pre-scandal and post-scandal - is just one data point.

A massive ******* data point, to be sure, so let's think very seriously about the lessons to be learnt - I don't mean to trivialise the gravity of the harm that's occurred.

I'm saying that I think the updates we're all making right now should be much closer in magnitude to finding out that SBF had almost succeeded in committing an $8bn fraud, but was stopped by some highly contingent obstacle, than to finding out that 8 self-proclaimed EAs had each committed a $1bn fraud.

I feel like the typical community member is over-updating on the FTX scandal. I don't really get that sense with you and I love that you've included an "Updates I’m basically not making" section.

(Noting that I think there's a good chance my reasoning here is confused given that I can't recall this point having been made anywhere yet. Putting it out there just in case.)

LukeDing @ 2023-04-01T10:36 (+26)

I don’t think the update is just one data point. Some of the issues with FTX (overreliance on alignment over domain expertise, groupthink, poor governance, and overemphasising speed over robust execution, at times straying into recklessness) also exist within parts of EA. FTX brings them into very sharp focus, but it is not the only example.

Benjamin_Todd @ 2023-04-01T09:38 (+8)

Thank you!

Yes, I think if you make update A due to a single data point, and then realise you shouldn't have updated on a single data point, you should undo update A, since your original reasoning was wrong.

That aside, in the general case I think it can sometimes be justified to update a lot on a single data point. E.g. if you think an event was very unlikely, and then that event happens, your new probability estimate for the event will normally go up a lot.

In other cases, if you already have lots of relevant points, then adding a single extra one won't have much impact.
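A minimal sketch of both cases, assuming the event rate is modelled with a Beta-Binomial model and a uniform Beta(1, 1) prior (Laplace's rule of succession). The trial counts are hypothetical and not meant to model FTX or EA; they only show that the same single observation moves the estimate a lot when prior evidence is thin and barely at all when it is plentiful.

```python
from fractions import Fraction


def posterior_mean(events: int, trials: int, alpha: int = 1, beta: int = 1) -> Fraction:
    """Posterior mean of an event rate under a Beta(alpha, beta) prior
    after observing `events` occurrences in `trials` trials."""
    return Fraction(alpha + events, alpha + beta + trials)


# Hypothetical scenario A: little relevant data (0 events in 100 trials).
sparse_before = posterior_mean(0, 100)   # 1/102, about 1.0%
sparse_after = posterior_mean(1, 101)    # 2/103, about 1.9%

# Hypothetical scenario B: lots of relevant data (100 events in 10,000 trials).
dense_before = posterior_mean(100, 10_000)  # 101/10002, about 1.0%
dense_after = posterior_mean(101, 10_001)   # 102/10003, about 1.0%

# Ratio of the new estimate to the old one after one extra observed event.
print(f"sparse: {float(sparse_before):.4f} -> {float(sparse_after):.4f} "
      f"(x{float(sparse_after / sparse_before):.2f})")
print(f"dense:  {float(dense_before):.4f} -> {float(dense_after):.4f} "
      f"(x{float(dense_after / dense_before):.2f})")
```

Both scenarios start from an estimate of roughly 1%, but one additional event nearly doubles it in the sparse case and shifts it by about 1% (relative) in the dense case.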

One extra point is that I think people have focused too much on SBF. The other founders also said they supported EA. So if we're just counting up people, it's more than one.

Ben_West @ 2023-03-31T22:37 (+23)

Thanks for writing this!

I’ve also updated back in favour of EA not being a great public facing brand, since it seems to have held up poorly in the face of its first major scandal.

Are there examples of brands which you think have held up better to their own scandals? You say "it’s unclear EA’s perception among the general public has changed (most of them have still never heard of EA)" which seems to imply that EA held up quite well, under the definition I would most intuitively use (perception among the general public).

Benjamin_Todd @ 2023-03-31T23:10 (+9)

First to clarify, yes most of the general public haven't heard of EA, and many haven't made the connection with FTX.

I think EA's brand has mainly been damaged among what you could call the chattering classes. But I think that is a significant cost.

It's also damaged in the sense that if you search for it online you find a lot more negative stuff now.

On the question about comparisons, unfortunately I don't have a comprehensive answer.

Part of my thinking is that early on I thought EA wasn't a good public facing brand. Then things went better than I expected for a while. But then after EA actually got serious negative attention, it seemed like the old worries were correct after all.

My impression is that many (almost all?) brands face large scandals eventually, but the scandal doesn't always stick in the way it was sticking with EA.

I'm also sympathetic to Holden's comments.

Ozzie Gooen @ 2023-04-03T00:38 (+4)

It's fairly different, but I've been moderately impressed with political PR groups. It seems like there are some firm playbooks of how to respond to crises, and some political agencies are well practiced here.

I think, in comparison, there was very little activity by EA groups during the FTX issue. (I assume one challenge was just the legal hurdles of having the main potential groups be part of EV).

I get the impression that companies don't seem to try to respond to crises that much (at least, quickly), but they definitely spend a lot of effort marketing themselves and building key relationships otherwise.

Simon Stewart @ 2023-04-05T21:02 (+16)

Some possible worlds:

| | SBF was aligned with EA | SBF wasn't aligned with EA |
|---|---|---|
| SBF knew this | EA community standards permit harmful behaviour. | SBF violated EA community standards. |
| SBF didn't know this | EA community standards are unclear. | EA community standards are unclear. |

Some possible stances in these worlds:

| Possible world | Possible stances | | |
|---|---|---|---|
| EA community standards permit harmful behaviour | 1a. This is intolerable. Adopt a maxim like "first, do no harm" | 1b. This is tolerable. Adopt a maxim like "to make an omelette you'll have to break a few eggs" | 1c. This is desirable. Adopt a maxim like "blood for the blood god, skulls for the skull throne" |
| SBF violated EA community standards | 2a. This is intolerable. Standards adherence should be prioritised above income-generation. | 2b. This is tolerable. Standards violations should be permitted on a risk-adjusted basis. | 2c. This is desirable. Standards violations should be encouraged to foster anti-fragility. |
| EA community standards are unclear | 3a. This is intolerable. Clarity must be improved as a matter of urgency. | 3b. This is tolerable. Improved clarity would be nice but it's not a priority. | 3c. This is desirable. In the midst of chaos there is also opportunity. |

I'm a relative outsider and I don't know which world the community thinks it is in, or which stance it is adopting in that world.

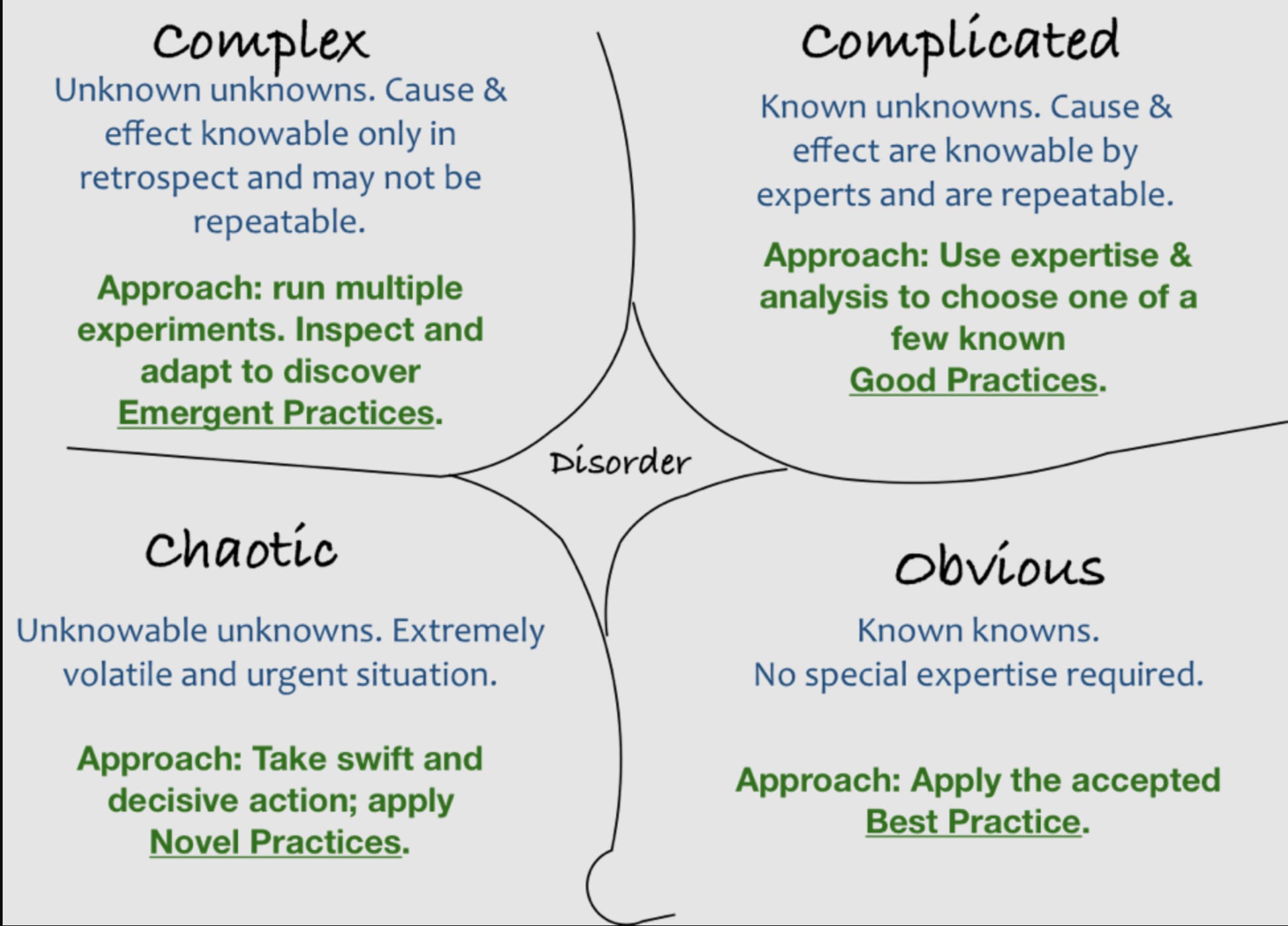

Some hypotheses:

1. When trying to guide altruists, it matters what problem-domain they are operating in.

   - In the obvious domain, solutions are known. If you have the aptitude, be a doctor rather than a small-time pimp.

   - In a complicated domain, solutions are found through analysis. It seems like the EA community is analytically minded, so it may have a bias towards characterising complex and chaotic problems as complicated. This is dangerous because in the complicated domain, the value of iteration and incrementalism is low, whereas in the complex domain it's very high.

   - In a complex domain, solutions are found through experimentation. There should be a strong preference for the ability to run more experiments.

   - In a chaotic domain, solutions are found through acting before your environment destroys you. There should be a strong preference for establishing easy-to-follow heuristics that are unlikely to introduce catastrophic risk across a wide range of environments.

2. Consequentialist ethics are inherently future-oriented. The future contains unknown unknowns and unknowable unknowns, so any system of consequentialist ethics is always working in the complex and chaotic domains. Consequentialism proceeds by analytical reasoning, even if a subset of the reasoning is probabilistic, and this is not applicable to the complex and chaotic domains, so it's not a useful framework.

3. What's actually happening when thinking through things from a consequentialist perspective is that you are imagining possible futures and identifying ways to get there, which is an imaginative process not an analytical one.

4. Better frameworks would be present-oriented, focusing on needs and how to sustainably meet them. Virtue ethics and deontological ethics are present-oriented ethical frameworks that may have some value. Orientation towards establishing services and infrastructure at the right scale and resilience level, rather than towards outputs (e.g. lives saved), would be more fruitful over the long term.

David_Althaus @ 2023-04-01T09:14 (+15)

Really great post, agree with almost everything, thanks for writing!

(More speculatively, it seems plausible to me that many EAs have worse judgement of character than average, because e.g. they project their good intentions onto others.)

Agreed. Another plausible reason is that system 1 / gut instincts play an important role in character judgment but many EAs dismiss their system 1 intuitions more or experience them less strongly than the average human. This is partly due to selection effects (EA appeals more to analytical people) but perhaps also because several EA principles emphasize putting more weight on reflective, analytical reasoning than on instincts and emotions (e.g., the heuristics and biases literature, several top cause areas (like AI) aren't intuitive at all, and so on). [1]

That's at least what I experienced first hand when interacting with a dangerous EA several years ago. I met a few people who had negative impressions of this person's character but couldn't really back them up with any concrete evidence or reasoning, and this EA continued to successfully deceive me for more than a year.[2] Personally, I didn't have a negative impression in the first place (partly because the concept of a non-trustworthy EA was completely out of my hypothesis space back then) so other people were clearly able to pick up on something that I couldn't.

[1] To be clear, I'm not saying that reflective reasoning is bad (it's awesome) or that we now should all trust our gut instincts when it comes to character judgment. Gut instincts are clearly fallible. The average human certainly isn't amazing at character judgment.

[2] FWIW, my experiences with this person were a major inspiration for this post.

Greg S @ 2023-04-03T05:16 (+12)

I agree with the bulk of this post, but disagree (or have misunderstood) in two specific ways:

First, I think it is essential that EA is a “practical project”, not merely (or primarily) an intellectual project. Peter Singer shared an intellectual insight linked to practical action, and the EA community used that insight to shape our thinking on impactful interventions and to make a practical difference in the world. It’s the link to practical action that, for me, is the most important part of what we do. I think EA is, and should be, fundamentally a practical project. Of course, we should do sufficient intellectual work to inform that practical project. But, if we lose sight of the practical project, I don’t think it’s “effective” or “altruism”.