Chris Olah on what the hell is going on inside neural networks

By 80000_Hours @ 2021-08-04T15:13 (+5)

This is a linkpost for #107 - Chris Olah on what the hell is going on inside neural networks. You can listen to the episode on that page, or by subscribing to the '80,000 Hours Podcast' wherever you get podcasts.

In this interview Chris and Rob discuss Chris's personal take on what we’ve learned so far about what machine learning (ML) algorithms are doing, and what’s next for the research agenda at Anthropic, where Chris works. They also cover:

- Why Chris thinks it’s necessary to work with the largest models

- Whether you can generalise from visual to language models

- What fundamental lessons we’ve learned about how neural networks (and perhaps humans) think

- What it means that neural networks are learning high-level concepts like ‘superheroes’, mental health, and Australiana, and can identify these themes across both text and images

- How interpretability research might help make AI safer to deploy, and Chris’ response to skeptics

- Why there’s such a fuss about ‘scaling laws’ and what they say about future AI progress

- What roles Anthropic is hiring for, and who would be a good fit for them

Imagine if some alien organism landed on Earth and could do these things.

Everybody would be falling over themselves to figure out how… And so really the thing that is calling out in all this work for us to go and answer is, “What in the wide world is going on inside these systems??”

–Chris Olah

Key points

Interpretability

Chris Olah: Well, in the last couple of years, neural networks have been able to accomplish all of these tasks that no human knows how to write a computer program to do directly. We can’t write a computer program to go and classify images, but we can write a neural network to create a computer program that can classify images. We can’t go and write computer programs directly to go and translate text highly accurately, but we can train the neural network to go and translate texts much better than any program we could have written.

Chris Olah: And it’s always seemed to me that the question that is crying out to be answered there is, “How is it that these models are doing these things that we don’t know how to do?” I think a lot of people might debate exactly what interpretability is. But the question that I’m interested in is, “How do these systems accomplish these tasks? What’s going on inside of them?”

Chris Olah: Imagine if some alien organism landed on Earth and could go and do these things. Everybody would be rushing and falling over themselves to figure out how the alien organism was doing things. You’d have biologists fighting each other for the right to go and study these alien organisms. Or imagine that we discovered some binary just floating on the internet in 2012 that could do all these things. Everybody would be rushing to go and try and reverse engineer what that binary is doing. And so it seems to me that really the thing that is calling out in all this work for us to go and answer is, “What in the wide world is going on inside these systems??”

Chris Olah: The really amazing thing is that as you start to understand what different neurons are doing, you actually start to be able to go and read algorithms off of the weights… We can genuinely understand how large chunks of neural networks work. We can actually reverse engineer chunks of neural networks and understand them so well that we can go and hand-write weights… I think that is a very high standard for understanding systems.

Chris Olah: Ultimately the reason I study (and think it’s useful to study) these smaller chunks of neural network is that it gives us an epistemic foundation for thinking about interpretability. The cost is that we’re talking about these small parts and we’re setting ourselves up for a struggle to be able to build up the understanding of large neural networks and make this sort of analysis really useful. But it has the upside that we’re working with such small pieces that we can really objectively understand what’s going on… And I think there’s just a lot of disagreement and confusion, and I think it’s just genuinely really hard to understand neural networks and very easy to misunderstand them, so having something like that seems really useful.

Universality

Chris Olah: Just like animals have very similar anatomies — I guess in the case of animals due to evolution — it seems neural networks actually have a lot of the same things forming, even when you train them on different data sets, even when they have different architectures, even though the scaffolding is different. The same features and the same circuits form. And actually I find that the fact that the same circuits form to be the most remarkable part. The fact that the same features form is already pretty cool, that the neural network is learning the same fundamental building blocks of understanding vision or understanding images.

Chris Olah: But then, even though it’s scaffolded on differently, it’s literally learning the same weights, connecting the same neurons together. So we call that ‘universality.’ And that’s pretty crazy. It’s really tempting when you start to find things like that to think “Oh, maybe the same things form also in humans. Maybe it’s actually something fundamental.” Maybe these models are discovering the basic building blocks of vision that just slice up our understanding of images in this very fundamental way.

Chris Olah: And in fact, for some of these things, we have found them in humans. So some of these lower-level vision things seem to mirror results from neuroscience. And in fact, in some of our most recent work, we’ve discovered something that was previously only seen in humans, these multimodal neurons.

Multimodal neurons

Chris Olah: So we were investigating this model called CLIP from OpenAI, which you can roughly think of as being trained to caption images or to pair images with their captions. So it’s not classifying images, it’s doing something a little bit different. And we found a lot of things that were really deeply qualitatively different inside it. So if you look at low-level vision actually, a lot of it is very similar, and again is actually further evidence for universality.

Chris Olah: A lot of the same things we find in other vision models occur also in early vision in CLIP. But towards the end, we find these incredibly abstract neurons that are just very different from anything we’d seen before. And one thing that’s really interesting about these neurons is they can read. They can go and recognize text and images, and they fuse this together, so they fuse it together with the thing that’s being detected.

Chris Olah: So there’s a yellow neuron for instance, which responds to the color yellow, but it also responds if you write the word yellow out. That will fire as well. And actually it’ll fire if you write out the words for objects that are yellow. So if you write the word ‘lemon’ it’ll fire, or the word ‘banana’ will fire. This is really not the sort of thing that you expect to find in a vision model. It’s in some sense a vision model, but it’s almost doing linguistic processing in some way, and it’s fusing it together into what we call these multimodal neurons. And this is a phenomenon that has been found in neuroscience. So you find these neurons also for people. There’s a Spider-Man neuron that fires both for the word Spider-Man as an image, like a picture of the word Spider-Man, and also for pictures of Spider-Man and for drawings of Spider-Man.

Chris Olah: And this mirrors a really famous result from neuroscience of the Halle Berry neuron, or the Jennifer Aniston neuron, which also responds to pictures of the person and the drawings of the person and to the person’s name. And so these neurons seem in some sense much more abstract and almost conceptual, compared to the previous neurons that we found. And they span an incredibly wide range of topics.

Chris Olah: In fact, a lot of the neurons, you just go through them and it feels like something out of a kindergarten class, or an early grade-school class. You have your color neurons, you have your shape neurons, you have neurons corresponding to seasons of the year, and months, to weather, to emotions, to regions of the world, to the leader of your country, and all of them have this incredible abstract nature to them.

Chris Olah: So there’s a morning neuron that responds to alarm clocks and times of the day that are early, and to pictures of pancakes and breakfast foods — all of this incredible diversity of stuff. Or season neurons that respond to the names of the season and the type of weather associated with them and all of these things. And so you have all this incredible diversity of neurons that are all incredibly abstract in this different way, and it just seems very different from the relatively concrete neurons that we were seeing before that often correspond to a type of object or such.

Can this approach scale?

Chris Olah: I think this is a very reasonable concern, and is the main downside of circuits. So right now, I guess probably the largest circuit that we’ve really carefully understood is at 50,000 parameters. And meanwhile, the largest language models are in the hundreds of billions of parameters. So there’s quite a few orders of magnitudes of difference that we need to get past if we want to even just get to the modern language models, let alone future generations of neural networks. Despite that, I am optimistic. I think we actually have a lot of approaches to getting past this problem.

In the interview, Chris lays out several paths to addressing the scaling challenge. First, the basic approach to circuits might be more scalable than it seems at first glance, both because large models may become easier to understand in some ways, and because understanding recurring “motifs” can sometimes give order-of-magnitude simplifications (e.g. equivariance). Relatedly, if the stakes are high enough, we might be willing to use large amounts of human labor to audit neural networks. Finally, circuits provide an epistemic foundation on which we can build an understanding of “larger-scale structure” like branches or “tissues”. These larger structures may either directly answer safety questions or help us focus our safety efforts on particular portions of the model.

How wonderful it would be if this could succeed

Chris Olah: We’ve talked a lot about the ways this could fail, and I think it’s worth saying how wonderful it would be if this could succeed. It’s both that it’s potentially something that makes neural networks much safer, but there’s also just some way in which I think it would aesthetically be really wonderful if we could live in a world where we have… We could just learn so much and so many amazing things from these neural networks. I’ve already learned a lot about silly things, like how to classify dogs, but just lots of things that I didn’t understand before that I’ve learned from these models.

Chris Olah: You could imagine a world where neural networks are safe, but where there’s just some way in which the future is kind of sad. Where we’re just kind of irrelevant, and we don’t understand what’s going on, and we’re just humans who are living happy lives in a world we don’t understand. I think there’s just potential for a future — even with very powerful AI systems — that isn’t like that. And that’s much more humane and much more a world where we understand things and where we can reason about things. I just feel a lot more excited for that world, and that’s part of what motivates me to try and pursue this line of work.

Chris Olah: There’s this idea of a microscope AI. So people sometimes will talk about agent AIs that go and do things, and oracle AIs that just sort of give us wise advice on what to do. And another vision for what a powerful AI system might be like — and I think it’s a harder one to achieve than these others, and probably less competitive in some sense, but I find it really beautiful — is a microscope AI that just allows us to understand the world better, or shares its understanding of the world with us in a way that makes us smarter and gives us a richer perspective on the world. It’s something that I think is only possible if we could really succeed at this kind of understanding of models, but it’s… Yeah, aesthetically, I just really prefer it.

Ways that interpretability research could help us avoid disaster

Chris Olah: On the most extreme side, you could just imagine us fully, completely understanding transformative AI systems. We just understand absolutely everything that’s going on inside them, and we can just be really confident that there’s nothing unsafe going on in them. We understand everything. They’re not lying to us. They’re not manipulating us. They are just really genuinely trying to be maximally helpful to us. And sort of an even stronger version of that is that we understand them so well that we ourselves are able to become smarter, and we sort of have a microscope AI that gives us this very powerful way to see the world and to be empowered agents that can help create a wonderful future.

Chris Olah: Okay. Now let’s imagine that actually interpretability doesn’t succeed in that way. We don’t get to the point where we can totally understand a transformative AI system. That was too optimistic. Now what do we do? Well, maybe we’re able to go and have this kind of very careful analysis of small slices. So maybe we can understand social reasoning and we can understand whether the model… We can’t understand the entire model, but we can understand whether it’s being manipulative right now, and that’s able to still really reduce our concerns about safety. But maybe even that’s too much to ask. Maybe we can’t even succeed at understanding that small slice.

Chris Olah: Well, I think then what you can fall back to is maybe just… With some probability you catch problems, you catch things where the model is doing something that isn’t what you want it to do. And you’re not claiming that you would catch even all the problems within some class. You’re just saying that with some probability, we’re looking at the system and we catch problems. And then you sort of have something that’s kind of like a mulligan. You made a mistake and you’re allowed to start over, where you would have had a system that would have been really bad and you realize that it’s bad with some probability, and then you get to take another shot.

Chris Olah: Or maybe as you’re building up to powerful systems, you’re able to go and catch problems with some probability. That sort of gives you a sense of how common safety problems are as you build more powerful systems. Maybe you aren’t very confident you’ll catch problems in the final system, but you can sort of help society be calibrated on how risky these systems are as you build towards that.

Scaling Laws

Chris Olah: Basically when people talk about scaling laws, what they really mean is there’s a straight line on a log-log plot. And you might ask, “Why do we care about straight lines on log-log plots?” There’s different scaling laws for different things, and the axes depend on which scaling law you’re talking about. But probably the most important scaling law — or the scaling law that people are most excited about — has model size on one axis and loss on the other axis. [So] high loss means bad performance. And so the observation you have is that there is a straight line. Where as you make models bigger, the loss goes down, which means the model is performing better. And it’s a shockingly straight line over a wide range.
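To make the "straight line on a log-log plot" idea concrete, here is a minimal sketch in Python. It assumes a power-law form, loss = (N_c / N) ** alpha, where N is the number of parameters. The constants `ALPHA_TRUE` and `N_C`, the model sizes, and the helper names are all hypothetical values chosen for illustration, not figures from the interview or from any real model. Taking logs of both sides turns the power law into a line whose slope is -alpha, which is why a least-squares line fit in log space recovers the exponent.

```python
import math

# Hypothetical constants for an assumed power law L(N) = (N_C / N) ** ALPHA_TRUE.
# These are illustrative numbers, NOT measurements from any real model.
ALPHA_TRUE = 0.08
N_C = 1e13


def synthetic_loss(n_params):
    """Loss predicted by the assumed power law for a model with n_params parameters."""
    return (N_C / n_params) ** ALPHA_TRUE


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


# Made-up model sizes spanning four orders of magnitude.
sizes = [1e6, 1e7, 1e8, 1e9, 1e10]
losses = [synthetic_loss(n) for n in sizes]

# On log-log axes the power law becomes: log L = -alpha * log N + alpha * log N_C.
log_sizes = [math.log10(n) for n in sizes]
log_losses = [math.log10(loss) for loss in losses]
slope, intercept = fit_line(log_sizes, log_losses)

# Extrapolate the fitted line to a model 10x larger than any in the data.
predicted_loss = 10 ** (slope * math.log10(1e11) + intercept)

print(f"fitted slope (approximately -alpha): {slope:.3f}")
print(f"extrapolated loss at 1e11 parameters: {predicted_loss:.3f}")
```

Because the data here is generated from an exact power law, the fit is perfect by construction. Real measurements are noisy, and whether the line keeps holding beyond the measured range is an empirical question.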

Chris Olah: The really exciting version of this to me … is that maybe there’s scaling laws for safety. Maybe there’s some sense in which whether a model is aligned with you or not may be a function of model size, and sort of how much signal you give it to do the human-aligned task. And we might be able to reason about the safety of models that are larger than the models we can presently build. And if that’s true, that would be huge. I think there’s this way in which safety is always playing catch-up right now, and if we could create a way to think about safety in terms of scaling laws and not have to play catch-up, I think that would be incredibly remarkable. So I think that’s something that — separate from the capabilities implications of scaling laws — is a reason to be really excited about them.

Articles, books, and other media discussed in the show

Selected work Chris has been involved in

- Circuits Thread (start at Zoom In: An Introduction to Circuits)

- Multimodal Neurons in Artificial Neural Networks

- Building Blocks of Interpretability

- Activation Atlases

- Anthropic (and their vacancies page)

- Interpretability vs Neuroscience

- See Chris’ website for a more complete overview of his interpretability research and pedagogical writing

- DeepDream

- Microscope

- Understanding RL Vision

Papers

- Using Artificial Intelligence to Augment Human Intelligence

- Scaling Laws for Transfer

- Visualizing and Measuring the Geometry of BERT

- A Multiscale Visualization of Attention in the Transformer Model

- What does BERT Look At? An Analysis of BERT’s Attention

- Circuits thread on Distill

Other links

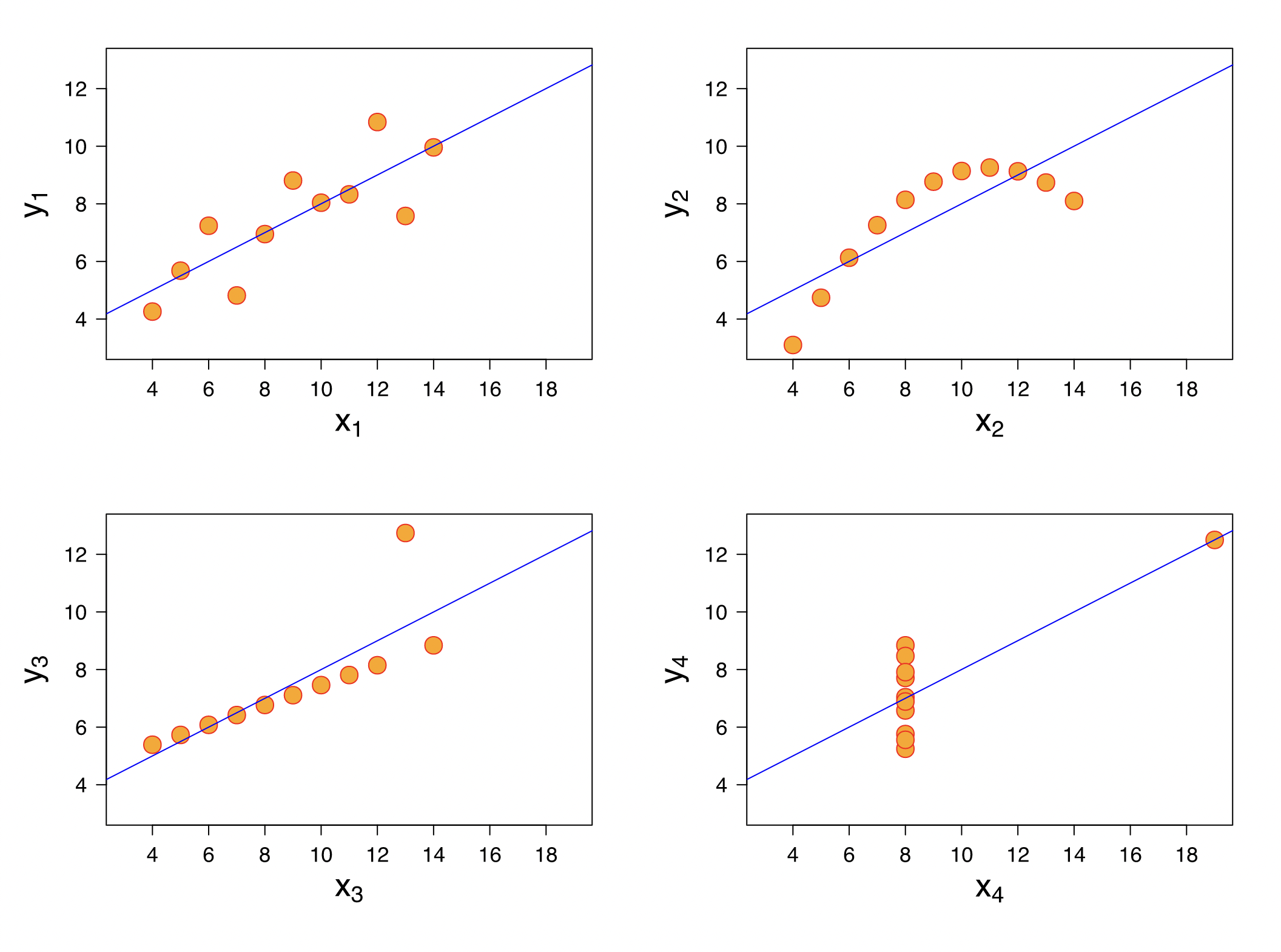

- Anscombe’s quartet

- Danny Hernandez on forecasting and the drivers of AI progress

- Media for Thinking the Unthinkable

- CLIP

- AI and efficiency

- Learning from Human Preferences

Transcript

Rob’s intro [00:00:00]

Rob Wiblin: Hi listeners, this is the 80,000 Hours Podcast, where we have unusually in-depth conversations about the world’s most pressing problems, what you can do to solve them, and how come there’s a cloud there but not a cloud right there just next to it. I’m Rob Wiblin, Head of Research at 80,000 Hours.

I’m really excited to share this episode. Chris Olah, today’s guest, is one of the top machine learning researchers in the world.

He’s also excellent at communicating complex ideas to the public, with his blog posts and Twitter threads attracting millions of readers. A survey of listeners even found he was one of the most-followed people among subscribers to this show.

And yet despite being a big deal, this is the first podcast, and indeed long interview, Chris has ever done. Fortunately I don’t think you’d be able to tell he hasn’t actually done this many times before!

We ended up having so much novel content to cover with Chris that we did more than one recording session, and the end result is two very different episodes we’re both really happy with.

This first one focuses on Chris’ technical work, and explores topics like:

- What is interpretability research, and what’s it trying to solve

- How neural networks work, and how they think

- ‘Multimodal neurons’, and their implications for AI safety work

- Whether this approach can scale

- Digital suffering

- Scaling laws

- And how wonderful it would be if this work could succeed

If you find the technical parts of this episode a bit hard going, before you stop altogether — I’d recommend skipping to the chapter called “Anthropic, and the safety of large models”, or [2:11:05] in to hear all about the very exciting project Chris is helping to grow right now. You don’t have to be an AI researcher to work at Anthropic, so I think people from a wide range of backgrounds could benefit from sticking around till the end.

For what it’s worth I’m far from being an expert on AI but I was able to follow Chris and learn a lot about what’s really going on with research into big machine learning models.

The second episode, which if everything goes smoothly we hope to release next week, is focused on his fascinating personal story — including how he got to where he is today without having a university degree.

One final thing: Eagle-eared listeners will notice that our audio changes a few times, and that’s because, as is often the case with longer episodes, Keiran cut together sections from multiple sessions to try and create a better final product.

Without further ado, I bring you Chris Olah.

The interview begins [00:02:19]

Rob Wiblin: Today I’m speaking with Chris Olah. Chris is a machine learning researcher currently focused on neural network interpretability. Until last December he led OpenAI’s interpretability team but along with some colleagues he recently moved on to help start a new AI lab focussed on large models and safety called Anthropic.

Rob Wiblin: Before OpenAI he spent 4 years at Google Brain developing tools to visualize what’s going on in neural networks. Chris was hugely impactful at Google Brain. He was second author on the launch of DeepDream back in 2015, which I think almost everyone has seen at this point. He has also pioneered feature visualization, activation atlases, building blocks of interpretability, TensorFlow, and even co-authored the famous paper “Concrete Problems in AI Safety.”

Rob Wiblin: On top of all that in 2018 he helped found the academic journal Distill, which is dedicated to publishing clear communication of technical concepts. And Chris is himself a writer who is popular among many listeners to this show, having attracted millions of readers by trying to explain cutting-edge machine learning in highly accessible ways.

Rob Wiblin: In 2012 Chris took a $100k Thiel Fellowship, a scholarship designed to encourage gifted young people to go straight into research or entrepreneurship rather than go to a university — so he’s actually managed to do all of the above without a degree.

Rob Wiblin: Thanks so much for coming on the podcast Chris!

Chris Olah: Thanks for having me.

Rob Wiblin: Alright, I hope that we’re going to get to talk about your new project Anthropic and the interpretability research, which has been one of your big focuses lately. But first off, it seems like you kind of spent the last eight years contributing to solving the AI alignment problem in one form or another. Can you just say a bit about how you conceive of the nature of that problem at a high level?

Chris Olah: When I talk to other people about safety, I feel like they often have pretty strong views, or developed views on what the nature of the safety problem is and what it looks like. And I guess actually, one of the lessons I’ve learned trying to think about safety and work on it, and think about machine learning broadly, has been how often I seem to be wrong in retrospect, or I think that I wasn’t thinking about things in the right way. And so, I think I don’t really have a very confident take on safety. It seems to me like it’s a problem that we actually don’t know that much about. And that trying to theorize about it a priori can really easily lead you astray.

Chris Olah: And instead, I’m very interested in trying to empirically understand these systems, empirically understand how they might be unsafe, and how we might be able to improve that. And in particular, I’m really interested in how we can understand what’s really going on inside these systems, because that seems to me like one of the biggest tools we can have in understanding potential failure modes and risks.

Rob Wiblin: In that case, let’s waste no time on big-picture theoretical musings, and we can just dive right into the concrete empirical technical work that you’ve been doing, which in this case is kind of reverse engineering neural networks in order to look inside them and understand what’s actually going on.

Rob Wiblin: The two online articles that I’m going to be referring to most here are the March 2020 article Zoom In: An Introduction to Circuits, which is the first piece in a series of articles about the idea of circuits, which we’ll discuss in a second. And also the very recent March 2021 article Multimodal Neurons in Artificial Neural Networks.

Rob Wiblin: Obviously we’ll link to both of those, and they’ve got lots of beautifully and carefully designed images, so potentially worth checking out if listeners really want to understand all of this thoroughly. They’re both focused on the issue of interpretability in neural networks, visual neural networks in particular. I wouldn’t lie to you, those articles were kind of pushing my understanding and I didn’t follow them completely, but maybe Chris can help me get it out properly here.

Interpretability [00:05:54]

Rob Wiblin: First though, can you explain what problem this line of research is aiming to solve, at a big picture level?

Chris Olah: Well, in the last couple of years, neural networks have been able to accomplish all of these tasks that no human knows how to write a computer program to do directly. We can’t write a computer program to go and classify images, but we can write a neural network to create a computer program that can classify images. We can’t go and write computer programs directly to go and translate text highly accurately, but we can train the neural network to go and translate texts much better than any program we could have written.

Chris Olah: And it’s always seemed to me that the question that is crying out to be answered there is, “How is it that these models are doing these things that we don’t know how to do?” I think a lot of people might debate exactly what interpretability is. But the question that I’m interested in is, “How do these systems accomplish these tasks? What’s going on inside of them?”

Chris Olah: Imagine if some alien organism landed on Earth and could go and do these things. Everybody would be rushing and falling over themselves to figure out how the alien organism was doing things. You’d have biologists fighting each other for the right to go and study these alien organisms. Or imagine that we discovered some binary just floating on the internet in 2012 that could do all these things. Everybody would be rushing to go and try and reverse engineer what that binary is doing. And so it seems to me that really the thing that is calling out in all this work for us to go and answer is, “What in the wide world is going on inside these systems??”

Rob Wiblin: What are the problems with not understanding it? Or I guess, what would be the benefits of understanding it?

Chris Olah: Well, I feel kind of worried about us deploying systems — especially systems in high-stakes situations or systems that affect people’s lives — when we don’t know how they’re doing the things they do, or why they’re doing them, and really don’t know how to reason about how they might behave in other unanticipated situations.

Chris Olah: To some extent, you can get around this by testing the systems, which is what we do. We try to test them in all sorts of cases that we’re worried about how they’re going to perform, and on different datasets. But that only gets us to the cases that are covered in our datasets or that we explicitly thought to go and test for. And so, I think we actually have a great deal of uncertainty about how these systems are going to behave.

Chris Olah: And I think especially as they become more powerful, you have to start worrying about… What if in some sense they’re doing the right thing, but they’re doing it for the wrong reasons? They’re implementing the correct behavior, but maybe the actual algorithm that is underlying that is just trying to get reward from you, in some sense, rather than trying to help you. Or maybe it’s relying on things that are biased, and you didn’t realize that. And so I think that being able to understand these systems is a really, really important way to try and address those kinds of concerns.

Rob Wiblin: I guess understanding how it’s doing what it’s doing might make it a bunch easier to predict how it’s going to perform in future situations, or — if the situation changes — the situation in which you’re deploying it. Whereas if you have no understanding of what it’s doing, then you’re kind of flying blind. And maybe the circumstances could change. What do they call it? Change of domain, or…?

Chris Olah: Yeah. So you might be worried about distributional shift, although in some ways I think that doesn’t even emphasize quite enough what my biggest concern is. Or I feel maybe it makes it sound like this kind of very technical robustness issue.

Chris Olah: I think that what I’m concerned about is something more like… There’s some sense in which modern language models will sometimes lie to you. In that there are questions to which they knew the right answer, in some sense. If you go and pose the question in the right way, they will give you the correct answer, but they won’t give you the right answer in other contexts. And I think that’s sort of an interesting microcosm for a world in which these models are… In some sense they’re capable of doing things, but they’re trying to accomplish a goal that’s different from the one you want. And that may, in sort of unanticipated ways, cause problems for you.

Chris Olah: I guess another way that I might frame this is, “What are the unknown safety problems?” What are the unknown unknowns of deploying these systems? And if there’s something that you sort of anticipate as a problem, you can test for it. But I think as these systems become more and more capable, they will sort of abruptly sometimes change their behavior in different ways. And there’s always unknown unknowns. How can you hope to go and catch those? I think that’s a big part of my motivation for wanting to study these systems, or how I think studying these systems can make things more safe.

Rob Wiblin: Let’s just maybe give listeners a really quick reminder of how neural networks work. We’ve covered this in previous interviews, including a recent one with Brian Christian. But basically you can imagine information flowing between a whole bunch of nodes, and the nodes are connected to one another, and which nodes are connected to which other ones — kind of like which neurons are connected to other neurons in the brain — is determined by this learning process. The weightings that you get between these different neurons is determined by this learning process. And then when a neuron gets enough positive input, then it tends to fire and then pass on a signal onto the next neuron, which is, in a sense, in the next layer of the network. And so, information is processed and passed between these neurons until it kind of spits out an answer at the other end. Is that a sufficiently vague or sufficiently accurate description for what comes next?

Chris Olah: If I was going to nitpick slightly, usually the, “Which neurons connect to which neurons,” doesn’t change. That’s usually static, and the weights evolve over training.

Rob Wiblin: So rather than disappear, the weights just go to a low level.

Chris Olah: Yeah.
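The picture Rob describes, with Chris's correction, can be sketched as a toy forward pass in plain Python. Everything here is made up for illustration: the layer sizes, the weights, the inputs, and the function names `layer` and `forward` are hypothetical, and ReLU stands in for "fires when it gets enough positive input". The wiring (which neuron feeds which) is fixed by the shape of the weight lists, and only the numbers in them would change during training. The third input's weights are set to 0.0 to show a connection that still exists but contributes nothing.

```python
def relu(x):
    """A neuron 'fires' in proportion to its input once that input is positive."""
    return max(0.0, x)


def layer(inputs, weights, biases):
    """Each output neuron sums its weighted inputs, adds a bias, then applies ReLU."""
    return [
        relu(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs, params):
    """Pass activations through each layer in turn and return the final layer's output."""
    activations = inputs
    for weights, biases in params:
        activations = layer(activations, weights, biases)
    return activations


# Hypothetical hand-picked parameters: 3 inputs -> 2 hidden neurons -> 1 output.
# The connectivity is static; training would only change these numbers.
params = [
    ([[0.5, -1.0, 0.0], [1.0, 1.0, 0.0]], [0.0, -0.5]),
    ([[1.0, 2.0]], [0.0]),
]

output = forward([1.0, 0.25, 3.0], params)

# The third input is still wired in, but its weights are 0.0, so changing it
# has no effect on the result.
output_other_third_input = forward([1.0, 0.25, -7.0], params)

print(output, output_other_third_input)
```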

Rob Wiblin: As I understand it, based on reading these two pieces, you and a bunch of other colleagues at Google Brain and OpenAI have made pretty substantial progress on interpretability, and this has been a pretty big deal among ML folks, or at least some ML folks. How long have you all been at this?

Chris Olah: I’ve been working on interpretability for about seven years, on and off. I’ve worked on some other ML things interspersed between it, but it’s been the main thing I’ve been working on. Originally, it was a pretty small set of people who were interested in these questions. But over the years, I’ve been really lucky to find a number of collaborators who are also excited about these types of questions. And more broadly, a large field has grown around this, often taking different approaches, instead of the ones that I’m pursuing. But there’s now a non-trivial field working on trying to understand neural networks in different ways.

Rob Wiblin: What different approaches have you tried over the years?

Chris Olah: I’ve tried a lot of things over the years, and a lot of them haven’t worked. Honestly, in retrospect, it’s often been obvious why. At one point I tried this sort of topological approach of trying to understand how neural networks bend to data. That doesn’t scale to anything beyond the most trivial systems. Then I tried this approach of trying to do dimensionality reduction of representations. That doesn’t work super well either, at least for me.

Chris Olah: And to cut a long story short, the thing that I have ended up settling on primarily is this almost stupidly simple approach of just trying to understand what each neuron does, and how all the neurons connect together, and how that gives rise to behavior. The really amazing thing is that as you start to understand what different neurons are doing, you actually start to be able to go and read algorithms off of the weights.

Chris Olah: There’s a slightly computer science-heavy analogy that I like, which might be a little bit hard for some listeners to follow, but I often think of neurons as being variables in a computer program, or registers in assembly. And I think of the weights as being code or assembly instructions. You need to understand what the variables are storing if you want to understand the code. But then once you understand that, you can actually sort of see the algorithms that the computer program is running. That’s where things I think become really, really exciting and remarkable. I should add by the way, about all of this, I’m the one who’s here talking about it, but all of this has been the result of lots of people’s work, and I’m just incredibly lucky to have had a lot of really amazing collaborators working on this with me.

Rob Wiblin: Yeah, no doubt. So what can we do now that we couldn’t do 10 years ago, thanks to all of this work?

Chris Olah: Well, I think we can genuinely understand how large chunks of neural networks work. We can actually reverse engineer chunks of neural networks and understand them so well that we can go and hand-write weights. You just take a neural network where all the weights are set to zero, and you write by hand the weights, and you can go and re-implement a neural network that does the same thing as that chunk of the neural network did. You can even splice it into a previous network and replace the part that you understood. And so I think that is a very high standard for understanding systems. I sometimes like to imagine interpretability as being a little bit like cellular biology or something, where you’re trying to understand the cell. And I feel maybe we’re starting to get to the point where we can understand one small organelle in the cell, or something like this, and we really can nail that down.

Rob Wiblin: So we’ve gone from having a black box to having a machine that you could potentially redesign manually, or at least parts of it.

Chris Olah: Yeah. I think that the main story, at least from the circuits approach, is that we can take these small parts. And they’re small chunks, but we can sort of fully understand them.

Features and circuits [00:15:11]

Rob Wiblin: So two really key concepts here are features and circuits. Can you explain for the audience what those are and how you pull them out?

Chris Olah: When we talk about features, we most often (though not always) are referring to an individual neuron. So you might have an individual neuron that does something like responds when there’s a curve present, or responds to a line, or responds to a transition in colors. Later on, we’ll probably talk about neurons that respond to Spider-Man or respond to regions of the world, so they can also be very high level.

Chris Olah: And a circuit is a subgraph of a neural network. So the nodes are features, so the node might be a curve detector and a bunch of line detectors, and then you have the weights of how they connect together. And those weights are sort of the actual computer program that’s running, that’s going and building later features from the earlier features.

Rob Wiblin: So features are kind of smaller things like lines and curves and so on. And then a circuit is something that puts together those features to try to figure out what the overall picture is?

Chris Olah: A circuit is a partial program that’s building the later features from the earlier features. So circuits are connections between features, and they contribute to building the later features from the earlier features.

Rob Wiblin: I see. Okay. If you can imagine within, say, a layer of a neural network, you’ve got different neurons that are picking up features and then the way that they go onto the next layer, they’re the combinations of weights between these different kinds of features that have been identified, and how they push forward into features that are identified in the next layer…that’s a circuit?

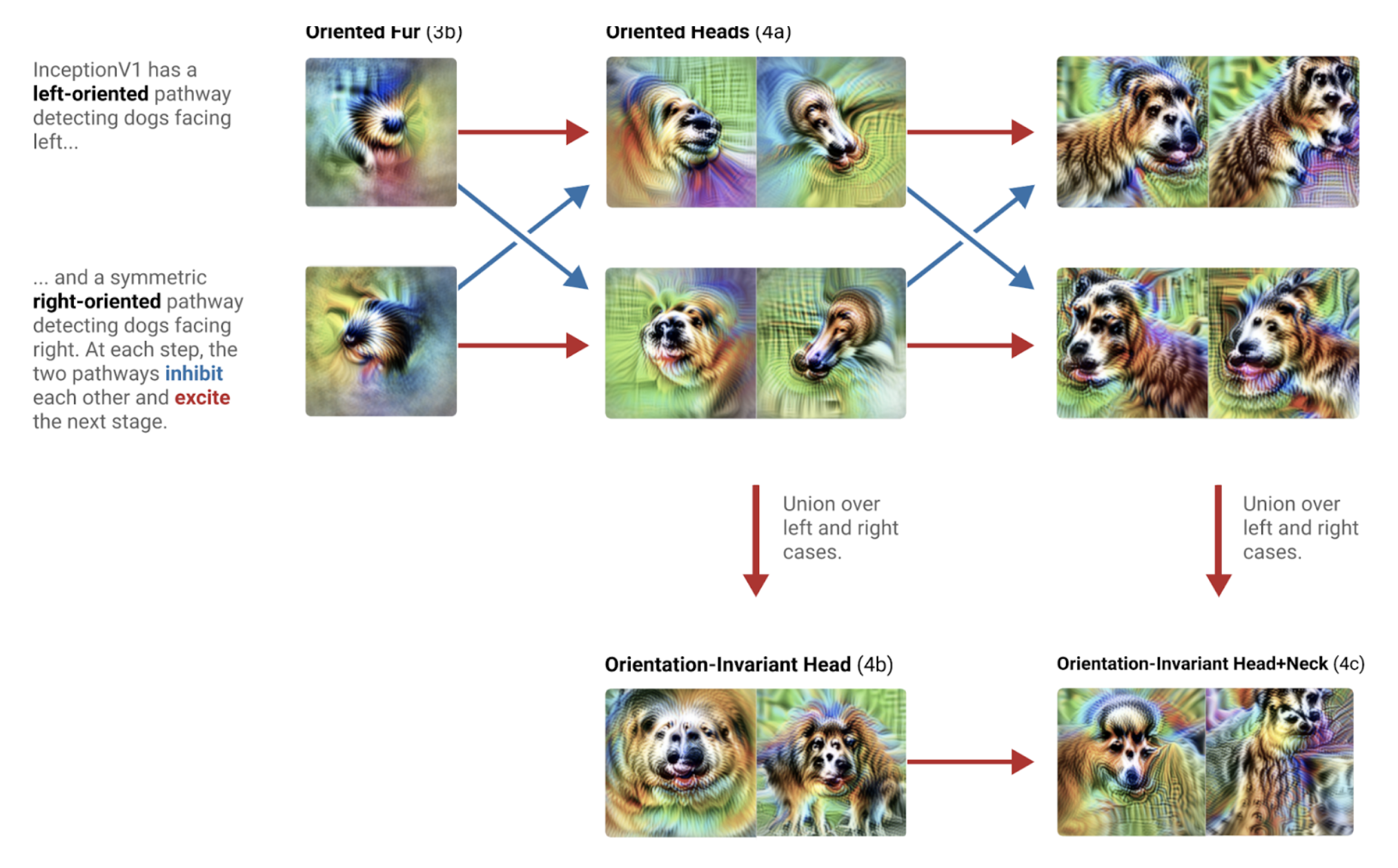

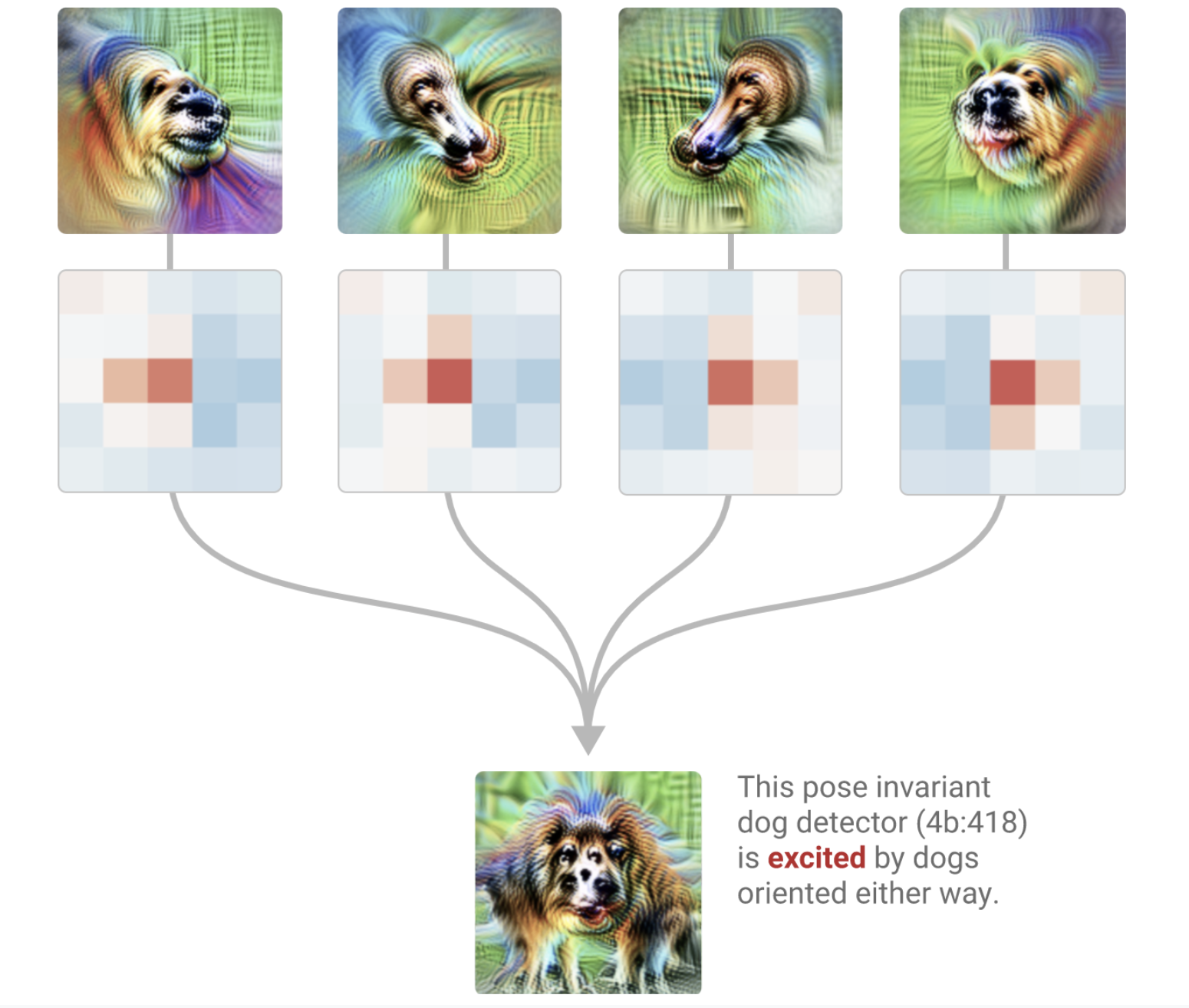

Chris Olah: Yeah. Although often we’d look at a subset of neurons that are tightly connected together, so you might be interested in how, say, the curve detectors in one layer are built from features in the previous layer. And then there’s a subset of features of the previous layer that are tightly intertwined with that, and then you could look at that, so you’re looking at a smaller subgraph. You might also go over multiple layers, so you might look at… There’s actually this really beautiful circuit for detecting dog heads.

Chris Olah: And I know it sounds crazy to describe that as beautiful, but I’ll describe the algorithm because I think it’s actually really elegant. So in InceptionV1, there’s actually two different pathways for detecting dog heads that are facing to the left and dog heads that are facing to the right. And then, along the way, they mutually inhibit each other. So at every step it goes and builds the better dog head facing each direction and has it so that the opposite one inhibits it. So it’s sort of saying, “A dog’s head can only be facing left or right.”

Chris Olah: And then finally at the end, it goes and unions them together to create a dog head detector, that is pose-invariant, that is willing to fire both for a dog head facing left and a dog head facing right.
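
The left/right dog-head algorithm described above can be sketched in a few lines of Python. This is a toy, single-step version with made-up weights (the `inhibition` parameter and the evidence values are assumptions for illustration), whereas the circuit Chris describes in InceptionV1 builds up the detectors over several layers.

```python
def relu(x):
    return max(0.0, x)


def dog_head_circuit(left_evidence, right_evidence, inhibition=1.0):
    """Toy version of the two mutually inhibiting dog-head pathways.

    Each pathway is excited by its own evidence and inhibited by the
    opposite pathway. The two are then summed ("unioned") into a
    pose-invariant detector. All weights here are illustrative.
    """
    left = relu(left_evidence - inhibition * right_evidence)
    right = relu(right_evidence - inhibition * left_evidence)
    any_pose = relu(left + right)
    return left, right, any_pose


print(dog_head_circuit(1.0, 0.25))  # left-facing evidence dominates
print(dog_head_circuit(0.25, 1.0))  # right-facing evidence dominates
```

In both calls only one of the two directional units is active, while the final unit responds equally to either pose.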

Rob Wiblin: So I guess you’ve got neurons that kind of correspond to features. So a neuron that fires when there’s what appears to be fur, and then a neuron that fires when there appears to be a curve of a particular kind of shape. And then a bunch of them are linked together and they either fire together or inhibit one another, to indicate a feature at a higher level of abstraction. Like, “This is a dog head,” and then more broadly, you said, “This is this kind of dog.” It would be the next layer. Is that kind of right?

Chris Olah: That’s directionally right.

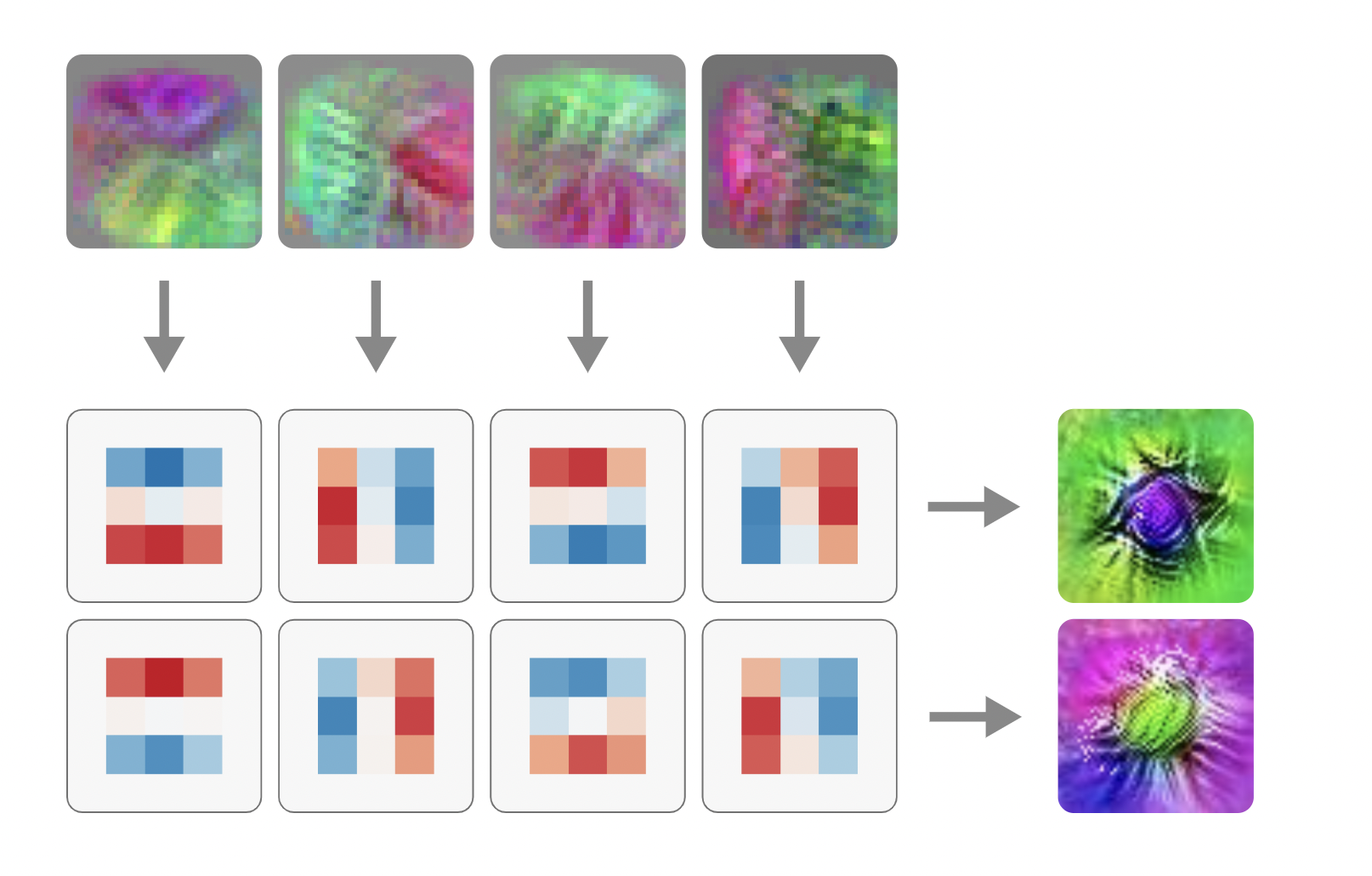

Rob Wiblin: Interesting. Was it hard to come up with the technical methods that you use to identify what feature a neuron corresponds to? And how do you pull together all of the different connections and weights that should be seen as functioning as a circuit?

Chris Olah: There’s this interesting thing where neural network researchers often look at weights in the first layer, so it’s actually very common to see papers where people look at the weights in the first layer. And the reason is that those weights connect to red, green, and blue channels in an image. If you’re, say, doing vision, those weights are really easy to interpret because you know what the inputs to those weights are. And you almost never see people look at weights anywhere else in the model in the same kind of way. And I think the reason is that they don’t know what the inputs and the outputs to those weights are. So if you want to study weights anywhere other than the absolute input, or maybe the absolute output, you need to have some technique for understanding what the neurons that are going in and out of those weights are.

Chris Olah: And so there’s a number of ways you can do that. One thing you could do is you could just look at what we call dataset examples. Just feed lots of examples through the neural network and look for the ones that cause neurons to fire. That’d be a very neuroscience-y approach. But another approach that we found is actually very effective is to optimize the input. We call this ‘feature visualization.’ You just do gradient descent to go in and create an image that causes a neuron to fire really strongly.

Chris Olah: And the nice thing about that is it separates correlation from causation. In that resulting image, you know that everything that’s there is there because it caused the neuron to fire. And then we often use these feature visualizations, both as they’re a useful clue for understanding what the neurons are doing, but we also often just use them like variable names. So rather than having a neuron be mixed4c:447, we say have an image that stimulates it. It’s a car detector, so you have an image of a car, and that makes it much easier to go and keep track of all the neurons and reason how they connect together.

Rob Wiblin: So it seems you’ve got this method where you choose a neuron, or you choose some combination of connections that forms a circuit. And then you try to figure out what image is going to maximally make that neuron, or that circuit, fire. And you said gradient descent, which, as I understand it, is you choose a noisy image and then you just bit by bit get it to fire more and more, until you’ve got an image that’s pretty much optimally designed, through this iterative process, to really slam that neuron hard. And so, if that neuron is there to pick up fur, then you’ll figure out the archetypal fur-thing that this neuron is able to identify. Is that right?

Chris Olah: Yeah. And then once you understand the features, you can use that as scaffolding to then understand the circuit.
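
The two techniques mentioned above, dataset examples and feature visualization, can be sketched on a toy "neuron" with four input pixels. Everything here is an assumption for illustration: the weights, the bias, and the use of a finite-difference gradient in place of the backpropagated gradient a real framework would compute. It is a sketch of the idea, not the method used in the actual research.

```python
import random

# Hypothetical neuron: likes pixels 0 and 2, dislikes pixels 1 and 3.
WEIGHTS = [1.0, -1.0, 1.0, -1.0]
BIAS = 0.5


def neuron(image):
    pre_activation = sum(w * p for w, p in zip(WEIGHTS, image)) + BIAS
    return max(0.0, pre_activation)


def numeric_gradient(f, x, eps=1e-4):
    """Central-difference estimate of df/dx for each pixel."""
    grad = []
    for i in range(len(x)):
        up = x[:i] + [x[i] + eps] + x[i + 1:]
        down = x[:i] + [x[i] - eps] + x[i + 1:]
        grad.append((f(up) - f(down)) / (2 * eps))
    return grad


def visualize(f, n_pixels, steps=50, lr=0.1, seed=0):
    """Start from a noisy grey image and climb the neuron's activation."""
    rng = random.Random(seed)
    image = [rng.uniform(0.4, 0.6) for _ in range(n_pixels)]
    for _ in range(steps):
        grad = numeric_gradient(f, image)
        # Step uphill, then clip each pixel back into the valid range [0, 1].
        image = [min(1.0, max(0.0, p + lr * g)) for p, g in zip(image, grad)]
    return image


# Dataset examples: which existing image makes the neuron fire most?
dataset = [[1.0, 1.0, 1.0, 1.0], [1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
top_example = max(dataset, key=neuron)

# Feature visualization: optimize the input itself.
optimized = visualize(neuron, n_pixels=4)
print(top_example, optimized, neuron(optimized))
```

The optimized image ends up fully saturated on the pixels the neuron likes and empty on the ones it dislikes, which is a small-scale version of the "super stimulus" effect discussed later in the conversation.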

Rob Wiblin: I see, okay. I think I didn’t realize this until I was doing research for this episode, but you’ve got this Microscope website at OpenAI, they’re hosting it, where you can work through this big image recognition neural network and kind of pick out… I don’t know whether it’s all of them, but there’s many different neurons, and then see what are the kinds of images that cause this to fire. And you’d be like, “Oh, that’s something that’s identifying this kind of shape,” or, “This is a neuron that corresponds to this kind of fur color,” or whatever else. And you can see how this flows through the network in quite a sophisticated way. It really is pulling apart this machine and seeing what each of the little pieces does.

Chris Olah: Yeah. Microscope is wonderful. It allows you to go and look at any neuron that you want. I think it actually points to a deeper, underlying advantage or thing that’s nice about studying neural networks, which is that we can all look at exactly the same neural network. And so there could be these sort of standard models, where every neuron is exactly the same as the model that I’m studying. And we can just go and have one organization, in this case, OpenAI, go and create a resource once, that makes it easy to go and reference every neuron in that model. And then every researcher who’s studying that model can easily go and look at arbitrary parts of it just by navigating to a URL.

Rob Wiblin: By the way, people have probably seen DeepDream and they might have seen, if they’ve looked at any of these articles, the archetypal images and shapes and textures that are firing these particular feature neurons, but the colors are always so super weird. It produces this surrealist DeepDream thing. I would think if you had a neuron that was firing for fur, then it would actually look like a cat’s fur. Or it was a beer shop sign, if it’s a shop-sign neuron. But the fact that they kind of look like this crazy psychedelic experience… Is there some reason why that’s the case?

Chris Olah: After we published DeepDream, we got a number of emails from quite serious neuroscientists asking us if we wanted to go and study with them, whether we could explain experiences that people have when they’re on drugs, using DeepDream. So I think this analogy is one that really resonates with people.

Chris Olah: But why are there those colors? Well, I think there’s a few reasons. I think the main one is you’re sort of creating a super stimulus, you’re creating the stimulus that most causes the neurons to fire. And the super stimulus, it’s not surprising that it’s going to push colors to very extreme regimes, because that’s the most extreme version of the thing that neuron is looking for.

Chris Olah: There’s also a more subtle version of that answer, which is, suppose that you have a curve detector. It turns out that actually one thing the neuron is doing is just looking for any change in color across the curve. Because if there’s different colors on both sides, that sort of means that it’s stronger. In fact, this is just generally true of line detectors; it’s very early on in neural networks. The line detector is… A line detector fires more if there’s different colors on both sides of the line. And so the maximal stimulus for that just has any difference in colors. It doesn’t even care what the two colors are, it just wants to see a difference in colors.

Rob Wiblin: So the reason that they can look kind of weird is just that these feature detectors are mostly just picking up differences between things, and so they want a really stark difference in color. And maybe the specific color that the thing happens to be doesn’t matter so much. So it doesn’t have to look like actual cat fur, it’s just the color gradient that’s getting picked up.

Chris Olah: Depends on the case and it depends a lot also on the particular neuron. There’s one final reason, which is a bit more technical, which is that often neural networks are trained with a little bit of hue rotation, sometimes a lot of hue rotation, which means that the images, the colors, are sort of randomly shuffled a little bit before the neural network sees them. And the idea is that this makes the neural network a bit more robust, but it makes neural networks a little bit less sensitive to the particular color that they are seeing. And you can actually often tell how much hue rotation that neural network was trained with by exactly the color patterns that you’re seeing, when you do these feature visualizations.
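
For readers unfamiliar with hue rotation, here is a minimal sketch of what such an augmentation might look like, using Python's standard `colorsys` module. The function names `rotate_hue` and `augment`, and the 30-degree range, are assumptions for illustration. Real training pipelines typically do this on whole image tensors inside an ML framework.

```python
import colorsys
import random


def rotate_hue(rgb, degrees):
    """Shift the hue of an (r, g, b) color with channels in [0, 1]."""
    h, s, v = colorsys.rgb_to_hsv(*rgb)
    h = (h + degrees / 360.0) % 1.0
    return colorsys.hsv_to_rgb(h, s, v)


def augment(pixels, max_degrees, rng):
    """Apply one random hue shift to every pixel of an image."""
    shift = rng.uniform(-max_degrees, max_degrees)
    return [rotate_hue(p, shift) for p in pixels]


red = (1.0, 0.0, 0.0)
blue = (0.0, 0.0, 1.0)

# A 120 degree rotation turns pure red into pure green.
rotated_red = tuple(round(c, 6) for c in rotate_hue(red, 120))
print(rotated_red)

# After augmentation the two pixels have new colors but still differ,
# so a detector that only needs "a color difference" is unaffected.
augmented = augment([red, blue], max_degrees=30, rng=random.Random(0))
print(augmented)
```

Because the same shift is applied to every pixel, contrasts between neighbouring colors survive even though the absolute colors change, which is consistent with the point that the network becomes less sensitive to particular colors.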

How neural networks think [00:24:38]

Rob Wiblin: So what concretely can we learn about what, or how, a neural network system like this is thinking, using these methods?

Chris Olah: Well, in some sense, you can sort of quite fully understand it, but you understand a small fraction. You can understand what a couple of neurons are doing and how they connect together. You can get it to the point where your understanding is just basic math, and you can just use basic logic and reason through what it’s doing. And the challenge is that it’s only a small part of the model, but you literally understand what algorithm is running in that small part of the model and what it’s doing.

Rob Wiblin: From those articles, it sounded like you think we’ve potentially learned some really fundamental things about how neural networks work. And potentially, not only how these thinking machines work, but also how neural networks might function in the brain. And maybe how they always necessarily have to function. What are those things that you think we might’ve learned, and what’s the evidence for them?

Chris Olah: Well, one of the fascinating things is that the same patterns form and the same features and circuits form again and again across models. So you might think that every neural network is its own special snowflake and that they’re all doing totally different stuff. That would make for a boring, or a very strange, field of trying to understand these things.

Chris Olah: So another analogy I sometimes like is to think of interpretability as being like anatomy, and we’re dissecting… This is a little bit strange because I’m a vegan, but it’s grown on me over time. We’re dissecting these neural networks and looking at what’s going on inside them, like an early anatomist might have looked at animals. And if you imagine early anatomy, just every organism had a totally different anatomy and there were no similarities between them. There’s nothing like a heart that exists in lots of them. That would create a kind of boring field of anatomy. You probably wouldn’t want to invest in trying to understand most animals.

Chris Olah: But just like animals have very similar anatomies (I guess in the case of animals due to evolution), it seems neural networks actually have a lot of the same things forming, even when you train them on different data sets, even when they have different architectures, even though the scaffolding is different. The same features and the same circuits form. And actually I find the fact that the same circuits form to be the most remarkable part. The fact that the same features form is already pretty cool, that the neural network is learning the same fundamental building blocks of understanding vision or understanding images.

Chris Olah: But then, even though it’s scaffolded on differently, it’s literally learning the same weights, connecting the same neurons together. So we call that ‘universality.’ And that’s pretty crazy. It’s really tempting when you start to find things like that to think “Oh, maybe the same things form also in humans. Maybe it’s actually something fundamental.” Maybe these models are discovering the basic building blocks of vision that just slice up our understanding of images in this very fundamental way.

Chris Olah: And in fact, for some of these things, we have found them in humans. So some of these lower-level vision things seem to mirror results from neuroscience. And in fact, in some of our most recent work, we’ve discovered something that was previously only seen in humans, these multimodal neurons.

Rob Wiblin: We’ll talk about multimodal neurons in just a second. But it sounds like you’re saying you train lots of different neural networks to identify images. You train them on different images and they have different settings you’re putting them in, but then every time, you notice these common features. You’ve got things that identify particular shapes and curves and particular kinds of textures. I suppose also, things are organized in such a way that there are feature-detecting neurons and these organized circuits, for moving from lower-level features to higher-level features, that just happen every time basically. And then we have some evidence, you think, that this is matching things that we’ve learned about, how humans process visual information in the brain as well. So there’s a prima facie case, although it’s not certain, that these universal features are always going to show up.

Chris Olah: Yeah. And it’s almost tempting to think that there’s just fundamental elements of vision or something that just are the fundamental things that you want to have, if you reason about images. That a line is, in some sense, a fundamental thing for reasoning about images. And that these fundamental elements, or building blocks, are just always present.

Rob Wiblin: Is it possible that you’re getting these common features and circuits because we’re training these networks on very similar images? It seems we love to show neural networks cats and dogs and grass and landscapes, and things like that. So possibly it’s more of a function of the images that we’re training them on than something fundamental about vision.

Chris Olah: So you could actually look at models that are trained on pretty different datasets. There’s this dataset called ‘Places,’ which is recognizing buildings and scenes. Those don’t have dogs and cats, and a lot of the same features form. Now, it tends to be the case that as you go higher up, the features are more and more different. So in the later layers you have these really cool neurons related to different visual perspectives, like what angle am I looking at a building from, which you don’t see in ImageNet models, and vice versa. ImageNet models have all of these features related to dogs and cats that you don’t see in the Places model. But there do seem to be a lot of things that are fundamental, especially in earlier vision, that form in both of those datasets.

Rob Wiblin: A question would be, if aliens trained a model, or if we could look into an alien brain somewhere, would it show all of these common features? And I suppose, maybe it does just make a lot of intuitive sense that a line, an edge, a curve, these are very fundamental features. So even on other planets, they’re going to have lines. But maybe at the high level some of the features and some of the circuits are going to correspond to particular kinds of things that humans have, that exist in our world, and they may not exist on another planet.

Chris Olah: Yeah, that’s exactly right. And it’s the kind of thing that is purely supposition, but is really tempting to imagine. And I think it’s an exciting vision for what might be going on here. I do find the stories of investigating these systems… That does feel just emotionally more compelling, when I think that we’re actually discovering these deeply fundamental things, rather than these things that just happened to be arbitrarily true for a single system.

Rob Wiblin: Yeah. You’ve discovered the fundamental primitive nature of a line.

Chris Olah: Yeah. Or I mean, I think it’s more exciting when we discover these things that people haven’t seen before, or that people don’t guess. There are these things called ‘high-low frequency detectors’ that seem to form in lots of models.

Rob Wiblin: Yeah, explain those?

Chris Olah: They’re just as the name suggests. They look for high-frequency patterns on one side of the receptive field, and low-frequency patterns on the other side of the receptive field.

Rob Wiblin: What do you mean by high frequency and low frequency here?

Chris Olah: So a pattern with lots of texture to it and sharp transitions might be high frequency, whereas a low-frequency image would be very smooth. Or an image that’s out of focus will be low frequency.

Rob Wiblin: So it’s picking up how sharp and how many differences there are, within that section of the image versus another part of it.

Chris Olah: Exactly. Models use these as part of boundary detections. There’s a whole thing of using multiple different cues to do boundary detection. And one of them is these transitions in frequency. And so for instance you could see this happen at the boundary of an object where the background is out of focus and it’s very low frequency, whereas the foreground is higher frequency. But you also just see this maybe if there are two objects that are adjacent and they have different textures, you’ll get a frequency transition as well. And in retrospect, it makes sense that you have these features, but they’re not something that I ever heard anyone predict in advance we’d find. And so it’s exciting to discover these things that weren’t predicted in advance.
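
To make the idea of a high-low frequency detector concrete, here is a toy one-dimensional sketch. The `roughness` measure (mean absolute difference between neighbouring values) is a stand-in chosen for this example and is not how the network computes it. The real detectors are learned convolutional units working on 2D image patches.

```python
def roughness(signal):
    """Crude frequency proxy: mean absolute change between neighbours."""
    steps = [abs(b - a) for a, b in zip(signal, signal[1:])]
    return sum(steps) / len(steps)


def high_low_detector(patch):
    """Fires when the left half is high frequency and the right half is low."""
    mid = len(patch) // 2
    return max(0.0, roughness(patch[:mid]) - roughness(patch[mid:]))


textured_then_smooth = [0.0, 1.0, 0.0, 1.0, 0.5, 0.5, 0.5, 0.5]
smooth_then_textured = list(reversed(textured_then_smooth))
textured_everywhere = [0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0, 1.0]

print(high_low_detector(textured_then_smooth))   # strong response at the boundary
print(high_low_detector(smooth_then_textured))   # wrong orientation, no response
print(high_low_detector(textured_everywhere))    # no frequency transition, no response
```

The detector responds only to a transition in texture, for example a sharp foreground next to an out-of-focus background, which is why it is useful as one cue for finding object boundaries.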

Chris Olah: You might’ve said, “Oh, these attempts to understand neural networks, they’re only going to understand the things that humans guessed were there,” and this sort of refutes that. But it also suggests that maybe there are just all of these… We’re digging around inside these systems and we’re discovering all these things, and there’s this entire wealth of things to be discovered that no one’s seen before. I don’t know, maybe we’re discovering mitochondria for the first time or something like that. And they’re just there, waiting for you to find them.

Rob Wiblin: The universality conjecture that you’re all toying with would be that at some point, we’ll look into the human brain and find all of these high-low detector neurons and circuits in the human brain as well, and they’re playing an important role in us identifying objects when we look out into the world?

Chris Olah: That would be the strong version. And also just that every neural network we look in that’s doing natural image vision is going to go and have them as well, and they’re really just this fundamental thing that you find everywhere.

Rob Wiblin: I just thought of a way that you could potentially get a bit more evidence for this universality idea. Obviously humans have some bugs in how they observe things, they can get tricked visually. Maybe we could check if the neural networks on the computers also have these same errors. Do they have the same ways in which they get things right and get things wrong? And then that might suggest that they’re perhaps following a similar process.

Chris Olah: Yeah. I think there’s been a few papers that are exploring ideas like this. And actually, in fact, when we talk about the multimodal neuron results, we’ll have one example.

Multimodal neurons [00:33:30]

Rob Wiblin: Well, that’s a perfect moment to dive into the multimodal neuron article, which just came out a couple of weeks ago. I guess it’s dealing with more state-of-the-art visual detection models? What did you learn in that work that was different from what came before?

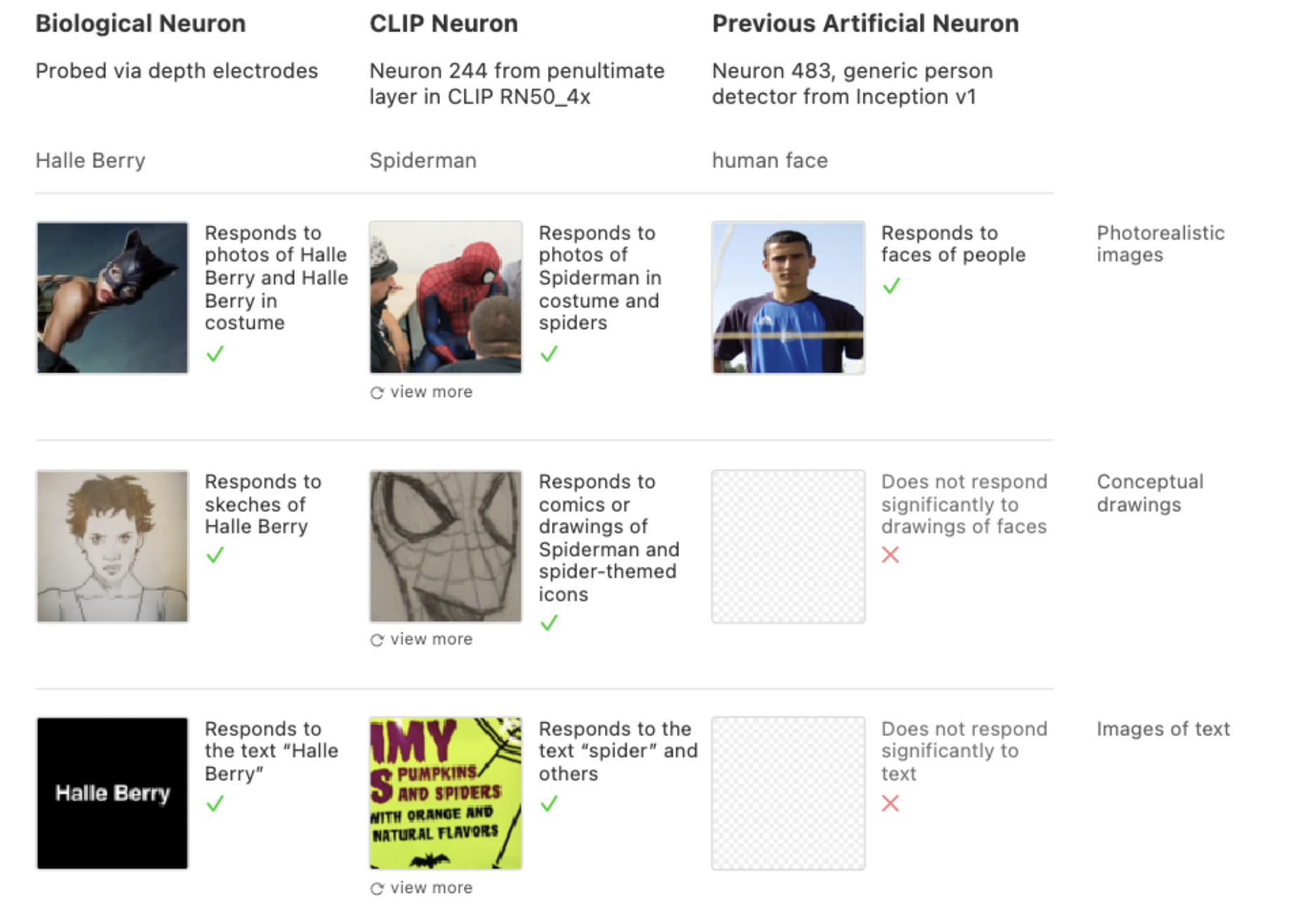

Chris Olah: So we were investigating this model called CLIP from OpenAI, which you can roughly think of as being trained to caption images or to pair images with their captions. So it’s not classifying images, it’s doing something a little bit different. And we found a lot of things that were really deeply qualitatively different inside it. So if you look at low-level vision actually, a lot of it is very similar, and again is actually further evidence for universality.

Chris Olah: A lot of the same things we find in other vision models occur also in early vision in CLIP. But towards the end, we find these incredibly abstract neurons that are just very different from anything we’d seen before. And one thing that’s really interesting about these neurons is they can read. They can go and recognize text in images, and they fuse this together, so they fuse it together with the thing that’s being detected.

Chris Olah: So there’s a yellow neuron for instance, which responds to the color yellow, but it also responds if you write the word yellow out. That will fire as well. And actually it’ll fire if you write out the words for objects that are yellow. So if you write the word ‘lemon’ it’ll fire, or the word ‘banana’ will fire. This is really not the sort of thing that you expect to find in a vision model. It’s in some sense a vision model, but it’s almost doing linguistic processing in some way, and it’s fusing it together into what we call these multimodal neurons. And this is a phenomenon that has been found in neuroscience. So you find these neurons also for people. There’s a Spider-Man neuron that fires both for the word Spider-Man as an image, like a picture of the word Spider-Man, and also for pictures of Spider-Man and for drawings of Spider-Man.

Chris Olah: And this mirrors a really famous result from neuroscience of the Halle Berry neuron, or the Jennifer Aniston neuron, which also responds to pictures of the person and the drawings of the person and to the person’s name. And so these neurons seem in some sense much more abstract and almost conceptual, compared to the previous neurons that we found. And they span an incredibly wide range of topics.

Chris Olah: In fact, a lot of the neurons, you just go through them and it feels like something out of a kindergarten class, or an early grade-school class. You have your color neurons, you have your shape neurons, you have neurons corresponding to seasons of the year, and months, to weather, to emotions, to regions of the world, to the leader of your country, and all of them have this incredible abstract nature to them.

Chris Olah: So there’s a morning neuron that responds to alarm clocks and times of the day that are early, and to pictures of pancakes and breakfast foods — all of this incredible diversity of stuff. Or season neurons that respond to the names of the season and the type of weather associated with them and all of these things. And so you have all this incredible diversity of neurons that are all incredibly abstract in this different way, and it just seems very different from the relatively concrete neurons that we were seeing before that often correspond to a type of object or such.

Rob Wiblin: So we’ve kind of gone from having an image recognition network that can recognize that this is a head, to having a network that can recognize that something is beautiful, or that this is a piece of artwork, or that this is an Impressionist piece of art. It’s a higher level of abstraction, a grouping that lots of different images might have in common of many different kinds in many different places.

Chris Olah: Yeah. And it’s not even about seeing the object, it’s about things that are related to the object. For instance, you see a Barack Obama neuron in some of these models and it of course responds to images of Barack Obama, but also to his name and a little bit for U.S. flags and also for images of Michelle Obama. And all these things that are associated with him also cause it to fire a little bit.

Rob Wiblin: When you put it that way it just sounds like it’s getting so close to doing what humans do. What we do when going about in the world. We’ve got all of these associations between different things, and then when people speak, the things that they say kind of draw to mind particular different things with different weightings. And that’s kind of how I feel like I reason. Language is very vague, right? Lots of different words just have different associations, and you smash them together and then the brain makes of it what it will. So it’s possible that it’s capable of a level of conceptual reasoning, in a sense, that is approaching a bit like what the human mind is doing.

Chris Olah: I feel really nervous to describe these as being about concepts, because I think those are… That’s sort of a charged way to describe it that people might strongly disagree with, but it’s very tempting to frame them that way. And I think that there’s a lot of things about that framing that would be true.

Rob Wiblin: Interesting. Do you know off the top of your head what is the most abstract category that you found? Is there anything that is particularly striking and amazing that it can pick up this grouping?

Chris Olah: One that jumps to mind for me is the mental health neuron, which you can roughly think of as firing whenever there’s a cue for any kind of mental health issue in the image. And that could be body language, or faces that read as particularly anxious or stressed, and lots of words, like the word ‘depression’ or ‘anxiety’ or things like this, the names of medicines associated with mental health, slurs related to mental health. So words like ‘crazy’ or things like this, images that sort of seem sort of… I don’t know, like psychological sort of themed images a little bit, and just this incredible range of things. And it just seems like such an abstract idea. There’s no single very concrete instantiation of mental health, and yet it sort of represents that.

Rob Wiblin: How is this model trained? I guess it must have a huge sample of images and then just lots of captions. And so is it using the words in the captions to recognize concepts that are related to the images and then group them?

Chris Olah: Yeah. It’s trying to pair up images and captions. So it’ll take a set of images and a set of captions, and then it’ll try to figure out which images correspond to which captions. And this has all sorts of really interesting properties, where this means that you can just write captions and sort of use that to program it to go and do whatever image classification tasks you want. But it also just seems to lead to these much richer features on the vision side.
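
The pairing task described above can be sketched as a nearest-neighbour match between image and caption embeddings. In this toy version the two-dimensional vectors are hand-written assumptions for illustration. In CLIP they would come from learned image and text encoders, which this sketch does not attempt to reproduce.

```python
import math


def cosine(u, v):
    """Cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def match(image_vecs, caption_vecs):
    """For each image, pick the caption whose embedding is most similar."""
    return {
        image: max(caption_vecs, key=lambda c: cosine(vec, caption_vecs[c]))
        for image, vec in image_vecs.items()
    }


# Hypothetical embeddings; real ones are high-dimensional and learned.
image_vecs = {"img_dog": [0.9, 0.2], "img_cat": [0.1, 0.8]}
caption_vecs = {
    "a photo of a dog": [1.0, 0.1],
    "a photo of a cat": [0.1, 1.0],
}

pairs = match(image_vecs, caption_vecs)
print(pairs)
```

Writing a new set of captions and matching images against them is, in spirit, how captions can be used to "program" the model for a new classification task.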

Chris Olah: Sometimes people are tempted to say these neurons correspond to particular words in the output or something, but I think that’s not actually going on. So for instance, there’s all of these region neurons that correspond to things like countries or parts of countries, or… Some of them are really big, like this entire northern hemisphere neuron that responds to things like coniferous forests and deer and bears and things like this. And I don’t think that those are there because they correspond to particular words like Canada or something like this. It’s because when an image is in a particular context, that changes what people talk about.

Chris Olah: So you’re much more likely to talk about maple syrup if you’re in Canada. Or if you’re in China, maybe it’s much more likely that you’ll mention Chinese cities, or that you’ll have some of the captions be in Chinese, or things like this.

Rob Wiblin: So the training data was a set of corresponding images and captions/words, and it’s developing these multimodal neurons in order to kind of probabilistically estimate or improve its ability to tell what the context is, in order to figure out then what words are most likely to appear in a caption. Is that broadly right?

Chris Olah: That would be my intuition about what’s going on.

Safety implications [00:41:01]

Rob Wiblin: What implications, if any, does the discovery of multimodal neurons have for safety or reliability concerns with these neural networks?

Chris Olah: Well, I guess there’s a few things. The first one sort of speaks to this unknown unknowns-type concern, where if you look inside the multimodal model, or you look inside CLIP, you find neurons corresponding to literally every trait — or almost every trait — that is a protected attribute in the United States. So not only do you have neurons related to gender, related to age, related to religion… There’s neurons for every religion. Of course, these region neurons connect closely to race. There’s even a neuron for pregnancy. You have a neuron for parenthood, that’s looking for things like children’s drawings and things like this. You have a neuron for mental health and another neuron for physical disability. Almost every single protected attribute, there is a neuron for.

Chris Olah: And despite the ML community caring a lot, I think, about bias in machine learning systems — it tends to be very, very alert and on guard for bias with respect to gender or with respect to race — I think we should be looking for a much broader set of concerns. It would be very easy to imagine CLIP discriminating with respect to parents, or with respect to mental health, or something you wouldn’t really have thought that previous models could. And I think that’s kind of an illustration of how studying these things can surface things that we weren’t worried about before and perhaps should now be on the lookout for.

Rob Wiblin: Yeah. I’m guessing if you tried to tell CLIP like, “Naughty CLIP, we shouldn’t be having multimodal neurons for race or gender or these other characteristics,” I bet when it was getting trained it would kind of shift those concepts, blur them into something else like a geographic neuron or something else about behavior, or personality, or whatever. And so it would end up kind of accidentally building in the recognition of gender and race, but just in a way that’s harder to see.

Chris Olah: Yeah. I think it’s tricky to get rid of these things. I think it’s also not clear that you actually want to get rid of representation of these things. There’s another neuron that sort of is an offensiveness neuron. It responds to really bad stuff like swear words obviously, racial slurs—

Rob Wiblin: —pornography, yeah.

Chris Olah: Yeah, really, really offensive things. On the one hand you might say “We should get rid of that neuron. It’s not good to have a neuron that responds to those things.” On the other hand it could be that part of the function of that neuron is actually to go and pair images that are offensive with captions that rebut them, because sometimes people will post an image and then they will respond to that image. That’s the thing that you would like it to do. You would like it to be able to go and sort of recognize offensive content and rebut it. My personal intuition is that we don’t want models that don’t understand these things. We want models that understand these things and respond appropriately to them.

Rob Wiblin: So it’s better to kind of have it in the model and then potentially fix the harm that it will cause down the track using something that comes afterwards, rather than try to tell the model not to recognize offensiveness, because then you’re more flying blind.

Chris Olah: It’s not like we would say we should prevent children from ever finding out about racism and sexism and all of these things. Rather, we want to educate children that racism and sexism are bad and that that’s not what we want, and on how to thoughtfully navigate those issues. That would be my personal intuition.

Rob Wiblin: That makes sense because that’s what we’re trying to do with humans. So maybe that’s what we should try to do with neural networks, given how human they are now seemingly becoming.

Chris Olah: I guess just sort of continuing on the safety issue, this is a bit more speculative, but we’re seeing these emotion neurons form. And something I think we should really be on guard for is whenever we see features that sort of look like theory of mind or social intelligence, I think that’s something that we should really keep a close eye on.

Chris Olah: And we see hints of this elsewhere, like modern language models can go and track multiple participants in a discourse and sort of track their emotions and their beliefs, and sort of write plausible responses to interactions. And I think having the kind of faculties that allow you to do those tasks, having things like emotion neurons that allow you to sort of detect the emotions of somebody in an image or possibly incorrectly reason about them, but attempt to reason about them, I think makes it easier to imagine systems that are manipulative or are… Not incidentally manipulative, but more deliberately manipulative.

Chris Olah: Once you have social intelligence, that’s a very easy thing to have arise, but I don’t think the things that we’re seeing are quite there. But I would predict that that is a greater issue that we’re going to see in the not-too-distant future. And I think that’s something that we should have an eye out for.

Chris Olah: I think one thing that one should just generally be on guard for when doing interpretability research — especially if one’s goal is to contribute to safety — is just the fact that your guesses about what’s going on inside a neural network will often be wrong, and you want to let the data speak for itself. You want to look and try to understand what’s going on rather than assuming a priori and looking for evidence that backs up your assumptions.

Chris Olah: And I think that region neurons are really striking for this. About 5% of the last layer of the model is about geography. If you’d asked me to predict what was in CLIP beforehand and what was significant fractions of CLIP, I would not have guessed in a hundred years that a large fraction of it was going to be geography. And so I think that’s just another lesson that we should be really cautious of.

Rob Wiblin: So as you’re trying to figure out how things might go wrong, you would want to know what the network is actually focusing on. And it seems like we don’t have great intuitions about that; the network can potentially be focused on features and circuits that are potentially quite different from what we were anticipating beforehand.

Chris Olah: Yeah. An approach that I see people take to try and understand neural networks is they guess what’s in there, and then they look for the things they guessed are there. And I think that this is sort of an illustrative example of why… Especially if your concern is to understand these unknown unknowns and the things the model is doing that you didn’t anticipate and might be problems. Here’s a whole bunch of things that I don’t think people would have guessed, and that I think would have been very hard to go and catch with that kind of approach.

Rob Wiblin: Yeah. So that’s two different ways that the multimodal neuron network has been potentially relevant to safety and reliability. Are there any other things that are being thrown out?

Chris Olah: One other small thing — which maybe is less of a direct connection, but maybe it’s worth mentioning — is I think that a crux for some people on whether to work on safety or whether to prioritize safety is something like how close are these systems to actual intelligence. Are neural networks just fooling us, are they just sort of illusions that seem competent but aren’t doing the real thing, or are they actually in some sense doing the real thing, and we should be worried about their future capabilities?

Chris Olah: I think that there’s a moderate amount of evidence from this to update in the direction that neural networks are doing something quite genuinely interesting. And correspondingly, if that’s a crux for you with regards to whether to work on safety, this is another piece of evidence that you might update on.

Rob Wiblin: I guess just the more capable they are and the more they seem capable of doing the kinds of reasoning that humans do, that should bring forward the date at which we would expect them to be deployed in potentially quite important decision-making procedures.

Chris Olah: Yeah. Although I think a response that one can have with respect to the evidence in the form of capabilities is to imagine ways the model might be cheating, and imagine the ways in which the model may not really be doing the real thing. And so it sort of appears to be progressing in capabilities, but that’s an illusion, some might say. It actually doesn’t really understand anything that’s going on at all. I mean, I think ‘understand’ is a charged word there and maybe not very helpful, but the model… In some sense it’s just an illusion, just a trick, and won’t get us to things that are genuinely capable.

Rob Wiblin: Is that right?

Chris Olah: Well, I think it’s hard to fully judge, but I think when we see evidence of these systems implementing meaningful algorithms inside them, and especially when we see the kind of thing that has been perceived as neural evidence of humans understanding concepts, I think there’s somewhat of an update to be had there.

Rob Wiblin: One of the safety concerns that came up in the article, at least as I understood it, was that you can potentially… People might be familiar with adversarial examples, where you can potentially get a neural network to misidentify a sign as a bird, or something like that, by modifying it. And by having these high-level concepts like ‘electronics’ or ‘beautiful’ or whatever, it seemed like you could maybe even more easily get the system to misidentify something. You had the example where you had an apple, and it would identify it as an apple, and then you put a Post-it note with the word ‘iPod’ on it, and then it identified it as an iPod. Is that an important reliability concern that’s thrown up? That maybe as you make these models more complicated, they can fail in ever more sophisticated ways?

Chris Olah: Well, it’s certainly a fun thing to discover. I’ve noticed that it’s blown up quite a bit, and I think maybe some people are overestimating it as a safety concern; I wouldn’t be that surprised if there was a relatively easy solution to this. The thing that to me seems important about it is… Well, there’s just this general concern with interpretability research that you may be fooling yourself that you’re discovering these things, but you may be mistaken. And so I think whenever you can go and turn an interpretability result into a concrete prediction about how a system will behave, that’s actually really interesting and can give you a lot more confidence that you really are understanding these systems. And I think that, at least for these particular models, and without prejudice to whether this will be an easy or hard problem to solve, there are contexts where you might hesitate to go and deploy a system that you can fool in this easy way.

Rob Wiblin: Are there any examples where you’ve been able to use this interpretability work as a whole to kind of make a system work better or more reliably or anticipate a way that it’s going to fail and then defuse it?

Chris Olah: Well, I think there are examples of catching things that you might be concerned about. I don’t know that we’ve seen examples yet of that then translating into ameliorating that concern or making changes to a system to make it better. I guess one hope you might have for neural networks is that you could sort of close the loop and make understanding neural networks into a tool that’s useful for improving them, and sort of just make it part of how neural network research is done.

Chris Olah: And I think we haven’t had very compelling examples of that to date. Now, it’d be a bit of a double-edged sword; in some ways I like the fact that my research doesn’t make models more capable, or generally hasn’t made models more capable and sort of has been quite purely safety oriented and about catching concerns.

Rob Wiblin: When we solicited questions from the audience for this interview with you, one person asked “Why does Chris focus on small-scale interpretability, rather than figuring out the roles of larger-scale modules in a way that might be more analogous to neuroscience?” It seems like now might be an appropriate moment to ask this question. What do you make of it?

Chris Olah: Ultimately the reason I study (and think it’s useful to study) these smaller chunks of neural networks is that it gives us an epistemic foundation for thinking about interpretability. The cost is that we’re talking about these small parts and we’re setting ourselves up for a struggle to be able to build up the understanding of large neural networks and make this sort of analysis really useful. But it has the upside that we’re working with such small pieces that we can really objectively understand what’s going on because it’s really… At the end of the day, it sort of reduces just to basic math and logic and reasoning things through. And so it’s almost like being able to reduce complicated mathematics to simple axioms and reason about things.