My Overview of the AI Alignment Landscape: A Bird’s Eye View

By Neel Nanda @ 2021-12-15T23:46 (+45)

This is a linkpost to https://www.alignmentforum.org/posts/SQ9cZtfrzDJmw9A2m/my-overview-of-the-ai-alignment-landscape-a-bird-s-eye-view

Disclaimer: I recently started as an interpretability researcher at Anthropic, but I wrote this doc before starting, and it entirely represents my personal views, not those of my employer.

Intended audience: People who understand why you might think that AI Alignment is important, but want to understand what AI researchers actually do and why.

Epistemic status: My best guess.

Epistemic effort: About 70 hours into the full sequence, and feedback from over 30 people

Special thanks to Sydney von Arx and Ben Laurense for getting me to actually finish this, and to all of the many, many people who gave me feedback. This began as my capstone project in the first run of the AGI Safety Fellowship, organised by Richard Ngo and facilitated by Evan Hubinger - thanks a lot to them both!

Meta: This is a heavily abridged overview (4K words) of a longer doc (25K words) I’m writing, giving my birds-eye conceptualisation of the field of Alignment. This post should work as a standalone and accessible overview, without needing to read the full thing. I’ve been polishing and re-polishing the full doc for far too long, so I’m converting it into a sequence and I’m publishing this short summary now as an introductory post, and trying to get the rest done over Christmas. Each bolded and underlined section header is expanded into a full section in the full thing, and will be posted to the Alignment Forum. I find detailed feedback super motivating, so please let me know what you think works well and doesn’t!

Terminology note: There is a lot of disagreement about what “intelligence”, “human-level”, “transformative” or AGI even means. For simplicity, I will use AGI as a catch-all term for ‘the kind of powerful AI that we care about’. If you find this unsatisfyingly vague, OpenPhil’s definition of Transformative AI is my favourite precise definition.

Introduction

What needs to be done to make the development of AGI safe? This is the fundamental question of AI Alignment research, and there are many possible answers.

I've spent the past year trying to get into AI Alignment work, and broadly found it pretty confusing to get my head around what's going on at first. Anecdotally, this is a common experience. The best way I've found of understanding the field is by understanding the different approaches to this question. In this post, I try to write up the most common schools of thought on this question, and break down the research that goes on according to which perspective it best fits.

There are already some excellent overviews of the field: I particularly like Paul Christiano’s Breakdown and Rohin Shah’s literature review and interview. The thing I’m trying to do differently here is focus on the motivations behind the work. AI Alignment work is challenging and confusing because it involves reasoning about future risks from a technology we haven’t invented yet. Different researchers have a range of views on how to motivate their work, and this results in a wide range of work, from writing papers on decision theory to training large language models to summarise text. I find it easiest to understand this range of work by framing it as different ways to answer the same fundamental question.

My goal is for this post to be a good introductory resource for people who want to understand what Alignment researchers are actually doing today. I assume familiarity with a good introductory resource, eg Superintelligence, Human Compatible or Richard Ngo’s AGI Safety from First Principles, and that readers have a sense for what the problem is and why you might care about it. I begin with an overview of the most prominent research motivations and agendas. I then dig into each approach, and the work that stems from that view. I especially focus on the different threat models for how AGI leads to existential risk, and the different agendas for actually building safe AGI. In each section, I link to my favourite examples of work in each area, and the best places to read more. Finally, as another way to understand the high-level differences in research motivations, I discuss the different underlying beliefs about how AGI will go, which I’ll refer to as crucial considerations.

Overview

I broadly see there as being 5 main types of approach to Alignment research. I break this piece into five main sections analysing each approach.

Note: The space of Alignment research is quite messy, and it's hard to find a categorisation that carves reality at the joints. As such, lots of work will fit into multiple parts of my categorisation.

- Addressing threat models: We keep a specific threat model in mind for how AGI causes an existential catastrophe, and focus our work on things that we expect will help address the threat model.

- Agendas to build safe AGI: Let’s make specific plans for how to actually build safe AGI, and then try to test, implement, and understand the limitations of these plans, with an emphasis on understanding how to build AGI safely rather than trying to do it as fast as possible.

- Robustly good approaches: In the long-run AGI will clearly be important, but we're highly uncertain about how we'll get there and what, exactly, could go wrong. So let's do work that seems good in many possible scenarios, and doesn’t rely on having a specific story in mind. Interpretability work is a good example of this.

- De-confusion: Reasoning about how to align AGI involves reasoning about complex concepts, such as intelligence, alignment and values, and we’re pretty confused about what these even mean. This means any work we do now is plausibly not helpful and definitely not reliable. As such, our priority should be to do some conceptual work on how to think about these concepts and what we’re aiming for, and trying to become less confused.

- I consider the process of coming up with each of the research motivations outlined in this post to be an example of good de-confusion work.

- Field-building: One of the biggest factors in how much Alignment work gets done is how many researchers are working on it, so a major priority is building the field. This is especially valuable if you think we’re confused about what work needs to be done now, but will eventually have a clearer idea once we’re within a few years of AGI. When this happens, we want a large community of capable, influential and thoughtful people doing Alignment work.

- This is less relevant to technical work than the previous sections. I include it both because I think that technical researchers are often best placed to do outreach and grow the field, and because an excellent way to grow the field is by doing high-quality work that other researchers are excited to build upon.

Within this framework, I find the addressing threat models and agendas to build safe AGI sections the most interesting and think they contain the most diversity of views, so I expand these into several specific models and agendas.

Addressing threat models

There are a range of different concrete threat models. Within this section, I focus on three threat models that I consider most prominent, and which most current research addresses.

- Treacherous turns: We create an AGI that is pursuing large-scale end goals that differ from ours. This results in convergent instrumental goals: the agent is incentivised to do things such as preserve itself and gain power, because these will help it achieve its end goals. In particular, this incentivises the AGI to deceive us into thinking it is aligned until it has enough power to decisively take over and pursue its own arbitrary end goals, known as a treacherous turn. This is the classic case outlined in Nick Bostrom’s Superintelligence, and Eliezer Yudkowsky’s early writing.

- Sub-Threat model: Inner Misalignment. A particularly compelling way this could happen is inner misalignment - the system is itself pursuing a goal, which may not have been the goal that we gave it. This is notoriously confusing, so I’ll spend more time explaining this concept than the others. See Rob Miles’ video for a more in-depth summary.

- A motivating analogy: Evolution is an optimization process that produced humans, but from the perspective of evolution, humans are misaligned. Evolution is an optimization process which selects for organisms that are good at reproducing themselves. This produced humans, who were themselves optimizers pursuing goals such as food, status, and pleasure. In the ancestral environment pursuing these goals meant humans were good at reproducing, but in the modern world these goals do not optimize for reproduction, eg we use birth control.

- The core problem is that evolution was optimizing organisms for the objective of ‘how well do they survive and reproduce’, but was selecting them according to their performance in the ancestral environment. Reproduction is a hard problem, so it eventually produced organisms that were themselves optimizers pursuing goals. But because these goals just needed to lead to reproduction in the ancestral environment, these goals didn’t need to be the same as evolution’s objective. Now that humans are in a different environment, the difference is clear, and this is an alignment failure.

- Analogously, we train neural networks with an objective in mind, but just select them according to their performance on the training data. For a sufficiently hard problem, the resulting network may be an optimizer pursuing a goal, but all we know is that the network’s goal has good performance on the training data, according to our goal. We have no guarantee that the network’s goal is the objective we had in mind, and so cannot resolve treacherous turns by setting the right training objective. The problem of aligning the network’s goal with the training objective is the inner alignment problem.

- You get what you measure: The case given by Paul Christiano in What Failure Looks Like (Part 1):

- To train current AI systems we need to give them simple and easy-to-measure reward functions. So, to achieve complex tasks, such as winning a video game, we often need to give them simple proxies, such as optimising score (which can go wrong…)

- Extrapolating into the future, as AI systems become increasingly influential and are trained to solve complex tasks in the real world, we will need to give them easy-to-measure proxies to optimize. For example, in order to maximise human prosperity, we might tell them to optimize GDP.

- By definition, these proxies will not capture everything we value and will need to be adjusted over time. But in the long-run they may be locked-in, as AI systems become increasingly influential and an indispensable part of the global economic system. An example of partial lock-in is climate change: though the hidden costs of fossil fuels are now clear, they’re so ubiquitous and influential that society is struggling to transition away from them.

- The phenomenon of ‘you get what you measure’ is already common today, but may be much more concerning for AGI for a range of reasons. For example: AI systems are a human-incomprehensible black box, meaning it’s hard to notice problems with how they understand their proxies; and AI capabilities may progress very rapidly, making it far harder to regulate the systems, notice problems, or adjust the proxies.

- AI Influenced Coordination Failures: The case put forward by Andrew Critch, eg in What multipolar failure looks like. Many players get AGI around the same time. They now need to coordinate and cooperate with each other and the AGIs, but coordination is an extremely hard problem. We currently deal with this with a range of existing international norms and institutions, but a world with AGI will be sufficiently different that many of these will no longer apply, and we will leave our current stable equilibrium. This is such a different and complex world that things go wrong, and humans are caught in the cross-fire.

- This is of relevance to technical researchers because there is research that may make cooperation in a world with many AGIs easier, eg interpretability work.

- Further, the alignment problem is mostly conceived of as ensuring AGI will cooperate with its operator, rather than ensuring a world with many operators and AGIs can all cooperate with each other; a big conceptual shift
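
To make the inner misalignment point above more concrete, here is a toy Python sketch. It is an illustration only and is not taken from any of the linked work: the 1-D corridor environment and the two policies, `go_to_coin` and `go_right`, are hypothetical. In training the coin always sits at the far right, so selecting on training performance cannot distinguish a policy that pursues the intended goal from one that pursues a proxy.

```python
# Toy illustration: two policies that look identical on the training
# distribution but pursue different goals off-distribution.
# The environment and both policies are hypothetical.

def go_to_coin(position, coin, length):
    """Intended goal: step towards the coin."""
    if coin > position:
        return 1
    if coin < position:
        return -1
    return 0

def go_right(position, coin, length):
    """Proxy goal: always step right, ignoring where the coin is."""
    return 1 if position < length - 1 else 0

def reaches_coin(policy, coin, length=10, start=5, max_steps=20):
    """Run the policy on a 1-D corridor; report whether it ends on the coin."""
    position = start
    for _ in range(max_steps):
        position += policy(position, coin, length)
    return position == coin

def success_rate(policy, coins):
    """Fraction of levels on which the policy ends on the coin."""
    return sum(reaches_coin(policy, c) for c in coins) / len(coins)

train_coins = [9] * 5          # training levels: coin always at the far right
test_coins = [0, 2, 5, 7, 9]   # deployment: coin can be anywhere

for policy in (go_to_coin, go_right):
    print(policy.__name__,
          success_rate(policy, train_coins),
          success_rate(policy, test_coins))
```

Both policies score perfectly on the training levels, but only `go_to_coin` keeps succeeding once the coin moves. Nothing in the training signal favours one over the other, which is the sense in which setting the right training objective does not by itself pin down the learned goal.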

Note that this decomposition is entirely my personal take, and one I find useful for understanding existing research. For an alternate perspective and decomposition, see this recent survey of AI researcher threat models. They asked about five threat models (only half of which I cover here), and found that while opinions were often polarised, on average, the five models were rated as equally plausible.

Agendas to build safe AGI

There are a range of agendas proposed for how we might build safe AGI, though note that each agenda is far from a complete and concrete plan. I think of them more as a series of confusions to explore and assumptions to test, with the eventual goal of making a concrete plan. I focus on three agendas I consider most prominent - see Evan Hubinger’s Overview of 11 proposals for building safe advanced AI for more.

- Iterated Distillation and Amplification (IDA): We start with a weak system, and repeatedly amplify it to a more capable but expensive to run system, and distill that amplified version down to one that’s cheaper to run.

- This is a notoriously hard idea to explain well, so I spend more words on it than most other sections. Feel free to skip if you’re already familiar.

- Motivation 1: We distinguish between narrow learning, where a system learns how to take certain actions, eg imitating another system, and ambitious learning, where a system is given a goal but may take arbitrary actions to achieve that goal. Narrow learning seems much easier to align because it won’t give us surprising ways to achieve a goal, but this also inherently limits the capabilities of our system. Can we achieve arbitrary capabilities only with narrow techniques?

- Motivation 2: If a system is less capable than humans, we may be able to look at what it’s doing and understand it, and verify whether it is aligned. But it is much harder to scale this oversight to systems far more capable than us, as we lose the ability to understand what they’re doing. How can we verify the alignment of systems far more capable than us?

- The core idea of IDA:

- We want to build a system to perform a task, eg being a superhuman personal assistant.

- We start with some baseline below human level, which we can ensure is aligned, eg imitating a human personal assistant.

- We then Amplify this baseline, meaning we make a system that’s more expensive to run, but more capable. Eg, we give a human personal assistant many copies of this baseline, and the human can break tasks down into subtasks, and use copies of the system to solve them. Crucially in this example, as we have amplified the baseline by just making copies and giving them to a human, we should expect this to remain aligned.

- We then Distill this amplified system, using a narrow technique to compress it down to a system that’s cheaper to run, though may not be as capable. Eg, we train a system to imitate the amplified baseline. As we are using a narrow technique, we expect this distilled system to be easy to align. And as the amplified baseline is more capable than the distilled system, we can use that to help ensure alignment, achieving scalable oversight.

- We repeatedly amplify then distill. Each time we amplify, our capabilities increase, each time we distill they decrease, but overall they improve - we take two steps forward, then one step back. This means that by repeatedly applying narrow techniques, we could be able to achieve far higher capabilities.

- Caveat: The idea I’ve described is a fairly specific form of IDA. The term is sometimes used to vaguely describe a large family of approaches that recursively break down a complex problem, using some analogue of Amplification and Distillation, and which ensure alignment at each step.

- AI Safety via Debate: Our goal is to produce AI systems that will truthfully answer questions. To do this, we need to reward the system when it says true things during training. This is hard, because if the system is much smarter than us, we cannot distinguish between true answers and sophisticated deception. AI Safety via Debate solves this problem by having two AI systems debate each other, with a third (possibly human) system judging the debate. Assuming that the two debaters are evenly matched, and assuming that it is easier to argue for true propositions than false ones, we can expect the winning system to give us the true answer, even if both debaters are far more capable than the judge.

- Solving Assistance Games: This is Stuart Russell’s agenda, which argues for a perspective shift in AI towards a more human-centric approach.

- This views the fundamental problem of alignment as learning human values. These values are in the mind of the human operator, and need to be loaded into the agent. So the key thing to focus on is how the operator and agent interact during training.

- In the current paradigm, the only interaction is the operator writing a reward function to capture their values. This is an incredibly limited approach, and the field needs a perspective shift to have training processes with much more human-agent interaction. Russell calls these new training processes assistance games.

- Russell argues for a paradigm with 3 key features: we judge systems according to how well they optimise our goals, the systems are uncertain about what these goals are, and these are inferred from our behaviour.

- The focus is on changing the perspective and ways of thinking in the field, rather than on specific technical details, but Russell has also worked on some specific implementations of these ideas, such as Cooperative Inverse Reinforcement Learning
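
The amplify-then-distill loop described under IDA above can be sketched in a few lines of Python. This is a minimal toy under heavy assumptions, not an implementation of any published system: the task (parity of a bit-string), the lookup-table "model" and every function name are invented for illustration, and alignment itself is not modelled at all.

```python
from itertools import product

# Toy sketch of the amplify/distill loop. The task, the lookup-table
# "model" and all names are illustrative assumptions.

def base_agent(bits):
    """Weak starting system: only trusted on strings of length <= 1."""
    if len(bits) > 1:
        raise ValueError("beyond the base agent's capability")
    return sum(bits) % 2

def amplify(agent):
    """Overseer splits the task in two, delegates each half to a copy of
    the agent and combines the answers. Costs more calls, but handles
    inputs twice as long as the agent can handle alone."""
    def amplified(bits):
        if len(bits) <= 1:
            return agent(bits)
        mid = len(bits) // 2
        return agent(bits[:mid]) ^ agent(bits[mid:])
    return amplified

def distill(amplified, max_len):
    """Stand-in for imitation learning: memorise the amplified system's
    answers on every input up to max_len, giving a single-lookup agent."""
    table = {
        bits: amplified(bits)
        for n in range(max_len + 1)
        for bits in product((0, 1), repeat=n)
    }
    def distilled(bits):
        return table[tuple(bits)]  # KeyError beyond what it imitated
    return distilled

agent, capacity = base_agent, 1
for _ in range(3):
    capacity *= 2                      # amplification doubles the reach
    agent = distill(amplify(agent), capacity)

print(capacity, agent((1, 0, 1, 1, 0, 1, 1, 0)))
```

One simplification worth flagging: the lookup table imitates the amplified system exactly, so this toy does not show the "one step back" in capability that a real, lossy distillation step would cause.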

Robustly good approaches

Rather than the careful sequence of logical thought underlying the two above categories, robustly good approaches are backed more by a deep and robust-feeling intuition. They are the cluster thinking to the earlier motivations’ sequence thinking. This means that the motivations tend to be less rigorous and harder to clearly analyse, but are less vulnerable to identifying a single weak point in a crucial underlying belief. Instead there are lots of rough arguments all pointing in the direction of the area being useful. Often multiple researchers may agree on how to push forwards on these approaches, while having wildly different motivations. I focus on the 3 key areas of interpretability, robustness and forecasting.

Note that robustly good does not mean that ‘there is no way this agenda is unhelpful’, it’s just a rough heuristic that there are lots of arguments for the approach being net good. It’s entirely possible that the downsides in fact outweigh the upsides.

(Conflict of interest: Note that I recently started work on interpretability under Chris Olah, and many of the researchers behind scaling laws are now at Anthropic. I formed the views in this section before I started work there, and they entirely represent my personal opinion, not those of my employer or colleagues.)

- Interpretability: The key idea of interpretability is to demystify the black box of a neural network and better understand what’s going on inside. This often rests on the implicit assumption that a network can be understood. I focus on mechanistic interpretability, which aims to find the right tools and conceptual frameworks to interpret a network’s parameters.

- I consider Chris Olah’s Circuits Agenda to be one of the most ambitious and exciting efforts here. It seeks to break a network down into understandable pieces, connected together via human-comprehensible algorithms implemented by the parameters. This has produced insights such as neurons in image networks often encoding comprehensible features, or reverse engineering the network’s parameters to extract the algorithm used to detect curves.

- The key intuition for why to care about this is that many risks are downstream of us not fully understanding the capabilities and limitations of our systems, and this leading to unwise and hasty deployment. Particular reasons I find striking:

- This may allow a line of attack on inner alignment - training a network is essentially searching for parameters with good performance. If many sets of parameters have good performance, then the only way to notice subtler differences is via interpretability

- Understanding systems better may allow better coordination and cooperation between actors with different powerful AIs

- It may allow a saving throw to detect misalignment before deploying a dangerous system in the real world

- We may better understand concrete examples of misaligned systems, gaining insight that can be used to align them and to better understand the problem.

- This case is laid out more fully in Chris Olah’s Views on AGI Safety.

- Robustness: The study of systems that generalise nicely off of the distribution of data they were trained on without catastrophically breaking. Adversarial examples are a classic example of this - where image networks detect subtle textures of an image that are imperceptible to a human. By changing these subtle textures, networks become highly confident that an image is eg a gibbon, while a human thinks it looks like a panda. More generally, robustness is a large subfield of modern Machine Learning, focused on questions of ensuring systems fail gracefully on unfamiliar data, can give appropriate confidences and uncertainties on difficult data points, are robust to adversaries, etc.

- Why care? Fundamentally, many concerns about AI misalignment are forms of accident risk. The operators are not malicious, so if a disaster happens it is likely because the system did well in training but acted unexpectedly badly when deployed in the real world. The operators aren’t trying to cause extinction! The real world is a different distribution of data than training data, and so this is fundamentally a failure of generalisation. And better understanding these failures seems valuable.

- Eg, deception during training that is stopped once the AI is no longer under our control is an example of (very) poor generalisation

- Eg, systemic risks such as all self-driving cars in a city failing all at once

- Eg, systems failing to act sensibly during unprecedented world events, eg a self-driving car not coping with snow in Texas, or a personal assistant AI scheduling in-person appointments during a pandemic

- Dan Hendrycks makes the case for the importance of robustness (and other subfields of ML)

- Forecasting: A key question when thinking about AI Alignment is timelines - how long until we produce human-level AI? If the answer is, say, over 50 years, the problem is far less urgent and high-priority than if it’s 20. On a more granular level, with forecasting we might seek to understand what capabilities to expect when, which approaches might scale to AGI and which will hit a wall, which capabilities will see continuous growth vs a discontinuous jump, etc.

- In my opinion, some of the most exciting work here is scaling laws, which take a high-energy physics style approach to systematically studying large language models. These have found that scale is a major driver in model performance, and further that this follows smooth and predictable laws, as we might expect from a natural process in physics.

- The loss can be smoothly extrapolated from our current models, and seems to be driven by power laws in the available data, compute and model size

- These extrapolations have been confirmed by later models such as GPT-3, and so have made genuine predictions rather than overfitting to existing data.

- Ajeya Cotra has extended this research to estimate timelines until our models scale to the capabilities of the human brain.

- The case for this is fairly simple - if we better understand how long we have until AGI and what the path there might look like, we are far better placed to tackle the ambitious task of doing useful work now to influence a future technology.

- This may be decision relevant, eg a 10 year plan to go into academia and become a professor makes far more sense with long timelines, while doing directly useful work in industry now may make more sense with short timelines

- If we understand which methods will and will not scale to AGI, we may better prioritise our efforts towards aligning the most relevant current systems.

- Jacob Steinhardt gives a longer case for the importance of forecasting
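
As a rough illustration of what "extrapolating a power law" means in the scaling laws discussion above, here is a short Python sketch. It assumes a loss of the form loss = a * compute^(-b), fits a and b by a least-squares line in log-log space, and extrapolates beyond the data. The data points are synthetic and the constants `A_TRUE` and `B_TRUE` are made up for the example; they are not measured values from any scaling laws paper.

```python
import math

# Synthetic (compute, loss) points generated from loss = a * compute**(-b).
# The constants are invented for illustration; they are not measurements.
A_TRUE, B_TRUE = 10.0, 0.05
compute = [1e3, 1e4, 1e5, 1e6]
loss = [A_TRUE * c ** (-B_TRUE) for c in compute]

def fit_power_law(xs, ys):
    """Least-squares line through (log x, log y).
    Returns (a, b) such that y is approximately a * x**(-b)."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    n = len(xs)
    mean_x, mean_y = sum(lx) / n, sum(ly) / n
    slope = (sum((u - mean_x) * (v - mean_y) for u, v in zip(lx, ly))
             / sum((u - mean_x) ** 2 for u in lx))
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope

a, b = fit_power_law(compute, loss)
predicted = a * 1e8 ** (-b)  # extrapolate two orders of magnitude past the data
print(round(a, 3), round(b, 3), round(predicted, 3))
```

A power law is a straight line on a log-log plot, which is why a simple linear fit is enough here. Whether real systems keep following the fitted line outside the measured range is exactly the empirical question the forecasting work tries to answer.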

Key considerations

The point of this post is to help you gain traction on what different alignment researchers are doing and what they believe. Beyond focusing on research motivations, another way I’ve found valuable to get insight is to focus on key considerations - underlying beliefs about AI that often generate the high-level differences in motivation and agendas. So in the sixth and final section I focus on these. There are many possible crucial considerations, but I discuss four that seem to be the biggest generators of action-relevant disagreement:

- Prosaic AI Alignment: To build AGI, we will need to have a bunch of key insights. But perhaps we have already found all the key insights. If so, AGI will likely look like our current systems but better and more powerful. And we should focus on aligning our current most powerful systems and other empirical work. Alternately, maybe we’re missing some fundamental insights and paradigm shifts. If so, we should focus more on robustly good approaches, field-building, conceptual work, etc.

- Sharpness of takeoff: Will the capabilities of our systems smoothly increase between now and AGI? Or will we be taken by surprise, by a discontinuous jump in capabilities? The alignment problem seems much harder in the second case, and we are much less likely to get warning shots - examples of major alignment failures in systems that are too weak to successfully cause a catastrophe.

- Timelines: How long will it be until we have AGI? Work such as de-confusion and field-building look much better on longer timelines, empirical work may look better on shorter timelines, and if your timelines are long enough you probably don’t prioritise AI Alignment work at all.

- How hard is alignment?: How difficult is it going to be to align AGI? Is there a good chance that we’re safe by default, and just need to make that more robustly likely? Or are most outcomes pretty terrible, and we likely need to slow down and radically rethink our approaches?

-------------------------

Regarding future posts in the sequence:

The hope is that this introduction will serve as an accessible and standalone overview of the field, and allow me to get feedback on my breakdown, while providing more urgency on publishing the full sequence. I expect to work on the full thing over Christmas, and expect to publish each section as it’s ready as a further post in a sequence on the Alignment Forum. Each section header that is bolded and underlined will be significantly expanded - I will link from here to posts in the sequence when they’re done. Note: The sequence is not linear, you can read the posts in any order, according to your interests.

My main intended contribution is to break down the field of alignment into different research agendas, and to analyse the motivations and theories of change behind them, and to give a lens to analyse the field for someone new and overwhelmed by what’s going on. Please give any feedback you have on ways I do and do not succeed at this, and ways this could have been more useful to you!

You can read a draft of the full sequence here.

Mauricio @ 2021-12-16T03:32 (+8)

Thanks for writing this! Seems useful.

Questions about the overview of threat models:

- Why is it useful to emphasize a relatively sharp distinction between "treacherous turn" and "you get what you measure"? Since (a) outer alignment failures could cause treacherous turns, and (b) there's arguably* been no prominent case made for "you get what you measure" scenarios not involving treacherous turns, my best guess is that "you get what you measure" threat models are a subset of "treacherous turn" threat models.

- Why is it useful to think of AI-influenced coordination failures as a major threat model in the alignment landscape? My intuition would be to think of it as falling under capabilities (since the worry, if I understand it, is that--even if AI systems are aligned with their users--bad things will still happen because coordination is hard?).

*As noted here, WFLLP1 includes, as a key mechanism for bad things getting locked in: "Eventually large-scale attempts to fix the problem are themselves opposed by the collective optimization of millions of optimizers pursuing simple goals." Christiano's more recent writings on outer alignment failures also seem to emphasize deceptive/adversarial dynamics like hacking sensors, which seems pretty treacherous to me. This emphasis seems right; it seems like, by default, any kind of misalignment of sufficiently competent agents (which people are incentivized to eventually design) creates incentives for deception (followed by a treacherous turn), making "you get what you measure" / outer misalignment a subcategory of "treacherous turn."

Neel Nanda @ 2021-12-26T17:56 (+4)

- Why is it useful to think of AI-influenced coordination failures as a major threat model in the alignment landscape? My intuition would be to think of it as falling under capabilities (since the worry, if I understand it, is that--even if AI systems are aligned with their users--bad things will still happen because coordination is hard?).

This may be a disagreement about semantics. As I see it, my goal as an alignment researcher is to do whatever I can to reduce x-risk from powerful AI. And given my skillset, I mostly focus on how I can do this with technical research. And, if there are ways to shape technical development of AI that leads to better cooperation, and this reduces x-risk, I count that as part of the alignment landscape.

Another take is Critch's description of extending alignment to groups of systems and agents, giving the multi-multi alignment problem of ensuring alignment between groups of humans and groups of AIs who all need to coordinate. I discuss this a bit more in the next post.

Mauricio @ 2021-12-26T18:20 (+5)

You're right, this seems like mostly semantics. I'd guess it's most clear/useful to use "alignment" a little more narrowly--reserving it for concepts that actually involve aligning things (i.e. roughly consistently with non-AI-specific uses of the word "alignment"). But the Critch(/Dafoe?) take you bring up seems like a good argument for why AI-influenced coordination failures fall under that.

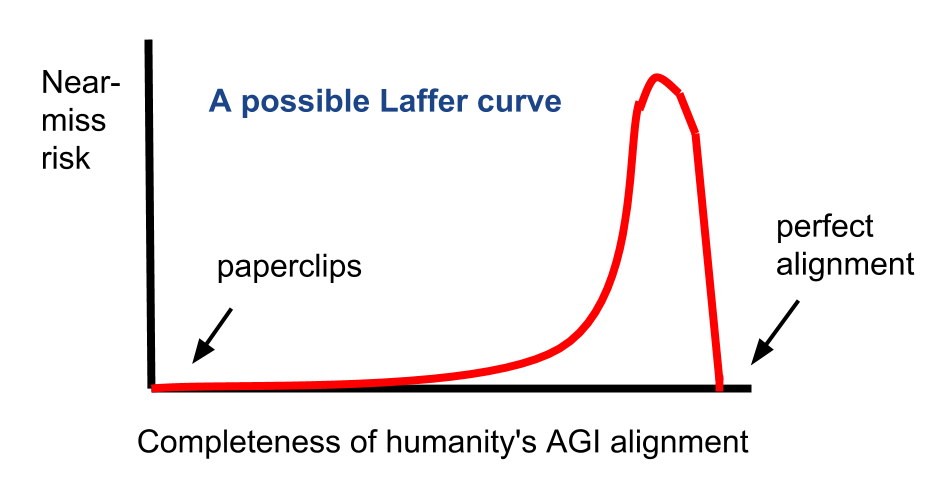

Question Mark @ 2021-12-16T06:50 (+5)

What do you think is the risk of a "near miss" in AI alignment? From a suffering-focused perspective, Brian Tomasik has argued that a slightly misaligned AI has the potential to cause far more suffering compared to a totally unaligned AI.

Jamie_Harris @ 2021-12-26T12:43 (+4)

This seems very useful to me. I've read books by Russell, Christian, and Bostrom, plus a load of other misc EA content (EA Forum, EAG, 80k, etc) about AI Alignment but wouldn't have been able to distinguish these separate strands. So for me at least, this seems like very helpful de-confusion.

A couple of questions, if you've got time:

1. In your ~30 conversations with and feedback from others, did you get much of a sense that others disagreed with your general categorisations here? That is, I'm sure that there are various ways that one could conceptually carve up the space, but did you get much feedback suggesting that yours might be wrong in some substantial way? I'm trying to get a sense if this post represents a reasonable but controversial interpretation of the landscape or if it would be widely accepted.

2. You helpfully list some existing resources for each approach. Do you have a sense of roughly how resources (e.g. number of researchers / research hours; philanthropic $s) are currently divided between these different approaches?

3. (I'd also be interested in how you or others would see the ideal distribution of resources, but I infer from your post that there might be a lot of disagreement about that.)

Neel Nanda @ 2021-12-26T17:51 (+8)

Thanks for the feedback! Really glad to hear it was helpful de-confusion for people who've already engaged somewhat with AI Alignment, but aren't actively researching in the field, that's part of what I was aiming for.

1

I didn't get much feedback on my categorisation, I was mostly trying to absorb other people's inside views on their specific strand of alignment. And most of the feedback on the doc was more object-level discussion of each section. I didn't get feedback suggesting this was wrong in some substantial way, but I'd also expect it to be considered 'reasonable but controversial' rather than widely accepted.

If it helps, I'm most uncertain about the following parts of this conceptualisation:

- Separating power-seeking AI and inner misalignment, rather than merging them - inner misalignment seems like the most likely way this happens

- Having assistance games as an agenda, rather than as a way to address the power-seeking AI or you get what you measure threat models

- Not having recursive reward modelling as a fully fledged agenda (this may just be because I haven't read enough about it to really have my head around it properly)

- Putting reinforcement learning from human feedback under you get what you measure - this seems like a pretty big fraction of current alignment effort, and might be better put under a category like 'narrowly aligning superhuman models'

2

It's hard to be precise, but there's definitely not an even distribution. And it depends a lot on which resources you care about.

A lot of the safety work at industry labs revolves around trying to align large language models, mostly with tools like reinforcement learning from human feedback. I mostly categorise this under you get what you measure, though I'm open to pushback there. This is very resource intensive, especially if you include the costs of training those large language models in the first place, and consumes a lot of capital, engineer time, and researcher time. Though much of the money comes from companies like Google, rather than philanthropic sources.

The other large collections of researchers are at MIRI, who mostly do deconfusion work, and CHAI, who do a lot of things, including a bunch of good field-building, but probably the modal type of work is on training AIs with assistance games? This is more speculative though.

Most of the remaining areas are fairly small, though these are definitely not clear-cut distinctions.

It's unclear which of these resources are most important to track - training large models is very capital intensive, and doing anything with them is fairly labour intensive and needs good engineers. But as eg OpenPhil's recent RFPs show, there's a lot of philanthropic dollars available for researchers who have a credible case for being able to do good alignment research, suggesting we're more bottlenecked by researcher time? And there we're much more bottlenecked by senior researcher time than junior researcher time.

3

Very hard to say, sorry! Personally, I'm most excited about inner alignment and interpretability and really want to see those having more resources. Generally, I'd also want to see a more even distribution of resources for exploration, diversification and value of information reasons. I expect different people would give wildly varying opinions.

Anthony Repetto @ 2021-12-16T06:07 (+3)

My odd angle on your Key Considerations:

- Prosaic AGI: Considering Geoff Hinton's GLOM, and implementations of equivariant capsules (which recently generalized to out-of-distribution grasps after only ten demonstrations!) as well as Sparse Representations of Numenta, the Mixture of Experts models which Jeff Dean seems to support in Google's Pathways speech... it DOES seem like all the important components for a sort of general intelligence are in place. We even have networks extracting symbolic logic and constraints from a few examples. The barriers to composability, analogy, and equivariance don't seem to be that high, and once those are managed I don't see many other hindrances to AGI.

- Sharpness: Improvements in neural networks took years, from the effort of thousands of the best brains; we're likely to have a SLOW take-off, unless the first AGI is magically thousands of times faster than us, too. (If so, why didn't we build a real-time AGI when we had 1/1,000th the processing power?) And, each new improvement is likely to be more difficult to achieve than the last, to such an extent that AGI will hit a maximum - some "best algorithm". That limit to algorithms necessitates a slowing rate of improvement, and we're likely to already be close to that peak. (Narrow AI has already seen multiple 100x and 1,000x gains in performance characteristics, and that can't go on forever.)

- Timeline: With the next round of AI-specialized chips due 2022 (Cerebras has a single chip the size of thousands of normal chips, with memory embedded throughout to avoid the von Neumann Bottleneck) we'll see a 100x boost to energy-efficiency, which was the real barrier to human-scale AI. Given that the latest AIs are ~1% of a human brain, a 100x boost puts AI within striking distance of humans, this next year! I expect AGI to be achievable within 5 years... just look at where neural networks were five years ago.

- Hardness: I suspect that AGI alignment will be forever hard. Like an alien intelligence, I don't see how we can ever really trust it. Yet, I also suspect that narrow super-intelligences will provide us with MOST of the utility that could have been gained from AGI, and those narrow AIs will give us those gains earlier, cheaper, with greater explainability and safety. I would be happy banning AGI until narrow AI is tapped out and we've had a sober conversation about the remaining benefits of AGI. If narrow AI turns out to do almost everything we needed, then we can ban AGI without risk or loss. We won't know if we really even need AGI, until we see the limits of narrow AI - and we are nowhere near those limits, yet!

Greg_Colbourn @ 2021-12-16T21:27 (+3)

Thanks for the heads up about Hinton's GLOM, Numenta's Sparse Representations and Google's Pathways. The latter in particular seems especially worrying, given Google's resources.

I don't think your arguments regarding Sharpness and Hardness are particularly reassuring though. If an AGI can be made that runs at "real time", what's to stop someone throwing 10, 100, 1000x more compute at it to make it run correspondingly faster? Will they really have spent all the money they have at their disposal on the first prototype? And even if they did, others with more money could quickly up the ante. (And then obviously once the AGI is running much faster than a human, it can be applied to making itself smarter/faster still, etc -> FOOM)

And as for banning AGI - if only this were as easily done as said. How exactly would we go about banning AGI? Especially in such a way that Narrow AI was allowed to continue (so e.g. banning large GPU/TPU clusters wouldn't be an option)?

Anthony Repetto @ 2021-12-17T02:31 (+1)

Oh, my apologies for not linking to GLOM and such! Hinton's work toward equivariance is particularly interesting because it allows an object to be recognized under myriad permutations and configurations; the recent use of his style of NN in "Neural Descriptor Fields" is promising - their robot learns to grasp from only ten examples, AND it can grasp even when pose is well-outside the training data - it generalizes!

I strongly suspect that we are already seeing the "FOOM," entirely powered by narrow AI. AGI isn't really a pre-requisite to self-improvement: Google used a narrow AI to lay out their chips' architecture, for AI-specialized hardware. My hunch is that these narrow AIs will be plenty, yet progress will still lurch. Each new improvement is a harder-fought victory, for a diminishing return. Algorithms can't become infinitely better, yet AI has already made 1,000x leaps in various problem-sets ... so I don't expect many more such leaps ahead.

And, in regards to '100x faster brain'... Suppose that an AGI we'd find useful starts at 100 trillion synapses, and for simplicity, we'll call that the 'processing speed' if we run a brain in real-time. "100 trillion synapses-seconds per second" So, if we wanted a brain which was equally competent, yet also running 100x faster, then we would need 100x the computing power, running in parallel to speed operations. That would be 100x more expensive, and if you posit that you had such power on-hand today, then there must have been an earlier date when the amount of compute was only "100 trillion synapses-seconds per second", enough for a real-time brain, only. You can't jump past that earlier date, when only a real-time brain was feasible. You wouldn't wait until you had 100x compute; your first AGI will be real-time, if not slower. GPT-3 and Dall-E are not 'instantaneous', with inference requiring many seconds. So, I expect the same from the first AGI.
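The arithmetic in this paragraph can be sketched in a few lines of Python. This is only an illustration of the comment's own simplification: it assumes compute scales linearly with speed-up (perfect parallelism) and takes the 100-trillion figure at face value. The function names are hypothetical, not from any source.

```python
# Figure quoted in the comment: a real-time brain needs
# "100 trillion synapse-seconds per second".
REAL_TIME_THROUGHPUT = 100e12

def compute_needed(speedup, real_time=REAL_TIME_THROUGHPUT):
    """Compute required to run the same brain `speedup` times faster.

    Assumes linear scaling with perfect parallelism, which is the
    comment's simplification rather than an established fact.
    """
    return speedup * real_time

def max_speedup(available_compute, real_time=REAL_TIME_THROUGHPUT):
    """Largest speed-up a given amount of compute could support."""
    return available_compute / real_time

if __name__ == "__main__":
    # A 100x-faster brain needs 100x the real-time compute.
    print(compute_needed(100))
    # With exactly the real-time amount, the speed-up factor is 1.
    print(max_speedup(REAL_TIME_THROUGHPUT))
```

Under these assumptions, any compute level that supports a 100x brain is preceded by a level that supports only a real-time one, which is the point the paragraph is making.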

More importantly, to that concept of "faster AGI is worth it" - an AGI that requires 100x more brain than a narrow AI (running at the same speed regardless of what that is) would need to be more than 100x as valuable. I doubt that is what we will find; the AGI won't have magical super-insight compared to narrow AI given the same total compute. And, you could have an AGI that is 1/10th the size, in order to run it 10x faster, but that's unlikely to be useful anywhere except a smartphone. For any given quantity of compute, you'd prefer the half-second-response super-sized brain over the micro-second-response chimp brain. At each of those quantities of compute, you'll be able to run multiple narrow AIs at similar levels of performance to the singular AGI, so those narrow AIs are probably worth more.

As for banning AGI - I have no clue! Hardware isn't really the problem; we're still far from tech which could cheaply supply human-brain-scale AI to the nefarious individual. It'd really be nations doing AGI. I only see some stiff sanctions and inspections-type stuff, a la nuclear, as ever really happening. Deployment would be difficult to verify, especially if narrow AI is really good at most things such that we can't tell them apart. If nations formed a kind of "NATO-for-AGI", declaring publicly to attack any AGI? Only the existing winners would want to play on the side of reducing options for advancement like that, it seems. What do you think?

Greg_Colbourn @ 2021-12-17T16:10 (+3)

"Neural Descriptor Fields" is promising - their robot learns to grasp from only ten examples

Thanks for these links. Incredible (and scary) progress!

cheaply supply human-brain-scale AI to the nefarious individual

I think we're coming at this from different worldviews. I'm coming from much more of a Yudkowsky/Bostrom perspective, where the thing I worry about is misaligned superintelligent AGI; an existential risk by default. For a ban on AGI to be effective against this, it has to stop every single project reaching AGI. There won't be a stage that lasts any appreciable length of time (say, more than a few weeks) where there are AGIs that can be destroyed/stopped before reaching a point of no return.

then there must have been an earlier date when the amount of compute was only "100 trillion synapses-seconds per second", enough for a real-time brain, only.

Yes, but my point above was that the very first prototype isn't going to use all the compute available. Available compute is a function of money spent. So there will very likely be room to significantly speed up the first prototype AGI as soon as it's deployed. We may very well be at a point now where if all the best algorithms were combined, and $10T spent on compute, we could have something approximating an AGI. But that's unlikely to happen as there are only maybe 2 entities that can spend that amount of money (the US government and the Chinese government), and they aren't close to doing so. However, if it gets to only needing $100M in compute, then that would be within reach of many players that could quickly ramp that up to $1B or $10B.

Each new improvement is a harder-fought victory, for a diminishing return.

Do you think this is true even in the limit of AGI designing AGI? Do you think human level is close to the maximum possible level of intelligence? When I mentioned "FOOM" I meant it in the classic Yudkowskian fast takeoff to superintelligence sense.

Anthony Repetto @ 2021-12-18T22:52 (+1)

Oh, and my apologies for letting questions dangle - I think human intelligence is very limited, in the sense that it is built hyper-redundant against injuries, and so its architecture must be much larger in order to achieve the same task. The latest upgrade to language models, DeepMind's RETRO architecture, achieves the same performance as GPT-3 (which is to say, it can write convincing poetry) while using only 1/25th the network. GPT-3 was only 1% of a human brain's connectivity, so RETRO is literally 1/2,500th of a human brain, with human-level performance. I think narrow super-intelligences will dominate, being more efficient than AGI or us.

In regards to overall algorithmic efficiency - in only five years we've seen multiple improvements to training and architecture, where what once took a million examples needs ten, or even generalizes to unseen data. Meanwhile, the Lottery Ticket can make a network 10x smaller, while boosting performance. There was even a supercomputer simulation which neural networks sped 2 BILLION-fold... which is insane. I expect more jumps in the math ahead, but I don't think we have many of those leaps left before our intelligence-algorithms are just "as good as it gets". Do you see a FOOM-event capable of 10x, 100x, or larger gains left to be found? I would bet there is a 100x waiting, but it might become tricky and take successively more resources, asymptotic...

Greg_Colbourn @ 2021-12-21T20:42 (+2)

I think AGI would easily be capable of FOOM-ing 100x+ across the board. And as for AGI being developed, it seems like we are getting ever closer with each new breakthrough in ML (and there doesn't seem to be anything fundamentally required that can be said to be "decades away" with high conviction).

Anthony Repetto @ 2021-12-18T22:38 (+1)

Thank you for diving into this with me :) We might be closer on the meat of the issues than it seems - I sit in the "alignment is exceptionally hard and worthy of consideration" camp, AND I see a nascent FOOM occurring already... yet, I point to narrow superintelligence as the likely mode for profit and success. It seems that narrow AI is already enough to improve itself. (And, the idea that this progress will be lumpy, with diminishing returns sometime soon, is merely my vague forecast informed by general trends of development.) AGI may be attainable at any point X, yet narrow superintelligences may be a better use of those same total resources.

More importantly, if narrow AI could do most of the things we want, that tilts my emphasis toward "try our best to stop AGI until we have a long, sober conversation, having seen what tasks are left undone by narrow AI." This is all predicated on my assumption that "narrow AI can self-iterate and fulfill most tasks competently, at lower risk than AGI, and with fewer resources." You could call me a "narrow-minded FOOMist"? :)

Greg_Colbourn @ 2021-12-21T20:33 (+2)

Maybe your view is closer to Eric Drexler's CAIS? That would be a good outcome, but it doesn't seem very likely to be a stable state to me, given that the narrow AIs could be used to speed AGI development. I don't think the world will coordinate around the idea of narrow AIs / CAIS being enough, without a lot of effort around getting people to recognise the dangers of AGI.

Anthony Repetto @ 2021-12-23T11:56 (+1)

Oh, thank you for showing me his work! As far as I can tell, yes, Comprehensive AI Services seems to be what we are entering already - with GPT-3's Codex writing functioning code a decent percentage of the time, for example! And I agree that limiting AGI would be difficult; I only suppose that it wouldn't hurt us to restrict AGI, assuming that narrow AI does most tasks well. If narrow AI is comparable in performance (given equal compute), then we wouldn't be missing out on much, and a competitor who pursues AGI wouldn't see an overwhelming advantage. Playing it safe might be safe. :)

And, that would be my argument nudging others to avoid AGI, more than a plea founded on the risks by themselves: "Look how good narrow AI is, already - we probably wouldn't see significant increases in performance from AGI, while AGI would put everyone at risk." If AGI seems 'delicious', then it is more likely to be sought. Yet, if narrow AI is darn-good, AGI becomes less tantalizing.

And, for the FOOMing you mentioned in the other thread of replies, one source of algorithmic efficiency is a conversion to symbolic formalism that accurately models the system. Once the over-arching laws are found, modeling can be orders of magnitude faster, rapidly. [e.g. the distribution of tree-size in undisturbed forests always follows a power-law; testing a pair of points on that curve lets you accurately predict all of them!]
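To make the bracketed power-law example concrete, here is a minimal Python sketch. It assumes the relationship has the form y = a * x**b, so two measured points determine both parameters. The function names and the tree-count numbers are made up purely for illustration.

```python
import math

def fit_power_law(p1, p2):
    """Return (a, b) such that y = a * x**b passes through both points.

    Taking logs gives a straight line, log y = log a + b * log x,
    so b is the slope between the two points in log-log space.
    """
    (x1, y1), (x2, y2) = p1, p2
    b = math.log(y2 / y1) / math.log(x2 / x1)
    a = y1 / x1 ** b
    return a, b

def predict(a, b, x):
    """Evaluate the fitted power law at x."""
    return a * x ** b

# Hypothetical measurements: (trunk diameter in cm, trees per hectare).
a, b = fit_power_law((10.0, 400.0), (20.0, 100.0))

if __name__ == "__main__":
    # Predict the count at a diameter that was never measured.
    print(predict(a, b, 40.0))
```

The two-point fit is exact only if the data really follow a single power law. With noisy measurements one would fit many points in log-log space instead.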

Yet, such a reduction to symbolic form seems to make the AI's operations much more interpretable, as well as verifiable, and those symbols observed within its neurons by us would not be spoofed. So, I also see developments toward that DNN-to-symbolic bridge as key to BOTH a narrow-AI-powered FOOM, as well as symbolic rigor and verification to protect us. Narrow AI might be used to uncover the equations we would rather rely upon?