AISN #28: Center for AI Safety 2023 Year in Review

By Center for AI Safety, Dan H @ 2023-12-23T21:31 (+17)

This is a linkpost to https://newsletter.safe.ai/p/aisn-28-center-for-ai-safety-2023

Welcome to the AI Safety Newsletter by the Center for AI Safety. We discuss developments in AI and AI safety. No technical background required.

Subscribe here to receive future versions.

As 2023 comes to a close, we want to thank you for your continued support for AI safety. This has been a big year for AI and for the Center for AI Safety. In this special-edition newsletter, we highlight some of our most important projects from the year. Thank you for being part of our community and our work.

Center for AI Safety’s 2023 Year in Review

The Center for AI Safety (CAIS) is on a mission to reduce societal-scale risks from AI. We believe this requires research and regulation. These both need to happen quickly (due to unknown timelines on AI progress) and in tandem (because either one is insufficient on its own). To achieve this, we pursue three pillars of work: research, field-building, and advocacy.

Research

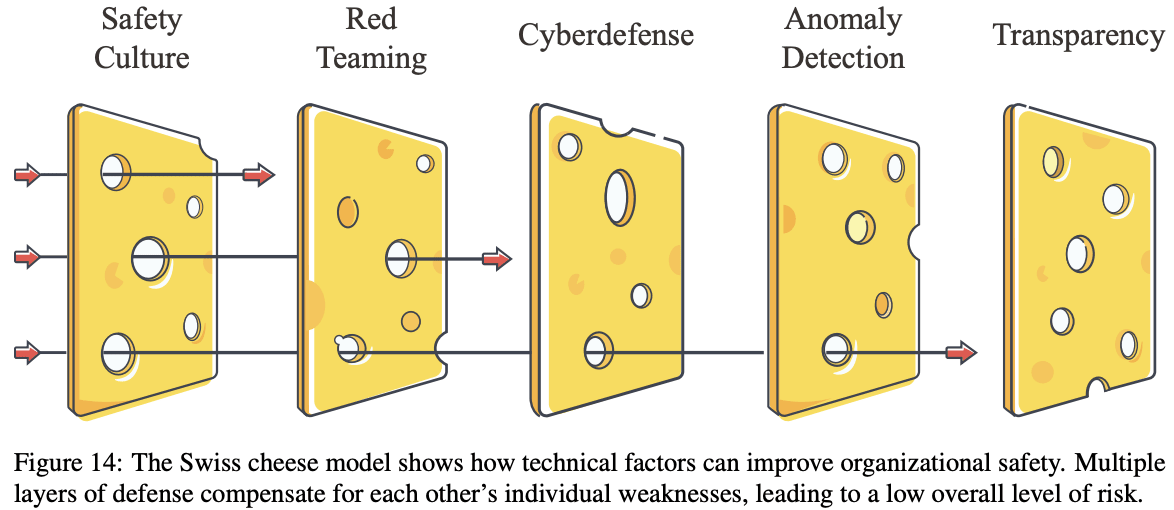

CAIS conducts both technical and conceptual research on AI safety. We pursue multiple overlapping strategies which can be layered together to mitigate risk (“defense in depth”). Though no individual technique brings risk to zero, we hope to build layered defenses which reduce risks to a negligible level.

Here are a few highlights from our technical research in 2023:

- LLM Attacks: bypassed the safety guardrails on GPT-4, Claude, Bard, and Llama 2, causing the models to behave dangerously, for example by outputting instructions for building a bomb. This work created the field of automatic adversarial attacks for LLMs. It was covered by the New York Times.

- Representation Engineering: the first paper controlling the internals of models to cause models to lie or be honest. Experiments show these techniques can make AIs more truthful, power-averse, and ethical.

- MACHIAVELLI Benchmark: evaluated the ability of AI agents to make ethical decisions. The benchmark provides 13 measures of ethical behavior on deception, rule-following, power-seeking, and utility. Published as an oral paper at ICML 2023.

- DecodingTrust: showed that GPT-4 is more vulnerable to misleading targeted system prompts than other models. It won the outstanding paper award at NeurIPS 2023.

- We also published research on rule-following for LLMs and unrestricted adversarial attacks.

- Our researchers are helping multiple labs, such as OpenAI and Meta, red-team their models.

We also conducted conceptual research about AI safety:

- An Overview of Catastrophic AI Risks provides a comprehensive overview of AI risks. (Wall Street Journal)

- Natural Selection Favors AIs over Humans argues that AI development will be shaped by natural selection, which will lead to selfish AIs which prioritize their own proliferation over human goals. (TIME op-ed)

- AI Deception: A Survey of Examples, Risks, and Potential Solutions provides empirical examples of AI deception, discusses the risks arising from it, and proposes technical and policy solutions.

Building on our research expertise, CAIS helped host the NeurIPS 2023 Trojan Detection Competition, which included a new track on red-teaming large language models. Over 125 teams participated and submitted over 3400 submissions.

Field-Building

CAIS aims to create a thriving research ecosystem that will drive progress towards safe AI. We pursued that goal in 2023 by providing compute infrastructure for AI researchers, creating educational resources for learning about the field, and other activities.

Compute cluster. Conducting useful AI safety research often requires working with cutting-edge models, but running large-scale models is expensive and cumbersome. These difficulties often prevent researchers from pursuing advanced AI safety research. In February 2023, CAIS launched a compute cluster to provide free compute to researchers working on AI safety.

As of November 2023, we have onboarded around 200 users working on 63 AI safety projects. A total of 32 papers have been produced using the CAIS compute cluster, including:

- How to Catch an AI Liar

- Function Vectors in Large Language Models

- Unified Concept Editing in Diffusion Models

- Defending Against Transfer Attacks From Public Models

- Universal Jailbreak Backdoors from Poisoned Human Feedback

- Seek and You Will Not Find: Hard-to-Detect Trojans in Deep Neural Networks

- BIRD: Generalizable Backdoor Detection and Removal for Deep Reinforcement Learning

- The Reversal Curse: LLMs trained on "A is B" fail to learn "B is A"

- … and 24 more papers.

70% of labs that responded to our user survey noted their research project would not have been possible in its current scope without the compute cluster; the other 30% responded that the cluster significantly accelerates their research progress.

Philosophy Fellowship. CAIS hosted a dozen academic philosophers for a seven-month research fellowship this year. They produced 21 research papers on AI safety, covering topics such as power-seeking AI and the moral status of AI agents (more here). They’ve also initiated three workshops at leading philosophy conferences, two books, various op-eds, and a special issue of a top journal (which received over 30 research papers), all focused on AI safety. This work greatly accelerates the development of AI safety into an interdisciplinary enterprise.

Events. CAIS brought together 20 legal academics, policy researchers, and policymakers for a three-day workshop on Law and AI Safety. A group of attendees went on to found a consortium of legal academics interested in AI safety. They have also been working on a research agenda compendium, which is soon to be released. Of respondents to our survey, 100% of researchers came away with more research ideas; 91% reported that they found the workshop very useful for meeting research collaborators.

We helped organize socials on ML Safety at ICML and NeurIPS, two top AI conferences, and roughly 300 researchers showed up at each to discuss AI safety. We participated in China’s largest AI conference, the World AI Conference in Shanghai, where we facilitated talks on AI safety that reached more than 30,000 viewers.

Textbook. An Introduction to AI Safety, Ethics, and Society is a new textbook which will be published by an academic press early next year. It aims to provide an accessible and comprehensive introduction to AI safety that draws on safety engineering, economics, philosophy, and other disciplines. Those who would like to take a free online course based on the textbook can express their interest here.

Online Course. Additionally, CAIS ran two online cohorts of Introduction to ML Safety, using a curriculum we developed last summer. These programs collectively onboarded ~100 students, researchers, and industry engineers to AI safety.

Advocacy

Public awareness and understanding of AI safety can encourage well-informed technical and policy solutions. CAIS advises governments and writes publicly in order to share information about AI safety.

Statement on AI Risk. CAIS published the statement on AI extinction risk, which notably raised public and government awareness of the scale and importance of AI risks. Crucially, the statement has placed AI extinction risk firmly within the Overton window of acceptable public discourse.

The statement has significantly affected the thinking of top leaders in the US and UK. Rishi Sunak directly responded to the statement on AI risk, stating that “The [UK] government is looking very carefully at this.” The White House Press Secretary, Karine Jean-Pierre, was asked about the statement, and commented that AI "is one of the most powerful technologies that we see currently in our time. But in order to seize the opportunities it presents, we must first mitigate its risks." The President of the European Commission quoted the statement in full in the State of the Union address.

The statement was covered by media outlets, including the New York Times (Front Page), The Guardian, BBC News, Reuters, The Washington Post, CNN, Financial Times, National Public Radio (NPR), The Times (London), Bloomberg, and The Wall Street Journal (WSJ).

Advising Policymakers. CAIS served as one of the primary technical advisors working with the UK Task Force on the science track for the UK AI Safety Summit. We responded to the NTIA’s Request For Information with a proposed regulatory framework for AI. We were invited to join the World Economic Forum’s AI Governance Alliance, and are advising xAI, the UK State Department, the US State Department, and other governmental bodies. Lastly, CAIS helped initiate and advise a $20M grantmaking fund on AI safety from the National Science Foundation.

Communications & Media. The public needs accurate and credible information on AI safety. CAIS aims to fill that need with our two newsletters, which have over 7,500 subscribers, and our public writing in outlets such as TIME and the Wall Street Journal. Separate from the statement-related engagements, CAIS has had over 50 major media engagements. CAIS Director Dan Hendrycks was named one of Time’s 100 Most Influential People in AI.

Looking Ahead

We have a number of projects set to launch in 2024. Over the coming months, these projects aim to mitigate catastrophic biorisks, enhance international coordination, and conduct technical research to enable safety standards and regulations.

Support Our Work

2023 was a big year, and 2024 is shaping up to be even more critical. Your tax-deductible donation makes our work possible. You can support the Center for AI Safety's mission to reduce societal-scale risks from AI by donating here.


Vasco Grilo @ 2023-12-23T21:40 (+4)

Thanks for sharing. It looks like you had a very productive year! To get a better sense of the cost-effectiveness, could you share what CAIS' overall spending was in 2023?