Attention on AI X-Risk Likely Hasn't Distracted from Current Harms from AI

By Erich_Grunewald 🔸 @ 2023-12-21T17:24 (+194)

This is a linkpost to https://www.erichgrunewald.com/posts/attention-on-existential-risk-from-ai-likely-hasnt-distracted-from-current-harms-from-ai/

Summary

In the past year, public fora have seen growing concern about existential risk (henceforth, x-risk) from AI. The thought is that we could see transformative AI in the coming years or decades, that it may be hard to ensure that such systems act with humanity's best interests in mind and that those highly advanced AIs may be able to overpower us if they aimed to do so, or otherwise that such systems may be catastrophically misused. Some have reacted by arguing that concerns about x-risk distract from current harms from AI, like algorithmic bias, job displacement and labour issues, environmental impact and so on. And in opposition to those voices, others have argued that attention on x-risk does not draw resources and attention away from current harms -- that both concerns can coexist peacefully.

The claim that x-risk distracts from current harms is contingent. It may be true, or not. To find out whether it is true, it is necessary to look at the evidence. But, disappointingly, and despite people confidently asserting the claim's truth and falsity, no one seems to have looked at the evidence. In this post, I do look at evidence. Overall, the data I look at provides some reason to think that attention on x-risk has not, so far, reduced attention or resources devoted to current harms. In particular, I consider five strands of evidence, none conclusive on its own:

- Policies enacted since x-risk gained traction

- Search interest

- Twitter/X followers of AI ethics advocates

- Funding of organisations working to mitigate current harms

- Parallels from environmentalism

I now do not think this is an important discussion. It would be better if everyone involved discussed the probability and scale of the risks, of current harms or x-risk, rather than whether one distracts from another. That is because agreement on the risks would dissolve the disagreement over whether x-risk is a distraction, and disagreement over distractions may be intractable while there are such strong disagreements over the risks.

The Argument

- Meredith Whittaker (June 2023): "A fantastical, adrenalizing ghost story is being used to hijack attention around what is the problem that regulation needs to solve."

- Deborah Raji (June 2023): "[The] sci-fi narrative distracts us from those tractable areas that we could start working on today."

- Nature (June 2023): "Talk of AI destroying humanity plays into the tech companies' agenda, and hinders effective regulation of the societal harms AI is causing right now."

- Mhairi Aitken (of the Alan Turing Institute) for New Scientist (June 2023): "These claims are scary -- but not because they are true. They are scary because they are significantly reshaping and redirecting conversations about the impacts of AI and what it means for AI to be developed responsibly."

- Aidan Gomez (June 2023): "[T]o spend all of our time debating whether our species is going to go extinct because of a takeover by a superintelligent AGI is an absurd use of our time and the public's mindspace. [...] [Those debates are] distractions from the conversations that should be going on."

- Noema (July 2023): "Making AI-induced extinction a global priority seems likely to distract our attention from other more pressing matters outside of AI, such as climate change, nuclear war, pandemics or migration crises."[1]

- Joy Buolamwini (October 2023): "One problem with minimizing existing AI harms by saying hypothetical existential harms are more important is that it shifts the flow of valuable resources and legislative attention."

- Lauren M. E. Goodlad (October 2023): "The point is [...] to prevent narrowly defined and far-fetched risks from diverting attention from actually existing harms and broad regulatory goals."

- Nick Clegg, President of Global Affairs at Meta (November 2023): "[It's important to prevent] proximate challenges [from being] crowded out by a lot of speculative, sometimes somewhat futuristic predictions."

These opinions -- all expressed during the past summer and fall -- were a response to the increased attention given to existential risk (henceforth, x-risk) from advanced AI last spring. Interest in x-risk[2] increased rapidly then, largely due to the release of GPT-4 on 14 March, and four subsequent events:

- 22 March: The Future of Life Institute publishes an open letter advocating for a pause on training frontier AI.

- 29 March: Eliezer Yudkowsky writes an opinion piece in Time magazine, arguing that AI will pose a risk to humanity and that AI development should therefore be shut down.

- Early May: Geoffrey Hinton quits Google to warn over dangers of AI.

- 30 May: The Center for AI Safety (CAIS) publishes a statement on extinction risk signed by researchers, scientists and industry leaders including Hinton, Yoshua Bengio, Demis Hassabis of Google DeepMind, Sam Altman of OpenAI, Dario Amodei of Anthropic, Ya-Qin Zhang of Tsinghua University and many others.

Such a dizzying array of sentiment could, of course, be backed up by evidence or argument, but that rarely happens. Instead it is simply asserted that attention on x-risk draws interest and resources away from current harms and those who focus on them. There is one laudable exception worth mentioning. Blake Richards, Blaise Agüera y Arcas, Guillaume Lajoie and Dhanya Sridhar, writing for Noema, do provide a causal model, rudimentary though it is, of how this may happen:

Those calling for AI-induced extinction to be a priority are also calling for other more immediate AI risks to be a priority, so why not simply agree that all of it must be a priority? In addition to finite resources, humans and their institutions have finite attention. Finite attention may in fact be a hallmark of human intelligence and a core component of the inductive biases that help us to understand the world. People also tend to take cues from each other about what to attend to, leading to a collective focus of attention that can easily be seen in public discourse.

It is true that humans and institutions have finite attention. That does not necessarily mean there is a trade-off between resources devoted to x-risk and resources devoted to current harms from AI. For example, it could be the case that concern about x-risk draws attention from areas completely unrelated to AI. It could also be the case that attention on one possible harm from a technology draws attention to, and not from, other harms of the same technology.[3] Everyone can think of these possibilities. Why did no one bother to ask themself which effect is more likely? I understand why Liv Boeree seems so exasperated when she writes, "Of course trade-offs exist, and attention is finite, but this growing trend of AI demagogues who view memespace purely as a zero-sum battleground, blindly declaring that their pet concern is more important than all others, who relentlessly straw-man anyone who disagrees, is frankly embarrassing for AI." I understand her, but though I understand her -- and not to pick on Liv Boeree, whom I admire -- she is still committing the same mistake that all those others are, because her claim, closer to the truth though it may be, is asserted without evidence too. The same, by the way, is true of Séb Krier, who writes, "At the moment the discourse feels a bit like if geothermal engineers were angry at carbon capture scientists -- seems pretty counterproductive to me, as there is clearly space for different communities and concerns to thrive. [...] Specialization is fine and you don't have a positive duty to consider and talk about everything."

Simplifying a bit, there are two camps here. One, those who focus on current harms from AI, like algorithmic bias, job displacement and labour issues, environmental impact, surveillance and concentration of power (especially the power of Silicon Valley tech firms, but also other corporations and governments). Central examples here include the field of AI ethics, Joy Buolamwini and the Algorithmic Justice League, Meredith Whittaker, the FAccT conference, Bender et al. (2021), AI Snake Oil and the Toronto Declaration. Two, those who focus on x-risk and catastrophic risk from AI, either by misuse, or accidents, or through AI interacting with other risks, for example, by accelerating or democratising bioengineering capabilities. Central examples here include the field of AI safety, Eliezer Yudkowsky, Stuart Russell, Open Philanthropy, the Asilomar Conference on Beneficial AI, Amodei et al. (2016), LessWrong and the CAIS statement on extinction risk. (When I say that the first camp focuses on "current harms", and the second on "x-risk", I am following convention, but, for reasons I will explain later, I think those labels are flawed, and it would be more accurate to talk about grounded/certain risk versus speculative/ambiguous risk.) Some people and groups work on or care about both sets of issues, but there are clearly these two distinct clusters. (Disclosure: I work in AI governance, and closer to the second camp than the first, but this post represents only my personal views, and not those of my employer.)

Evidence

This section is an attempt at finding out whether attention on x-risk has in fact drawn interest or resources away from current harms and those who focus on them. There is, of course, no individual piece of evidence that can prove whether the proposition is true or not. So I look at five different strands of evidence, and see what they have to say taken together. They are (1) policies enacted since x-risk gained traction, (2) search interest, (3) Twitter/X followers of AI ethics advocates, (4) funding of organisations working to mitigate current harms and (5) parallels from other problem areas, in particular environmentalism. (I will use "AI ethics person/organisation" as a synonym of "person/organisation focusing on current harms", even though some of the harms those people/organisations focus on, e.g. concentration of power, are somewhat speculative, and even though people focusing on x-risk typically do so for ethical reasons, too.) Each strand of evidence taken alone is weak, but together I think they are compelling, and while they don't prove it is the case, I think they provide some reason to think that attention on x-risk does not reduce attention or resources devoted to current harms, or at least has not done so up to this time.

AI Policy

The one major piece of Western AI policy developed and issued since x-risk gained traction is the Biden administration's Executive Order on AI, announced at the end of October. If you think x-risk distracts from current harms, you may have expected the Executive Order to have ignored current harms, due to x-risk now being more accepted. But that did not happen. The Executive Order found room to address many different concerns, including issues around bias, fraud, deception, privacy and job displacement.

Joy Buolamwini, founder of the Algorithmic Justice League, says: "And so with what's been laid out, it's quite comprehensive, which is great to see, because we need a full-press approach given the scope of AI." The AI Snake Oil writers say: "On balance, the EO seems to be good news for those who favor openness in AI", openness being the favoured way of ensuring AI is fair, safe, transparent and democratic. The White House announcement itself gestures at "advancing equity and civil rights" and rejects "the use of AI to disadvantage those who are already too often denied equal opportunity and justice". The Scientific American mentions some limitations of the Executive Order, but then writes that "every expert [we] spoke or corresponded with about the order described it as a meaningful step forward that fills a policy void". I have seen criticism of the Executive Order from people focusing on current harms, but those criticisms are of the type, "it focuses too much on applications, and not enough on data and the development of AI systems", or "it will be hard to enforce", not "it does not focus enough on current harms".

Of course that is only the executive branch. The legislative branch, by far the least popular of the branches, has also kept busy, or as busy as is possible without legislating. Lawmakers have introduced bipartisan bills on content liability (June), privacy-enhancing technologies (July), workers' rights (July), content disclosure (July), electoral interference (September), deepfakes (September) and transparency and accountability (November). Whatever you think about Congress, it does seem to pay close attention to the more immediate harms caused by AI.

The AI Safety Summit, organised by the United Kingdom, is not policy, but seems important enough, and likely enough to influence policy, to mention here. It took place in November, and focused substantially, though not exclusively, on x-risk. For example, of the four roundtables about risks, three covered subjects related to x-risk, and one covered risks to "democracy, human rights, civil rights, fairness and equality". I think this is some evidence that x-risk is crowding out other concerns, but weaker evidence than the Executive Order. That is because it makes sense for an international summit to focus on risks that, if they happen, are necessarily (and exclusively) global in nature. It is the same with environmentalism: littering, habitat loss and air pollution are largely local problems and most effectively debated and addressed locally, whereas climate change is a global problem, and needs to be discussed in international fora. Whenever anyone holds a summit on environmentalism, it may make more sense for them to make it about climate change than habitat loss, even if they care more about habitat loss than climate change. This, at least, was the explicit reasoning of the Summit's organisers, who wrote at the outset that its focus on misuse and misalignment risks "is not to minimise the wider societal risks that such AI [...] can have, including misinformation, bias and discrimination and the potential for mass automation", and that the UK considers these current harms "best addressed by the existing international processes that are underway, as well as nations' respective domestic processes".

Search Interest

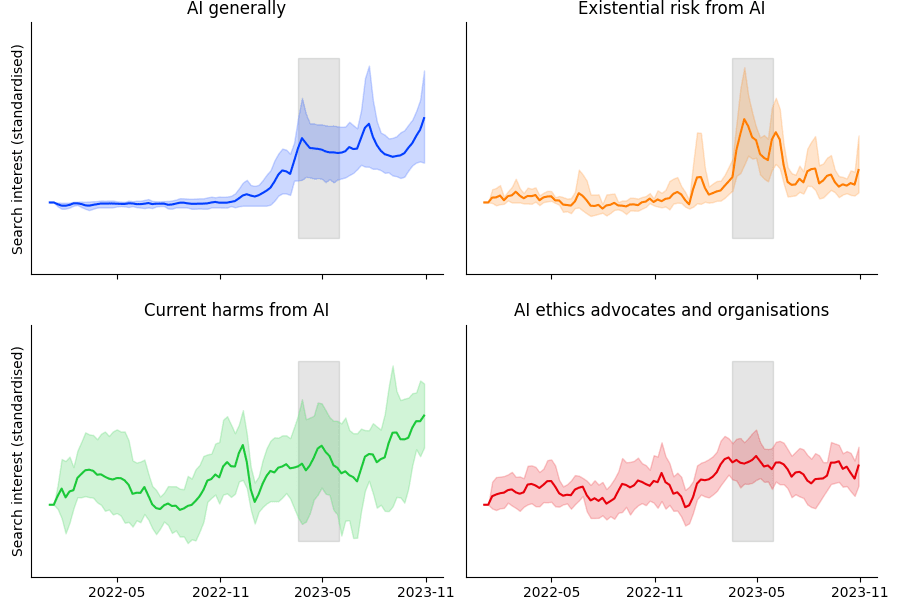

The field of AI ethics -- advocates, researchers, organisations -- is mainly focused on current harms. When looking at Google search data, AI ethics advocates and organisations seem to have gotten as much or more, but not less, attention during and after the period of intense x-risk coverage last spring, as before. The same is true for some current harms themselves. Given a simple causal model, described below, interest in x-risk does not cause less interest in AI ethics advocates or organisations, and for some of them even seems to have increased interest. Given the same causal model, interest in x-risk has not decreased interest in current harms, with the possible exception of copyright issues.

The following plot shows search interest in various topics before, during and after the period last spring when x-risk received increased attention (marked in grey). (Note that the variables are standardised, so you cannot compare different topics to one another.) Interest in AI ethics advocates (Emily M. Bender, Joy Buolamwini, Timnit Gebru, Deborah Raji and Meredith Whittaker) and organisations (the Ada Lovelace Institute, AI Now Institute, Alan Turing Institute, Algorithmic Justice League and Partnership on AI) seems to have been about as large, or larger, during and after x-risk was in the news, as before. Interest in current harms ("algorithmic bias", "AI ethics", "lethal autonomous weapons"[4] and "copyright"[5]) has been increasing since spring, contradicting worries about x-risk being a distraction. If any group has suffered since the increase in attention around x-risk, it is AI safety advocates, and x-risk itself, by regressing back to the mean.

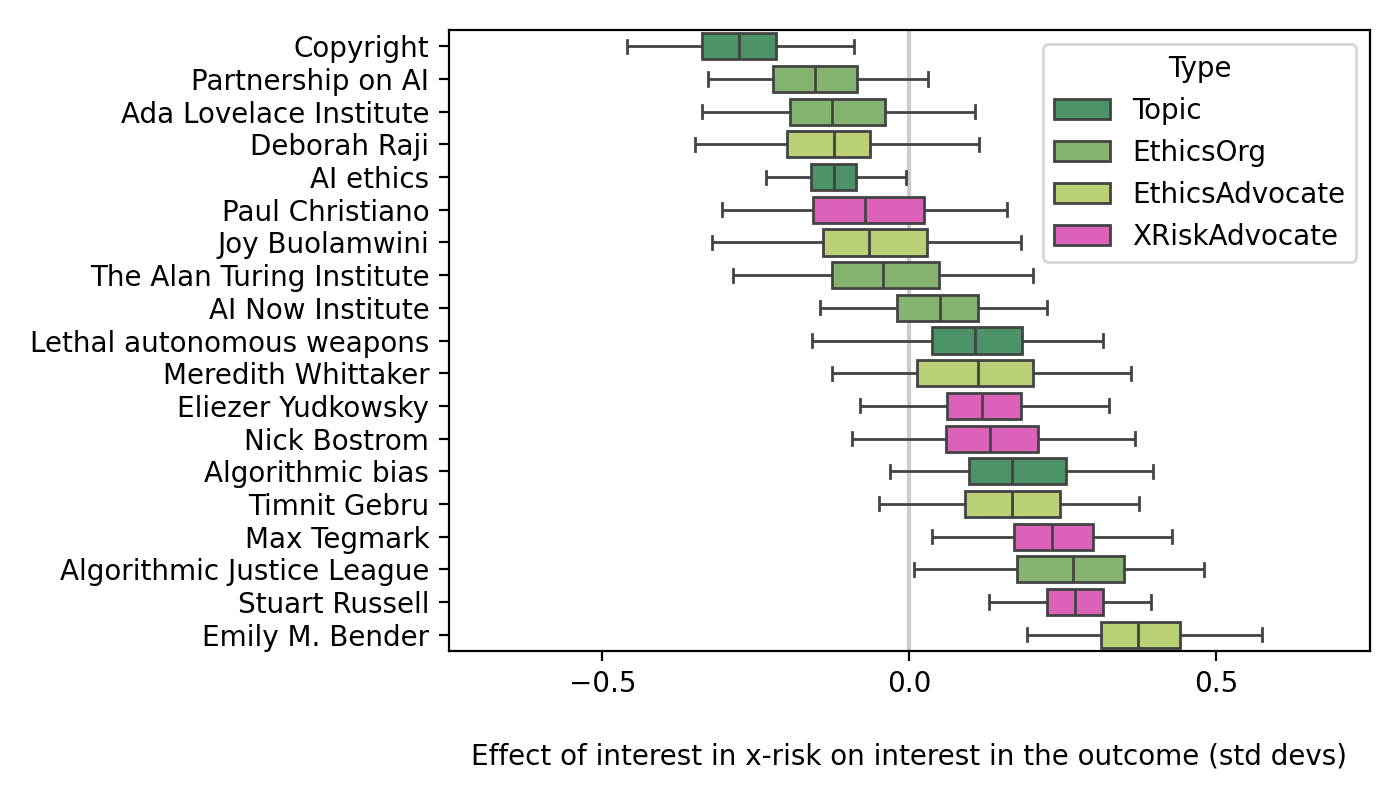
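The note above that the variables are standardised can be made concrete with a short sketch. It assumes standardisation means subtracting each series' mean and dividing by its standard deviation (a z-score); the series values and the names `standardise`, `topic_a` and `topic_b` are hypothetical and not taken from the post's data.

```python
from statistics import mean, pstdev


def standardise(series):
    """Return z-scores: (value - mean) / standard deviation of the series."""
    mu = mean(series)
    sigma = pstdev(series)
    if sigma == 0:
        raise ValueError("a constant series cannot be standardised")
    return [(x - mu) / sigma for x in series]


# Hypothetical weekly search-interest values. topic_b is exactly 100 times
# topic_a, i.e. a far more popular topic with the same shape over time.
topic_a = [10, 12, 11, 30, 25, 14]
topic_b = [1000, 1200, 1100, 3000, 2500, 1400]

z_a = standardise(topic_a)
z_b = standardise(topic_b)

# After standardisation the two series are indistinguishable, which is why
# levels cannot be compared across topics, only movements within a topic.
print(all(abs(p - q) < 1e-9 for p, q in zip(z_a, z_b)))
```

Standardising throws away each topic's absolute level, so a rise of one unit means "one standard deviation of that topic's own history", not the same number of searches across topics.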

Next, I do statistical inference, using a simple causal model, on Google search data from 1 January 2022. In that model, the outcome -- interest in a current harm, or in an AI ethics advocate, or in an AI ethics organisation -- is causally influenced by interest in x-risk, by interest in AI generally and by unobserved variables. Interest in x-risk is in turn also caused by interest in AI generally, and by other unobserved variables. Interest in AI generally is only caused by yet other unobserved variables. If we assume this causal model, and in particular if we assume that the different unobserved variables are independent of each other, then we get the results shown in the plot below. The plot shows, for each outcome variable, a probability distribution of how large the effect of interest in x-risk is on interest in the outcome variable, measured in standard deviations. For example, an increase of 1 standard deviation in interest in x-risk is associated with a change in interest in copyright of -0.3 (95% CI: -0.4 to -0.1) standard deviations, and with a change in interest in the Algorithmic Justice League of +0.3 (95% CI: +0.1 to +0.5) standard deviations. (These are relatively weak effects. For example, moving from the 50th to the 62nd percentile involves an increase of 0.3 standard deviations.) That is, if the causal model is accurate, interest in x-risk reduces interest in copyright, but increases interest in the Algorithmic Justice League, by a moderate amount. For the most part, the causal effect of interest in x-risk on other topics cannot be distinguished from zero.
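To illustrate the causal model just described, here is a minimal sketch on simulated data. It is not the analysis behind the reported numbers: it swaps in ordinary least squares for whatever inference procedure produced the intervals above, works in raw rather than standardised units, and all coefficients (0.8, 0.5, the true effect of -0.3) and function names are assumptions chosen for illustration. It shows why the model conditions on interest in AI generally, and it checks the percentile arithmetic quoted in the text.

```python
import random
from statistics import NormalDist, mean


def simulate(n, effect_x_on_y, seed=0):
    """Draw data from the assumed structure: AI -> x-risk, AI -> outcome,
    x-risk -> outcome, with independent noise on each variable."""
    rng = random.Random(seed)
    ai, xrisk, outcome = [], [], []
    for _ in range(n):
        a = rng.gauss(0, 1)                      # interest in AI generally
        x = 0.8 * a + rng.gauss(0, 1)            # interest in x-risk
        y = effect_x_on_y * x + 0.5 * a + rng.gauss(0, 1)  # outcome topic
        ai.append(a)
        xrisk.append(x)
        outcome.append(y)
    return ai, xrisk, outcome


def centred(values):
    m = mean(values)
    return [v - m for v in values]


def dot(u, v):
    return sum(p * q for p, q in zip(u, v))


def ols_one_predictor(y, x):
    """Slope of y on x alone (ignores the common cause)."""
    y, x = centred(y), centred(x)
    return dot(x, y) / dot(x, x)


def ols_two_predictors(y, x1, x2):
    """Slopes of y on x1 and x2 jointly, via the 2x2 normal equations."""
    y, x1, x2 = centred(y), centred(x1), centred(x2)
    s11, s22, s12 = dot(x1, x1), dot(x2, x2), dot(x1, x2)
    s1y, s2y = dot(x1, y), dot(x2, y)
    det = s11 * s22 - s12 * s12
    b1 = (s1y * s22 - s2y * s12) / det
    b2 = (s2y * s11 - s1y * s12) / det
    return b1, b2


def percentile_after_shift(std_devs):
    """Percentile reached by moving std_devs above the median of a normal."""
    return NormalDist().cdf(std_devs)


ai, xrisk, outcome = simulate(20_000, effect_x_on_y=-0.3)
adjusted, _ = ols_two_predictors(outcome, xrisk, ai)
naive = ols_one_predictor(outcome, xrisk)
print("adjusted for general AI interest:", round(adjusted, 2))
print("not adjusted:", round(naive, 2))
print("percentile after +0.3 sd:", round(percentile_after_shift(0.3), 2))
```

In this simulation, the estimate that adjusts for general AI interest lands near the true effect, while the unadjusted one is pulled towards zero, because general AI interest raises both x-risk interest and the outcome. The last line reproduces the claim that a 0.3 standard deviation increase corresponds to moving from the 50th to roughly the 62nd percentile under a normal distribution.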

Of course the causal model is not accurate. There are likely confounding variables that the model does not condition on. So I admit that this is weak evidence. Still, it is some evidence. As a robustness check, the model does say that interest in x-risk causes more interest in various AI safety advocates, including Nick Bostrom (0.1 standard deviations), Stuart Russell (0.3), Max Tegmark (0.2) and Eliezer Yudkowsky (0.1), which is to be expected, with only the regrettable exception of Paul Christiano (-0.1), who is not very high profile anyway. The table below shows the estimated coefficients for each outcome. It also shows the change in search interest from the period between the launch of ChatGPT and x-risk receiving a lot of attention, to the period after x-risk received a lot of attention; these deltas are almost all positive, sometimes substantially so, suggesting either that x-risk has not decreased interest in those topics because there is no causal mechanism, or that x-risk has not decreased interest in those topics because it is itself no longer attracting much attention, or that x-risk has decreased interest in those topics, but other factors have increased interest in them even more.

| Search topic | Influence of x-risk on topic (std devs) | Change after x-risk gained traction (std devs) |
|---|---|---|
| Algorithmic bias | 0.2 (95% CI: -0.03 to 0.39) | 0.5 |
| AI ethics | -0.1 (95% CI: -0.24 to -0.02) | 1.0 |
| Copyright | -0.3 (95% CI: -0.44 to -0.10) | 1.3 |
| Lethal autonomous weapons | 0.1 (95% CI: -0.16 to 0.30) | -0.5 |
| Emily M. Bender | 0.4 (95% CI: 0.20 to 0.59) | -0.1 |
| Joy Buolamwini | -0.1 (95% CI: -0.24 to 0.13) | 0.3 |
| Timnit Gebru | 0.2 (95% CI: -0.04 to 0.37) | 0.1 |
| Deborah Raji | -0.1 (95% CI: -0.38 to 0.10) | 0.3 |
| Meredith Whittaker | 0.1 (95% CI: -0.06 to 0.35) | 0.6 |
| Ada Lovelace Institute | -0.1 (95% CI: -0.36 to 0.13) | 0.3 |
| AI Now Institute | 0.0 (95% CI: -0.13 to 0.29) | 0.9 |
| Alan Turing Institute | -0.0 (95% CI: -0.26 to 0.17) | -0.2 |
| Algorithmic Justice League | 0.3 (95% CI: 0.06 to 0.49) | 0.3 |
| Partnership on AI | -0.1 (95% CI: -0.30 to 0.03) | 1.0 |
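The third column of the table above can be read as a before/after comparison. The sketch below assumes the delta is the difference between mean standardised interest after the x-risk window and mean standardised interest between the launch of ChatGPT and the start of that window; the post does not spell out the exact computation. The window bounds are also assumptions, taken from the first and last of the four events listed earlier (22 March and 30 May 2023), and the observations are hypothetical.

```python
from datetime import date
from statistics import mean

CHATGPT_LAUNCH = date(2022, 11, 30)
XRISK_WINDOW_START = date(2023, 3, 22)  # assumed: FLI open letter
XRISK_WINDOW_END = date(2023, 5, 30)    # assumed: CAIS statement


def period_delta(observations):
    """Mean standardised interest after the x-risk window minus the mean
    between the ChatGPT launch and the start of the window.

    observations: iterable of (date, standardised_interest) pairs.
    """
    before = [v for d, v in observations
              if CHATGPT_LAUNCH <= d < XRISK_WINDOW_START]
    after = [v for d, v in observations if d > XRISK_WINDOW_END]
    if not before or not after:
        raise ValueError("need observations in both periods")
    return mean(after) - mean(before)


# Hypothetical standardised search-interest values for one topic.
example = [
    (date(2022, 12, 15), 0.0),
    (date(2023, 2, 1), 0.2),
    (date(2023, 4, 10), 1.5),   # inside the window, ignored by the delta
    (date(2023, 7, 1), 0.5),
    (date(2023, 10, 1), 0.7),
]
print(round(period_delta(example), 2))
```

A positive delta on its own does not separate the three explanations given in the text, since it only compares levels across periods and says nothing about what caused the change.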

Twitter/X Followers

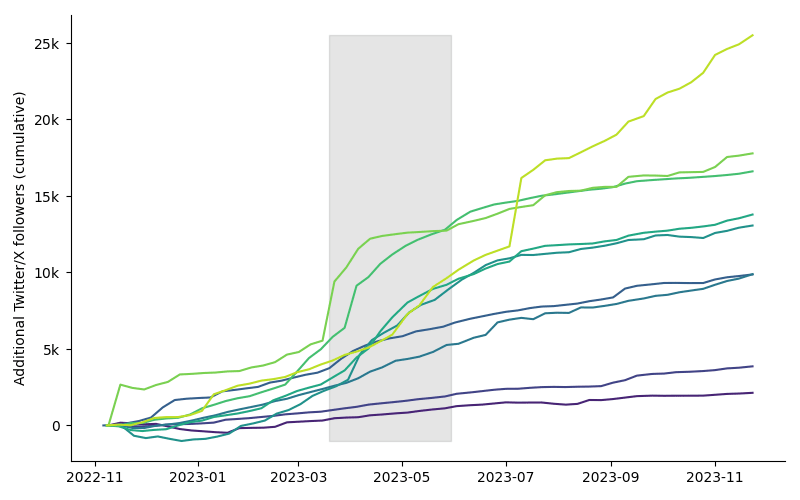

One proxy for how much attention a set of problems is given is how much attention advocates of that set are given, and a proxy for that is how many Twitter/X followers those advocates have. The plot below shows cumulative gains, since just prior to the release of ChatGPT, in Twitter/X followers for people focusing on current harms. It suggests that, during and after the period of intense x-risk coverage last spring (marked in grey), the rate at which AI ethics advocates gained Twitter/X followers not only did not slow, but seems to have increased. At least three advocates saw step change increases in their follower counts around or right after x-risk gained traction: Meredith Whittaker, Arvind Narayanan (of AI Snake Oil) and Emily M. Bender.

I am almost tempted to complain that a fantastical, adrenalizing ghost story is being used to hijack attention around the problem that regulation really needs to solve: x-risk. But I will not, as that would be derisive and also wrong. It would be wrong because, whatever it has done to attention on current harms, it is a tautology that interest in x-risk has also increased attention on x-risk.

Funding

Based on the data I could find, organisations focused on current harms from AI so far don't seem to have a harder time fundraising than they did before x-risk concerns gained traction. The table below shows grants awarded by the Ford Foundation and the MacArthur Foundation, two major funders of AI ethics organisations, in the last few years. The Algorithmic Justice League (AJL), AI for the People (AFP) and the Distributed AI Research Institute (DAIR) received major grants this year, including well after the period of increased attention on x-risk.[6] In addition, on 1 November, ten prominent American philanthropies announced their intention to give >$200M to efforts focusing on current harms, though without specifying a timeline.

| Donor | Recipient | Year | Amount |
|---|---|---|---|
| Ford Foundation | AJL | 2019 | $0.21M |
| Ford Foundation | AJL | 2020 | $0.20M |
| Ford Foundation | AJL | 2021 | $0.57M (5y) |
| Ford Foundation | AJL | August 2023 | $1.33M (3y) |
| Ford Foundation | AFP | 2021 | $0.30M |
| Ford Foundation | DAIR | 2021 | $1.00M (3y) |
| Ford Foundation | DAIR | August 2023 | $1.10M (2y) |
| MacArthur Foundation | AJL | 2023 | $0.50M (2y) |
| MacArthur Foundation | AFP | 2021 | $0.20M (2y) |
| MacArthur Foundation | AFP | 2023 | $0.35M (2y) |
| MacArthur Foundation | DAIR | 2021 | $1.00M (2y) |

It is true that grantmaking processes can go on for months, and it may take longer than that for broad sentiment shifts to affect grantmakers' priorities. But if you thought that x-risk is drawing resources away from current harms, and imagined a range of negative outcomes, then this should at least make you think the worst and most immediate of those outcomes are implausible, even if the moderately bad, and less immediate, outcomes still could be.

Climate Change

One of the arguments you sometimes hear against the claim that x-risk is a distraction is more of a retort than an argument, and goes like this: "Worries about attention don't seem to crop up in other domains, e.g. environmentalism. That is, for all the complaints that people have about climate 'doomers' -- those who focus on human extinction, tipping points and mass population displacement due to sea level rise -- you hear very little about them being a distraction from current environmental harms, by which we mean habitat loss, overfishing and the decline of bird populations." It goes unsaid that the reason why near-term environmentalists don't say tipping points etc. are a distraction from the very real problems of bird genocide etc., is that they have considered all relevant factors and determined that doomers' concerns do not in fact draw attention or resources away from their own.

I am less sure. I think the real reason is that pure near-term environmentalists hardly exist, and when they do, they do indeed grumble about climate change absorbing all the attention. Within climate change, a mature field of research, the mainstream opinion is that the more speculative harms matter, and current harms, like heat stress or extreme weather, are just not that important in comparison. There are very few people who care about current harms caused by climate change and don't care about more speculative harms caused by climate change. There is far more disagreement in AI. That is true not only for more speculative risks, like x-risk, but also more grounded harms like the use of copyrighted material as training data. So, unlike environmentalism, in AI there is a large group of people who care about current harms, and not about more speculative harms. Of course you will see more disagreement over attention in the field of AI than in environmentalism.

Maybe there should be complaints of this sort. After all, air pollution contributes to about 10% of deaths globally, and in low-income countries, is near or at the top of the list of leading risk factors (Ritchie and Roser 2021). Both air pollution and climate change are caused by emissions. Rob Wiblin brought this argument up recently in an interview with Santosh Harish: "[G]iven that air pollution seems to do so much harm to people's health [...] it's funny that we've focused on trying to get people to do this thing that's a really hard sell because of its global public good nature, when we could have instead been focusing on particulate pollution. Which is kind of the reverse, in that you benefit very immediately." But Santosh Harish did not seem to think climate change had been a distraction. According to him, rich countries started caring about climate change after getting a handle on air pollution, and poor countries have never forgotten about air pollution. It is true that the share of deaths from air pollution (overall, and for high and middle income countries, but not for low and lower middle income countries) is down over the last three decades (Ritchie and Roser 2021), and exposure to outdoor air pollution above WHO guidelines is down in richer countries, and flat (and terrible) in poorer countries, over the last decade (Ritchie and Roser 2022). But of course that could have happened even if climate change has been a distraction. The point is that whether a topic meaningfully distracts from another, more important topic, is contingent. There is no substitute for looking at the details.

Maybe the Real Disagreement Is about How Big the Risks Are

There is something wrong about all this. In fact, several things have gone wrong. The first failure lies in framing the issue as one of future versus current harms. People who object to x-risk do not do so because it lies, if it is real, in the future.[7] They do it because they think the risk is not real, or that it is impossible to know, or that we, at any rate, do not know. Likewise, those who do focus on x-risk do not do so because it is located in the future; that would be absurd. They do so because they think x-risk, which (if it occurs) involves the death of everyone, is more important than current risks, even if it is years away.[8] It would be better to distinguish between grounded/certain risk and speculative/ambiguous risk. While the "current harms" camp is highly skeptical of speculative/ambiguous risks, the "x-risk" camp is more willing to accept such risks, if they see compelling arguments for them, and to focus on them if the expected value of doing so is great enough.

The other thing that has gone wrong is to do with the level on which the discussion is taking place. The problem is that, instead of discussing what the risks are, people discuss what should be done, given that no one agrees on what the risks are. Have we not put the cart before the horse? You may say, "But given that we do, as a matter of fact, disagree about what the risks are, is it not productive for us to find a way to prioritise, and compromise, nonetheless?" That is possible. But have you actually discussed, at any substantial length, the underlying questions, like whether it is likely that superintelligence emerges in the next decades, or whether AI-powered misinformation will in fact erode democracy? With x-risk people, you see a constant and tormented grappling with the probability of x-risk, and little engagement with the scale and power of other risks. With AI ethics people, there is, in my experience, little of either, with some notable exceptions, e.g. Obermeyer et al. (2019), Strubell, Ganesh, and McCallum (2019) and Acemoglu (2021).[9]

When a normal human imagines a world with millions or billions of AI agents that can perform >99% of the tasks that a human working from home can do, she is concerned. Because she realises that silicon intelligence does not sleep, eat, complain, age, die or forget, and that, as many of those AI agents would be directed at the problem of creating more intelligent AI systems, it would not stop there. We humans would soon cease to be the most intelligent, by which I mean capable, species. That does not on its own mean we are doomed, but it is enough to make her take seriously the risk of doom. Surely whether or not this will happen, is what should really be debated.

If, on the other hand, it turns out that x-risk is minuscule, and we can be reasonably certain about that, then it should indeed be awarded no attention, except in works of science fiction and philosophical thought experiments. No serious person would permit Mayan doomsday fears into policy debates, regardless of how good their policy proposals happen to be. Whichever way you look at it, the crux lies in the risks, and the discussion about distractions is, by way of a pleasing irony, a distraction from more important discussions.

References

Acemoglu, Daron. 2021. “Harms of AI.” National Bureau of Economic Research.

Amodei, Dario, Chris Olah, Jacob Steinhardt, Paul Christiano, John Schulman, and Dan Mané. 2016. “Concrete Problems in AI Safety.” https://doi.org/10.48550/ARXIV.1606.06565

Bender, Emily M., Timnit Gebru, Angelina McMillan-Major, and Shmargaret Shmitchell. 2021. “On the Dangers of Stochastic Parrots.” In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency. ACM. https://doi.org/10.1145/3442188.3445922

Obermeyer, Ziad, Brian Powers, Christine Vogeli, and Sendhil Mullainathan. 2019. “Dissecting Racial Bias in an Algorithm Used to Manage the Health of Populations.” Science 366 (6464): 447--53.

Ord, Toby. 2020. The Precipice: Existential Risk and the Future of Humanity. Hachette Books.

Ritchie, Hannah, and Max Roser. 2021. “Air Pollution.” Our World in Data.

------. 2022. “Outdoor Air Pollution.” Our World in Data.

Strubell, Emma, Ananya Ganesh, and Andrew McCallum. 2019. “Energy and Policy Considerations for Deep Learning in NLP.”

The authors of the Noema piece argue not that AI x-risk distracts from current harms of AI, but that it distracts from other sources of societal-scale risk. In that way, they are different from the others listed here. ↩︎

I use "x-risk" here to refer to x-risk from AI in particular. Other potential sources of x-risk include engineered pandemics and nuclear war, but I am not concerned with them here, except to the extent that they are increased or decreased by the existence of advanced AI. "Existential risks" are "risks that threaten the destruction of humanity's long-term potential", for example, by human extinction (Ord 2020). ↩︎

Some discussions around existential risk from AI are also in part discussions around current harms and vice versa, for example, how auditing, liability and evaluations should be designed, if they should. But of course the policy that perfectly mitigates x-risk is necessarily not identical to the policy that perfectly mitigates current harms. There may need to be some amount of compromise, or prioritisation. ↩︎

It is true that lethal autonomous weapons, and military applications of AI in general, are not things that the "current harms" camp focuses on a lot, and are things that have seen some attention from people in the "x-risk" camp, especially the Future of Life Institute. It is still a useful variable to analyse here. If x-risk distracts from current harms, or from less speculative harms, then it should also distract from risks around military applications from AI. ↩︎

The copyright variable is fairly uninformative, given that copyright is such a broad subject, touching on far more than just AI-related issues. ↩︎

I don't know when in 2023 the MacArthur grants were made, but the grant pages were updated on 1 November, so perhaps they were awarded in September or October? Still, it could be that the two MacArthur grants this year were awarded before x-risk got attention last spring. If so, they are not evidence that the recipients are still able to fundraise effectively after x-risk gained traction, and also not evidence against that claim. ↩︎

Perhaps people focusing on current harms would disagree with prioritising future risks if those risks were centuries or millennia away. But if x-risks were so distant, most people who currently work on x-risk would no longer do so. The reason why they work on AI x-risk is that it is, according to them, within sight. So the aspect of time is not the disagreement it is made out to be. ↩︎

Needless to say, those who advocate for x-risk mitigation believe it could happen in the coming years or decades, not centuries or millennia. And while it is true that the value of the future may motivate people who focus on x-risk more than those who do not, I do not think that is a crux either. If it could be proven beyond doubt that there is a real chance (>10%) that every human being dies in the next few decades, everyone would agree that this risk should be a key priority. And you do not need to be a utilitarian to value the continued existence of the human race. ↩︎

How often do you see texts about current harms judging the scale and magnitude of those harms, as opposed to illustrating their argument with a vivid anecdote or two, at best? It is a rhetorical question, and the answer is, not often. ↩︎

RyanCarey @ 2023-12-22T16:51 (+20)

I think that disagreement about the size of the risks is part of the equation. But it's missing what is, for at least a few of the prominent critics, the main element - people like Timnit, Kate Crawford, and Meredith Whittaker are bought into leftie ideologies focused on things like "bias", "prejudice", and "disproportionate disadvantage". So they see AI as primarily an instrument of oppression. The idea of existential risk cuts against the oppression/justice narrative, in that it could kill everyone equally. So they have to oppose it.

Obviously this is not what is happening with all people in the FATE AI, or AI Ethics community, but I do think it's what's driving some of the loudest voices, and that we should be clear-eyed about it.

freedomandutility @ 2023-12-22T23:40 (+22)

I disagree because I think these people would be in favour of action to mitigate x-risk from extreme climate change and nuclear war.

RyanCarey @ 2023-12-23T13:07 (+14)

Interesting point, but why do these people think that climate change is going to cause likely extinction? Again, it's because their thinking is politics-first. Their side of politics is warning of a likely "climate catastrophe", so they have to make that catastrophe as bad as possible - existential.

Daniel_Friedrich @ 2023-12-24T11:23 (+4)

The idea of existential risk cuts against the oppression/justice narrative, in that it could kill everyone equally. So they have to oppose it.

That seems like an extremely unnatural thought process. Climate change is the perfect analogy - in these circles, it's salient both as a tool of oppression and an x-risk.

I think far more selection of attitudes happens through paying attention to more extreme predictions, rather than through thinking / communicating strategically. Also, I'd guess people who spread these messages most consciously imagine a systemic collapse, rather than a literal extinction. As people don't tend to think about longtermistic consequences, the distinction doesn't seem that meaningful.

AI x-risk is more weird and terrifying and it goes against the heuristics that "technological progress is good", "people have always feared new technologies they didn't understand" and "the powerful draw attention away from their power". Some people for whom AI x-risk is hard to accept happen to overlap with AI ethics. My guess is that the proportion is similar in the general population - it's just that some people in AI ethics feel particularly strongly & confidently about these heuristics.

Btw I think climate change could pose an x-risk in the broad sense (incl. 2nd-order effects & astronomic waste), just one that we're very likely to solve (i.e. the tail risks, energy depletion, biodiversity decline or the social effects would have to surprise us).

Ryan Greenblatt @ 2023-12-21T21:57 (+16)

[Not relevant to the main argument of this post]

They do so because they think x-risk, which (if it occurs) involves the death of everyone

I'd prefer you not fixate on literally everyone dying because it's actually pretty unclear if AI takeover would result in everyone dying. (The same applies to misuse risk: bioweapons misuse can be catastrophic without killing literally everyone.)

For discussion of whether AI takeover would lead to extinction see here, here, and here.

I wish there was a short term which clearly emphasizes "catastrophe-as-bad-as-over-a-billion-people-dying-or-humanity-losing-control-of-the-future".

SiebeRozendal @ 2023-12-27T16:57 (+4)

It's called an existential catastrophe: https://www.fhi.ox.ac.uk/Existential-risk-and-existential-hope.pdf or if you mean 1 step down, it could be a "global catastrophe".

or colloquially "doom" (though I don't think this term has the right serious connotations)

Oliver Sourbut @ 2023-12-30T10:56 (+5)

Yeah. I also sometimes use 'extinction-level' if I expect my interlocutor not to already have a clear notion of 'existential'.

Daniel_Friedrich @ 2023-12-22T15:33 (+14)

Great to see real data on the web interest! In the past weeks, I investigated the same topic myself, while taking a psychological perspective & paying attention to the EU AI act, reaching the same conclusion (just published here).

Minh Nguyen @ 2023-12-21T18:50 (+12)

Thank you for this! This is 1 less misconception to deal with.

I always get suspicious when someone treats societal issues like a zero-sum game. Yes, we can worry about more than 1 thing at a time, and it's often not very productive to frame caring about one thing as oppositional to caring about another thing.

tobytrem @ 2024-01-04T16:55 (+9)

I'm curating this post.

I think that this is a useful intervention, which contributes to a more productive meta-debate between AI X-risk and AI ethics proponents.

Thanks for writing!

paul_dfr @ 2024-01-11T22:37 (+4)

Thank you for writing this, I found it very interesting and helpful. I have something between a belief and a hope that the antagonistic dynamics (which I agree are likely driven by the idea that AI safety is merely speculative) will settle down in the short-ish future as more empirical results emerge on the difficulty of training models with the intended goals (e.g. avoiding sycophancy) and get more widely appreciated. I think many people on the critical side still have the idea of AI safety as grounded largely in thought experiments only loosely connected to current technology.