Impact evaluation of Animal Ask: A retrospective after 5 years

By Animal Ask @ 2025-11-18T15:01

Executive summary

Animal Ask is a research organisation that offers consultation and dedicated research to stakeholders in the animal advocacy movement, helping those organisations make strategic decisions that bring about the highest possible impact for animals. Animal Ask also offers other custom research, such as written documents to help animal advocacy organisations communicate as effectively as possible with policymakers.

Since being launched in 2020, Animal Ask has completed 57 major research projects around the world, covering all major exploited animal groups (insects, shrimp, fish, chickens, pigs, and ruminants). Animal Ask has had numerous constructive collaborations with stakeholders and has usually received positive feedback (though some issues have been raised, which are also noted in this report). We have achieved all of this with a team that has ranged in size between 3 and 5 staff members.

In this report, we examine the impact of Animal Ask in terms of improving the lives of non-human animals.

To understand Animal Ask's impact, we consider six viewpoints:

- Viewpoint 0: The outside view. Projects that aim to have impact often fail, which provides a helpful base rate for the probability of success, even before accounting for any specifics about Animal Ask.

- Viewpoint 1: Impact tracking. This is the "classic" approach to measuring impact: examining each completed project to determine whether it brought about the hoped-for effects. Most of Animal Ask's monitoring and evaluation has been conducted from this viewpoint. We have tracked the outcomes of Animal Ask's 57 major projects over the past five years. This evidence is tentatively promising but ultimately inconclusive. A clear conclusion should be attainable within the next few years. This is the most important viewpoint in this report, representing about 75% of our credence.

- Viewpoint 2: The skeptic's view. This is the most skeptical approach to measuring impact. Can we identify actual animals whose lives are demonstrably better than they otherwise would have been? Animal Ask can only point to a couple of examples of actual, realized impact due to its work, and these examples are ambiguous at best. However, this might be because insufficient time has transpired for similar examples to materialize.

- Viewpoint 3: The "meta = leverage" view. There is a reasonable argument that since Animal Ask is a meta-organization, it only needs to have a modest probability of success to be a stronger bet than the next best alternatives.

- Viewpoint 4: Money moved ratio. For the subset of our programs that can be modelled with this metric, money moved can reasonably be estimated at 6.9 to 11.5 times our expenditure on research. For our research to break even from a movement-spending perspective, it must make the resulting campaigns at least 9-14% more effective than they would otherwise have been. This ratio is within the range of those reported by charity evaluators and effective giving organisations, although their different theories of change make one-to-one comparisons inaccurate.

- Viewpoint 5: External evaluations. There have been a couple of external evaluations of Animal Ask, but the conclusions of these evaluations have been ambiguous and uncertain.

Integrating the evidence from these six viewpoints, our overall assessment of Animal Ask's impact is as follows:

- Animal Ask's work has shown some promising indications. However, a definitive determination of Animal Ask's impact will require several more years. A reasonable cut-off point would be approximately 2028–2030, which would allow sufficient time for further data to materialize while maintaining accountability.

- The critical factor is whether a greater proportion of projects fall into the "positive impact" category between now and approximately 2028–30 (see section "Viewpoint 1: Impact tracking"). If this trend is observed, Animal Ask is likely having an impact, at which point a cost-effectiveness analysis can be produced. Conversely, if this trend is not observed, Animal Ask is likely not having an impact. In that case, Animal Ask could consider using its infrastructure to pivot to a more promising model, or it may need to dissolve.

Introduction

The big picture

Since this evaluation requires us to be critical, it is helpful to begin with a high-level perspective of Animal Ask's accomplishments over the past five years. Since its launch in 2020, Animal Ask's team of 3–5 staff members has:

- Completed 57 major research projects, with the majority of this research published on the organization's website and/or in academic journals.

- Expended 820,000 USD, equating to approximately 13,000 USD per major research project (this figure is approximate: dividing the total by 57 gives about 14,400 USD, but some expenditure was allocated to ongoing projects not included in the 57 projects examined here).

- Conducted research across all major exploited animal groups (insects, shrimp, fish, chickens, pigs, and occasionally ruminants).

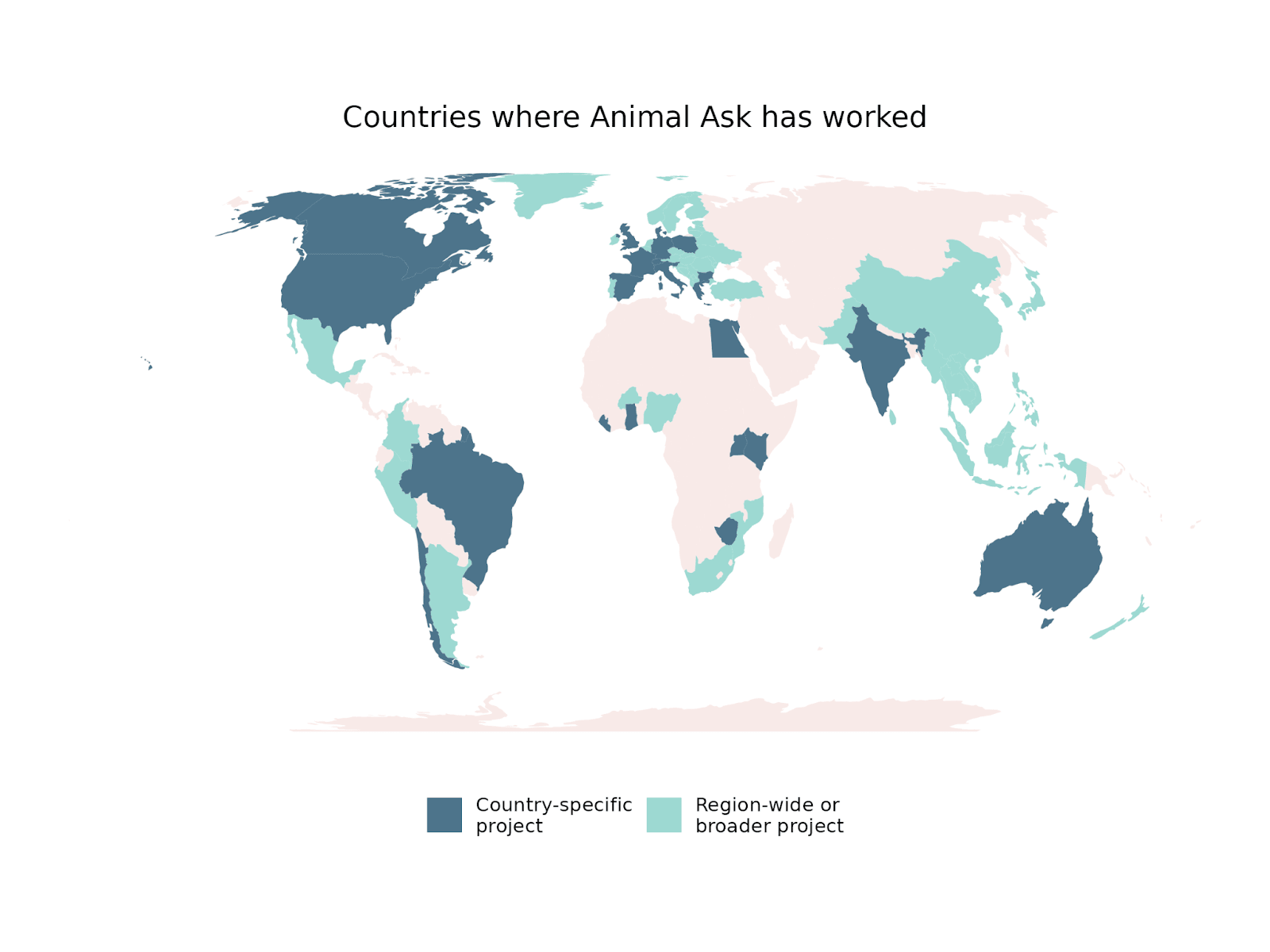

- Conducted research in dozens of countries.

- Addressed numerous important questions facing the animal advocacy movement and developed significant knowledge, both internally and as a contribution to the broader knowledge base of the animal advocacy movement.

- Collaborated with numerous stakeholders globally, both within and outside of the animal advocacy movement.

- Typically received positive feedback.

Animal Ask is committed to achieving a single objective: to deliver impact for animals. Specifically, Animal Ask aims to increase animal welfare and/or to decrease the extent of animal exploitation. The sole criterion against which the organization seeks to be evaluated is its impact on animals.

Animal Ask's theory of change

In broad strokes, Animal Ask attempts to deliver impact through three theories of change. Our direct contribution extends up to stage (C) of each:

- Strategic priorities and consultation.
  - (A) Animal Ask finds animal advocacy organisations at the early, decision-making stage of a campaign →
  - (B) Animal Ask performs research to help advise the organisation's decision-making →
  - (C) the organisation selects more impactful asks for its campaigns and/or develops a more evidence-based and nuanced strategy for achieving those asks than it otherwise would have done →
  - (D) some of those campaigns eventually succeed →
  - (E) the laws or corporate policies that are achieved have a higher impact than they otherwise would →
  - (F) there is a net, counterfactual reduction in animal suffering or the scale of animal exploitation, compared to if Animal Ask had not acted.

- Information lobbying.
  - (A) For an upcoming campaign with a given ask, Animal Ask prepares persuasive written documents supporting the value or the feasibility of that ask (e.g. white papers, economic reports, scientific publications) →
  - (B) the campaign has a higher probability of success →
  - (C) over time, more campaigns succeed than would otherwise be the case →
  - (D) more pro-animal laws or corporate policies are achieved →
  - (E) there is a net, counterfactual reduction in animal suffering or the scale of animal exploitation, compared to if Animal Ask had not acted.

- Foundational research.
  - (A) Animal Ask identifies key uncertainties facing the movement →
  - (B) Animal Ask performs dedicated research into those foundational questions and publishes recommendations for the movement as a whole →
  - (C) decision-makers inside the animal advocacy movement read that research and keep it in mind while making decisions that involve those key uncertainties →
  - (D–F) the same latter steps as in "Strategic priorities and consultation".

Over the past five years, our research effort has been divided approximately as follows: 50% on strategic priorities and consultation, 15% on information lobbying, and 35% on foundational research. However, foundational research has been less common during 2024 and 2025. The need for foundational research was higher in Animal Ask's early years, when we rapidly identified many key uncertainties that required it. We also spent most of 2023 on foundational research, as we had a specific grant to do so. Currently, our division of research is probably closer to 75% on strategic priorities and consultation, 20% on information lobbying, and 5% on foundational research.

The landscape of animal advocacy research

Numerous research organizations operate within the animal advocacy movement, each employing distinct theories of change. While an exhaustive list is beyond the scope of this discussion, illustrative examples include:

- Movement strategic priorities
  - Rethink Priorities' animal welfare division focuses on projects addressing critical knowledge gaps and strategic needs to drive long-term progress for the most neglected animals.
  - The Welfare Footprint Project is developing and applying a system for quantifying animal suffering to better understand the impact of various animal welfare interventions.

- Incubators
  - Ambitious Impact's Charity Entrepreneurship program includes a branch dedicated to identifying potential charitable interventions, for incubation, that are capable of delivering significant impact for animals.

- Contextually relevant messaging and movement-building research
  - Faunalytics aims to enhance the accessibility of existing research for advocates and conducts primary research into the best strategies to inspire change for animals.
  - Good Growth conducts actionable research and evidence-based design to accelerate ethical food systems in Asia.

- Movement-building research
  - Social Change Lab conducts empirical research on people-powered social movements.
  - Animal Think Tank conducts narrative and strategic research focused on laying the foundations for a broad-based nonviolent movement for Animal Freedom in the UK.
  - Pax Fauna conducts narrative and strategic research to design a more effective social movement for animal freedom in the US.

- Organisation consultants
  - Animetrics provides pro-bono assistance to organisations. They specialize in economics, impact evaluation, and research tailored to local contexts, focusing on the 'Global South' and Muslim communities. Their existing research has focused on the attitudes and perspectives of target demographics.
  - Bryant Research provides low-cost research services on a variety of topics according to the needs of its client groups, including surveys and climate impact modeling.

- Long-term focus
  - Wild Animal Initiative's research division seeks to establish wild animal welfare science as a recognized scientific discipline.
  - Animal Ethics supports and researches interventions to improve the lives of animals in the wild, and studies how future technologies could enable us to avoid catastrophic risks or be used to help wild animals on a large scale.
  - Sentience Institute focuses on long-term social and technological change, aiming to expand humanity's moral circle.

This represents the landscape in which Animal Ask operates. As one of many research organizations in the animal advocacy movement, Animal Ask contributes to impact through specific research endeavors targeted at specific decisions.

For a deeper dive into animal advocacy research, see the blog article by Animal Charity Evaluators here.

Challenges in evaluating research impact

The theory of change employed by Animal Ask, and indeed by research organizations generally, operates at a "meta" level. This means that the organization does not directly engage in advocacy for specific animal policies. Instead, Animal Ask's function is to support individuals and organizations directly involved in such campaigns. This operational distance from direct action places Animal Ask's work at an abstract level above the campaigns themselves.

"Meta" work typically entails a longer and more complex theory of change. The aforementioned theories of change, for instance, often comprise half a dozen broad steps. Each sequential step introduces additional potential points of failure. Even if the initial stages of the theory of change are successfully executed, yielding high-quality and necessary outputs, subsequent links may not materialize as intended. For example, even if Animal Ask provides robust research and evidence-based strategic recommendations, the campaigns themselves may still fail. Furthermore, even when campaigns succeed and "meta" work demonstrably produces impact, the realization of this impact can often require several years.

In practical terms, it is exceedingly difficult to definitively ascertain the impact of most research on animal welfare outcomes. While there are valid reasons to believe that certain types of research, including that conducted by Animal Ask, do contribute to animal welfare, measuring this impact remains exceptionally challenging.

A crucial consideration is the protracted timeline associated with long theories of change, such as that of Animal Ask. Campaigns typically require years to achieve success, implying a commensurate delay in determining the impact of advisory services on those campaigns. Animal Ask has been in operation for five years, a relatively short period. Many campaigns in which the organization has been involved have simply not had sufficient time to either succeed or fail. Conversely, it is necessary to establish a reasonable cutoff point for evaluation. An organization lacking genuine impact could persist for decades without demonstrating effectiveness if every evaluation concluded that there had been insufficient time for the theory of change to operate and for impact to materialize. For Animal Ask's consultation model, a few more years of evaluation would likely be warranted, but not indefinitely. A commitment to accountability is essential. A conservative evaluative threshold might fall at the 8- to 10-year mark (i.e., 2028–2030), while a more optimistic assessment might extend it to the 15- or 20-year mark.

This discussion also raises an important, unresolved debate about strategy. Given a particular set of evidence about an organization's impact, what is the correct rate at which that organization should scale? If an organization scales too soon on the basis of insufficient evidence, it risks wasting effort and resources. If an organization scales too late and waits for perfect evidence, it risks wasting opportunities for impact. This question faces all impact-oriented organizations, and organisations in the same ecosystem will take different approaches to it.

The six viewpoints of this report

The remainder of this report will assess whether Animal Ask is achieving a satisfactory impact. This assessment will proceed from six distinct perspectives, each employing a different line of reasoning:

- Viewpoint 0: The outside view. Projects aiming for impact often fail, providing a useful baseline probability of success prior to considering specifics about Animal Ask.

- Viewpoint 1: Impact tracking. This represents the conventional approach to measuring impact—evaluating each completed project to determine if anticipated effects were realized. Most of Animal Ask's monitoring and evaluation efforts have been conducted from this viewpoint. This is the most important viewpoint in this report, representing about 75% of our credence.

- Viewpoint 2: The skeptic's view. This is a strict approach to measuring impact: Can demonstrable improvements in the lives of specific animals be attributed to the intervention?

- Viewpoint 3: The "meta = leverage" view. A reasonable theoretical argument supports Animal Ask's research, even in the absence of empirical data.

- Viewpoint 4: Money moved ratio. This metric is often used by effective giving organisations and charity evaluators. It can be applied to the subset of strategic priorities and consultation projects that may shift resource allocation across campaign options.

- Viewpoint 5: External evaluations. Animal Ask has undergone evaluations by independent third-party organizations on several occasions.

In the conclusion, the results from each viewpoint will be summarized, and methods for integrating these disparate perspectives will be explored.

Viewpoint 0: The outside view

Organizational failure is a common outcome, particularly within the non-profit sector. In this context, "failure" denotes the absence of measurable impact. It is important to distinguish this from an unsuccessful organizational launch; rather, it signifies that, despite the founders' best efforts, an intervention simply did not deliver the intended impact. Identifying this outcome allows for the better allocation of resources and effort to more impactful endeavors instead.

Animal Ask was established in 2020 by Amy Odene and George Bridgwater, emerging from the Ambitious Impact (AIM) Charity Entrepreneurship (CE) incubation program.

A 2023 AIM assessment of 50 launched charities categorized their progression into three states (link):

- Success: "40% of our charities reach or exceed the cost-effectiveness of the strongest charities in their fields (e.g., GiveWell/ACE recommended)."

- Steady state: "40% are in a steady state. This means they are having impact, but not at the GiveWell-recommendation level yet, or their cost-effectiveness is currently less clear-cut (all new charities start in this category for their first year)."

- Shutting down: "20% have already shut down or might in the future."

This suggests that between 20% and 60% of AIM-incubated charities experience failure, while 40% to 80% achieve success. Thus, a randomly selected AIM-incubated charity could be expected to have a probability of success within this range.
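The range above comes from two ways of scoring the "steady state" group: counting it as failure gives the lower bound on success, counting it as success gives the upper bound. A minimal Python sketch of that arithmetic, using only the three shares quoted from the AIM assessment (the function name is illustrative):

```python
# Outcome shares quoted from the 2023 AIM assessment of 50 launched charities.
AIM_OUTCOMES = {"success": 0.40, "steady_state": 0.40, "shutting_down": 0.20}


def success_bounds(outcomes: dict[str, float]) -> tuple[float, float]:
    """Return (lower, upper) bounds on the probability of success.

    Lower bound: only the 'success' category counts as success.
    Upper bound: 'steady_state' charities are also counted as successes.
    """
    lower = outcomes["success"]
    upper = outcomes["success"] + outcomes["steady_state"]
    return lower, upper


low, high = success_bounds(AIM_OUTCOMES)
print(f"P(success): {low:.0%} to {high:.0%}")
print(f"P(failure): {1 - high:.0%} to {1 - low:.0%}")
```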

A 2019 AIM report (link) recommended the establishment of an animal advocacy-focused research organization, which subsequently became Animal Ask in 2020. This report explored various research models, with Animal Ask's current model—consulting with other animal advocacy organizations on strategic decisions—identified as one of the most promising strategies.

However, the authors of the 2019 report estimated the probability of achieving impact on any given research project to be approximately 5%. This implies a 95% project-level failure rate. This high probability of failure was deemed acceptable due to the substantial potential payoff.

The low probability of success was attributed to two critical links in the theory of change: a) "High-impact ask used instead of a less high-impact ask" (estimated probability: ~30%) and b) "Using a high-impact ask, leading to more implemented change for animals" (estimated probability: ~25%). Subsequent observations have borne out these predictions about the key uncertainties, as discussed further in this report.
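Because every link in a theory of change must hold for a project to have impact, link probabilities multiply. The Python sketch below assumes the links are independent (an assumption of this illustration, not a claim from the 2019 report). It shows that the two quoted links alone give about 7.5%, so the report's ~5% overall estimate would imply that all remaining links together hold roughly two-thirds of the time.

```python
from math import prod

# Link probabilities quoted from the 2019 AIM report.
HIGH_IMPACT_ASK_ADOPTED = 0.30  # "High-impact ask used instead of a less high-impact ask"
ASK_LEADS_TO_CHANGE = 0.25      # "Using a high-impact ask, leading to more implemented change"


def chain_probability(link_probs: list[float]) -> float:
    """Probability that every link succeeds, assuming the links are independent."""
    return prod(link_probs)


two_links = chain_probability([HIGH_IMPACT_ASK_ADOPTED, ASK_LEADS_TO_CHANGE])
print(f"Two critical links alone: {two_links:.1%}")

# Hypothetical: the combined probability the remaining links would need
# for the whole chain to match the report's ~5% overall estimate.
implied_rest = 0.05 / two_links
print(f"Implied probability of all other links: {implied_rest:.0%}")
```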

Viewpoint 1: Impact tracking

Summary of viewpoint: We have tracked the outcomes of 57 of Animal Ask's research projects over the past five years. The collected evidence offers tentative support for Animal Ask's impact but remains ultimately inconclusive. A definitive conclusion is anticipated within the coming years.

A common approach to monitoring and evaluation (M&E) for organizations such as Animal Ask involves cataloging all projects, specifying their intended outcomes, and subsequently assessing their actual outcomes.

This is the most important viewpoint in this report. We probably put about 75% of our credence into this viewpoint alone.

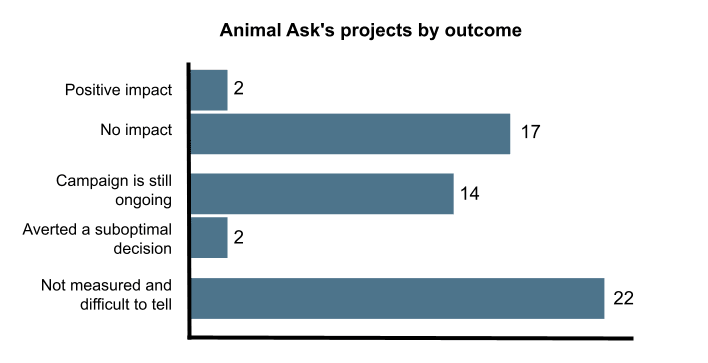

As an end-line metric, Animal Ask's projects can be classified into five categories:

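- No discernible impact. The research did not lead to demonstrable change, either because the partner organization did not use our recommendations or because the related campaign did not achieve its goal.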

- Positive impact. The research was successfully conducted, and its recommendations were adopted by the partner organization. This resulted in demonstrable, real-world impact for animals.

- Campaign ongoing. The research was successfully conducted, and its recommendations were adopted by the partner organization. However, the success of the resulting campaign in achieving a policy objective remains to be determined.

- Not measured and difficult to determine. This category presents a challenge for impact assessment. This does not signify an absence of impact, but rather the inherent difficulty in quantifying the impact for specific projects. While applicable to some past projects, this category is anticipated to be less relevant for future initiatives. Further discussion on this point is provided below.

- Averted a suboptimal decision. The research was successfully conducted, and its recommendations were adopted by the partner organization. However, the recommendation specifically advised against conducting a particular campaign. This indicates a successful prevention of what is considered an inefficient allocation of organizational and movement resources. The ultimate translation of this outcome into measurable impact is currently unclear.

Between 2021 and 2025, we completed 57 major projects. The graph above shows the outcomes by category.

Two of our projects have shown positive impact; these are discussed further below (see "Viewpoint 2: The skeptic's view"). An additional two projects helped the movement avoid wasting resources.

Seventeen of our projects have not had a clear impact yet. This includes cases where (a) partner organizations did not use our advice, and/or (b) the related campaign did not achieve its goal. These problems were identified as early as 2019 as key uncertainties for Animal Ask (see above, "Viewpoint 0: The outside view"). We know that our advice may sometimes have gone unused because it did not fit the partner organization's situation or changing priorities, or because of outside factors we could not control (e.g. a changing political landscape, turnover among decision-making staff, or funding constraints). We also acknowledge that organizations may already be making the best decisions possible given their situation; in these cases, additional research from Animal Ask adds no value. We have put a lot of effort into understanding these uncertainties better and improving our methods, to increase the chance that our research adds value and that organisations are well positioned to act on it. For example, we now conduct significantly more pre-vetting of projects, covering broader stakeholders outside the relevant departments of an organization, or even outside the organization itself.

However, 14 projects are part of ongoing campaigns. These campaigns haven't reached a final decision about their goals yet. These projects will eventually be classified as either having a "Positive impact" or "No impact." Their final classification depends on what happens in the future.

Below are example case studies for each category other than "Positive impact", which is covered in more detail in "Viewpoint 2: The skeptic's view".

- Campaign ongoing
  - We worked with Sentience (previously Sentience Politics) in 2021 to evaluate potential changes to Swiss legislation. We highlighted a few high-impact areas, including low-welfare imports and cruel product bans as potential initiatives, as well as a selection of promising motions, such as expanded protections for fish and decapods in aquaculture and bans on rat poison. These are now part of their Invisible Animals campaign, launched in 2024. In 2021, they noted that we helped with "identifying topics and problem areas we hadn't yet considered".
  - We worked with the Animal Welfare Competence Center for Africa (AWeCCA) in 2022 on the Uganda priorities report. They were initially considering some form of institutional outreach and have subsequently focused on government outreach to prevent industrialised animal farming. They also received support from Animal Advocacy Africa, without which the collaboration might not have happened.

- Averted a suboptimal decision
  - CCTV research for Equalia in 2022, after they had secured local victories and were considering the value of focusing on this ask across the EU. While the change was likely to have some positive impact, the campaign was less valuable than Equalia's other work, so they did not pursue it beyond their own context.
  - A review of the value of an Independent Office for Animal Protection in Slovenia for the Animal Enterprise Transparency Project. We concluded that the evidence of regulatory capture was mixed and that, given the difficulty of establishing a fully independent office, a commission would be an appropriate first step; alternatively, the organisation could focus on other campaigns.

- Not measured and difficult to determine
  - A foundational piece on corporate campaigns (determining the scale of the ask), which focused on developing a better understanding of the scale of demands that can be made through corporate campaigns, to inform the development of future asks.
  - Minor party politics research covering the potential of the approach in several countries. We received some initial interest from a few stakeholders, including donors, but there were other barriers to political donations. We are unsure whether this ultimately resulted in any altered decisions in the movement.

- No discernible impact
  - Bulgaria prioritisation for Invisible Animals Bulgaria in 2022. While the report was well received, the changing political landscape in Bulgaria and the EU, along with considerations of longer-term organisational strategy, led them to pursue other campaign ideas.
  - Anonymous organisation. We provided a review of some campaign options being considered by their campaigns team. It was well received by this team but was ultimately ignored by leadership due to internal momentum towards a particular idea. This resulted in increased staff turnover within the team.
  - Anonymous insect organisation. We examined a variety of campaign ideas within the insect industry in a specific context, but found that we needed a lot of information on current strategy and interactions between groups, on which the groups' founders were better informed. As a result, we were unable to make any significant contribution to their decision-making in the time allocated.

Based on this data, Animal Ask's work appears somewhat promising, but the evidence is ultimately inconclusive.

- If some of the 14 ongoing campaigns demonstrate positive impact over the coming years, it will indicate that Animal Ask is achieving demonstrable real-world impact. This will also provide insight into the effectiveness of efforts invested in addressing the two key uncertainties. At that juncture, cost-effectiveness can be calculated and compared to alternative uses of movement resources; however, this stage has not yet been reached.

- Conversely, if all or most of the 14 ongoing campaigns demonstrate no impact over the coming years, Animal Ask will need to pivot or cease operations. The exception would be if Animal Ask secures one or two additional impactful projects whose impact is exceptionally high—in which case, the magnitude of impact might still justify the operational costs of Animal Ask. A cost-effectiveness analysis would elucidate this, but this stage has not yet been reached.
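Each ongoing project will eventually be classified as either "Positive impact" or "No impact". A minimal Python sketch, using the counts given in this section (2 positive, 17 with no discernible impact, 14 ongoing) and ignoring the "Averted a suboptimal decision" and "Not measured" categories, shows how the share of resolved projects with positive impact would shift depending on how many ongoing campaigns succeed. The function name and the sample values of k are illustrative.

```python
# Counts quoted in this section.
POSITIVE = 2
NO_IMPACT = 17
ONGOING = 14


def positive_share_among_resolved(k_positive: int) -> float:
    """Share of resolved projects with positive impact if k of the ongoing
    projects resolve as "Positive impact" and the rest as "No impact"."""
    if not 0 <= k_positive <= ONGOING:
        raise ValueError("k_positive must be between 0 and the number of ongoing projects")
    resolved = POSITIVE + NO_IMPACT + ONGOING
    return (POSITIVE + k_positive) / resolved


print(f"Today (resolved projects only): {POSITIVE / (POSITIVE + NO_IMPACT):.0%}")
for k in (0, 3, 7, 14):
    print(f"{k:>2} of {ONGOING} succeed: {positive_share_among_resolved(k):.0%}")
```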

One category, "Not measured and difficult to determine," warrants further discussion.

- Projects within this category primarily comprise foundational research and the 2023 movement strategy research. Animal Ask conducts foundational research to address critical uncertainties that serve as linchpins in other decisions; this was more prevalent during the organization's early stages, when numerous significant uncertainties existed, and is less common currently. Similarly, the 2023 movement strategy research was supported by a dedicated grant and is no longer an ongoing area of research.

- Nevertheless, there is some indication that these 17 reports may be generating positive impact. For these reports, impact is defined as influencing the decisions of organizations and grantmakers within the movement, informing future research projects, and providing novel insights to advocates and organizations.

- This diffuse form of impact presents measurement challenges, as the absence of a specific partner organization makes it difficult to observe decisions that have been made differently due to the research. Surveys of the animal advocacy movement have been conducted to assess awareness and utilization of these reports. Unfortunately, this data source is of limited quality due to low response rates and a tendency for responses to be positively biased.

- However, we do collect instances where these reports have demonstrably altered specific decisions or strategic choices. While this represents only an initial step toward impact, it is a necessary one. Clear examples of such influence exist for 5 of the 17 reports: minor political parties, insider activism, movement tactics, mass media for meat reduction, and subsidies.

- Furthermore, other reports in this category have been cited as generally helpful or informative for individuals working on specific issues, though such examples are considered less valuable than instances of documented changes in specific decisions and strategic choices.

Viewpoint 2: The skeptic's view

Summary of viewpoint: Animal Ask can identify only a limited number of instances where its work has demonstrably led to impact. These examples are, at best, ambiguous. However, this observation may be because insufficient time has passed for further examples to materialize.

A skeptical perspective suggests that while past projects can be cataloged and their outcomes reviewed, this approach risks overlooking the broader context. There is a potential for self-deception regarding actual impact. To substantiate a claim of significant impact, it is necessary to demonstrate tangible improvements in the lives of specific animals (e.g., fish, chickens, or pigs on a farm) or, alternatively, to point to specific legislative or corporate policy changes directly attributable to the organization's efforts.

An organization like Animal Ask should be able to provide numerous instances where its influence led to distinct changes in law, governmental decisions, corporate policies, or company practices. Such evidence represents the strongest form of validation and sets a high benchmark for accountability. That said, it is important to keep in mind that this viewpoint represents much stronger skepticism than that applied to most organizations like Animal Ask. If applied strictly, this viewpoint would make it extremely difficult to estimate the impact of most meta organizations, as meta organizations can usually only access data that represents a small proportion of their actual impact—as Kevin Xia writes: "I have found that meta orgs in [the effective altruism community] often only report the impact they know they have caused, and extrapolation is almost a bit frowned upon or at least difficult to get right, due to various biases at play."

Animal Ask's work has demonstrably influenced two specific laws or decisions, though both examples possess some ambiguity around the level of contribution and the counterfactuals.

- The cancellation of a particular factory farm.

- The inclusion of fish in a state's Animal Welfare Act in Australia.

This limited number of outcomes, when viewed in isolation after five years of operation, appears underwhelming. However, this assessment is incomplete, as numerous campaigns are ongoing. Reducing the entirety of the work to these two points, while other initiatives are still in progress, is overly simplistic.

Therefore, the true measure of Animal Ask's efficacy under a skeptical evaluation will depend on the emergence of additional examples on this list in the coming years.

Viewpoint 3: The "meta = leverage" view

Summary of viewpoint: There is a reasonable argument that since Animal Ask is a meta organisation, it only needs to have a modest probability of success to be a stronger bet than the next best alternatives.

This entire viewpoint is, at its core, expressing the idea that meta organisations can have higher leverage. As described by Peter Wildeford here, this argument roughly goes: "If you think a typical EA [effective altruism] cause has very high impact, it seems quite plausible that you can have even higher impact by working one level of 'meta' up -- working not on that cause directly, but instead working on getting more people to work on that cause. For example, while the impact of a donation to the Against Malaria Foundation seems quite large, it should be even more impactful to donate to Charity Science, Giving What We Can, The Life You Can Save, or Raising for Effective Giving, all of which claim to be able to move many dollars to AMF for every dollar donated to them. Likewise, if you think an individual EA is quite valuable because of the impact they’ll have, you may want to invest your time and money not in having a direct impact, but in producing more EAs!"

Under this view, Animal Ask is able to assist or influence the campaigns of approximately 10 organisations a year, at a cost similar to that of running one additional campaign. If several organisations pivot or become more successful each year because of Animal Ask's research, then this meta work is likely to produce more effective campaigns than spending the same resources directly on those campaigns would.

Viewpoint 4: Money moved ratio

A subset of our strategic priorities and consultation projects involves working with groups at the decision-making stage of a campaign, when a wide variety of options are often being considered. For these projects, one way to model the impact of Animal Ask is through a money moved ratio: the expenditure on the campaigns we informed, divided by our expenditure on the research.

This ratio shows the minimum amount by which our research must have increased the impact of these campaigns in order to have provided value equal to marginal spending on these organisations. For example, a ratio of 1 would imply that the campaigns need to be at least twice as effective, whereas a ratio of 4 would imply that they need to be at least 25% more impactful. The ratio also serves as a benchmark against other meta-organisations in the charity evaluation and effective giving space, which evaluate themselves on the ratio of money moved to effective causes relative to their operational costs.
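The break-even condition in the previous paragraph can be written out explicitly. This is a sketch under a simplifying assumption not stated in the original analysis: an extra dollar spent directly on these campaigns is treated as worth as much as an average dollar of their existing budget. The symbols are introduced here for illustration only: $R$ is our spending on the research, $C$ is the campaign spending it informed, $r = C/R$ is the money moved ratio, and $f$ is the fractional improvement in campaign effectiveness attributable to the research.

$$
f \cdot C \;\ge\; R \quad\Longleftrightarrow\quad f \;\ge\; \frac{R}{C} = \frac{1}{r}
$$

So $r = 1$ requires $f \ge 1$ (campaigns at least twice as effective), and $r = 4$ requires $f \ge 0.25$ (at least 25% more impactful).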

Of the 18 projects that resolved with positive impact, resulted in ongoing campaigns, or averted a sub-optimal decision, only 6, all in the ongoing category, can be evaluated under this metric. Across these projects, our partner organisations have a combined yearly budget of approximately $2,870,000, of which around $940,000 is allocated to the campaigns whose decision-making our research helped inform (estimated as a fraction of the total budget across active campaigns). Approximately half of our historic spending ($410,000 USD) has been on projects of this kind, so the money moved ratio is about 2.3 per year of campaigning. If campaigns run for 3, 4 or 5 years on average, the money moved ratio is 6.9, 9.2 or 11.5 respectively. This would require our research to make these campaigns at least 9–14% more effective than they would otherwise have been, assuming that funds from the donors of Animal Ask and of its partner organisations have equal counterfactual value.
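The arithmetic in the previous paragraph can be reproduced in a few lines. This is a hypothetical illustration rather than Animal Ask's actual model: the function and constant names are introduced here, the inputs are the approximate figures quoted above, and it assumes that the informed campaign spending stays constant for every year a campaign runs.

```python
# Hypothetical sketch of the Viewpoint 4 arithmetic; names are illustrative.
# Assumption: informed campaign spending is the same in every year of a campaign.

RESEARCH_SPEND_USD = 410_000          # ~half of historic spending, on decision-stage projects
ANNUAL_CAMPAIGN_BUDGET_USD = 940_000  # partner budget allocated to the informed campaigns


def money_moved_ratio(annual_budget: float, research_spend: float, years: int) -> float:
    """Campaign spending influenced over `years`, per dollar of research spending."""
    return annual_budget * years / research_spend


def break_even_improvement(ratio: float) -> float:
    """Minimum fractional improvement in campaign effectiveness needed for the
    research to match spending the same money directly on the campaigns."""
    return 1 / ratio


if __name__ == "__main__":
    per_year = money_moved_ratio(ANNUAL_CAMPAIGN_BUDGET_USD, RESEARCH_SPEND_USD, 1)
    print(f"Per year: {per_year:.1f}")
    for years in (3, 4, 5):
        r = money_moved_ratio(ANNUAL_CAMPAIGN_BUDGET_USD, RESEARCH_SPEND_USD, years)
        print(f"{years} years: ratio {r:.1f}, "
              f"break-even improvement {break_even_improvement(r):.1%}")
```

Running it prints a ratio of about 2.3 per year and ratios of 6.9, 9.2 and 11.5 over 3, 4 and 5 years, with break-even improvements of roughly 14.5%, 10.9% and 8.7%, in line with the range quoted above.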

| Organisation | Money moved | Type | Budget | Ratio |
|---|---|---|---|---|
| Founders Pledge 2020 | | Raised/Influenced | | |
| Giving What We Can | | Raised | | |
| Animal Ask (3 years) | $2,833,721 | Influenced | $410,000 | 6.9 |
| Animal Ask (4 years) | $3,778,295 | Influenced | $410,000 | 9.2 |
| ACE | $11,000,000 | Influenced | $1,114,991 | 9.9 |
| Founders Pledge 2023 | | Raised/Influenced | | |
| Animal Ask (5 years) | $4,722,869 | Influenced | $410,000 | 11.5 |
| Founders Pledge 2024 | | Raised/Influenced | | |
| GiveWell | $397,000,000 | Influenced | | 18.6 |

Taken at face value, this would put our money moved ratio above that of Giving What We Can and comparable to that of Animal Charity Evaluators (ACE). However, this is not a like-for-like comparison: these groups differ widely in the counterfactual use of the funds they move and in the relative impact of those funds' final destinations.

Giving What We Can's multiplier is discounted for donations that might have happened anyway, so it should approximate fresh funds directed to top opportunities. Founders Pledge does not make such discounts and also directs funds to a wider variety of causes.

By contrast, groups like Animal Ask, ACE and GiveWell direct or influence donations or organisational funds that would be spent anyway, moving them towards more effective areas. The impact of that work therefore needs to be discounted by the value of the counterfactual use of those funds. Animal Ask is also the only organisation on this list that works with organisations themselves rather than solely with donors.

Viewpoint 5: External evaluations of our work

Summary of viewpoint: There have been a couple of external evaluations of Animal Ask, but the conclusions of these evaluations have been ambiguous and uncertain.

There have been a couple of times when Animal Ask has been evaluated by people outside the organisation.

Aidan Whitfield and Bridget Loughhead spent two weeks evaluating Animal Ask during the 2023 pilot of Ambitious Impact's Research Training Program. Because the authors had to use our own modelling as part of their evaluation, their cost-effectiveness model cannot be considered independent of our own data. Key passages are as follows:

- "Although our reading of the internal evidence for Animal Ask’s effectiveness is, so far, relatively inconclusive, external evidence suggests that more research in the animal advocacy movement has significant potential to improve the efficacy of the movement. Additionally, at least one external model suggests that the kind of research Animal Ask does can be highly cost-effective. Unfortunately, the validity of many assumptions in Animal Ask’s theory of change remains uncertain…"

- "Animal Ask’s pattern of impact is likely to be relatively hits based, with the majority of their impact stemming from a few exceptionally impactful projects, where they might increase the expected value of a large campaign by 2x or more. As such, it’s very possible that Animal Ask just hasn’t yet achieved a large hit. We believe that Animal Ask’s work is likely to be of high value, however, we also are not convinced that this has yet been borne out by the evidence."

- "For the cost-effectiveness analysis, we used the modelling from Animal Ask’s own assessment of their counterfactual impact to estimate the total impact and costs [...] Under the assumptions of our cost-effectiveness model, Animal Ask’s work improves the lives of farmed animals in a cost-effective way. The spreadsheet model determined a cost-effectiveness of about 39 chicken-years per dollar. The final distribution that accounted for the long tail of potential upside estimated a median impact of 115 chicken-years per dollar. These estimates are still highly uncertain and the results should be interpreted cautiously in light of the limitations of the model that likely inflate the estimated cost-effectiveness. Estimating the counterfactual impact of research is a hard question. Nevertheless, we end this evaluation with a positive view of the work being done by Animal Ask."

- "Regarding transparency, Animal Ask did not achieve any of the transparency benchmarks we assessed. Very little information about their undertakings and impact is available publicly and some things that were available have been since removed from the website. It would not be possible for third-parties to evaluate the impact of Animal Ask without engaging substantially with them. This is a major area for improvement identified by this report."

Since Animal Ask is funded by grants, our work has also been evaluated a number of times by grantmakers. While we don't have specific information, some general themes that have been raised by our funders are:

- (Pro) In theory, Animal Ask's approach of developing strategic campaign asks (e.g. targeting impact, neglectedness, and tractability) helps the animal advocacy movement to ensure that campaign funds are spent as effectively as possible.

- (Pro or con) Many or most of Animal Ask's partner organisations are considered effective or impactful organisations, indicating that Animal Ask could plausibly be working to improve campaigns that are indeed likely to succeed. On the other hand, if these organisations are already doing a good job at strategic decision-making on their own, then Animal Ask might be unnecessary.

- (Con) Animal Ask has not always been very successful at convincing organisations to adopt its recommendations (see viewpoint 1). A low success rate in this area could still be consistent with high impact, if Animal Ask occasionally succeeds in convincing organisations to adopt its recommendations and those few organisations subsequently have an outsized impact, but the data to support this conclusion may not exist.

Issues raised about our work

Before we conclude, we would like to take this opportunity to be transparent about issues that have been raised with our work over time. Most of the feedback we have received has been positive, but we have also heard occasional criticisms of our work. When we notice issues with our work, or when issues are raised by others, we do our best to address those issues if necessary.

During a couple of projects, we did a poor job of communicating with partner organisations. This led to us misunderstanding the organisations' expectations and needs, causing inefficiency in our work and occasionally causing additional work for the partner organisations.

One partner organisation said that Animal Ask should make more proactive use of expertise in the movement, especially on-the-ground expertise (e.g. the perspectives of the movement's lobbyists). A few people have pointed out that Animal Ask could improve the presentation of its reports, especially by correcting typos, linking internal sections, etc. And Aidan Whitfield and Bridget Loughhead's 2023 report (described above) recommended that Animal Ask be more transparent about its impact, as this would make it easier for people to evaluate Animal Ask without engaging significantly with us.

In response to issues around communication and project scoping, we have developed a detailed method for communicating with our partner organisations. We now spend much more effort up front talking with potential partner organisations about whether we can offer any benefit, and we are more proactive about following up with organisations over time to see whether they need any more support.

Conclusion — How should we integrate this evidence?

This analysis considered six major viewpoints offering evidence regarding Animal Ask's organizational impact. The conclusions reached by each viewpoint are as follows:

- 0. The outside view: Even without accounting for the specifics of Animal Ask's work since its incubation by AIM, it is known that any AIM-incubated charity selected at random has a probability of success ranging from 40% to 80%. Upon its initial launch, AIM estimated Animal Ask's probability of success at approximately 5%.

- 1. Impact tracking: Outcomes from Animal Ask's 57 major projects over the past five years have been tracked. The number of ongoing campaigns is promising but ultimately inconclusive given the current rates of positive impact. A clear conclusion should be attainable within the next few years.

- 2. The skeptic's view: Animal Ask can identify only two instances of actual, realized impact directly attributable to its work, and these examples are ambiguous at best. However, this may be due to insufficient time for similar examples to materialize.

- 3. The "meta = leverage" view: A reasonable argument can be made that, as a meta-organization, Animal Ask requires only a modest probability of success to represent a stronger investment than alternative options.

- 4. Money moved ratio: It is difficult to compare this ratio for Animal Ask with those of other meta-organisations, given our different theory of change and because we can apply it to only some of our projects. It does imply that we are likely having enough impact to justify our direct costs, but it does not account for salary sacrifices or the alternative uses of staff time.

- 5. External evaluations of our work: A limited number of external evaluations of Animal Ask have been conducted, but their conclusions have been ambiguous and uncertain.

Three of these viewpoints (1, 2, and 4) converge on similar conclusions: Animal Ask's work has shown promising signs, but the data is currently inconclusive. These findings are complemented by two viewpoints that provide background context: viewpoint 0 suggests that failure was the more likely outcome for Animal Ask, while viewpoint 3 suggests strong prospects for success.

The general implications for Animal Ask are as follows:

- Animal Ask's work has shown some promising signs. However, conclusive determination of Animal Ask's impact will require several more years. A reasonable cut-off point would be approximately 2028–2030, allowing sufficient time for additional data to materialize while maintaining accountability.

- The key information pertains to whether more projects transition into the "positive impact" category between the present and approximately 2028–2030 (see above section, "Viewpoint 1: Impact tracking"). If so, Animal Ask is likely having an impact, and a cost-effectiveness estimate can then be produced. If not, Animal Ask will need to consider a pivot or cease operations.

- Animal Ask may wish to reconsider whether its current staff composition, including a substantial human effort invested in research, remains the optimal choice given recent developments in AI since key hires were made (see below).

Appendix: How does generative AI change Animal Ask's strategy?

The staff composition of Animal Ask may be suboptimal due to developments in AI that have occurred since key hires were made.

A brief timeline of Animal Ask:

- Animal Ask was founded in 2020 by Amy Odene and George Bridgwater, as part of the Ambitious Impact (AIM) Charity Entrepreneurship (CE) incubation programme. Specifically, AIM produced this report in 2019, which recommended the launch of an animal advocacy-oriented research organisation. Amy and George were deemed the most suitable candidates to establish such an organisation.

- In 2021, the prevailing understanding was that effective secondary research necessitated the employment of skilled researchers. Consequently, Amy and George initially hired Jamie Gittins, followed by two full-time researchers, Max Carpendale and Ren Ryba. This marked the beginning of the organisation's current operational structure. Broadly, Animal Ask performs secondary research in collaboration with animal advocacy organisations to facilitate impactful decision-making for animals. This process involves significant human effort dedicated to secondary research.

- In 2022, OpenAI released ChatGPT. In 2025, OpenAI launched ChatGPT Deep Research, and other companies have introduced similar "deep research" tools.

In the three-year period from 2022 to 2025, the landscape of secondary research (and, to a lesser extent, primary research) has undergone significant transformation. It is now possible for an AI agent to generate a report that, at the beginning of the decade, would have required human authorship. While the quality of reports from ChatGPT Deep Research (and its equivalents) is not yet perfect, and the complete replacement of human researchers is not currently advocated, the quality of AI-generated research reports is remarkably high and continues to improve. Crucially, these agents can produce a report in approximately 15 minutes, compared to several weeks for a human.

A key question arises: is the staff composition that was optimal in 2020–21 still optimal in 2025? In 2020–21, delegating most meaningful secondary research tasks to AI agents was prohibitively difficult, leading to the decision to hire human personnel. Currently, delegating numerous secondary research tasks to AI agents is straightforward and routine.

Despite this notable shift in global secondary research methodologies, Animal Ask has largely maintained a consistent structure and approach. The organisation has adopted occasional AI research tools for specific purposes (e.g., brainstorming, certain automated classification tasks, suggesting overlooked sources), while exercising caution and thoughtfulness in their integration. Animal Ask's reports from 2021 were human-authored, as are its reports from 2025.

Perhaps it would have been more strategic for Animal Ask to employ a single researcher (rather than two) and for that researcher to leverage AI tools significantly, thereby freeing up the rest of Animal Ask to concentrate on stakeholder engagement and communication.

It is possible that Animal Ask, despite its substantial investment in human research effort, continues to operate optimally. The purpose of this discussion is not to assert that Animal Ask is making incorrect decisions. Rather, it is to suggest that the optimal composition of Animal Ask may have changed between 2021 and 2025, given the major developments in AI during that period.