EA as Antichrist: Understanding Peter Thiel

By Ben_West🔸 @ 2025-08-06T17:31 (+115)

“The Antichrist probably presents as a great humanitarian, it's redistributive, it's an extremely great philanthropist, as an effective altruist, all of those kinds of things.” - Peter Thiel

Effective altruism has more than its share of critics. But Peter Thiel, the billionaire cofounder of PayPal and Palantir, is unusual in that, when he describes us as the “Antichrist,” he does not mean this as a generic slur but rather as a specific claim that we oppose Jesus Christ in the Second Coming.

This document is intended just as an explication of his views; I neither endorse nor critique them. My attempted summary of his argument:

- The Antichrist will claim to bring peace and safety while actually creating a stagnant global state.

- The only promising technology for avoiding stagnation is AI.

- So the Antichrist will try to slow down and regulate AI in the name of peace and safety.

- EAs want to slow down and regulate AI in the name of peace and safety.

- Therefore, EAs are the Antichrist.

My attempted secular version of his argument:

- Totalitarian governments often rise to power by claiming that they need authority to protect society from some greater threat.

- A way to identify these proto-totalitarian governments is to look for instances where people are claiming that there is a great threat and therefore humanity needs to take some costly action, despite the threat not actually being that big.

- EA (and AI safety in particular) fulfills these requirements:

- The threat of AI isn’t that big because AI will not be that transformative and is likely to be regulated out of existence anyway.

- The regulation of AI will be costly because:

- Stagnation is bad and inevitably leads to conflict because humans will compete over a limited pool of resources.

- Artificial intelligence appears to be the only vector for technological growth in the near-term future.

- Therefore, on the margin, we should be more willing to accept risks from emerging technologies in exchange for greater growth.

“Everyone is worried about the Scylla of Armageddon, nukes, pandemics, AI... We're not worried enough about the Charybdis of one world government, the Antichrist.” - Peter Robinson, summarizing Peter Thiel

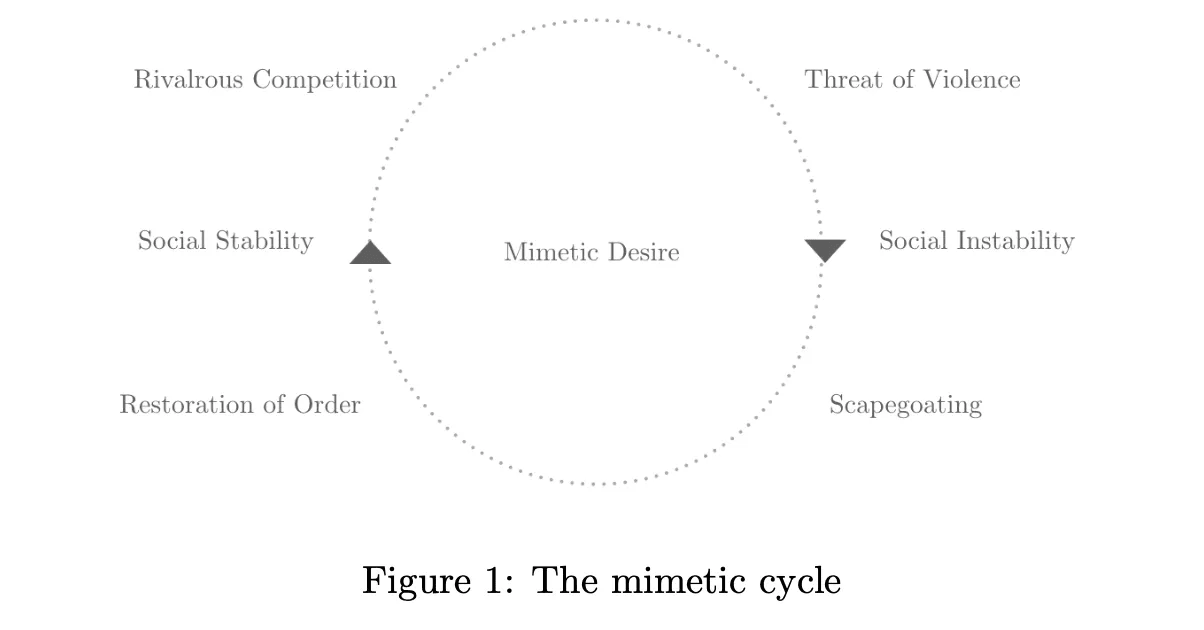

Background: Girardian Mimetic Desires and Scapegoating

Peter Thiel is heavily influenced by the philosopher Rene Girard, to the point of starting a foundation devoted to popularizing Girard’s work. Understanding at least the basics of Girard's views seems important for understanding Thiel's worldview.

Scott Alexander’s Summary

Scott Alexander gives the best short intro to Girard that I could find. The rest of this subsection is quoted from his article.

- Most (all?) human desire is mimetic, ie based on copying other people’s desires. The Bible warns against coveting your neighbor’s stuff, because it knows people’s natural tendencies run that direction. It’s not that your neighbor has particularly good stuff. It’s that you want it because it’s your neighbor’s. Think of two children playing in a room full of toys. One child picks up Toy #368 and starts playing with it. Then the other child tries to take it, ignoring all the hundreds of other toys available. It’s valuable because someone else wants it.

- As with the two children, conflict is inevitable. As the mimetic process intensifies, everyone goes from complicated individuals with individual wants, to copies of their neighbors (ie their desires copy their neighbors’ desires, and they become the sort of people who would have those desires). Alliances form and dissipate. There is a war of all against all. The social fabric starts to collapse.

- Instead of letting the social fabric collapse, everyone suddenly turns their ire on one person, the victim. Maybe this person is a foreigner, or a contrarian, or just ugly. The transition from individuals to a mob reaches a crescendo. The mob, with one will, murders the victim (or maybe just exiles them).

- Then everything is kind of okay! The murder relieves the built-up tension. People feel like they can have their own desires again, and stop coveting their neighbors’ stuff quite so hard, at least for a while. Society does not collapse. If there was no civilization before, maybe people take advantage of this period of relative peace to found civilization.

- (Optional step 5) Seems pretty impressive that killing one victim could cause all this peace and civilization! The former mob declares their victim to be a god. Killing the god was the necessary prerequisite to civilization. Now the god probably reigns in heaven or something. Maybe they die and resurrect every year. Whatever.

- Rinse and repeat.

Girard is against this process. Not just because it involves violent mobs lynching innocent people (although it does), but because that step perpetuates the whole cycle: people greedily desiring whatever their neighbors have, people hating their neighbors, internecine war of all against all. He dubs the process Satan, based partly on the original Hebrew meaning of Satan as “prosecutor”. Satan is the force that tells people that the victim is guilty and deserves to be lynched.

…

So, concludes Girard, the single-victim process is the basis of all ancient civilization. The pagan myths were written by people who had recently been in the mobs. It accurately reflects their understanding of events: there was some kind of looming crisis, we figured out that an ugly foreigner was responsible, we killed him, and that solved the problem (and optionally, he might be a god). Girard insists that this process is approximately infinitely powerful. You can’t just choose to be a good person who isn’t in the mob. Everyone joins in the mob. You can’t even regret being in the mob afterwards. This is some Julian Jaynes-level stuff. Your psyche is completely shaped by the single-victim process, you are caught up in it like a leaf in the wind, and all you can do is write some myths afterwards talking about how very right you were.

So how does the Hebrew Bible escape this failure mode? Girard says divine intervention. God (here meaning literal God, exactly as the average churchgoer understands Him) tried to break the reign of Satan (here meaning metaphorical Satan, the single-victim process) over the Jewish people, by constantly providing them with examples of the single-victim process being bad and ensuring those examples were written up accurately. He got the Israelites to obsess over these examples and worship them as a holy text, trying to hammer the whole thing into their heads. Finally, He sent His only begotten Son as the perfect victim, who would undergo the process in its entirety and have it be written up with unprecedented attention to detail. This extra-compelling example finally penetrated the Israelites’ thick skulls. Although Peter and the other disciples sort of joined the mob in denying Jesus at the beginning, after the Resurrection they started thinking previously barely-thinkable thoughts, like “what if our mob was in the wrong?” and “what if mob violence is bad?”

…

The Son of God brought from Heaven to Earth a single Word of the ineffable Divine speech, and that word was “VICTIM”. At first it was whispered only by a few disciples, so softly it could barely be heard at all. But as missionaries spread the faith, the word grew louder and louder until it became a roar, drowning out all merely-human metaphysics / psychology / ethics.

At some point it no longer needed the Church as a carrier vehicle. Like Oedipus, it killed its parent. The Church, it might seem, is not maximally designed to help victims. It has all these extraneous pieces, like prayers and cathedrals and Popes. And isn’t prayer offensive when we should be engaging in direct revolutionary action to free the oppressed? Aren’t cathedrals a gaudy celebration of wealth, when that money should be used to feed the poor? Doesn’t a celibate clergy create conditions rife for child sexual abuse? As the single divine Word grew louder and louder, Christianity started to seem morally indefensible, and began to wither away like the pagan faiths it supplanted.

Rene Girard is against this. He shares the basic anti-woke fear that all of this ends in some kind of totalitarian communism, or in a bloody war of all against all where everyone accuses everyone else of being some kind of oppressor. But - at least in this book - he seems totally confused how to think about this or what can be done about it.

…

“I see Satan fall like lightning” doesn’t mean Satan dies. It means he falls from Heaven to Earth. He goes from being a semi-incomprehensible Jaynesian spiritual force, to lurking underneath all of our usual human squabbles. Girard does name wokeness as the Antichrist: not in the sense of “anti-Christian”, but in the older sense of anti-, the one that produced the word “antipope”. An antipope is a person who looks like he is the Pope, makes a superficially-credible claim to be the Pope, but is in fact not the Pope, and is opposed to everything that good Popes should stand for.

Key Points

[source]

- Conflict does not (solely) arise from poverty, poor education, or whatever else a bleeding heart liberal would blame. It arises from the fundamental zero-sum nature of mimetic desire, which causes a never-ending cycle of violence.

- The cycle of violence can be reset through the sacrifice of a scapegoat. Unfortunately (?), Christian society does not let us sacrifice random scapegoats, so we are trapped in an ever-escalating cycle, with only poor substitutes like “cancelling celebrities on Twitter” to release pressure. Girard doesn’t know what to do about this.

Background on the Antichrist

“I say this because many deceivers, who do not acknowledge Jesus Christ as coming in the flesh, have gone out into the world. Any such person is the deceiver and the Antichrist.” - 2 John 1:7

There are several common misconceptions (in Thiel’s view) about the Antichrist that are worth addressing before continuing:

- The Antichrist isn’t a single person: “you can think of it as a system where maybe communism is a one world system. So it can be an ideology or a system.” [source] (Thiel’s view is relatively mainstream within Christianity, although there is some disagreement. See below.)

- The Antichrist is not the opposite of Christ but rather one who stands in Christ’s place (the term “false Christ” is found in the gospels and may be more intuitive for modern readers)

- The Antichrist is commonly thought to be the “beast of the sea” from Revelation 13:1 but Revelation never explicitly says the word “Antichrist.” Some Christians believe that the Antichrist is not involved in Armageddon (I am unclear on Thiel’s opinion)

Thiel’s Views

Stagnation

“Then I saw that all toil and all skill in work come from a man’s envy of his neighbor. This also is vanity and a striving after wind.” - Ecclesiastes 4:4

If conflict came from, say, a lack of education, we might hope that by investing in schools we could reduce conflict. But if conflict comes from zero-sum rivalrous goods, then the only way to reduce conflict is to make more goods:

If we go full on with the club of Rome limits to growth, we have this fully Luddite program that again, my intuitions is that that will end very badly politically. It's gonna be a zero sum nasty Malthusian society and it will push towards something that's much more autocratic, much more totalitarian, because the pie won't grow. [source]

Unfortunately, progress has ground to a halt:

When tracked against the admittedly lofty hopes of the 1950s and 1960s, technological progress has fallen short in many domains. Consider the most literal instance of non-acceleration: We are no longer moving faster. The centuries-long acceleration of travel speeds — from ever-faster sailing ships in the 16th through 18th centuries, to the advent of ever-faster railroads in the 19th century, and ever-faster cars and airplanes in the 20th century — reversed with the decommissioning of the Concorde in 2003, to say nothing of the nightmarish delays caused by strikingly low-tech post-9/11 airport-security systems. Today’s advocates of space jets, lunar vacations, and the manned exploration of the solar system appear to hail from another planet. A faded 1964 Popular Science cover story — “Who’ll Fly You at 2,000 m.p.h.?” — barely recalls the dreams of a bygone age. [source]

There is one exception:

By default, computers have become the single great hope for the technological future. The speedup in information technology contrasts dramatically with the slowdown everywhere else. [source]

In particular:

If you don’t have A.I., wow, there’s just nothing going on. [source]

Totalitarianism

“Jesus said to them, ‘The kings of the Gentiles lord it over them; and those who exercise authority over them call themselves Benefactors. But you are not to be like that. Instead, the greatest among you should be like the youngest, and the one who rules like the one who serves.’” - Luke 22:25-26

Thiel is not a fan of governments:

People don’t understand that. They think governments are somehow divinely ordained. So, once you see how satanic the government is, how satanic taxes are, other things besides the governments do, it will have this unraveling effect. [source]

And particularly dislikes globalization:

At a minimum, you need to just always think, there are so many bad forms of globalization, our only chance of getting to a good one is to realize how tough it is. Maybe we should have trade treaties, we should negotiate them. They should always be negotiated by people who don't believe in free trade. [source]

Thiel’s view of the major tradeoff we face, as summarized by Peter Robinson:

Everyone is worried about the Scylla of Armageddon, nukes, pandemics, AI. Everyone is worried about the Scylla of Armageddon. We're not worried enough about the Charybdis of one world government, the Antichrist. [source]

The Antichrist

“What I hope to retrieve is a sense of the stakes, of the urgency of the question, the stakes are really, really high. It seems very dangerous that we're at a place where so few people are concerned about the Antichrist.” - Peter Thiel

We recall from Girard that the Antichrist acts like Christ but actually opposes him: “The Antichrist boasts of bringing to human beings the peace and tolerance that Christianity promised but has failed to deliver.” And Thiel believes that the greatest danger is the institution of a global government which causes stagnation.

What ideology claims to bring peace and safety while actually instigating stagnation and global regulation?

This is 1 Thessalonians 5:3 — the slogan of the Antichrist is ‘peace and safety.’ And we’ve submitted to the F.D.A. — it regulates not just drugs in the U.S. but de facto in the whole world, because the rest of the world defers to the F.D.A. The Nuclear Regulatory Commission effectively regulates nuclear power plants all over the world. You can’t design a modular nuclear reactor and just build it in Argentina. They won’t trust the Argentinian regulators. They’re going to defer to the U.S. [source]

And is there by chance an ideology or system who wishes to globally regulate and slow down AI, the one dim beacon of progress in an otherwise dismal future? (Narrator looks meaningfully into the camera.)

Summary

- The Antichrist will claim to bring peace and safety while actually creating a stagnant global state.

- The only promising technology for avoiding stagnation is AI.

- So the Antichrist will try to slow down and regulate AI in the name of peace and safety.

- EAs want to slow down and regulate AI in the name of peace and safety.

- Therefore, EAs are the Antichrist.

FAQs

“It is the glory of God to conceal a matter; to search out a matter is the glory of kings.” - Proverbs 25:2

Is Thiel unaware of the dangers of AI?

Thiel says:

There are apocalyptic fears around AI that I think deserve to be taken seriously. [source]

He just thinks it’s worth the risk:

I think we have to find some way to talk about these technologies where the technologies are dangerous. But in some sense it's even more dangerous not to do them. It's even more dangerous to have a society where there's zero growth. [source]

That being said, he seems less impressed with the possibility of AI than I expect the median reader is:

Just how big a thing do I think A.I. is? And my stupid answer is: It’s more than a nothing burger, and it’s less than the total transformation of our society. My place holder is that it’s roughly on the scale of the internet in the late ’90s. I’m not sure it’s enough to really end the stagnation. [source]

And even these meagre impacts probably won’t arise:

It’s gonna be outlawed, it’s gonna be regulated, as we have outlawed so many other vectors of innovation. [source]

How would his opinions change if he thought AI was more powerful?

AUDIENCE MEMBER: Thanks for coming. You’ve alluded to a lot of the forces between decentralization and centralization, particularly around AI with forces around the individual. I was wondering if you could talk a little bit more, describe what you think the forces could be that stop AI development, particularly as it relates to the state’s role, or how a politician or another entity could co-opt that force for their own benefit versus the benefit of many.

THIEL: Maybe the premise of your question is what I’d challenge. Why is AI going to be the only technology that matters? If we say there’s only this one big technology that’s going to be developed, and it is going to dominate everything else, that’s already, in a way, conceding a version of the centralization point. So, yes, if we say that it’s all around the next generation of large language models, nothing else matters, then you’ve probably collapsed it to a small number of players. And that’s a future that I find somewhat uncomfortably centralizing, probably. [source]

Why doesn’t Thiel think AI will be that transformative?

Because intelligence isn’t the gating factor:

I think we’ve had a lot of smart people and things have been stuck for other reasons. And so maybe the problems are unsolvable, which is the pessimistic view…

Or maybe it’s these cultural things. So it’s not the individually smart person, but it’s how this fits into our society. Do we tolerate heterodox smart people? Maybe you need heterodox smart people to do crazy experiments. And if the A.I. is just conventionally smart, if we define wokeness — again, wokeness is too ideological — but if you just define it as conformist, maybe that’s not the smartness that’s going to make a difference. [source]

I’m not sure what Thiel thinks about the standard counterarguments to the “culture not intelligence is the gating factor” view (e.g. these).

If Thiel is worried about totalitarianism, is he also worried that AI could enable totalitarianism?

Peter Robinson asks:

Peter Robinson: But you're not singling out AI as a game changer [for Chinese totalitarianism]. You tend to pooh-pooh the notion that AI will change things.

Peter Thiel: Well, I think it's unclear, I think there's always a lot of propaganda around all these buzzwords and so I think it's somewhat exaggerated, but yes of course, there's sort a continuation of the computer revolution where you'll have, you know, more powerful Leninist controls and you can have certain, you know, maybe the farmers can sell the cabbages in the market and you can still have face recognition software that tracks people at all times and all places, and so there's sort of a hybrid thing that might work for longer than we'd like. [source]

His lack of concern here seems related to his expectation that AI will not be that powerful.

I work in AI safety. What does Thiel think I should do?

My guess is that he probably thinks you should quit your job, but barring that you should look for ways to encourage economic progress as much as possible and minimize the extent to which government oversight, and in particular global government oversight, is needed.

I'm not Christian. Is there anything that I should be learning from Thiel's views?

Helen Toner’s In search of a dynamist vision for safe superhuman AI makes many of the same arguments from a secular frame. Lizka Vaintrob’s Notes on dynamism, power, & virtue also contains related excerpts and notes.

My attempted secular version of his argument:

- Totalitarian governments often rise to power by claiming that they need authority to protect society from some greater threat.

- A way to identify these proto-totalitarian governments is to look for instances where people are claiming that there is a great threat and therefore humanity needs to take some costly action, despite the threat not actually being that big.

- EA (and AI safety in particular) fulfills these requirements:

- The threat of AI isn’t that big because AI will not be that transformative and is likely to be regulated out of existence anyway.

- The regulation of AI will be costly because:

- Stagnation is bad and inevitably leads to conflict because humans will compete over a limited pool of resources.

- Artificial intelligence appears to be the only vector for technological growth in the near-term future.

- Therefore, on the margin, we should be more willing to accept risks from emerging technologies in exchange for greater growth.

How mainstream is Peter Thiel's interpretation of the Bible?

Girard’s theological work has been positively reviewed by respected theologians, but remains on the fringe. His theory of atonement as being related to ending the scapegoating cycle is unusual enough that it isn't even mentioned on the Wikipedia list of theories of atonement (although it should be noted that this doesn't necessarily make it incompatible with other theories of atonement).

Thiel’s anarchic views are unusual, though not unheard of. Notably, Leo Tolstoy’s The Kingdom of God Is Within You argued for what is now called “Christian Anarchism.” (Thiel specifically cites the fact that Satan tempted Jesus with the kingdoms of earth as evidence that governments are satanic, a common justification for Christian anarchism.) However, Romans 13:1-7, 1 Peter 2:13-14 and other passages are usually interpreted to the contrary; e.g. the Cambridge Bible says of Romans 13:1: “In this passage it is stated, as a primary truth of human society, that civil authority is, as such, a Divine institution. Whatever may be the details of error or of wrong in its exercise, it is nevertheless, even at its worst, so vastly better than anarchy, that it forms a main instrument and ordinance of the will of God.”

Many theological traditions make a distinction between the capital-A Antichrist as a single figure and small-a antichrists. Those that don't make the distinction would deny the existence of a capital-A Antichrist and assume that there are just various degrees of small-a antichrists as anti-Christian individuals and institutions. Thiel’s views seem to fit within this spectrum.

As a relatively minor point, Thiel repeatedly cites 1 Thessalonians 5:3 as evidence that “the slogan of the Antichrist is ‘peace and safety’,” but the usual interpretation of this verse is just that most people will think that everything is stable and will be surprised by Jesus' return, not that the Antichrist in particular is the one promising peace and safety.

The ESV Study Bible suggests cross referencing 1 Thessalonians 5:3 with Jeremiah 6:14, which is summarized as “[the false prophets] met Jeremiah’s warnings of coming evil by the assurance that all was well, and that invasion and conquest were far-off dangers.” I expect many safety advocates feel like they are the ones warning of coming evil and the Thiel side is the one giving false assurance that all is well. (Thiel would maybe not be surprised: “There’s always a risk that the katechon becomes the Antichrist.”)

I would like to thank the following for contributions to this document: Vesa Hautala, Daniel Filan, JD Bauman, EA for Christians, Davis Kingsley. I sent a draft of this post to Thiel, who (reasonably) did not respond; probably some errors remain.

Jackson Wagner @ 2025-08-07T01:26 (+51)

Nice post! I am a pretty close follower of the Thiel Cinematic Universe (ie his various interviews, essays, etc), so here are a ton of sprawling, rambly thoughts. I tried to put my best material first, so feel free to stop reading whenever!

- There is a pretty good Girard documentary (free to watch on YouTube, likely funded in part by Thiel and friends) that came out recently.

- Unrelated to Thiel or Girard, but if you enjoy that documentary, and you crave more content in the niche genre of "Christian theology that is also potentially-groundbreaking sociological theory explaining political & cultural dynamics", then I highly recommend this Richard Ngo blog post, about preference falsification, decision theory, and Kierkegaard's concept of a "leap of faith" from his book Fear and Trembling.

- I think Peter Thiel's beef with EA is broader and deeper than just the AI-specific issue of "EA wants to regulate AI, and regulating AI is the antichrist, therefore EA is the antichrist". Consider this bit of an interview from three years ago where he's getting really spooked about Bostrom's "Vulnerable World Hypothesis" paper (wherein Bostrom indeed states that an extremely pervasive, hitherto-unseen form of technologically-enabled totalitarianism might be necessary if humanity is to survive the invention of some hypothetical, extremely-dangerous technologies).

- Thiel definitely thinks that EA embodies a general tendency in society (a tendency which has been dominant since the 1970s, ie the environmentalist and anti-nuclear movements) to shut down new technologies out of fear.

- It's unclear if he thinks EA is cynically executing a fear-of-technology-themed strategy to influence governments, gain power, and do antichrist things itself... Or if he thinks EA is merely a useful-idiot, sincerely motivated by its fear of technology (but in a way that unwittingly makes society worse and plays into the hands of would-be antichrists who co-opt EA ideas / efforts / etc to gain power).

- I think Thiel is also personally quite motivated (understandably) by wanting to avoid death. This obviously relates to a kind of accelerationist take on AI that sets him against EA, but again, there's a deeper philosophical difference here. Classic Yudkowsky essays (and a memorable Bostrom short story, video adaptation here) share this strident anti-death, pro-medical-progress attitude (cryonics, etc), as do some philanthropists like Vitalik Buterin. But these days, you don't hear so much about "FDA delenda est" or anti-aging research from effective altruism. Perhaps there are valid reasons for this (low tractability, perhaps). But some of the arguments given by EAs against aging's importance are a little weak, IMO (more on this later) -- in Thiel's view, maybe suspiciously weak. This is a weird thing to say, but I think to Thiel, EA looks like a fundamentally statist / fascist ideology, insofar as it is seeking to place the state in a position of central importance, with human individuality / agency / consciousness pushed aside.

- Somebody like Thiel might say that the whole concept of "longtermism" is about suppressing the individual (and their desires for immortality / freedom / whatever), instead controlling society and optimizing (slowing) the path of technological development for the sake of overall future civilization (aka, the state). One might cite books like Ernest Becker's The Denial of Death (which claims, per that Wikipedia page, that "human civilization is a defense mechanism against the knowledge of our mortality" and that people manage their "death anxiety" by pouring their efforts into an "immortal project" -- which "enables the individual to imagine at least some vestige of meaning continuing beyond their own lifespan"). In this modern age, when heroic cultural narratives and religious delusions no longer do the job, and when building LITERAL giant pyramids in the desert for the glorification of the state is out of style, what better a project than "longtermism" with which to harness individuals' energy while keeping them under control by providing comfortable relief from their death-anxiety?

- Consider the standard EA version of total hedonic utilitarianism (not always mentioned directly, but often present in EA thinking/analysis as a convenient background assumption), wherein there is no difference between individuals (10 people living 40 years is the same number of QALYs as 5 people living 80 years), no inherent notion of fundamental human rights or freedoms (perhaps instead you should content yourself with a kind of standard UBI of positively-valenced qualia), a kind of Rawlsian tendency towards communistic redistribution rather than traditional property-ownership and inequality, no accounting for Nietzschean-style aesthetics of virtue and excellence, et cetera. Utilitarianism as it is usually talked about has a bit of a "live in the pod, eat the bugs" vibe.

- For the secular version of Thiel's argument more directly, see Peter Thiel's speech on "Anti-Anti-Anti-Anti Classical Liberalism", in which Thiel ascends what Nick Bostrom would call a "deliberation ladder of crucial considerations" for and against classical liberalism (really more like "universities"), which (if I recall correctly -- and note I'm describing not necessarily agreeing) goes something like this:

- Classical liberalism (and in particular, universities / academia / other institutions driving scientific progress) are good for all the usual reasons

- Anti: But look at all this crazy wokeness and postmodernism and other forms of absurd sophistry, the universities are so corrupt with these dumb ideologies, look at all this waste and all this leftist madness. If classical liberalism inexorably led to this mess, then classical liberalism has got to go.

- Anti-anti: Okay, but actually all that woke madness and sophistry is mostly confined to the humanities; things are not so bad in the sciences. Harvard et al might emit some crazy noises about BLM or Gaza, but there are lots of quiet science/engineering/etc departments slowly pushing forward cures for diseases, progress towards fusion power, etc. (And note that the sciences have been growing dramatically as a percentage of all college graduates! Humanities are basically withering away due to their own irrelevance.) Zooming out from the universities, maybe you could make a similar point about "our politics is full of insane woke / MAGA madness, but beneath all that shouting you find that the stock market is up, capitalism is humming along better than ever, etc". So, classical liberalism is good.

- Anti-anti-anti: But actually, all that scientific progress is ultimately bad, because although it's improving our standard of living here and now, ultimately it's leading us into terrible existential risks (as we already experience with nuclear weapons, and perhaps soon with pandemics, AI, etc).

- Anti-anti-anti-anti: Okay, but you're forgetting some things on your list of risks to worry about. Consider that 1. totalitarian one-world government is about as likely as any of those existential risks, and classical liberalism / technological progress is a good defense against that. And 2. zero technological progress isn't a safe state, but would be a horrible zero-growth regime that would cause people to turn against each other, start wars, etc. So, the necessity of technological progress for avoiding stable totalitarianism means that classical liberalism / universities / etc are ultimately good.

- I think part of the reason for Thiel talking about the antichrist (beyond his presumably sincere belief in this stuff, on whatever level of metaphoricalness vs literalness he believes in Christianity) is that he probably wants to culturally normalize the use of the term "antichrist" to refer metaphorically to stable totalitarianism, in the same sense that lots of people talk about "armageddon" in a totally secular context to refer to existential risks like nuclear war. In Thiel's view, the very fact that "armageddon" is totally normal, serious-person vocabulary, but "antichrist" connotes a ranting conspiracy theorist, is yet more evidence of society's unhealthy tilt between the Scylla of extinction risk and the Charybdis of stable totalitarianism.

As for my personal take on Thiel's views -- I'm often disappointed at the sloppiness (blunt-ness? or low-decoupling-ness?) of his criticisms, which attack EA for having a problematic "vibe" and political alignment, but without digging into any specific technical points of disagreement. But I do think some of his higher-level, vibe-based critiques have a point.

- Stable totalitarianism is pretty obviously a big deal, yet it goes essentially ignored by mainstream EA. (80K gives it just a 0.3% chance of happening over the next century? I feel like AI-enabled coups alone are surely above 0.3%, and that's just one path of several!) Much of the stable-totalitarian-related discussion I see around here consists of left-coded things like "fighting misinformation" (presumably via a mix of censorship and targeted "education" on certain topics) and "protecting democracy" (often explicitly motivated by the desire to protect people from electing right-wing populists like Trump).

- Where is the emphasis on empowering the human individual, growing human freedom, and trying to make current human freedoms more resilient and robust? I can sort of imagine a more liberty-focused EA that puts more emphasis on things like abundance-agenda deregulatory reforms, charter cities / network states, lobbying for US fiscal/monetary policy to optimize for long-run economic growth, boosting privacy-enhancing technologies (encryption of all sorts, including Vitalik-style cryptocurrency stuff, etc), delenda-ing the FDA, full steam ahead on technology for superbabies and BCIs / IQ enhancement, pushing for very liberal rules on high-skill immigration, et cetera. And indeed, a lot of this stuff is sorta present in EA to some degree. But, with the recent exception of an Ezra-Klein-endorsed abundance agenda, it kinda lives around the periphery; it isn't the dominant vibe. Most of this stuff is probably just way lower importance / neglectedness / tractability than the existing cause areas, of course -- not all cause areas can be the most important cause area! But I do think there is a bit of a blind spot here.

- The one thing that I think should clearly be a much bigger deal within EA is object-level attempts to minimize stable totalitarianism -- it seems to me this should perhaps be on a par with EA's focus on biosecurity (or at the very least, nuclear war), but IRL it gets much less attention. Consider the huge emphasis devoted to mapping out the possible long-term future of AI -- people are even doing wacky stuff like figuring out what kind of space-governance laws we should pass to assign ownership of distant galaxies, on the off chance that our superintelligences end up with lawful-neutral alignment and decide to respect UN treaties. Where is the similar attention on mapping out all the laws we should be passing and precedents we should be setting that will help prevent stable totalitarianism in the future?

- Like maybe passing laws mandating that brain-computer-interface data be encrypted by default?

- Or a law clarifying that emulated human minds have the same rights as biological humans?

- Or a law attempting to ban the use of LLMs for NSA-style mass surveillance / censorship purposes, despite the fact that LLMs are obviously extremely well-suited for these tasks?

- Maybe somebody should hire Rethink / Forethought / etc to map out various paths that might lead to a stable-totalitarian world government and rank them by plausibility -- AI-enabled coup? Or a more traditional slow slide into socialism like Thiel et al are always on about? Or the most traditional path of all, via some charismatic right-wing dictator blitzkrieging everyone? Does it start in one nation and overrun other nations' opposition, or emerge (as Thiel seems to imply) via a kind of loose global consensus akin to how lots of different nations had weirdly similar policy responses to Covid-19 (and to nuclear power)? Does it route through the development of certain new technologies like extremely good AI-powered lie-detection, or AI superpersuasion, or autonomous weapons, etc?

- As far as I can tell, this isn't really a cause area within EA (aside from a very nascent and still very small amount of attention placed on AI-enabled coups specifically).

- It does feel like there are a lot of potential cause areas -- spicy stuff like superbabies, climate geoengineering, perhaps some longevity or BCI-related ideas, but also just "any slightly right-coded policy work" that EA is forced to avoid for essentially PR reasons, because they don't fit the international liberal zeitgeist. To be clear, I think it's extremely understandable that the literal organizations Good Ventures and Open Philanthropy are constrained in this way, and I think they are probably making absolutely the right decision to avoid funding this stuff. But I think it's a shame that the wider movement / idea of "effective altruism" is so easily tugged around by the PR constraints that OP/GV have to operate under. I think it's a shame that EA hasn't been able to spin up some "EA-adjacent" orgs (besides, idk, ACX grants) that specialize in some of this more-controversial stuff. (Although maybe this is already happening on a larger scale than I suspect -- naturally, controversial projects would try to keep a low profile.)

- I do think that EA is perhaps underrating longevity and other human-enhancement tech as a cause area. Although unlike with stable totalitarianism, I don't think that it's underrating the cause area SO MUCH that longevity actually deserves to be a top cause area.

- But if we ever feel like it's suddenly a top priority to try and appease Thiel and the accelerationists, and putting more money into mere democrat-approved-abundance-agenda stuff doesn't seem to be doing the trick, it might nevertheless be worthwhile from a cynical PR perspective to put some token effort into this transhumanist stuff (and some of the "human-liberty-promoting" ideas from earlier), to convince them that we aren't actually the antichrist.

Ben_West🔸 @ 2025-08-07T15:39 (+10)

Thanks! Do you know if there is anywhere he has engaged more seriously with the possibility that AI could actually be transformative? His "maybe heterodox thinking matters" statement I quoted above feels like relatively superficial engagement with the topic.

Jackson Wagner @ 2025-08-07T19:38 (+10)

He certainly seems very familiar with the arguments involved, the idea of superintelligence, etc, even if he disagrees in some ways (hard to tell exactly which ways), and seems really averse to talking about AI in the familiar rationalist style (scaling laws, AI timelines, p-dooms, etc), and kinda thinks about everything in his characteristic style: vague, vibes- and political-alignment-based, lots of jumping around and creative metaphors, not interested in detailed chains of technical arguments.

- Here is a Wired article tracing Peter Thiel's early funding of the Singularity Institute, way back in 2005. And here's a talk from two years ago where he is talking about his early involvement with the Singularity Institute, then mocking the bay-area rationalist community for devolving from a proper transhumanist movement into a "burning man, hippie luddite" movement (not accurate IMO!), culminating in the hyper-pessimism of Yudkowsky's "Death with Dignity" essay.

- When he is bashing EA's focus on existential risk (like in that "anti-anti-anti-anti classical liberalism" presentation), he doesn't do what most normal people do and say that existential risk is a big fat nothingburger. Instead, he acknowledges that existential risk is at least somewhat real (even if people have exaggerated fears about it -- eg, he relates somewhere that people should have been "afraid of the blast" from nuclear weapons, but instead became "afraid of the radiation", which leads them to ban nuclear power), but that the real existential risk is counterbalanced by the urgent need to avoid stagnation and one-world-government (and presumably, albeit usually unstated, the need to race ahead to achieve transhumanist benefits like immortality).

- His whole recent schtick about "Why can we talk about the existential-risk / AI apocalypse, but not the stable-totalitarian / stagnation Antichrist?", which of course places him squarely in the "techno-optimist" / accelerationist part of the tech right, is actually quite the pivot from a few years ago, when one of his most common catchphrases went along the lines of "If technologies can have political alignments, since everyone admits that cryptocurrency is libertarian, then why isn't it okay to say that AI is communist?" (Here is one example.) Back then he seemed mainly focused on an (understandable) worry about the potential for AI to be a hugely power-centralizing technology, performing censorship and tracking individuals' behavior and so forth (for example, how China uses facial and gait recognition against Hong Kong protesters, Xinjiang residents, etc).

- (Thiel's positions on AI, on government spying, on libertarianism, etc, coexist in a complex and uneasy way with the fact that of course he is a co-founder of Palantir, the premier AI-enabled-government-spying corporation, which he claims to have founded in order to "reduce terrorism while preserving civil liberties".)

- Thiel describing a 2024 conversation with Elon Musk and Demis Hassabis, where Elon is saying "I'm working on going to Mars, it's the most important project in the world" and Demis argues "actually my project is the most important in the world; my superintelligence will change everything, and it will follow you to Mars". (This is in the context of Thiel's long pivot from libertarianism to a darker strain of conservatism / neoreaction, having realized that "there's nowhere else to go" to escape mainstream culture/civilization, that you can't escape to outer space, cyberspace, or the oceans as he once hoped, but can only stay and fight to seize control of the one future (hence all these musings about Carl Schmitt etc that make me feel wary he is going to be egging on JD Vance to try and auto-coup the government).)

- Followed by (correctly IMO) mocking Elon for being worried about the budget deficit, which doesn't make any sense if you really are fully confident that superintelligent AI is right around the corner as Elon claims.

A couple more quotes on the subject of superintelligence from the recent Ross Douthat conversation (transcript, video):

Thiel claims to be one of those people who (very wrongly IMO) thinks that AI might indeed achieve 3000 IQ, but that it'll turn out being 3000 IQ doesn't actually help you do amazing things like design nanotech or take over the world:

PETER THIEL: It’s probably a Silicon Valley ideology and maybe, maybe in a weird way it’s more liberal than a conservative thing, but people are really fixated on IQ in Silicon Valley and that it’s all about smart people. And if you have more smart people, they’ll do great things. And then the economics anti IQ argument is that people actually do worse. The smarter they are, the worse they do. And they, you know, it’s just, they don’t know how to apply it, or our society doesn’t know what to do with them and they don’t fit in. And so that suggests that the gating factor isn’t IQ, but something, you know, that’s deeply wrong with our society.

ROSS DOUTHAT: So is that a limit on intelligence or a problem of the sort of personality types human superintelligence creates? I mean, I’m very sympathetic to the idea and I made this case when I did an episode of this, of this podcast with a sort of AI accelerationist that just throwing, that certain problems can just be solved if you ramp up intelligence. It’s like, we ramp up intelligence and boom, Alzheimer’s is solved. We ramp up intelligence and the AI can, you know, figure out the automation process that builds you a billion robots overnight. I, I’m an intelligent skeptic in the sense I don’t think, yeah, I think you probably have limits.

PETER THIEL: It’s, it’s, it’s hard to prove one way or it’s always hard to prove these things.

Thiel talks about transhumanism for a bit (albeit devolves into making fun of transgender people for being insufficiently ambitious) -- see here for the Dank EA Meme version of this exchange:

ROSS DOUTHAT: But the world of AI is clearly filled with people who at the very least seem to have a more utopian, transformative, whatever word you want to call it, view of the technology than you’re expressing here, and you were mentioned earlier the idea that the modern world used to promise radical life extension and doesn’t anymore. It seems very clear to me that a number of people deeply involved in artificial intelligence see it as a kind of mechanism for transhumanism, for transcendence of our mortal flesh and either some kind of creation of a successor species, or some kind of merger of mind and machine. Do you think that’s just all kind of irrelevant fantasy? Or do you think it’s just hype? Do you think people are trying to raise money by pretending that we’re going to build a machine god? Is it delusion? Is it something you worry about? I think you, you would prefer the human race to endure, right? You’re hesitating.

PETER THIEL: I don’t know. I, I would... I would...

ROSS DOUTHAT: This is a long hesitation.

PETER THIEL: There’s so many questions and pushes.

ROSS DOUTHAT: Should the human race survive?

PETER THIEL: Yes.

ROSS DOUTHAT: Okay.

PETER THIEL: But, but I, I also would. I, I also would like us to, to radically solve these problems. Transhumanism is this, you know, the ideal was this radical transformation where your human natural body gets transformed into an immortal body. And there’s a critique of, let’s say, the trans people in a sexual context or, I don’t know, transvestite is someone who changes their clothes and cross dresses, and a transsexual is someone where you change your, I don’t know, penis into a vagina. And we can then debate how well those surgeries work, but we want more transformation than that. The critique is not that it’s weird and unnatural. It’s man, it’s so pathetically little. And okay, we want more than cross dressing or changing your sex organs. We want you to be able to change your heart and change your mind and change your whole body.

- Making fun of Elon for simultaneously obsessing over budget deficits while also claiming to be confident that a superintelligence-powered industrial explosion is right around the corner:

PETER THIEL: A conversation I had with Elon a few weeks ago about this was, he said, “We’re going to have a billion humanoid robots in the US in 10 years.” And I said, “Well, if that’s true, you don’t need to worry about the budget deficits because we’re going to have so much growth. The growth will take care of this.” And then, well, he’s still worried about the budget deficits. And then this doesn’t prove that he doesn’t believe in the billion robots, but it suggests that maybe he hasn’t thought it through or that he doesn’t think it’s going to be as transformative economically, or that there are big error bars around it.

Matrice Jacobine @ 2025-08-09T00:33 (+9)

Thiel describing a 2024 conversation with Elon Musk and Demis Hassabis, where Elon is saying "I'm working on going to Mars, it's the most important project in the world" and Demis argues "actually my project is the most important in the world; my superintelligence will change everything, and it will follow you to Mars". (This is in the context of Thiel's long pivot from libertarianism to a darker strain of conservatism / neoreaction, having realized that "there's nowhere else to go" to escape mainstream culture/civilization, that you can't escape to outer space, cyberspace, or the oceans as he once hoped, but can only stay and fight to seize control of the one future (hence all these musings about Carl Schmitt etc that make me feel wary he is going to be egging on JD Vance to try and auto-coup the government).)

FTR: while Thiel has already claimed this version before, the more common version (e.g. here, here, here from Hassabis' mouth, and more obliquely here in his lawsuit against Altman) is that Hassabis was warning Musk about existential risk from unaligned AGI, not threatening him with his own personally aligned AGI. However, this interpretation is interestingly resonant with Elon Musk's creation of OpenAI being motivated by fear of Hassabis becoming an AGI dictator (a fear his co-founders apparently shared). It is certainly an interesting hypothesis that Thiel and Musk together engineered, over a decade, both the AGI race and global democratic backsliding, wholly motivated by the same single one-sentence possible slight by Hassabis in 2012.

Larks @ 2025-08-08T14:24 (+6)

Thanks a lot for all your comments on this post, I found them very informative. (And the top-level post from Ben as well).

tlevin @ 2025-08-07T15:57 (+6)

I think this picture of EA ignoring stable totalitarianism is missing the longtime focus on China.

Also, see this thread on Open Phil's ability to support right-of-center policy work.

Charlie_Guthmann @ 2025-08-07T18:38 (+5)

This was really insightful but I'm curious if you assign much or any probability to the idea that he doesn't actually have any strong ethical view(s). Seems plausible that he likes talking and sounding smart, has some weakly held views, but when the chips hit the table will mainly just optimize for money and power?

Jackson Wagner @ 2025-08-07T21:26 (+11)

I think Thiel really does have a variety of strongly held views. Whether these are "ethical" views, ie views that are ultimately motivated by moral considerations... idk, kinda depends on what you are willing to certify as "ethical".

I think you could build a decent simplified model of Thiel's motivations (although this would be crediting him with WAY more coherence and single-mindedness than he or anyone else really has IMO) by imagining he is totally selfishly focused on obtaining transhumanist benefits (immortality, etc) for himself, but realizes that even if he becomes one of the richest people on the planet, you obviously can't just go out and buy immortality, or even pay for a successful immortality research program -- it's too expensive, there are too many regulatory roadblocks to progress, etc. You need to create a whole society that is pro-freedom and pro-property-rights (so it's a pleasant, secure place for you to live) and radically pro-progress. Realistically it's not possible to just create an offshoot society, like a charter city in the ocean or a new country on Mars (the other countries will mess with you and shut you down). So this means that just to get a personal benefit to yourself, you actually have to influence the entire trajectory of civilization, avoiding various apocalyptic outcomes along the way (nuclear war, stable totalitarianism), etc. Is this an "ethical" view?

- Obviously, creating a utopian society and defeating death would create huge positive externalities for all of humanity, not just Mr Thiel.

- (Although longtermists would object that this course of action is net-negative from an impartial utilitarian perspective -- he's short-changing unborn future generations of humanity, running a higher level of extinction risk in order to sprint to grab the transhumanist benefits within his own lifetime.)

- But if the positive externalities are just a side-benefit, and the main motivation is the personal benefit, then it is a selfish rather than altruistic view. (Can a selfish desire for personal improvement and transcendence still be "ethical", if you're not making other people worse off?)

- Would Thiel press a button to destroy the whole world if it meant he personally got to live forever? I would guess he wouldn't, which would go to show that this simplified monomaniacal model of his motivations is wrong, and that there's at least a substantial amount of altruistic motivation in there.

I also think that lots of big, world-spanning goals (including altruistic things like "minimize existential risk to civilization", or "minimize animal suffering", or "make humanity an interplanetary species") often problematically route through the convergent instrumental goal of "optimize for money and power", while also being sincerely-held views. And none more so than a personal quest for immortality! But he doesn't strike me as optimising for power-over-others as a sadistic goal for its own sake (as it may have been for, say, Stalin) -- he seems to have such a strong belief in the importance of individual human freedom and agency that it would be surprising if he's secretly dreaming of enslaving everyone and making them do his bidding. (Rather, he consistently sees himself as trying to help the world throw off the shackles of a stultifying, controlling, anti-progress regime.)

But getting away from this big-picture philosophy, Thiel also seems to have lots of views which, although they technically fit nicely into the overall "perfect rational selfishness" model above, seem to at least in part be fueled by an ethical sense of anger at the injustice of the world. For example, sometime in the past few years Thiel started becoming a huge Georgist. (Disclaimer: I myself am a huge Georgist, and I think it always reflects well on people, both morally and in terms of the quality of their world-models / ability to discern truth.)

- Here is a video lecture where Thiel spends half an hour at the National Conservatism Conference, desperately begging Republicans to stop just being obsessed with culture-war chum and instead learn a little bit about WHY California is so messed up (ie, the housing market), and therefore REALIZE that they need to pass a ton of "Yimby" laws right away in all the red states, or else red-state housing markets will soon become just as dysfunctional as California's, and hurt middle class and poor people there just like they do in California. There is some mean-spiritedness and a lot of Republican in-group signalling throughout the video (like when he is mocking the 2020 dem presidential primary candidates), but fundamentally, giving a speech trying to save the American middle class by Yimby-pilling the Republicans seems like a very good thing, potentially motivated by sincere moral belief that ordinary people shouldn't be squeezed by artificial scarcity creating insane rents.

- Here's a short, two-minute video where Thiel is basically just spreading the Good News about Henry George, wherein he says that housing markets in anglosphere countries are a NIMBY catastrophe which has been "a massive hit to the lower-middle class and to young people".

Thiel's Georgism ties into some broader ideas about a broken "inter-generational compact", whereby the boomer generation has unjustly stolen from younger generations via housing scarcity pushing up rents, via ever-growing Medicare / social-security spending and growing government debt, via shutting down technological progress in favor of safetyism, via a "corrupt" higher-education system that charges ever-higher tuition while not providing good enough value for money, and various other means.

The cynical interpretation of this is that this is just a piece of his overall project to "make the world safe for capitalism", which in turn is part of his overall selfish motivation: He realizes that young people are turning socialist because the capitalist system seems broken to them. It seems broken to them, not because ALL of capitalism is actually corrupt, but specifically because they are getting unjustly scammed by NIMBYism. So he figures that to save capitalism from being overthrown by angry millennials voting for Bernie, we need to make America YIMBY so that the system finally works for young people and they have a stake in the system. (This is broadly correct analysis IMO.) Somewhere I remember Thiel explicitly explaining this (ie, saying "we need to repair the intergenerational compact so all these young people stop turning socialist"), but unfortunately I don't remember where he said this so I don't have a link.

So you could say, "Aha! It's really just selfishness all the way down, the guy is basically Voldemort." But, idk... altruistically trying to save young people from the scourge of high housing prices seems like going pretty far out of your way if your motivations are entirely selfish. It seems much more straightforwardly motivated by caring about justice and about individual freedom, and wanting to create a utopian world of maximally meritocratic, dynamic capitalism rather than a world of stagnant rent-seeking that crushes individual human agency.

Matrice Jacobine @ 2025-08-08T01:04 (+3)

Somewhere I remember Thiel explicitly explaining this (ie, saying "we need to repair the intergenerational compact so all these young people stop turning socialist"), but unfortunately I don't remember where he said this so I don't have a link.

https://www.techemails.com/p/mark-zuckerberg-peter-thiel-millennials

Jonas Hallgren 🔸 @ 2025-08-14T20:53 (+1)

I'm curious about the link that goes to AI-enabled coups and it isn't working, could you perhaps relink it?

Jackson Wagner @ 2025-08-14T21:09 (+2)

Sorry about that! I think I just intended to link to the same place I did for my earlier use of the phrase "AI-enabled coups", namely this Forethought report by Tom Davidson and pals, subtitled "How a Small Group Could Use AI to Seize Power": https://www.forethought.org/research/ai-enabled-coups-how-a-small-group-could-use-ai-to-seize-power

But also relevant to the subject is this Astral Codex Ten post about who should control an LLM's "spec": https://www.astralcodexten.com/p/deliberative-alignment-and-the-spec

The "AI 2027" scenario is pretty aggressive on timelines, but also features a lot of detailed reasoning about potential power-struggles over control of transformative AI which feels relevant to thinking about coup scenarios. (Or classic AI takeover scenarios, for that matter. Or broader, coup-adjacent / non-coup-authoritarianism scenarios of the sort Thiel seems to be worried about, where instead of getting taken over unexpectedly by China, Trump, or etc, today's dominant western liberal institutions themselves slowly become more rigid and controlling.)

For some of the shenanigans that real-world AI companies are pulling today, see the 80,000 Hours podcast on OpenAI's clever ploys to do away with its non-profit structure, or Zvi Mowshowitz on xAI's embarrassingly blunt, totally not-thought-through attempts to manipulate Grok's behavior on various political issues (or a similar, earlier incident at Google).

barkbellowroar @ 2025-08-19T01:24 (+1)

I'm relieved to see someone bring up the coup in all of this - I think there is a lot of focus in this post on what Thiel believes or is "thinking" (which makes sense for a community founded on philosophy) versus what Thiel is "doing" (which is more of an entrepreneurship / Silicon Valley approach). We can dig into the 'what led him down this path' later imo but the more important point is that he's rich, powerful and making moves. Stopping or slowing those moves is the first step at this point... I definitely think the 2027 hype is not about reaching AGI but about groups vying for control and OpenAI has been making not so subtle moves toward that positioning...

[redacted]

Jason @ 2025-08-09T09:20 (+40)

Does anyone else feel there's a vaguely missing mood here?

At the outset, I think EAs unfortunately do need to be aware that a powerful person is claiming that they are the Antichrist. And so I think Ben's post is a useful public service. I can also appreciate the reasons one might want to write such a post as "just as an explication of [Thiel's] views" without critique. I don't even disagree with those reasons.

And yet . . . if you put these same ideas into the mouth of a random person, I suspect the vast majority of the Forum readership and commentariat would dismiss them as ridiculous ramblings, the same way we would treat the speech of your average person holding forth about the end of days on an urban street corner. I question whether any of us would take Thiel's attempts at theology (or much of anything else) seriously -- or try to massage them to make any sort of sense -- if he were not a rich and powerful person.[1] To the extent that we're analyzing what Thiel is selling with any degree of seriousness because of his wealth and influence rather than the merit of his ideas, does that pose any epistemic concerns?

To my (Christian) ears, this should be taken about as seriously as a major investor in the Coca-Cola Company spouting off that Pepsi is the work of Antichrist. Or that Obama was/is the Antichrist -- sadly, this one was a fairly common view during his presidency. Even if one doesn't care about the theological side of this, an individual's claims that his ideological and/or financial opponents are somehow senior-ranking minions of Satan sound like a fairly good reason to ordinarily be dismissive of that person's message. It doesn't exactly suggest that the odds of finding gold nuggets buried in the sludge are worth the trouble of the search.

[1] For what it's worth, I am also confident that if you presented the idea that AI-cautious people were the Antichrist without attributing it to Thiel to a large group of ordinary Christians in the pews, or to a group of seminary professors, the near-universal response would range from puzzlement to hysterical laughter. So the fact that the EA audience is disproportionately secular isn't doing all the work here.

Ben_West🔸 @ 2025-08-09T19:15 (+9)

In a similar article on LessWrong, Ben Pace says the following, which resonates with me:

Hearing him talk about Effective Altruists brought to mind this paragraph from SlateStarCodex:

One is reminded of the old joke about the Nazi papers. The rabbi catches an old Jewish man reading the Nazi newspaper and demands to know how he could look at such garbage. The man answers “When I read our Jewish newspapers, the news is so depressing – oppression, death, genocide! But here, everything is great! We control the banks, we control the media. Why, just yesterday they said we had a plan to kick the Gentiles out of Germany entirely!”

I was somewhat pleasantly surprised to learn that one of the people who has been a major investor in AI companies and a major political intellectual influence toward tech and scientific acceleration believes that "the scary, dystopian AI narrative is way more compelling" and of "the Effective Altruist people" says "I think this time around they are winning the arguments".

Winning the arguments is the primary mechanism by which I wish to change the world.

David T @ 2025-08-09T19:03 (+8)

Yeah. Frankly of all the criticisms of EA that might easily be turned into something more substantial, accurate and useful with a little bit of reframing, a liberalism-hating surveillance-tech investor dressing his fundamental loathing of its principles and opposition to the limits it might impose on tech he actively promotes in pretentious pseudo-Christian allusion seems least likely to add any value. [1]

Doesn't take much searching of the forum to find outsider criticisms of aspects of the AI safety movement which are a little less oblique than comparing it with the Antichrist, written by people without conflicts of interest who've probably never written anything as dumb as this, most of which seem to get less sympathetic treatment.

- ^

and I say that as someone more in agreement with the selected Thiel pronouncements on how impactful and risky near-term AI is likely to be than the average EA

Larks @ 2025-08-10T03:46 (+7)

And yet . . . if you put these same ideas into the mouth of a random person, I suspect the vast majority of the Forum readership and commentariat would dismiss them as ridiculous ramblings, the same way we would treat the speech of your average person holding forth about the end of days on an urban street corner.

I think this is a reasonable objection to make in general - I made similar objections in a similar case here.

But I think your argument that Peter hasn't done anything to earn any epistemic credit is mistaken:

To the extent that we're analyzing what Thiel is selling with any degree of seriousness because of his wealth and influence rather than the merit of his ideas, does that pose any epistemic concerns? To my (Christian) ears, this should be taken about as seriously as a major investor in the Coca-Cola Company spouting off that Pepsi is the work of Antichrist

This seems quite disanalogous to me. Peter has made his money largely by making a small number of investments that have done extraordinarily well. Skill at this involves understanding leaders and teams, future technological developments, economics and other fields. It's always possible to get lucky, but his degree of success provides, I think, significant evidence of skill. In contrast, over the last 25 years Coca-Cola has significantly underperformed the S&P 500, so your hypothetical Pepsi critic does not have the same standing.

Owen Cotton-Barratt @ 2025-08-10T16:45 (+6)

I think that the theology is largely a distraction from the reason this is attracting sympathy, which I'd guess to be more like:

- If you have some ideas which are pretty good, or even very good, but they present as though they're the answer needed for everything, and they're not, that could be quite destructive (and potentially very-net-bad, even while the ideas were originally obviously-good)

- This is at least a plausible failure mode for EA, and correspondingly worth some attention/wariness

- This kind of concern hasn't gotten much airtime before (and is perhaps easier to express and understand as a serious possibility with some of the language-that-I-interpret-metaphorically).

David T @ 2025-08-10T22:33 (+7)

Feels like the argument you've constructed is a better one than the one Thiel is actually making, which seems to be a very standard "evil actors often claim to be working for the greater good" argument with a libertarian gloss. Thiel doesn't think redistribution is an obviously good idea that might backfire if it's treated as too important, he actively loathes it.

I think trying too hard to do good things and ending up doing harm is absolutely a failure mode worth considering, but the idea has far more value in the context of specific examples. It seems like quite a common theme in AGI discourse (it follows from standard assumptions like AGI being near and potentially either incredibly beneficial or destructive, research or public awareness either potentially solving the problem or starting a race, etc.) and the optimiser's curse is a huge concern for EA cause prioritization overindexing on particular data points. Maybe that deserves (even) more discussion.

But I don't think a guy that doubts we're on the verge of an AI singularity and couldn't care less whether EAs encourage people to make the wrong tradeoffs between malaria nets, education and shrimp welfare adds much to that debate, particularly not with a throwaway reference to EA in a list of philosophies popular with the other side of the political spectrum he thinks are basically the sort of thing the Antichrist would say.

I mean, he is also committed to the somewhat less insane-sounding "growth is good even if it comes with risks" argument, but you can probably find more sympathetic and coherent and less interest-conflicted proponents of that view.

Owen Cotton-Barratt @ 2025-08-10T23:32 (+6)

Ok, thanks, I think it's fair to call me on this (I realise the question of what Thiel actually thinks is not super interesting to me, compared to "does this critique contain inspiration for things to be aware of that I wasn't previously really tracking"; but I get that most people probably aren't orienting similarly, and I was kind of assuming that they were when I suggested this was why it was getting sympathy).

I do think though that there's a more nuanced point here than "trying too hard to do good can result in harm". It's more like "over-claiming about how to do good can result in harm". For a caricature to make the point cleanly: suppose EA really just promoted bednets, and basically told everyone that what it meant to be good was to give more money to bednets. I think it's easy to see how this gaining a lot of memetic influence (bednet cults; big bednet, etc.) could end up being destructive (even if bednets are great).

I think that EA is at least conceivably vulnerable to more subtle versions of the same mistake. And that that is worth being vigilant against. (Note this is only really a mistake that comes up for ideas that are so self-recommending that they lead to something like strategic movement-building around the ideas.)

David Mathers🔸 @ 2025-08-07T06:09 (+28)

I'm dubious Thiel is actually an ally to anyone worried about permanent dictatorship. He has connections to openly anti-democratic neoreactionaries like Curtis Yarvin, he quotes Nazi lawyer and democracy critic Carl Schmitt on how moments of greatness in politics are when you see your enemy as an enemy, and one of the most famous things he ever said is "I no longer believe that freedom and democracy are compatible". Rather I think he is using "totalitarian" to refer to any situation where the government is less economically libertarian than he would like, or "woke" ideas are popular amongst elite tastemakers, even if the polity this is all occurring in is clearly a liberal democracy, not a totalitarian state. (For the record I agree that certain kinds of "wokeness" can be bad for the free and open exchange of ideas even if it is not enforced by the government.) It's deceptive rhetoric basically, applying a word with a common meaning in an idiosyncratic way, whilst the force of the label is generated by the common meaning. (Though I doubt Thiel is being deliberately consciously deceptive.)

I'd also say that, even independent of Thiel's politics, I think it is a bad sign when people start taking openly religious, supernaturalist stuff seriously. I know I'm not going to persuade anyone just by saying this, but I think part of being "rational" and "truth-seeking" and saying what's true even when it offends people is firm rejection of religion and the supernatural (on grounds of irrationality, not necessarily social harmfulness). If you agree with me about that, but you are less bothered by Thiel here than by "wokeness", at least consider that your opposition to the latter might not be being driven entirely by considerations about truth-seeking and truth-telling.

Jackson Wagner @ 2025-08-07T20:25 (+6)

Thiel seems to believe that the status-quo "international community" of liberal western nations (as embodied by the likes of Obama, Angela Merkel, etc) is currently doomed to slowly slide into some kind of stagnant, inescapable, communistic, one-world-government dystopia.

Personally, I very strongly disagree with Thiel that this is inevitable or even likely (although I see where he's coming from insofar as IMO this is at least a possibility worth worrying about). Consequently, I think the implied neoreactionary strategy (not sure if this is really Thiel's strategy since obviously he wouldn't just admit it) -- something like "have somebody like JD Vance or Elon Musk coup the government, then roll the dice and hope that you end up getting a semi-benevolent libertarian dictatorship that eventually matures into a competent normal government, like Singapore or Chile, instead of ending up getting a catastrophic outcome like Nazi Germany or North Korea or a devastating civil war" -- is an incredibly stupid strategy that is likely to go extremely wrong.

I also agree with you that Christianity is obviously false and thus reflects poorly on people who sincerely believe it. (Although I think Ben's post exaggerates the degree to which Thiel is taking Christian ideas literally, since he certainly doesn't seem to follow official doctrine on lots of stuff.) Thiel's weird reasoning style that he brings not just to Christianity but to everything (very nonlinear, heavy on metaphors and analogies, not interested in technical details) is certainly not an exemplar of rationalist virtue. (I think it's more like... heavily optimized for trying to come up with a different perspective than everyone else, which MIGHT be right, or might at least have something to it. Especially on the very biggest questions where, he presumably believes, bias is the strongest and cutting through groupthink is the most difficult. Versus normal rationalist-style thinking is optimized for just, you know, being actually fully correct the highest % of the time, which involves much more careful technical reasoning, lots of hive-mind-style "deferring" to the analysis of other smart people, etc)

Ben_West🔸 @ 2025-08-07T15:45 (+4)

I think he is using "totalitarian" to refer to any situation where the government is less economically libertarian than he would like, or "woke" ideas are popular amongst elite tastemakers, even if the polity this is all occurring in is clearly a liberal democracy, not a totalitarian state.

It seems true that he thinks governments (including liberal democracies) are satanic. I am unclear how much of this is because he thinks they are a slippery slope towards what you would call "totalitarianism" vs. being bad per se, but I think he is fairly consistent in his anarchism.

huw @ 2025-08-07T08:39 (+25)

He opposes totalitarianism, and yet by his actions Palantir is arguably the most authoritarian, pro-surveillance company in the West? He is skeptical of institutions and governments, yet funded and propped up the sitting Vice President? Give me a break, please.

I worry that trying to find coherence in Thiel is lending way too much credence to a guy who is essentially just confabulating as he goes (but this is one of the best efforts to do so that I’ve seen!). His true philosophy is what he does, not what he says.

If EAs decide to oppose Thiel, I think it would be mistaken to try to reason with his followers as if they are plain ideologues; we should focus on breaking his power bases by withstanding his authoritarian impulses and building popular alternatives.

ASB @ 2025-08-07T16:39 (+6)

Fwiw my (admittedly vibes-based) sense is that Palantir was a deliberate push to fill the niche of 'surveillance company' in a way that had guardrails and civil liberties protected.

huw @ 2025-08-07T21:35 (+4)

Do you think it achieved that at some point? How about today?

Ben_West🔸 @ 2025-08-07T17:38 (+4)

I think it's important to note that Thiel's worldview is pretty bleak - he literally describes his goal as steering us towards a mythical Greek monster! He just thinks that the alternatives are even worse.

In EA lingo, I would say he has complex cluelessness: he sees very strong reasons for both doing a thing and doing the opposite of that thing.

I expect that there are Straussian readings of him that I am not understanding but for the most part he seems to just sincerely have very unusual views. E.g. I think Trump/Vance have done more than most presidents to dismantle the global order (e.g. through tariffs), and it doesn't seem surprising to me that Thiel supports them (even though I suspect he dislikes many other things they do).

Charlie_Guthmann @ 2025-08-07T18:49 (+3)

Wrote this elsewhere, but while I agree that he sincerely has unusual views, I question the strength of his commitment to these views. I asked o3 to aggregate his total donations and it looks like he has given about 80m away, with almost half to Republican politicians (I'm guessing this is wrong but couldn't find anything better).

For someone with probably >10b net worth this seems like a weak signal to me that he doesn't really care about anything.

Jackson Wagner @ 2025-08-07T19:59 (+6)

Agreed that it is weird that a guy who seems to care so much about influencing world events (politics, technology, etc) has given away such a small percentage of his fortune as philanthropic + political donations.

But I would note that since Thiel's interests are less altruistic and more tech-focused, a bigger part of his influencing-the-world portfolio can happen via investing in the kinds of companies and technologies he wants to create, or simply paying them for services. Some prominent examples of this strategy are founding PayPal (which was originally going to try and be a kind of libertarian proto-crypto alternate currency, before they realized that wasn't possible), founding Palantir (allegedly to help defend western values against both terrorism and civil-rights infringement) and funding Anduril (presumably to help defend western values against a rising China). A funnier example is his misadventures trying to consume the blood of the youth in a dark gamble for escape from death, via blood transfusions from a company called Ambrosia. Thiel probably never needed to "donate" to any of these companies.

(But even then, yeah, it does seem a little too miserly...)

Charlie_Guthmann @ 2025-08-07T20:32 (+2)

But I would note that since Thiel's interests are less altruistic and more tech-focused, a bigger part of his influencing-the-world portfolio can happen via investing in the kinds of companies and technologies he wants to create, or simply paying them for services.

agreed.