Your 2022 EA Forum Wrapped 🎁

By Sharang Phadke, Sarah Cheng 🔸, Lizka, Ben_West🔸, Jonathan Mustin @ 2023-01-01T03:10 (+151)

The EA Forum team is excited to share your personal ✨ 2022 EA Forum Wrapped ✨. We hope you enjoy this little summary of how you used the EA Forum as you ring in the new year with us. Thanks for being part of the Forum!

Note: If you don't have an EA Forum account, we won't be able to make a personalized "wrapped" for you. If you feel like you're missing out, today is a great day to make an account and participate more actively in the online EA community!

bruce @ 2023-01-01T03:21 (+47)

Looks fun! Thanks for this. Curious about EA forum alignment methodology!

(also happy new year to the team, thanks for all your work on the forum!)

Lorenzo Buonanno @ 2023-01-01T09:50 (+19)

https://github.com/ForumMagnum/ForumMagnum/blob/5f08a68cfd2eb48d5a2286962cd70ddfea9a97a6/packages/lesswrong/server/resolvers/userResolvers.ts#L322-L339

I think it looks at engagement (I assume time spent on the Forum) and the comments/posts ratio.

    function getAlignment(results) {
      let goodEvil = 'neutral', lawfulChaotic = 'Neutral';
      if (results.engagementPercentile < 0.33) {
        goodEvil = 'evil'
      } else if (results.engagementPercentile > 0.66) {
        goodEvil = 'good'
      }
      const ratio = results.commentCount / results.postCount;
      if (ratio < 3) {
        lawfulChaotic = 'Chaotic'
      } else if (ratio > 6) {
        lawfulChaotic = 'Lawful'
      }
      if (lawfulChaotic == 'Neutral' && goodEvil == 'neutral') {
        return 'True neutral'
      }
      return lawfulChaotic + ' ' + goodEvil
    }

Holly Morgan @ 2023-01-03T03:02 (+10)

Forum Team, maybe link "alignment" at https://forum.effectivealtruism.org/wrapped to this comment rather than the Wikipedia page? (If I'd been labeled "evil" I think I'd much rather be reassured that it's for a completely irrelevant reason than linked to the D&D reference.)

Kat Woods @ 2023-01-02T15:34 (+3)

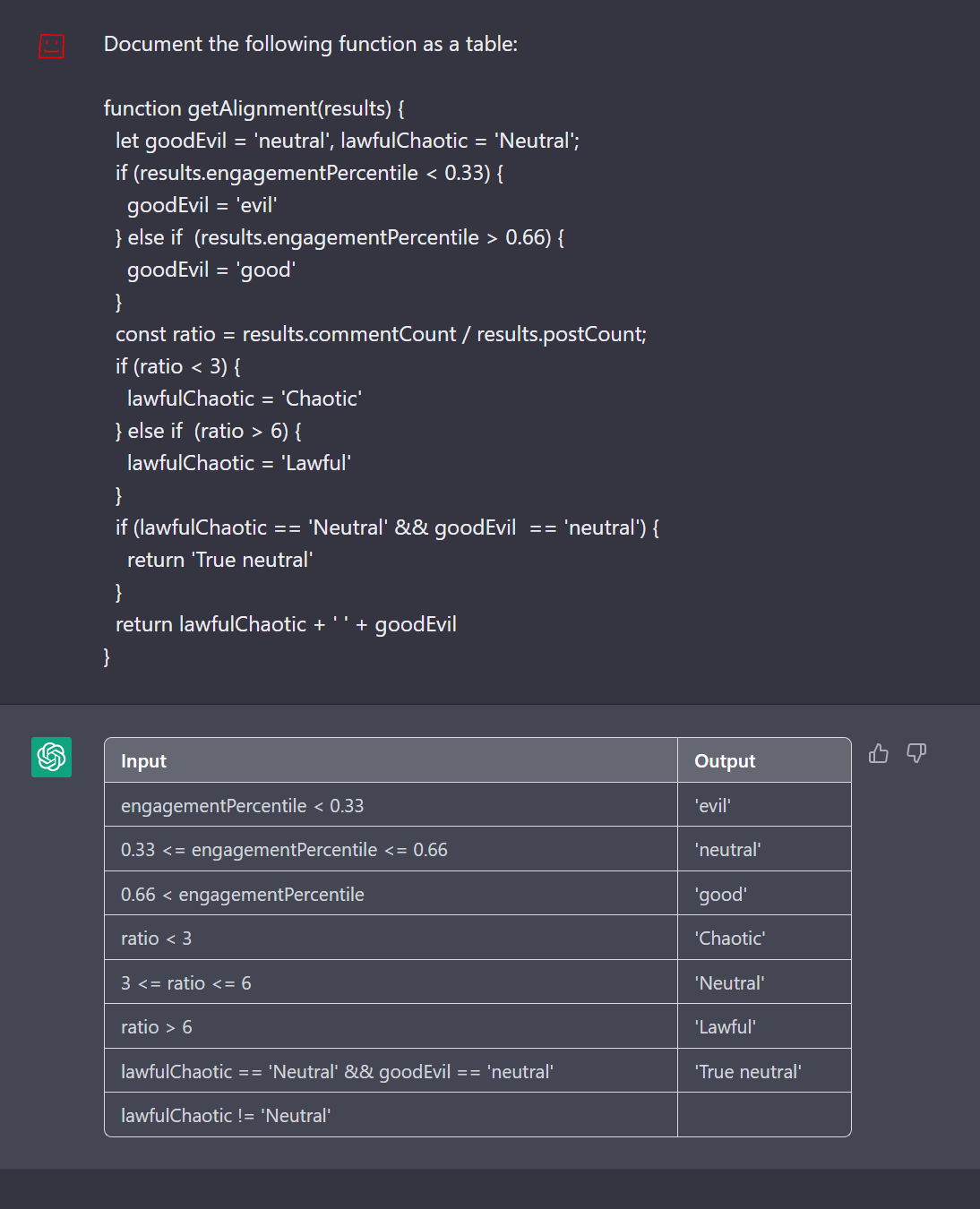

For those who don't program, this is what ChatGPT says this code means:

"This code defines a function getAlignment that takes in an object called results and returns a string indicating a combination of good/evil and lawful/chaotic alignments.

The function first initializes two variables, goodEvil and lawfulChaotic, to the string 'neutral' and 'Neutral', respectively. It then checks the engagementPercentile property of the results object. If the engagement percentile is less than 0.33, goodEvil is set to the string 'evil'. If the engagement percentile is greater than 0.66, goodEvil is set to the string 'good'.

The function then calculates the ratio of commentCount to postCount in the results object, and uses this ratio to set the value of lawfulChaotic. If the ratio is less than 3, lawfulChaotic is set to 'Chaotic'. If the ratio is greater than 6, lawfulChaotic is set to 'Lawful'.

Finally, the function checks if both lawfulChaotic and goodEvil are equal to 'neutral'. If they are, the function returns the string 'True neutral'. Otherwise, it returns the concatenation of lawfulChaotic and goodEvil with a space in between."

I don't code, so I have no idea if this is accurate, so please let me know if it's off.
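One way to check the explanation above is to run the quoted function on a few inputs. The sketch below is a typed copy of `getAlignment` with unchanged behavior; the `WrappedResults` interface name and the sample users are made up for illustration, and `engagementPercentile` is assumed to lie between 0 and 1. The last two samples show what JavaScript division does when `postCount` is 0.

```typescript
// Hypothetical input shape: only the three fields the quoted function reads.
interface WrappedResults {
  engagementPercentile: number; // assumed to lie in [0, 1]
  commentCount: number;
  postCount: number;
}

// Typed copy of the quoted function; the logic is unchanged.
function getAlignment(results: WrappedResults): string {
  let goodEvil = 'neutral', lawfulChaotic = 'Neutral';
  if (results.engagementPercentile < 0.33) {
    goodEvil = 'evil';
  } else if (results.engagementPercentile > 0.66) {
    goodEvil = 'good';
  }
  const ratio = results.commentCount / results.postCount;
  if (ratio < 3) {
    lawfulChaotic = 'Chaotic';
  } else if (ratio > 6) {
    lawfulChaotic = 'Lawful';
  }
  if (lawfulChaotic == 'Neutral' && goodEvil == 'neutral') {
    return 'True neutral';
  }
  return lawfulChaotic + ' ' + goodEvil;
}

// Made-up users for illustration only.
const samples: WrappedResults[] = [
  { engagementPercentile: 0.9, commentCount: 70, postCount: 10 }, // ratio 7
  { engagementPercentile: 0.5, commentCount: 40, postCount: 10 }, // ratio 4
  { engagementPercentile: 0.1, commentCount: 2, postCount: 2 },   // ratio 1
  { engagementPercentile: 0.9, commentCount: 5, postCount: 0 },   // ratio Infinity
  { engagementPercentile: 0.5, commentCount: 0, postCount: 0 },   // ratio NaN
];

const labels: string[] = samples.map(getAlignment);
console.log(labels);
```

With no posts, the ratio is `Infinity` (or `NaN` when there are also no comments), so a commenter who has never posted lands on 'Lawful', while an account with no posts or comments stays 'Neutral' on that axis.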

Lorenzo Buonanno @ 2023-01-02T16:00 (+30)

I think it's accurate, but I don't know if it's clearer

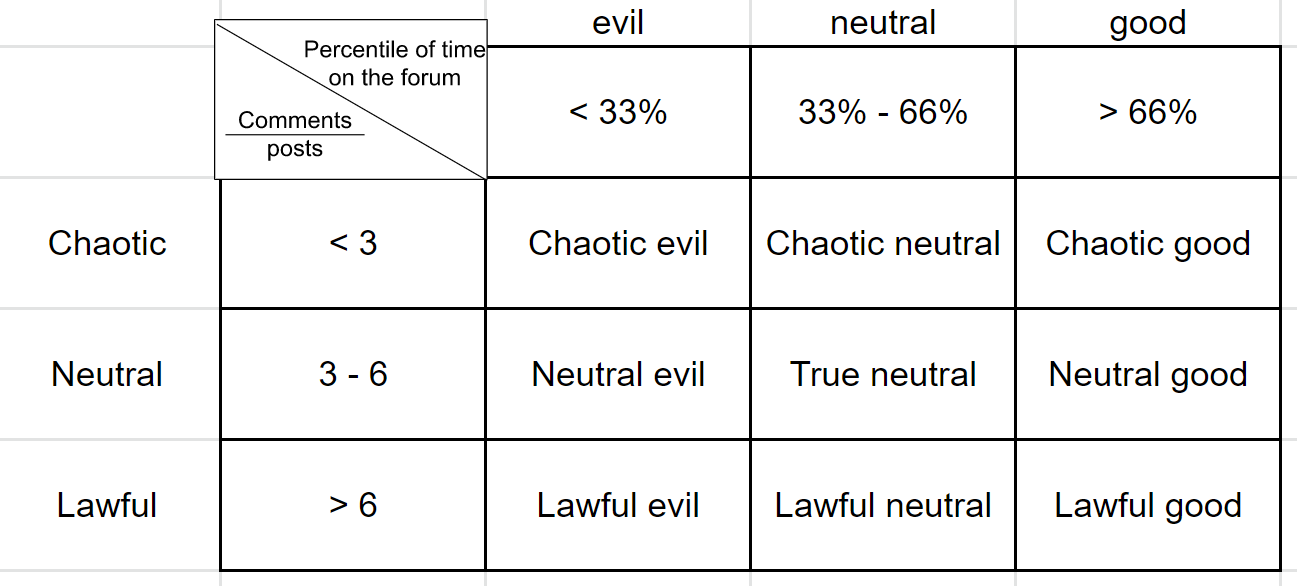

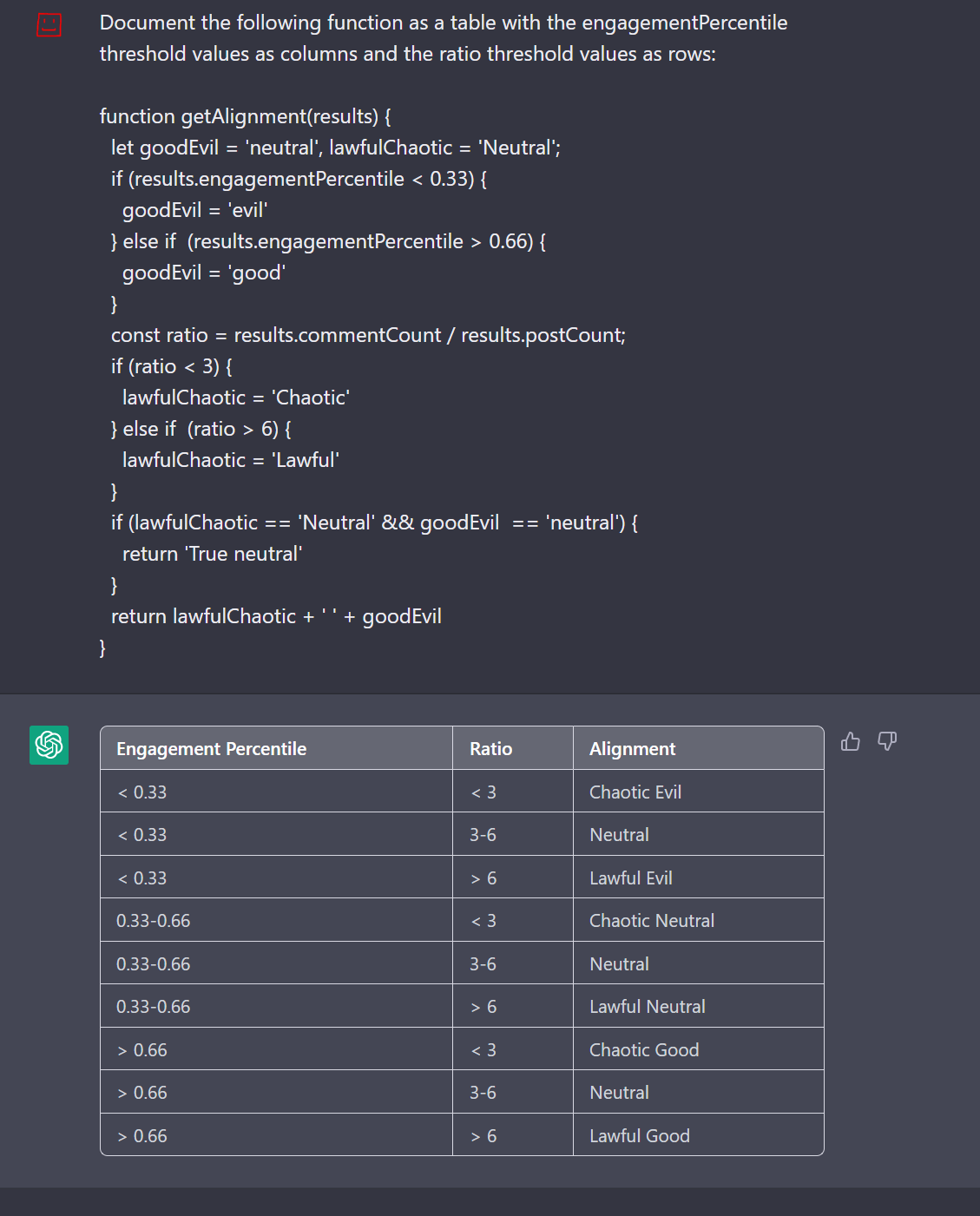

Here's a shitty table that I think is clearer

Actually, ChatGPT does a decent job at that

This is arguably better than my table:

Adrià Garriga Alonso @ 2023-01-02T16:29 (+15)

No, I think your table is substantially better than ChatGPT’s because it factors out the two alignment dimensions into two spatial dimensions.
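The same factoring can be sketched in code by giving each dimension its own function and then printing the grid. This is only an illustration, not how the Forum implements it: the names `moralAxis`, `orderAxis`, and `label` are hypothetical, and the thresholds are copied from the function quoted earlier in the thread.

```typescript
type Moral = 'good' | 'neutral' | 'evil';
type Order = 'Lawful' | 'Neutral' | 'Chaotic';

// Good/evil axis: depends only on the engagement percentile.
function moralAxis(engagementPercentile: number): Moral {
  if (engagementPercentile < 0.33) return 'evil';
  if (engagementPercentile > 0.66) return 'good';
  return 'neutral';
}

// Lawful/chaotic axis: depends only on the comments-to-posts ratio.
function orderAxis(commentCount: number, postCount: number): Order {
  const ratio = commentCount / postCount;
  if (ratio < 3) return 'Chaotic';
  if (ratio > 6) return 'Lawful';
  return 'Neutral';
}

// Combine the two axes; the center cell gets the special name.
function label(order: Order, moral: Moral): string {
  if (order === 'Neutral' && moral === 'neutral') return 'True neutral';
  return `${order} ${moral}`;
}

// Columns are the lawful/chaotic axis, rows are the good/evil axis.
const orders: Order[] = ['Lawful', 'Neutral', 'Chaotic'];
const morals: Moral[] = ['good', 'neutral', 'evil'];
const grid: string[][] = morals.map((m) => orders.map((o) => label(o, m)));

for (const row of grid) {
  console.log(row.join(' | '));
}

// Example: 40 comments, 10 posts, 90th percentile engagement.
const example: string = label(orderAxis(40, 10), moralAxis(0.9));
console.log(example);
```

Splitting the axes this way makes it easy to see that engagement alone decides good versus evil, and the comment/post ratio alone decides lawful versus chaotic.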

Writer @ 2023-01-01T09:57 (+2)

I have to squint a lot to see the sense in this mapping

Lorenzo Buonanno @ 2023-01-01T10:01 (+10)

I don't think it's meant to be taken seriously, just some whimsical easter egg

Lizka @ 2023-01-02T00:17 (+24)

I'm really glad we made this. :)

Scanning through my strong upvotes & upvotes, here are things I think are really valuable (not an exhaustive list![1]), grouped in very rough categories:

- Assorted posts I thought were especially underrated

  - Methods-ish or meta posts

    - Terminate deliberation based on resilience, not certainty

    - Comparative advantage does not mean doing the thing you're best at

    - Effectiveness is a Conjunction of Multipliers

    - Nonprofit Boards are Weird

    - Learning By Writing

    - How effective are prizes at spurring innovation?

    - How to do theoretical research, a personal perspective

    - Methods for improving uncertainty analysis in EA cost-effectiveness models

    - An experiment eliciting relative estimates for Open Philanthropy’s 2018 AI safety grants

    - Let's stop saying 'funding overhang' & What I mean by "funding overhang" (and why it doesn't mean money isn't helpful)

    - As an independent researcher, what are the biggest bottlenecks (if any) to your motivation, productivity, or impact? (would love more answers here)

  - Other posts I was really excited about (which don't fall neatly into other categories)

    - Concrete Biosecurity Projects (some of which could be big)

    - What happens on the average day? & What’s alive right now?

    - StrongMinds should not be a top-rated charity (yet)

    - An Evaluation of Animal Charity Evaluators (see also Introducing new leadership in Animal Charity Evaluators’ Research team)

    - Why Neuron Counts Shouldn't Be Used as Proxies for Moral Weight

    - Does Economic Growth Meaningfully Improve Well-being? An Optimistic Re-Analysis of Easterlin’s Research: Founders Pledge

    - How likely is World War III?

    - A major update in our assessment of water quality interventions

    - Ideal governance (for companies, countries and more)

    - Rational predictions often update predictably*

    - My thoughts on nanotechnology strategy research as an EA cause area & A new database of nanotechnology strategy resources

    - [Crosspost]: Huge volcanic eruptions: time to prepare (Nature) & Should GMOs (e.g. golden rice) be a cause area? & Flooding is not a promising cause area - shallow investigation & lots of other posts on less-discussed causes

    - Notes on Apollo report on biodefense

    - How moral progress happens: the decline of footbinding as a case study

    - Air Pollution: Founders Pledge Cause Report

    - Case for emergency response teams

    - What matters to shrimps? Factors affecting shrimp welfare in aquaculture (see my comment on the post)

    - Some observations from an EA-adjacent (?) charitable effort

  - A few announcements

    - Open Philanthropy's Cause Exploration Prizes: $120k for written work on global health and wellbeing & Cause Exploration Prizes: Announcing our prizes

    - Announcing the Change Our Mind Contest for critiques of our cost-effectiveness analyses & The winners of the Change Our Mind Contest—and some reflections

  - AI posts

    - Let’s think about slowing down AI & Slightly against aligning with neo-luddites

    - Without specific countermeasures, the easiest path to transformative AI likely leads to AI takeover

    - Samotsvety's AI risk forecasts

    - AI Could Defeat All Of Us Combined

    - AI Safety Seems Hard to Measure

    - AI strategy nearcasting / How might we align transformative AI if it’s developed very soon?

    - Biological Anchors external review by Jennifer Lin (linkpost)

    - Counterarguments to the basic AI risk case

  - Resources and lists

    - EA Opportunities: A new and improved collection of internships, contests, events, and more.

    - EA & LW Forums Weekly Summary (21 Aug - 27 Aug 22’) — the whole series

    - Forecasting Newsletter: January 2022 — the whole series

    - The Future Fund’s Project Ideas Competition — this is still a bank of really interesting project ideas

    - See more posts like this here:

- Posts I wrote or was involved with (I searched for these separately, these weren't on my "wrapped" page)

  - Events, highlighting especially good content, resources

  - Other posts

  - Thoughts on Forum posts:

  - Crossposts

See also the Forum Digest Classics.

[1] This is literally me scanning through quickly, using the "# + [type a post name]" method to quickly insert hyperlinked posts, and then very roughly grouping them into categories.

If I didn't list a post, that doesn't mean I didn't think it was great or didn't upvote it.

Yonatan Cale @ 2023-01-05T11:20 (+2)

I think this is underrated:

Offering FAANG-style mock interviews

not in the top forum posts of the year or anything, but I'd want it to get more exposure

BarryGrimes @ 2023-01-01T09:31 (+22)

Love this! I found it really valuable to be reminded of all the posts I’ve read this year and to reflect on how they’ve shaped my thinking. Big props to everyone involved in making this.

Max Görlitz @ 2023-01-01T13:53 (+19)

This is so awesome, much cooler than Spotify Wrapped!!

Lumpyproletariat @ 2023-01-01T07:52 (+12)

I got LG for my forum alignment - I'm guessing that that's the most common one?

Comment if you got a different one (unless you'd rather not (I guess you could make a throwaway account so that no one judges you for being CE)).

Andrew Simpson @ 2023-01-01T14:24 (+8)

I got neutral evil 😳

Writer @ 2023-01-01T09:50 (+7)

I am CHAOTIC Good MUAAHAHA

Kat Woods @ 2023-01-01T18:12 (+4)

Neutral good! Which is indeed how I identify.

I do predict that most EAs are either lawful good or neutral good.

Misha_Yagudin @ 2023-01-01T19:08 (+10)

Looking back on my upvotes, there were surprisingly few great posts this year (< 10 if not ~5). I don't have a sense of how things were last year.

Sharang Phadke @ 2023-01-01T23:37 (+8)

If you have insights about what you find yourself marking as "most important" and want to share them with me, I would love to hear more about your ideas. Our goal with this is to learn how we can encourage more of the most valuable content, and your opinion is valuable!

You can message me on the Forum or schedule time to chat here.

Felix Wolf @ 2023-01-03T13:30 (+4)

Suggestion: make the wrap up easily shareable. Download as a .jpg for example.

I had to take a screenshot on my phone, which did not capture everything.

I really enjoy this feature. :)