Orienting towards the long-term future (Joseph Carlsmith)

By EA Global @ 2017-11-03T07:43 (+17)

This is a linkpost to https://www.youtube.com/watch?v=Ccq2Ql8FcY0&list=PLwp9xeoX5p8POB5XyiHrLbHxx0n6PQIBf&index=22

In this talk, Joseph Carlsmith discusses the importance of the long-term future, and why some people in the effective altruism community focus on it.

The Talk

I'm Joseph Carlsmith. I'm a PhD student at NYU in philosophy and a research assistant at the Centre for Effective Altruism and the Future of Humanity Institute.

[00:01:20] A fair number of people in the effective altruism community, including me, think that the long-term future is extremely important — so important as to warrant the focus of our altruistic attention. The central fact that impresses many of us is that the future could be really big, it could last a really long time, and it could involve a huge number of people.

I'm going to talk a little bit about that possibility and how we might relate to it ethically. And I should say at the outset that very few of these ideas are new or mine. A lot of them come from Nick Bostrom and Toby Ord, both philosophers at Oxford. In fact, some of the framing here — even some of the language — either comes from or might end up in Toby Ord's upcoming book, which I've been helping with. [Editor’s note: The book is The Precipice, and has since been published.]

[00:02:27] This is the Cave of the Hands in Argentina. Our ancestors made these stencils somewhere between 9,000 and 13,000 years ago. At the time, Homo sapiens had been around for at least 185,000 years. We've come a long way, and some of the biggest changes in our condition are relatively recent. We've had agriculture for only about 10,000 years and written language for only about 5,000. And all of recorded history has happened since then — just a few thousand years.

[00:03:17] We can take all of human history — everything that has ever happened to humanity — hold it in mind, and now look to the future that could be in store for us. And what we see, I think, is that human history could be just beginning. We stand on the brink of a future that could be astonishingly vast and astonishingly valuable. And it's the scale of that possible future that persuades many of us to focus on it.

I'm going to talk a little bit about the dimensions of this possible future, but I should say that I'm not yet making any claims as to how probable it is that we reach a future of the scale I'm going to describe, except to say that it's probable enough to be worth taking seriously.

[00:04:14] How long might the human species last? Well, the median duration of a mammalian species is around 2,200,000 years. This is Homo erectus, one of our close relatives. Homo erectus lived for 1,800,000 years. That's a long time. If two million years were a single 80-year lifespan, our species would be, at this point, about eight years old: just getting started.

[00:04:48] But obviously, we're not typical mammals. For one thing, we've recently been increasing in our capacity to destroy ourselves, a capacity I'll be returning to later on. But we also have other unique capacities that could help us survive for much longer than Homo erectus.

[00:05:11] Suppose, then, that we live for as long as we can on Earth. How long might that be?

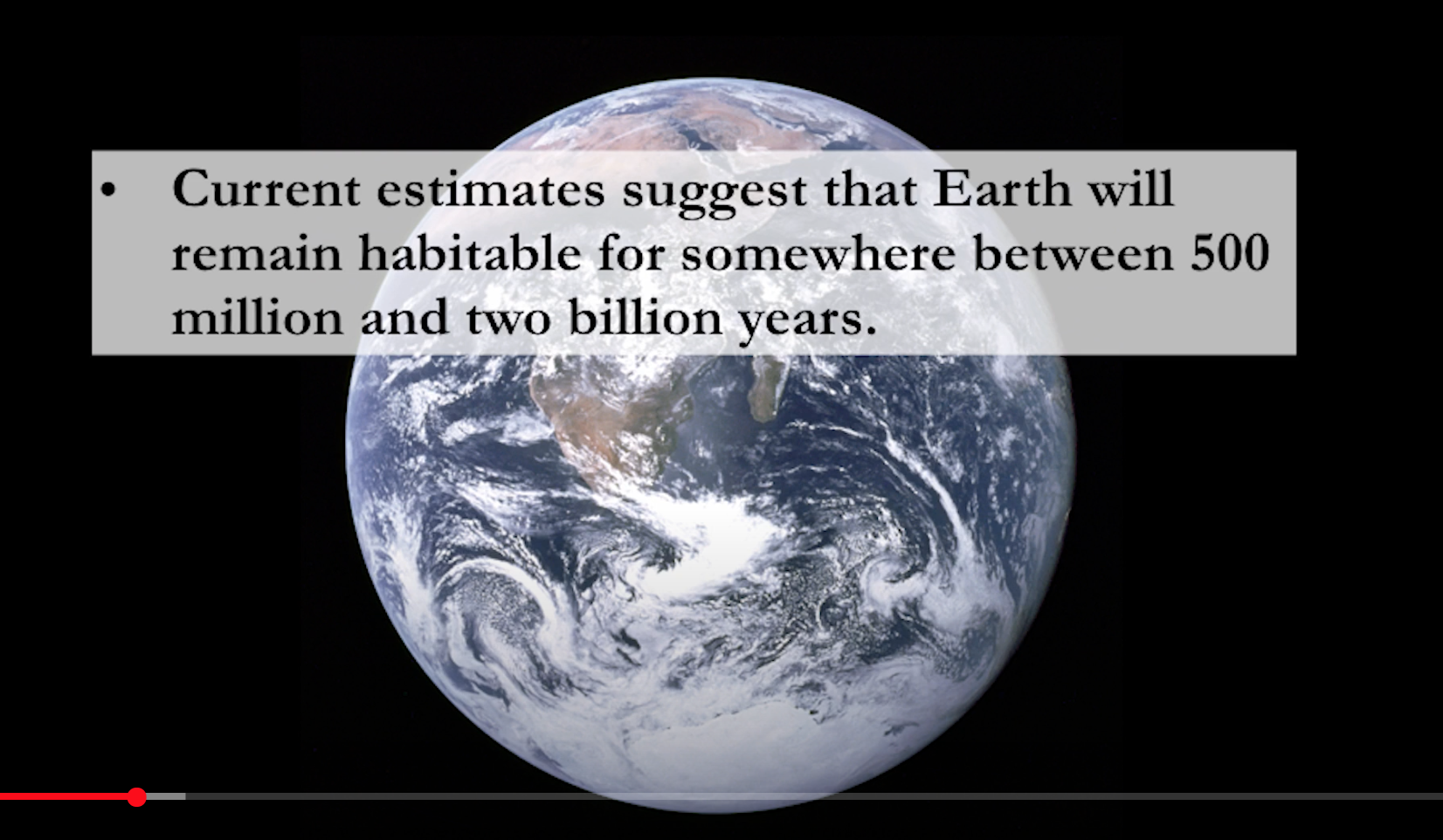

Well, current estimates suggest that Earth will remain habitable for somewhere between 500 million and two billion years. That’s enough time for millions of future generations and trillions of human beings. It’s also enough time to heal this planet of some of the damage that we've caused in our immaturity. In fact, I think awareness of just how long we might live on Earth puts in perspective the stakes of how we care for our home. At some point, though, we are going to either die here or leave. As Konstantin Tsiolkovsky put it, “Earth is the cradle of humanity, but one cannot live in a cradle forever.”

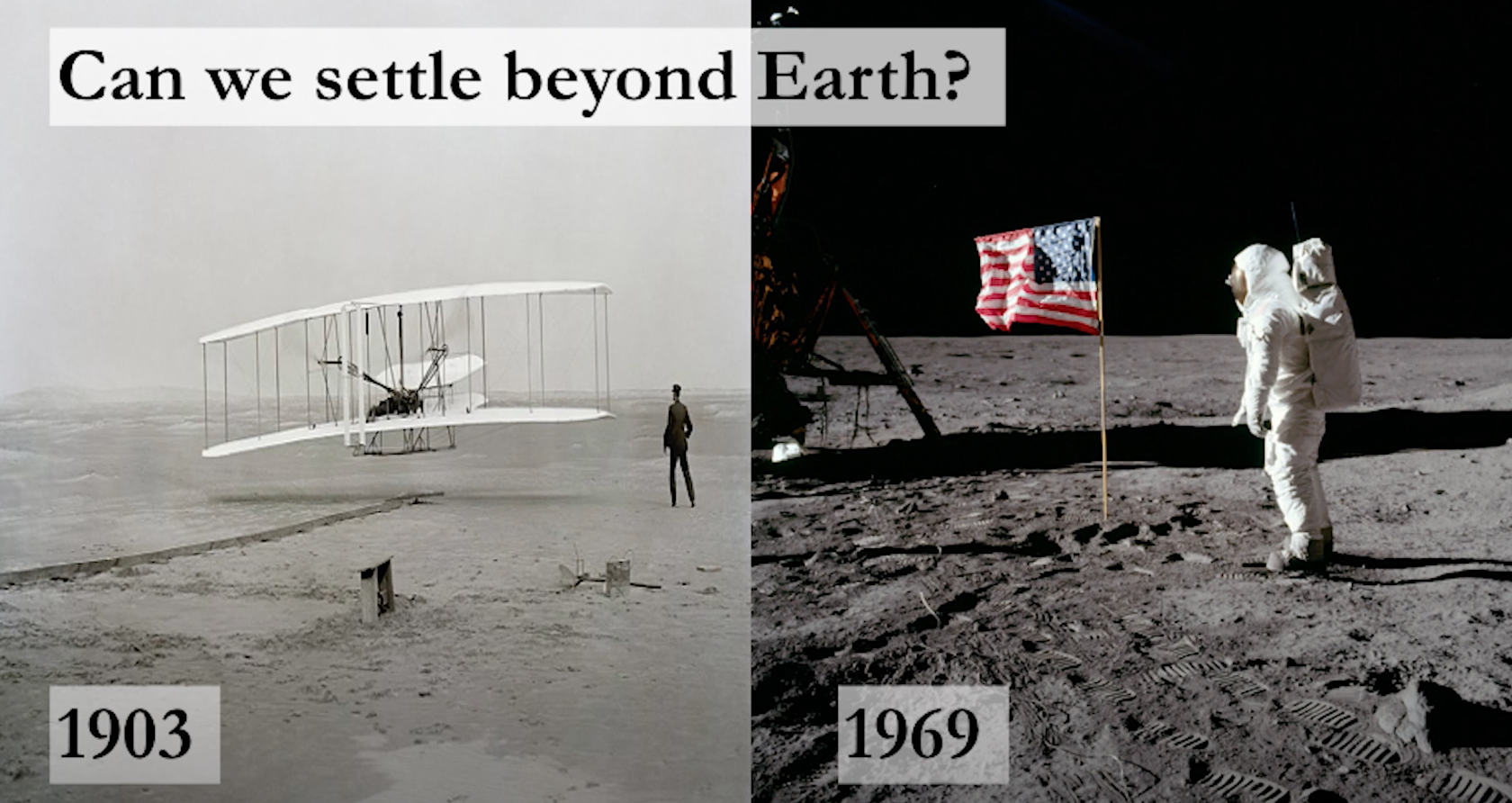

[00:06:12] Can we settle beyond Earth? The main obstacle, I think, is the time necessary to learn how.

This is the Wright Brothers' first flight in 1903. And here, 66 years later, is Buzz Aldrin walking on the Moon. That's a lot of progress in 66 years — less than a single lifetime. Our species learns quickly, especially in recent times, and 500 million years is a long education. I think we will need far less.

[00:06:55] I'm not saying that settling beyond Earth should be our focus at the moment, just that awareness of the possibility is important, I think, to our sense of what sort of future could be in store for us. Where might we go from here?

The Milky Way contains roughly 100 billion stars, maybe more, some of which will last for trillions of years, and there are billions of other galaxies we might be able to settle and explore as well. On such a scale of time and space, we might have a truly staggering number of descendants. Bostrom (2012) estimates a lower bound of 10^32 lives, assuming each person lives for 100 years. But, as he puts it, what matters is not the precise numbers, but the fact that they are huge.

[00:07:57] So, those are two dimensions of our possible future: it could last a really long time, and it could involve a huge number of people. I also want to talk about a third dimension that's particularly dear to my heart, namely that, with enough time, human life could become extraordinarily good.

[00:08:20] The present world, as we all know, is marred by agony and injustice. The litany is familiar: malaria, HIV, depression, dementia, oppression, torture, discrimination, prisons, mental hospitals, factory farms. With enough time, we can end these horrors. In the words of Robert Kennedy, who was quoting Aeschylus, “We can make gentle the life of this world.”

[00:08:58] But putting an end to agony and injustice is a lower bound on how good human life could be. The upper bound remains, I think, unexplored.

And we get some inkling of this during life's best moments of raw joy, luminous beauty, soaring love. Moments when we are truly awake. And these moments, however brief, point to depths of possible flourishing far beyond the status quo, but also far beyond our capacity for comprehension.

Our descendants would have a lot of time, and many new ways, to explore these depths. And it's not just welfare. Our descendants would be in a position to plumb the depths of whatever you value: beauty, art, adventure, discovery, understanding, love, consciousness, culture. They would also potentially discover entirely new categories of value, utterly unknown to us, such as music that we lack the ears to hear.

[00:10:18] That's one possible future for humanity and our descendants: millions or maybe billions of years (or even longer), and huge numbers of future generations living with astonishing depths of flourishing. Such a future is, in Carl Sagan's words, “a worthy goal,” profoundly good. And if we get our act together, I think we have a real chance. Obviously, though, such a future is not the only possibility.

[00:10:59] Our future might be much shorter — for example, if we go extinct in the next few centuries. Or even if our future is very long, it might be much worse than it could have been. Our trajectory as a civilization might veer in the wrong direction. And because our future could be so big, the stakes as to which of these possibilities are realized are extremely high — so high as to persuade many effective altruists to focus their impact on what happens to humanity in the long, long term.

[00:11:41] In particular, many people in the effective altruism community, including me, think that reducing the risk of human extinction (especially by our own hand) is a particularly good opportunity to have an impact on the long-term future of humanity. In recent times, we have grown more and more capable of destroying ourselves and our entire future forever, whether by nuclear war, catastrophic climate change, synthetic biology, artificial intelligence, or some other risk as yet unknown.

[00:12:27] Because the expected value of our future is so high, many think even a small reduction in the risk of extinction is extremely worthwhile in expectation. That said, I want to be clear that the case for focusing on our long-term future and the case for reducing the risk of extinction are conceptually distinct. There are ways to focus on the long-term future that don't involve reducing the risk of extinction. For example, instead of trying to ensure that our future is very long, you could just try to ensure that, to the extent that our future is long, it goes better rather than worse. Similarly, there are reasons to reduce the risk of extinction that don't have to do with the long-term future (for example, reasons to prevent the deaths of seven billion people, including potentially yourself and everyone you love).

[00:13:27] Nevertheless, many people who are focused on the long-term future, as a matter of fact, think that reducing the risk of extinction is a particularly good opportunity to make a difference.

Let me talk briefly about a few possible objections. The first is discounting, which, in the context relevant here, means thinking that things matter less the further away from you they are in time. This just seems wrong to me and to many people. Imagine that you can save 10 people from the flu in 100 years or a thousand people from torture in a million years. Who should you save? You should save the thousand people from torture, but views that discount say otherwise. You can raise other similar objections.

[00:14:26] What about views based on the belief that we have no reason to add happy people to the population, including happy people in the future? I'm somewhat more sympathetic to these views than some others in this community, but it turns out they're extremely difficult to make work. The central difficulty, as I see it, is that even if you think we don't have a particularly strong reason to add happy people to the population, you should still think that we have very strong reason to ensure that the lives that we do add to the population go better rather than worse, even if those lives are worth living overall.

[00:15:05] For example, it matters whether we pollute this planet and leave to future generations a biosphere that leaves them substantially worse off, even if who lives in the future is contingent on our choice — and even if everyone has a life worth living, regardless. And that conviction, in conjunction with the conviction that we have no reason to add happy people to the population, produces either fairly counterintuitive results or violations of very plausible constraints on rationality. I'm happy to talk more about that in the Q&A. Chapter 4 of Nick Beckstead's thesis is also a nice introduction to these issues.

[00:15:51] Finally, you might be skeptical about our ability to make the future better in expectation, either because you think we might not have that kind of influence over the future or because we're just not in a position to know what will make the future better. I think that’s a real question. But surely at least some people are in a position to make the future better or worse, in expectation. Donald Trump, for example, is in such a position. And when it comes to the risk of extinction, I, at least, find it pretty hard to think that we're totally clueless as to which options or outcomes would be better or worse.

[00:16:34] For example, it seems fairly clear that an all-out nuclear war between the US and Russia would be bad, in expectation, for our future. The same is true for a global engineered pandemic. And I think at least some people are in a position to lower the probability of those sorts of catastrophes.

Even if you don't buy that, though, the stakes are high enough that I think the thing to do is not to give up but to try to learn more. These are only a few possible objections. There are many others and many further questions we can raise, but some of those questions are still open and we need people working on them. So if you are gripped by the broad idea here — even if you still have questions and objections — I encourage you to get involved.

[00:17:32] Here are some ways you might do that. 80,000 Hours released a long article about reducing the risk of extinction, which has a lot of information about how you might make a difference. You can listen to this podcast with Toby Ord, which goes into more depth than I have. Or, you can talk to people at this conference from various organizations interested in the long-term future.

[00:17:58] I want to close with an idea inspired by Carl Sagan. Imagine our descendants millions of years from now, spread out across the galaxy, having experienced life with a depth and understanding unimaginable to us. And imagine them looking back on Earth, and on the 21st century, in light of everything that has happened since then. And what they would see, I think, is a seed: this tiny, fragile planet from which everything would spring.

As Sagan puts it, “They will marvel at how vulnerable the repository of all our potential once was, how perilous our infancy, how humble our beginnings, how many rivers we had to cross before we found our way.”

Thank you.

Q&A

Nathan Labenz (Moderator) [00:19:15]: Here’s the first question from an audience member. You kind of addressed this when you talked about discounting, but one particular form of discounting that a questioner is asking about is discounting by the background rate of human extinction. Do you think that there is a rate at which we just go away, and that maybe that is a reasonable way to discount?

Joseph [00:19:48]: Yeah. If you think that we are very, very unlikely to reach a particularly long future, then some of this dialectic does alter a little bit. You might think it's less worthwhile, for example, to try to change the trajectory of human civilization in the long term. I don't actually think that it reduces the value in expectation of reducing the risk of extinction if you still think that the future is positive in expectation, but it might alter how you think about trajectory changes.

Nathan [00:20:25]: The next question is: Do you know of any information or have any thoughts on how people can stay action-oriented when they move into this line of thinking? It seems that people often end up chasing their tails, getting bogged down in all of the considerations.

Joseph [00:20:49]: Yeah, that's a good question. One thing that can be helpful psychologically is to be producing concrete output. So instead of just sitting around and talking to people, write something that people can respond to, or organize an event. I don't know if that is, in fact, any better, but it can feel better.

Nathan [00:21:18]: What are your thoughts on the signal that it sends to people outside of the EA community to focus on long-term risks rather than shorter term (and seemingly more immediately pressing) issues?

Joseph [00:21:35]: Well, in many cases, I think the goals are quite convergent. For example, I think reducing the risk of nuclear war or a global pandemic is something that everyone has a reason to care about, regardless of how they feel about the long-term future. Exactly how we prioritize those sorts of catastrophes relative to other goals we have in the short term is a further question. But in a lot of cases, I think the things that are going to be good for us in the long term are good for us in the short term too.

Nathan [00:22:13]: We have a few related questions on this theme of helping people now versus in the distant future. One person puts it pretty directly: You could argue that doing work to help people in the present is also a way of helping people in the future, maybe indirectly. So for somebody who is really focused on doing things that affect the here and now, obviously you don't have a lot of information about this person's behavior. But do you have thoughts on what the typical person who is very focused on the present should do to orient themselves more toward the long term?

Joseph [00:22:54]: I think that's going to depend a lot on the person's circumstances and what they're interested in — what they're good at or in a position to do. The 80,000 Hours article is helpful in this respect. That organization is very interested in tailoring the advice they give to a person's particular circumstances.

Nathan [00:23:17]: Here's a short question that has come in: Is emphasis on the long-term future consistent with hedonism?

Joseph [00:23:29]: Yes, very consistent. [Laughter.] The simplest argument for focusing on the long-term future applies if you're a hedonistic total utilitarian and you think that there will be a lot of hedons in the future.

Nathan [00:23:44]: Do you think that EA as a community should be more direct in its communication with the rest of the world about the ways in which it focuses on the long-term future, as opposed to letting the poverty cause area lead the way in our marketing (and having things like existential risk remain more sacred knowledge for those who are more deeply initiated into the community)?

Joseph [00:24:13]: I'm not sure I would want to describe existential risk in those terms. In general, I think many people in the EA community are fairly open, both about their interest in the long term and about their remaining uncertainty about it. Will [MacAskill], for example, has done a lot of work on moral uncertainty and how to act when you're compelled by a view but also have doubts about it. And one thing I really want to emphasize is that it's possible to care a lot about the long-term future while also holding in mind other possibilities for what you might do, and even some skepticism toward the general argument for the long-term future.

Nathan [00:25:00]: I think those are all of the questions we have for now. Tell us where people can follow you online if they want to learn more about you.

Joseph [00:25:10]: I have a website, josephcarlsmith.com. That's a good way to learn more about me.

Nathan [00:25:14]: All right. A round of applause for Joseph Carlsmith for helping us orient towards the long-term future. Thank you very much.