The Happier Lives Institute is funding constrained and needs you!

By MichaelPlant @ 2023-07-06T12:00 (+84)

The Happier Lives Institute (HLI) is a non-profit research institute that seeks to find the best ways to improve global wellbeing, then share what we find. Established in 2019, we have pioneered the use of subjective wellbeing measures (aka ‘taking happiness seriously’) to work out how to do the most good.

HLI is currently funding constrained and needs to raise a minimum of 205,000 USD to cover operating costs for the next 12 months. We think we could usefully absorb as much as 1,020,000 USD, which would allow us to expand the team, substantially increase our output, and provide a runway of 18 months.

This post is written for donors who might want to support HLI’s work to:

- identify and promote the most cost-effective marginal funding opportunities at improving human happiness.

- support a broader paradigm shift in philanthropy, public policy, and wider society, to put people’s wellbeing, not just their wealth, at the heart of decision-making.

- improve the rigour of analysis in effective altruism and global priorities research more broadly.

A summary of our progress so far:

- Our starting mission was to advocate for taking happiness seriously and see if that changed the priorities for effective altruists. We’re the first organisation to look for the most cost-effective ways to do good, as measured in WELLBYs (Wellbeing-adjusted life years)[1]. We didn’t invent the WELLBY (it’s also used by others e.g. the UK Treasury) but we are the first to apply it to comparing which organisations and interventions do the most good.

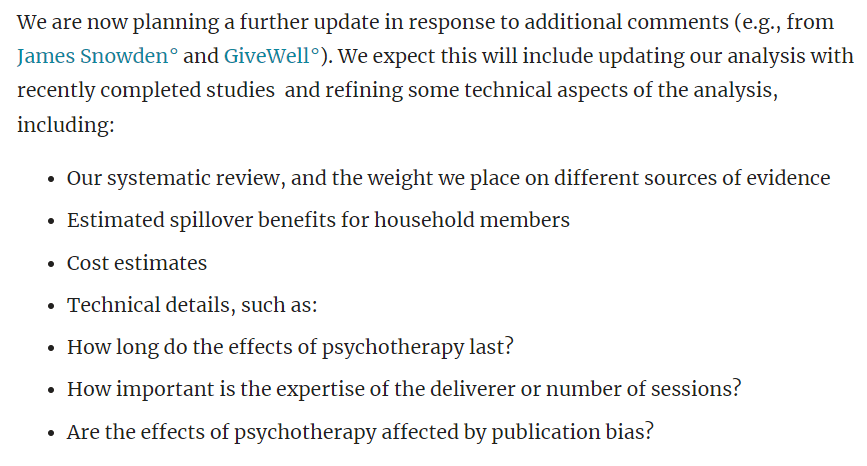

- Our focus on subjective wellbeing (SWB) was initially treated with a (understandable!) dose of scepticism. Since then, many of the major actors in effective altruism’s global health and wellbeing space seem to have come around to it (e.g., see these comments by GiveWell, Founders Pledge, Charity Entrepreneurship, GWWC). [Paragraph above edited 10/07/2023 to replace 'all' with 'many' and remove a name (James Snowden) from the list. See below]

- We’ve assessed several top-regarded interventions for the first time in terms of WELLBYs: cash transfers, deworming, psychotherapy, and anti-malaria bednets. We found treating depression is several times more cost-effective than either cash transfers or deworming. We see this as important in itself as well as a proof of concept: taking happiness seriously can reveal new priorities. We've had some pushback on our results, which was extremely valuable. GiveWell’s own analysis concludes treating depression is 2x as good as cash transfers (see here, which includes our response to GiveWell).

- We strive to be maximally philosophically and empirically rigorous. For instance, our meta-analysis of cash transfers has since been published in a top academic journal. We’ve shown how important philosophy is for comparing life-improving against life-extending interventions. We’ve won prizes: our report re-analysing deworming led GiveWell to start their “Change Our Mind” competition. Open Philanthropy awarded us money in their Cause Exporation Prize.

- Our work has an enormous global scope for doing good by influencing philanthropists and public policy-makers to both (1) redirect resources to the top interventions we find and (2) improve prioritisation in general by nudging decision-makers to take a wellbeing approach (leading to resources being spent better, even if not ideally).

- Regarding (1), we estimate that just over the period of Giving Season 2022, we counterfactually moved around $250,000 to our top charity, StrongMinds; this was our first campaign to directly recommend charities to donors[2].

- Regarding (2), the Mental Health Funding Circle started in late 2022 and has now disbursed $1m; we think we had substantial counterfactual impact in causing them to exist. In a recent 80k podcast, GiveWell mention our work has influenced their thinking (GiveWell, by their count, influences $500m a year)[3].

- We’ve published over 25 reports or articles. See our publications page.

- We’ve achieved all this with a small team. Presently, we’re just five (3.5 FTE researchers). We believe we really 'punch above our weight', doing high impact research at a low cost.

- However, we are just getting started. It takes a while to pioneer new research, find new priorities, and bring people around to the ideas. We’ve had some impact already, but really we see that traction as evidence we’re on track to have a substantial impact in the future.

What’s next?

Our vision is a world where everyone lives their happiest life. To get there, we need to work out (a) what the priorities are and (b) have decision-makers in philanthropy and policy-making (and elsewhere) take action. To achieve this, the key pieces are:-

- conducting research to identify different priorities compared to the status quo approaches (both to do good now and make the case)

- developing the WELLBY methodology, which includes ethical issues such as moral uncertainty and comparing quality to quantity of life

- promoting and educating decision-makers on WELLBY monitoring and evaluation

- building the field of academic researchers taking a wellbeing approach, including collecting data on interventions.

Our organisational strategy is built around making progress towards these goals. We've released, today, a new Research Agenda for 2023-4 which covers much of the below in more depth.

In the next six months, we have two priorities:

Build the capacity and professionalism of the team:

- We’re currently recruiting a communications manager. We’re good at producing research, but less good at effectively telling people about it. The comms manager will be crucial to lead the charge for Giving Season this year.

- We’re about to open applications for a Co-Director. They’ll work with me and focus on development and management; these aren’t my comparative advantage and it’ll free me up to do more research and targeted outreach.

- We’re likely to run an open round for board members too.

And, to do more high-impact research, specifically:

- Finding two new top recommended charities. Ideally, at least one will not be in mental health.

- To do this, we’re currently conducting shallow research of several causes (e.g., non-mood related mental health issues, child development effects, fistula repair surgery, and basic housing improvements) with the aim of identifying promising interventions.

- Alongside that, we’re working on wider research agenda, including: an empirical survey to better understand how much we can trust happiness surveys; summarising what we’ve learnt about WELLBY cost-effectiveness so we can share it with others; revise working papers on the nature and measurement of wellbeing; a book review Will MacAskill’s ‘What We Owe The Future’.

The plan for 2024 is to continue developing our work by building the organisation, doing more good research, and then telling people about it. In particular:

- Investigate 4 or 5 more cause areas, with the aim of adding a further three top charities by the end of 2024.

- Develop the WELLBY methodology, exploring, for instance, the social desirability bias in SWB scales

- Explore wider global priorities/philosophical issues, e.g. on the badness of death and longtermism.

- For a wider look at these plans, see our Research Agenda for 2023-4, which we’ve just released.

- If funding permits, we want to grow the team and add three researchers (so we can go faster) and a policy expert (so we can better advocate for WELLBY priorites with governments)

- (maybe) scale up providing technical assistance to NGOs and researchers on how to assess impact in terms of WELLBYs (we do a tiny amount of this now)

- (maybe) launch a ‘Global Wellbeing Fund’ for donors to give to.

- (maybe) explore moving HLI inside a top university.

We need you!

We think we’ve shown we can do excellent, important research and cause outsized impact on a limited budget. We want to thank those who’ve supported us so far. However, our financial position is concerning: we have about 6 months’ reserves and need to raise a minimum of 205,000 USD to cover our operational costs for the next 12 months. This is even though our staff earn about ½ what they would in comparable roles in other organisations. At most, we think we could usefully absorb 1,020,000 USD to cover team expansion to 11 full time employees over the next 18 months.

We hope the problem is that donors believe the “everything good is fully funded” narrative and don’t know that we need them. However, we’re not fully-funded and we do need you! We don’t get funding from the two big institutional donors, Open Philanthropy and the EA Infrastructure fund (the former doesn’t fund research in global health and wellbeing; we didn’t get feedback from the latter). So, we won’t survive, let alone grow, unless new donors come forward and support us now and into the future.

Whether or not you’re interested in supporting us directly, we would like donors to consider funding our recommended charities; we aim to add two more to our list by the end of 2023. We expect these will be able to absorb millions or tens of dollars, and this number will expand as we do more research.

We think that helping us ‘keep the lights on’ for the next 12-24 months represents an unusually large counterfactual opportunity for donors as we expect our funding position to improve. We’ll explore diversifying our funding sources by:

- Seeking support from the wider world of philanthropy (where wellbeing and mental health are increasing popular topics)

- Acquiring conventional academic funding (we can’t access this yet as we’re not UKRI registered, but we’re working on this; we are also in discussions about folding HLI into a university)

- Providing technical consultancy on wellbeing-based monitoring and evaluation of projects (we’re having initial conversations about this too).

To close, we want to emphasise that taking happiness seriously represents a huge opportunity to find better ways to help people and reallocate enormous resources to those things, both in philanthropy and in public-policymaking. We’re the only organisation we know of focusing on finding the best ways to measure and improve the quality of lives. We sit between academia, effective altruism and policy-making, making us well-placed to carry this forward; if we don’t, we don’t know who else will.

If you’re considering funding us, I’d love to speak with you. Please reach out to me at michael@happierlivesinstitute.org and we’ll find time to chat. If you’re in a hurry, you can donate directly here.

Appendix 1: HLI budget

- ^

One WELLBY is equivalent to a 1-point increase on a 0-10 life satisfaction scale for one year

- ^

The total across two matching campaigns at the Double-Up Drive, the Optimus Foundation as well as donations via three effective giving organisations (Giving What We Can, RC Forward, and Effectiv Spenden) was $447k. Note not all this data is public and some public data is out of date. The sum donated be larger as donations may have come from other sources. We encourage readers to take this with a pinch of salt and how to do more accurate tracking in future.

- ^

Some quotes about HLI’s work from the 80k podcast:

[Elie Hassenfeld] ““I think the pro of subjective wellbeing measures is that it’s one more angle to use to look at the effectiveness of a programme. It seems to me it’s an important one, and I would like us to take it into consideration[Elie] “…I think one of the things that HLI has done effectively is just ensure that this [using WELLBYs and how to make tradeoffs between saving and improving lives] is on people’s minds. I mean, without a doubt their work has caused us to engage with it more than we otherwise might have. […] it’s clearly an important area that we want to learn more about, and I think could eventually be more supportive of in the future.”

[Elie] “Yeah, they went extremely deep on our deworming cost-effectiveness analysis and pointed out an issue that we had glossed over, where the effect of the deworming treatment degrades over time. […] we were really grateful for that critique, and I thought it catalysed us to launch this Change Our Mind Contest. ”

LondonGal @ 2023-07-14T23:05 (+136)

Hi everyone,

To fully disclose my biases: I’m not part of EA, I’m Greg’s younger sister, and I’m a junior doctor training in psychiatry in the UK. I’ve read the comments, the relevant areas of HLI’s website, Ozler study registration and spent more time than needed looking at the dataset in the Google doc and clicking random papers.

I’m not here to pile on, and my brother doesn’t need me to fight his corner. I would inevitably undermine any statistics I tried to back up due to my lack of talent in this area. However, this is personal to me not only wondering about the fate of my Christmas present (Greg donated to Strongminds on my behalf), but also as someone who is deeply sympathetic to HLI’s stance that mental health research and interventions are chronically neglected, misunderstood and under-funded. I have a feeling I’m not going to match the tone here as I’m not part of this community (and apologise in advance for any offence caused), but perhaps I can offer a different perspective as a doctor with clinical practice in psychiatry and on an academic fellowship (i.e. I have dedicated research time in the field of mental health).

The conflict seems to be that, on one hand, HLI has important goals related to a neglected area of work (mental health, particularly in LMICs). I also understand the precarious situation they are in financially, and the fears that undermining this research could have a disproportionate effect on HLI vs critiquing an organisation which is not so concerned with their longevity. There might be additional fears that further work in this area will be scrutinised to a uniquely high degree if there is a precedent set that HLI’s underlying research is found to be flawed. And perhaps this concern is compounded by the stats from people in this thread, which perhaps is not commonly directed to other projects in the EA-sphere, and might suggest there is an underlying bias against this type of work.

I think it’s fair to hold these views, but I’d argue this is likely the mechanism by which HLI has escaped scrutiny before now – people agree more work and funding should be directed to mental health and wanted to support an organisation addressing this. It possibly elevated the status of HLI in people’s minds, appearing more revolutionary in redirecting discussions in EA as a whole. Again, Greg donated to Strongminds on my behalf and, while he might now feel a sense of embarrassment for not delving into this research prior, in my mind I think it reflects a sense of affirmation in this cause and trust in this community which prides itself on being evidence-based. I’m mentioning it, because I think everyone here is united on these points and it’s always easier to have productive discussions from the mutual understanding of shared values and goals.

However, there are serious issues in the meta-analysis which appears to underlie the CEA, and therefore the strength of claims made by HLI. I think it is possible to uncouple this statement from arguments against HLI or any of the above points (where I don’t see disagreement). It seems critical to acknowledge the flaws in this work given the values of EA as an objective, data-driven approach to charitable giving. Failing to do this will risk the reputation of EA, and suggest there is a lack of critical appraisal and scrutiny which perhaps is driven by personal biases i.e. the number of reassurances in this thread that HLI is a good organisation where members are known personally to others in the community. Good people with good intentions can produce flawed research. Similarly, from the perspective of a clinical academic in psychiatry, there is a long history in my field of poorly-conducted, misinterpreted and rushed research which has meant establishing evidence-based care and attracting funding for research/interventions particularly difficult. Poor research in this area risks worsening this problem and mis-allocating very limited resources – it’s fairly shocking seeing the figures quoted here in terms of funding if it is based wholly or in part on outputs such as this meta-analysis which were accepted by EA. Again, as an outsider, it’s difficult for me to judge how critical this research was in attracting this allocation of funds.

While I think the issues with the analysis and all the statistics discussions are valid critiques of this work, it’s important to establish that this is only part of the reason this study would fall down under peer review. It’s concerning to me that peer-review is not the standard for organisations supported by EA; this is not just about scrutinising how the research was conducted and arguing about statistics, but establishes the involvement of expertise within the field of study. As someone who works in this field, the assumptions this meta-analysis makes about psychotherapy, outcome measures in mental health, etc, are problematic but perhaps not readily identified to those without a clinical background, and this is a much greater problem if there is an increasing interest in addressing mental health within EA. I’m not familiar with the backgrounds of people involved in HLI, but I’d be curious about who was consulted in formulating this work given the tone seems to reflect more philosophical vs psychiatric/psychotherapeutic language.

The way the statistical analysis has been heavily debated in this thread likely reflect the skills-mix in the EA community (clearly stats are well-covered!), but the statistics are somewhat irrelevant if your study design and inputs into the analysis are flawed to start with. Even if the findings of this research were not so unusual (perhaps something else which could have been flagged sooner) or were based on concrete stats, the research would still be considered flawed in my field. I imagine this will prompt some reflection in EA on this topic, but peer-review as a requirement could have avoided the bad timing of these discussions and would reduce the reliance on community members to critique research. I think this thread has demonstrated that critical appraisal is time-intensive and relies on specialist skills – it’s not likely that every area of interest will have representation within the EA community so the problem of ‘not knowing what you don’t know’ or how you weight the importance of voices in the community vs their amplification would be greatly helped by peer-review and reduce these blind spots. If the central goal of EA is using money to do the most good, and there is no robust system to evaluate research prior to attracting funding, this is an organisational problem rather than a specific issue with HLI/Strongminds.

My unofficial peer review.

Given inclusion/exclusion criteria aren’t stated clearly in the meta-analysis and the aim is pretty woolly, It seems the focus of the upcoming RCT and Strongminds research is evaluating:

-

Training non-HCPs in delivering psychotherapy in LMICs

-

Providing treatment (particularly to young women and girls) with symptoms suggestive of moderate to severe depression (PHQ-9 score of 10 and above)

-

Measuring the efficacy of this treatment on subjective symptom rating scales, such as PHQ-9, and other secondary outcome measures which might reflect broader benefits not captured in the symptom rating scales.

-

Finding some way to compare the cost-effectiveness of this treatment to other interventions such as cash transfers in broader discussions of life satisfaction and wellbeing which it obviously complicated compared to using QALYs, but important to do as the impact of mental illness is under-valued using measures geared towards physical morbidity. Or maybe it's trying to understand effectiveness of treating symptoms vs assumed precipitating/perpetuating factors like poverty.

Grand.

However, the meta-analysis design seems to miss the mark on developing anything which would support a CEA along these lines. Even from the perspective of favouring broad inclusion criteria, you would logically set these limits:

- Population

LMIC setting, people with depressive symptoms. It’s not clear if this is about effectively treating depression with psychotherapy and extrapolating that to a comment on wellbeing; or using psychotherapy as a tool to improve wellbeing, which for some reason is being measured in a reduction in various symptom scales for different mental health conditions and symptoms – this needs to be clearly stated. If it's the former, what you accept as a diagnosis of depression (ICD diagnostic codes, clinical assessment by trained professional, symptom scale cut-offs, antidepressant treatment, etc) should be defined.

If not defining the inclusion criteria of depression as a diagnosis, it's worth considering if certain psychiatric/medical conditions or settings should be excluded e.g. inpatients. As a hypothetical, extracting data on depression symptom scales for a non-HCP delivered psychotherapy in bipolar patients will obviously be misleading in isolation (i.e. the study likely accounted for measuring mania symptoms in their findings, but would be lost in this meta-analysis). One study included in this analysis (Richter et al) looked at an intervention which encouraged adherence to anti-retroviral medications via peer support for women newly diagnosed with HIV. Fortunately, this study shouldn't have been included as it didn't involve delivering psychotherapy, but for the sake of argument, is that fair given the neuropsychiatric complications of HIV/AIDS? Again, it's not about preparing for every eventuality, but it's having clear inclusion/exclusion criteria so there's no argument about cherry-picking studies because this has been discussed prior to search and analysis.

- Intervention

Delivery of a specific psychotherapeutic modality (IPT, etc) by a non-HCP. While I can agree there are shared core concepts between different modalities of psychotherapy, you absolutely have to define what you mean by psychotherapy because your dataset containing a column labelled ‘therapyness’ (high/medium/low) undermines a lot of confidence, as do some of the interventions you’ve included as meeting the bar for psychotherapy treatment. If you want to include studies which perhaps are not focussed on treating depression and might therefore involve other forms of therapy but still have benefit in alleviating depressive symptoms e.g. where the presenting complaint is trauma, the intervention might be EMDR (a specific therapy for PTSD) but the authors collected a number of outcome measures including symptom rating scales for anxiety and depression as secondary outcomes, it would be logical to stratify studies in this manner as a plan for analysis. I.e. psychotherapeutic intervention with evidence-base in relieving depressive symptoms (CBT, IPT, etc), psychotherapeutic intervention not specifically targeted at depressive symptoms (EMDR, MBT etc), with non-(psychotherapy) intervention as the control.

Several studies instead use non-psychotherapy as the intervention under study and this confusion seems to be down to papers describing them as having a ‘psychotherapeutic approach’ or being based on principles in any area of psychotherapy. This would cover almost anything as ‘psychotherapeutic’ as an adjective just means understanding people’s problems through their internal environment e.g. thoughts, feelings, behaviours and experiences. In my day-to-day work, I maintain a psychotherapeutic approach in patient interactions, but I do not sit down and deliver 14-week structured IPT. You can argue that generally having a supportive environment to discuss your problems with someone who is keen to hear them is equally beneficial to formal psychotherapy, but this leads to the obvious question of how you can use the idea of any intervention which sounds a bit ‘psychotherapy-y’ to justify the cost of training people to specifically deliver psychotherapy in a CEA from this data.

The fundamental lack of definition or understanding of these clinical terms leads to odd issues in some of the papers I clicked on i.e. Rojas et al (2007) compares a multicomponent group intervention involving lots of things but notably not delivery of any specific psychotherapy, to normal clinical care in a postnatal clinic. The next sentence describes part of normal clinical care to be providing ‘brief psychotherapeutic interventions’ – perhaps this is understood by non-clinicians as not highly ‘therapyish’ but this term is often used to describe short-term focussed CBT, or CBT-informed interventions. Not defining the intervention clearly means the control group contains patients receiving evidence-based psychotherapy of a specific modality and a treatment arm of no specific psychotherapy which is muddled by the MA.

- Comparison

As alluded to above, you need to be clear about what is an acceptable control and it’s simply not enough to state you are not sure what the ‘usual care’ is in research by Strongminds you have weighted so heavily. It can’t be then justified by an assumption mental health is neglected in LMICs so probably wouldn’t involve psychotherapy (with no citation). Especially as the definition of psychotherapy in this meta-analysis would deem someone visiting a pastor in church once a week as receiving psychotherapy. Without clearly defining the intervention, it's really difficult to understand what you are comparing against what.

- Outcome

This meta-analysis uses a range of symptom rating scales as acceptable outcome measures, favouring depression and anxiety rating scales, and scales measuring distress. This seems to be based on idea that these clusters of symptoms are highly adverse to wellbeing. This makes the analysis and discussion really confused, in my opinion, and seems to be a sign the analysis, expected findings, extrapolation to wellbeing and CEA was mixed into the methodology.

To me, the issue arises from not clearly defining the aim and inclusion/exclusion criteria. This meta-analysis could be looking at psychotherapy as a treatment for depression/depressive symptoms. This would acknowledge that depression is a psychiatric illness with congitive, psychological and biological symptoms (as captured by depression rating scales). As a clinical term, it is not just about 'negative affect' - low mood is not even required for a diagnosis as per ICD criteria. It absolutely does negatively affect wellbeing, as would any illness with unpleasant/distressing symptoms, but this therefore means generating some idea for how much patients' wellbeing improves from treatment has to be specific to depression. The subsequent CEA would then need to account for this and evaluate only psychotherapies with an evidence-base in depression. In the RCT design, I'd guess this is the rationale for a high PHQ cut-off - it's a proxy for relative certainty in a clinical diagnosis of depression (or at least a high burden of symptoms which may respond to depression treatments and therefore demonstrate a treatment effect); it's not supporting the idea that some general negative symptoms impacting a concept of wellbeing, short of depression, will likely benefit from specific psychotherapy to any degree of significance, and it would be an error to take this assumption and then further assume a linear relationship between PHQ and wellbeing/impairment.

If you are looking at depressive symptom reduction, you need to only include evaluation tools for depressive symptoms (PHQ, etc). You need to define which tools you would accept prior to the search and that these are validated for the population under study as you are using them in isolation - how mental illness is understood and presents is highly culturally-bound and these tools almost entirely developed outside of LMICs.

If, instead, you're looking at a range of measures you feel reflect poor mental health (including depression, anxiety and distress) in order to correlate this to a concept of wellbeing, these tools similarly have to be defined and validated. You also need to explain why some tools should be excluded, because this is unclear e.g. in Weiss et al, a study looking at survivors of torture and militant attacks in Iraq, the primary outcome measure was a trauma symptom scale (the HTQ), yet you've selected the secondary outcome measures of depression and anxiety symptom scores for inclusion. I would have assumed that reducing the highly distressing symptoms of PTSD in this group would be most relevant to a concept of wellbeing, yet that is not included in favour of the secondary measures. Including multiple outcome measures with no plan to stratify/subgroup per symptom cluster or disorder seems to accept double/triple counting participants who completed multiple outcome measures from the same intervention. Importantly, you can't then use this wide mix of various scales to make any comment on the benefits of psychotherapy for depression in improving wellbeing (as lots of the included scores are not measuring depression).

In both approaches, you do need to show it is accepted to pool these different rating scales to still answer your research question. It’s interesting to state you favour subjective symptom scores over functional scores (which are excluded), when both are well-established in evaluating psychotherapy. Other statements made by HLI suggest symptom rating scores include assesment of functioning - I've reproduced the PHQ-9 below for people to draw their own conclusions, but it's safe to say I disagree with this. It’s not clear to me if it’s understood functional scores are also commonly subjective measures, like the WSAS - patients are asked how rate how well they feel they are managing work activities, social activities etc. Ignoring functioning as a blanket rule seems to miss the concept of ‘insight’ in mental health, where people can struggle identifying symptoms as symptoms but are severely disabled due to an illness (this perhaps should also be considered in excluding scales completed by an informant or close relative, particularly thinking about studies involving children or more severe psychopathology). Incorporating functional scoring captures the holistic nature of psychotherapy, where perhaps people may still struggle with symptoms of anxiety/depression after treatment, but have made huge strides in being able to return to work. Again, you need to be clear why functional scores are excluded and be clear this was done when extrapolating findings to discussions of life satisfaction or wellbeing. This research has made a lot of assumptions in this regard that I don’t follow.

x. Grouping measures and relating this to wellbeing:

On that note – using a mean change in symptoms scores is a reasonable evaluation of psychotherapy as a concept if you are so inclined but I would strongly argue that this cannot be used in isolation to make any inference about how this correlates to wellbeing. As others have alluded to in this thread, symptom scores are not linear. To isolate depression as an example, this is deemed mild/moderate/severe based on the number of symptoms experienced, the presence of certain concerning symptoms (e.g. psychosis) and the degree of functional impact.

Measures like the PHQ-9 score the number of depressive symptoms present and how often they occur from 0 (not at all) to 3 (nearly every day) over the past two weeks:

- Little interest or pleasure in doing things?

- Feeling down, depressed or hopeless?

- Trouble falling or staying asleep, or sleeping too much?

- Feeling tired or having little energy?

- Poor appetite or overeating?

- Feeling bad about yourself - or that you are a failure or have let yourself or your family down?

- Trouble concentrating on things, such as reading the newspaper or watching television?

- Moving or speaking so slowly that other people have noticed? Or the opposite - being so fidgety or restless that you have been moving around a lot more than usual?

- Thoughts that you would be better off dead, or of hurting yourself in some way?

If you take the view that a symptom rating score has a linear relationship to 'negative affect' or suffering in depression, you would then imagine that the outcomes of PHQ-9 (no depression, mild, moderate, severe) would be regularly distributed in the 27-item score i.e. a score of 0-6 should be no depression, 7-13 mild depression, 14-20 moderate depression and 21-27 severe. This is not the case as the actual PHQ-9 scores are 0-4 no depression, 5-9 mild depression, 10-14 moderate, 15-19 moderately severe, 20-27 severe. This is because the symptoms asked about in the PHQ are diagnostic for depression – it’s not an attempt at trying to gather how happy or sad someone is on a scale from 0-27 (in fact 0 just indicates ‘no depression symptoms’, not happiness or fulfilment, and it's likely people with very serious depression will not be able to complete a PHQ-9). Hopefully it's clear from the PHQ-9 why the cut-offs are low and why the severity increases so sharply; the symptoms in question are highly indicative of pathology if occuring frequently. It’s also in the understanding that a PHQ-9 would be administered when there is clinical suspicion of depression to elicit severity or in evaluation of treatment (i.e. in some contexts, like bereavement, experiencing these symptoms would be considered normal, or if symptoms are better explained by another illness the PHQ is unhelpful) and it's not used for screening (vs the Edinburgh score for postnatal depression which is a screening tool and features heavily in included studies). Critically, it’s why you can’t assume it's valid to lump all symptom scales together, especially cross-disorders/symptom clusters as in this meta-analysis.

x. Search strategy

I feel this should go without saying, but once you’ve ironed out these issues to have a research question you could feasibly inform with meta-analysis, you then need to develop a search strategy and conduct a systematic review. It’s guaranteed that papers have been missed with the approach used here, and I’ve never read a peer-reviewed meta-analysis where a (10-hour) time constraint was used as part of this strategy. While I agree the funnel plot is funky, it’s likely reflecting the errors in not conducting this search systematically rather than assuming publication bias – it’s likely the highly cited papers etc were more easily found using this approach and therefore account for the p-clustering. If the search was a systematic review and there were objective inclusion/exclusion criteria and the funnel plot looked like that, you can make an argument for publication bias. As it stands, the outputs are only as good as the inputs i.e. you can't out-analyse a poor study design/methodology to produce reliable results.

Simply put, the most critical problem here is that without even getting into the problems with the data extraction I found, or the analysis as discussed in this thread, from this study design which doesn't seek to justify why any of these decisions were made, any analytic outputs are destined to be unreliable. How much of this was deliberate on the part of HLI can’t be determined as there is no possible way of replicating the search strategy they used (this is the reason to have a robust strategy as part of your study design). I think if you want to call this a back-of-napkin scoping review to generate some speculative numbers, you could describe what you found as there being early signals that psychotherapy could be more cost-effective than assumed and therefore there’s need to conduct a vigorous SR/MA. It perhaps may have been more useful in a shallow review to actually exclude the Strongminds study and evaluate existing research through the framework of (1) do the SM results make sense in the context of previous studies and (2) can we explain any differences in a narrative review. It seems instead this work generated figures which were treated as useful or reliable and fed into a CEA, which was further skewed by how this was discussed by HLI.

TL;DR

This is obviously very long and not going to be read in any detail on an online forum, but from the perspective of someone within this field, there seem to be a raft of problems with how this research was conducted and evaluated by HLI and EA. I’m not considered the Queen Overload of Psychiatry, I don’t have a PhD, but I suppose I'm trying to demonstrate that having a different background raises different questions, which seems particularly relevant if there is a recognition of the importance of peer-review (hopefully, I’m assuming, outside of EA literature). I’m also going to caveat this by saying I’ve not poured over HLI’s work, it’s just what immediately stood out to me, and haven’t made any attempt to cite my own knowledge derived from my practice – to me this is a post on a forum I’m not involved with rather than an ‘official’ attempt at peer review so I’m not holding myself to the same standard, just commenting in good faith.

I get the difficult position HLI are in with reputational salvage, but there is a similar risk to EA’s reputation if there are no checks in place given this has been accessible information for some time and did not raise questions earlier. While this might feel like Greg’s younger sister joining in to dunk on HLI, and I see from comments in this thread that perhaps criticism said passionately can be construed as hostile online, I don’t think this is anyone’s intent. Incredibly ironically given our genetic aversion to team sports, perhaps critique is intended as a fellow teammate begging a striker to get off the field when injured as they are hurting themselves and the team. Letting that player limp on is not being a supportive teammate. Personally, I hope these discussions drive discussions in HLI and EA which provide scope for growth.

In my unsolicited and unqualified opinion, I would advise withdrawing the CEA and drastically modifying the weight HLI puts on this work so it does not appear to be foundational to HLI as an organisation. Journals are encouraging the submission of meta-analysis study protocols for peer-review and publication (BMJPsych Open is one – I have acted as a peer reviewer for this journal to be transparent) in order to improve the quality of research. While conducting a whole SR/MA and publication takes time which could allow further loss of reputation, this is a quick way of acknowledging the issues here and taking concrete steps to rectify them. It’s not acceptable, to me, for the same people to offer a re-analysis or review this work because I’m sceptical this would not produce another flawed output and it seems there is a real need to involve expertise from the field of study (i.e. in formal peer review) at an earlier stage to right the ship.

Again, I do think the aims of HLI are important and I do wish them the best; and I’m interested to see how these discussions evolve in EA as it seems straying into a subject I’m passionate about. I come in peace and this feedback is genuinely meant constructively, so in the spirit of EA and younger-sibling disloyalty, I’m happy to offer HLI help above what’s already provided if they would like it.

[Edit for clarity mostly under 'outcomes' and 'grouping measures', corrected my horrid formatting/typos, and included the PHQ-9 for context. Kept my waffle and bad jokes for accountability, and was using the royal 'you' vs directing any statements at OP(!)]

Guy Raveh @ 2023-07-16T08:59 (+17)

Strongly upvoted for the explanation and demonstration of how important peer-review by subject matter experts is. I obviously can't evaluate either HLI's work or your review, but I think this is indeed a general problem of EA where the culture is, for some reason, aversive to standard practices of scientific publishing. This has to be rectified.

Jack Malde @ 2023-07-16T14:42 (+10)

I think it's because the standard practices of scientific publishing are very laborious and EA wants to be a bit more agile.

Having said that I strongly agree that more peer-review is called for in EA, even if we don't move all the way to the extreme of the academic world.

Madhav Malhotra @ 2023-08-03T20:28 (+8)

Out of curiosity @LondonGal, have you received any followups from HLI in response to your critique? I understand you might not be at liberty to share all details, so feel free to respond as you feel appropriate.

LondonGal @ 2023-08-04T21:10 (+4)

Nope, I've not heard from any current HLI members regarding this in public or private.

freedomandutility @ 2023-07-16T12:26 (+7)

Strongly upvoted.

My recommended next steps for HLI:

-

Redo the meta-analysis with a psychiatrist involved in the design, and get external review before publishing.

-

Have some sort of sensitivity analysis which demonstrates to donors how the effect size varies based on different weightings of the StrongMinds studies.

(I still strongly support funding HLI, not least so they can actually complete these recommended next steps)

John Salter @ 2023-07-16T12:59 (+4)

A professional psychotherapy researcher, or even just a psychotherapist, would be more appropriate than a psychiatrist no?

LondonGal @ 2023-07-16T14:42 (+28)

[Speaking from a UK perspective with much less knowledge of non-medical psychotherapy training]

I think the importance is having a strong mental health research background, particularly in systematic review and meta-analysis. If you have an expert in this field then the need for clinical experience becomes less important (perhaps, depends on HLI's intended scope).

It's fair to say psychology and psychiatry do commonly blur boundaries with psychotherapy as there are different routes of qualification - it can be with a PhD through a psychology/therapy pathway, or there is a specialism in psychotherapy that can be obtained as part of psychiatry training (a bit like how neurologists are qualified through specialism in internal medicine training). Psychotherapists tend to be qualified in specific modalities in order to practice them independently e.g. you might achieve accreditation in psychoanalytic psychotherapy, etc. There are a vast number of different professionals (me included, during my core training in psychiatry) who deliver psychotherapy under supervision of accredited practitioners so the definition of therapist is blurry.

Psychotherapy is similarly researched through the perspective of delivering psychotherapy which perhaps has more of a psychology focus, and as a treatment of various psychiatric illnesses (+/- in combination or comparison with medication, or novel therapies like psychadelics) which perhaps is closer to psychiatric research. Diagnosis of psychiatric illnesses like depression and directing treatment tends to remain the responsibility of doctors (psychiatrists or primary care physicians), and so psychiatry training requires the development of competencies in psychotherapy, even if delivery of psychotherapy does not always form the bulk of day-to-day practice, as it relates to formulating treatment plans for patients with psychiatric illness.

The issues I raise relate to the clinical presentation of depression as it pertains to impairment/wellbeing, diagnosis of depression, symptom rating scales, psychotherapy as a defined treatment, etc.; as well as the wide range of psychopathology captured in the dataset. My feeling is the breadth of this would benefit from a background in psychiatry for the assumptions I made about HLI's focus of the meta-analysis. However, if the importance is the depth of understanding IPT as an intervention, or perhaps the hollistic outcomes of psychotherapy particularly related to young women/girls in LMICs, then you might want a psychotherapist (PhD or psychiatrist) working with accreditation in the modality or with the population of interest. If you found someone who regularly publishes systematic reviews and meta-analyses of psychotherapy efficacy then that would probably trump both regardless of clinical background. Or perhaps all three is best.

You're both right to clarify this, though - I was giving my opinion from my background in clinical/academic psychiatry and so I talk about it a lot! When I mention the field of study etc, I meant mental health research more broadly given it depends on HLI's aims/scope to know what specific area this would be.

[Edit - Sorry, I've realised my lack of digging into the background of HLI members/contributors to this research could render the above highly offensive if there are individuals from this field on staff, and also makes me appear extremely arrogant. For clarity, it's possible all of my concerns were actually fully-rationalised, deliberate choices by HLI that I've not understood from my quick sense-check, or I might disagree with but are still valid.

[However, my impression from the work, in particular the design and methodology, is that there is a lack of psychiatric and/or psychotherapy knowledge (given the questions I had from a clinical perspective); and a lack of confidence in systematic review and meta-analysis from how far this deviates from Cochrane/PRISMA that I was trying to explain in more accessible terms in my comment without being exhaustive. It's possible contributors to this work did have experience in these areas but were not represented in the write-up, or not involved at the appropriate times in the work, etc. I'm not going to seek out whether or not that is the case as I think it would make this personal given the size of the organisation, and I'm worried that if I check I might find a psychotherapy professor on staff I've now crossed (jk ;-)).

[It's interesting to me either way, as both seem like problems - HLI not identifying they lacked appropriate skills to conduct this research, or seemingly not employing those with the relevant skills appropriately to conduct or communicate it - and it has relevance outside of this particular meta-analysis in the consideration of further outputs from HLI, or evaluation of orgs by EA. In any case, peer-review offers reassurance to the wider EA community that external subject-matter expertise has been consulted in whatever field of interest (with the additional benefit of shutting people like me down very quickly), and provides an opportunity for better research if deficits identified from peer-review suggest skills need to be reallocated or additional skills sought in order to meet a good standard.]

JamesSnowden @ 2023-07-07T23:28 (+123)

>Since then, all the major actors in effective altruism’s global health and wellbeing space seem to have come around to it (e.g., see these comments by GiveWell, Founders Pledge, Charity Entrepreneurship, GWWC, James Snowden).

I don't think this is an accurate representation of the post linked to under my name, which was largely critical.

alex lawsen (previously alexrjl) @ 2023-07-09T06:23 (+47)

[Speaking for myself here]

I also thought this claim by HLI was misleading. I clicked several of the links and don't think James is the only person being misrepresented. I also don't think this is all the "major actors in EA's GHW space" - TLYCS, for example, meet reasonable definitions of "major" but their methodology makes no mention of wellbys

MichaelPlant @ 2023-07-10T12:08 (+4)

Hello Alex,

Reading back on the sentence, it would have been better to put 'many' rather than 'all'. I've updated it accordingly. TLYCS don't mention WELLBYs, but they did make the comment "we will continue to rely heavily on the research done by other terrific organizations in this space, such as GiveWell, Founders Pledge, Giving Green, Happier Lives Institute [...]".

It's worth restating the positives. A number of organisations have said that they've found our research useful. Notably, see the comments by Matt Lerner (Research Director, Founders Pledge) below and also those from Elie Hassenfield (CEO, GiveWell), which we included in footnote 3 above. If it wasn't for HLI's work pioneering the subjective wellbeing approach and the WELLBY, I doubt these would be on the agenda in effective altruism.

alex lawsen (previously alexrjl) @ 2023-07-10T16:22 (+7)

My comment wasn't about whether there are any positives in using WELLBYs (I think there are), it was about whether I thought that sentence and set of links gave an accurate impression. It sounds like you agree that it didn't, given you've changed the wording and removed one of the links. Thanks for updating it.

I think there's room to include a little more context around the quote from TLYCs.

In short, we do not seek to duplicate the excellent work of other charity evaluators. Our approach is meant to complement that work, in order to expand the list of giving opportunities for donors with strong preferences for particular causes, geographies, or theories of change. Indeed, we will continue to rely heavily on the research done by other terrific organizations in this space, such as GiveWell, Founders Pledge, Giving Green, Happier Lives Institute, Charity Navigator, and others to identify candidates for our recommendations, even as we also assess them using our own evaluation framework.

We also fully expect to continue recommending nonprofits that have been held to the highest evidentiary standards, such as GiveWell’s top charities. For our current nonprofit recommendations that have not been evaluated at that level of rigor, we have already begun to conduct in-depth reviews of their impact. Where needed, we will work with candidate nonprofits to identify effective interventions and strengthen their impact evaluation approaches and metrics. We will also review our charity list periodically and make sure our recommendations remain relevant and up to date.

MichaelPlant @ 2023-07-10T11:40 (+14)

Hello James. Apologies, I've removed your name from the list.

To explain why we included it, although the thrust of your post was to critically engage with our research, the paragraph was about the use of the SWB approach for evaluating impact, which I believed you were on board with. In this sense, I put you in the same category as GiveWell: not disagreeing about the general approach, but disagreeing about the numbers you get when you use it.

JamesSnowden @ 2023-07-10T19:21 (+14)

Thanks for editing Michael. Fwiw I am broadly on board with swb being a useful framework to answer some questions. But I don’t think I’ve shifted my opinion on that much so “coming round to it” didn’t resonate

Gregory Lewis @ 2023-07-08T07:33 (+112)

[Own views]

- I think we can be pretty sure (cf.) the forthcoming strongminds RCT (the one not conducted by Strongminds themselves, which allegedly found an effect size of d = 1.72 [!?]) will give dramatically worse results than HLI's evaluation would predict - i.e. somewhere between 'null' and '2x cash transfers' rather than 'several times better than cash transfers, and credibly better than GW top charities.' [I'll donate 5k USD if the Ozler RCT reports an effect size greater than d = 0.4 - 2x smaller than HLI's estimate of ~ 0.8, and below the bottom 0.1% of their monte carlo runs.]

- This will not, however, surprise those who have criticised the many grave shortcomings in HLI's evaluation - mistakes HLI should not have made in the first place, and definitely should not have maintained once they were made aware of them. See e.g. Snowden on spillovers, me on statistics (1, 2, 3, etc.), and Givewell generally.

- Among other things, this would confirm a) SimonM produced a more accurate and trustworthy assessment of Strongminds in their spare time as a non-subject matter expert than HLI managed as the centrepiece of their activity; b) the ~$250 000 HLI has moved to SM should be counted on the 'negative' rather than 'positive' side of the ledger, as I expect this will be seen as a significant and preventable misallocation of charitable donations.

- Regrettably, it is hard to square this with an unfortunate series of honest mistakes. A better explanation is HLI's institutional agenda corrupts its ability to conduct fair-minded and even-handed assessment for an intervention where some results were much better for their agenda than others (cf.). I am sceptical this only applies to the SM evaluation, and I am pessimistic this will improve with further financial support.

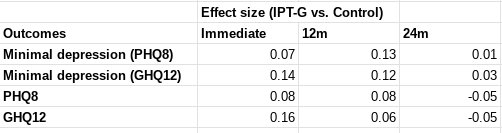

Gregory Lewis @ 2024-08-03T15:19 (+148)

An update:

I'll donate 5k USD if the Ozler RCT reports an effect size greater than d = 0.4 - 2x smaller than HLI's estimate of ~ 0.8, and below the bottom 0.1% of their monte carlo runs.

This RCT (which should have been the Baird RCT - my apologies for mistakenly substituting Sarah Baird with her colleague Berk Ozler as first author previously) is now out.

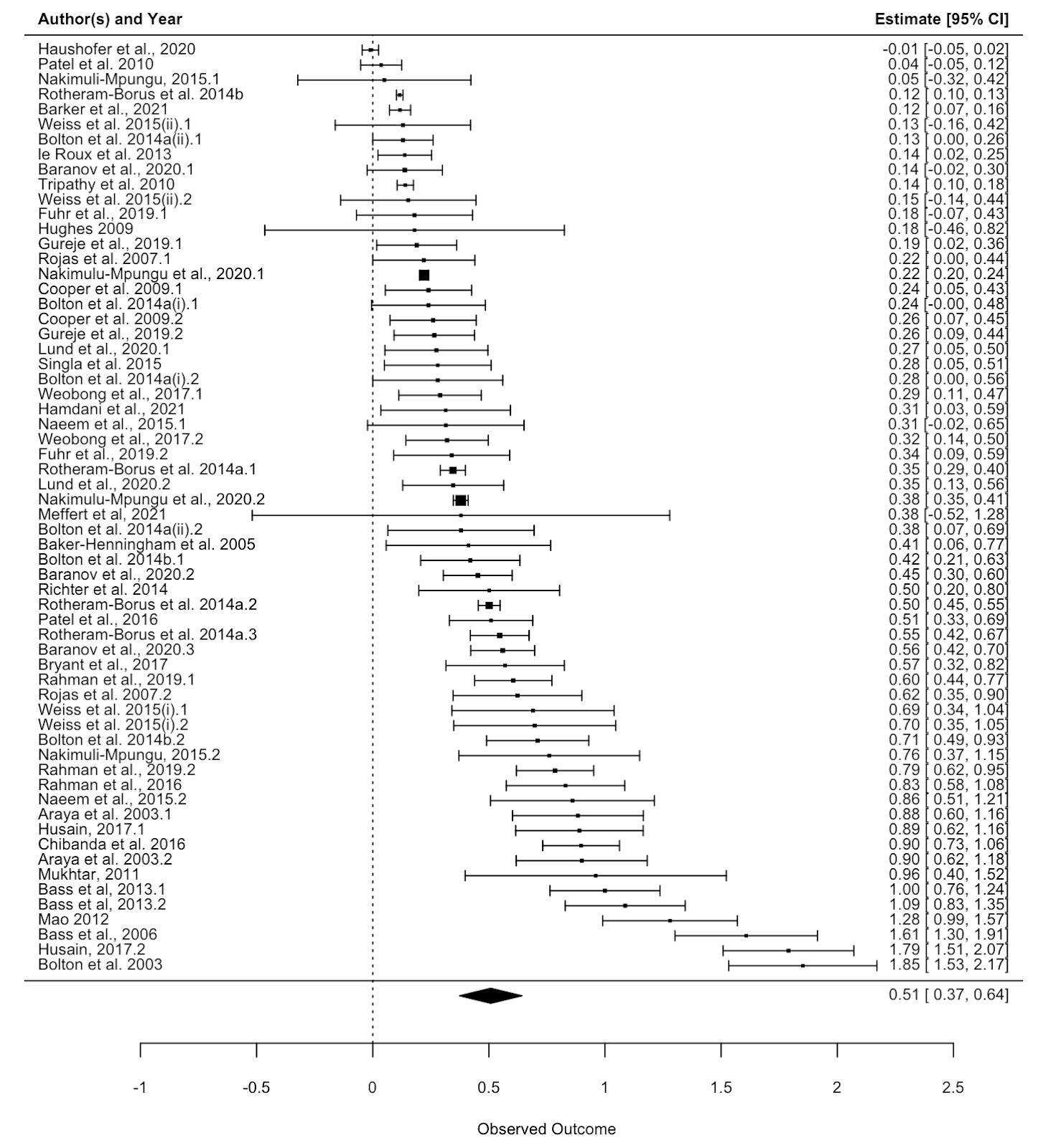

I was not specific on which effect size would count, but all relevant[1] effect sizes reported by this study are much lower than d = 0.4 - around d = 0.1. I roughly[2] calculate the figures below.

In terms of "SD-years of depression averted" or similar, there are a few different ways you could slice it (e.g. which outcome you use, whether you linearly interpolate, do you extend the effects out to 5 years, etc). But when I play with the numbers I get results around 0.1-0.25 SD-years of depression averted per person (as a sense check, this lines up with an initial effect of ~0.1, which seems to last between 1-2 years).

These are indeed "dramatically worse results than HLI's [2021] evaluation would predict". They are also substantially worse than HLI's (much lower) updated 2023 estimates of Strongminds. The immediate effects of 0.07-0.16 are ~>5x lower than HLI's (2021) estimate of an immediate effect of 0.8; they are 2-4x lower than HLI's (2023) informed prior for Strongminds having an immediate effect of 0.39. My calculations of the total effect over time from Baird et al. of 0.1-0.25 SD-years of depression averted are ~10x lower than HLI's 2021 estimate of 1.92 SD-years averted, and ~3x lower than their most recent estimate of ~0.6.

Baird et al. also comment on the cost-effectiveness of the intervention in their discussion (p18):

Unfortunately, the IPT-G impacts on depression in this trial are too small to pass a

cost-effectiveness test. We estimate the cost of the program to have been approximately USD 48 per individual offered the program (the cost per attendee was closer to USD 88). Given impact estimates of a reduction in the prevalence of mild depression of 0.054 pp for a period of one year, it implies that the cost of the program per case of depression averted was nearly USD 916, or 2,670 in 2019 PPP terms. An oft-cited reference point estimates that a health intervention can be considered cost-effective if it costs approximately one to three times the GDP per capita of the relevant country per Disability Adjusted Life Year (DALY) averted (Kazibwe et al., 2022; Robinson et al., 2017). We can then convert a case of mild depression averted into its DALY equivalent using the disability weights calculated for the Global Burden of Disease, which equates one year of mild depression to 0.145 DALYs (Salomon et al., 2012, 2015). This implies that ultimately the program cost USD PPP (2019) 18,413 per DALY averted. Since Uganda had a GDP per capita USD PPP (2019) of 2,345, the IPT-G intervention cannot be considered cost-effective using this benchmark.

I'm not sure anything more really needs to be said at this point. But much more could be, and I fear I'll feel obliged to return to these topics before long regardless.

- ^

The report describes the outcomes on p.10:

The primary mental health outcomes consist of two binary indicators: (i) having a Patient Health Questionnaire 8 (PHQ-8) score ≤ 4, which is indicative of showing no or minimal depression (Kroenke et al., 2009); and (ii) having a General Health Questionnaire 12 (GHQ-12) score < 3, which indicates one is not suffering from psychological distress (Goldberg and Williams, 1988). We supplement these two indicators with five secondary outcomes: (i) The PHQ-8 score (range: 0-24); (ii) the GHQ-12 score (0-12); (iii) the score on the Rosenberg self-esteem scale (0-30) (Rosenberg, 1965); (iv) the score on the Child and Youth Resilience Measure-Revised (0-34) (Jefferies et al., 2019); and (v) the locus of control score (1-10). The discrete PHQ-8 and GHQ-12 scores allow the assessment of impact on the severity of distress in the sample, while the remaining outcomes capture several distinct dimensions of mental health (Shah et al., 2024).

Measurements were taken following treatment completion ('Rapid resurvey'), then at 12m and 24m thereafer (midline and endline respectively).

I use both primary indicators and the discrete values of the underlying scores they are derived from. I haven't carefully looked at the other secondary outcomes nor the human capital variables, but besides being less relevant, I do not think these showed much greater effects.

- ^

I.e. I took the figures from Table 6 (comparing IPT-G vs. control) for these measures and plugged them into a webtool for Cohen's h or d as appropriate. This is rough and ready, although my calculations agree with the effect sizes either mentioned or described in text. They also pass an 'eye test' of comparing them to the cmfs of the scores in figure 3 - these distributions are very close to one another, consistent with small-to-no effect (one surprising result of this study is IPT-G + cash lead to worse outcomes than either control or IPT-G alone):

One of the virtues of this study is it includes a reproducibility package, so I'd be happy to produce a more rigorous calculation directly from the provided data if folks remain uncertain.

bruce @ 2024-08-04T09:18 (+41)

My view is that HLI[1], GWWC[2], Founders Pledge[3], and other EA / effective giving orgs that recommend or provide StrongMinds as an donation option should ideally at least update their page on StrongMinds to include relevant considerations from this RCT, and do so well before Thanksgiving / Giving Tuesday in Nov/Dec this year, so donors looking to decide where to spend their dollars most cost effectively can make an informed choice.[4]

- ^

Listed as a top recommendation

- ^

Not currently a recommendation, (but to included as an option to donate)

- ^

Currently tagged as an "active recommendation"

- ^

Acknowledging that HLI's current schedule is "By Dec 2024", though this may only give donors 3 days before Giving Tuesday.

NickLaing @ 2024-08-04T13:02 (+10)

Thanks Bruce, would you still think this if Strongminds ditched their adolescent programs as a result of this study and continued with their core groups with older women?

bruce @ 2024-08-04T18:04 (+19)

Yes, because:

1) I think this RCT is an important proxy for StrongMinds (SM)'s performance 'in situ', and worth updating on - in part because it is currently the only completed RCT of SM. Uninformed readers who read what is currently on e.g. GWWC[1]/FP[2]/HLI website might reasonably get the wrong impression of the evidence base behind the recommendation around SM (i.e. there are no concerns sufficiently noteworthy to merit inclusion as a caveat). I think the effective giving community should have a higher bar for being proactively transparent here - it is much better to include (at minimum) a relevant disclaimer like this, than to be asked questions by donors and make a claim that there wasn't capacity to include.[3]

2) If a SM recommendation is justified as a result of SM's programme changes, this should still be communicated for trust building purposes (e.g. "We are recommending SM despite [Baird et al RCT results], because ...), both for those who are on the fence about deferring, and for those who now have a reason to re-affirm their existing trust on EA org recommendations.[4]

3) Help potential donors make more informed decisions - for example, informed readers who may be unsure about HLI's methodology and wanted to wait for the RCT results should not have to go search this up themselves or look for a fairly buried comment thread on a post from >1 year ago in order to make this decision when looking at EA recommendations / links to donate - I don't think it's an unreasonable amount of effort compared to how it may help. This line of reasoning may also apply to other evaluators (e.g. GWWC evaluator investigations).[5]

- ^

GWWC website currently says it only includes recommendations after they review it through their Evaluating Evaluators work, and their evaluation of HLI did not include any quality checks of HLI's work itself nor finalise a conclusion. Similarly, they say: "we don't currently include StrongMinds as one of our recommended programs but you can still donate to it via our donation platform".

- ^

Founders Pledge's current website says:

We recommend StrongMinds because IPT-G has shown significant promise as an evidence-backed intervention that can durably reduce depression symptoms. Crucial to our analysis are previous RCTs

- ^

I'm not suggesting at all that they should have done this by now, only ~2 weeks after the Baird RCT results were made public. But I do think three months is a reasonable timeframe for this.

- ^

If there was an RCT that showed malaria chemoprevention cost more than $6000 per DALY averted in Nigeria (GDP/capita * 3), rather than per life saved (ballpark), I would want to know about it. And I would want to know about it even if Malaria Consortium decided to drop their work in Nigeria, and EA evaluators continued to recommend Malaria Consortium as a result. And how organisations go about communicating updates like this do impact my personal view on how much I should defer to them wrt charity recommendations.

- ^

Of course, based on HLI's current analysis/approach, the ?disappointing/?unsurprising result of this RCT (even if it was on the adult population) would not have meaningfully changed the outcome of the recommendation, even if SM did not make this pivot (pg 66):

Therefore, even if the StrongMinds-specific evidence finds a small total recipient effect (as we present here as a placeholder), and we relied solely on this evidence, then it would still result in a cost-effectiveness that is similar or greater than that of GiveDirectly because StrongMinds programme is very cheap to deliver.

And while I think this is a conversation that has already been hashed out enough on the forum, I do think the point stands - potential donors who disagree with or are uncertain about HLI's methodology here would benefit from knowing the results of the RCT, and it's not an unreasonable ask for organisations doing charity evaluations / recommendations to include this information.

- ^

Based on Nigeria's GDP/capita * 3

- ^

Acknowledging that this is DALYs not WELLBYs! OTOH, this conclusion is not the GiveWell or GiveDirectly bar, but a ~mainstream global health cost-effectiveness standard of ~3x GDP per capita per DALY averted (in this case, the ~$18k USD PPP/DALY averted of SM is below the ~$7k USD PPP/DALY bar for Uganda)

NickLaing @ 2024-08-04T19:31 (+4)

Nice one Bruce. I think I agree that it should be communicated like you say for reasons 2 and 3

I don't think this is a good proxy for their main programs though, as this RCT looks a very different thing than their regular programming. I think other RCTs on group therapy in adult women from the region are better proxies than this study on adolescents.

Why do you think it's a particularly good proxy? In my mind the same org doing a different treatment, (that seems to work but only a little for a short ish time) with many similarities to their regular treatment of course.

Like I said a year ago, I would have much rather this has been an RCT on Strongminds regular programs rather than this one on a very different program for adolescents. I understand though that "does similar group psychotherapy also work for adolescents" is a more interesting question from a researcher's perspective, although less useful for all of us deciding just how good regular StrongMinds group psychotherapy is.

bruce @ 2024-08-04T20:18 (+6)

It sounds like you're interpreting my claim to be "the Baird RCT is a particularly good proxy (or possibly even better than other RCTs on group therapy in adult women) for the SM adult programme effectiveness", but this isn't actually my claim here; and while I think one could reasonably make some different, stronger (donor-relevant) claims based on the discussions on the forum and the Baird RCT results, mine are largely just: "it's an important proxy", "it's worth updating on", and "the relevant considerations/updates should be easily accessible on various recommendation pages". I definitely agree that an RCT on the adult programme would have been better for understanding the adult programme.

(I'll probably check out of the thread here for now, but good chatting as always Nick! hope you're well)

NickLaing @ 2024-08-05T04:16 (+2)

Nice one 100% agree no need to check in again!

NickLaing @ 2024-08-04T13:00 (+13)

Thanks for this Gregory, I think it's an important result and have updated my views. I'm not sure why HLI were so optimistic about this. I have a few comments here.

- This study was performed on adolescents, which is not the core group of women that Strong Minds and other group IPT programs treat. This study might update me slightly negatively against the effectof their core programming with groups of older women but not by much.

As The study said, "this marked the first time SMU (i) delivered therapy to out-of-school adolescent females, (ii) used youth mentors, and (iii) delivered therapy through a partner organization."

This result then doesn't surprise me as (high uncertainty) I think it's generally harder to move the needle with adolescent mental health than with adults.

-

The therapy still worked, even though the effect sizes were much smaller than other studies and were not cost effective.

-

As you've said before, f this kind of truly independent research was done on a lot of interventions, the results might not look nearly as good as the original studies.

-

I think Strongminds should probably stop their adolescent programs based on this study. Why keep doing it, when your work with adult women currently seems far more cost effective?

-

Even with the Covid caveat, I'm stunned at the null/negative effect of the cash transfer arm. Interesting stuff and not sure what to make of it.

-

I would still love a similar independent study on the regular group IPT programs with older women, and these RCTs should be pretty cheap on the scale of things, I doubt we'll get that though as it will probably seen as being too similar and not interesting enough for researchers which is fair enough.

MichaelPlant @ 2023-07-10T14:06 (+65)

Hi Greg,

Thanks for this post, and for expressing your views on our work. Point by point:

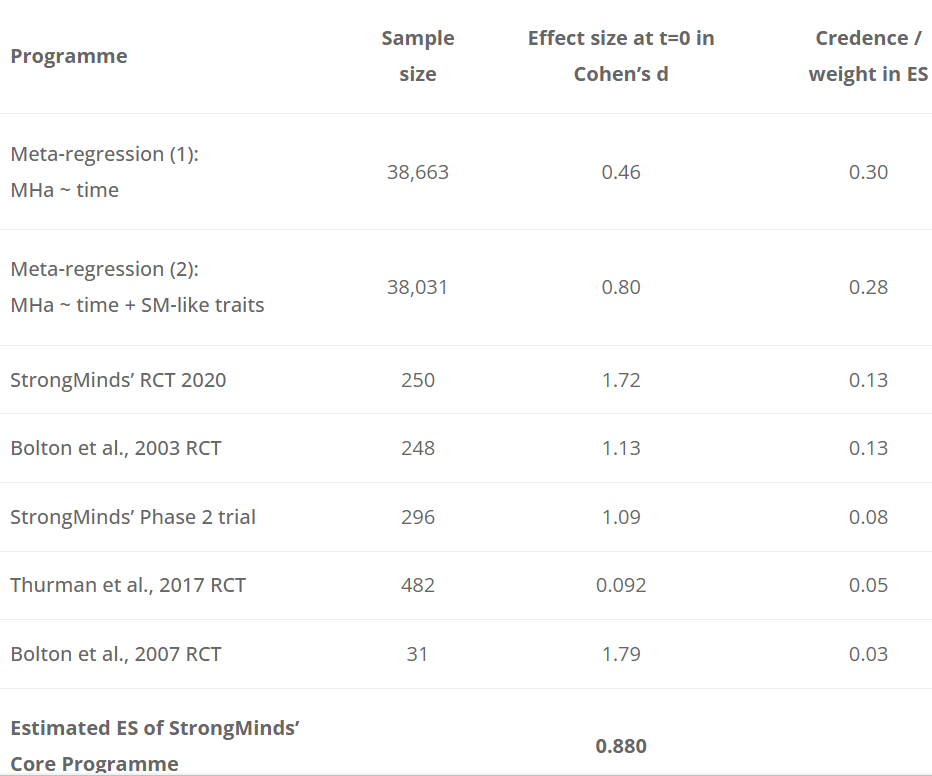

- I agree that StrongMinds' own study had a surprisingly large effect size (1.72), which was why we never put much weight on it. Our assessment was based on a meta-analysis of psychotherapy studies in low-income countries, in line with academic best practice of looking at the wider sweep of evidence, rather than relying on a single study. You can see how, in table 2 below, reproduced from our analysis of StrongMinds, StrongMinds' own studies are given relatively little weight in our assessment of the effect size, which we concluded was 0.82 based on the available data. Of course, we'll update our analysis when new evidence appears and we're particularly interested in the Ozler RCT. However, we think it's preferable to rely on the existing evidence to draw our conclusions, rather than on forecasts of as-yet unpublished work. We are preparing our psychotherapy meta-analysis to submit it for academic peer review so it can be independently evaluated but, as you know, academia moves slowly.

- We are a young, small team with much to learn, and of course, we'll make mistakes. But, I wouldn't characterise these as 'grave shortcomings', so much as the typical, necessary, and important back and forth between researchers. A claims P, B disputes P, A replies to B, B replies to A, and so it goes on. Even excellent researchers overlook things: GiveWell notably awarded us a prize for our reanalysis of their deworming research. We've benefitted enormously from the comments we've got from others and it shows the value of having a range of perspectives and experts. Scientific progress is the result of productive disagreements.

- I think it's worth adding that SimonM's critique of StrongMinds did not refer to our meta-analytic work, but focused on concerns about StrongMinds own study and analysis done outside HLI. As I noted in 1., we share the concerns about the earlier StrongMinds study, which is why we took the meta-analytic approach. Hence, I'm not sure SimonM's analysis told us much, if anything, we hadn't already incorporated. With hindsight, I think we should have communicated far more prominently how small a part StrongMinds' own studies played in our analysis, and been quicker off the mark to reply to SimonM's post (it came out during the Christmas holidays and I didn't want to order the team back to their (virtual) desks). Naturally, if you aren’t convinced by our work, you will be sceptical of our recommendations.

- You suggest we are engaged in motivated reasoning, setting out to prove what we already wanted to believe. This is a challenging accusation to disprove. The more charitable and, I think, the true explanation is that we had a hunch about something important being missed and set out to do further research. We do complex interdisciplinary work to discover the most cost-effective interventions for improving the world. We have done this in good faith, facing an entrenched and sceptical status quo, with no major institutional backing or funding. Naturally, we won’t convince everyone – we’re happy the EA research space is a broad church. Yet, it’s disheartening to see you treat us as acting in bad faith, especially given our fruitful interactions, and we hope that you will continue to engage with us as our work progresses.

Table 2.

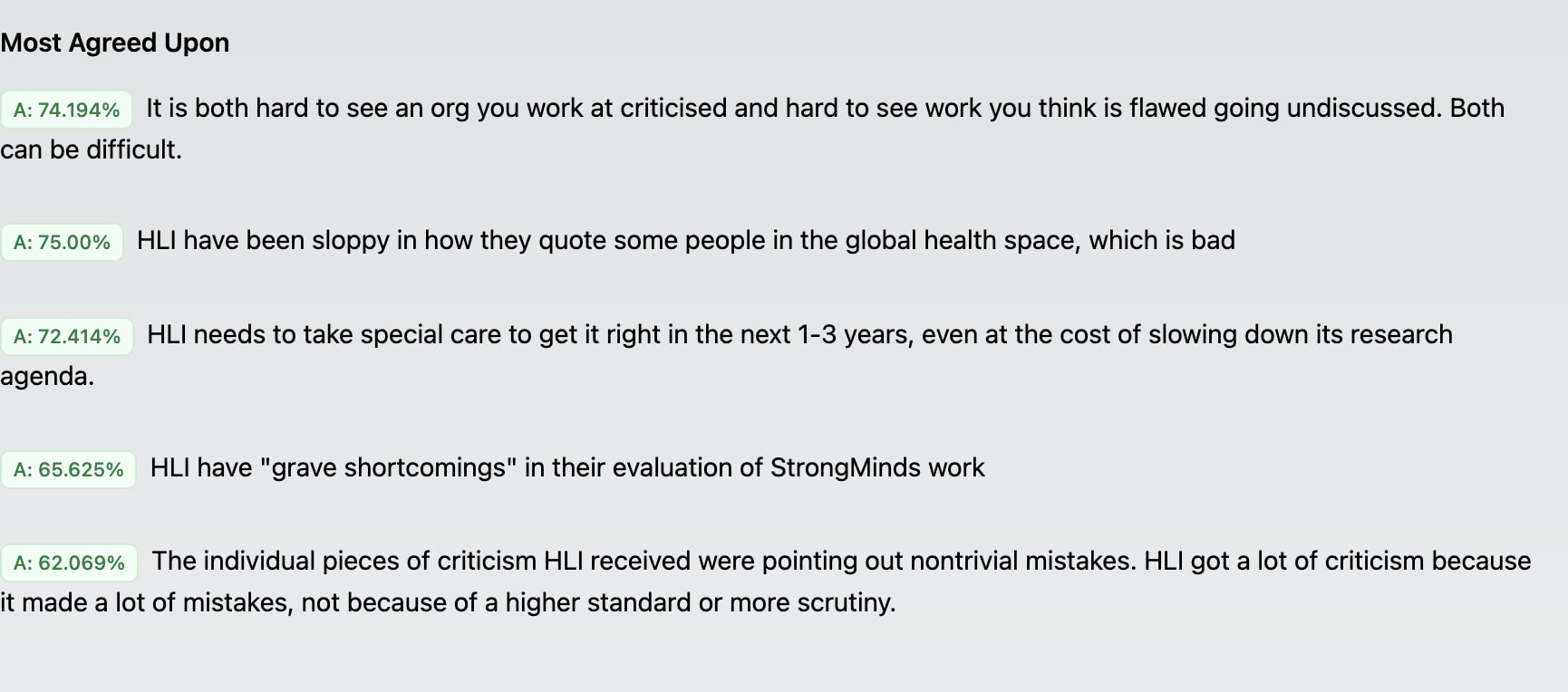

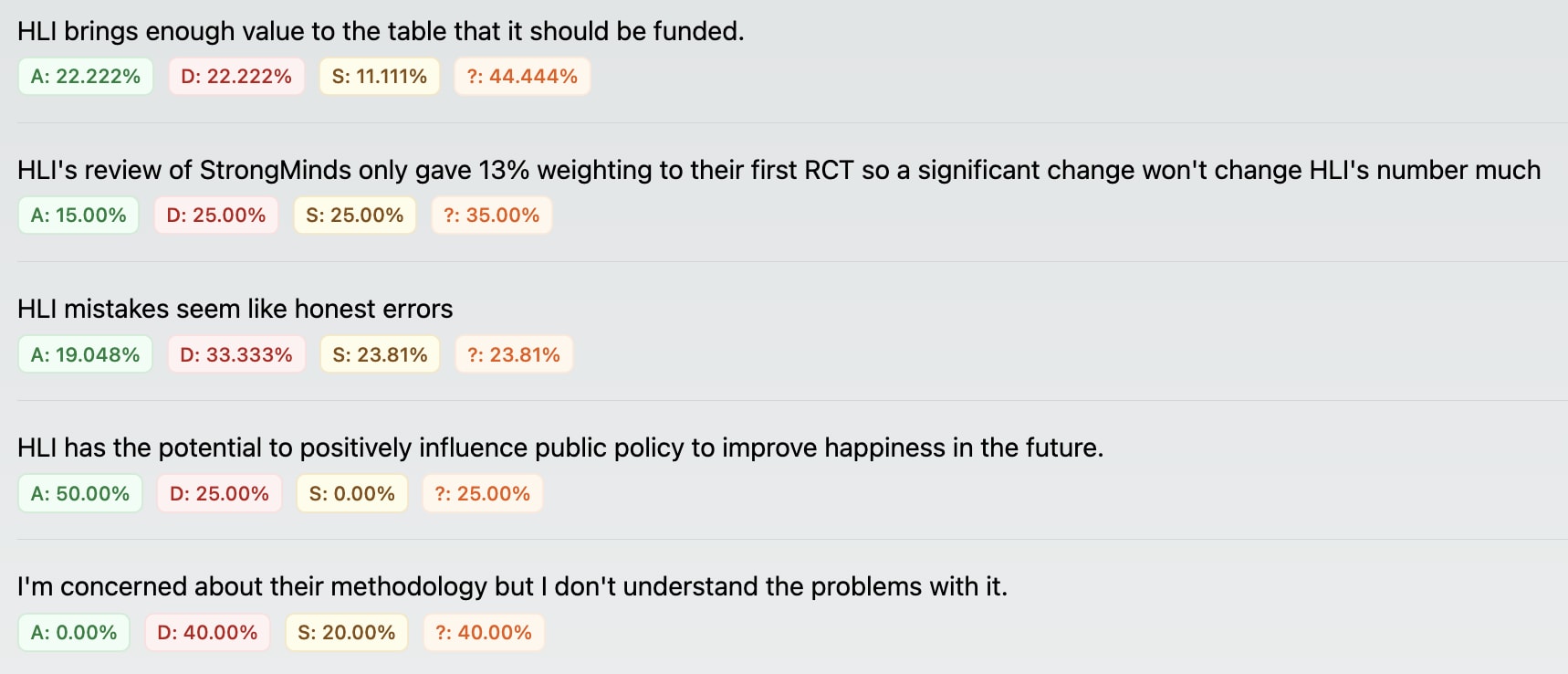

Gregory Lewis @ 2023-07-13T09:19 (+136)

Hello Michael,

Thanks for your reply. In turn:

1:

HLI has, in fact, put a lot of weight on the d = 1.72 Strongminds RCT. As table 2 shows, you give a weight of 13% to it - joint highest out of the 5 pieces of direct evidence. As there are ~45 studies in the meta-analytic results, this means this RCT is being given equal or (substantially) greater weight than any other study you include. For similar reasons, the Strongminds phase 2 trial is accorded the third highest weight out of all studies in the analysis.

HLI's analysis explains the rationale behind the weighting of "using an appraisal of its risk of bias and relevance to StrongMinds’ present core programme". Yet table 1A notes the quality of the 2020 RCT is 'unknown' - presumably because Strongminds has "only given the results and some supporting details of the RCT". I don't think it can be reasonable to assign the highest weight to an (as far as I can tell) unpublished, not-peer reviewed, unregistered study conducted by Strongminds on its own effectiveness reporting an astonishing effect size - before it has even been read in full. It should be dramatically downweighted or wholly discounted until then, rather than included at face value with a promise HLI will followup later.

Risk of bias in this field in general is massive: effect sizes commonly melt with improving study quality. Assigning ~40% of a weighted average of effect size to a collection of 5 studies, 4 [actually 3, more later] of which are (marked) outliers in effect effect, of which 2 are conducted by the charity is unreasonable. This can be dramatically demonstrated from HLI's own data:

One thing I didn't notice last time I looked is HLI did code variables on study quality for the included studies, although none of them seem to be used for any of the published analysis. I have some good news, and some very bad news.

The good news is the first such variable I looked at, ActiveControl, is a significant predictor of greater effect size. Studies with better controls report greater effects (roughly 0.6 versus 0.3). This effect is significant (p = 0.03) although small (10% of the variance) and difficult - at least for me - to explain: I would usually expect worse controls to widen the gap between it and the intervention group, not narrow it. In any case, this marker of study quality definitely does not explain away HLI's findings.

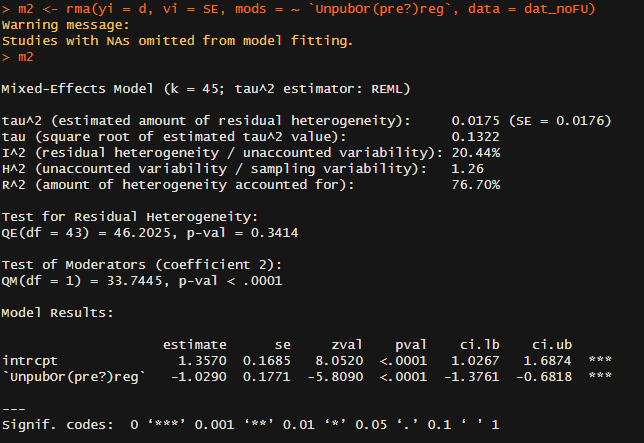

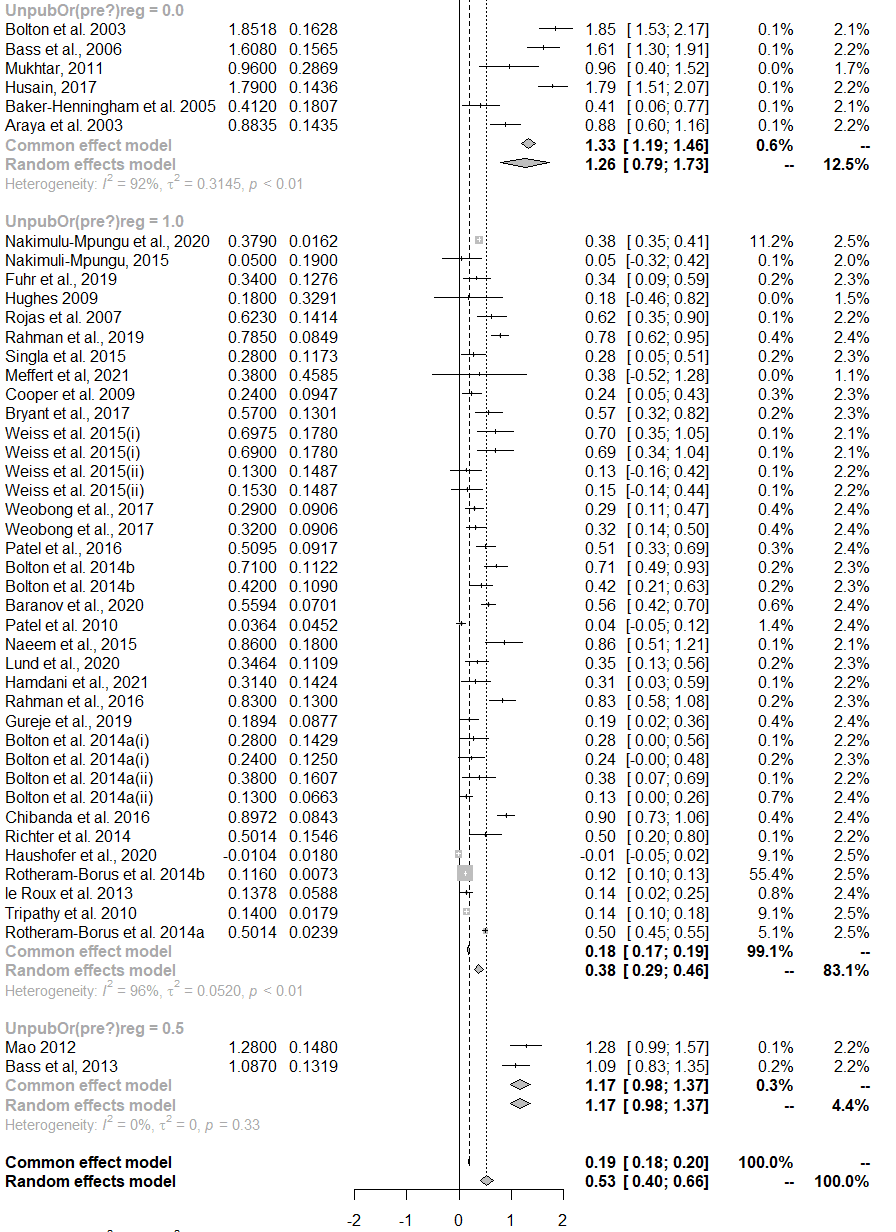

The second variable I looked at was 'UnpubOr(pre?)reg'.[1] As far as I can tell, coding 1 means something like 'the study was publicly registered' and 0 means it wasn't (I'm guessing 0.5 means something intermediate like retrospective registration or similar) - in any case, this variable correlates extremely closely (>0.95) to my own coding of whether a study mentions being registered or not after reviewing all of them myself. If so, using it as a moderator makes devastating reading:[2]

To orientate: in 'Model results' the intercept value gives the estimated effect size when the 'unpub' variable is zero (as I understand it, ~unregistered studies), so d ~ 1.4 (!) for this set of studies. The row below gives the change in effect if you move from 'unpub = 0' to 'unpub = 1' (i.e. ~ registered vs. unregistered studies): this drops effect size by 1, so registered studies give effects of ~0.3. In other words, unregistered and registered studies give dramatically different effects: study registration reduces expected effect size by a factor of 3. [!!!]

The other statistics provided deepen the concern. The included studies have a very high level of heterogeneity (~their effect sizes vary much more than they should by chance). Although HLI attempted to explain this variation with various meta-regressions using features of the intervention, follow-up time, etc., these models left the great bulk of the variation unexplained. Although not like-for-like, here a single indicator of study quality provides compelling explanation for why effect sizes differ so much: it explains three-quarters of the initial variation.[3]

This is easily seen in a grouped forest plot - the top group is the non registered studies, the second group the registered ones:

This pattern also perfectly fits the 5 pieces of direct evidence: Bolton 2003 (ES = 1.13), Strongminds RCT (1.72), and Strongminds P2 (1.09) are, as far as I can tell, unregistered. Thurman 2017 (0.09) was registered. Bolton 2007 is also registered, and in fact has an effect size of ~0.5, not 1.79 as HLI reports.[4]

To be clear, I do not think HLI knew of this before I found it out just now. But results like this indicate i) the appraisal of the literature in this analysis gravely off-the-mark - study quality provides the best available explanation for why some trials report dramatically higher effects than others; ii) the result of this oversight is a dramatic over-estimation of likely efficacy of Strongminds (as a ready explanation for the large effects reported in the most 'relevant to strongminds' studies is that these studies were not registered and thus prone to ~200%+ inflation of effect size); iii) this is a very surprising mistake for a diligent and impartial evaluator to make: one would expect careful assessment of study quality - and very sceptical evaluation where this appears to be lacking - to be foremost, especially given the subfield and prior reporting from Strongminds both heavily underline it. This pattern, alas, will prove repetitive.

I also think a finding like this should prompt an urgent withdrawal of both the analysis and recommendation pending further assessment. In honesty, if this doesn't, I'm not sure what ever could.

2:

Indeed excellent researchers overlook things, and although I think both the frequency and severity of things HLI mistakes or overlooks is less-than-excellent, one could easily attribute this to things like 'inexperience', 'trying to do a lot in a hurry', 'limited staff capacity', and so on.

Yet this cannot account for how starkly asymmetric the impact of these mistakes and oversights are. HLI's mistakes are consistently to Strongmind's benefit rather than its detriment, and HLI rarely misses a consideration which could enhance the 'multiple', it frequently misses causes of concern which both undermine both strength and reliability of this recommendation. HLI's award from Givewell deepens my concerns here, as it is consistent with a very selective scepticism: HLI can carefully scruitinize charity evaluations by others it wants to beat, but fails to mete out remotely comparable measure to its own which it intends for triumph.

I think this can also explain how HLI responds to criticism, which I have found by turns concerning and frustrating. HLI makes some splashy claim (cf. 'mission accomplished', 'confident recommendation', etc.). Someone else (eventually) takes a closer look, and finds the surprising splashy claim, rather than basically checking out 'most reasonable ways you slice it', it is highly non-robust, and only follows given HLI slicing it heavily in favour of their bottom line in terms of judgement or analysis - the latter of which often has errors which further favour said bottom line. HLI reliably responds, but the tenor of this response is less 'scientific discourse' and more 'lawyer for defence': where it can, HLI will too often further double down on calls it makes where I aver the typical reasonable spectator would deem at best dubious, and at worst tendentious; where it can't, HLI acknowledges the shortcoming but asserts (again, usually very dubiously) that it isn't that a big deal, so it will deprioritise addressing it versus producing yet more work with the shortcomings familiar to those which came before.

3: