EffectiveAdvocate's Quick takes

By EffectiveAdvocate🔸 @ 2024-01-19T19:40 (+1)

EffectiveAdvocate🔸 @ 2025-04-27T10:14 (+40)

Should I Be Public About Effective Altruism?

TL;DR: I've kept my EA ties low-profile due to career and reputational concerns, especially in policy. But I'm now choosing to be more openly supportive of effective giving, despite some risks.

For most of my career, I’ve worked in policy roles, first as a civil servant and now in an EA-aligned organization. Early on, the EA and policy worlds seemed wary of each other. EA had a mixed reputation in government, and I chose to stay quiet about my involvement, sharing only in trusted settings.

This caution gave me flexibility. My public profile isn’t linked to EA, and I avoided permanent records of affiliation. At times, I’ve even distanced myself deliberately. But I’m now wondering if this is limiting both my own impact and the spread of ideas I care about.

Ideas spread through visibility. I believe in EA and effective giving and want effective giving to become a social norm, but norms need visible examples. If no one speaks up, can we expect others to follow?

I’ve been cautious about reputational risks—especially the potential downsides of being tied to EA in future influential roles, like running for office. EA still carries baggage: concerns about longtermism, elitism, the FTX/SBF scandal, and public misunderstandings of our priorities. But these risks seem more manageable now. Most people I meet either don’t know EA, or have a neutral-to-positive view when I explain it. Also, my current role is somewhat publicly associated with EA, and that won’t change. Hiding my views on effective giving feels less justifiable.

So, I’m shifting toward more openness: I’ll share more, be more honest about the sources of my thinking and my intellectual ecosystem, and more actively push ideas around effective giving when relevant. I’ll still be thoughtful about context, but near-total caution no longer serves me, or the causes I care about.

This seems likely to be a shared challenge. I’m curious to hear how others are navigating it and whether your thinking has changed lately.

James Herbert @ 2025-04-28T18:06 (+8)

Speaking as someone who does community building professionally: I think this is great to hear! You’re probably already aware of this post, but just in case, I wanted to reference Alix’s nice write-up on the subject.

I also think many professional community-building organisations aim to get much better at communications over the next few years. Hopefully, as this work progresses, the general public will have a much clearer view of what the EA community actually is - and that should make it easier for you too.

Knight Lee @ 2025-04-28T11:56 (+2)

Could you describe yourself as "moderately EA," or something like that, to distinguish yourself from the most extreme views?

The fact we have strong disagreements on this forum feels like evidence that EA is more like a dimension on the political spectrum, rather than a united category of people.

EffectiveAdvocate🔸 @ 2025-04-30T07:56 (+4)

Interesting idea! This got me thinking, and I find it tricky because I want to stay close to the truth, and the truth is, I’m not really a “moderate EA”. I care about shrimp welfare, think existential risk is hugely underrated, and believe putting numbers on things is one of our most powerful tools.

It’s less catchy, but I’ve been leaning toward something like: “I'm in the EA movement. To me, that means I try to ask what would do the most good, and I appreciate the community of people doing the same. That doesn’t mean I endorse everything done under the EA banner, or how it's sometimes portrayed.”

Sarah Tegeler 🔹 @ 2025-05-02T09:50 (+3)

I really like this framing. It's what I use all the time as a full-time community builder, and it works well for me.

Knight Lee @ 2025-05-03T00:30 (+2)

Maybe say, "I strongly believe in the principles[1] of EA."

[1] The EA principles I follow do not include "the ends always justify the means."

Instead, they include:

- Comparing charities and prosocial careers quantitatively, not by warm fuzzy feelings

- Animal rights, judged by the subjective experience of animals rather than by how cute they look

- Existential risk, because someday in the future we'll realize how irrational it was to neglect it

EffectiveAdvocate @ 2024-04-27T15:47 (+22)

I can't find a better place to ask this, but I was wondering whether/where there is a good explanation of the scepticism of leading rationalists about animal consciousness/moral patienthood. I am thinking in particular of Zvi and Yudkowsky. In the recent podcast with Zvi Mowshowitz on 80K, the question came up a bit, and I know he is also very sceptical of interventions for non-human animals on his blog, but I had a hard time finding a clear explanation of where this belief comes from.

I really like Zvi's work, and he has been right about a lot of things I was initially on the other side of, so I would be curious to read more of his or similar people's thoughts on this.

This seems like a potential motivation gap: people who don't work on animal welfare have little incentive to tell me that they think the things I work on are not that useful.

MichaelStJules @ 2024-04-28T01:08 (+11)

Yudkowsky's views are discussed here:

EffectiveAdvocate @ 2024-05-01T12:06 (+1)

This was very helpful, thank you!

NickLaing @ 2024-04-27T19:13 (+2)

Perhaps the large uncertainty around it makes it less likely that people will argue against it publicly as well. I would imagine many people might think with very low confidence that some interventions for non-human animals might not be the most cost-effective, but stay relatively quiet due to that uncertainty.

EffectiveAdvocate @ 2024-03-28T07:35 (+8)

Reflecting on the upcoming EAGx event in Utrecht, I find myself both excited and cautiously optimistic about its potential to further grow the Dutch EA community. The last EAGx in the Netherlands marked a pivotal moment in my own EA journey (significantly grounding it locally) and boosted the community's growth. I think this event also contributed to the growth of the 10% club and the founding of the School for Moral Ambition this year, highlighting the Netherlands as fertile ground for EA principles.

However, I'm less inclined to view the upcoming event as an opportunity to introduce proto-EAs. Recalling how expensive the previous Rotterdam edition seemed, I'm concerned that the cost may deter potential newcomers, especially given the feedback I've heard about its perceived extravagance. I think we all understand why these events are worth our charitable euros, but I have a hard time explaining that to newcomers who are attracted to EA for its (perceived) efficiency and effectiveness.

While the funding landscape may have changed (and this problem may have solved itself through that), I think it remains crucial to consider the aesthetics of events like these where the goal is in part to welcome new members into our community.

James Herbert @ 2024-03-28T19:54 (+3)

Thanks for sharing your thoughts!

It's a pity you don't feel comfortable inviting people to the conference - that's the last thing we want to hear!

So far our visual style for EAGxUtrecht hasn't been austere[1], so we'll think more about this. Normally, to avoid looking too fancy, I ask myself: would this be something the NHS would spend money on?

But I'm not sure how to balance the appearance of prudence with making things look attractive. Things that make me lean towards making things look attractive include:

- This essay on the value of aesthetics to movements

- This SSC review, specifically the third reason Pease mentions for the Fabians' success

- The early success of SMA and their choice to spend a lot on marketing and design

- Things I've heard from friends who could really help EA, saying things like, "ugh, all this EA stuff looks the same/like it was made by a bunch of guys"

For what it's worth, the total budget this year is about half of what was spent in 2022, and we have the capacity for almost the same number of attendees (700 instead of 750).
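A quick sketch of what those two numbers imply for cost per attendee. It assumes "about half" means exactly 0.5 and normalises the 2022 budget to 1, since the absolute budgets aren't given here.

```python
# Absolute budgets aren't stated, so normalise the 2022 budget to 1.0.
budget_2022 = 1.0
budget_this_year = 0.5 * budget_2022  # assumption: "about half" taken as exactly 0.5

capacity_2022 = 750
capacity_this_year = 700

per_attendee_2022 = budget_2022 / capacity_2022
per_attendee_this_year = budget_this_year / capacity_this_year

# Ratio of this year's per-attendee budget to the 2022 per-attendee budget.
ratio = per_attendee_this_year / per_attendee_2022
print(f"Per-attendee budget is about {ratio:.0%} of the 2022 level")
```

Under those assumptions, the budget per attendee falls by a bit less than half, since capacity drops only slightly while the total budget halves.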

In case it's useful, here are some links that show the benefits of EAGx events. I admit they don't provide a slam-dunk case for cost-effectiveness, but they might be useful when talking to people about why we organise them:

- EAGx events seem to be a particularly cost-effective way of building the EA community, and we think the EA community has enormous potential to help build a better world.

- Open Philanthropy’s 2020 survey of people involved in longtermist priority work (a significant fraction of work in the EA community) found that about half of the impact that CEA had on respondents was via EAG and EAGx conferences.

- Anecdotally, we regularly encounter community members who cite EAGx events as playing a key part in their EA journey. You can read some examples in CEA’s analysis.

Thanks again for sharing your thoughts! I hope your pseudonymous account is helping you use the forum, although I definitely don't think you need to worry about looking dumb :)

[1] We're going for pink and fun instead. We're only going to spend a few hundred euros on graphic design.

EffectiveAdvocate @ 2024-03-29T12:09 (+3)

Hi James, I feel quite guilty for prompting you to write such a long, detailed, and persuasive response! Striving to find a balance between prudence and appeal seems to be the ideal goal. Using the NHS's spending habits as a heuristic to avoid extravagance seems smart (although I would not say that this should apply to other events!). Most importantly, I am relieved to learn that this year's budget per person will likely be significantly lower.

I totally agree that these events are invaluable. EAGs and EAGxs have been crucial in expanding my network and enhancing my impact and agency. However, as mentioned, I am concerned about perceptions. Having heard this, I feel reassured, and I will see who I can invite! Thank you!

James Herbert @ 2024-03-29T13:00 (+3)

That's nice to read! But please don't feel guilty, I found it to be a very useful prompt to write up my thoughts on the matter.

EffectiveAdvocate🔸 @ 2024-11-13T07:14 (+6)

Does anyone have thoughts on whether it’s still worthwhile to attend EAGxVirtual in this case?

I have been considering applying for EAGxVirtual, and I wanted to quickly share two reasons why I haven't:

- I would only be able to attend on Sunday afternoon CET, and it seems like it might be a waste to apply if I'm only available for that time slot, as this is something I would never do for an in-person conference.

- I can't find the schedule anywhere. You probably only have access to it if you are on Swapcard, but this makes it difficult to decide ahead of time whether it is worth attending, especially if I can only attend a small portion of the conference.

MichaelStJules @ 2024-11-13T22:54 (+6)

EAGxVirtual is cheap to attend. I don't really see much downside to only attending one day. And you can still make connections and meet people after the conference is over.

ElliotJDavies @ 2024-11-14T14:16 (+4)

I'd be curious to know the marginal cost of an additional attendee. I'd put it between 5 and 30 USD, assuming they attend all sessions.

Assuming you update your availability on Swapcard and that you would get value out of attending a conference, I suspect attending is positive EV.

EffectiveAdvocate @ 2024-01-19T19:41 (+4)

Recent announcements from Meta had me thinking more about "open source" AI systems, and I am wondering whether it would be worthwhile to reframe open-source models and start referring to them as "models with publicly available model weights" or "free-weight models".

This is not only more accurate but also a better political frame for those (like me) who think that releasing model weights publicly is probably not going to lead to safer AI development.

Chris Leong @ 2024-01-19T20:23 (+2)

We can also talk about irreversible proliferation.

EffectiveAdvocate🔸 @ 2024-10-17T13:49 (+3)

Simple Forecasting Metrics?

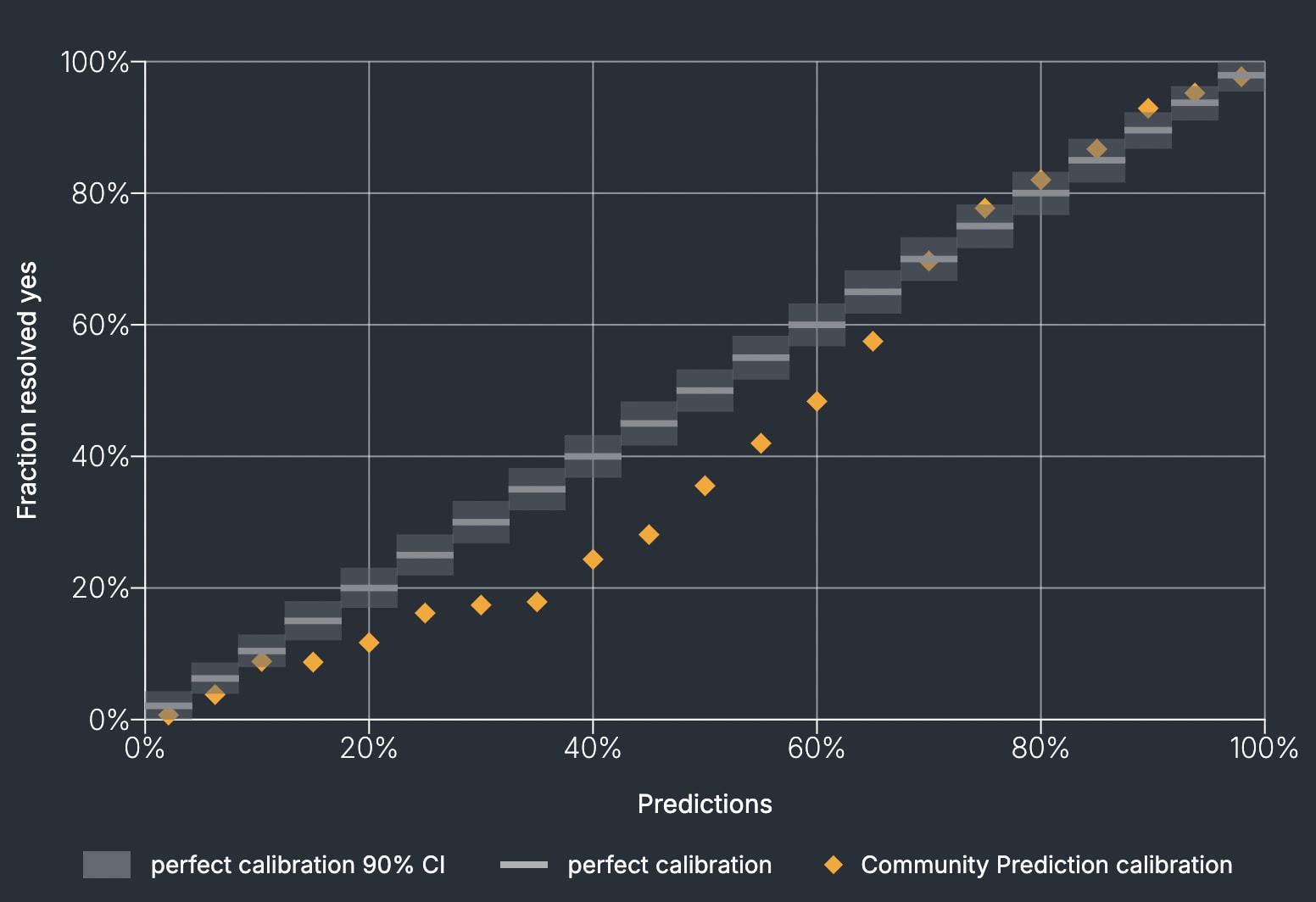

I've been thinking about how simple some forecasting concepts are to explain, and how complex others are. Take calibration, for instance: it's simple to explain. If someone says something is 80% likely, it should happen about 80% of the time. But other metrics, like the Brier score, are harder to convey: What exactly does it measure? How well does it reflect a forecaster's accuracy? How do you interpret it? All of this requires a lot of explanation for anyone not interested in the science of forecasting.
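To make the contrast concrete, here is a minimal Python sketch of both metrics. The forecasts and outcomes are made-up toy values, not data from any platform. Calibration is computed by grouping forecasts into ten equal-width probability bins and comparing each bin's average forecast with how often those events actually happened; the Brier score is the mean squared difference between the forecast probability and the 0/1 outcome (0 is perfect, and always saying 50% scores 0.25).

```python
from collections import defaultdict


def brier_score(probs, outcomes):
    """Mean squared difference between forecast probabilities and 0/1 outcomes."""
    return sum((p - o) ** 2 for p, o in zip(probs, outcomes)) / len(probs)


def calibration_table(probs, outcomes, n_bins=10):
    """Group forecasts into equal-width bins; return (mean forecast, observed rate, count)."""
    bins = defaultdict(list)
    for p, o in zip(probs, outcomes):
        # Clamp p == 1.0 into the top bin.
        idx = min(int(p * n_bins), n_bins - 1)
        bins[idx].append((p, o))
    table = []
    for idx in sorted(bins):
        pairs = bins[idx]
        mean_forecast = sum(p for p, _ in pairs) / len(pairs)
        observed_rate = sum(o for _, o in pairs) / len(pairs)
        table.append((mean_forecast, observed_rate, len(pairs)))
    return table


# Hypothetical forecasts (probability the event happens) and outcomes (1 = it happened).
probs = [0.8, 0.8, 0.8, 0.8, 0.8, 0.2, 0.2, 0.2, 0.2, 0.2]
outcomes = [1, 1, 1, 1, 0, 0, 0, 0, 0, 1]

for mean_forecast, observed_rate, n in calibration_table(probs, outcomes):
    print(f"forecast ~{mean_forecast:.0%}: happened {observed_rate:.0%} of the time (n={n})")
print(f"Brier score: {brier_score(probs, outcomes):.3f}")
```

In this toy set the forecaster is perfectly calibrated (the 80% forecasts come true 80% of the time, the 20% forecasts 20% of the time), yet the Brier score is 0.16 rather than 0. That is because the Brier score also rewards confident forecasts that turn out right, not just calibration, which is part of why it takes more explaining.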

What if we had an easily interpretable metric that could tell you, at a glance, whether a forecaster is accurate? A metric so simple that it could fit within a tweet or catch the attention of someone skimming a report—someone who might be interested in platforms like Metaculus. Imagine if we could say, "When Metaculus predicts something with 80% certainty, it happens between X and Y% of the time," or "On average, Metaculus forecasts are off by X%". This kind of clarity could make comparing forecasting sources and platforms far easier.
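One possible way to fill in those blanks is sketched below; the choice of statistics is my own assumption, not anything Metaculus publishes in this form. For the first sentence, it takes all forecasts made at roughly 80% and reports a 95% Wilson score interval for how often they came true. For the second, it reports the expected calibration error: the gap between forecast probability and observed frequency in each bin, averaged with weights proportional to bin size. All counts and forecasts in the example are hypothetical.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 gives ~95%)."""
    if n == 0:
        raise ValueError("need at least one resolved forecast")
    p_hat = successes / n
    denom = 1 + z**2 / n
    centre = (p_hat + z**2 / (2 * n)) / denom
    half_width = (z / denom) * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2))
    return centre - half_width, centre + half_width


def expected_calibration_error(probs, outcomes, n_bins=10):
    """Average |mean forecast - observed rate| across bins, weighted by bin size."""
    bins = [[] for _ in range(n_bins)]
    for p, o in zip(probs, outcomes):
        bins[min(int(p * n_bins), n_bins - 1)].append((p, o))
    total = len(probs)
    ece = 0.0
    for pairs in bins:
        if pairs:
            mean_forecast = sum(p for p, _ in pairs) / len(pairs)
            observed_rate = sum(o for _, o in pairs) / len(pairs)
            ece += len(pairs) / total * abs(mean_forecast - observed_rate)
    return ece


# Hypothetical: 200 resolved questions forecast at ~80%, of which 160 resolved "yes".
low, high = wilson_interval(160, 200)
print(f"When this source says 80%, it happens between {low:.0%} and {high:.0%} of the time")

# Hypothetical toy set for the "off by X points" headline.
probs = [0.85, 0.85, 0.85, 0.85, 0.15, 0.15, 0.15, 0.15]
outcomes = [1, 1, 1, 0, 0, 0, 0, 1]
ece = expected_calibration_error(probs, outcomes)
print(f"On average, forecasts are off by {100 * ece:.0f} percentage points")
```

The interval narrows as the number of resolved questions grows, so a platform with a long track record could quote a tight range. Expected calibration error only measures calibration, so like the first sentence it says nothing about how confident or informative the forecasts were.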

I'm curious whether anyone has explored creating such a concise metric—one that simplifies these ideas for newcomers while still being informative. It could be a valuable way to persuade others to trust and use forecasting platforms or prediction markets as reliable sources. I'm interested in hearing any thoughts or seeing any work that has been done in this direction.

Will Aldred @ 2024-10-17T17:54 (+3)

> Imagine if we could say, "When Metaculus predicts something with 80% certainty, it happens between X and Y% of the time," or "On average, Metaculus forecasts are off by X%".

FYI, the Metaculus track record (specifically the “Community Prediction calibration” part) lets us do this already. When Metaculus predicts something with 80% certainty, for example, it happens around 82% of the time.

EffectiveAdvocate🔸 @ 2024-10-18T05:34 (+1)

Thank you for the response! I should have been a bit clearer: This is what inspired me to write this, but I still need 3-5 sentences to explain to a policymaker what they are looking at when you show them this kind of calibration graph. I am looking for something even shorter than that.

EffectiveAdvocate @ 2024-05-18T20:04 (+3)

In the past few weeks, I spoke with several people interested in EA and wondered: What do others recommend in this situation in terms of media to consume first (books, blog posts, podcasts)?

Isn't it time we had a comprehensive guide on which introductory EA books or media to recommend to different people, backed by data?

Such a resource could consider factors like background, interests, and learning preferences, ensuring the most impactful material is suggested for each individual. Wouldn’t this tailored approach make promoting EA among friends and acquaintances more effective and engaging?

EffectiveAdvocate @ 2024-06-08T06:14 (+1)

Are you here to win, or to win the race?

I've been reflecting on the various perspectives in AI governance discussions, particularly among those concerned about AI safety.

One noticeable dividing line runs between two groups. The first is primarily concerned about the risks posed by advanced AI systems. This group advocates for regulating AI as it exists today and increasing oversight of AI labs. Their reasoning is that slowing down AI development would provide more time to address technical challenges and allow society to adapt to AI's future capabilities. They are generally cautiously optimistic about international cooperation. I think FLI falls into this camp.

On the other hand, there is a group increasingly focused not only on developing safe AI but also on winning the race, often against China. This group believes that the US currently has an advantage and that maintaining this lead will provide more time to ensure AI safety. They likely think the US embodies better values compared to China, or at least prefer US leadership over Chinese leadership. Many EA organizations, possibly including OP, IAPS, and those collaborating with the US government, may belong to this group.

I've found myself increasingly wary of the second group, tending to discount their views, trust them less, and question the wisdom of cooperating with them. My concern is that their primary focus on winning the AI race might overshadow the broader goal of ensuring AI safety. I am not really sure what to do about this, but I wanted to share my concern. I hope to think more about what can be done to prevent a rift from emerging, especially since I expect the policy stakes to grow in the coming years.