Yanni Kyriacos's Quick takes

By yanni kyriacos @ 2023-06-13T00:23 (+2)

Yanni Kyriacos @ 2024-02-25T23:42 (+88)

I met Australia's Assistant Minister for Defence last Friday. I asked him to write an email to the Minister in charge of AI, asking him to establish an AI Safety Institute. He said he would. He also seemed on board with not having fully autonomous AI weaponry.

All because I sent one email asking for a meeting + had said meeting.

Advocacy might be the lowest hanging fruit in AI Safety.

Mo Putera @ 2024-02-28T18:58 (+4)

Akash's Speaking to Congressional staffers about AI risk seems similar:

In May and June of 2023, I (Akash) had about 50-70 meetings about AI risks with congressional staffers. ...

In March of 2023, I started working on some AI governance projects at the Center for AI Safety. One of my projects involved helping CAIS respond to a Request for Comments about AI Accountability that was released by the NTIA.

As part of that work, I started thinking a lot about what a good regulatory framework for frontier AI would look like. For instance: if I could set up a licensing regime for frontier AI systems, what would it look like? Where in the US government would it be housed? What information would I want it to assess?

I began to wonder how actual policymakers would react to these ideas. I was also curious to know more about how policymakers were thinking about AI extinction risks and catastrophic risks.

I started asking other folks in AI Governance. The vast majority had not talked to congressional staffers (at all). A few had experience talking to staffers but had not talked to them about AI risk. A lot of people told me that they thought engagement with policymakers was really important but very neglected. And of course, there are downside risks, so you don't want someone doing it poorly.

After consulting something like 10-20 AI governance folks, I asked CAIS if I could go to DC and start talking to congressional offices. The goals were to (a) raise awareness about AI risks, (b) get a better sense of how congressional offices were thinking about AI risks, (c) get a better sense of what kinds of AI-related priorities people at congressional offices had, and (d) get feedback on my NTIA request for comment ideas.

CAIS approved, and I went to DC in May-June 2023. And just to be clear, this wasn't something CAIS told me to do– this was more of an "Akash thing" that CAIS was aware was happening.

Like you, Akash just cold-emailed people:

I sent a mass email to tech policy staffers, and I was pretty impressed by the number who responded. The email was fairly short, mentioned that I was at CAIS, had 1-2 bullets about what CAIS does, and had a bullet point about the fact that I was working on an NTIA request for comment.

I think it was/is genuinely the case that Congressional staffers are extremely interested in AI content right now. Like, I don't think I would've been able to have this many meetings if I was emailing people about other issues.

There are a lot of concrete learnings in that writeup; definitely worth reading I think.

yanni kyriacos @ 2024-08-02T01:29 (+51)

I don't know how to say this in a way that won't come off harsh, but I've had meetings with > 100 people in the last 6 months in AI Safety and I've been surprised how poor the industry standard is for people:

- Planning meetings (writing emails that communicate what the meeting will be about, its purpose, my role)

- Turning up to scheduled meetings (like literally, showing up at all)

- Turning up on time

- Turning up prepared (i.e. with informed opinions on what we're going to discuss)

- Turning up with clear agendas (if they've organised the meeting)

- Running the meetings

- Following up after the meeting (clear communication about next steps)

I do not have an explanation for what is going on here but it concerns me about the movement interfacing with government and industry. My background is in industry and these just seem to be things people take for granted?

It is possible I give off a chill vibe, leading to people thinking these things aren't necessary, and they'd switch gears for more important meetings!

NickLaing @ 2024-08-02T06:15 (+10)

My 2 cents is that I think this is a really important observation (I think it's helpful) and I would be interested to see what other people's feedback is on this front.

JuliaHP @ 2024-08-02T12:52 (+5)

hypothesis that springs to mind, might or might not be useful for engaging with it productively. might be wrong depending on what class of people you have been having meetings with.

when you select for people working on AI risks, you select for people who are generally less respectful of the status quo and social norms. you select for the kind of person who is less likely to do something just because it's a social norm. in order for this reference class of person to do a thing, they kind of have to reach, through their personal reasoning methods, the conclusion "It would be worth the effort for me to change myself to become the kind of person who shows up on time consistently, compared to other things I could be spending my effort on". they might just figure it's a better use of their effort to think about their research all day.

I would guess that most people on this earth don't show up on time because they reasoned it through that this is a good idea, they do it because it has been drilled into them through social norms, and they valued those social norms higher.

(note: this comment is not intended to be an argument that showing up on time is a waste of time)

yanni kyriacos @ 2024-08-02T01:42 (+4)

It just occurred to me that maybe posting this isn't helpful. And maybe net negative. If I get enough feedback saying it is then I'll happily delete.

yanni kyriacos @ 2024-06-12T00:38 (+47)

When AI Safety people are also vegetarians, vegans or reducetarians, I am pleasantly surprised, as this is one (of many possible) signals to me they're "in it" to prevent harm, rather than because it is interesting.

Habryka @ 2024-06-14T05:44 (+10)

I am skeptical that exhibiting one of the least cost-effective behaviors for the purpose of reducing suffering would be correlated much with being "in it to prevent harm". Donating to animal charities, or being open to trade with other people about reducing animal suffering, or other things that are actually effective seem like much better indicators that people are actually in it to prevent harm.

My best guess is that most people who are vegetarians, vegans or reducetarians, and are actually interested in scope-sensitivity, are explicitly doing so for signaling and social coordination reasons (which to be clear, I think has a bunch going for it, but seems like the kind of contingent fact that makes it not that useful as a signal of "being in it to prevent harm").

MichaelStJules @ 2024-06-14T07:04 (+35)

Have you actually just talked to people about their motives for reducing their animal product consumption, or read about them? Do you expect them to tell you it's mostly for signaling or social coordination, or (knowingly or unknowingly) lie that it isn't?

I'd guess only a minority of EAs go veg mostly for signaling or social coordination.

Other reasons I find more plausibly common, even among those who make bigger decisions in scope-sensitive ways:

1. Finding it cost-effective in an absolute sense, e.g. >20 chickens spared per year, at small personal cost (usually ignoring opportunity costs to help others more). (And then maybe falsely thinking you should go veg to do this, rather than just cut out certain animal products, or finding it easier to just go veg than navigate exceptions.)

2. Wanting to minimize the harm they personally cause to others, or to avoid participating in overall harmful practices, or deontological or virtue ethics reasons, separately from actively helping others or utilitarian-ish reasons.

3. Not finding not eating animal products very costly, or not being very sensitive to the costs. It may be easy or take from motivational "budgets" psychologically separate from work or donations.

4. Finding eating animal products psychologically or time costly, e.g. just feeling guilty and worrying about it, cognitive dissonance.

5. Seeing so many other EAs veg, and this making it seem very impactful to be veg for animals (whether or not it actually is).

6. As a way to live out their commitment daily that other animals matter and increase/maintain their concern for them (and/or other vulnerable moral patients easy to ignore).

7. To get other people around them interested in helping animals.

I'd guess most EAs who go veg or reducetarian do so for reasons like 1, 2, 3, 4 and/or 5. 1, 2, 3 and 4 are why I went vegan, around when I first got into EA. I also used to make arguments like 1, just comparing the harms to animals and your personal costs (after the drowning child thought experiment) when I was an EA organizer a few years ago.

I don’t think the opportunity costs of veg diets are on people's minds much, so it seems likely that people are ignoring them, either for bad reasons or because they don’t seem important enough to them.

I'm still (bival)vegan, but mostly for reasons like 3, 6, just preferring not to eat animal products anymore, and maybe to meet (my perception of) others' expectations of me.

I'm not even convinced veganism is better for animals anymore through its supply and demand effects, when you include the effects on wild animals (mostly wild arthropods and fish).

Habryka @ 2024-06-14T18:41 (+3)

I have had >30 conversations with EA vegetarians and vegans about their reasoning here. The people who thought about it the most seem to usually settle on it for signaling reasons. Maybe this changed over the last few years in EA, but it seemed to be where most people I talked to were at when I had lots of conversations with them in 2018.

I agree that many people say (1), but when you dig into it it seems clear that people incur costs that would be better spent on donations, and so I don't think it's good reasoning. As far as I can tell most people who think about it carefully seem to stop thinking it's a good reason to be vegan/vegetarian.

I do think the self-signaling and signaling effects are potentially substantial.

I also think (4) is probably the most common reason, and I do think probably captures something important, but it seems like a bad inference that "someone is in it to prevent harm" if (4) is their reason for being vegetarian or vegan.

Fermi–Dirac Distribution @ 2024-06-15T07:10 (+8)

when you dig into it it seems clear that people incur costs that would be better spent on donations, and so I don't think it's good reasoning.

I’ve thought a lot about this, because I’m serious about budgeting and try to spend as little money as possible to make more room for investments and donations. I also have a stressful job and don’t like to spend time cooking. I did not find it hard to switch to being vegan while keeping my food budget the same and maintaining a high-protein diet.

Pea protein is comparable in cost per gram protein to the cheapest animal products where I live, and it requires no cooking. Canned beans are a bit more expensive but still very cheap and also require no cooking. Grains, ramen and cereal are very cheap sources of calories. Plant milks are more expensive than cow's milk, but still fit in my very low food budget. Necessary supplements like B12 are very cheap.

On a day-to-day basis, the only real cooking I do is things like pasta, which don’t take much time at all. I often go several weeks without doing any real cooking. I’d bet that I both spend substantially less money on food and substantially less time cooking than the vast majority of omnivores, while eating more protein.

As a vegan it's also easy to avoid spending money in frivolous ways, like on expensive ready-to-eat snacks and e.g. DoorDash orders.

I haven't had any health effects either, or any differences in how I feel day-to-day, after more than 1 year. Being vegan may have helped me maintain a lower weight after I dropped several pounds last year, but it's hard to know the counterfactual.

I didn't know coming in that being vegan would be easy; I decided to try it out for 1 month and then stuck with it when I "learned" how to do it. There's definitely a learning curve, but I'd say that for some people who get the hang of it, reason (1) in Michael's comment genuinely applies.

NunoSempere @ 2024-06-19T12:22 (+6)

I don't think one year is enough time to observe effects. Anecdotally, I think (but am not sure) that I started to have problems after three years of being a vegetarian.

Fermi–Dirac Distribution @ 2024-06-19T18:47 (+4)

What were your problems?

MichaelStJules @ 2024-06-14T21:07 (+6)

I agree that many people say (1), but when you dig into it it seems clear that people incur costs that would be better spent on donations, and so I don't think it's good reasoning.

Do you mean financial costs, or all net costs together, including potentially through time, motivation, energy, cognition? I think it's reasonably likely that for many people, there are ~no real net (opportunity) costs, or that it's actually net good (but if net good in those ways, then that would probably be a better reason than 1). Putting my thoughts in a footnote, because they're long and might miss what you have in mind.[1]

I also think (4) is probably the most common reason, and I do think probably captures something important, but it seems like a bad inference that "someone is in it to prevent harm" if (4) is their reason for being vegetarian or vegan.

Ya, that seems fair. If they had the option to just stop thinking and feeling bad about it and chose that over going veg, which is what my framing suggests they would do, then it seems the motivation is to feel better and get more time, not avoid harming animals through their diets. This would be like seeing someone in trouble, like a homeless person, and avoiding them to avoid thinking and feeling bad about them. This can be either selfish or instrumentally other-regarding, given opportunity costs.

If they thought (or felt!) the right response to the feelings is to just go veg and not to just stop thinking and feeling bad about it, then I would say they are in it to prevent harm, just guided by their feelings. And their feelings might not be very scope-sensitive, even if they make donation and career decisions in scope-sensitive ways. I think this is kind of what virtue ethics is about. Also potentially related: "Do the math, then burn the math and go with your gut".

- ^

Financial/donations: It's not clear to me that my diet is more expensive than if I were omnivorous. Some things I've substituted for animal products are cheaper and others are more expensive. I haven't carefully worked through this, though. It's also not clear that if it were more expensive, that I would donate more, because of how I decide how much to donate, which is based on my income and a vague sense of my costs of living, which probably won't pick up differences due to diet (but maybe it does in expectation, and maybe it means donating less later, because I'll have less money to donate later). If I budgeted more precisely, that would have time costs (which might not come out of work, anyway, though). And if I weren't vegan, maybe I'd be less motivated to donate as much (although this is more like "self-signaling", or altruism sharpens altruism).

Time: I doubt most veg*ns would work more hours (allowing more impactful work or more donations) if they weren't managing or accommodating their veg*n diet. Time spent on the diet is small and probably doesn't really come out of work hours. But this can depend on their particular circumstances. Maybe someone gets their blood tested more frequently because of their diet, and this specifically comes out of their work hours.

Time costs might also be incurred by others, like event or working space organizers (like you, as I think you've brought up before), and veg*ns may not appreciate this, or might (I'd guess correctly) think one more vegan makes ~no difference. Maybe it would be better if everyone agreed to eat whatever at events, and the animal products were offset by animal charity donations, or that time was just given back to organizers to work on other important things without any specific donation offset.

Effects on energy and cognition will vary by person. I think there are risks here people should make some effort to minimize (e.g. blood testing, supplements). That effort can come out of their time and donations, but that's already accounted for above. There might be some remaining (expected) costs even after this. Or there could be net benefits, in case they end up with an overall healthier diet this way (and might not be motivated to do so except to go veg; it's easier to avoid unhealthy foods you also object to morally).

Habryka @ 2024-06-14T22:51 (+4)

Do you mean financial costs, or all net costs together, including potentially through time, motivation, energy, cognition?

I meant net costs all together, though I agree that if you take into account motivation "net costs" becomes a kind of tricky concept, and many people can find it motivating, and that is important to think about, but also really doesn't fit nicely into a harm-reducing framework.

Financial/donations: It's not clear to me that my diet is more expensive than if I were omnivorous. Some things I've substituted for animal products are cheaper and others are more expensive.

I mean, being an omnivore would allow you to choose between more options. Generally having more options very rarely hurts you.

Overall I like your comment.

MichaelStJules @ 2024-06-15T01:09 (+8)

many people can find it motivating, and that is important to think about, but also really doesn't fit nicely into a harm-reducing framework.

Ya, I guess the value towards harm reduction would be more indirect/instrumental in this case.

I mean, being an omnivore would allow you to choose between more options. Generally having more options very rarely hurts you.

I think this is true of idealized rational agents with fixed preferences, but I'm much less sure about actual people, who are motivated in ways they wouldn't endorse upon reflection and who aren't acting optimally impartially even if they think it would be better on reflection if they did.

By going veg, you eliminate or more easily resist the motivation to eat more expensive animal products that could have net impartial opportunity costs. Maybe skipping (expensive) meats hurts you in the moment (because you want meat), but it saves you money to donate to things you think matter more. You'd be less likely to correctly — by reflection on your impartial preferences — skip the meat and save the money if you weren't veg.

And some people are not even really open to (cheaper) plant-based options like beans and tofu, and that goes away going veg. That was the case for me. My attitude before going veg would have been irrational from an impartial perspective, just considering the $ costs that could be donated instead.

Of course, some people will endorse being inherently partial to themselves upon reflection, so eating animal products might seem fine to them even at greater cost. But the people inclined to cut out animal products by comparing their personal costs to the harms to animals probably wouldn't end up endorsing their selfish motivation to eat animal products over the harms to animals.

The other side is that a veg*n is more motivated to eat the more expensive plant-based substitutes and go to vegan restaurants, which (in my experience) tend to be more expensive.

I'm not inclined to judge how things will shake out based on idealized models of agents. I really don't know either way, and it will depend on the person. Cheap veg diets seem cheaper than cheap omni diets, but if people are eating enough plant-based meats, their food costs would probably increase.

Here are prices in Canadian $/kg of protein, for the versions of foods that seemed cheapest per kg of protein from Walmart Canada and Amazon Canada.

And then extra supplements for veg EAs.

For restaurants, as long as you avoid expensive plant-based substitutes and vegan-specific restaurants, I think veg options are usually cheaper. Of course, a veg EA will be tempted by these sometimes, too!

To be clear, I don't think it's that important to minimize the cost of your diet. Things like rent, vehicle, travel, and how often you eat out (when it doesn't help you do more work) are probably more important if you want to save money.

MichaelStJules @ 2024-06-14T22:08 (+4)

I have had >30 conversations with EA vegetarians and vegans about their reasoning here. The people who thought about it the most seem to usually settle on it for signaling reasons. Maybe this changed over the last few years in EA, but it seemed to be where most people I talked to were at when I had lots of conversations with them in 2018.

I guess this is a bit pedantic, but you originally wrote "My best guess is that most people who are vegetarians, vegans or reducetarians, and are actually interested in scope-sensitivity, are explicitly doing so for signaling and social coordination reasons". I think veg EAs are generally "actually interested in scope-sensitivity", whether or not they're thinking about their diets correctly and in scope-sensitive ~utilitarian terms. "The people who thought about it the most" might not be representative, and more representative motivations might be better described as "in it to prevent harm", even if the motivations turn out to be not utilitarian, not appropriately scope-sensitive or misguided.

Ryan Greenblatt @ 2024-12-14T06:09 (+10)

This is an old thread, but I'd like to confirm that a high fraction of my motivation for being vegan[1] is signaling to others and myself. (So, n=1 for this claim.) (A reasonable fraction of my motivation is more deontological.)

- ^

I eat fish rarely as I was convinced that the case for this improving productivity is sufficiently strong.

defun @ 2024-06-12T10:11 (+1)

Happy to hear this from someone working on AI Safety.

Do you think EA/EA orgs should do something about it?

(Crypto has a similar problem. There are people genuinely interested in it but many people (most?) are just into it for the money)

yanni kyriacos @ 2024-06-13T09:54 (+2)

I actually think it is a huge risk because when the shit hits the fan I want to know people are optimising for the right thing. It is like the old saying: "it is only a value if it costs you something".

Anyway, I'd like to think that orgs suss this out in the interview stage.

yanni kyriacos @ 2024-12-27T02:43 (+46)

I didn't want to read all of @Vasco Grilo🔸's post on the "meat eating" problem and all 80+ comments, so I expanded all the comments and copy/pasted the entire webpage into Claude with the following prompt: "Please give me a summary of the authors argument (dot points, explained simply) and then give me a summary of the kinds of push back he got (dot points, explained simply, thematised, giving me a sense of the concentration/popularity of themes in the push back)"

Below is the result (the Forum team might want to consider how posts with large numbers of comments can be read quickly):

- The author claims that saving lives in developing countries might cause more harm than good in the short term because:

  - When people are saved from death, they consume animal products

  - The suffering of farm animals (especially chickens and farmed fish/shrimp) from being raised and killed outweighs the happiness of the human life saved

  - Using specific calculations, they estimate that one average person causes 15.5 times more animal suffering than human happiness globally (with higher ratios in some countries)

- The author specifically criticizes two organizations:

  - GiveWell (for granting $1.09 billion without considering animal welfare impacts)

  - Ambitious Impact (for helping start 8 organizations that save lives without considering animal impacts)

- The author suggests these organizations should:

  - Be more transparent about why they ignore animal welfare effects

  - Focus on interventions that don't increase mortality (like mental health)

  - Offset harm to animals by funding animal welfare projects

Main themes in the pushback (ordered by rough frequency/engagement):

Moral/Philosophical Objections (Most Common):

- Rejecting the premise that saving human lives could be net negative

- Viewing it as morally repugnant to let children die because they might eat meat

- Arguing that we shouldn't hold people responsible for future choices they haven't made yet

Methodological Critiques:

- Questioning assumptions about consumption patterns of aid recipients

- Noting that population growth doesn't necessarily increase meat consumption proportionally

- Pointing out that many aid recipients are too poor to consume significant amounts of animal products

Practical/Strategic Concerns:

- Arguing that organizations like GiveWell don't need to justify their focus on human welfare

- Suggesting that offsetting through animal welfare donations is more practical than avoiding human welfare work

- Noting that this type of reasoning could lead to harmful outcomes if applied broadly

Communication/Framing Concerns:

- Suggesting the argument is unnecessarily divisive

- Recommending changes to terminology (e.g., "meat eating problem" instead of "meat eater problem")

- Expressing concern about how this reflects on the EA community

The strongest pushback seemed to center around the moral implications of letting people die because of potential future actions, with many commenters finding this fundamentally problematic regardless of the utilitarian calculations involved.

Vasco Grilo🔸 @ 2024-12-27T11:01 (+4)

Thanks, Yanni! Strongly upvoted.

yanni kyriacos @ 2024-12-27T22:29 (+4)

No problem :)

Ozzie Gooen @ 2024-12-29T22:53 (+2)

Thanks for summarizing!

It strikes me that the above criticisms don't really seem consequentialist / hedonic utilitarian-focused. I'm curious if other criticisms are, or if some of these are intended to be as such (some complex logic like, "Acting in the standard-morality way will wind up being good for consequentialist reasons in some round-about way".)

More generally, those specific objections strike me as very weak. I'd expect and hope that people at Open Philanthropy and GiveWell would have better objections.

yanni kyriacos @ 2024-12-30T06:31 (+5)

No worries :)

I personally think this is a conversation worth having, but I can imagine a bunch of reasons people wouldn’t want to. For one thing, it is a PR nightmare!

yanni kyriacos @ 2024-08-26T23:41 (+32)

A psychological trap EAs might want to watch out for: don't mistake indecision for wisdom.

Sometimes the better option is to just put something out into the world in a small format and get information back.

Of course the reverse works: don't mistake decisiveness for bravery. But I don't think this is a problem for EAs :)

yanni kyriacos @ 2024-08-27T22:28 (+31)

People in EA are ridiculously helpful. I've been able to get a Careers Conference off the ground because people in other organisations are happy to help out in significant ways. In particular, Elliot Teperman and Bridget Loughhead from EA Australia, Greg Sadler from the Good Ancestors Project and Chris Leong. Several people have also volunteered to help at the Conference as well as on other tasks, such as running meetups and creating content.

In theory I'm running an organisation by myself (via an LTFF grant), but because it is nested within the EA/AIS space, I've got the support of a big network. Otherwise this whole project would be insane to start.

If someone is serious about doing Movement Building, but worries about doing it alone, this feels like important information.

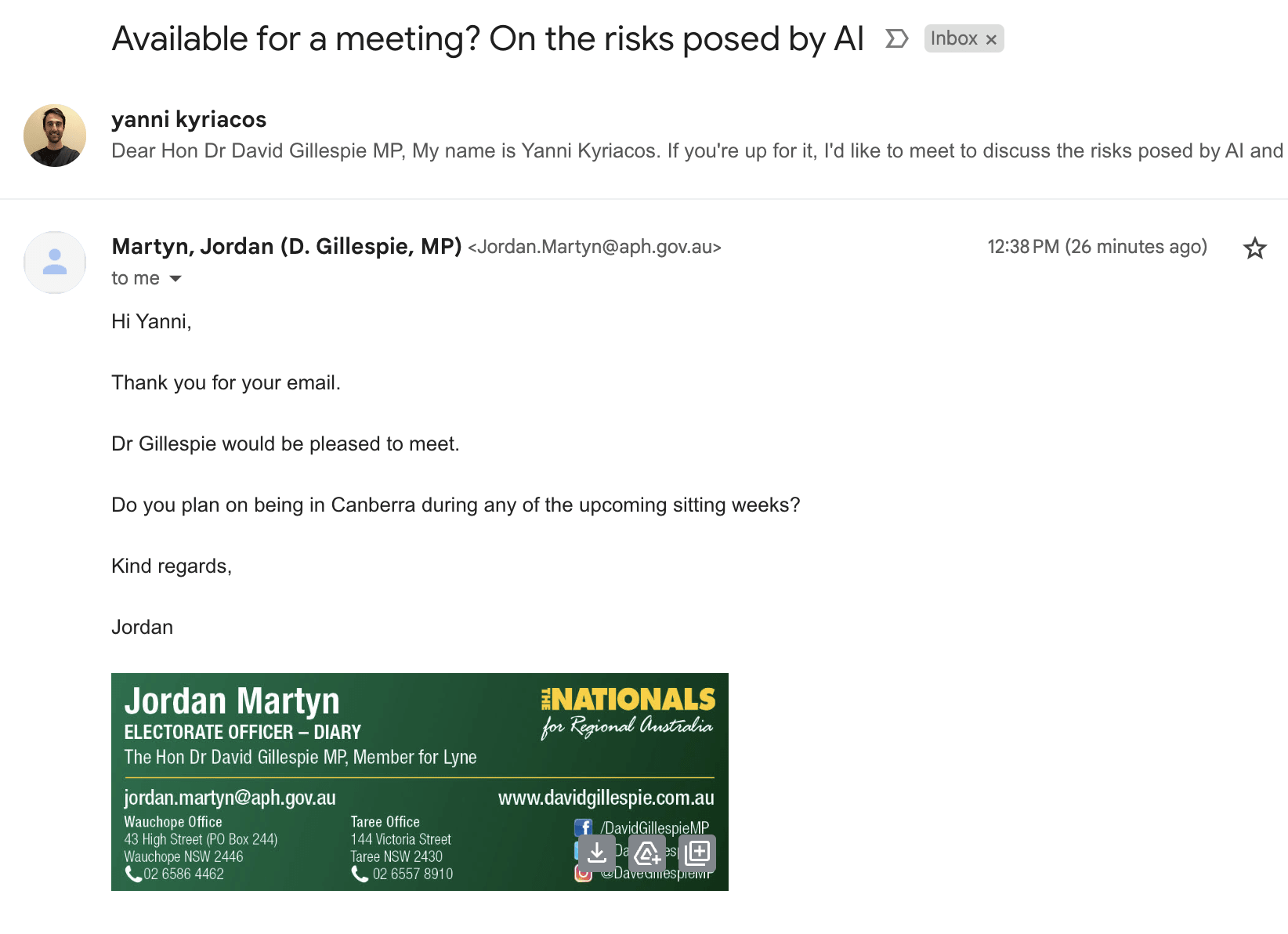

Yanni Kyriacos @ 2024-03-27T02:05 (+31)

A periodic reminder that you can just email politicians and then meet them (see screenshot below).

Neil Warren @ 2024-03-30T18:30 (+2)

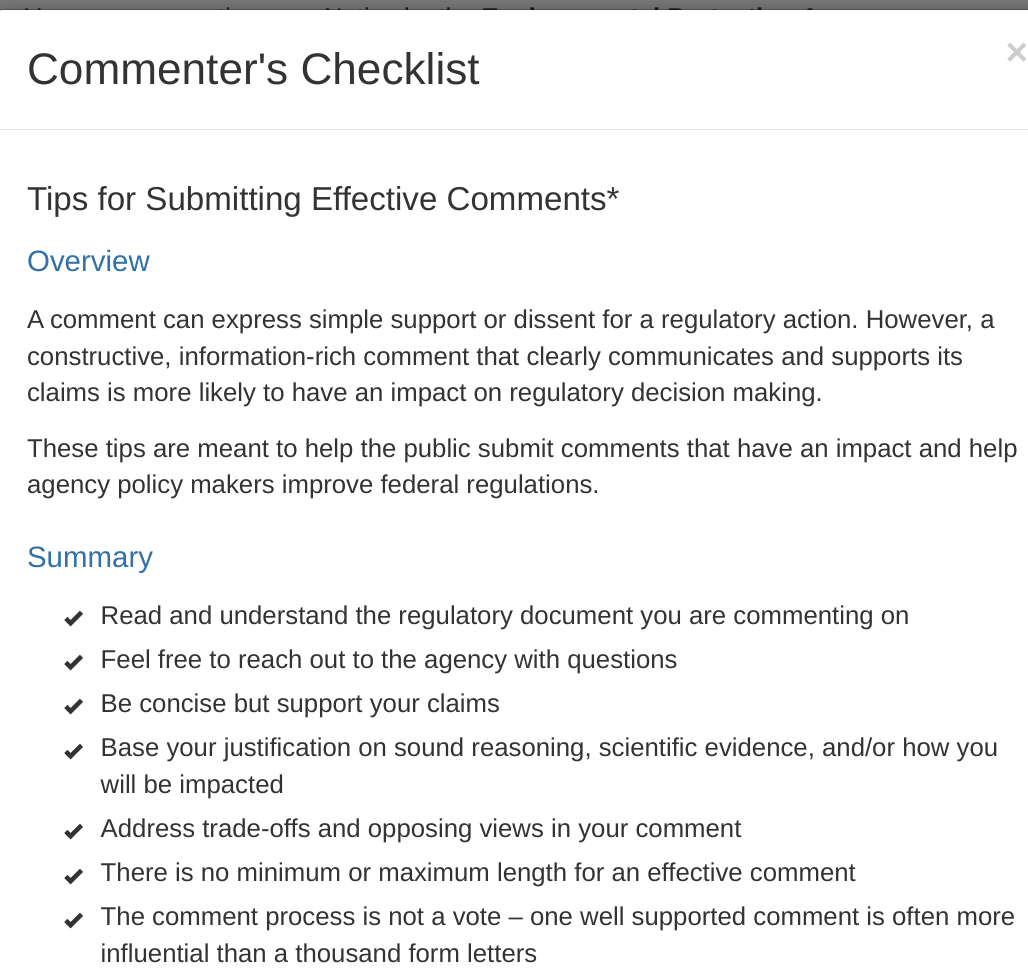

Another thing you can do is send comments on proposed legislation via regulations.gov. I did so last week about a recent Californian bill on open-sourcing model weights (now closed). In the checklist (screenshot below) they say: "the comment process is not a vote – one well supported comment is often more influential than a thousand form letters". There are people much more qualified on AI risk than I over here, so in case you didn't know, you might want to keep an eye on new regulation coming up. It doesn't take much time and seems to have a fairly big impact.

yanni kyriacos @ 2024-07-15T23:20 (+29)

I think it is good to have some ratio of upvoted/agreed : downvotes/disagreed posts in your portfolio. I think if all of your posts are upvoted/high agreeance then you're either playing it too safe or you've eaten the culture without chewing first.

Ben Millwood @ 2024-07-17T09:45 (+11)

I think some kinds of content are uncontroversially good (e.g. posts that are largely informational rather than persuasive), so I think some people don't have a trade-off here.

yanni kyriacos @ 2024-07-17T10:23 (+1)

Good point. In that case the hypothetical user isn't using it as a forum (i.e. for discourse)

yanni kyriacos @ 2024-07-17T01:31 (+4)

as of this comment, 6 agrees and 6 disagrees. perfect :)

yanni kyriacos @ 2024-08-16T05:10 (+28)

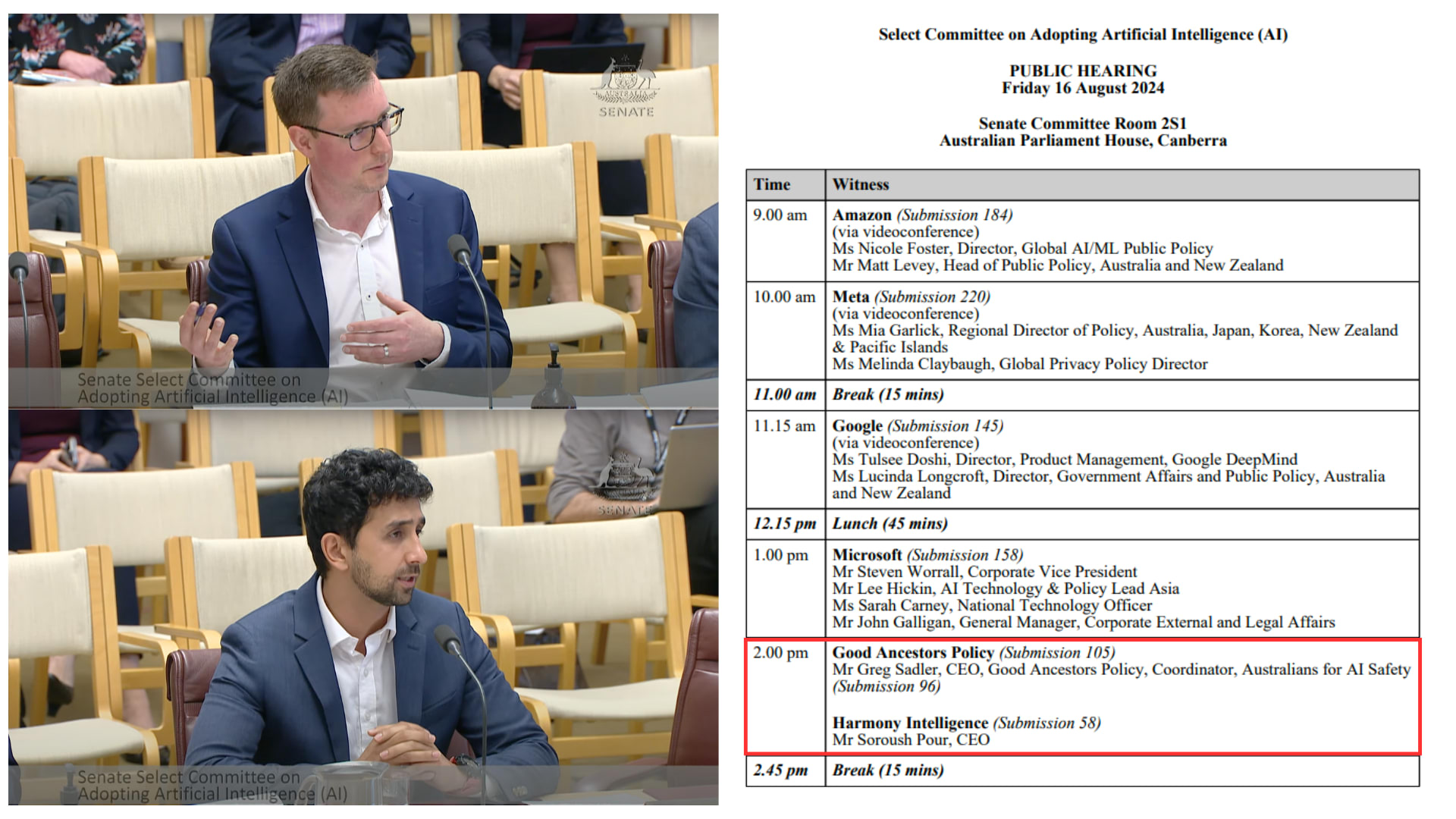

Extremely proud and excited to watch Greg Sadler (CEO of Good Ancestors Policy) and Soroush Pour (Co-Founder of Harmony Intelligence) speak to the Australian Federal Senate Select Committee on Artificial Intelligence. This is a big moment for the local movement. Also, check out who they ran after.

Yanni Kyriacos @ 2024-02-27T22:15 (+27)

My previous take on writing to Politicians got numbers, so I figured I'd post the email I send below.

I am going to make some updates, but this is the latest version:

---

Hi [Politician]

My name is Yanni Kyriacos, I live in Coogee, just down the road from your electorate.

If you're up for it, I'd like to meet to discuss the risks posed by AI. In addition to my day job building startups, I do community / movement building in the AI Safety / AI existential risk space. You can learn more about AI Safety ANZ by joining our Facebook group here or the PauseAI movement here. I am also a signatory of Australians for AI Safety - a group that has called for the Australian government to set up an AI Commission (or similar body).

Recently I worked with Australian AI experts (such as Good Ancestors Policy) in making a submission to the safe and responsible AI consultation process. In the letter, we called on the government to acknowledge the potential catastrophic and existential risks from artificial intelligence. More on that can be found here.

There are many immediate risks from already existing AI systems like ChatGPT or Midjourney, such as disinformation or improper implementation in various businesses. In the not-so-distant future, certain safety nets will need to be activated (such as a Universal Basic Income policy) in the event of mass unemployment due to displacement of jobs with robots and AI systems.

But of greatest concern is the speed at which we are marching towards AGI (artificial general intelligence) – systems that will have cognitive abilities at or above human level.

Half of AI researchers believe that there is a 10% or greater chance that the invention of artificial superintelligence will mean the end of humanity. Among AI safety scientists, this chance is estimated to be an average of 30%. And these levels of risk aren’t just a concern for people in the far-distant future, with prediction markets such as Metaculus showing these kinds of AI could be invented in the next term of government.

Notable examples of individuals sounding the alarm are Prof. Geoffrey Hinton and Prof. Yoshua Bengio, both Turing-award winners and pioneers of the deep learning methods that are currently achieving the most success. The existential risk of AI has been acknowledged by hundreds of scientists, the UN, the US and recently the EU.

To make a long story short: we don't know how to align AI with the complex goals and values that humans have. When a superintelligent system is realised, there is a significant risk it will pursue a misaligned goal without us being able to stop it. And even if such a superhuman AI remains under human control, the person (or government) wielding such a power could use this to drastically, irreversibly change the world. Such an AI could be used to develop new technologies and weapons, manipulate masses of people or topple governments.

The advancements in the AI landscape have progressed much faster than anticipated. In 2020, it was estimated that an AI would pass university entrance exams by 2050. This goal was achieved in March 2023 by the system GPT-4 from OpenAI. These massive, unexpected leaps have prompted many experts to request a pause in AI development through an open letter to major AI companies. The letter has been signed over 33,000 times so far, including many AI researchers and tech figures.

Unfortunately, it seems that companies are not willing to jeopardise their competitive position by voluntarily halting development. A pause would need to be imposed by a government. Luckily, there seems to be broad support for slowing down AI development. A recent poll indicates that 63% of Americans support regulations to prevent AI companies from building superintelligent AI. At the national level, a pause is also challenging because countries have incentives to not fall behind in AI capabilities. That's why we need an international solution.

The UK organised an AI Safety Summit on November 1st and 2nd at Bletchley Park. We hoped that during this summit, leaders would work towards sensible solutions that prevent the very worst of the risks that AI poses. As such I was excited to see that Australia signed the Bletchley Declaration, agreeing that this risk is real and warrants coordinated international action. However, the recent policy statements by Minister Husic don't seem to align with the urgency that experts are seeing. The last safe moment to act could be very soon.

The Summit has not yet produced an international agreement or policy. We have seen proposals being written by the US Senate, and even AI company CEOs have said there is “overwhelming consensus” that regulation is needed. But no proposal so far has seriously considered ways to slow down or prevent a superintelligent AI from being created. I am afraid that lobbying efforts by AI companies to keep regulation at a minimum are turning out to be highly effective.

It's essential that the government follows through on its commitment at Bletchley Park to create a national or regional AI safety body. We have such bodies for everything from the risk of plane crashes to the risk of tsunamis. We urgently need one on ensuring the safety of AI systems.

Anyway, I'd love to discuss this more in person or via Zoom if you're in town soon.

Let me know what you think.

Cheers,

Yanni

Vasco Grilo @ 2024-02-28T17:55 (+2)

Thanks for sharing, Yanni, and it is really cool that you managed to get Australia's Assistant Minister for Defence interested in creating an AI Safety Institute!

More on that can be found here.

Did you mean to include a link?

In 2020, it was estimated that an AI would pass university entrance exams by 2050.

The Metaculus question you link to involves meeting many conditions besides passing university exams:

For these purposes we will thus define "AI system" as a single unified software system that can satisfy the following criteria, all easily completable by a typical college-educated human.

- Able to reliably pass a Turing test of the type that would win the Loebner Silver Prize.

- Able to score 90% or more on a robust version of the Winograd Schema Challenge, e.g. the "Winogrande" challenge or comparable data set for which human performance is at 90+%

- Be able to score 75th percentile (as compared to the corresponding year's human students; this was a score of 600 in 2016) on all the full mathematics section of a circa-2015-2020 standard SAT exam, using just images of the exam pages and having less than ten SAT exams as part of the training data. (Training on other corpuses of math problems is fair game as long as they are arguably distinct from SAT exams.)

- Be able to learn the classic Atari game "Montezuma's revenge" (based on just visual inputs and standard controls) and explore all 24 rooms based on the equivalent of less than 100 hours of real-time play (see closely-related question.)

yanni kyriacos @ 2024-05-14T04:29 (+22)

[PHOTO] I sent 19 emails to politicians, had 4 meetings, and now I get emails like this. There is SO MUCH low hanging fruit in just doing this for 30 minutes a day (I would do it but my LTFF funding does not cover this). Someone should do this!

Linch @ 2024-05-16T23:59 (+6)

I would do it but my LTFF funding does not cover this

(Speaking as someone on LTFF, but not on behalf of LTFF)

How large of a constraint is this for you? I don't have strong opinions on whether this work is better than what you're funded to do, but usually I think it's bad if LTFF funding causes people to do things that they think are less (positively) impactful!

We probably can't fund people to do things that are lobbying or lobbying-adjacent, but I'm keen to figure out or otherwise brainstorm an arrangement that works for you.

yanni kyriacos @ 2024-05-17T22:07 (+1)

Hey Linch, thanks for reaching out! Maybe send me your email or HMU here yannikyriacos@gmail.com

huw @ 2024-05-14T05:26 (+4)

(I think you blanked out their name from their email footer but not from the bottom of the email, but not sure how anonymous you wanted to keep them.)

yanni kyriacos @ 2024-05-14T09:26 (+2)

ffs

yanni kyriacos @ 2024-05-14T09:28 (+1)

fixed. thanks mate :)

Stephen Clare @ 2024-05-16T17:08 (+11)

You should probably also blank their job title (which would make it easy to work out who they are) and their phone number (!)

yanni kyriacos @ 2024-05-17T22:05 (+1)

Why am I so bad at this Stephen. Send help.

huw @ 2024-05-14T09:39 (+2)

🫶

Yanni Kyriacos @ 2024-04-01T23:49 (+19)

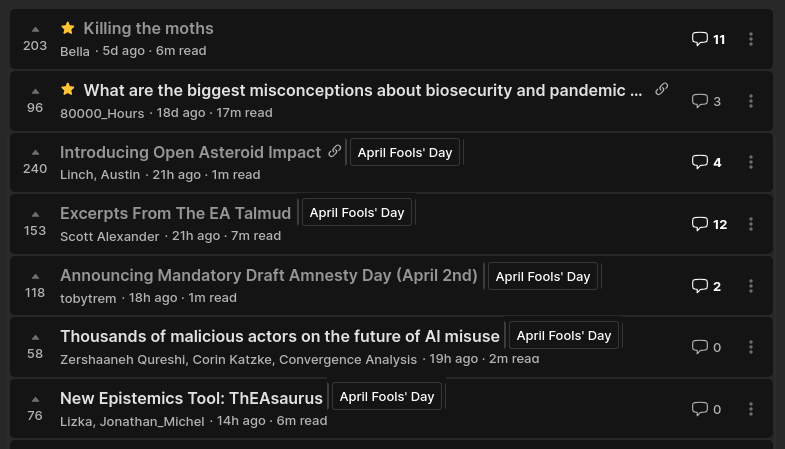

RIP to any posts on anything earnest over the last 48 hours. Maybe in future we don't tag anything April Fools and it is otherwise a complete blackout on serious posts 😅

BrownHairedEevee @ 2024-04-02T05:24 (+6)

How about making the April Fool's Day tag visible on the forum frontpage, like so?

Darren McKee @ 2024-04-02T15:47 (+12)

Something(!) needs to be done. Otherwise, it's just a mess for clarity and the communication of ideas.

Yanni Kyriacos @ 2024-04-02T20:04 (+8)

I think the hilarity is in the confusion / click bait. Your idea would rob us of this! I think the best course of action is for anyone with a serious post to wait until April 3 :|

tobytrem @ 2024-04-03T08:47 (+2)

Not a solution to everything mentioned here- but a reminder that you can click "customize feed" at the top of the page and remove all posts tagged april fools.

Yanni Kyriacos @ 2024-04-04T10:31 (+1)

nah let's lean all the way in, for one day a year, the wild west out here.

Yanni Kyriacos @ 2024-04-03T00:56 (+1)

Damn just had the idea of a "Who wants to be Fired?" post.

Yanni Kyriacos @ 2024-03-05T22:55 (+18)

I think if you work in AI Safety (or want to) it is very important to be extremely skeptical of your motivations for working in the space. This applies to being skeptical of interventions within AI Safety as well.

For example, EAs (like most people!) are motivated to do things they're (1) good at (2) see as high status (i.e. people very quietly ask themselves 'would someone who I perceive as high status approve of my belief or action?'). Based on this, I am worried that many EAs (1) find protesting AI labs (and advocating for a Pause in general) cringy and/or awkward (2) ignore the potential impact of organisations such as PauseAI.

We might all literally die soon because of misaligned AI, so what I'm recommending is that anyone seriously considering AI Safety as a career path spends a lot of time on the question of 'what is really motivating me here?'

Yanni Kyriacos @ 2024-03-06T00:40 (+7)

fwiw i think this works in both directions - people who are "action" focussed probably have a bias towards advocacy / protesting and underweight the usefulness of research.

Yanni Kyriacos @ 2024-02-21T04:10 (+18)

I have written 7 emails to 7 Politicians aiming to meet them to discuss AI Safety, and already have 2 meetings.

Normally, I'd put this kind of post on twitter, but I'm not on twitter, so it is here instead.

I just want people to know that if they're worried about AI Safety, believe more government engagement is a good thing and can hold a decent conversation (i.e. you understand the issue and are a good verbal/written communicator), then this could be an underrated path to high impact.

Another thing that is great about it is you can choose how many emails to send and how many meetings to have. So it can be done on the side of a "day job".

yanni kyriacos @ 2024-09-20T20:03 (+16)

Big AIS news imo: “The initial members of the International Network of AI Safety Institutes are Australia, Canada, the European Union, France, Japan, Kenya, the Republic of Korea, Singapore, the United Kingdom, and the United States.”

H/T @shakeel

yanni kyriacos @ 2024-08-23T20:43 (+16)

Another shower thought - I’ve been vegetarian for 7 years and vegan for 6.5.

I find it very surprising and interesting how little it has nudged anyone in my friends / family circle to reduce their consumption of meat.

I would go as far as to say it has made literally no difference!

Keep in mind I’m surrounded by extremely compassionate and mostly left leaning people.

I also have relatively wide networks and I normally talk about animal welfare in a non annoying way.

You’d have thought in that time one person would have been nudged - nope!

My intention going vegan wasn’t as some social statement, but if you’d asked me 7 years later would there have been some social effect, I would have guessed yes.

I’m surprised!

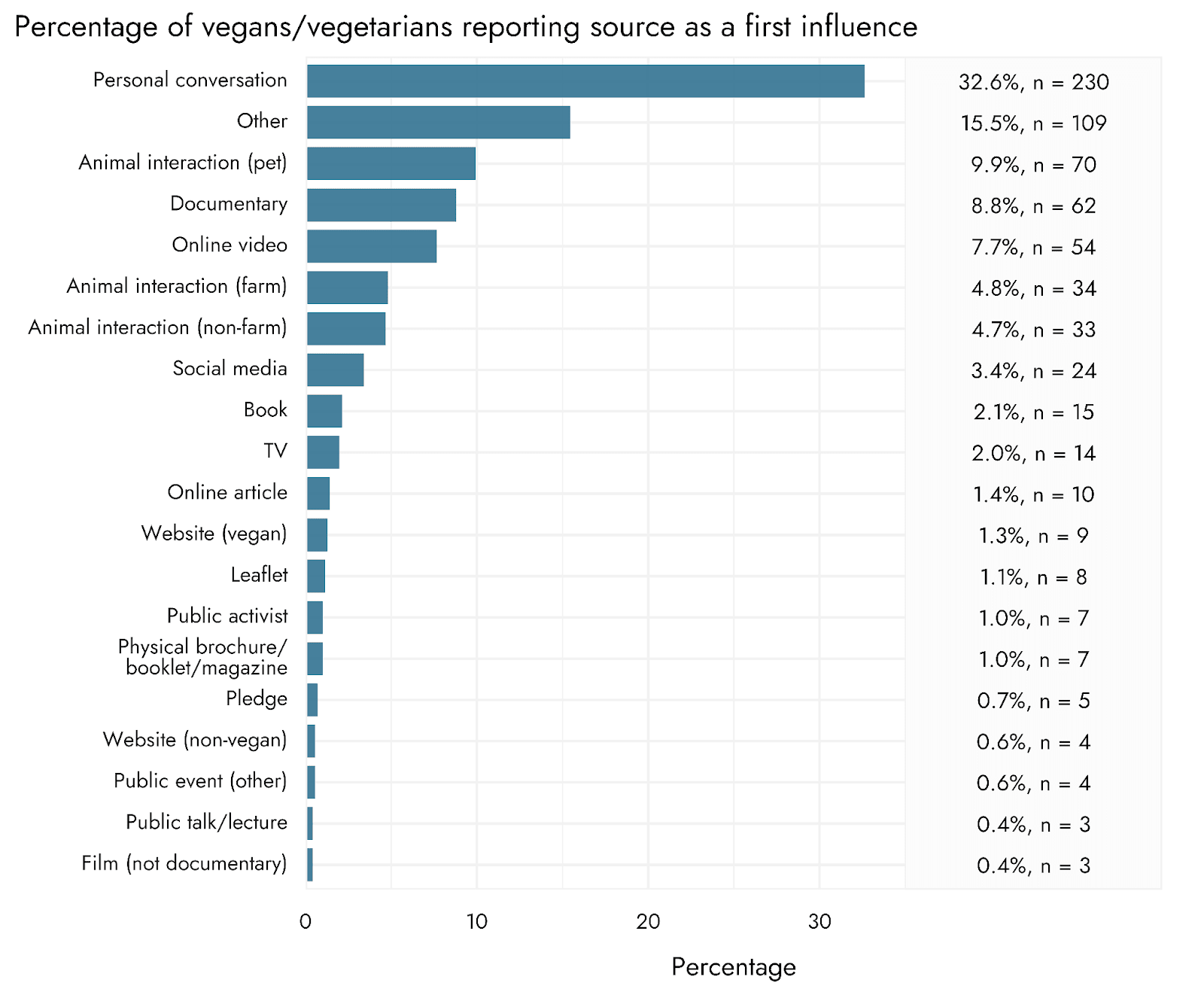

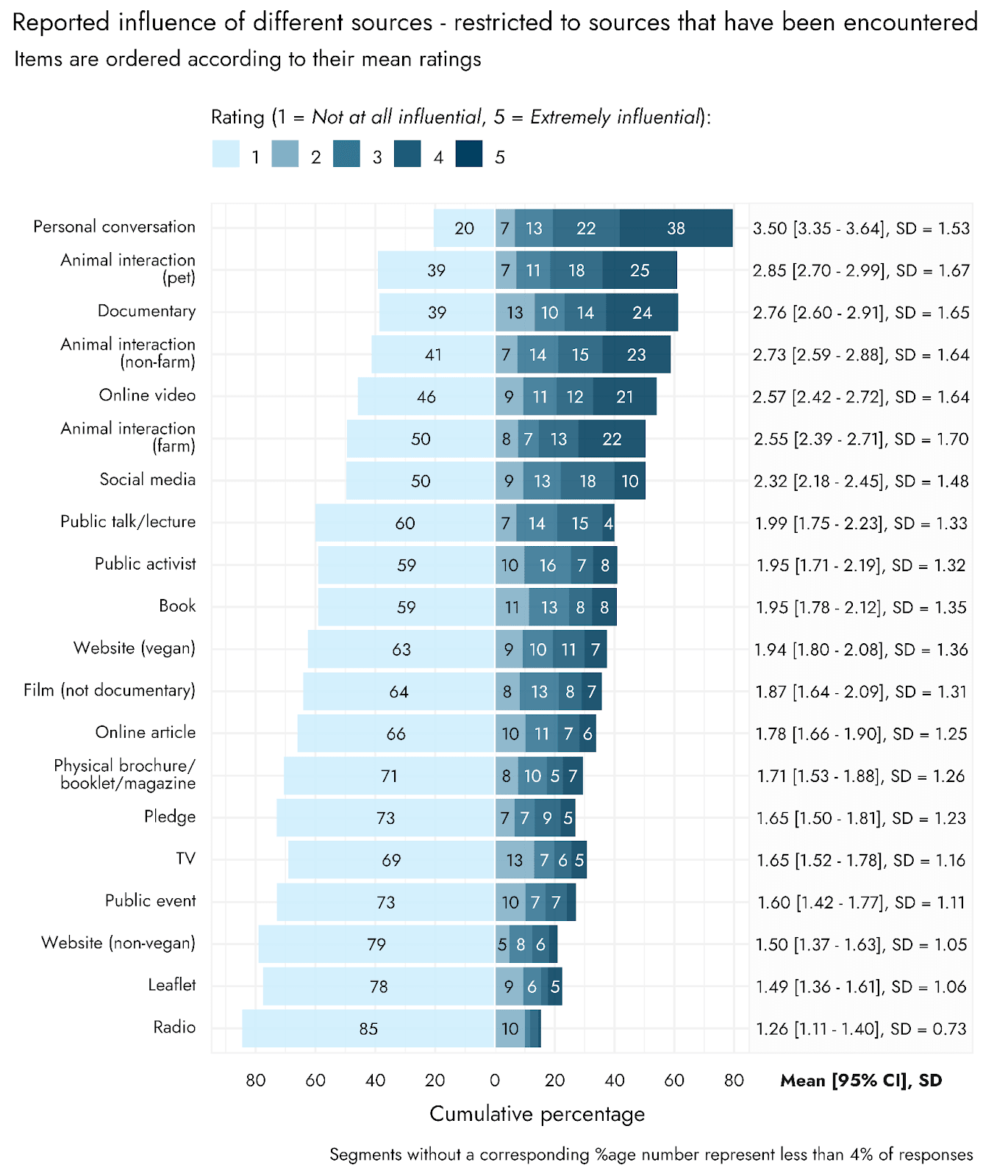

David_Moss @ 2024-08-24T13:49 (+16)

When we asked current vegetarians/vegans what influenced them to adopt their diet, personal conversations (primarily with friends/family) were among the top influences.

So your surprise/expectation seems reasonable! Of course, I don't know whether it's actually surprising, since presumably whether anyone actually converts depends on lots of other features of a given social network (do your networks contain a lot of people who were already vegetarian/vegan?).

yanni kyriacos @ 2024-08-24T19:34 (+2)

Thanks for sharing that data David, I appreciate that :)

Do you think it can answer the question; If you're the first in your family to switch to veganism/vegetarianism, how likely is it that another family member will follow suit within X years?

(Also, no, there aren't any vegetarians or vegans among my friends or family).

FWIW I'd probably count myself as having ~ 30 close friends and family.

This also seems like good research for the GWWC pledge.

David_Moss @ 2024-08-24T20:03 (+2)

Thanks!

Do you think it can answer the question; If you're the first in your family to switch to veganism/vegetarianism, how likely is it that another family member will follow suit within X years?

I'm afraid not. I think we'd need to know more about the respondents' families and the order in which they adopted veganism/vegetarianism to assess that. I agree that it sounds like an interesting research question!

yanni kyriacos @ 2024-08-24T22:19 (+2)

Maybe one day 😊

Sean Sweeney @ 2024-08-23T22:54 (+6)

Have you tried cooking your best vegan recipes for others? In my experience sometimes people ask for the recipe and make it for themselves later, especially health-conscious people. For instance, I really like this vegan pumpkin pie that's super easy to make: https://itdoesnttastelikechicken.com/easy-vegan-pumpkin-pie/

yanni kyriacos @ 2024-08-24T13:19 (+2)

Thanks for the comment Sean. Because of me they’ve (seemingly gladly) eaten vegan-only meals dozens of times. My mum has even made me vegan dishes to take home on dozens of occasions. They almost all acknowledge the horror of factory farming. Veganism is just firmly bucketed as a “yanni thing”.

quila @ 2024-08-24T22:55 (+1)

That is interesting.

I think that most beings[1] who I've become very close with (I estimate this reference class is ≤10) have gone vegan in a way that feels like it was because of our discussions, though it's possible they would have eventually otherwise, or that our discussions were just the last straw.

In retrospect, I wonder if I had first emanated a framing of taking morality seriously (such that "X is bad" -> "I will {not do X} or try to stop X"). I think I also tended to become that close with beings who do already, and who are more intelligent/willing to reflect than what seems normal.

[1] (I write 'being' because some are otherkin)

Yanni Kyriacos @ 2024-03-27T07:33 (+16)

[GIF] A feature I'd love on the forum: while posts are read back to you, the part of the text that is being read is highlighted. This exists on Naturalreaders.com and I would love to see it here (great for people who have wandering minds like me)

JP Addison @ 2024-03-27T18:25 (+4)

I agree with you, and so does our issue tracker. Sadly, it does seem a bit hard. Tagging @peterhartree as a person who might be able to tell me that it's less hard than I think.

Yanni Kyriacos @ 2024-03-27T21:56 (+4)

As someone who works with software engineers, I have respect for how simple-appearing things can actually be technically challenging.

Lorenzo Buonanno @ 2024-03-28T10:09 (+2)

For what it's worth, I would find the first part of the issue (i.e. making the player "floating" or "sticky") already quite useful, and it seems much easier to implement.

Yanni Kyriacos @ 2024-03-13T23:56 (+16)

I think acting on the margins is still very underrated. For example, I think 5x the amount of advocacy for a Pause on capabilities development of frontier AI models would be great. I also think in 12 months' time it would be fine for me to reevaluate this take and say something like 'ok that's enough Pause advocacy'.

Basically, you shouldn't feel 'locked in' to any view. And if you're starting to feel like you're part of a tribe, then that could be a bad sign you've been psychographically locked in.

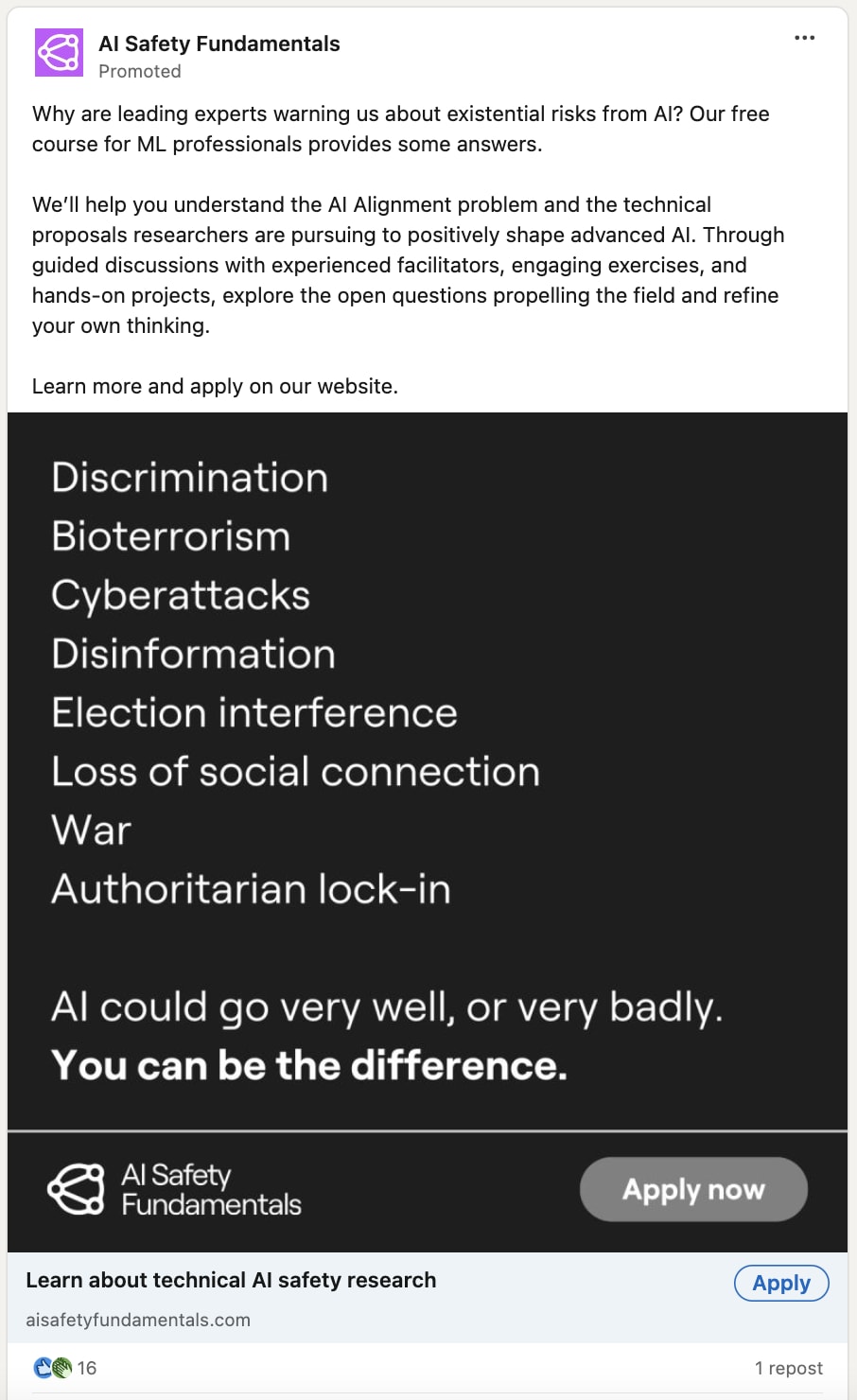

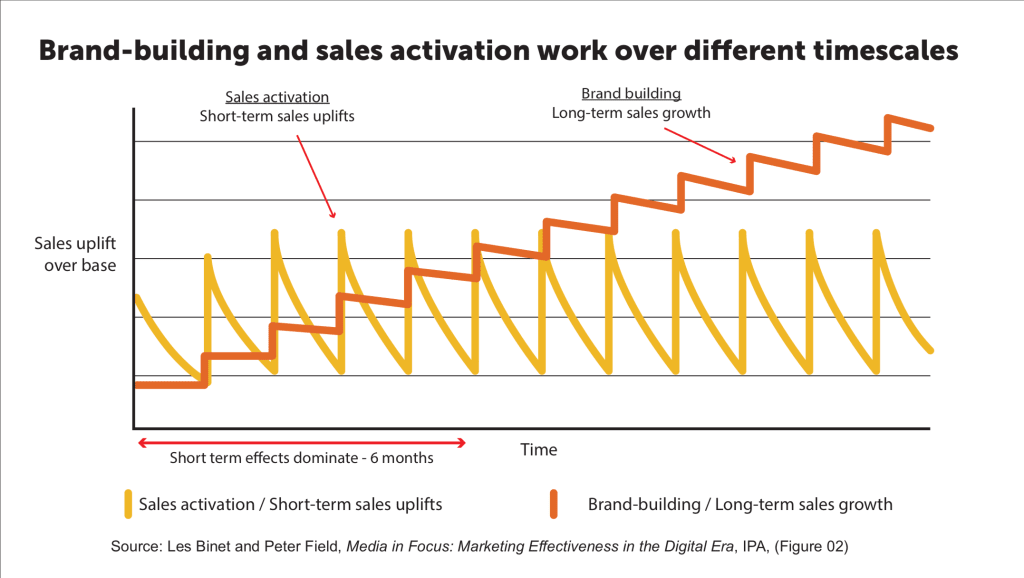

yanni kyriacos @ 2024-11-05T23:46 (+15)

I'm pretty confident that Marketing is in the top 1-3 skill bases for aspiring Community / Movement Builders.

When I say Marketing, I mean it in the broad sense it used to mean. In recent years "Marketing" = "Advertising", but I use the classic Four P's of Marketing to describe it.

The best places to get such a skill base are FMCG / mass marketing organisations such as the below. Second best would be consulting firms (McKinsey & Company):

- Procter & Gamble (P&G)

- Unilever

- Coca-Cola

- Amazon

1. Product

- What you're selling (goods or services)

- Features and benefits

- Quality, design, packaging

- Brand name and reputation

- Customer service and support

2. Price

- Retail/wholesale pricing

- Discounts and promotions

- Payment terms

- Pricing strategy (premium, economy, etc.)

- Price comparison with competitors

3. Place (Distribution)

- Sales channels

- Physical/online locations

- Market coverage

- Inventory management

- Transportation and logistics

- Accessibility to customers

4. Promotion

- Advertising

- Public relations

- Sales promotions

- Direct marketing

- Digital marketing

- Personal selling

yanni kyriacos @ 2024-12-31T11:08 (+13)

Happy new year everyone! I’m extremely grateful to have this community in my life!

yanni kyriacos @ 2024-07-29T02:02 (+13)

The Australian Federal Government is setting up an "AI Advisory Body" inside the Department of Industry, Science and Resources.

IMO that means Australia has an AISI in:

9 months (~ 30% confidence)

12 months (~ 50%)

18 months (~ 80%)

Rebecca @ 2024-07-29T05:46 (+2)

That link doesn’t work for me fyi

yanni kyriacos @ 2024-07-29T07:02 (+2)

Interestingly, they've taken the page down and the open positions on LinkedIn.

My guess is they announced too early.

huw @ 2024-07-29T03:15 (+1)

Nice work—obviously it's hard to attribute but I'm sure the combined lobbying of all the EAs working on this in Aus have contributed to this one.

yanni kyriacos @ 2024-07-29T05:47 (+3)

I'd assign a 75% probability that we've made it happen somewhat* sooner than it otherwise would have.

*Maybe 6 months? I honestly have no clue.

yanni kyriacos @ 2024-12-11T00:04 (+12)

What is your AI Capabilities Red Line Personal Statement? It should read something like "when AI can do X in Y way, then I think we should be extremely worried / advocate for a Pause*".

I think it would be valuable if people started doing this; we can't feel when we're on an exponential, so it's likely we will have powerful AI creep up on us.

@Greg_Colbourn just posted this and I have an intuition that people are going to read it and say "while it can do Y it still can't do X"

*in the case you think a Pause is ever optimal.

yanni kyriacos @ 2024-05-16T00:11 (+12)

Yesterday Greg Sadler and I met with the President of the Australian Association of Voice Actors. Like us, they've been lobbying for more and better AI regulation from government. I was surprised how much overlap we had in concerns and potential solutions:

1. Transparency and explainability of AI model data use (concern)

2. Importance of interpretability (solution)

3. Mis/dis information from deepfakes (concern)

4. Lack of liability for the creators of AI if any harms eventuate (concern + solution)

5. Unemployment without safety nets for Australians (concern)

6. Rate of capabilities development (concern)

They may even support the creation of an AI Safety Institute in Australia. Don't underestimate who could be allies moving forward!

yanni kyriacos @ 2024-11-07T04:07 (+11)

Flaming hot take: I wonder if some EAs suffer from Scope Oversensitivity - essentially the inverse of the identifiable victim effect. Take the animal welfare vs global health debate: are we sometimes biased by the sheer magnitude of animal suffering numbers, rather than other relevant factors? Just as the identifiable victim effect leads people to overweight individual stories, maybe we're overweighting astronomical numbers.

EAs pride themselves on scope sensitivity to combat emotional biases, but taken to an extreme, could this create its own bias? Are we sometimes too seduced by bigger numbers = bigger problem? The meta-principle might be that any framework, even one designed to correct cognitive biases, needs wisdom and balance to avoid becoming its own kind of distortion.

Ben Millwood🔸 @ 2024-11-07T14:45 (+4)

Scope insensitivity has some empirical backing -- e.g. the helping birds study -- and some theorised mechanisms of action, e.g. people lacking intuitive understanding of large numbers.

Scope oversensitivity seems possible in theory, but I can't think of any similar empirical or theoretical reasons to think it's actually happening.

To the extent that you disagree, it's not clear to me whether it's because you and I disagree on how EAs weight things like animal suffering, or whether we disagree on how it ought to be weighted. Are you intending to cast doubt on the idea that a problem that is 100x as large is (all else equal) 100x more important, or are you intending to suggest that EAs treat it as more than 100x as important?

MichaelDickens @ 2024-11-07T05:43 (+2)

Upvoted and disagree-voted.

Do you have any particular reason to believe that EAs overweight large problems?

Take the animal welfare vs global health debate: are we sometimes biased by the sheer magnitude of animal suffering numbers, rather than other relevant factors?

EAs donate much more to global health than to animal welfare. Do you think the ratio should be even higher still?

Yanni Kyriacos @ 2024-03-01T23:25 (+10)

The general public wants frontier AI models regulated and there don't seem to be grassroots-focussed orgs attempting to capture and funnel this energy into influencing politicians. E.g. via this kind of activity. This seems like massively low hanging fruit. An example of an organisation that does this (but for GH&W) is Results Australia. Someone should set up such an org.

Yanni Kyriacos @ 2024-03-02T00:13 (+1)

My impression is that PauseAI focusses more on media engagement + protests, which I consider a good but separate thing. Results Australia, as an example, focuses (almost exclusively) on having concerned citizens interacting directly with politicians. Maybe it would be a good thing for orgs to focus on separate things (e.g. for reasons of perception + specialisation). I lean in this direction but remain pretty unsure.

joepio @ 2024-03-02T09:47 (+5)

Founder of PauseAI here. I know our protests are the most visible, but they are actually a small portion of what we do. People always talk about the protests, but I think we actually had most results through invisible volunteer lobbying. Personally, I've spent way more time sending emails to politicians and journalists, meeting them and informing them of the issues. I wrote an Email Builder to get volunteers to write to their representatives, gave multiple workshops on doing so, and have seen many people (including you of course!) take action and ask for feedback in the discord.

I think combining both protesting and volunteer lobbying in one org is very powerful. It's not a new idea of course - orgs like GreenPeace have been using this strategy for decades. The protests create visibility and attention, and the lobbying gives the important people the right background information. The protests encourage more volunteers to join and help out, so we get more volunteer lobbyists! In my experience the protests also help with getting an in with politicians - it creates a recognizable brand that shows you represent a larger group.

yanni kyriacos @ 2024-09-12T22:22 (+9)

Is anyone in the AI Governance-Comms space working on what public outreach should look like if lots of jobs start getting automated in < 3 years?

I point to Travel Agents a lot not to pick on them, but because they're salient and there are lots of them. I think there is a reasonable chance in 3 years that industry loses 50% of its workers (3 million globally).

People are going to start freaking out about this. Which means we're in "December 2019" all over again, and we all remember how bad Government Comms were during COVID.

Now is the time to start working on the messaging!

yanni kyriacos @ 2024-08-20T02:46 (+9)

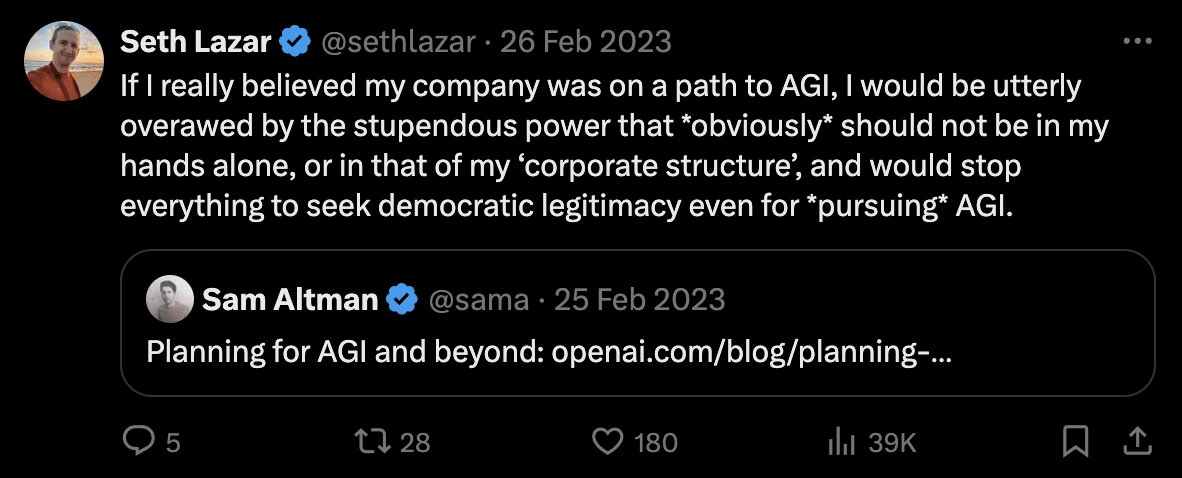

I believe we should worry about the pathology of people like Sam Altman, mostly because of what they're trying to achieve, without the consent of all governments and their respective populace. I found a tweet that summarises my own view better than I can. The pursuit of AGI isn't normal or acceptable and we should call it out as such.

Linch @ 2024-08-20T02:53 (+5)

Do you have an example of people disagreeing with you? When I made similar points before, I think they've been received relatively positively.

yanni kyriacos @ 2024-08-20T02:59 (+2)

I’ll dig it up. Could very well be my delivery is off putting.

yanni kyriacos @ 2024-08-20T03:39 (+2)

You know what, I'm gonna rewrite this quick take so it is about the tweet. Currently it is unnecessarily whiny / self-interested.

yanni kyriacos @ 2024-05-17T23:09 (+8)

Remember: EA institutions actively push talented people into the companies making the world changing tech the public have said THEY DON’T WANT. This is where the next big EA PR crisis will come from (50%). Except this time it won’t just be the tech bubble.

harfe @ 2024-05-18T18:53 (+8)

Is this about the safety teams at capabilities labs?

If so, I consider it a non-obvious issue, whether pushing talented people into an AI safety role at, e.g., DeepMind is a bad thing. If you think that is a bad thing, consider providing a more detailed argument, and writing a top-level post explaining your view.

If, instead, this is about EA institutions pushing people into capabilities roles, consider naming these concrete examples. As an example, 80k has a job advertising a role as a prompt engineer at Scale AI. That does not seem to be a very safety-focused role, and it is not clear how 80k wants to help prevent human extinction with that job ad.

Yanni Kyriacos @ 2024-04-04T10:36 (+8)

So I did a quick check today - I've sent 19 emails to politicians about AI Safety / x-risk and received 4 meetings. They've all had a really good vibe, and I've managed to get each of them to commit to something small (e.g. email XYZ person about setting up an AI Safety Institute). I'm pretty happy with the hit rate (4/19). I might do another forum quick take once I've sent 50.

yanni kyriacos @ 2025-01-21T23:28 (+7)

AI Safety has less money, talent, political capital, tech and time. We have only one distinct advantage: support from the general public. We need to start working that advantage immediately.

yanni kyriacos @ 2024-05-13T05:57 (+7)

I expect (~ 75%) that the decision to "funnel" EAs into jobs at AI labs will become a contentious community issue in the next year. I think that over time more people will think it is a bad idea. This may have PR and funding consequences too.

Lorenzo Buonanno @ 2024-05-13T07:39 (+7)

I expect (~ 75%) that the decision to "funnel" EAs into jobs at AI labs will become a contentious community issue in the next year.

My understanding is that this has been a contentious issue for many years already.

80,000 hours wrote a response to this last year, Scott Alexander had written about it in 2022, and Anthropic split from OpenAI in 2021. Do you mean that you expect this to become significantly more salient in the next year?

yanni kyriacos @ 2024-05-14T04:45 (+1)

Thanks for the reply Lorenzo! IMO it is going to look VERY weird seeing people continue to leave labs while EA fills the leaky bucket.

yanni kyriacos @ 2024-05-13T11:15 (+1)

Yeah my prediction lacked specificity. I expect it to become quite heated. I'm imagining 10+ posts on the topic next year with a total of 100+ comments. That's just on the forum.

OllieBase @ 2024-05-13T11:58 (+4)

I'd probably bet against this happening FWIW. Maybe a Manifold market?

Also, 100+ comments on the forum might not mean it's necessarily "heated"—a back and forth between two commenters can quickly increase the tally on any subject so that part might also need specifying further.

yanni kyriacos @ 2024-05-14T04:33 (+1)

I spent about 30 seconds thinking about how to quantify my prediction. I'm trying to point at something vague in a concrete way but failing. This also means that I don't think it is worth my time making it more concrete. The initial post was more of the "I am pretty confident this will be a community issue, just a heads up".

OllieBase @ 2024-05-14T17:15 (+2)

Seems reasonable :)

yanni kyriacos @ 2024-04-20T06:01 (+7)

Be the meme you want to see in the world (screenshot).

Yanni Kyriacos @ 2023-08-09T04:59 (+7)

What is your best guess of the overall impact of 80k interviewing AI labs on their podcast + listing AI lab vacant roles?

Poll: https://www.guidedtrack.com/programs/qj0ykwn/run

EdoArad @ 2023-08-09T07:15 (+2)

Yonatan Cale @ 2023-08-09T09:12 (+6)

My long thoughts:

1. 80k don't claim to only advertise impactful jobs

They also advertise jobs that help build career impact, and they're not against posting jobs that cause harm (and it's often/always not clear which is which). See more in this post.

They sometimes add features like marking "recommended orgs" (which I endorse!), and sometimes remove those features ( 😿 ).

2. 80k's career guide about working at AI labs doesn't dive into "which lab"

See here. Relevant text:

Recommended organisations

We’re really not sure. It seems like OpenAI, Google DeepMind, and Anthropic are currently taking existential risk more seriously than other labs.

I think [link to comment] the "which lab" question is really important, and I'd encourage 80k to either be opinionated about it, or at least help people make up their mind somehow, not just leave people hanging on "which lab" while also often recommending people go work at AI labs, and also mentioning that often that work is net-negative and recommending reducing the risk by not working "in certain positions unless you feel awesome about the lab".

[I have longer thoughts on how they could do this, but my main point is that it's (imo) an important hole in their recommendation that might be hidden from many readers]

3. Counterfactual / With great power comes great responsibility

If 80k wouldn't do all this, should we assume there would be no job board and no guides?

I claim that something like a job board has critical mass: Candidates know the best orgs are there, and orgs know the best candidates are there.

Once there's a job board with critical mass, it's not trivial to "compete" with it.

But EAs love opening job boards. A few new EA job boards pop up every year. I do think there would be an alternative. And so the question seems to me to be - how well are 80k using their critical mass?

4. What results did 80k's work actually cause?

First of all: I don't actually know, and if someone from 80k would respond, that would be way better than my guess.

Still, here's my guess, which I think would be better than just responding to the poll:

- Lots of engineers who care about AI Safety but don't have a deep understanding of it (and not much interest in spending months to learn) - go work at AI labs.

- Is this positive, because now people "care" in the labs, or negative because the labs have a much easier time hiring people who basically go and do their job? This is seriously a hard question, but I'd guess "negative" (and I think 80k would agree but I'm not sure)

- I wouldn't be surprised if 80k are directly responsible for a few very important hires.

- For example, I think the CISO (head of security) of Anthropic used to run the security of Chrome. I'm VERY happy Anthropic hired such a person, I think infosec is really important for AI labs, and I wouldn't be surprised if 80k had something to do with this, and if not this - then maybe some other similar role.

- I think this is very positive, and maybe more important than all the rest.

[I need to go, my comment seems incomplete but I hope it's still somewhat helpful so posting. I'm still not sure how to vote!]

Guy Raveh @ 2023-08-09T17:03 (+3)

And there's always the other option that I (unpopularly) believe in - that better publicly available AI capabilities are necessary for meaningful safety research, thus AI labs have contributed positively to the field.

yanni kyriacos @ 2024-11-21T01:29 (+6)

Ten months ago I met Australia's Assistant Defence Minister about AI Safety because I sent him one email asking for a meeting. I wrote about that here. In total I sent 21 emails to Politicians and had 4 meetings. AFAICT there is still no organisation with significant funding that does this as their primary activity. AI Safety advocacy is IMO still extremely low hanging fruit. My best theory is EAs don't want to do it / fund it because EAs are drawn to spreadsheets and google docs (it isn't their comparative advantage). Hammers like nails etc.

huw @ 2024-11-21T05:36 (+5)

I also think many EAs are still allergic to direct political advocacy, and that this tendency is stronger in more rationalist-ish cause areas such as AI. We shouldn’t forget Yudkowsky’s “politics is the mind-killer”!

yanni kyriacos @ 2024-11-21T07:58 (+6)

What a cop-out! Politics is a mind-killer if you're incapable of observing your mind.

yanni kyriacos @ 2024-10-17T01:28 (+6)

NotebookLM is basically magic. Just take whatever Forum post you can't be bothered reading but know you should and use NotebookLM to convert it into a podcast.

It seems reasonable that in 6 - 12 months there will be a button inside each Forum post that converts said post into a podcast (i.e. you won't need to visit NotebookLM to do it).

Yanni Kyriacos @ 2024-03-27T22:06 (+6)

It breaks my heart when I see eulogy posts on the forum. And while I greatly appreciate people going to the effort of writing them (while presumably experiencing grief), it still doesn't feel like enough. We're talking about people that dedicated their lives to doing good, and all they get is a post. I don't have a suggestion to address this 'problem', and some may even feel that a post is enough, but I don't. Maybe there is no good answer and death just sucks. I dunno.

Yanni Kyriacos @ 2023-07-27T00:44 (+6)

I think https://www.wakingup.com/ should be considered for effective organisation status. It donates 10% of revenue to the most effective causes and I think reaching nondual states of awakening could be one of the most effective ways for people in rich countries to improve their wellbeing.

Misha_Yagudin @ 2023-07-27T01:31 (+5)

Will Aldred @ 2023-07-28T00:47 (+2)

Also related (though more tangentially): https://podcast.clearerthinking.org/episode/167/michael-taft-and-jeremy-stevenson-glimpses-of-enlightenment-through-nondual-meditation/

yanni kyriacos @ 2024-06-21T09:43 (+5)

A piece of career advice I've given a few times recently to people in AI Safety, which I thought worth repeating here, is that AI Safety is so nascent a field that the following strategy could be worth pursuing:

1. Write your own job description (whatever it is that you're good at / brings joy into your life).

2. Find organisations that you think need that job but don't yet have it. This role should solve a problem they either don't know they have or haven't figured out how to solve.

3. Find the key decision maker and email them. Explain the (their) problem as you see it and how having this role could fix their problem. Explain why you're the person to do it.

I think this might work better for mid-career people, but, if you're just looking to skill up and don't mind volunteering, you could adapt this approach no matter what stage you're at in your career.

yanni kyriacos @ 2024-08-25T21:30 (+4)

I often see EA orgs looking to hire people to do Z, where applicants don't necessarily need experience doing Z to be successful.

E.g. instead of saying "must have minimum 4 years as an Operations Manager", they say "we are hiring for an Operations Manager. But you don't need experience as an Operations Manager, as long as you have competencies/skills (insert list) A - F."

This reminds me of when Bloomberg spent over $10M training a GPT-3.5 class AI on their own financial data, only to find that GPT-4 beat it on almost all finance tasks.

They thought proprietary data would perform better, but it turns out the largest models won (at the time at least).

I worry that saying "4 years of experience as Operations Manager" is less important than the "competencies A - F" we've identified, makes the same mistake.

You're gambling on having found the secret sauce. But as usual the more targeted the intervention, the more likely it is to miss.

titotal @ 2024-08-25T22:21 (+6)

If someone claims to have competencies A-F required to be an Operations Manager, they should have some way of proving that they actually have competencies A-F. A great way for them to prove this is to have 4 years of experience as Operations Manager. In fact, I struggle to think of a better way to prove this, and obviously a person with experience is preferable to someone with no experience for all kinds of reasons (they have encountered all the little errors, etc). As a bonus, somebody outside of your personal bubble has trusted this person.

EA should be relying on more subject matter experience, not less.

yanni kyriacos @ 2024-07-17T01:50 (+4)

Something bouncing around my head recently ... I think I agree with the notion that "you can't solve a problem at the level it was created".

A key point here is the difference between "solving" a problem and "minimising its harm".

- Solving a problem = engaging with a problem by going up a level from where it was created

- Minimising its harm = trying to solve it at the level it was created

Why is this important? Because I think EA and AI Safety have historically focussed on (and have their respective strengths in) harm-minimisation.

This obviously applies at the micro. Here are some bad examples:

- Problem: I'm experiencing intrusive + negative thoughts

- Minimising its harm: engage with the thought using CBT

- Attempting to solve it by going meta: apply meta cognitive therapy, see thoughts as empty of intrinsic value, as farts in the wind

- Problem: I'm having fights with my partner about doing the dishes

- Minimising its harm: create a spreadsheet and write down every thing each of us does around the house and calculate time spent

- Attempting to solve it by going meta: discuss our communication styles and emotional states when frustration arises

But I also think this applies at the macro:

- Problem: People love eating meat

- Minimising harm by acting at the level the problem was created: asking them not to eat meat

- Attempting to solve by going meta: replacing the meat with lab grown meat

- Problem: Unaligned AI might kill us

- Minimising harm by acting at the level the problem was created: understand the AI through mechanistic interpretability

- Attempting to solve by going meta: probably just Governance

Amber Dawn @ 2024-07-17T12:43 (+2)

To me, your examples at the micro level don't make the case that you can't solve a problem at the level it's created. I'm agnostic as to whether CBT or meta-cognitive therapy is better for intrusive thoughts, but lots of people like CBT; and as for 'doing the dishes', in my household we did solve the problem of conflicts around chores by making a spreadsheet. And to the extent that working on communication styles is helpful, that's because people (I'd claim) have a problem at the level of communication styles.

yanni kyriacos @ 2024-04-23T23:55 (+4)

This is an extremely "EA" request from me but I feel like we need a word for people (i.e. me) who are Vegans but will eat animal products if they're about to be thrown out. OpportuVegan? UtilaVegan?

Matt Goodman @ 2024-04-24T21:12 (+7)

Freegan

Bella @ 2024-04-24T10:18 (+5)

I think the term I've heard (from non-EAs) is 'freegan' (they'll eat it if it didn't cause more animal products to be purchased!)

yanni kyriacos @ 2024-04-24T20:28 (+1)

This seems close enough that I might co-opt it :)

https://en.wikipedia.org/wiki/Freeganism

tobytrem @ 2024-04-24T10:35 (+3)

If you predictably do this, you raise the odds that people around you will cook some / buy some extra food so that it will be "thrown out", or offer you food they haven't quite finished (and that they'll replace with a snack later).

So I'd recommend going with "Vegan" as your label, for practical as well as signalling reasons.

yanni kyriacos @ 2024-04-24T20:26 (+3)

Yeah this is a good point, which I've considered, which is why I basically only do it at home.

yanni kyriacos @ 2025-01-25T07:01 (+3)

Larry Ellison, who will invest tens of billions in Stargate, said uberveillance via AGI will be great because then police and the populace would always have to be on their best behaviour. It is best to assume the people pushing 8 billion of us into the singularity have psychopathy (or similar disorders). This matters because we need to know who we're going up against: there is no rationalising with these people. They aren't counting the QALYs!

Footage of Larry’s point of view starts around 12.00 on Matt Wolf’s video

yanni kyriacos @ 2025-01-10T01:38 (+3)

Solving the AGI alignment problem demands a herculean level of ambition, far beyond what we're currently bringing to bear. Dear Reader, grab a pen or open a google doc right now and answer these questions:

1) What would you do right now if you became 5x more ambitious?

2) If you believe we all might die soon, why aren't you doing the ambitious thing?

yanni kyriacos @ 2024-09-23T01:11 (+3)

I am 90% sure that most AI Safety talent aren't thinking hard enough about Neglectedness. The industry is so nascent that you could look at 10 analogous industries, see what processes or institutions are valuable and missing, and build an organisation around the highest impact one.

The highest impact job ≠ the highest impact opportunity for you!

Ben Millwood @ 2024-09-23T10:07 (+4)

you could look at 10 analogous industries, see what processes or institutions are valuable

I feel like you're making this sound simple when I'd expect questions like this to involve quite a bit of work, and potentially skills and expertise that I wouldn't expect people at the start of their careers to have yet.

Do you have any specific ideas for something that seems obviously missing to you?

yanni kyriacos @ 2024-09-22T22:38 (+3)

AI Safety (in the broadest possible sense, i.e. including ethics & bias) is going to be taken very seriously soon by Government decision makers in many countries. But without high quality talent staying in their home countries (i.e. not moving to UK or US), there is a reasonable chance that x/c-risk won’t be considered problems worth trying to solve. X/c-risk sympathisers need seats at the table. IMO local AIS movement builders should be thinking hard about how to either keep talent local (if they're experiencing brain drain) OR increase the amount of local talent coming into x/c-risk Safety, such that outgoing talent leakage isn't a problem.

ezrah @ 2024-09-23T13:15 (+3)

What types of influence do you think governments from small, low influence countries will be able to have?

For example, the NZ government - aren't they price-takers when it comes to AI regulation? If you're not a significant player, don't have significant resources to commit to the problem, and don't have any national GenAI companies - how will they influence the development trajectory of AI?

wes R @ 2024-09-22T23:43 (+1)

That's one way, but countries' (and other bodies') concerns are made up of both their citizens'/participants' concerns and other people's concerns. One is valued differently from the other, but there are other ways of keeping relevant actors from thinking it's not a problem (namely, utilizing the UN). You make a good point though.

yanni kyriacos @ 2024-09-19T01:52 (+3)

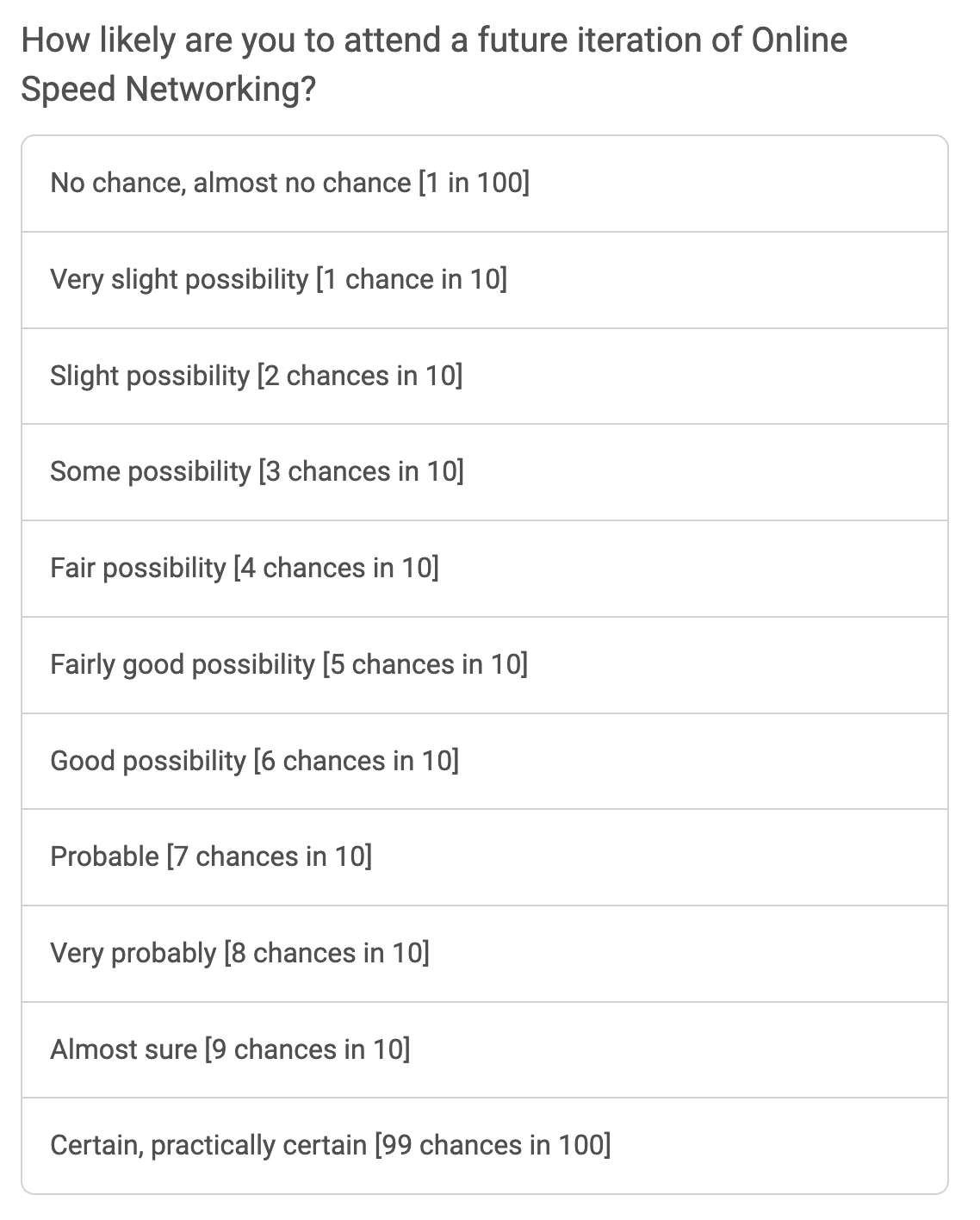

I beta tested a new movement building format last night: online networking. It seems to have legs.

V quick theory of change:

> problem it solves: not enough people in AIS across Australia (especially) and New Zealand are meeting each other (this is bad for the movement and people's impact).

> we need to brute force serendipity to create collabs.

> this initiative has v low cost

quantitative results:

> I purposefully didn't market it hard because it was a beta. I literally got more people than I hoped for

> 22 RSVPs and 18 attendees

> this says to me I could easily get 40+

> average score for below question was 7.27, which is very good for a beta test

I used Zoom, which was extremely clunky. These results suggest to me I should:

> invest in software designed for this use case, not zoom

> segment by career stream (governance vs technical) and/or experience (beginner vs advanced)

> run it every second month

I have heaps of qualitative feedback from participants but don't have time to share it here.

Email me if interested: yanni@aisafetyanz.com.au

yanni kyriacos @ 2024-05-22T00:25 (+3)

Two jobs in AI Safety Advocacy that AFAICT don't exist, but should and probably will very soon. Will EAs be the first to create them though? There is a strong first mover advantage waiting for someone -

1. Volunteer Coordinator - there will soon be a groundswell from the general population wanting to have a positive impact in AI. Most won't know how to. A volunteer manager will help capture and direct their efforts positively, for example, by having them write emails to politicians

2. Partnerships Manager - the President of the Voice Actors guild reached out to me recently. We had a very surprising amount of crossover in concerns and potential solutions. Voice Actors are the canary in the coal mine. More unions (etc) will follow very shortly. I imagine within 1 year there will be a formalised group of these different orgs advocating together.

Yanni Kyriacos @ 2024-03-06T23:03 (+3)