kuhanj's Quick takes

By kuhanj @ 2025-04-30T22:15 (+6)

kuhanj @ 2025-04-30T22:15 (+45)

I've been feeling increasingly strongly over the last couple of years that EA organizations and individuals (myself very much included) could be allocating resources and doing prioritization much more effectively. (That said, I think we're doing extremely well in relative terms, and greatly appreciate the community's willingness to engage in such difficult prioritization.)

Reasons why I think we're not realizing our potential:

- Not realizing our lack of clarity about how to most impactfully allocate resources (time, money, attention, etc). Relatedly, an implicit assumption that we're doing a pretty good job, and couldn't be doing significantly better. I speculate that FTX trauma has significantly reduced the community's ambition to an unwarranted degree.

- Path dependence + insufficient intervention prioritization research (in terms of quality, quantity, and frequency). I thought this post brought up good points about the relative lack of cross-cause prioritization research + dissemination in the EA community despite its importance.

- Being insufficiently open-minded about which areas and interventions might warrant resources/attention, and unwarranted deference to EA canon, 80K, Open Phil, EA/rationality thought leaders, etc.

- Poorly applying heuristics as a substitute for prioritization work. See this comment and discussion about the neglectedness heuristic causing us to miss out on impact. FWIW I believe this very strongly and think the community has missed out on a ton of impact because of this specific mistake, but I’m unable to write about specifics in detail publicly. Feel free to reach out if you’d like to discuss this in private.

- Aversion to feeling uncertainty and confusion (likely exacerbated by stress about AGI timelines).

- Attachment to feeling certainty, comfort and assurance about the ethical and epistemic justification of our past actions, thinking, and deference.

- Being slow to re-orient to important, quickly-evolving technological and geopolitical developments, and being unresponsive to certain kinds of evidence (e.g. inside AI world - not taking into account important political developments, outside of AI world - not taking AGI into account).

- Strong non-impact tracking incentives (e.g. strong social incentives to have certain beliefs, work at certain orgs, focus on certain topics), and weak incentives to figure out and act on what is actually most impactful. We don't hear future beings (or current beings for the most part) letting us know that we could be helping them much more effectively by taking one action over another. We do feel judgment from our friends/in group very saliently.

- Lacking the self-confidence/agency/courage/hero-licensing/interest/time/etc to figure things out ourselves, and share what we believe (and what we're confused about) with others - especially when it diverges from the aforementioned common sources of deference.

- This is a shame given how brilliant, dedicated, and experienced members of the community are, and how much insight people have to offer – both within the community, and to the broader world.

I'm collaborating on a research project exploring how to most effectively address concentration of power risks (which I think the community has been neglecting) to improve the LTF/mitigate x-risk, considering implications of AGI and potentially short timelines, and the current political landscape (mostly focused on the US, and to a lesser extent China). We're planning to collate, ideate, and prioritize among concrete interventions to work on and donate to, and compare their effectiveness against other longtermist/x-risk mitigation interventions. I'd be excited to collaborate with others interested in getting more clarity on how to best spend time, money, and other resources on longtermist grounds. Reach out (e.g. by EA Forum DM) if you're interested. :)

I would also love to see more individuals and orgs conduct, fund, and share more cross-cause prioritization analyses (especially in areas under-explored by the community) with discretion about when to share publicly vs. privately.

JWS 🔸 @ 2025-05-01T11:40 (+42)

I wish this post - and others like it - had more specific details when it comes to these kinds of criticisms, and had a more specific statement of what they are really taking issue with, because otherwise it sort of comes across as "I wish EA paid more attention to my object-level concerns", which ~everyone believes.

If the post is just meant to represent your opinions, that's perfectly fine, but I don't really think it changed my mind on its own merits. I also just don't like withholding private evidence. I know there are often good reasons for it, but it means I just can't give much credence to it tbqh. I know it's a quick take, but still, it left me without enough to actually evaluate.

In general this discussion reminds me a bit of my response to another criticism of EA/Actions by the EA Community, and I think what you view as 'sub-optimal' actions are instead best explained by people having:

- bounded rationality and resources (e.g. You can't evaluate every cause area, and your skills might not be as useful across all possible jobs)

- different values/moral ends (Some people may not be consequentialists at all, or prioritise existing vs possible people difference, or happiness vs suffering)

- different values in terms of process (e.g. Some people are happy making big bets on non-robust evidence, others much less so)

And that, with these constraints accepted, many people are not actually acting sub-optimally given their information and values (they may later with hindsight regret their actions or admit they were wrong, of course)

Some specific counterexamples:

- Being insufficiently open-minded about which areas and interventions might warrant resources/attention, and unwarranted deference to EA canon, 80K, Open Phil, EA/rationality thought leaders, etc. - I think this might be because of resource constraints, or because people disagree about what interventions are 'under invested' vs 'correctly not much invested in'. I feel like you just disagree with 80K & OpenPhil on something big and you know, I'd have liked you to say what that is and why you disagree with them instead of dancing around it.

- Attachment to feeling certainty, comfort and assurance about the ethical and epistemic justification of our past actions, thinking, and deference - What decisions do you mean here? And what do you even mean by 'our'? Surely the decisions you are thinking of apply to a subset of EAs, not all of EA? Like this is probably the point that most needed something specific.

Trying to read between the lines - I think you think that AGI is going to be a super big deal soon, and that its political consequences might be the most important and consequently political actions might be the most important ones to take? But OpenPhil and 80K haven't been on the ball with political stuff and now you're disillusioned? And people in other cause areas that aren't AI policy should presumably stop donating there and working there and pivot? I don't know, it doesn't feel accurate to me,[1] but like I can't get a more accurate picture because you just didn't provide any specifics for me to tune my mental model on 🤷‍♂️

Even having said all of this, I do think working on mitigating concentration-of-power-risks is a really promising direction for impact and wish you the best in pursuing it :)

[1] As in, I don't think you'd endorse this as a fair or accurate description of your views

kuhanj @ 2025-05-02T06:23 (+7)

Thanks for your comment, and I understand your frustration. I’m still figuring out how to communicate about specifics around why I feel strongly that incorrectly applying the neglectedness heuristic as a shortcut to avoid investigating whether investment in an area is warranted has led to tons of lost potential impact. And yes, US politics are, in my opinion, a central example. But I also think there are tons of others I’m not aware of, which brings me to the broader (meta) point I wanted to emphasize in the above take.

I wanted to focus on the case for more independent thinking, and discussion of how little cross-cause prioritization work there seems to be in EA world, rather than trying to convince the community of my current beliefs. I did initially include object-level takes on prioritization (which I may make another quick take about soon) in the comment, but decided to remove them for this reason: to keep the focus on the meta issue.

My guess is that many community members implicitly assume that cross-cause prioritization work is done frequently and rigorously enough to take into account important changes in the world, and that the takeaways get communicated such that EA resources get effectively allocated. I don’t think this is the case. If it is, the takeaways don’t seem to be communicated widely. I don’t know of a longtermist Givewell alternative for donations. I don’t see much rigorous cross-cause prioritization analysis from Open Phil, 80K, or on the EA forum to inform how to most impactfully spend time. Also, the priorities of the community have stayed surprisingly consistent over the years, despite many large changes in AI, politics, and more.

Given how important and difficult it is to do well, I think EAs should feel empowered to regularly contribute to cross-cause prioritization discourse, so we can all understand the world better and make better decisions.

Throwaway81 @ 2025-05-02T01:15 (+4)

I disagree-voted because I think that withholding private info should be a strong norm, and that it's not the poster's job to please the community with privileged info that could hurt them when they are already doing a service by posting. I also think it could possibly serve as an indicator of some sort (e.g., if people searched the forum for comments like this, it might point towards a trend of posters worrying about how much blowback they might get from funders/other EA orgs if actual criticism of them/backdoor convos were revealed -- whether that's a warranted worry or not). I also think that leaking private convos will hurt the person who leaks them, because now every time someone interacts with that person they will think that their convo might get leaked online and will not engage with them. Seems mean to ask someone to do that for you just so you can have more data to judge them on -- they are trying to communicate something real to you but obviously can't. I don't have any reason to doubt the poster unless they've lied before, and I have a strong trust norm unless there is a reason not to trust. But I double liked because most of the rest of your comment was good :-)

Bella @ 2025-05-01T07:52 (+22)

I agree with the substance but not the valence of this post.

I think it's true that EAs have made many mistakes, including me, some of which I've discussed with you :)

But I think that this post is an example of "counting down" when we should also remember the frame of "counting up."

That is — EAs are doing badly in the areas you mentioned because humans are very bad at the areas you mentioned. I don't know of any group where they have actually-correct incentives, reliably drive after truth, get big, complicated, messy questions like cross-cause prioritisation right — like, holy heck, that is a tall order!!

So you're right in substance, but I think your post has a valence of "EAs should feel embarrassed by their failures on this front", which I strongly disagree with. I think EAs should feel damn proud that they're trying.

kuhanj @ 2025-05-02T07:42 (+8)

Thanks for the feedback, and I’m sorry for causing that unintended (but foreseeable) reaction. I edited the wording of the original take to address your feedback. My intention for writing this was to encourage others to figure things out independently, share our thinking, and listen to our guts - especially when we disagree with the aforementioned sources of deference about how to do the most good.

I think EAs have done a surprisingly good job at identifying crucial insights, and acting accordingly. EAs also seem unusually willing to explicitly acknowledge opportunity cost and trade-offs (which I often find the rest of the world frustratingly unwilling to do). These are definitely worth celebrating.

However, I think our track record at translating the above into actually improving the future is nowhere near our potential.

Since I experience a lot of guilt about not being a good enough person, the EA community has provided a lot of much-needed comfort to handle the daunting challenge of doing as much good as I can. It’s been scary to confront the possibility that the “adults in charge” don’t have the important things figured out about how to do the most good. Given how the last few years have unfolded, they don’t even seem to be doing a particularly good job. Of course, this is very understandable. FTX trauma is intense, and the world is incredibly complicated. I don’t think I’m doing a particularly good job either.

But it has been liberating to allow myself to actually think, trust my gut, and not rely on the EA community/funders/orgs to assess how much impact I’m having relative to my potential. I expect that with more independent thinking, ambition, and courage, our community will do much better at realizing our potential moving forward.

Peter @ 2025-04-30T23:11 (+19)

I've been thinking about coup risks more lately so would actually be pretty keen to collaborate or give feedback on any early stuff. There isn't much work on this (for example, none at RAND as far as I can tell).

I think EAs have frequently suffered from a lack of expertise, which causes pain in areas like politics. Almost every EA and AI safety person was way off on the magnitude of change a Trump win would create - gutting USAID easily dwarfs all of EA global health by orders of magnitude. Basically no one took this seriously as a possibility, or at least I do not know of anyone. And it's not like you'd normally be incentivized to plan for abrupt major changes to a longstanding status quo in the first place.

Oversimplification of neglectedness has definitely been an unfortunate meme for a while. Sometimes things are too neglected to make progress or don't make sense for your skillset, or are neglected for a reason, or just less impactful. To a lesser extent, I think there has been some misuse/misunderstanding of counterfactual thinking as well instead of Shapley additives. Or being overly optimistic "our few week fellowship can very likely change someone's entrenched career path" if they haven't strongly shown that as their purpose for participating.

Definitely agree we have a problem with deference/not figuring things out. It's hard and there's lots of imposter syndrome where people think they aren't good enough to do this or try to do it. I think sometimes people get early negative feedback and over-update, dropping projects before they've tested things to see results. I would definitely like to see more rigorous impact evaluation in the space. At one point I wanted to start an independent org that did this. It seems surprisingly underprioritized. There's a meme that EAs like to think and research and need to just do more things, but I think it's a bit of a false dichotomy and on net more research + iteration is valuable and amplifies your effectiveness, making sure you're prioritizing the right things in the right ways.

Another way deference expresses negative effects is that established orgs act as whirlpools that suck up all the talent and offer more "legitimacy" including frontier AI companies, but I think they're often not the highest impact thing you could do. Often there is something that would be impactful but won't happen if you don't do it. Or would happen worse. Or happen way later. People also underestimate how much the org they work at will change how they think and what they think about and what they want to do or are willing to give up. But finding alternatives can be tough - how many people really want to continue working as independent contractors with no benefits and no coworkers indefinitely? It's very adverse selection against impact. Sure, this level of competition might weed out some worse ideas but also good ones.

Yelnats T.J. @ 2025-05-01T00:22 (+18)

"Basically no one took this seriously as a possibility, or at least I do not know of anyone."

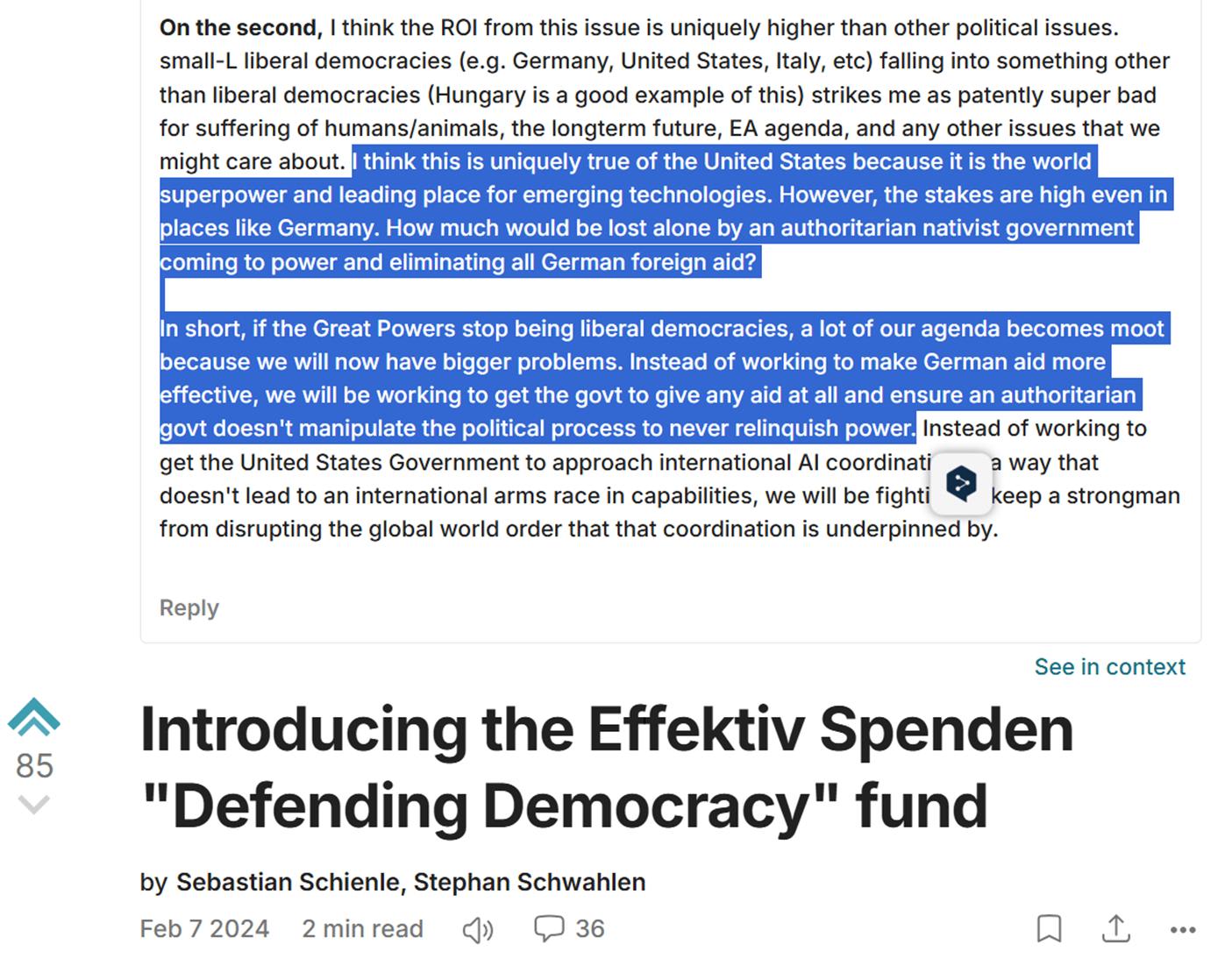

I alluded to this over a year ago in this comment, which might count in your book as taking it seriously. But to be honest, where we are at in Day 100 of this administration is not the territory I expected us to be in until at least the 2nd year.

I think these people do exist (those that appreciated the second term for the risks it presented) and I'll count myself as one of them. I think we are just less visible because we push this concern a lot less in the EA discourse than other topics because 1) the people who hold these viewpoints and are willing to be vocal about them are a small minority of EA*, 2) espousing these views is perceived as a way to lose social capital, and 3) EA institutions have made decisions that have somewhat gatekept how much of this discourse can take place in EA official venues.

Note on #1

A lot of potential EAs (people who embrace EA principles and come to the conclusion that the way to do the most good is to work on democracy/political systems/politics of Great Powers) interact with the community, are put off by the little engagement and, sometimes, dismissiveness of the community towards this cause area, and then decide that rather than fight the uphill battle of moving the Overton window they will instead retreat back to other communities more aligned with their conclusions.

This characterized my own relationship with EA. Despite knowing and resonating with EA since 2013/2014, I did not engage deeply with the community until 2022 because there seemed to be little to no overlap with people that wanted to change the political system and address the politics upstream of the policies EA spends so much time thinking about how to influence. I think this space is still small in EA but is garnering more interest and will only continue to do so, because I think we are at the beginning and not the end of this moment in history.

Note on #1 and #2

When I talk with EAs one-on-one, a substantial portion share my views that EA neglects politics of the world’s superpower and the political system upstream of those politics. However, very few act on these beliefs or take much time to vocalize them. I think people underestimate how much people share this sentiment, which only makes it less likely for people to speak out (which of course, leads back to people underestimating the prevalence of the belief).

Note on #3

CEA once allowed me to speak on the topic of risks to the US system at an EAGxVirtual (kudos where it is due). However, I’ve inquired multiple times with CEA since 2022 about running such an event at an actual EAG and have always been declined; I think this is a clear area of improvement. I’d also like to see networking meetups for people interested in this area at the EAGs themselves instead of people resorting to personally organizing satellite events around them; recently there was indication CEA was open to this.

On the Forum, posts in and around this topic can, and sometimes do, get marked as community posts and thus lose visibility. This is not to say it happens all the time. There are posts that make it to the main page that others would want to see moved to community.

calebp @ 2025-04-30T23:21 (+7)

I agree with this take, but I think that this is primarily an agency and "permission to just do things" issue, rather than people not being good at prioritisation. It requires some amount of being willing to be wrong publicly and fail in embarrassing ways to actually go and explore under-explored things, and in general, I think that current EA institutions (including people on the EA forum) don't celebrate people actually getting a bunch of stuff done and testing a bunch of hypotheses (otoh, shutting down your project is celebrated which is good imo - though ~only if you write a forum post about it).

I guess overall, I don't think that people are as bottlenecked on how much prioritisation they are doing, but are pretty bottlenecked on "just doing things" and not staring into the void enough to realise that they should move on from their current project or pivot (even when people on the forum will get annoyed with them). In part, these considerations converge because it turns out that many projects are fairly bottlenecked by the quality of execution rather than by the ideas themselves, and actually trying out a project and iterating on it seems to reliably improve projects.

In general, my modal advice for EAs has shifted from "try to think hard about what is impactful" towards "consider just doing stuff now" or "suppose you have 10x more agency, what would you do? Maybe do that thing instead?".

Jordan Arel @ 2025-05-05T23:18 (+4)

I very much agree that we need less deference and more people thinking for themselves, especially on cause prioritization. I think this is especially important for people who have high talent/skill in this direction, as I think it can be quite hard to do well.

It’s a huge problem that the current system is not great at valuing and incentivizing this type of work, as I think this causes a lot of the potentially highly competent cause prioritization people to go in other directions. I’ve been a huge advocate for this for a long time.

I think it is somewhat hard to systematically address, but I’m really glad you are pointing this out and inviting collaboration on your work. I do think concentration of power is extremely neglected and one of the things that most determines how well the future will go (and not just in terms of extinction risk but upside/opportunity cost risks as well).

Going to send you a DM now!