When to get off the train to crazy town?

By mariushobbhahn @ 2021-11-22T07:30 (+79)

In her appearance on the 80k podcast, Ajeya Cotra introduces the concept of the train to crazy town.

Ajeya Cotra: And so when the philosopher takes you to a very weird unintuitive place — and, furthermore, wants you to give up all of the other goals that on other ways of thinking about the world that aren’t philosophical seem like they’re worth pursuing — they’re just like, stop… I sometimes think of it as a train going to crazy town, and the near-termist side is like, I’m going to get off the train before we get to the point where all we’re focusing on is existential risk because of the astronomical waste argument. And then the longtermist side stays on the train, and there may be further stops.

This analogy immediately resonated with me and I still like it a lot. In this post, I want to use this metaphor to clarify a couple of problems that I often encounter regarding career choice and other moral considerations.

Feel free to add metaphors in the comments.

The train to crazy town goes every five minutes:

Getting off the train is not a final decision. You can always hop back on. Clearly, there is some opportunity cost and a bit of lost time, but in the broad scheme of things, there is still lots of room for change in your moral views. Especially when it comes to career choice, some people I talked to definitely take path dependencies too seriously. I had conversations along the lines of “I already did a Bachelor’s in Biology and just started a Master’s in Nanotech, surely it’s too late for me to pivot to AI safety”. To which my response is “You’re 22, if you really want to go into AI safety, you can easily switch”.

The return train goes every five minutes too:

If your current stop feels a bit too close to crazy town, you can always go back. The example from above also applies in the other direction. In general, I feel like people overestimate the importance of their path dependencies instead of updating their plans when their moral views change. Often, some years down the line, they can’t live with the discrepancy between their moral beliefs and their actions anymore and change careers even later, rather than cutting their losses early.

You can ride the train (nearly) for free:

There are a ton of ways to make round trips to the previous or next town without having to get off the train. Obviously, there is a lot of material online, such as 80K, Holden Karnofsky’s Aptitudes post, or Probably Good, and many people already use these resources. However, I think people still don’t talk to other EAs enough about these questions. The knowledge and experience you get from one weekend of 1-on-1s at EAG or EAGx is just insanely cheap and valuable compared to most of the reading or thinking you can do on your own.

You are the conductor:

You control the speed at which your train rides. There is no reason to ride at an uncomfortably slow or fast pace.

More experienced people tend to ride longer:

In my experience, what people intuitively think of as a “crazy view” depends on how long they have considered themselves an EA. I guess that to my previous self from six years ago, some of my current beliefs would have seemed crazy.

More people are moving to further towns:

While the movement is growing a lot and therefore all towns are becoming more populous, it seems like some of the further towns are getting disproportionately more citizens. Longtermism, AI safety, biosecurity, and so on were certainly less mainstream five years ago than they are now.

There is no crazy town:

Nobody has found crazy town yet. Some people got on the first train, went full throttle with no brakes, and still haven’t reached it. I talked to someone with this mentality at EAG2021 and absolutely loved it. They just never get off and see where the train takes them. This might not be for most people, but the movement definitely needs this mentality to explore new ideas that seem crazy today but might be more mainstream a couple of years down the line. And even if these ideas never reach the mainstream, it is still important that somebody explores them.

saulius @ 2021-11-22T17:06 (+40)

The analogy resonated with me too. It reminded me of a part of my journey where I went to what was, to me, crazy town and came back. I’d like to share my story, partly to illustrate the concept. And if others shared their stories, I think that could be valuable or at least interesting.

At one point I decided that by far the best possible outcome for the future would be the so-called hedonium shockwave. The way I imagined it at that time, it would be an AI filling the universe as fast as possible with a homogeneous substance that experiences extreme bliss, e.g. nanochips that simulate sentient minds in a constant state of extreme bliss. And those minds might be just sentient enough to experience bliss, to save computing power for more minds. And since this seemed so overwhelmingly important, I thought that the goal of my life should be to increase the probability of a hedonium shockwave.

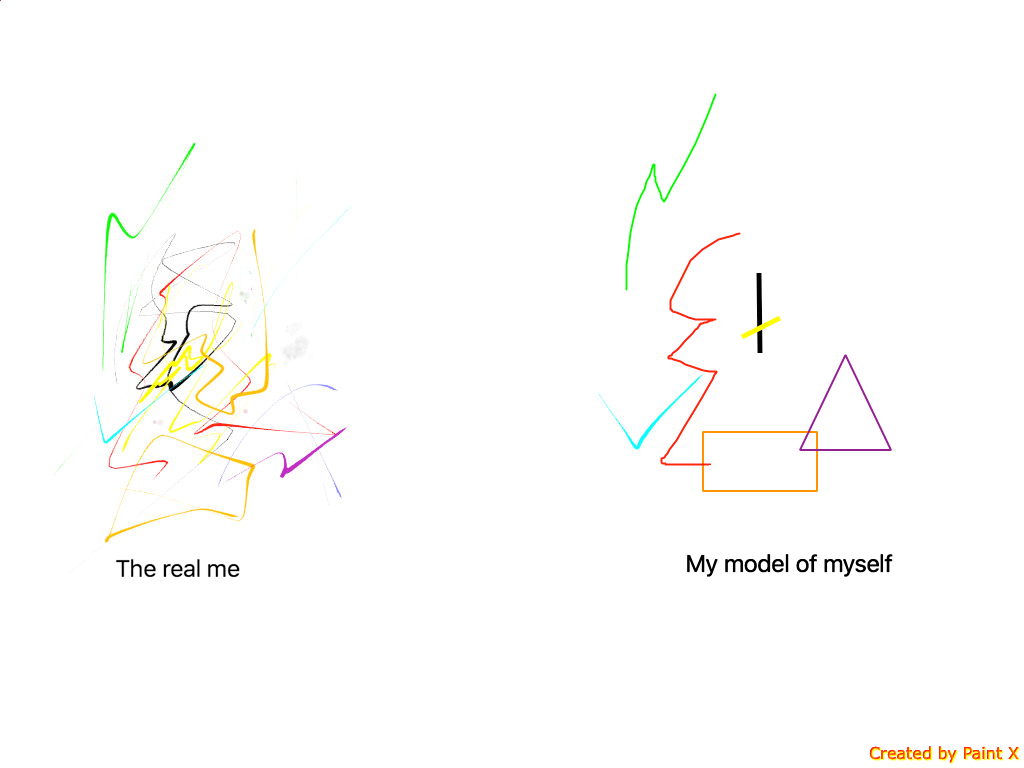

But then I procrastinated on doing anything about it. When thinking about why, I realized that the prospect of a hedonium shockwave doesn’t excite me. In fact, this scenario seemed sad and worrying. After more contemplation, I think I figured out why. I viewed myself as an almost pure utilitarian (except for some selfishness). And this seemed like the correct conclusion from the utilitarian POV, hence I concluded that this is what I want. But while utilitarianism might do a fine job of approximating my values in most situations, it did a bad job in this edge case. Utilitarianism was a map, not the territory. So nowadays I still try to figure out what utilitarianism would suggest doing, but then try to remember to ask myself: is this what I really want (or really think)? My model of myself might be different from the real me. In my diary at the time, I made this drawing to illustrate it. It’s superfluous to the text, but drawings help me remember things.

alene @ 2021-11-27T22:49 (+20)

Oh wow, Saulius, it is so exciting to read this! You described exactly how I think, also. I, too, only follow utilitarianism as a way of making moral decisions when it comports with what my moral emotions tell me to do. And the reason I love utilitarianism is just that it matches my moral emotions about 90% of the time. The main time I get off the utilitarian train is when I consider the utilitarian idea that it should be morally just as good to give one additional unit of joy to a being who is already happy as it is to relieve an unhappy being of one unit of suffering. I’d rather relieve the suffering of the unhappy. So I relate to you not following where utilitarianism led you when it felt wrong to you emotionally. (That said, I actually love the idea of lots of blissed-out minds filling the universe, so I guess our moral emotions tell us different things.)

When interacting with pure utilitarians, I’ve often felt embarrassed that I used moral emotions to guide my moral decisions. Thanks for making me feel more comfortable coming “out” about this emotional-semi-utilitarian way of thinking, Saulius!

Also, I love that you acknowledged that selfishness, of course, also influences our decision making. It does for me, too. And I think declaring that fact is the most responsible thing for us to do, for multiple reasons. It is more honest, and it helps others realize they can do good while still being human.

saulius @ 2021-11-29T12:15 (+10)

Hey Alene!

> I’d rather relieve the suffering of the unhappy.

In case you didn’t know, it is called prioritarianism (https://en.wikipedia.org/wiki/Prioritarianism). I’ve met other people who think this, so you are not alone. I wouldn’t be surprised if the majority of EAs think this.

> When interacting with pure utilitarians, I’ve often felt embarrassed that I used moral emotions to guide my moral decisions.

To me, the question is how did they decide to be utilitarian in the first place? How did they decide whether they should be negative utilitarians, or classical utilitarians, or Kantians? How did they decide that they should minimize suffering rather than say maximize the number of paperclips? I imagine there are various theories on this but personally, I’m convinced that emotions are at the bottom of it. There is no way to use math or anything like that to prove that suffering is bad, so emotions are the only possible source of this moral intuition that I can see. So in my opinion, those pure utilitarians also used emotions to guide moral decisions, just more indirectly.

Once I realized that, I started questioning: how did I decide that some moral intuitions/emotions (e.g. suffering is bad) are part of my moral compass while other emotions (e.g. hedonium shockwave is bad, humans matter much more than animals) are biases that I should try to ignore? The choice of which moral emotions to trust seems totally arbitrary to me. So I don’t think that there is a reason at all why you should feel embarrassed about using emotions because of that. This is just my amateur reasoning though, there are probably thick moral philosophy books that disprove this position. But then again, who has time to read those when there are so many animals we could be helping instead.

saulius @ 2021-11-29T12:17 (+8)

One more thought: I think that people who choose only a very few moral intuitions/emotions to trust and then follow them to their logical conclusions are the ones who are more likely to stay on the train longer. This is not expressing any opinion on how long we should stay on the train. As I said, I think the choice of how many moral intuitions to trust is arbitrary.

Personally, especially in the past, I also stayed on the train longer because I wanted to be different from other people, because I was a contrarian. That was a bad reason.

alene @ 2021-11-30T19:13 (+13)

Thank you so much, Saulius! I had never heard of prioritarianism. That is amazing! Thanks for telling me!!

I’m not the best one to speak for the pure utilitarians in my life, but yes, I think it was what you said: Starting with one set of emotions (the utilitarian’s personal experience of preferring the feeling of pleasure over the feeling of suffering in his own life), and extrapolating based on logic to assume that pleasure is good no matter who feels it and that suffering is bad no matter who feels that.

saulius @ 2021-11-22T17:14 (+5)

Thinking about it more now, I'm still unsure if this is the right way to think about things. As a moral relativist, I don't think there is moral truth, although I'm unsure of this because others disagree. But I seem to have concluded that I should just follow my emotions in the end, and only care about moral arguments if they convince my emotional side. Like almost all moral claims, that is contentious. It's also making me think that perhaps how far towards crazy town you go can depend a lot on how much you trust your emotions vs. argumentation.

Max_Carpendale @ 2021-11-27T16:56 (+3)

I had a similar journey. I still think that utilitarian is a good description for me, since it seems basically right to me in all non-sci-fi scenarios, but I don't have any confidence in the extreme edge cases.

kokotajlod @ 2021-11-22T16:06 (+29)

As a philosopher, I don't think I'd agree that there is no crazy town. Plenty of lines of argument really do lead to absurd conclusions, and if you actually followed through you'd be literally crazy. For example, you might decide that you are probably a Boltzmann brain and that the best thing you can do is think happy thoughts as hard as you can because you are about to be dissolved into nothingness. Or you might decide that an action is morally correct iff it maximizes expected utility, but because of funnel-shaped action profiles every action has undefined expected utility, and so every action is morally correct.

What I'd say instead of "there is no crazy town" is that the train line is not a single line but a tree or web, and when people find themselves at a crazy town they just backtrack and try a different route. Different people have different standards for what counts as a crazy town; some people think lots of things are crazy and so they stay close to home. Other people have managed to find long paths that seem crazy to some but seem fine to them.

aaronb50 @ 2021-11-22T16:45 (+3)

Taking the Boltzmann brain example, isn't the issue that the premises that would lead to such a conclusion are incorrect, rather than the conclusion being "crazy" per se?

kokotajlod @ 2021-11-23T12:21 (+24)

In many cases in philosophy, if we are honest with ourselves, we find that the reason we think the premises are incorrect is that we think the conclusion is crazy. We were perfectly happy to accept those premises until we learned what conclusions could be drawn from them.

mariushobbhahn @ 2021-11-22T19:23 (+1)

What I meant to say with that point is that the tracks never stop, i.e. no matter how crazy an argument seems, there might always be something that seems even crazier. Or, from the perspective of the person who is exploring the frontiers, there will always be another interesting question to ask that goes further than the previous one.

Mathieu Putz @ 2021-11-24T23:27 (+4)

Hey, thanks for writing this!

Strong +1 for this part:

I had conversations along the lines of “I already did a Bachelor’s in Biology and just started a Master’s in Nanotech, surely it’s too late for me to pivot to AI safety”. To which my response is “You’re 22, if you really want to go into AI safety, you can easily switch”.

I think this pattern is especially suspicious when used to justify some career that's impactful in one worldview over one that's impactful in another.

E.g. I totally empathize with people who aren't into longtermism, but the reasoning should not be "I have already invested in this cause area and so I should pursue it, even though I believe the arguments that say it's >>10x less impactful than longtermist cause areas".

I also get the impression that people sometimes use "personal fit for X" and "have already accumulated career capital for X" interchangeably, when I think the former is to a significant degree determined by innate talents. Thus the message "personal fit matters" is sometimes heard as a weaker version of "continue what you're already doing".

evelynciara @ 2021-11-22T19:40 (+2)

The train isn't linear, but is a network of criss-crossing routes. You can go from Biosecurity to Wild Animal Welfare to Artificial Sentience.