Key EA decision-makers on the future of EA, reflections on the past year, and more (MCF 2023)

By michel, MaxDalton, Sophie Thomson @ 2023-11-03T22:29 (+61)

This post summarizes the results of the Meta Coordination Forum (MCF) 2023 pre-event survey. MCF was a gathering of key people working in meta-EA, intended to help them make better plans for the next two years and to set EA and related communities on a better trajectory. (More information here.)

About the survey

Ahead of the event, we sent invitees a survey to understand the distribution of views on topics relevant to invitees’ plans and the future of EA. These topics included:

- Resource allocation across causes, and across meta and direct work

- Reflections on FTX and other lessons learned over the past year

- Mistakes the EA community may be making

- The relationship between EA & AI safety (AIS)

- Projects people would like to see (summarized in this post)

The results from this pre-event survey are summarized below and in this post on field-building projects. We received 41 responses (n = 41) out of 45 people invited to take the survey. The majority of people invited to MCF in time to answer this survey are listed below:

Alexander Berger, Amy Labenz, Anne Schulze, Arden Koehler, Bastian Stern, Ben West, Buddy Shah, Caleb Parikh, Chana Messinger, Claire Zabel, Dewi Erwan, James Snowden, Jan Kulveit, Jonas Vollmer, Julia Wise, Kuhan Jeyapragasan, Lewis Bollard, Lincoln Quirk, Max Dalton, Max Daniel, Michelle Hutchinson, Nick Beckstead, Nicole Ross, Niel Bowerman, Oliver Habryka, Peter McIntyre, Rob Gledhill, Sim Dhaliwal, Sjir Hoeijmakers, William MacAskill, Zach Robinson

We’re sharing the results from this survey (and others) in the hope that being transparent about these respondents' views can improve the plans of others in the community. But there are important caveats, and we think it could be easy to misinterpret what this survey is and isn’t.

Caveats

- This survey does not represent ‘the views of EA.’ The results of this survey are better interpreted as ‘the average of what the people who RSVPed to MCF think’ than as a consensus of ‘what EA or EA leaders think.’ You can read more about the event's invitation process here.

- This group is not a collective decision-making body. All attendees make their own decisions about what they do. Attendees came in with lots of disagreements and left with lots of disagreements (but hopefully with better-informed and coordinated plans). This event was not aimed at creating some single centralized grand strategy. (See more about centralization in EA.)

- Most people filled in this survey in early August 2023, so people’s views may have changed.

- People’s answers mostly represent their own views, which may be different from the views of the orgs they work for.

- People filled in this survey relatively quickly. While the views expressed here are indicative of respondents’ beliefs, they’re probably not respondents' most well-considered views.[1]

- We often used LLMs for the summaries to make this post more digestible and save time. We checked and edited most of the summaries for validity, but there are probably still some errors. The use of tailored prompts and models also caused the tone and structure to vary a bit.

- Note: the events team has been trying to be more transparent with information about our programs, but we have limited capacity to make things as concise or clear as we would like.

Executive Summary

This section summarizes multiple-choice questions only, not the open-text responses.

Views on Resource Allocation (see more)

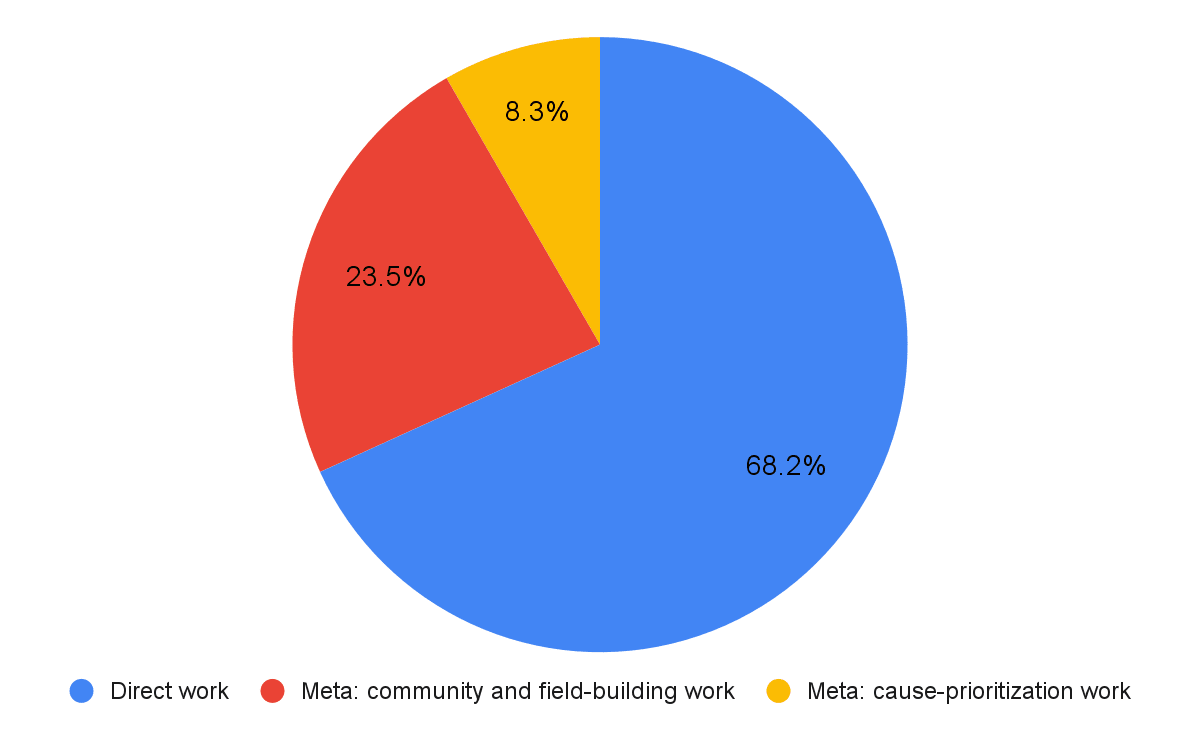

Direct vs. Meta work:

- On average, survey respondents reported that the majority of resources should be devoted to ‘Direct Work’ (68.3%; SD = 13.5), followed by ‘Meta: community and field-building work’ (23.5%; SD = 12.3), and then ‘Meta: cause-prioritization work’ (8.3%; SD = 5.8).

- But there was significant disagreement about the percentages, and understandable confusion about whether, e.g., AI risk advocacy counts as direct work or field-building work.

What goal should the EA community’s work be in service of?

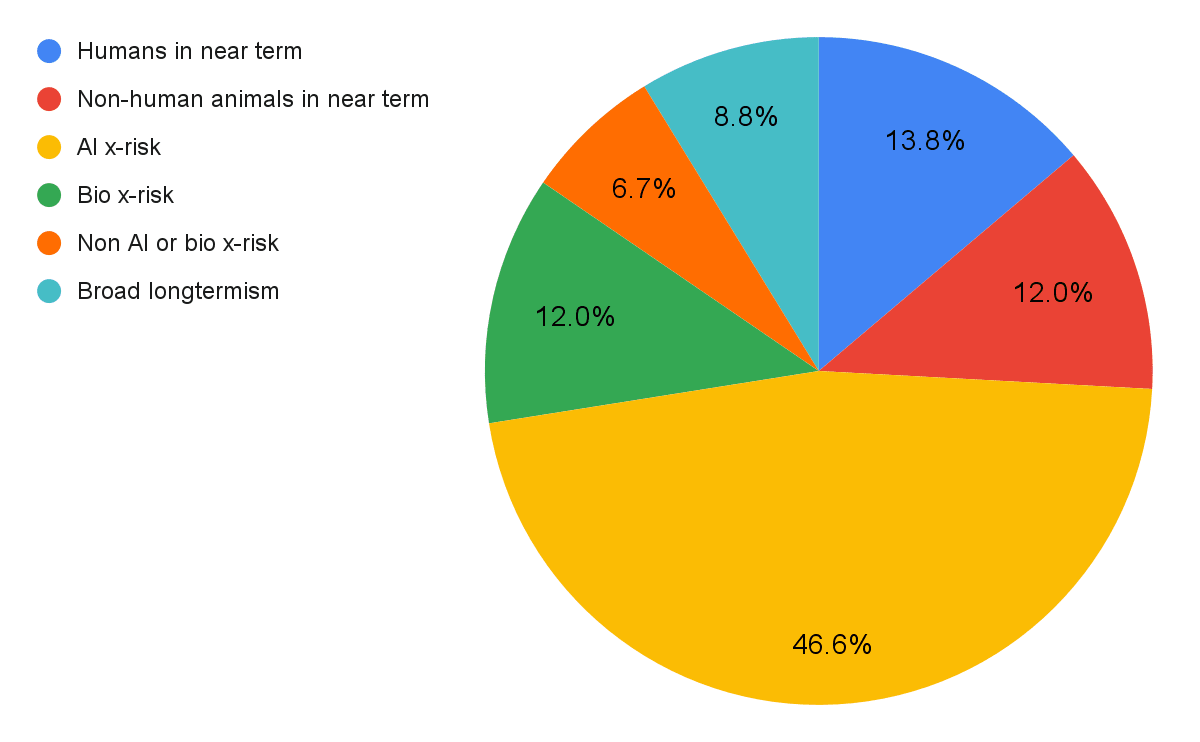

- In aggregate, survey respondents thought that a majority of EA work should be in service of reducing existential risks (65.4%; SD = 28.5).

  - Within the existential risk category, “work in service of reducing existential risk posed by advanced AI systems” received the most support, on average (46.6%; SD = 17.6).

- Outside existential risk reduction, respondents on average reported that the EA community’s resources (broadly construed) should be in the service of humans in the near term (13.8%), followed by non-human animals (12.1%) and improving the long-term future via some route other than existential risk reduction (8.8%).

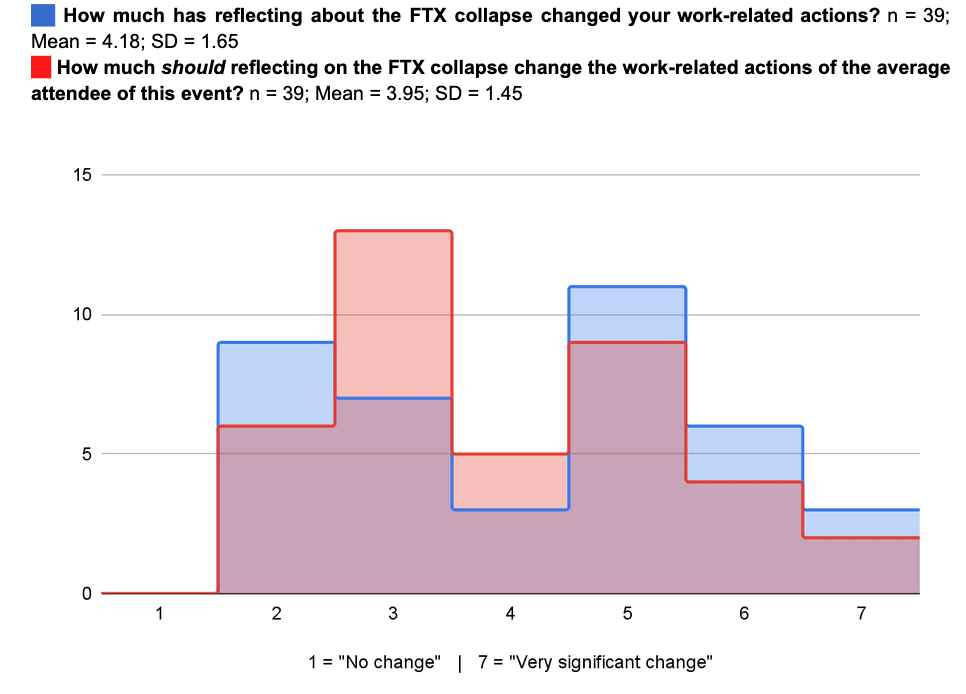

Behavioral Changes Post-FTX (see more)

- On average, respondents reported similar scores for how much they had changed their work-related actions based on FTX reflections and how much they thought the average attendee of the event should change their actions (where 1 = No change and 7 = Very significant change):

  - How much they had changed their own actions: mean = 4.2; SD = 1.7

  - How much they thought the average attendee of this event should change their actions: mean = 4.0; SD = 1.5

Future of EA and Related Communities (see more)

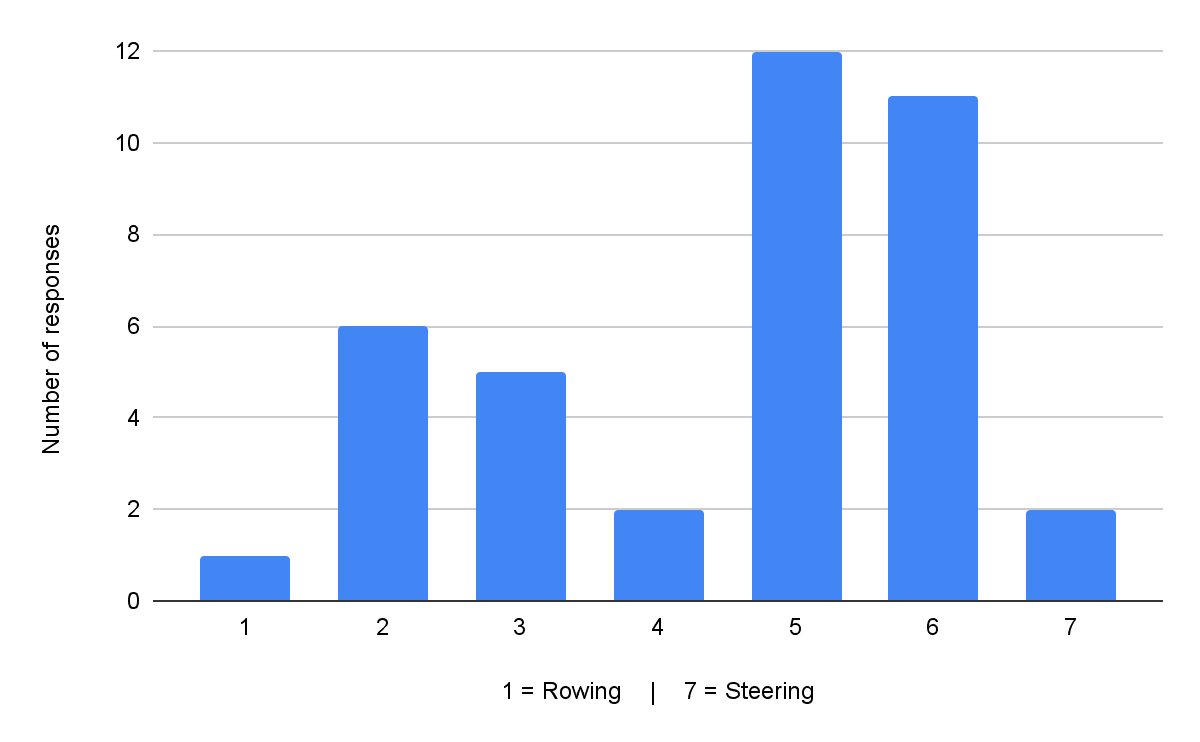

Rowing vs. Steering

- On average, respondents leaned toward thinking that now is more a time for “steering” the trajectory of the EA community and brand than for “rowing” (Mean = 4.5 on a 1–7 scale, where higher values favor steering; SD = 1.6).

- Both extremes received some support.

Cause X

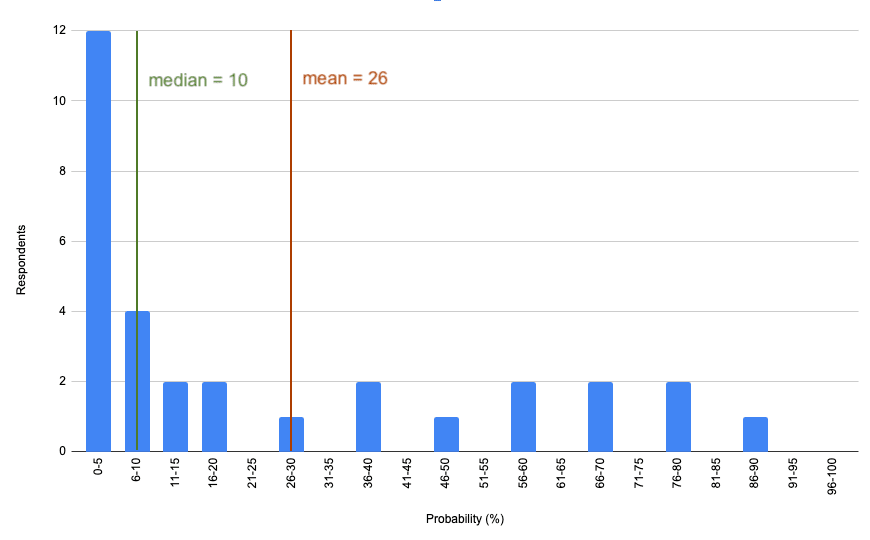

- Respondents gave a mean 26% probability (median = 10%) that a cause exists that ought to receive over 20% of the EA community’s resources but currently receives little attention.

- But many respondents fairly criticized the question for lacking clear definitions.

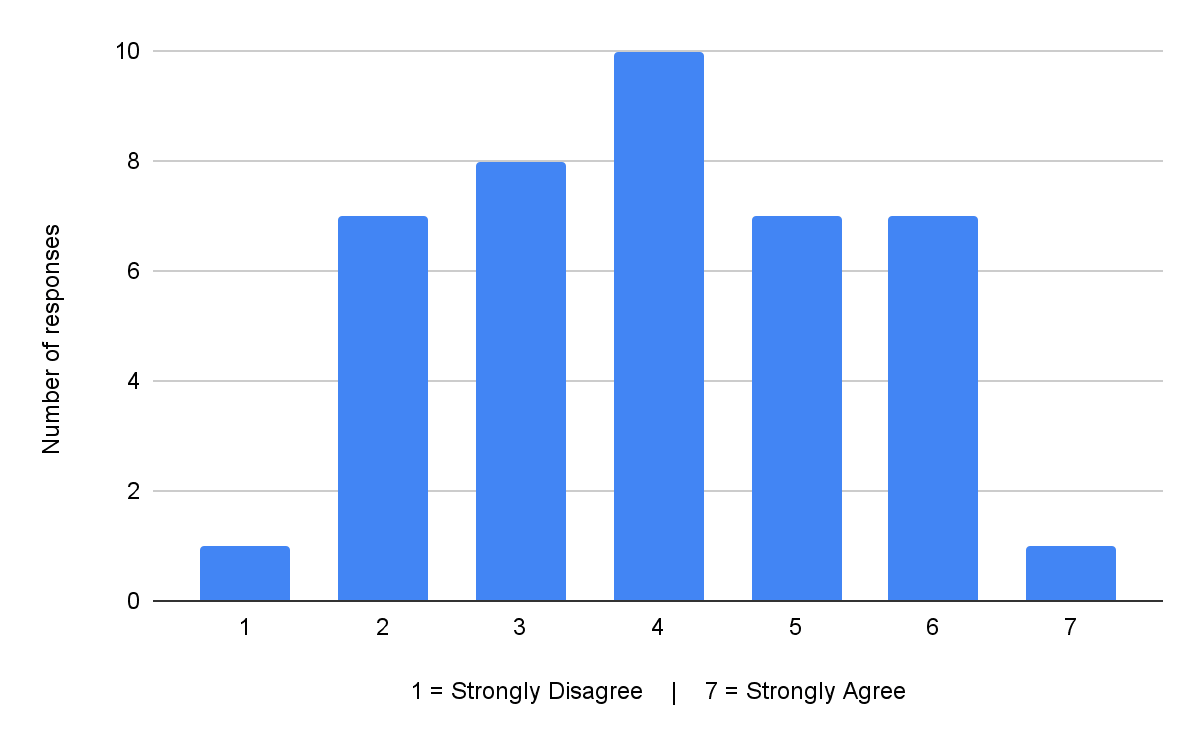

EA Trajectory Changes

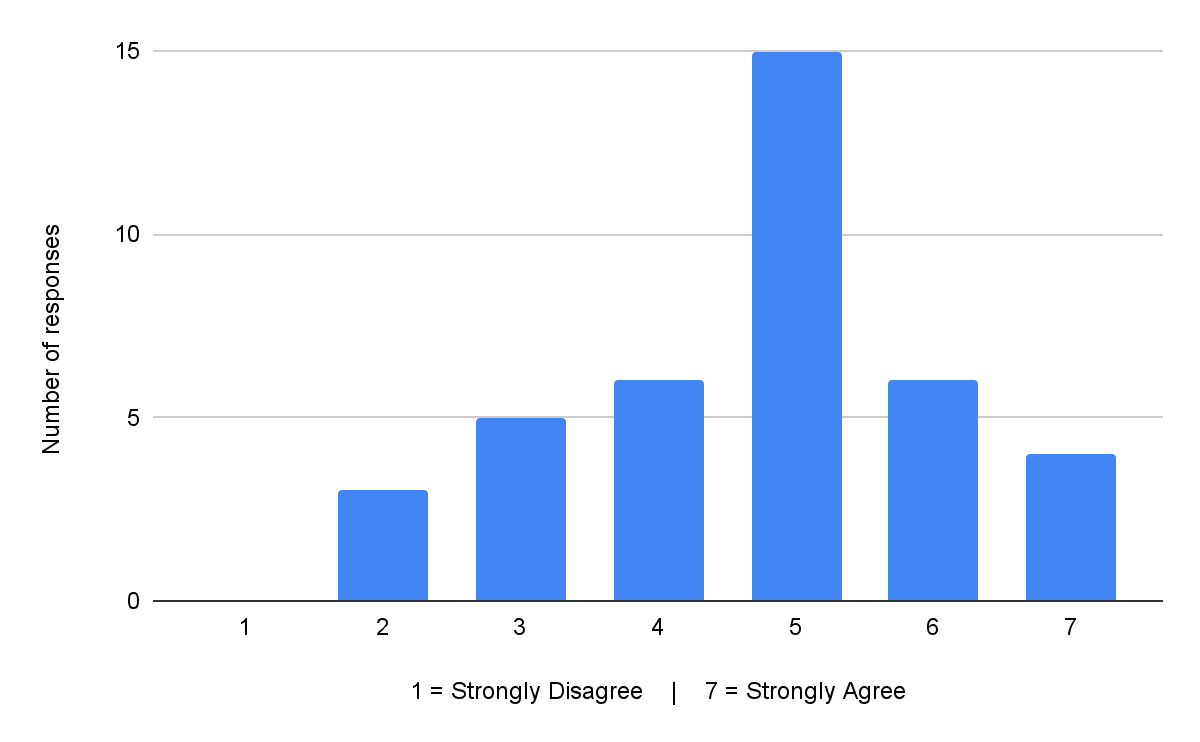

All questions in this section were asked as agree/disagree statements, where 1 = Strongly disagree; 7 = Strongly agree.

- There was significant disagreement on nearly all statements about operationalized trajectory changes for EA.

  - For example, there was no clear consensus on whether “EA orgs and individuals should stop using/emphasizing the term ‘community’” (Mean = 4.0; SD = 1.5).


- But some statements showed at least some directional agreement (or disagreement):

  - Respondents thought that EA actors should be more focused on engaging with established institutions and networks instead of engaging with others in EA (Mean = 4.9; SD = 1.2).

  - Respondents thought that there was a leadership vacuum that someone needed to fill (Mean = 4.8; SD = 1.6).

  - Respondents thought that we shouldn’t make all EA Global events cause-specific (Mean = 3.1; SD = 1.6).


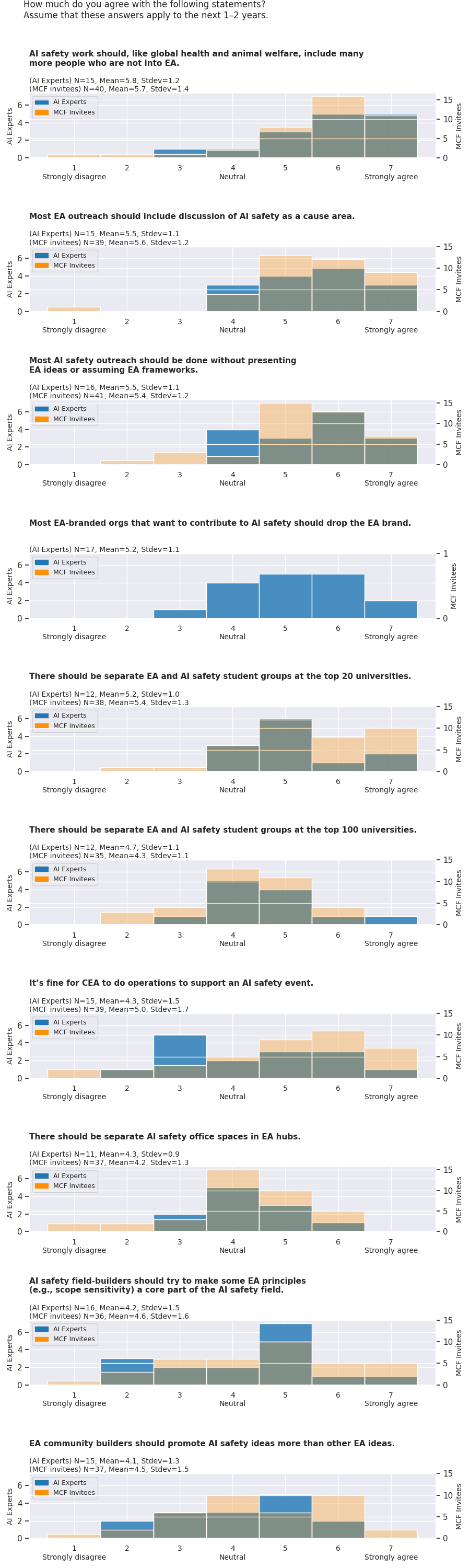

Relationship Between EA & AI Safety (see more)

All questions in this section were asked as agree/disagree statements, where 1 = Strongly disagree; 7 = Strongly agree. We compare MCF invitees’ responses to AI safety (AIS) experts’ responses here.

- Respondents showed relatively strong consensus on some statements, although every statement had some dissenters. On average:

  - Respondents mostly agreed that “most AI safety outreach should be done without presenting EA ideas or assuming EA frameworks” (Mean = 5.4; SD = 1.2).

  - Respondents mostly agreed that “Most EA outreach should include discussion of AI safety as a cause area” (Mean = 5.6; SD = 1.2).

  - Respondents mostly agreed that “AI safety work should, like global health and animal welfare, include many more people who are not into effective altruism” (Mean = 5.7; SD = 1.4).

  - Respondents mostly agreed that “we should have separate EA and AI safety student groups at the top 20 universities” (Mean = 5.4; SD = 1.3).


- There was no clear consensus on some statements:

  - “we should try to make some EA principles (e.g., scope sensitivity) a core part of the AI safety field” (Mean = 4.6; SD = 1.6).

  - “We should promote AI safety ideas more than other EA ideas” (Mean = 4.5; SD = 1.5).


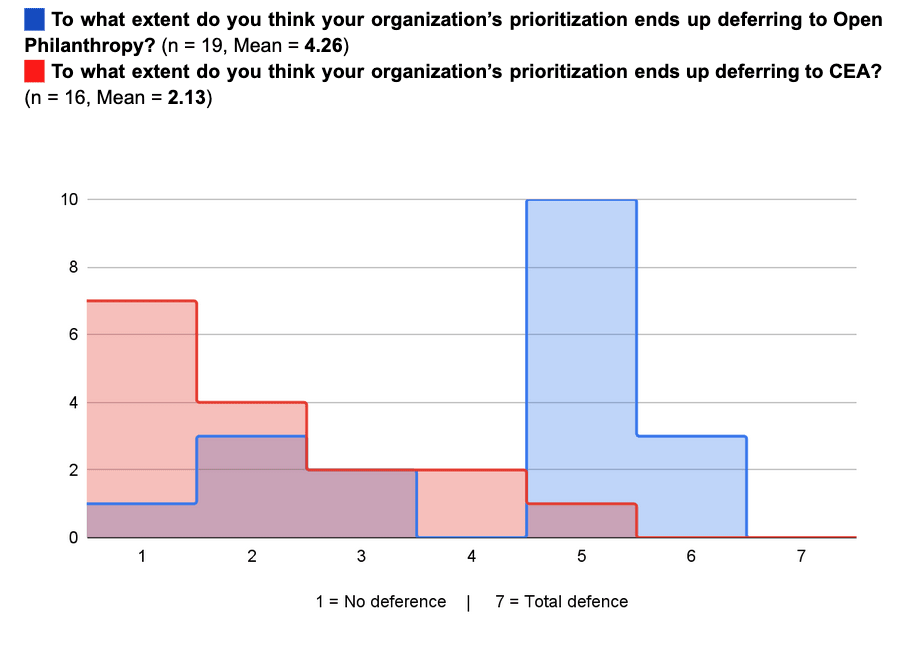

Deference to Open Phil (vs. CEA) (see more)

- Many attendees report that their organizations’ priorities end up deferring to Open Philanthropy.

- When asked “to what extent do you think your organization’s prioritization ends up deferring to Open Philanthropy?”, the mean response was 4.4, where 1 = No deference and 7 = Total deference (SD = 1.6).

- When asked the same question about deference to CEA, the mean response was 2.1 (SD = 1.3).

Results from Pre-Event Survey

Resource Allocation

Caveat for this whole section: The questions in this section were asked to get a quantitative overview of people's views coming into this event. You probably shouldn’t put much weight on the answers, since we don't think this group has particular expertise in resource allocation or cause prioritization research, and there was understandable confusion about which work fit into which categories.[2]

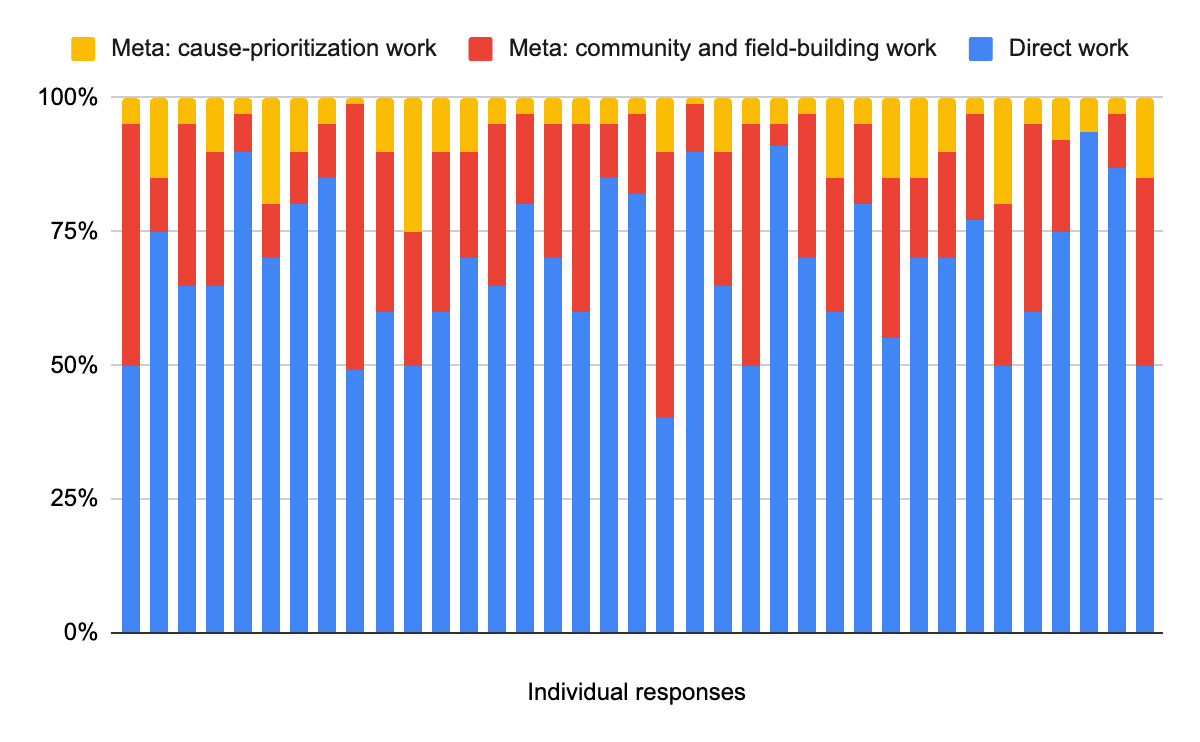

Meta vs Direct Work: What rough percentage of resources should the EA community (broadly construed) devote to the following areas over the next five years?

Group Summary Stats

- Direct Work (n = 37): Mean = 68%; SD = 14%

- Meta: community and field-building work (n = 37): Mean = 23%; SD = 12%

- Meta: cause-prioritization work (n = 37): Mean = 8%; SD = 6%

Summarized commentary on Meta vs Direct Work

- Difficulty in categorizing activities: five respondents noted that some activities are hard to classify as direct or meta work. For example, AI policy advocacy could be seen as direct AI safety work or as meta community building.

- Importance of cause prioritization work: a few respondents emphasized the high value and importance of cause prioritization work, though possibly requiring fewer resources overall than direct or meta work.

- Indirect benefits of direct work: multiple respondents highlighted the indirect benefits of direct work for field building and growth. Doing object-level work can generate excitement, training opportunities, and data to improve the field.

- More resources for community and field-building: a couple of respondents advocated for directing more resources to community and field-building organizations and activities such as CEA, 80,000 Hours, and student groups.

- Cap for resources devoted to meta: a few respondents suggested meta work should be capped at around 25% of total resources to avoid seeming disproportionate.

- Difficulty in estimating due to definitions: several respondents noted it was difficult to estimate percentages without clearer definitions of categories and knowledge of current resource allocations.

- Difficulty in answering due to different views depending on the type of resource: a couple of respondents highlighted that funding proportions might differ from staffing proportions for meta vs. direct work.

- Interdependencies and theory of change: one respondent emphasized how much the theory of change for meta work relied on direct work.

- Difficulty answering before changes to the community: one respondent suggested the EA community itself should change substantially before recommending any resource allocation.

What/who should the EA community’s work be in service of?

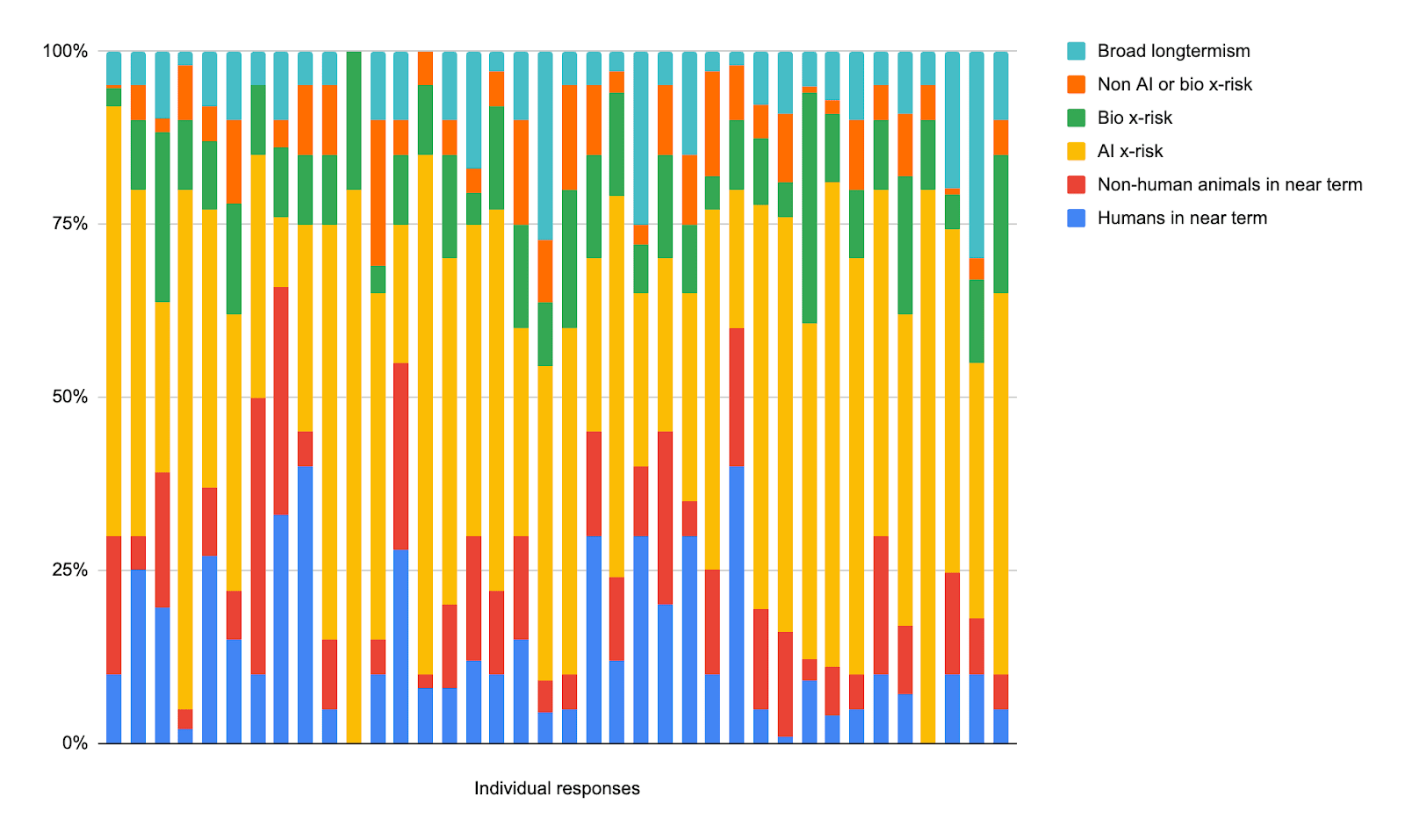

What rough percentage of resources should the EA community (broadly construed) devote to the following areas over the next five years? (n = 38)

Mean (after normalizing) for % of work that should be in service of…

- … reducing existential risk posed by advanced AI systems: 47% (SD = 18%)

- … humans in the near term (without directly targeting existential risk reduction, e.g., work on global poverty): 14% (SD = 11%)

- … non-human animals in the near term (without targeting existential risk reduction, e.g., work on farm animal welfare or near-term wild animal welfare): 12% (SD = 9%)

- … reducing existential risk posed by pandemics and biotechnology: 12% (SD = 6%)

- … improving the long term future via some other route than existential risk reduction (e.g., cause-prioritization work or work on positive trajectory changes): 9% (SD = 7%)

- … reducing existential risk via some other route than AI or pandemics: 7% (SD = 5%)

The three different routes focused on reducing existential risk sum up to ~65%.
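The “Mean (after normalizing)” figures imply that each respondent's allocations were rescaled before averaging, but the post doesn't say exactly how. The Python sketch below assumes one plausible approach: rescale each respondent's percentages to sum to 100, then take per-category means and standard deviations. The category labels are hypothetical shorthand and the responses are made up for illustration; they are not survey data.

```python
from statistics import mean, stdev

# Hypothetical short labels for the six answer options (not the survey's wording).
CATEGORIES = ["ai_xrisk", "near_term_humans", "animals", "bio_xrisk", "other_ltf", "other_xrisk"]
XRISK = ["ai_xrisk", "bio_xrisk", "other_xrisk"]


def normalize(response):
    """Rescale one respondent's allocation so its categories sum to exactly 100%."""
    total = sum(response.values())
    return {cat: 100 * value / total for cat, value in response.items()}


# Made-up responses, NOT survey data. The second sums to 120%, so it gets scaled down.
responses = [
    {"ai_xrisk": 50, "near_term_humans": 20, "animals": 10, "bio_xrisk": 10, "other_ltf": 5, "other_xrisk": 5},
    {"ai_xrisk": 30, "near_term_humans": 30, "animals": 30, "bio_xrisk": 15, "other_ltf": 10, "other_xrisk": 5},
]
normalized = [normalize(r) for r in responses]

# Per-category mean and sample standard deviation across respondents.
summary = {}
for cat in CATEGORIES:
    values = [r[cat] for r in normalized]
    summary[cat] = (mean(values), stdev(values))

# Average combined share for the three existential-risk categories.
xrisk_share = mean([sum(r[cat] for cat in XRISK) for r in normalized])

for cat, (m, sd) in summary.items():
    print(f"{cat}: mean {m:.1f}%, SD {sd:.1f}%")
print(f"existential risk total: {xrisk_share:.1f}%")
```

Under this assumption, every respondent's answers sum to 100% after normalizing, so the category means also sum to 100%, and adding the three existential-risk means gives the same figure as averaging each respondent's combined existential-risk share. If the normalization was done differently, that equivalence would not necessarily hold.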

Summarized commentary on What/Who the EA Community’s Resources Should Be in Service Of

- Commentary on AI safety/alignment (mentioned by 4 respondents):

- Some respondents said AI safety/alignment should receive a large percentage of resources

- A few respondents noted that AI safety/alignment should probably be separated from EA into its own movement or cause area.

- Other efforts to improve the long-term future (mentioned by 3 respondents):

- A few respondents emphasized that there should be more work on improving the long-term future beyond just reducing existential risk.

- For example:

- "Condition on AI alignment being solved: What are the things we need to do to make the LTF go well?"

- Meta (mentioned by 2 respondents):

- Some respondents emphasized the importance of meta work like cause prioritization.

- Engagement with external experts (mentioned by 2 respondents):

- Some respondents wanted to see more engagement with non-EA actors, especially for evolving causes like AI safety or research-intensive fields like biosecurity.

- For example:

- “Fields like pandemic preparedness connecting more with established institutions could spark more valuable work that wouldn’t have to flow from scarce EA resources or infrastructure.”

- Differentiating resource type (mentioned by 2 respondents):

- Some respondents pointed out that resource allocations for funding vs labor should be different.

- For example:

- "I think the splits for ‘funding’ and ‘1000 most highly engaged EAs’ should look very different."

Reflections on Past Year

Changes in work-related actions post-FTX

EA and related communities are likely to face future “crunch times” and “crises”. In order to be prepared for these, what lessons do we need to learn from the last year?

- Improve governance and organizational structures (mentioned by 7 respondents):

- Shore up governance, diversify funding sources, build more robust whistleblower systems, and have more decentralized systems in order to be less reliant on key organizations/people.

- Build crisis response capabilities (mentioned by 6 respondents):

- Create crisis response teams, do crisis scenario planning, have playbooks for crisis communication, and empower leaders to coordinate crisis response.

- Improve vetting and oversight of leaders (mentioned by 5 respondents):

- Better vet risks from funders/leaders, have lower tolerance for bad behavior, and remove people responsible for the crisis from leadership roles.

- Diversify and bolster communication capacities (mentioned by 5 respondents):

- Invest more in communications for crises, improve early warning/information sharing, and platform diverse voices as EA representatives.

- Increase skepticism and diligence about potential harms (mentioned by 4 respondents):

- Adopt lower default trust, consult experts sooner, and avoid groupthink and overconfidence in leaders.

- Learn about human factors in crises (mentioned by 3 respondents):

- Recognize the effect of stress on behavior, and be aware of problems with unilateral action and the tendency to leave collective action problems unsolved.

- Adopt more resilient mindsets and principles (mentioned by 3 respondents):

- Value integrity and humility, promote diverse virtues rather than specific people, and update strongly against naive consequentialism.

The Future of EA

Summary of visions for EA

- A group that embraces division of labor across 1) research focused on how to do the most good, 2) trying interventions, and 3) learning and growing. Open to debate and changing minds, as well as acting amidst uncertainty. Focused on getting things done rather than who gets credit. Committed to truth, virtue, and caring about consequences.

- A community that proves competent at creating real-world impact and influence in policy, industry, and philanthropy. Talented people use EA ideas to inform impactful career choices.

- Returning to EA's roots as a multi-cause movement focused on doing the most good via evidence and reason. A set of ideas/a brand many institutions are proud to adopt in assessing and increasing the efficacy of their altruism.

- A community exemplifying extreme (but positive) cultural norms—collaborative, humble, focused on tractable issues ignored by others. The community should be guided by principles like impartial altruism and epistemic virtue. People identify with principles and values rather than backgrounds, cultural preferences, or views on morality.

- A community that can provide funding, talent, coordination on cause prioritization, and epistemic rigor to other cause areas, but that doesn't expect allegiance to the EA brand from those working in those areas.

- A set of intellectual ideas for doing good. People are guided to doing good through EA ideas, but don't necessarily continually engage once on that path.

- A thriving, diverse community guided by principles like trust and respect, whose work spans research, scaling ideas/organizations, and policy engagement. Appreciation for the different skills needed.

- Split into AI-focused and EA-focused communities. The latter potentially smaller in resources but focused on EA's unique value-add.

- Focused primarily on knowledge dissemination, i.e., publications and seminars. Status comes from contributing to understanding. Engages with policy in the same way a subject-matter expert might: there to inform.

- Promotes compassion, science, scope sensitivity, responsibility. Local groups take various actions aligned with principles. Associated with cause-specific groups.

- Either widespread, welcoming movement or weirder intellectual exploration of unconventional ideas. Can't be both, and currently in a weird middle ground.

- High-trust professional network brought together by a desire for impact.

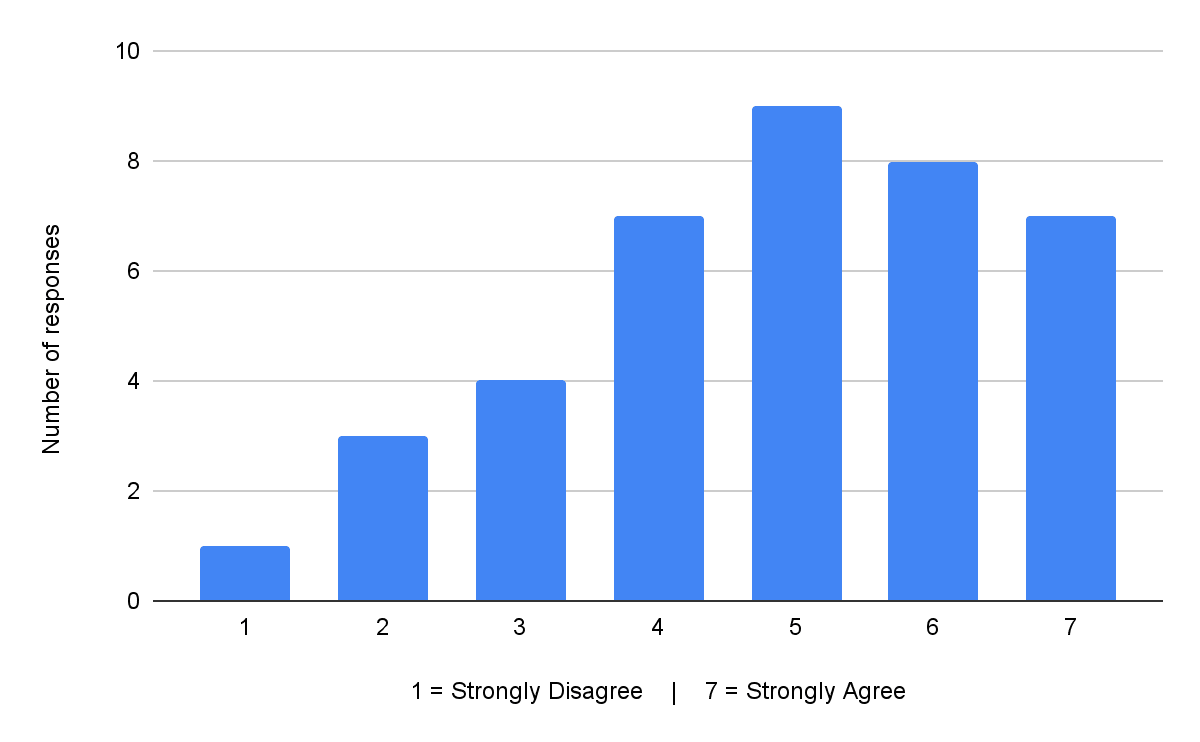

Rowing vs steering EA

To what degree do you think we should be trying to accelerate the EA community and brand’s current trajectory (i.e., “rowing”) versus trying to course-correct the current trajectory (i.e., “steering”)? (n = 39; Mean = 4.51; SD = 1.63)

Commentary on Rowing vs Steering

- Steer to split/differentiate the EA brand from AI safety/longtermist causes (raised by 3 respondents):

- Some respondents suggested creating separate branding/groups for AI safety and longtermism instead of having them be under the broad EA umbrella.

- Steer to improve epistemics/avoid drift from core EA principles (raised by 5 respondents):

- Multiple respondents emphasized the importance of steering EA back towards its original principles and improving community epistemics, rather than just pursuing growth.

- Both rowing and steering are important/should be balanced (raised by 4 respondents):

- Several respondents said both growing the community (rowing) and realigning it (steering) are useful, and we shouldn't focus solely on one. Some said we should alternate between them based on circumstances.

- Rowing could be focused on fundraising/Earning to Give (raised by 2 respondents):

- A couple of respondents suggested rowing/growth could be specifically focused on expanding Earning to Give rather than expanding the EA brand overall.

- We should steer towards more formal institutions and addressing harms (raised by 2 respondents):

- Some emphasized the importance of steering efforts focused on formalizing organizational structures and addressing potential downsides/harms from community growth.

- Rowing requires having clarity about EA's future direction (raised by 2 respondents):

- A couple of respondents said significant further rowing/growth doesn't make sense until there is clearer steering and alignment around EA's future trajectory and principles.

Cause X

What do you estimate is the probability (in %) that there exists a cause which ought to receive over 20% of the EA community's resources but currently receives little attention? (n = 31; Mean = 26%; Median = 10%; SD = 29%)

Summary of commentary on Cause X

Problems with the question

- This question received a lot of fair criticism, and three people chose not to answer it because of definitional issues. Issues people pointed out include:

- It’s unclear what time frame this question is referring to.

- It depends a lot on what level “cause” is defined as.

- “Little attention” was poorly operationalized.

Nuance around Cause X

- Marginal Analysis: some respondents pointed out that while there might be high-leverage individual opportunities, they may not fall under the umbrella of a single, neglected 'cause'.

- Potential for Future Discovery: some respondents thought the likelihood of discovering a neglected cause would be higher in the future, particularly in a world transformed by advancements in AI.

- Low Success Rate so far: some respondents expressed the sentiment that the EA community has already been quite rigorous in evaluating potential causes, making it unlikely that a completely overlooked, yet crucial, cause exists.

Cause X Candidates proposed include:

- Digital sentience (x3)

- Invertebrate welfare

- Wild animal welfare

- Whole Brain Emulation (x2)

- Post-AGI governance stuff (and other stuff not captured in existing AI technical and governance work) (x2)

- War and international relations work

- Pro economic growth reforms

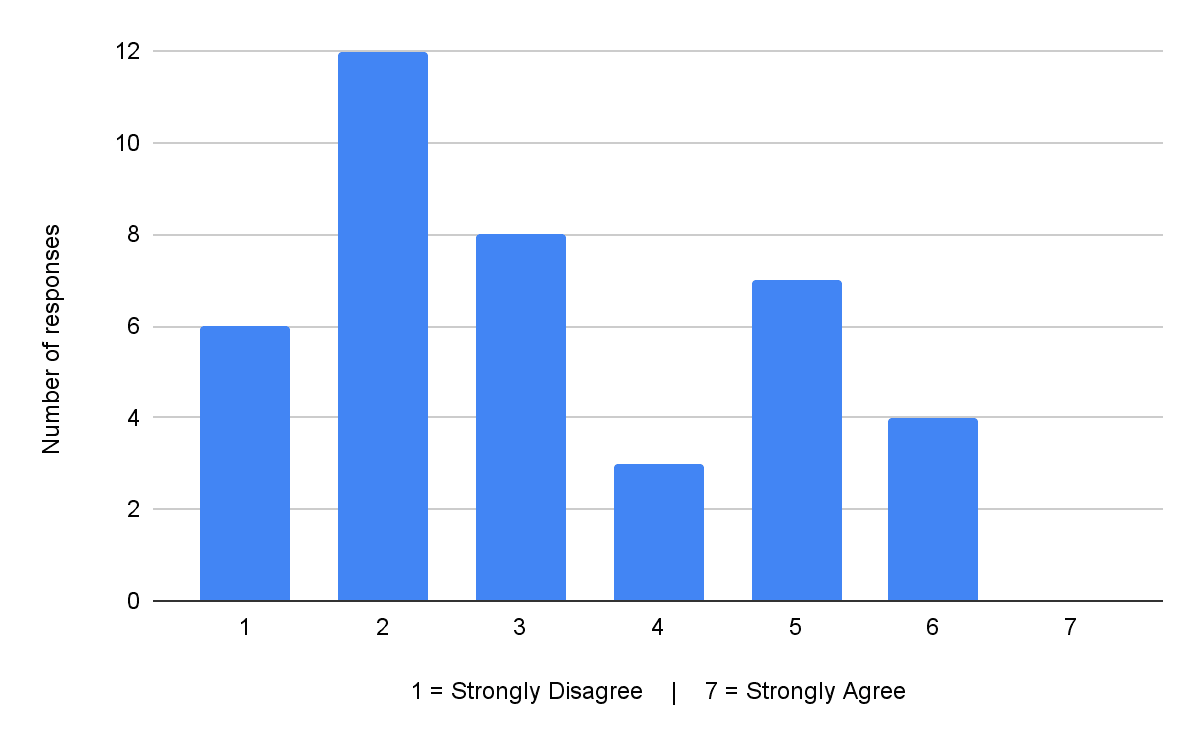

Agreement voting for EA trajectory changes

EA orgs and individuals should stop using/emphasizing the term “community.” (n = 41, Mean = 3.98, SD = 1.49)

Assuming there’ll continue to be three EAG-like conferences each year, these should all be replaced by conferences framed around specific cause areas/subtopics rather than about EA in general (e.g. by having two conferences on x-risk or AI-risk and a third one on GHW/FAW) (n = 40, Mean = 3.13, SD = 1.62)

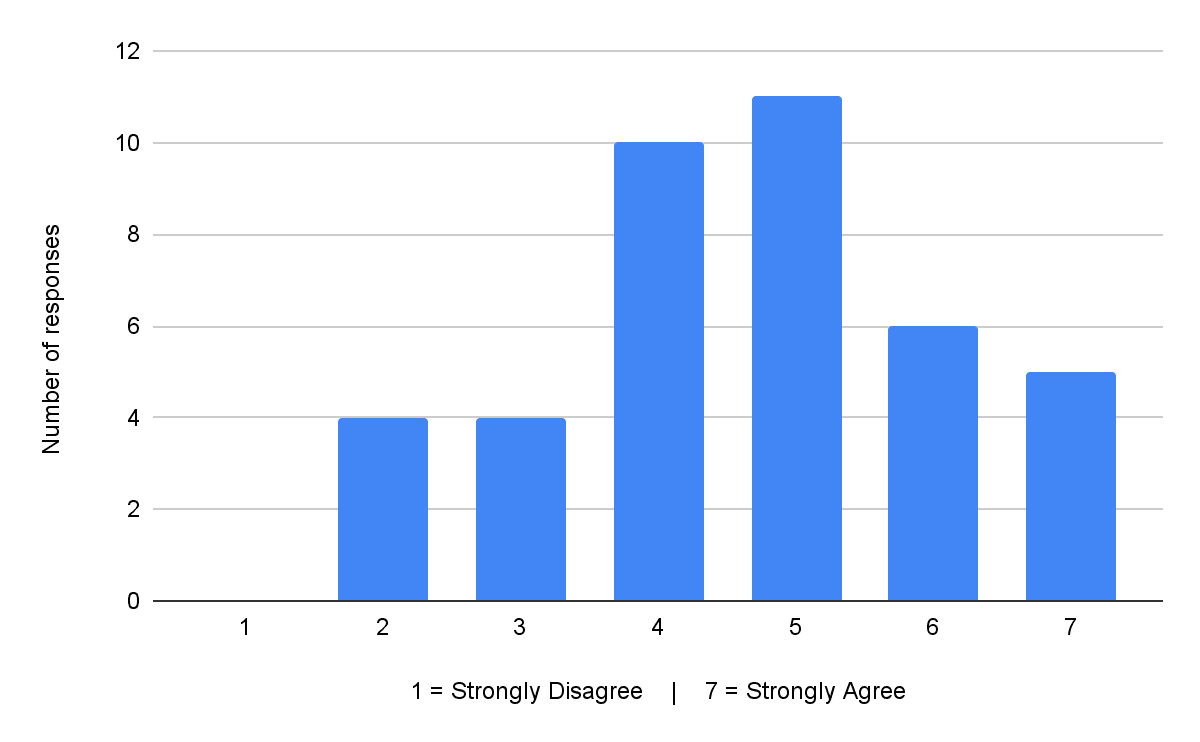

Effective giving ideas and norms should be promoted independently of effective altruism. (n = 40, Mean = 4.65, SD = 1.46)

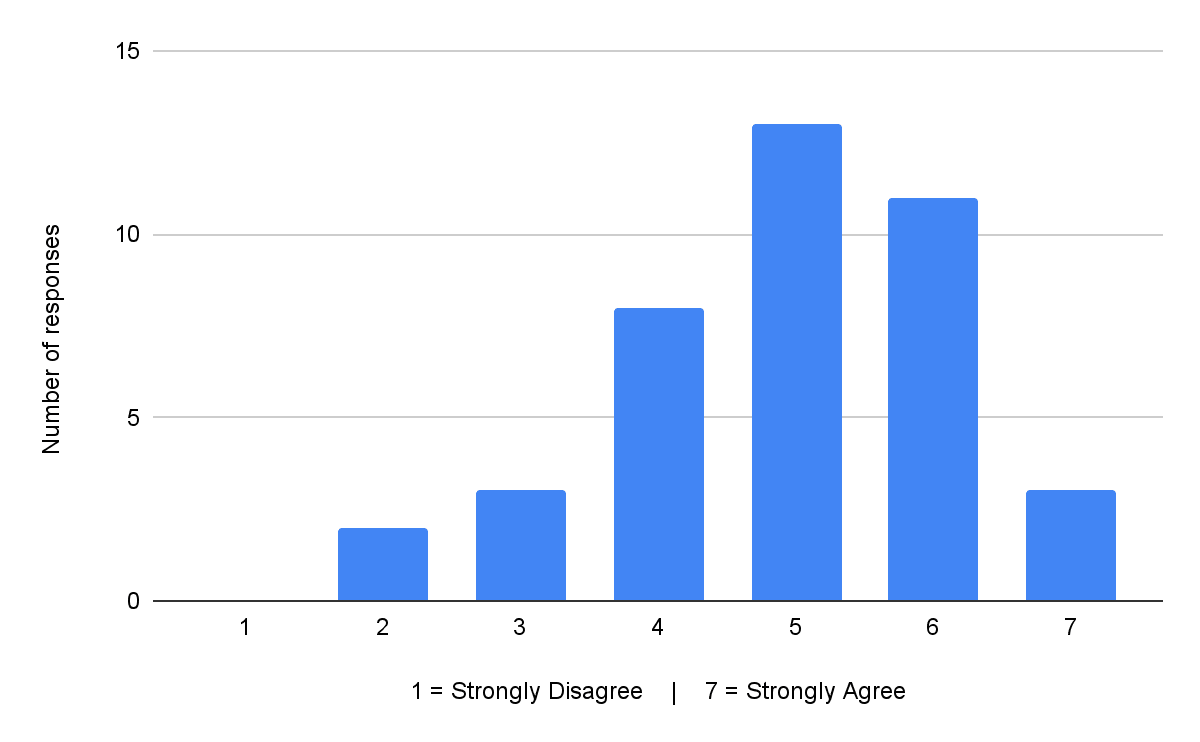

People in EA should be more focused on engaging with established institutions and networks instead of engaging with others in EA. (n = 40, Mean = 4.93, SD = 1.25)

There is a leadership vacuum in the EA community that someone needs to fill. (n = 39, Mean = 4.85, SD = 1.63)

Summary of comments about leadership vacuum question:

- Respondents' comments suggested that answers to this question should be interpreted with caution since the question conflated two questions and had definitional issues.

- Some people agreed that there was a leadership vacuum and that it would be great if more people stepped into community leadership roles, but there was skepticism that the right people for this currently existed.

- At least one other respondent reported that they think there’s a leadership vacuum, but that this shouldn’t be filled and leaders should be more explicit about how decentralized EA is.

- One respondent pointed out that leadership could mean many things, and that it wasn’t clear what people were voting on here.

We should focus more on building particular fields (AI safety, effective global health, etc.) than building EA. (n = 39, Mean = 4.72, SD = 1.38)

Summary of comments about agreement voting questions

- Who is “we”? Multiple respondents pointed out that their answers to the above questions are sensitive to how ‘we’ is defined.

- Marginal thinking: Multiple respondents clarified that their answers are better interpreted as what they think “on the current margin”.

- False dichotomies: Multiple respondents thought that a lot of these questions set up false dichotomies.

What are some mistakes you're worried the EA community might be making?

Note that respondents often disagreed with each other on this question: some thought we should do more X, and others thought we should do less X.

Crisis response:

- Not learning enough from FTX, both in terms of the specific incident and naive consequentialism more broadly (x3)

- Pivoting too much or over-updating in response to setbacks such as FTX (x3)

- Becoming too risk averse (x2)

Leadership:

- Not doing enough to counteract low morale and burnout in leadership (x2)

- Allowing a leadership vacuum to stay for too long and not communicating clearly about what EA is, who leads it, how decisions are made (x2)

- Not thinking enough about the new frontier of causes people may not take seriously by default, like digital sentience and post-AGI society (x2)

Culture:

- Under-emphasizing core values and virtues in EA culture like humility, integrity, kindness and altruism (x4)

- Being too PR-y and bureaucratic instead of authentic

- Not instilling a sense of excitement about the EA project

- Caring too much about inside-the-community-reactions and drama instead of keeping gaze outward on the world / building for the sake of the world (x3)

- Caring too much about "EA alignment" and under-valuing professional expertise

- Becoming vulnerable to groupthink from too many close networks

- Not emphasizing thinking independently enough, and making it seem like EA can just tell people what to do to have an impact

Resource allocation:

- Not investing enough in building serious, ambitious projects

- Growing at the wrong pace: either growing too much (mentioned once) or not growing enough (mentioned once)

AI:

- Not updating enough about AI progress

- EA resources swinging too hard towards AI safety (x3)

- Being too trusting of AGI labs

- Not realizing how much some Transformative AI scenarios require engagement with various non-EA actors, and becoming irrelevant in such scenarios as a result

- Trying to shape policies without transparency

- Not investing money into an AI compute portfolio

- Not being nuanced enough in transmitting EA standards or culture to a new field with its own culture and processes

Other:

- Too little coordination among key orgs and leaders

- Not investing enough in funder diversity / relying too much on OP (x3)

- Too much concern for what community members think, resulting in less outreach and less useful disagreement.

- Poor strategic comms leading EA-related actors and ideas to be associated with narrow interests or weird ideologies (x2)

Relationship Between EA & AI Safety

Rating agreement to statements on the relationship between EA & AIS

Below we compare MCF invitees' responses to those of AI safety experts who were asked the same questions in the AI safety field-building survey.

Summary of Commentary on EA's Relationship with AI Safety

- Who is ‘we’?: As with other agreement voting in this survey, some respondents said their answers were sensitive to who exactly “we” is.

- Concern about CEA supporting AIS work: Two respondents mentioned concerns about CEA being involved in AI safety work.

- Importance of new CEA Executive Director: At least one respondent mentioned that a lot of these decisions may come down to the new CEA ED.

- Clearer separation: Multiple respondents clarified their view on why they think EA and AIS should be more distinct.

- For example:

- Conflation of EA and AIS can be harmful to both fields,

- Concerns that EAs' views on AI safety seem too narrow and don’t include a lot of obviously good things, and

- AIS should not require EA assumptions.

- Regardless of what the split ends up being, others pushed for people and projects to communicate more clearly about whether they see themselves as promoting EA principles or AI safety work.


- Benefit from connecting EA to AIS: Two respondents mentioned ways EA could hopefully benefit AIS, including bringing important values, virtues, and norms to the field, like rigorous prioritization.

- Talent bottlenecks in AIS: One respondent expressed concerns with non-targeted outreach for AIS (and to a lesser extent EA), since AIS may not have enough open opportunities at the moment to absorb many people.

- Avoiding rebrands: One respondent emphasized the importance of EA owning its history, including e.g., the FTX scandal, and didn't want people to pivot to AIS for bad reasons.

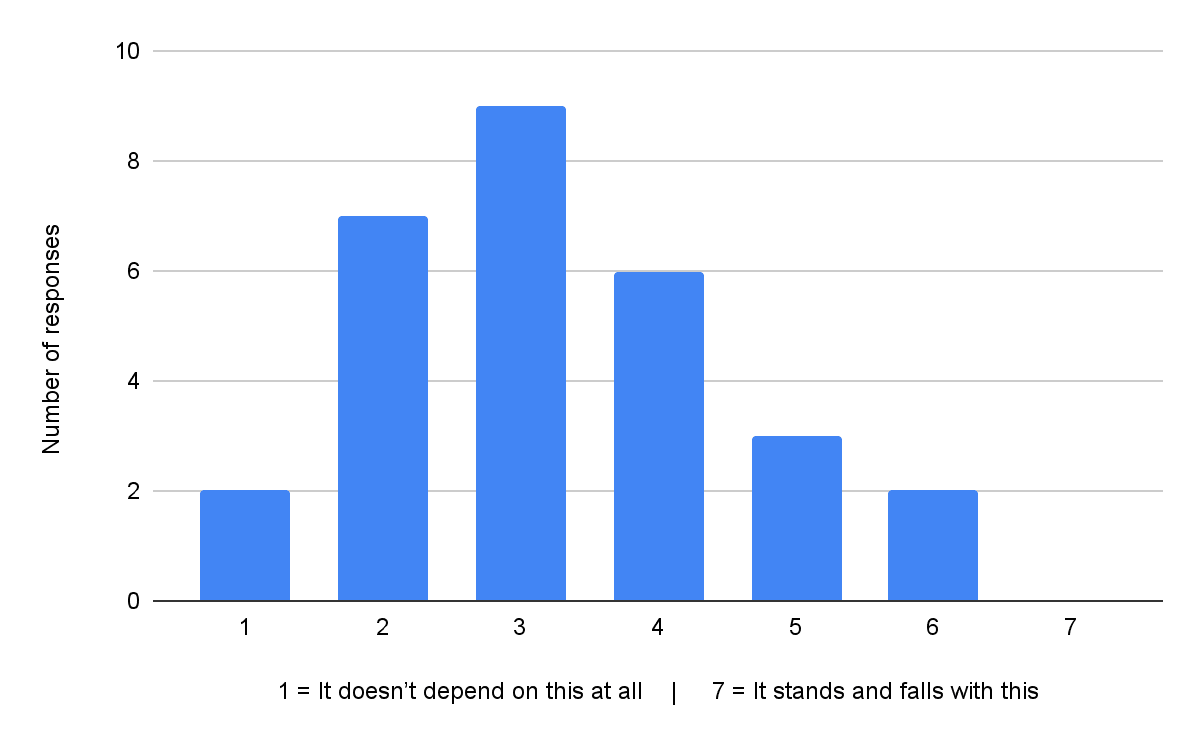

Relationship between meta work and AI timelines

How much does the case for doing EA community-building hinge on having significant probability on “long” (>2040) timelines? (n = 29, Mean = 3.24, SD = 1.33)

Commentary on relationship between meta work and AI timelines

- Depends on the community building (CB) work: Multiple respondents mentioned how the answer to this question depends on the type of CB work. For example, recruiting highly credible and experienced people to work in AI safety, or guiding more donors to give to AI safety, would probably be important levers to reducing risk even with 10-year timelines.

- Crunch time infrastructure: One respondent mentioned that community building work to improve coordination infrastructure could help a lot in short timeline worlds, since such infrastructure would be critical for people to communicate and collaborate in “crunch times.”

There has been a significant increase in the number of people interested in AI safety (AIS) over the past year. What projects and updates to existing field-building programs do you think are most needed in light of that?

- Programs to channel interest into highly impactful roles, with a focus on mid-career community-building projects.

- More work on unconventional or 'weirder' elements in AI governance and safety, such as s-risk and the long-reflection.

- Reevaluation of how much the focus on AI depends on it being a neglected field.

- More educational programs and introductory materials to help people get up to speed on AI safety.

- Enhancements to the AI Alignment infrastructure, including better peer-review systems, prizes, and feedback mechanisms.

- Regular updates about AIS developments and opportunities.

- More AI-safety-specific events.

Prioritization Deference to OP vs CEA

Note that this question was asked in an anonymous survey that accompanied the primary pre-event survey summarized above. We received 23 responses (n = 23) out of 45 people invited to take the anonymous survey.

We hope you found this post helpful! If you'd like to give us anonymous feedback, you can do so with Amy (who runs the CEA Events team) here.

[1] We said this survey would take about 30 minutes to complete, but in hindsight, we think that was a significant underestimate. Some respondents reported feeling rushed because of our incorrect time estimate.

[2] This caveat was included for event attendees when we originally shared the survey report.

michel @ 2023-11-03T23:30 (+2)

A quick note to say that I’m taking some time off after publishing these posts. I’ll aim to reply to any comments from 13 Nov.