There should be more AI safety orgs

By mariushobbhahn @ 2023-09-21T14:53 (+117)

I’m writing this in my own capacity. The views expressed are my own, and should not be taken to represent the views of Apollo Research or any other program I’m involved with.

TL;DR: I argue why I think there should be more AI safety orgs. I’ll also provide some suggestions on how that could be achieved. The core argument is that there is a lot of unused talent and I don’t think existing orgs scale fast enough to absorb it. Thus, more orgs are needed. This post can also serve as a call to action for funders, founders, and researchers to coordinate to start new orgs.

This piece is certainly biased! I recently started an AI safety org and therefore obviously believe that there is/was a gap to be filled.

If you think I’m missing relevant information about the ecosystem or disagree with my reasoning, please let me know. I genuinely want to understand why the ecosystem acts as it does right now and whether there are good reasons for it that I have missed so far.

Why?

Before making the case, let me point out that under most normal circumstances, it is probably not reasonable to start a new organization. It’s much smarter to join an existing organization, get mentorship, and grow the organization from within. Furthermore, building organizations is hard and comes with a lot of risks, e.g. due to a lack of funding or because there isn’t enough talent to join early on.

My core argument is that we’re very much NOT under normal circumstances and that, conditional on the current landscape and the problem we’re facing, we need more AI safety orgs. By that, I primarily mean orgs that can provide full-time employment to contribute to AI safety but I’d also be happy if there were more upskilling programs like SERI MATS, ARENA, MLAB & co.

Talent vs. capacity

Frankly, the level of talent applying to AI safety organizations and getting rejected is too high. We have recently started a hiring round and we estimate that a lot more candidates meet a reasonable bar than we could hire. I don’t want to go into the exact details since the round isn’t closed but from the current applications alone, you could probably start a handful of new orgs.

Many of these people could join top software companies like Google, Meta, etc. or even already are at these companies and looking to transition into AI safety. Apollo is a new organization without a long track record, so I expect the applications for other alignment organizations to be even stronger.

The talent supply is so high that a lot of great people even have to be rejected from SERI MATS, ARENA, MLAB, and other skill-building programs that are supposed to get more people into the field in the first place. Also, if I look at the people who get rejected from existing orgs like Anthropic, OpenAI, DM, Redwood, etc. it really pains me to think that they can’t contribute in a sustainable full-time capacity. This seems like a huge waste of talent and I think it is really unhealthy for the ecosystem, especially given the magnitude and urgency of AI safety.

Some people point to independent research as an alternative. I think independent research is a temporary solution for a small subset of people. It’s not very sustainable and has a huge selection bias. Almost anyone with a family or with existing work experience is not willing to take the risk. In my experience, women also have a disproportionate preference against independent research compared to men, so the gender balance gets even worse than it already is (this is only anecdotal evidence; I have not looked at this in detail).

Furthermore, many people just strongly prefer working with others in a less uncertain, more regular environment of an organization, even if that organization is fairly new. It’s just more productive and more fun to work with a team than as an independent.

Additionally, getting funding for independent research right now is also quite hard, e.g. the LTFF is currently quite resource-constrained and has a high bar (not their fault!; update: may be less funding constrained now). All in all, independent research just really seems like a band-aid to a much bigger problem.

Lastly, we’re strongly undercounting talent that is not already in our bubble. There are a lot of people concerned about existential risks from AI that are already working at existing tech companies. They would be willing to “take the jump” and join AI safety orgs but they wouldn’t take the risk to do independent research. These people typically have a solid ML background and could easily contribute if they skilled up in alignment a bit.

In almost all normal industries, organizations are aware that their hires don’t really contribute for the first couple of months and see this education as an upfront investment. In AI safety, we currently have the luxury that we can hire people who can contribute from day one. While this is very comfortable for the organizations, it really is a bad sign for the ecosystem as a whole.

The opportunity costs are minimal

Even if more than half of new AI safety orgs failed, it seems plausible that the opportunity costs of funding them are minimal. A lot of the people who would be enabled by more organizations would just be unable to contribute in the counterfactual world. In fact, more people might join capability teams at existing tech companies for lack of alternatives.

In the cases where a new org fails, its employees could likely try to join another AI safety org that is still going strong. That seems totally fine; they probably learned valuable skills in the meantime. This just seems like how normal start-ups and companies operate and should not discourage us from trying.

I have heard an argument along the lines of “new orgs might lock up great talent that then can’t contribute at the frontier safety labs” which would imply some opportunity costs. This doesn’t seem like a strong argument to me. The people in the new orgs are free to move to big orgs and Anthropic/DM/OpenAI have a lot more money, status, compute, and mentorship available. If they want to snatch someone, they probably could. This feels like something that the people themselves should decide--right now they just don’t have that option in the first place.

We are wasting valuable time

Warning: This section is fairly speculative.

I know that some people have longer timelines than I do but even under more conservative timelines, it seems reasonable that there should be more people working on AI safety right now.

If we think that “solving” alignment is about as hard as the Manhattan project (130k people) or the Apollo project (400k people) then we need to scale the size of the community by about 3 orders of magnitude, assuming there are currently 100-500 full-time employees working on alignment. Let’s assume, for simplicity, Ajeya Cotra’s latest public median estimate for TAI of ~2040. This would imply about 3 OOMs in ~15 years or 1 OOM every 5 years. If you think it’s harder than the two named projects, 4 OOMs may be more accurate.

There are a couple of additional changes I’d personally like to make to this trajectory. First, I’d much rather eat up the first 2 OOMs early (maybe in the first 3-5 years) so we can build relevant expertise and engage in research agendas with longer payoff times. Second, I just don’t think the timelines are accurate and would rather calculate with 2033 or earlier. Under these assumptions, 10x every 2 years seems more appropriate.

A 10x growth every ~4 years could be done with a handful of existing orgs but a 10x growth every ~2 years probably requires more orgs. Since my personal timelines are much closer to the latter, I’m advocating for more organizations. Even on the slower end of the spectrum, we should probably not bank on the existing orgs being able to scale at the required pace, and should instead diversify our bets.
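The growth arithmetic in this section can be checked with a rough sketch like the one below. The helper names `ooms_needed` and `years_per_oom` are hypothetical, and using 4 years as the midpoint of the "first 3-5 years" window is an assumption made for illustration; the headcounts and time spans are the ones quoted above.

```python
import math


def ooms_needed(current_staff: float, target_staff: float) -> float:
    """Orders of magnitude (factors of 10) separating current from target headcount."""
    return math.log10(target_staff / current_staff)


def years_per_oom(ooms: float, years_available: float) -> float:
    """Average number of years available for each 10x step."""
    return years_available / ooms


# Headcounts quoted in the post.
CURRENT_LOW, CURRENT_HIGH = 100, 500
MANHATTAN, APOLLO = 130_000, 400_000

# Smallest gap: largest current estimate vs. smaller project.
smallest_gap = ooms_needed(CURRENT_HIGH, MANHATTAN)
# Largest gap: smallest current estimate vs. larger project.
largest_gap = ooms_needed(CURRENT_LOW, APOLLO)
print(f"Required growth: {smallest_gap:.1f} to {largest_gap:.1f} OOMs")

# Baseline from the post: ~3 OOMs in ~15 years.
print(f"Baseline: one 10x every {years_per_oom(3, 15):.1f} years")

# Front-loaded variant: 2 OOMs in the first 3-5 years (midpoint of 4 is an assumption).
print(f"Front-loaded: one 10x every {years_per_oom(2, 4):.1f} years")
```

Under these inputs the gap comes out at roughly 2.4 to 3.6 OOMs, which brackets the "about 3" used above, and front-loading the first 2 OOMs is what pushes the pace toward 10x every ~2 years.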

I sometimes hear a view that doing research now is almost irrelevant and we should keep a big war chest for “the end game”. I understand the intuition but it just feels wrong to me. Lots of research is relevant today and we do already get relevant feedback from empirical work on current AI systems. Evals and interpretability are obvious examples but research into scalable oversight or adversarial training can be done today as well and seems relevant for the future. Furthermore, if we wanted to spend >50% of the budget in the last year (assuming we knew when that was), we still need people to spend that money on. Building up the research and engineering capacity today seems already justified from a skill- and tool-building perspective alone.

The funding exists (maybe?)

I find it hard to get a good sense of the funding landscape right now (see e.g. this post). For example, I currently don’t have a good estimate of how much money OpenPhil has available and how much of that is earmarked for AI safety. Thus, I won’t speculate too much on the long-term funding plans of existing AI safety funders.

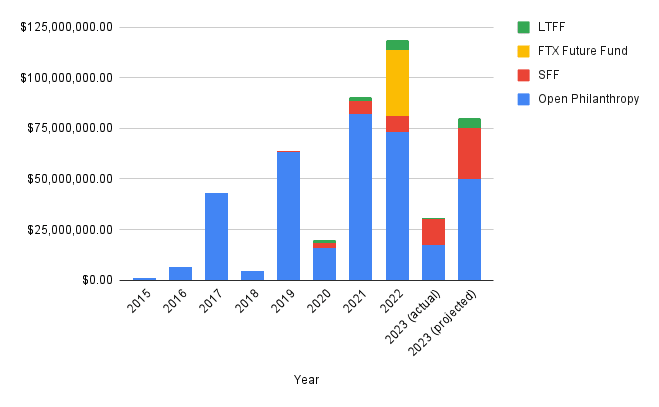

However, historically funding for AI safety has looked ~like this (copied from this post):

This indicates that OpenPhil, SFF, and others allocate high double-digit millions into AI safety every year and the trend is probably rising. My best guess is, therefore, that funders would be willing to support new orgs with reasonably-sized seed grants if they met their bar. I’m not very sure where that bar currently is, but I personally think it totally makes sense to fully fund a fairly junior group of researchers for a year who want to give it a go as long as they have a somewhat reasonable plan (as stated above, the opportunity costs just aren’t that high). Funders like OpenPhil might be hesitant to fund a new org but they may be more willing to fund a preliminary working group or collective that could then transition into an org if needed.

If funders agree with me here, I think it would be great if they signaled this willingness, e.g. by having a “starter package” for an org where a group of up to 5 people gets $100-300k (includes salary, compute, food, office, equipment, etc.) per person to do research for ~a year (where the exact amount depends on experience and promisingness). To give concrete examples, Jesse’s work on SLT and Kaarel’s work on DLK (or to be more precise, the agendas that followed from those works; the actual agendas are probably many months ahead of the public writing by now) are promising enough that I would totally give them $500k+ for a year to develop it further if I were in a position to move these amounts of money. If the project doesn’t work out, they could still close the org/working group and move on.

Another option is to look for grants from other sources, e.g. non-EA funders or VCs.

AI safety is becoming more and more mainstream, and philanthropic and private high-net-worth individuals are becoming interested in allocating money to the space. Furthermore, there are likely going to be government grants available for AI safety in the near future. These funding schemes are often opaque and the probability of success is lower than with traditional EA funders, but it is now at least possible.

Another source of funding that I was not really considering until recently is VC funding. There are a couple of VCs who are interested in AI safety for the right reasons and it seems to me that there will be a large market around AI safety in some form. People just want to understand what’s going on in their models and what their limitations are, so there surely is a way to create products and services to satisfy these needs. It’s very important though to check if your strategy is compatible with the VCs’ vision and to be honest about your goals. Otherwise, you’ll surely end up in conflict and won’t be able to achieve the goal of reducing catastrophic risks from AI.

VC backing obviously reduces your option space because you need to eventually make a product. On the other hand, there are many more VCs than there are donors, so it may be worth the trade-off (also getting VC backing doesn’t exclude getting donations, it just makes them less likely).

How big is the talent gap?

I don’t know exactly how big the gap between available spots and available talent is but my best guess is ~2-20x depending on where you set the bar.

I don’t have any solid methodology here and I’d love for someone to do this estimate properly but my personal intuitions come from the following:

- Seeing our own hiring process and how many applications we have received so far where I think “This person could have a meaningful contribution within the first 3 months”.

- Seeing how many people were rejected from SERI MATS despite them probably being able to contribute.

- Seeing the number of people getting rejected from MLAB and REMIX despite me thinking that they “meet the bar”.

- Seeing the number of people getting rejected from Anthropic, DeepMind, Redwood, ARC, OpenAI, etc. despite me personally thinking that they should clearly be hired by someone.

- Seeing the number of people struggling to find full-time work after SERI MATS who I would intuitively judge as “hirable”.

- Seeing the number of highly qualified candidates who want to “take the jump” from a different ML job to AI safety but can’t due to lack of capacity.

- Talking to people who are currently hiring about the level of talent they have to reject due to lack of funding or mentorship capacity.

I can’t put any direct numbers on that because it would reveal information I’m not comfortable sharing in public, but I can say that my intuitive aggregate conclusion from this is a 2-20x talent-to-capacity gap. The 2x roughly corresponds to “could meaningfully contribute within a month of employment” and the 20x roughly corresponds to “could meaningfully contribute within half a year or less if provided with decent mentorship”.

How did we end up here?

My current feeling is that AI safety is underfunded and desperately needs more capacity to allow more talent to contribute. I’m not sure how we ended up here but I could imagine the following reasons playing a role.

- Reduction in available funding, e.g. due to the FTX crash and a general economic downturn.

- A lack of good funding opportunities, e.g. there just weren’t that many organizations that could effectively use a lot of money and were clearly trying to reduce catastrophic risks from AI.

- A lack of people being able to start new orgs: Starting and running an organization requires a different skillset than research. People with both the entrepreneurial skills to build an org and the technical talent to steer a good agenda may just be fairly rare.

- A conservative funding mindset from past funding choices: OpenPhil and others have tried to fund AI safety research for years and some of the bets just didn’t turn out as hoped. For example, OpenAI got a large starting grant from OpenPhil but then became one of the biggest AGI contributors. Redwood Research wanted to scale somewhat quickly but recently decided to scale down again.

Potentially this has led to a “stand-off” situation where funders are waiting for good opportunities, while people who could start something see the change in the funding situation and are more hesitant to do so. As a result, nothing happens.

I think a couple of new organizations and programs from the last 2 years, e.g. Redwood, FAR, CAIS, Epoch, SERI MATS, ARENA, etc., look like promising bets so far but I’d like to see many more orgs of the same caliber in the coming years.

Redwood has recently been criticized but I think their story to date is a mostly successful experiment for the AI safety community. In the worst-case interpretation, Redwood produced a lot of really talented AI safety researchers, in the best case, their scientific outputs were also quite valuable. I personally think, for example, that causal scrubbing is an important step for interpretability. Furthermore, I think trying something like MLAB and REMIX was very valuable both for the actual content value as well as the information value. So Redwood is mostly a win for AI safety funding in my books and should not be a reason for more conservative funding strategies just because not everything worked out as intended.

Some common counterarguments

“We don’t need more orgs, we need more great agendas”

There is a common criticism that the lack of orgs is due to the lack of agendas and if there were more great agendas, there would be more orgs. This criticism is often coupled with pointing out the lack of research leads who could execute such an agenda. While I think this is technically true, it doesn’t seem quite right.

The obvious question is how good agendas are developed in the first place. It may be through mentorship at another org or years of experience in academia/independent research. Typically, the people who have this experience are hired by the big labs and therefore rarely start a new org. So if you think that only people who already have a great agenda should start an org, not starting new orgs is probably reasonable under current conditions. However, the assumption that you can only get good at research leadership through this path just seems wrong to me.

First, research leadership is obviously hard but you can learn it. I know lots of people who don’t have research leadership experience yet but who I would judge to be competent at running an agenda that could serve 3-10 people. Surely they would make many mistakes early on but they would grow into the position. Not trying seems like a strictly worse proposal than trying and failing (see section on opportunity costs).

Second, a great agenda just doesn't seem like a necessary requirement. It seems totally fine to me to replicate other people’s work, extend existing agendas, or ask other orgs if they have projects to outsource (usually they do) for a year or so and build skills during that time. After a while, people naturally develop their own new ideas and then start developing their own agendas.

“The bottleneck is mentorship”

It’s clearly true that mentorship is a huge bottleneck but great mentors don’t fall from the sky. All senior people started junior and, in my personal opinion, lots of fairly junior people in the AI safety space would already make pretty good mentors early on, e.g. because they have prior management experience outside of AI safety or because they just have good research intuitions and ideas from the start.

Furthermore, a lot of the people who are in the position to provide mentorship are in full-time positions at big labs where they get access to compute, great team members, a big salary, etc. From that position, it just doesn’t seem that exciting to mentor lots of new people even if it had more impact in some cases. Therefore, a lot of very capable mentors are locked in positions where they don’t provide a lot of mentorship to newcomers because existing AI safety labs aren’t scaling fast enough to soak up the incoming talent streams. Thus, I personally think creating more orgs and thus places where mentorship can be learned and provided would be a good response.

Similar to the point about great agendas, it seems fine to me to just let people try, especially if the alternative is independent research or not contributing at all.

“The bottleneck is operations”

Before starting Apollo Research, I was really worried that operations would be a bottleneck. Now I’m not worried anymore. There are a lot of good operations people looking to get into AI safety and there is a lot of external help available.

Historically, EA orgs have sometimes struggled with finding operations talent but I think that was largely due to these orgs not providing an interesting value proposition to the talent they were looking for. For example, ops was sometimes framed as “everything that nobody else wanted to do” (see this post for details). So if you make a reasonable proposition, operations talent will come.

Furthermore, there is a lot of external help for operations in the EA sphere. Some organizations provide fiscal sponsorship and some operations help, e.g. Rethink Priorities or Effective Ventures. Impact Ops (a new org) may also be able to help significantly with operations early on.

“The downside risks are too high”

Another counterargument has been that there are large downside risks in funding AI safety orgs, e.g. because they may pull a switcheroo and cause further acceleration. This seems true to me and warrants increased caution but there seem to be lots of organizations that don’t have a high risk of falling into that category. Right now, training models and making progress on them is so expensive that any org with less than ~$50M of funding probably can’t train models close to the frontier anyway.

I personally think that many agendas have some capabilities externalities, e.g. building LM agents for evals or finding new insights for interpretability, but there are ways to address this. Orgs should carefully estimate the safety-capabilities balance of their outputs, including consulting with trusted external parties and then use responsible disclosure and differential publishing to circulate their outputs.

It feels like this is solvable by funding organizations where the leadership has a track record of caring about AI safety for the right reasons and has an agenda that isn’t too entangled with capabilities. It’s a hard problem but we should be able to make reasonable positive EV bets to address it.

“The best talent get jobs, the rest doesn’t matter”

I’ve heard the notion that AI safety talent is power law distributed, and therefore most impact will come from a small number of people anyways. This argument implies that it is fine to keep the number of AI safety researchers as low as it currently is (or scale very slowly) as long as the few relevant ones can contribute. I think there are a couple of problems with this argument.

- Even if the true number of those who can meaningfully contribute is low, the 100-500 we currently have is probably still too low given the size of the problem we’re dealing with.

- It’s hard to predict who the impactful people are going to be. Thus, spreading bets wider is better. Once there is more evidence about the quality of research, the best AI safety contributors can still coordinate to work in the same organization if they want to.

- The most impactful people can be enabled and accelerated by having a team around them. So more people are still better (as long as they meet a certain bar).

- Power laws stay power laws when you zoom out. Why is the right cutoff at the 100-500 people we currently have and not at 10k or 1M? You could use this argument to justify any arbitrary cutoff.

How?

I can’t provide a perfect recipe that will work for everyone but here are some scattered thoughts and suggestions about starting an org.

- Lots of people are “lurking”, i.e. they are not willing to start an org themselves but if someone else did it, they would be on board very quickly. The main bottleneck seems to be bringing these people together. It may be sufficient if one person says “I’m gonna commit” and takes charge of getting the org off the ground. If you have an understanding of AI safety and an entrepreneurial mindset, your talents are very much needed.

- It’s been done before. There are millions of companies, there are lots of books on how to build and run them, there are lots of people with good advice who’re happy to provide it, and the density of talent in AI safety makes it fairly easy to find good starting members.

- You don’t have to go all in right away. It’s fine (probably even recommended) to start as a group of 2-5 for half a year with independent funding and see whether you work well together and find a good agenda. If it works out, great, you can scale and make it more permanent. If it doesn’t, just disband, no hard feelings, you learned a lot on the way. I personally think SERI MATS & co are great environments to start such a test run because there are lots of good people around and you don’t have to make any long-term commitments.

- Agenda: You don’t need to have a great agenda right away, you can replicate existing agendas and start extending them when you understand the main bits. There is a long list of low-hanging fruit in empirical alignment that you can just jump on. Also, most senior people are happy to provide suggestions if you ask nicely. Furthermore, some people just have a reasonable agenda fairly quickly. For example, I think Jesse’s work on SLT and Kaarel’s work on DLK have a lot of potential (or to be more precise, the agendas that followed from those works; the actual agendas are probably many months ahead of the public writing by now) despite both of them being fairly junior in traditional terms. I would generally recommend that more people just try to work on an agenda they’re excited about. In the best case, you find something important, in the worst case you get a lot of valuable experience.

- Operations: In case you’re a handful of people just trying out stuff for 6 months, operations is not that important. In case you’re more committed, good operations staff make a huge difference. I recommend asking around (the EA operations network is very well connected) and running a public hiring round (lots of good operations staff don’t typically hang out with the researchers).

- Funding: There are about 5-10 EA funders you can apply to. There are loads of non-EA funders you can apply to (but the application processes are very different). Furthermore, if your agenda can lead to a concrete product that is compatible with reducing catastrophic risks from AI, e.g. interpretability software, you can also think about a VC-backed route.

- Management: Excellent management is hard but it’s doable. In my (very limited) experience, it’s mostly a question of whether the organization makes it a priority to build up internal management capacity or not. You also don’t have to reinvent the wheel, there are lots of good books on management and the basic tips are a pretty reasonable start. From there, you can still experiment and iterate a lot and then you’ll get better over time.

- Learn from others: Others have already gone through the process of starting an org or program. Typically they are very willing to share ideas and I personally learned a lot from talking to people at EAGs and other occasions. We’ve also developed a couple of fairly general resources internally, e.g. about culture, hiring, management, the research process, etc. I’m happy to share them with externals under some conditions. Also, others would do many things differently the second time, you can learn a lot from their mistakes and try to prevent them.

- Fiscal sponsorship: Some organizations like Rethink Priorities, Charity Entrepreneurship, Effective Ventures, and others sometimes provide fiscal sponsorship, i.e. they host you as an organization, help with operations, manage your funding, etc. This can be very helpful, especially if you’re just a small group.

What now?

I don’t think everyone should try to start an org but I think there are some ways in which we, as a community, could make it easier for those who want to:

- Mentally add it as one of the possible options for your career. You don’t have to do it but under specific circumstances, it may be the best choice.

- Create and support situations in which orgs could be founded, e.g. Lightcone, SERI MATS or the London AIS hub. These are great environments to try it out with low commitment while getting a lot of support from others.

- Make a “standard playbook” for founding an AIS org. It doesn’t have to be spectacular, just a short list of steps you should consider seems pretty helpful already (I may write one myself later this year in case someone is interested).

- Provide a “starter package”, e.g. let’s say $250-500k for 5 people for 6 months. If OpenPhil or SFF said that there is a fast process to get a starter package, I’m pretty sure more people would try to start a new research group/org.

- If you’re part of an existing org, consider starting your own. I think in nearly all circumstances the answer is “stay where you are” but in some instances, it might be better to leave and start a new one. For example, if you think you have an agenda that could serve many more people but your current org can’t put you in a position to develop it, starting a new org may be the correct move.

Starting an org isn’t easy and lots of efforts will fail. However, given the lack of existing full-time AI safety capacity, it seems like we should try creating more orgs nonetheless. In the best case, a bunch of them will succeed, in the worst case, a “failed org” provides a lot of upskilling opportunities and leadership experience for the people involved.

I think the high quality of rejected candidates in AI safety is a very bad sign for the health of the community at the moment. The fact that lots of people with years of ML research and engineering experience with a solid understanding of alignment aren’t picked up is just a huge waste of talent. As an intuitive benchmark, I would like to get to a world where at least half of all SERI MATS scholars are immediately hired after the program and we aren’t even close to that yet.

Ajeya @ 2023-09-26T02:54 (+59)

(Cross-posted to LessWrong.)

I’m a Senior Program Officer at Open Phil, focused on technical AI safety funding. I’m hearing a lot of discussion suggesting funding is very tight right now for AI safety, so I wanted to give my take on the situation.

At a high level: AI safety is a top priority for Open Phil, and we are aiming to grow how much we spend in that area. There are many potential projects we'd be excited to fund, including some potential new AI safety orgs as well as renewals to existing grantees, academic research projects, upskilling grants, and more.

At the same time, it is also not the case that someone who reads this post and tries to start an AI safety org would necessarily have an easy time raising funding from us. This is because:

- All of our teams whose work touches on AI (Luke Muehlhauser’s team on AI governance, Claire Zabel’s team on capacity building, and me on technical AI safety) are quite understaffed at the moment. We’ve hired several people recently, but across the board we still don’t have the capacity to evaluate all the plausible AI-related grants, and hiring remains a top priority for us.

- And we are extra-understaffed for evaluating technical AI safety proposals in particular. I am the only person who is primarily focused on funding technical research projects (sometimes Claire’s team funds AI safety related grants, primarily upskilling, but a large technical AI safety grant like a new research org would fall to me). I currently have no team members; I expect to have one person joining in October and am aiming to launch a wider hiring round soon, but I think it’ll take me several months to build my team’s capacity up substantially.

- I began making grants in November 2022, and spent the first few months full-time evaluating applicants affected by FTX (largely academic PIs as opposed to independent organizations started by members of the EA community). Since then, a large chunk of my time has gone into maintaining and renewing existing grant commitments and evaluating grant opportunities referred to us by existing advisors. I am aiming to reserve remaining bandwidth for thinking through strategic priorities, articulating what research directions seem highest-priority and encouraging researchers to work on them (through conversations and hopefully soon through more public communication), and hiring for my team or otherwise helping Open Phil build evaluation capacity in AI safety (including separately from my team).

- As a result, I have deliberately held off on launching open calls for grant applications similar to the ones run by Claire’s team (e.g. this one); before onboarding more people (and developing or strengthening internal processes), I would not have the bandwidth to keep up with the applications.

- On top of this, in our experience, providing seed funding to new organizations (particularly organizations started by younger and less experienced founders) often leads to complications that aren't present in funding academic research or career transition grants. We prefer to think carefully about seeding new organizations, and have a different and higher bar for funding someone to start an org than for funding that same person for other purposes (e.g. career development and transition funding, or PhD and postdoc funding).

- I’m very uncertain about how to think about seeding new research organizations and many related program strategy questions. I could certainly imagine developing a different picture upon further reflection — but having low capacity combines poorly with the fact that this is a complex type of grant we are uncertain about on a lot of dimensions. We haven’t had the senior staff bandwidth to develop a clear stance on the strategic or process level about this genre of grant, and that means that we are more hesitant to take on such grant investigations — and if / when we do, it takes up more scarce capacity to think through the considerations in a bespoke way rather than having a clear policy to fall back on.

EvanMcVail @ 2023-10-12T03:14 (+23)

By the way, Open Philanthropy is actively hiring for roles on Ajeya’s team in order to build capacity to make more TAIS grants! You can learn more and apply here.

Ajeya @ 2023-10-12T18:33 (+4)

And a quick note that we've also added an executive assistant / operations role since Evan wrote this comment!

Tom Barnes @ 2023-09-28T12:42 (+13)

Thanks Ajeya, this is very helpful and clarifying!

I am the only person who is primarily focused on funding technical research projects ... I began making grants in November 2022

Does this mean that prior to November 2022 there were ~no full-time technical AI safety grantmakers at Open Philanthropy?

OP (prev. GiveWell Labs) has been evaluating grants in the AI safety space for over 10 years. In that time the AI safety field and Open Philanthropy have both grown, with OP granting over $300m on AI risk. Open Phil has also done a lot of research on the problem. So, to someone on the outside, it seems surprising that the number of people making grants has been consistently low.

OllieBase @ 2023-09-28T12:52 (+3)

Daniel Dewey was a Program Officer for potential risks from advanced AI at OP for several years. I don't know how long he was there for, but he was there in 2017 and left before May 2021.

Thomas Kwa @ 2023-09-21T17:14 (+43)

I think funding is a bottleneck. Everything I've heard suggests the funding environment is really tight: CAIS is not hiring due to lack of funding. FAR is only hiring one RE in the next few months due to lack of funding. Less than half of this round of MATS scholars were funded for independent research. I think this is because there are not really 5-10 EA funders able to fund at large scale, just OP and SFF; OP is spending less than they were pre-FTX. At LTFF the bar is high, LTFF's future is uncertain, and they tend not to make huge grants anyway. So securing funding should be a priority for anyone trying to start an org.

Edit: I now think the impact of these orgs is uncertain enough that one should not conclude with certainty there is a funding bottleneck.

mariushobbhahn @ 2023-09-21T17:27 (+21)

I have heard mixed messages about funding.

From the many people I interact with and also from personal experience it seems like funding is tight right now. However, when I talk to larger funders, they typically still say that AI safety is their biggest priority and that they want to allocate serious amounts of money toward it. I'm not sure how to resolve this but I'd be very grateful to understand the perspective of funders better.

I think the uncertainty around funding is problematic because it makes it hard to plan ahead. It's hard to do independent research, start an org, hire, etc. If there was clarity, people could at least consider alternative options.

Linch @ 2023-09-22T05:22 (+32)

(My own professional opinions, other LTFF fund managers etc might have other views)

Hmm I want to split the funding landscape into the following groups:

- LTFF

- OP

- SFF

- Other EA/longtermist funders

- Earning-to-givers

- Non-EA institutional funders.

- Everybody else

LTFF

At LTFF our two biggest constraints are funding and strategic vision. Historically it was some combination of grantmaking capacity and good applications but I think that's much less true these days. Right now we have enough new donations to fund what we currently view as our best applications for some months, so our biggest priority is finding a new LTFF chair to help (among other things) address our strategic vision bottlenecks.

Going forwards, I don't really want to speak for other fund managers (especially given that the future chair should feel extremely empowered to shepherd their own vision as they see fit). But I think we'll make a bid to try to fundraise a bunch more to help address the funding bottlenecks in x-safety. Still, even if we double our current fundraising numbers or so[1], my guess is that we're likely to prioritize funding more independent researchers etc below our current bar[2], as well as supporting our existing grantees, over funding most new organizations.

(Note that in $ terms LTFF isn't a particularly large fraction of the longtermist or AI x-safety funding landscape; I'm only talking about it the most because it's the group I'm most familiar with.)

Open Phil

I'm not sure what the biggest constraints are at Open Phil. My two biggest guesses are grantmaking capacity and strategic vision. As evidence for the former, my impression is that they only have one person doing grantmaking in technical AI Safety (Ajeya Cotra). But it's not obvious that grantmaking capacity is their true bottleneck, as a) I'm not sure they're trying very hard to hire, and b) people at OP who presumably could do a good job at AI safety grantmaking (eg Holden) have moved on to other projects. It's possible OP would prefer conserving their AIS funds for other reasons, eg waiting on better strategic vision or to have a sudden influx of spending right before the end of history.

SFF

I know less about SFF. My impression is that their problems are a combination of a) structural difficulties preventing them from hiring great grantmakers, and b) funder uncertainty.

Other EA/Longtermist funders

My impression is that other institutional funders in longtermism either don't really have the technical capacity or don't have the gumption to fund projects that OP isn't funding, especially in technical AI safety (where the tradeoffs are arguably more subtle and technical than in eg climate change or preventing nuclear proliferation). So they do a combination of saving money, taking cues from OP, and funding "obviously safe" projects.

Exceptions include new groups like Lightspeed (which I think is more likely than not to be a one-off thing), and Manifund (which has a regranters model).

Earning-to-givers

I don't have a good sense of how much latent money there is in the hands of earning-to-givers who are at least in theory willing to give a bunch to x-safety projects if there's a sufficiently large need for funding. My current guess is that it's fairly substantial. I think there are roughly three reasonable routes for earning-to-givers who are interested in donating:

1. pooling the money in a (semi-)centralized source

2. choosing for themselves where to give to

3. saving the money for better projects later.

If they go with (1), LTFF is probably one of the most obvious choices. But LTFF does have a number of dysfunctions, so I wouldn't be surprised if either Manifund or some newer group ends up being the Schelling donation source instead.

Non-EA institutional funders

I think as AI Safety becomes mainstream, getting funding from government and non-EA philanthropic foundations becomes an increasingly viable option for AI Safety organizations. Note that direct work AI Safety organizations have a comparative advantage in seeking such funds. In comparison, it's much harder for both individuals and grantmakers like LTFF to seek institutional funding[3].

I know FAR has attempted some of this already.

Everybody else

As worries about AI risk become increasingly mainstream, we might see people at all levels of wealth become more excited to donate to promising AI safety organizations and individuals. It's harder to predict what either non-Moskovitz billionaires or members of the general public will want to give to in the coming years, but plausibly the plurality of future funding for AI Safety will come from individuals who aren't culturally EA or longtermist or whatever.

- ^

Which will also be harder after OP's matching expires.

- ^

If the rest of the funding landscape doesn't change, the tier which I previously called our 5M tier (as in 5M/6 months or 10M/year) can probably absorb on the order of 6-9M over 6 months, or 12-18M over 12 months. This is in large part because the lack of other funders means more projects are applying to us.

- ^

Regranting is pretty odd outside of EA; I think it'd be a lot easier for e.g. FAR or ARC Evals to ask random foundations or the US government for money directly for their programs than for LTFF to ask for money to regrant according to our own best judgment. My understanding is that foundations and the US government also often have long forms and application processes which will be a burden for individuals to fill out; it makes more sense for institutions to pay that cost.

JoshuaBlake @ 2023-09-25T06:45 (+1)

There's some really useful information here. Getting it out in a more visible way would be useful.

Linch @ 2023-09-26T04:33 (+2)

Thanks! I've crossposted the comment to LessWrong. I don't think it's polished enough to repost as a frontpage post (and I'm unlikely to spend the effort to polish it). Let me know if there are other audiences which will find this comment useful.

Vaidehi Agarwalla @ 2023-09-22T05:51 (+18)

"Less than half of this round of MATS scholars were funded for independent research."

-> It's not clear to me what exactly the bar for independent research should be. It seems like it's not a great fit for a lot of people, and I expect it to be incredibly hard to do it well as a relatively junior person. So it doesn't have to be a bad thing that some MATS scholars didn't get funding.

Also, I don't necessarily think that orgs being unable to hire is in and of itself a sign of a funding bottleneck. I think you'd first need to make the case that these organisations are crossing a certain impact threshold.

(I do believe AIS lacks diversity of funders and agree with your overall point.)

Thomas Kwa @ 2023-09-22T06:20 (+1)

Fair point about the independent research funding bar. I think the impact of CAIS and FAR is hard to deny, simply because they both have several impressive papers.

Ben_West @ 2023-09-26T00:09 (+11)

Thanks for writing this! It seems like a valuable point to consider, and one that I have been thinking about myself recently.

My guess is that most of the people who are capable of founding an organization are also capable of being middle or senior managers within existing organizations, and my intuition is that they would probably be more impactful there. I'm curious if you have the opposite intuition?

mariushobbhahn @ 2023-09-26T07:37 (+2)

I touched on this a little bit in the post. I think it really depends on a couple of assumptions.

1. How much management would they actually get to do in that org? At the current pace of hiring, it's unlikely that someone could build a team as quickly as you can with a new org.

2. How different is their agenda from existing ones? What if they have an agenda that is different from any agenda that is currently done in an org? Seems hard/impossible to use the management skills in an existing org then.

3. How fast do we think the landscape has to grow? If we think a handful of orgs with 100-500 members in total is sufficient to address the problem, this is probably the better path. If we think this is not enough, starting and scaling new orgs seems better.

But like I said in the post, for many (probably most) people starting a new org is not the best move. But for some it is and I don't think we're supporting this enough as a community.

Ben_West @ 2023-09-26T18:51 (+4)

At the current pace of hiring, it's unlikely that someone could build a team as quickly as you can with a new org.

Can you say more about why this is?

The standard assumption is that the proportional rate of growth is independent of absolute size, i.e. a large company is as likely to grow 10% as a small company is. As a result, large companies are much more likely to grow in absolute terms than small companies are.[1]
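To make the arithmetic behind that assumption concrete, here is a minimal sketch. The headcounts (10 and 200) and the 10% rate are made-up numbers for illustration, not data about any real org, and the function names are hypothetical.

```python
def absolute_growth(headcount: int, rate: float) -> float:
    """New hires in one period for an org growing at a given proportional rate."""
    return headcount * rate


def rate_needed_to_match(small: int, large: int, large_rate: float) -> float:
    """Proportional rate a small org needs to add as many people as a large one."""
    return absolute_growth(large, large_rate) / small


# Illustrative assumptions only: a 10-person org and a 200-person org,
# both growing at the same proportional rate of 10% per period.
small_org, large_org, rate = 10, 200, 0.10

print("small org new hires:", absolute_growth(small_org, rate))
print("large org new hires:", absolute_growth(large_org, rate))
print("rate small org needs to match:", rate_needed_to_match(small_org, large_org, rate))
```

Under these assumed numbers the large org adds about 20 people while the small one adds about 1, and the small org would have to grow by roughly 200% in the same period to match it in absolute terms.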

I could imagine various reasons why AI safety might deviate from the norm here, but am not sure which of them you are arguing for. (Sorry if this was in the post and I'm not able to find it.)

- ^

My understanding is that there is dispute about whether these quantities are actually independent, but I'm not aware of anything suggesting that small companies will generally grow in absolute terms faster than large companies (and understand that there is substantial empirical evidence which suggests the opposite).

ShayBenMoshe @ 2023-09-26T07:06 (+1)

Many people live in an area (or country) where there isn't even a single AI safety organization, and can't or don't want to move. In that sense, no, they can't even join an existing organization (at any level).

(I think founding an organization has other advantages over joining an existing one, but this is my top disagreement.)

Prometheus @ 2023-09-25T02:57 (+6)

(crossposted from lesswrong)

I created a simple Google Doc for anyone interested in joining/creating a new org to put down their names, contact, what research they're interested in pursuing, and what skills they currently have. Over time, I think a network can be fostered, where relevant people start forming their own research, and then begin building their own orgs/get funding. https://docs.google.com/document/d/1MdECuhLLq5_lffC45uO17bhI3gqe3OzCqO_59BMMbKE/edit?usp=sharing

Denis @ 2023-09-28T11:33 (+2)

Great article. Identifying the problem is half the solution. You have provided one provocative answer, which challenges us to either agree with you or propose a better solution!

Your description mirrors my experience in looking to move into this area (AI Safety / Governance) and talking to others wanting to move into this area. There is so much goodwill from organisations and from individuals, but my feeling is that they are just overwhelmed - by the extent of the work needed, by the number of applications for each role, by the logistics and the funding challenges. Even if the money is "available", it requires quite a lot of investigation and paperwork to actually get it, which takes away a valuable resource.

This week in the BlueDot AI Safety/Governance course, the topic was "Career Advice". People spoke of applying for roles and discovering that there were more than 100 (even more than 500) applicants for individual roles in some cases. Which then means organisations with limited resources spend a lot of these resources on the hiring process.

And yet, you can't just take an organisation and double the work-force in a month and expect it to maintain the same quality and culture that has made it so valuable in the first place. But at the same time, one of the lessons I've been learning is that organisations who want to make an impact often need a lot of time to build credibility. You can do great work, but if decision-makers have never heard of you, it may not be very impactful.

I know that there are organisations (e.g. Rethink Priorities) who are actively looking for potential founders. The problem is that being a founder requires a quite specific skill-set, commitment and energy-level.

I think that an interesting, alternative way to address this would mirror what tends to happen in the corporate world if rapid expansion is needed. Let's say you have a company of 100 and you realise you want to become 200 by the end of the year. Here's what you might do:

- Identify the very most critical work that you're currently doing, and make sure the right people continue to work on that. (first and foremost, don't make things worse!)

- With that caveat, think about who from your organisation would have the skillset to recruit, manage, coach, train, mentor new people. Maybe pick a team of 20, including a range of levels, but, if anything, tending towards more senior.

- Treat the growth like a project, with stages - planning, sourcing funding, strategy, recruitment, ... This "project" will be the full-time work of these people for the next few years.

- Create a clear long-term vision for how the new organisation will look in a few years, and recruit towards that. (Don't just recruit 100 new-graduates and expect to have a functional organisation).

- Maybe the first round of recruiting might be 10-20 relatively senior people who will become part of the leadership team and who will take over some of the work of recruiting. In this first phase, each new person will have an experienced mentor who has worked for the organisation for some time, and after 3-6 months, these new people will be in a position to coach and mentor new-hires themselves.

- Do not fully "merge" the new and old organisations until you are very confident that it will work well. At the same time, ensure that the new organisation has all the benefits of the existing one, including access to people for networking, advice, contacts, name-recognition.

Obviously this is a vastly oversimplified scheme. But the point is: if you can make this work, instead of a new organisation which may struggle for recognition, for resources, for purpose, etc., you can vastly increase the potential of an already existing, successful organisation which is currently resource-limited. As you say, the talent is there, ready to work. The problems are there, ready to be solved.

Walt @ 2023-09-25T10:16 (+1)

(Cross-posted from LW)

and Kaarel’s work on DLK

@Kaarel is the research lead at Cadenza Labs (previously called NotodAI), our research group which started during the first part of SERI MATS 3.0 (There will be more information about Cadenza Labs hopefully soon!)

Our team members broadly agree with the post!

Currently, we are looking for further funding to continue to work on our research agenda. Interested funders (or potential collaborators) can reach out to us at info@cadenzalabs.org.

Roman Leventov @ 2023-09-24T10:03 (+1)

(Cross-posted from LW)

@Nathan Helm-Burger's comment made me think it's worthwhile to reiterate here the point that I periodically make:

Direct "technical AI safety" work is not the only way for technical people (who think that governance & politics, outreach, advocacy, and field-building work doesn't fit them well) to contribute to the larger "project" of "ensuring that the AI transition of the civilisation goes well".

Now, as powerful LLMs are available, is the golden age to build innovative systems and tools to improve[1]:

- Politics: see https://cip.org/, Audrey Tang's projects

- Social systems: innovative LLM/AI-first social networks that solve the social dilemma? (I don't have good existing examples of such projects, though)

- Psychotherapy, coaching: see Inflection

- Economics: see Verses, One Project, the Gaia Consortium

- Epistemic infrastructure: see Subconscious Network, Ought, the Cyborgism agenda, Quantum Leap (AI safety edtech)

- Authenticity infrastructure: see Optic, proof-of-personhood projects

- Cybersec/infosec: see various AI startups for cybersecurity, trustoverip.org

- More?

I believe that if such projects are approached with integrity, thoughtful planning, and AI safety considerations at heart rather than with short-term thinking (specifically, not considering how the project will play out if or when AGI is developed and unleashed on the economy and the society) and profit-extraction motives, they could help shape the trajectory of the AI transition in a positive way, and the impact may be comparable to some direct technical AI safety/alignment work.

In the context of this post, it's important that the verticals and projects mentioned above could either be conventionally VC-funded because they could promise direct financial returns to the investors, or could receive philanthropic or government funding that wouldn't otherwise go to technical AI safety projects. Also, there are a number of projects in these areas that are already well-funded and hiring.

Joining such projects might also be a good fit for software engineers and other IT and management professionals who don't feel they are smart enough or have the right intellectual predispositions to do good technical research anyway, even if there were enough well-funded "technical AI safety research orgs". There should be some people who do science and some people who do engineering.

- ^

I didn't do serious due diligence and impact analysis on any of the projects mentioned. The mentioned projects are just meant to illustrate the respective verticals, and are not endorsements.