Emrik's Quick takes

By Emrik @ 2021-09-21T20:09 (+2)

Emrik @ 2021-10-28T19:16 (+20)

It'd be cool if the forum had a commenting feature similar to Google Docs, where comments and subcomments are attached directly to sentences in the post. Readers would then be able to opt in to see the discussion for each point on the side while reading the main post. Users could also choose to hide the feature to reduce distractions.

For comments that directly respond to particular points in the post, this feature would be more efficient (for reading and writing) than the current standard, since commenters wouldn't have to spend words specifying exactly what they're responding to.
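A minimal sketch of how sentence-anchored comments might be stored and grouped for a side-margin view. The `Comment` fields, the helper names, and the naive regex sentence splitter are all hypothetical illustrations, not the Forum's actual data model. A real implementation would probably anchor to character offsets that survive edits, so that revising the post doesn't detach comments.

```python
import re
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Comment:
    id: int
    sentence_index: int              # hypothetical: which sentence of the post this annotates
    author: str
    text: str
    parent_id: Optional[int] = None  # set for subcomments (replies to another comment)


def split_sentences(post: str) -> list[str]:
    # Naive splitter (assumption): break after ".", "!" or "?" followed by whitespace.
    return [s for s in re.split(r"(?<=[.!?])\s+", post.strip()) if s]


def margin_threads(post: str, comments: list[Comment]) -> dict[int, list[Comment]]:
    """Group top-level comments by the sentence they are attached to."""
    n_sentences = len(split_sentences(post))
    threads: dict[int, list[Comment]] = defaultdict(list)
    for c in comments:
        if c.parent_id is None and 0 <= c.sentence_index < n_sentences:
            threads[c.sentence_index].append(c)
    return dict(threads)


def replies_to(comment_id: int, comments: list[Comment]) -> list[Comment]:
    """Subcomments that reply directly to the given comment."""
    return [c for c in comments if c.parent_id == comment_id]


post = "Readers could opt in. Users could also hide the feature."
comments = [
    Comment(1, 0, "alice", "Opt-in seems right."),
    Comment(2, 0, "bob", "Agreed.", parent_id=1),
    Comment(3, 1, "carol", "Hiding is important for focus."),
]
threads = margin_threads(post, comments)
print({i: [c.id for c in cs] for i, cs in threads.items()})  # {0: [1], 1: [3]}
```

A reader who hides the feature would simply never request `margin_threads`; the post text itself is untouched.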

HaukeHillebrandt @ 2021-11-04T15:07 (+3)

https://web.hypothes.is/

Nathan Young @ 2021-11-03T15:59 (+2)

Yes.

Emrik @ 2021-10-08T02:41 (+18)

Forum suggestion: Option to publish your post as "anonymous" or blank, that then reverts to reveal your real forum name in a week.

This would be an opt-in feature that lets new and old authors gain less biased feedback on their posts, and lets readers read the posts with less of a bias from how they feel about the author.

At the moment, information cascades amplify the number of votes established authors get based on their reputation. This has both good (readers are more likely to read good posts) and bad (readers are less likely to read unusual perspectives, and good newbie authors have a harder time getting rewarded for their work) consequences. The anonymous posting feature would redistribute the benefits of cascades more evenly.

I don't think the net benefit is obvious in this case, but it could be worth exploring and testing.
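A minimal sketch of the reveal rule, assuming the delay is exactly one week and that the real name is shown automatically once it has passed. The function and constant names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

REVEAL_DELAY = timedelta(weeks=1)  # assumption: "a week", as suggested above


def displayed_author(real_name: str, opted_in: bool,
                     published_at: datetime, now: datetime) -> str:
    """Name shown on the post: anonymous during the delay, real name afterwards."""
    if opted_in and now < published_at + REVEAL_DELAY:
        return "Anonymous"
    return real_name


published = datetime(2021, 10, 8, 2, 41, tzinfo=timezone.utc)
print(displayed_author("Emrik", True, published, published + timedelta(days=3)))  # Anonymous
print(displayed_author("Emrik", True, published, published + timedelta(days=8)))  # Emrik
```

Keeping the real name on record and only masking it at display time means the reveal needs no separate step; it just happens when the clock passes the delay.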

Aaron Gertler @ 2021-10-13T08:32 (+3)

This is a feature we've been considering for a while! Thanks for sharing the idea and getting some upvotes as additional evidence.

I can't promise this will show up at any particular time, but it is a matter of active discussion, for the reasons you outlined and to give people a third option alongside publishing something on their own account and publishing on a second, pseudonymous account.

Emrik @ 2022-11-11T18:14 (+13)

The 'frontpage time window' is the duration a post remains on the frontpage. With the increasing popularity of the forum, this window becomes shorter and shorter, and it makes everyone scramble to participate in the latest discussions before the post disappears into irrelevancy.

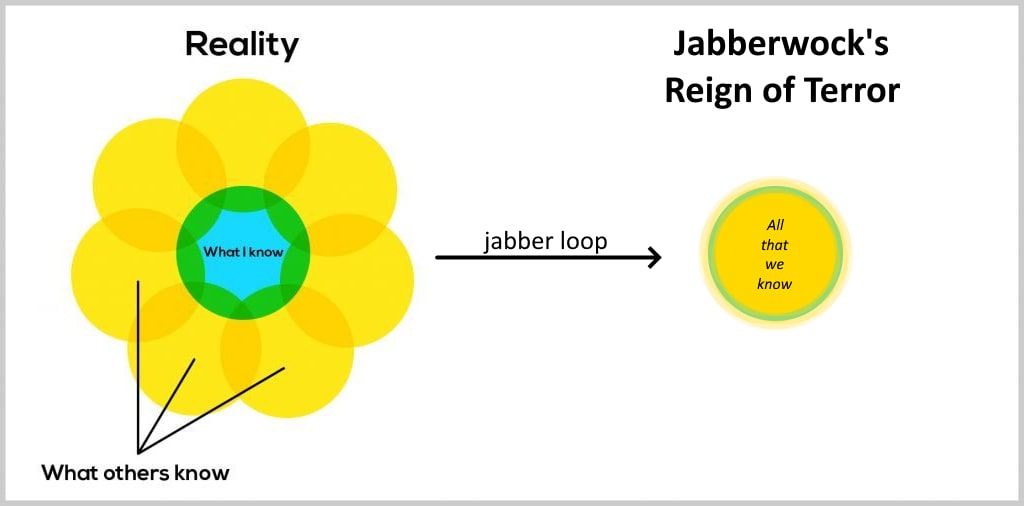

I call this dynamic the "jabber loop". As long as we fear being exposed as clueless about something, we're incentivised to read what we expect other people will have read, and what they're likely to bring up in conversation.

This seems suboptimal if it biases us towards only appreciating what's recent and crowds out the possibility of longer, more patient discussions. One solution to this could be spaced-repetition curated posts.[1]

When a post gets curated, that should indicate that it has some long-term value. So instead of (or in addition to) pinning it to the top of the frontpage, the star could indicate that this post will resurface on the frontpage at intervals that grow exponentially longer, so that it comes back less and less often (following the forgetting curve).[2][3]
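A minimal sketch of such a schedule, assuming the gap between resurfacings starts at one week and doubles each time (both numbers are arbitrary, hypothetical parameters). Real spaced-repetition systems usually adapt intervals per item based on feedback; this fixed geometric version only illustrates how resurfacing becomes exponentially rarer.

```python
from datetime import date, timedelta


def resurfacing_dates(curated_on: date, first_gap_days: int = 7,
                      growth: int = 2, count: int = 5) -> list[date]:
    """Dates on which a curated post would return to the frontpage.

    The gap between consecutive resurfacings is multiplied by `growth`
    each time, so the post comes back exponentially less often.
    """
    dates = []
    gap = first_gap_days
    current = curated_on
    for _ in range(count):
        current += timedelta(days=gap)
        dates.append(current)
        gap *= growth
    return dates


for d in resurfacing_dates(date(2022, 11, 11), count=4):
    print(d)
# prints 2022-11-18, 2022-12-02, 2022-12-30, 2023-02-24 (gaps of 7, 14, 28, 56 days)
```

Un-curating a post would just mean dropping its remaining dates.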

Some reasons this could be good

- It lets readers know that this discussion will resurface, so their contributions could also have lasting value. Comments are no longer write-and-forget, and you have a real chance of contributing something of long-term value.

- It efficiently[4] increases collective memory of the best contributions.

- It can help us scrutinise ideas that got inculcated as a fundamental assumption early on. We might uncover and dislodge some flawed assumptions that reached universal acceptance in the past due to information cascades.

- As we gain more information and experience over time, we might stand a better chance of discovering flaws in previously accepted premises. But unless there's something (like spaced-repetition curation) that brings the question back into public debate, we don't get to benefit from that increased capacity, and we may just be stuck with the beliefs that got accepted earlier.

- Given that there's a large bias toward discussing what's recent, combined with the fact that people are very reluctant to write up ideas that have already been written up before, we might be stuck with a bit of a paradox. If the effects were strong enough, we could theoretically be losing wisdom over time instead of accumulating it. This is especially likely since the movement is growing fast, and newcomers weren't here when a particular piece of wisdom was a hot topic the first time around.

[1] There are other creative ways the forum could use spaced repetition to enhance learning, guide attention, and maximise the positive impact of reminders at the lowest cost.

It could either be determined individually (e.g. personal flashcards similar to Orbit), collectively (e.g. determined by people upvoting it for 'long-term relevancy' or something), or centrally (e.g. by Lizka and other content moderators).

[2] Andy Matuschak, author of Quantum Country and Evergreen Notes, calls this a timefwl text. He also developed Orbit, a tool meant to help authors integrate flashcards into their educational writing. If the forum decided to do something like this, he might be eager to help facilitate it. Idk tho.

[3] Curators could still decide to un-curate a post if it's no longer relevant or they don't think the community benefits from retaining it in their epistemic memepool.

[4] I highly recommend Andy's notes on spaced repetition and learning in general.

Emrik @ 2022-10-18T18:38 (+13)

My heart longs to work more directly to help animals. I'm doing things that are meta-meta-meta-meta removed from actions that feel like they're actually helping anyone, all the while the globe is on moral fire. At times like this, I need to remind myself of Amdahl's Law and the Inventor's Paradox. And to reaffirm that I need to try to have faith in my own judgment of what is likely to produce good consequences, because no one else will be able to know all the relevant details about me, and also because otherwise I can't hope to learn.[1]

Amdahl's Law tells you the obvious thing that for a complex process, the maximum percentage speedup you can achieve by optimising one of its sub-processes is hard-limited by the fraction of time that sub-process takes up. It's loosely analogous to the idea that if you work on a specific direct cause, the maximum impact you can have is limited by the scale of that cause, whereas if you work on a cause that feeds into the direct cause, you have a larger theoretical limit (although that's far from a guarantee of larger impact in practice). I don't know a better term for this, so I'm using "Amdahl's Law".
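For reference, the standard form of Amdahl's Law: if a fraction $p$ of a process's runtime is sped up by a factor $s$, the overall speedup is $1 / ((1 - p) + p/s)$, which can never exceed $1/(1 - p)$ however large $s$ gets. A small sketch (the function name is my own):

```python
import math


def amdahl_speedup(p: float, s: float) -> float:
    """Overall speedup when a fraction p of the runtime is made s times faster."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if s <= 0:
        raise ValueError("s must be positive")
    new_runtime = (1.0 - p) + p / s  # runtime after optimising, relative to before
    return math.inf if new_runtime == 0 else 1.0 / new_runtime


print(amdahl_speedup(0.5, 2))         # 1.3333333333333333
print(amdahl_speedup(0.5, math.inf))  # 2.0, the hard limit for a sub-process using half the time
```

However much you speed up a sub-process that takes half the time, the whole thing never gets more than twice as fast.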

The Inventor's Paradox is the curious observation that when you're trying to solve a problem, it's often easier to try to solve a more general problem that includes the original as a consequence.[2]

Consider the problem of adding up all the numbers from 1 to 99. You could attack this by going through 99 steps of addition like so: $1 + 2 + 3 + \dots + 98 + 99$.

Or you could take a step back and look for more general problem-solving techniques. Ask yourself: how do you solve all 1-iterative addition problems (sums of consecutive integers)? You could rearrange the sum as:

$$(1 + 99) + (2 + 98) + \dots + (49 + 51) + 50 = 49 \times 100 + 50 = 4950$$

For a sequence of consecutive numbers, you can add the first number ($1$) to the last number ($99$), multiply the result by how many times you can pair them up like that ($49$), and if there's a single number left over ($50$), just add it at the end. As you'd expect, this general solution can solve the original problem, but also so much more, and it's easier to find (for some people) and compute than doing the 99 steps of addition manually.
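A small sketch contrasting the two approaches: step-by-step addition versus the pairing trick, generalised to any run of consecutive integers. The function names are my own.

```python
def sum_step_by_step(first: int, last: int) -> int:
    """Add the numbers one at a time, like doing the 99 additions by hand."""
    total = 0
    for k in range(first, last + 1):
        total += k
    return total


def sum_by_pairing(first: int, last: int) -> int:
    """Pair first with last, second with second-to-last, and so on."""
    if last < first:
        return 0  # empty range
    count = last - first + 1
    pairs, leftover = divmod(count, 2)
    # With an odd count, the unpaired middle number is the average of first and last.
    middle = (first + last) // 2 if leftover else 0
    return pairs * (first + last) + middle


print(sum_by_pairing(1, 99))    # 4950  (49 pairs of 100, plus 50)
print(sum_step_by_step(1, 99))  # 4950
```

The pairing version does a constant amount of work regardless of how long the range is, which is the "so much more" the general solution buys you.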

"The more ambitious plan may have more chances of success […] provided it is not based on a mere pretension but on some vision of the things beyond those immediately present." ‒ Pólya

[1] Self-confidence feels icky and narcissistic when I just want to be kind and gentle. But I know how important it is to cultivate self-confidence at almost any cost. And I don't want to selfishly do otherwise just because it's a better look. Woe is me, rite.

[2] Why and when the Inventor's Paradox works deserves deep investigation, in case you could benefit from the prompt. Lmk if you find anything interesting.

Emrik @ 2022-08-10T23:38 (+13)

Some selected comments or posts I've written

- Taxonomy of cheats, multiplex case analysis, worst-case alignment

- "You never make decisions, you only ever decide between strategies"

- My take on deference

- Dumb

- Quick reasons for bubbliness

- Against blind updates

- The Expert's Paradox, and the Funder's Paradox

- Isthmus patterns

- Jabber loop

- Paradox of Expert Opinion

- Rampant obvious errors

- Arbital - Absorbing barrier

- "Decoy prestige"

- "prestige gradient"

- Braindump and recommendations on coordination and institutional decision-making

- Social epistemology braindump (I no longer endorse most of this, but it has patterns)

Other posts I like

- The Goddess of Everything Else - Scott Alexander

- “The Goddess of Cancer created you; once you were hers, but no longer. Throughout the long years I was picking away at her power. Through long generations of suffering I chiseled and chiseled. Now finally nothing is left of the nature with which she imbued you. She never again will hold sway over you or your loved ones. I am the Goddess of Everything Else and my powers are devious and subtle. I won you by pieces and hence you will all be my children. You are no longer driven to multiply conquer and kill by your nature. Go forth and do everything else, till the end of all ages.”

- A Forum post can be short - Lizka

- Succinctly demonstrates how often people goodhart on length or other irrelevant criteria like effort moralisation. A culture of appreciating posts for the practical value they add to you specifically would incentivise writers to pay more attention to whether they are optimising for expected usefwlness or just signalling.

- Changing the world through slack & hobbies - Steven Byrnes

- Unsurprisingly, there's a theme to what kind of posts I like. Posts that are about de-Goodharting ourselves.

- Also ht Eliezer and Alex Lawsen for posts on the same thing.

- Hero Licensing - Eliezer Yudkowsky

- Stop apologising, just do the thing. People might ridicule you for believing in yourself, but just do the thing.

- A Sketch of Good Communication - Ben Pace

- Highlights the danger of deferring if you're trying to be an Explorer in an epistemic community.

- Holding a Program in One's Head - Paul Graham

- "A good programmer working intensively on his own code can hold it in his mind the way a mathematician holds a problem he's working on. Mathematicians don't answer questions by working them out on paper the way schoolchildren are taught to. They do more in their heads: they try to understand a problem space well enough that they can walk around it the way you can walk around the memory of the house you grew up in. At its best programming is the same. You hold the whole program in your head, and you can manipulate it at will.

That's particularly valuable at the start of a project, because initially the most important thing is to be able to change what you're doing. Not just to solve the problem in a different way, but to change the problem you're solving."

Emrik @ 2021-09-22T23:15 (+13)

Correct me if I'm wrong, but I think in Christianity, there's a lot of respect and positive affect for the "ordinary believer". Christians who identify as "ordinary Christians" feel good about themselves for that fact. You don't have to be among the brightest stars of the community in order to feel like you belong.

I think in EA, we're extremely kind, but we somehow have less of this. Like, unless you have 2 PhDs by the age of 25 and you're able to hold your own in a conversation about AI-alignment theory with the top researchers in the world... you sadly have to "settle" for menial labour with impact hardly worth talking about. I'm overstating it, of course, but am I wrong?

I'm not saying ambition is bad. I think shooting for the stars is a great way to learn your limits. But I also notice a lot of people suffering under intellectual pressure, and I think we could collectively be more effective (and just feel better) if we had more... room for "ordinary folk dignity"?

Aaron Gertler @ 2021-09-22T23:42 (+4)

My experience as a non-PhD who dropped out of EA things for two years before returning is that I felt welcome and accepted when I started showing up in EA spaces again. And now that I've been at CEA for three years, I still spend a lot of my time talking to and helping out people who are just getting started and don't have any great credentials or accomplishments; I hope that I'm not putting pressure on them when I do this.

That said, every person's experience is unique, and some people have certainly felt this kind of pressure, whether self-imposed as a result of perceived community norms or thrust upon them by people who were rude or dismissive at some point. And that's clearly awful — people shouldn't be made to feel this way in general, and it's especially galling to hear about it sometimes happening within EA.

My impression is that few of these rude or dismissive people are themselves highly invested in the community, but my impression may be skewed by the relationships I've built with various highly invested people in the job I now have.

Lots of people with pretty normal backgrounds have clearly had enormous impact (too many examples to list!). And within the EA spaces I frequent, there's a lot of interest and excitement about people sharing their stories of joining the movement, even if those people don't have any special credentials. The most prominent example of this might be Giving What We Can.

I don't understand the "menial labor" point; the most common jobs for people in the broader EA community are very white-collar (programmers, lawyers, teachers...). What did you mean by that?

Personally, the way I view "ordinary folk dignity" in EA is through something I call "the airplane test". If I sat next to someone on an airplane and saw them reading Doing Good Better, and they seemed excited about EA when I talked to them, I'd be very happy to have met them, even if they didn't have any special ambitions beyond finding a good charity and making occasional donations. There aren't many people in the world who share our unusual collection of values; every new person is precious.

Emrik @ 2021-09-23T00:32 (+12)

Nono, I'm not trying to point to a problem of EAs trying to make others feel unwelcome or dumb. I think EA is extremely kind, and almost universally tries hard to make people feel welcome. I'm just pointing to the existence of an unusually strong intellectual pressure, perhaps combined with lots of focus on world-saving heroes and talk about "what should talented people do?"

I think ambition is good, but I think we can find ways of encouraging ambition while also mitigating at least some of the debilitating intelligence-dysphoria many in our community suffer from.

I'm writing this in reaction to talking to three of my friends who suffer under the intellectual pressure they feel. (Note that the following are all about the intellectual pressure they get from EA, and not just in general due to academic life.)

Friend1: "EA makes me feel real dumb XD i think i feel out of place by being less intelligent"

_

Friend2: "I’m not worried that I’m not smart, but I am worried that I am not smart enough to meet a certain threshold that is required for me to do the things I want to do. ... I think I have very low odds of achieving things I deeply want to achieve. I think that is at least partially responsible for me being as extremely uncomfortable about my intelligence as I am, and not being able to snap out of it."

_

Me: "Do you ever refrain from trying to contribute intellectually because you worry about taking up more attention than it's worth?"

Friend3: "hmm, not really for that reason. because I'm afraid my contribution will be wrong or make me look stupid. wrong in a way that reflects negatively on me-- stupid errors, revealing intellectual or character weakness."

_

Some of this is a natural and unavoidable result of the large focus EA places on intellectual labour, but I think it's worse than it needs to be. I think some effort to instil some "ordinary EA dignity" into our culture wouldn't hurt. I might have a skewed sample, however.

Emrik @ 2021-09-23T00:39 (+3)

To respond to your question about what I meant by "menial labour": I was being poetic. I just mean that I feel like EA places a lot of focus on the very most high-status jobs, and I've heard friends despairing at having to "settle" for anything less. I sense that this type of writing might not be the norm for EA shortform, but I wasn't sure.

Emrik @ 2022-11-04T13:18 (+8)

- Should we be encouraging everyone to write everything in hierarchical notes?

- It seems better for reading comprehension.

- Faster to read.

- And once writers are used to the format, they'll find it easier to write out their thoughts.

- So are there any reasons, other than "we've got used to it", to think that linear writing is superior in any way?

- I put the question to you.

Emrik @ 2022-05-14T16:45 (+6)

(I no longer endorse this post.)

A way of reframing the idea of "we are no longer funding-constrained" is "we are bottlenecked by people who can find new cost-effective opportunities to spend money". If this is true, we should plausibly stop donating to funds that can't give out money fast enough anyway, and instead spend money on orgs/people/causes that we personally estimate need more money now. Maybe we should up-adjust how relevant we think personal information is to our altruistic spending decisions.

Is this right? And are there any good public summaries of the collective wisdom fund managers have acquired over the years? If we're bottlenecked by people who can find new giving opportunities, it would be great to promote the related skills. And I want to read them.

james.lucassen @ 2022-05-20T06:34 (+3)

Hey, I really like this re-framing! I'm not sure what you meant to say in the second and third sentences tho :/

Emrik @ 2022-05-21T05:45 (+1)

FWIW, I think personal information is very relevant to giving decisions, but I also think the meme "EA is no longer funding-constrained" perhaps lacks nuance that's especially relevant for people with values or perspectives that differ substantially from major funders.

Relevant: https://forum.effectivealtruism.org/posts/GFkzLx7uKSK8zaBE3/we-need-more-nuance-regarding-funding-gaps

Emrik @ 2022-12-02T13:50 (+5)

EA: We should never trust ourselves to do act utilitarianism, we must strictly abide by a set of virtuous principles so we don't go astray.

Also EA: It's ok to eat animals as long as you do other world-saving work. The effort and sacrifice it would take to relearn my eating patterns just isn't worth it on consequentialist grounds.

Sorry for the strawmanish meme format. I realise people have complex reasons for needing to navigate their lives the way they do, and I don't advocate aggressively trying to make other people stop eating animals. The point is just that I feel like the seemingly universal disavowal of utilitarian reasoning has been insufficiently vetted for consistency. If we claim that utilitarian reasoning can be blamed for the FTX catastrophe, then we should ask ourselves what else we should apply that lesson to; or we should recognise that FTX isn't a strong counterexample to utilitarianism, and we can still use it to make important decisions.

Emrik @ 2022-10-08T06:46 (+5)

I struggle with prioritising what to read. Additionally, but less of a problem, I struggle to motivate myself to read things. Some introspection:

The problem is that my mind desires to "have read" something more than desiring the state of "reading" it. Either because I imagine the prestige or self-satisfaction that comes with thinking "hehe, I read the thing," or because I actually desire the knowledge for its own sake, but I don't desire the attaining of it, I desire the having of it.[1]

Could I goodhart-hack this by rewarding myself for reading and feeling ashamed of myself for actually finishing a whole post? Probably not. I think perhaps my problem is that I'm always trying to cut the enemy, so I can't take my eyes off it for long enough to innocently experience the inherent joy of seeing interesting patterns. When I do feel the most joy, I'm usually descending unnecessarily deep into a rabbit hole.

"What are all the cell adhesion molecules, how are they synthesised, and is the synthesis bottlenecked by a particular nutrient I can supplement?!"

Nay, I think my larger problem is always having a million things that I really want to read, and I feel a desperate urge to go through all of them--yesterday at the latest! So when I do feel joy at the nice patterns I learn, I feel a quiet unease at the back of my mind calling me to finish this as soon as possible so we can start on the next urgent thing to read.

(The more I think about it, the more I realise just how annoying that constant impatient nagging is when I'm trying to read something. It's not intense, but it really diminishes the joy. While I do endorse impatience and always trying to cut the enemy, I'm very likely too impatient for my own good. On the margin, I'd make speedier progress with more slack.)

If this is correct, then maybe what I need to do is to--well, close all my tabs for a start--separate out the process of collecting from the process of reading. I'll make a rule: If I see a whole new thing that I want to read, I'm strictly forbidden to actually read it until at least a day has passed. If I'm already engaged in a particular question/topic, then I can seek out and read information about it, but I can only start on new topics if it's in my collection from at least a day ago.

I'm probably intuitively overestimating a new thing's value relative to the things in my collections anyway, just because it feels more novel. If instead I only read things from my collection, I'll gradually build up an enthusiasm for it that can compete with my old enthusiasm for aimless novelty--especially as I experience my new process outperforming my old.

My enthusiasm for "read all the newly-discovered things!" is not necessarily the optimal way to experience the most enthusiasm for reading, it's just stuck in a myopic equilibrium I can beat with a little activation energy.
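The collect-then-read rule is essentially a queue with a cooling-off period. A minimal sketch, assuming items are identified by URL and become eligible once a full day has passed; the class and method names are hypothetical, and the exception for topics I'm already engaged in isn't modelled.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

COOLING_OFF = timedelta(days=1)  # "at least a day has passed"


@dataclass
class ReadingQueue:
    # Hypothetical store: URL -> when it was first collected.
    collected_at: dict[str, datetime] = field(default_factory=dict)

    def collect(self, url: str, now: datetime) -> None:
        """Save something new without reading it; re-collecting keeps the original time."""
        self.collected_at.setdefault(url, now)

    def readable(self, now: datetime) -> list[str]:
        """Items past the cooling-off period, oldest first."""
        ready = [(t, url) for url, t in self.collected_at.items() if now - t >= COOLING_OFF]
        return [url for _, url in sorted(ready)]

    def mark_read(self, url: str) -> None:
        self.collected_at.pop(url, None)


q = ReadingQueue()
t0 = datetime(2022, 10, 8, 9, 0)
q.collect("https://example.com/a", t0)
q.collect("https://example.com/b", t0 + timedelta(hours=12))
print(q.readable(t0 + timedelta(hours=25)))  # ['https://example.com/a']
```

Keeping the first timestamp on re-collection means the one-day wait can't be reset just by stumbling on the same thing again.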

[1] What this ends up looking like is frantically skimming through the paper until I find the patterns I'm looking for, and I get so frustrated when I can't immediately find them that the experience ends up being unpleasant.

John Bridge @ 2022-10-08T15:26 (+1)

Not sure if you've already tried it, but I find Pocket and Audible really help with this. It means I can just pop it on my headphones whenever I'm walking anywhere without needing to sit down and decide to read it.

Cuts back on the activation energy, which in turn increases how much I 'read'.

John Bridge @ 2022-10-08T15:27 (+3)

I should clarify - by pocketing stuff, it ends up in the automatic queue for things to read. That way, I don't really have to think about what to read next, and the things I want to read just pop up anyway.

Emrik @ 2022-10-08T16:56 (+2)

Oh, good point. Thank you. I should find an easy way of efficiently ranking/sorting my collection of things to read. If I have to look at the list and prioritise right before I start reading, that again could perhaps cause me to think "ok, I need to finish this quick so I can get to the next thing."

Emrik @ 2022-08-24T11:36 (+4)

If you agree with the claim that the most valuable aspect of EAG is that you get to have 1-on-1s with a lot of people, then what's the difference between that and just reaching out to people (e.g. via the forum) to schedule a call with them? Just help them say no if they don't want to, and you're not inoculating against possible future relationships. Make your invitation brief and you're providing them with an option without wasting their time in case they don't want to.

Feel free to invite me to talk. I'll have an easy time saying no if I'm too busy or don't want to. You're just providing me with the option.

Emrik @ 2022-10-29T20:44 (+3)

The Cautiously Compliant Superintelligence

Suppose transformative AI ends up extremely intelligent and reasonably aligned--not so that it cares about 100% of "our values", whatever that means, but in such a way as to comply with our intentions while limiting side-effects. The way we've aligned it, it just refuses to try to do anything with tangible near-term consequences that we didn't explicitly intend.[1]

Any failure-modes that arise as a consequence of using this system, we readily blame ourselves for. If we tried to tell it "fix the world!" it would just honestly say that it wouldn't know how to do that, because it can't solve moral philosophy for us. We can ask it to do things, but we can't ask it what it thinks we would like to ask it to do.

Such an AI would act as an amplifier on what we intend, but we might not intend the wisest things. So even if it fully understands and complies with our intentions, we're still prone to long-term disasters we can't foresee.

This kind of AI seems like a plausible endpoint for alignment. It may just forever remain unfeasible to "encode human morality into an AI" or "adequately define boundary conditions for human morality", so the rest is up to us.

If this scenario occupies a decent chunk of our probability mass, then these things seem high priority:

- Figure out what we would like to ask such an AI to do, that we won't regret come a hundred years later. Lots of civilizational outcomes are path-dependent, and we could permanently lock ourselves out of better outcomes if we try to become interstellar too early.

(It could still help us with surveillance technologies, and thereby assist us in preventing the development of other AIs or existentially dangerous technologies.)

- Space colonisation is now super easy, technologically speaking, but we might still wish to postpone it in order to give us time to reflect on what foundational institutions we'd like to start space governance out with. We'd want strong political institutions and treaties in place to make sure that no country is allowed to settle any other planet until some specified time.

- We'd want to make sure we have adequate countermeasures against Malthusian forces before we start spreading. We want to have some sense of what an adequate civilization in a stable equilibrium looks like, and what institutions need to be in place in order to make sure we can keep it going for millions of years before we even start spreading our current system.

Spreading our current civilization throughout the stars might pose an S-risk (and certainly an x-risk) unless we have robust mechanisms for keeping Malthus (and Moloch more generally) in check.

- Given this scenario, if we don't figure out how to do these things before the era of transformative AI, then TAI will just massively speed up technological advancement with no time for reflection. And countries will defect to grab planetary real-estate before we have stable space governance norms in place.

[1] It's corrigible, but does not influence us via auto-induced distributional shift to make it easier to comply with what we ask it.

Emrik @ 2022-08-27T22:54 (+3)

I worry about the increasing emphasis on personal gains from altruism. Sure, it's important to take care of yourself, and a large portion of us need to hear that more. Sure, the way we "take care of ourselves" needn't be demanding that charities pay us market-rate wages for our skills, and it needn't be taking expensive vacations with abandon, etc.

Mostly it's not the money that worries me. I think the greater danger is deeper, and is about what we optimise for: the criteria by which our brains search for and select actions to make conscious and pull up into our working memory. The greater the number of unaligned criteria we mix into our search process, the (steeply) lower the probability that our brains will select-into-consciousness actions that score high on aligned criteria. There are important reasons to keep needle-sharp focus on doing what actually optimises for our values.

Kirsten @ 2022-08-27T23:09 (+3)

It's not new to have multiple goals! See for example Julia Wise's post "You have more than one goal and that's fine" or her earlier post "Cheerfully".

http://www.givinggladly.com/2019/02/you-have-more-than-one-goal-and-thats.html?m=1

Emrik @ 2024-05-27T19:06 (+2)

If evolutionary biology metaphors for social epistemology are your cup of tea, you may find this discussion I had with ChatGPT interesting. 🍵

(Also, sorry for not optimizing this; but I rarely find time to write anything publishable, so I thought just sharing as-is was better than not sharing at all. I recommend the footnotes btw!)

Glossary/metaphors

- Howea palm trees ↦ EA community

- Wind-pollination ↦ "panmictic communication"

- Sympatric speciation ↦ horizontal segmentation

- Ecological niches ↦ "epistemic niches"

- Inbreeding depression ↦ echo chambers

- Outbreeding depression (and Baker's law) ↦ "Zollman-like effects"

- At least sorta. There's a host of mechanisms mostly sharing the same domain and effects with the more precisely-defined Zollman effect, and I'm saying "Zollman-like" to refer to the group of them. Probably I should find a better word.

Background

Once upon a time, the ancestral population of the palm trees Howea forsteriana and Howea belmoreana on Lord Howe Island would pollinate each other more or less uniformly during each flowering cycle. This was "panmictic" because everybody was equally likely to mix with everybody else.

Then there came a day when the counterfactual descendants had had enough. Due to varying soil profiles on the island, they all had to compromise between fitness for each soil type—or purely specialize in one and accept the loss of all seeds which landed on the wrong soil. "This seems inefficient," one of them observed. A few of them nodded in agreement and conspired to gradually desynchronize their flowering intervals from their conspecifics, so that they would primarily pollinate each other rather than having to uniformly mix with everybody. They had created a cline.

And a cline, once established, permits the gene pools of the assortatively-pollinating palms to further specialize toward different mesa-niches within their original meta-niche. Given that a crossbreed between palms adapted for different soil types is going to be less adaptive for either niche,[1] you have a positive feedback cycle where they increasingly desynchronize (to minimize crossbreeding) and increasingly specialize. Solve for the general equilibrium and you get sympatric speciation.[2]

Notice that their freedom to specialize toward their respective mesa-niches is proportional to their reproductive isolation (or inversely proportional to the gene flow between them). The more panmictic they are, the more selection-pressure there is on them to retain 1) genetic performance across the population-weighted distribution of all the mesa-niches in the environment, and 2) cross-compatibility with the entire population (since you can't choose your mates if you're a wind-pollinating palm tree).[3]

From evo bio to socioepistemology

I love this as a metaphor for social epistemology, and for the potential detrimental effects of "panmictic communication". It's sorta related to the Zollman effect, but more general. If you have an epistemic community that is trying to grow knowledge about a range of different "epistemic niches", then widespread pollination (communication) is obviously good: it protects against e.g. inbreeding depression of local subgroups (echo chambers, groupthink, etc.), it lets researchers coordinate to avoid redundant work, and ideas tend to inspire other ideas. But it can also be detrimental, because researchers who try to keep up with the ideas and technical jargon being developed across the community (especially everything that becomes a "hot topic") will have less time and relative curiosity to specialize in their focus area ("outbreeding depression").

A particularly good example of this is the effective altruism community. Given that they aspire to prioritize between all the world's problems, and due to the very high-dimensional search space generalized altruism implies, and due to how tight-knit the community's discussion fora are (the EA forum, LessWrong, EAGs, etc.), they tend to learn about an extremely wide range of topics. I think this is awesome, and usually produces better results than narrow academic fields, but nonetheless there's a tradeoff here.

The rather untargeted gene-flow implied by wind-pollination is a good match for the mostly-online meme-flow of the EA community. You might think that EAs will adequately speciate and evolve toward subniches due to the intractability of keeping up with everything, and indeed there are many subcommunities that branch into different focus areas. But if you take into account cognitive biases, the constant desire people have to be *relevant* to the largest audience they can find (preferential attachment wrt hot topics), fear-of-missing-out, and fear of being "caught unaware" of some newly-developed jargon (causing people to spend time learning everything that risks being mentioned in live conversations[4]), it seems likely that they could benefit from smarter and more fractal ways to specialize their niches. Part of that may involve more "horizontally segmented" communication.

Tagging @Holly_Elmore because evobio metaphors are definitely your cup of tea, and a lot of it is inspired by stuff I first learned from you. Thanks! : )

- ^

Think of it like... if you're programming something based on the assumption that it will run on Linux xor Windows, it's gonna be much easier to reach a given level of quality compared to if you require it to be cross-compatible.

- ^

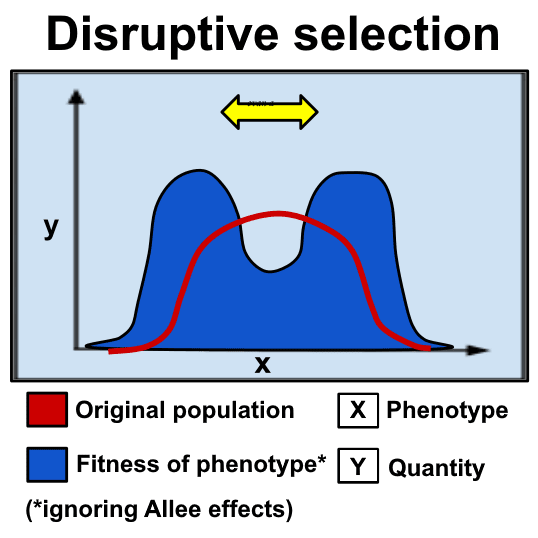

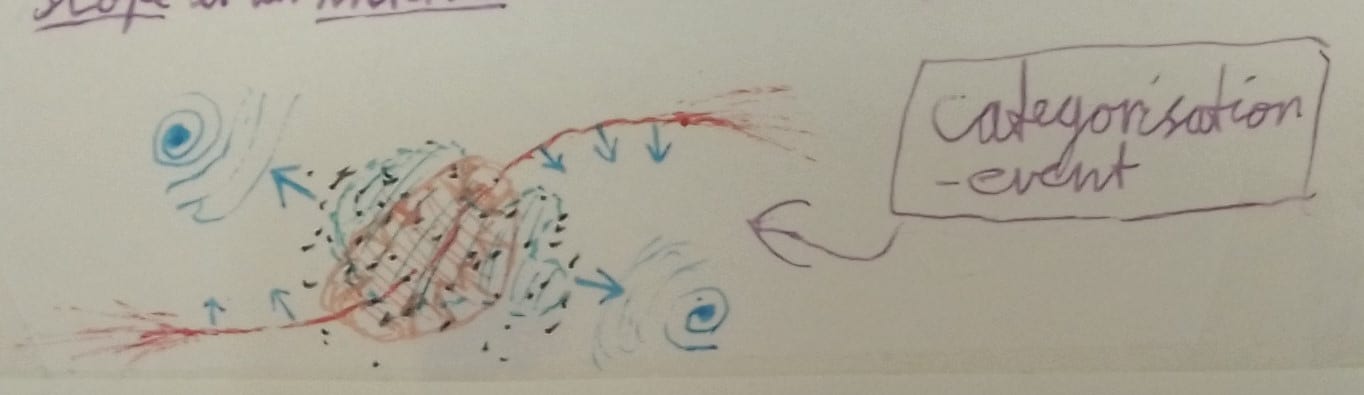

Sympatric speciation is rare because the pressure to be compatible with your conspecifics is usually quite high (Allee effects ↦ network effects). But it is still possible once selection-pressures from "disruptive selection" exceed the "heritage threshold" relative to each mesa-niche.[5]

- ^

This homogenization of evolutionary selection-pressures is akin to markets converging to an equilibrium price. It too depends on panmixia of customers and sellers for a given product. If customers are able to buy from anybody anywhere, differential pricing (i.e. trying to sell your product at above or below equilibrium price for a subgroup of customers) becomes impossible.

- ^

This is also known (by me and at least one other person...) as the "jabber loop":

This highlights the utter absurdity of being afraid of having our ignorance exposed, and going 'round judging each other for what we don't know. If we all worry overmuch about what we don't know, we'll all get stuck reading and talking about stuff in the Jabber loop. The more of our collective time we give to the Jabber loop, the more unusual it will be to be ignorant of what's in there, which means the social punishments for Jabber-ignorance will get even harsher.

- ^

To take this up a notch: sympatric speciation occurs when a cline in the population extends across a separatrix (red) in the dynamic landscape, and the attractors (blue) on each side overpower the cohering forces from Allee effects (orange). This is the doodle I drew on a post-it note to illustrate that pattern in a different context:

I dub him the mascot of bullshit-math. Isn't he pretty?
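The separatrix picture can be sketched as a toy dynamical system (entirely hypothetical, not the doodle's actual maths): two subpopulations with trait values x1 and x2 each slide toward the nearest attractor, +1 or -1, of the double-well force x - x³, whose separatrix sits at 0, while a coupling term c·(other - self) stands in for the cohering forces (gene flow, Allee effects). Starting from a cline that straddles the separatrix, weak coupling should let the two halves fall into different basins, and strong coupling should drag them into the same one.

```python
def simulate(c: float, x1: float = 0.15, x2: float = -0.05,
             dt: float = 0.01, steps: int = 5000) -> tuple[float, float]:
    """Euler-integrate two coupled subpopulations in a double-well landscape.

    Force x - x**3: attractors at +1 and -1, separatrix at 0.
    Coupling c * (other - self): hypothetical stand-in for cohering forces.
    """
    for _ in range(steps):
        dx1 = x1 - x1**3 + c * (x2 - x1)
        dx2 = x2 - x2**3 + c * (x1 - x2)
        x1, x2 = x1 + dt * dx1, x2 + dt * dx2
    return x1, x2


# The starting "cline" (0.15, -0.05) straddles the separatrix at 0.
print(simulate(c=0.01))  # weak cohesion: halves end up near +1 and -1 (speciation)
print(simulate(c=1.0))   # strong cohesion: both end up near +1 together
```

In the symmetric-difference direction the coupling acts like an extra restoring force of strength 2c, so in this toy the halves can only split when the attractors' pull beats it (roughly c < 0.5 near the separatrix).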

Emrik @ 2024-05-27T19:37 (+2)

And a follow-up on why I encourage the use of jargon.

- Mutation-rate ↦ "jargon-rate"

- I tend to deliberately use jargon-dense language because I think that's usually a good thing. Something we discuss in the chat.

- I also just personally seem to learn much faster by reading jargon-dense stuff.

- As long as the jargon is apt, it highlights the importance of a concept ("oh, it's so generally-applicable that it's got a name of its own?").

- If it's a new idea expressed in normal words, the meaning may (ill-advisedly) snap into some old framework I have, and I fail to notice that there's something new to grok about it. Otoh, if it's a new word, I'll definitely notice when I don't know it.

- I prefer a jargon-dump which forces me to look things up, compared to fluent text where I can't quickly scan for things I don't already know.

- I don't feel the need to understand everything in a text in order to benefit from it. If I'm reading something with a 100% hit-rate wrt what I manage to understand, that's not gonna translate to a very high learning-rate.

To clarify: By "jargon" I didn't mean to imply anything negative. I just mean "new words for concepts". They're often the most significant mutations in the meme pool, and are necessary to make progress. If anything, the EA community should consider upping the rate at which they invent jargon, to facilitate specialization of concepts and put existing terms (competing over the same niches) under more selection-pressure.

I suspect the problem people have with jargon is mostly that they are *unable* to change it even when it's anti-helpfwl. So they get the sense that "darn, these jargonisms are bad, but they're stuck in social equilibrium, so I can't change them; it would be better if we hadn't created them in the first place." The conclusion is premature, however, since you can improve things either by disincentivizing the creation of bad jargon, *or* by increasing people's willingness to create new terms, so that bad terms get replaced at a higher rate.

That said, if people still insist on trying to learn all the jargon created everywhere because they'll feel embarrassed being caught unaware, increasing the jargon-rate could cause problems (including spending too much time on the forum!). But, again, this is a problem largely caused by impostor syndrome, and pluralistic ignorance/overestimation about how much their peers know. The appropriate solution isn't to reduce memetic mutation-rate, but rather to make people feel safer revealing their ignorance (and thereby also increasing the rate of learning-opportunities).

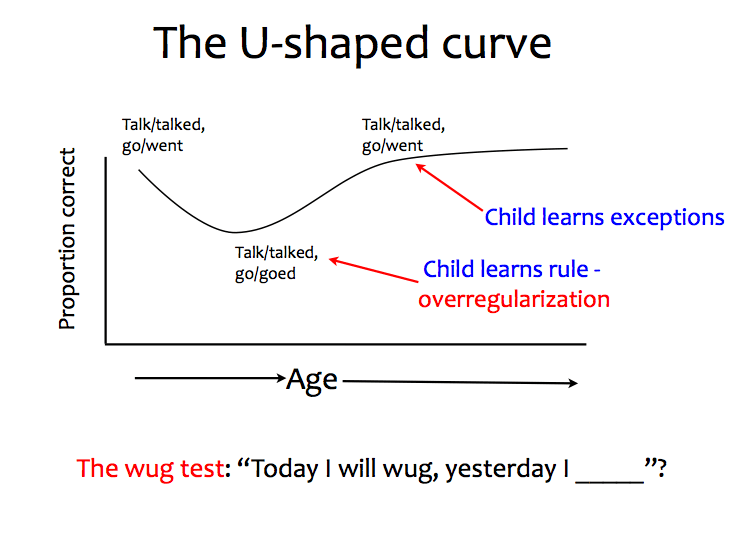

Naive solutions like "let's reduce jargon" are based on partial-equilibrium analysis. It can be compared to a "second-best theory" which is only good on the margin because the system is stuck in a local optimum and people aren't searching for solutions which require U-shaped jumps[1] (slack) or changing multiple variables at once. And as always when you optimize complex social problems (or manually nudge conditions on partial differential equations): "solve for the general equilibrium".

- ^

A "U-shaped jump" is required for everything with activation costs/switching costs.

Emrik @ 2022-11-14T13:22 (+2)

I made an entry to Arbital on absorbing barriers to test it out, copied below. Sorta want to bring Arbital back (with some tweaks), or implement something similar with the tags on the EA forum. It's essentially a collaborative knowledge net, and it could have massive potential if people were taught how to benefit from and contribute to it.

When playing a game that involves making bets, avoid naïvely calculating expected utilities without taking the expected cost of absorbing barriers into account.

An absorbing barrier in a dynamical system is a state in possibility space from which the system can never return.

It's a term popularized by Taleb (it originates in the theory of random walks), and the canonical example is when you're playing poker and you've lost too much to keep playing. You're out of the game.

- In longtermism, the absorbing barrier could be extinction or a dystopian lock-in.

- In the St. Petersburg Paradox, the absorbing barrier is the first lost bet.

- In conservation biology, the extinction threshold of a species is an absorbing barrier: once a parameter (e.g. population size) dips below a critical value, the population can no longer reproduce fast enough to replace its death rate, leading to gradual extinction.

- In evolutionary biology, the error threshold is the mutation rate above which DNA loses so much information between generations that beneficial mutations cannot reach fixation (stability in the population). In the figure below, the model shows the proportion of the population carrying a beneficial hereditary sequence as a function of the mutation rate. The sequence only reaches fixation when the mutation rate (1-Q) is below about 0.05. This either prevents organisms from evolving in the first place or, when the mutation rate suddenly increases (e.g. due to environmental radiation), may constitute an extinction threshold.

A system is said to exhibit a Lindy effect if its expected remaining lifespan is proportional to its age. The term also describes a process where the distance from an absorbing barrier increases over time. If a system recursively accumulates robustness to extinction, it is said to have Lindy longevity.

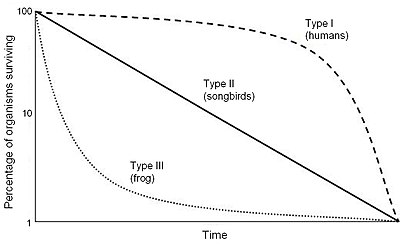

- In biology, offspring of r-selected species (Type III) usually exhibit a Lindy effect as they become better adapted to the environment over time.

- In social dynamics, the longer a social norm has persisted, the longer it's likely to stick around.

- In programming, the longer a poor design choice at the start has persisted, the longer it's likely to remain. This may happen due to build-up of technical debt (eg. more and more other modules depend on the original design choice), making it harder to refactor the system to accommodate a better replacement.

- In relationships, sometimes the longer a lie or an omission has persisted, the more reluctant its originator is likely to be to correct it. Lies may increase in robustness over time because they get entangled with other lies that have to be maintained in order to protect the first lie, further increasing the reputational cost of correction.
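One standard way to get a Lindy effect is a heavy-tailed (Pareto) lifetime distribution: with shape α > 1 and minimum lifetime 1, the expected remaining life at age t ≥ 1 is t/(α - 1), i.e. proportional to age. Here's a Monte Carlo sketch checking that, contrasted with a memoryless exponential lifetime whose expected remaining life doesn't depend on age at all. The choice of α = 3 and the sample sizes are arbitrary assumptions.

```python
import random
from typing import Callable


def pareto_life(rng: random.Random) -> float:
    return rng.paretovariate(3.0)  # heavy-tailed lifetime: shape alpha = 3, minimum 1


def exponential_life(rng: random.Random) -> float:
    return rng.expovariate(1.0)  # memoryless lifetime with mean 1


def mean_remaining_life(age: float, draw: Callable[[random.Random], float],
                        n: int = 200_000, seed: int = 0) -> float:
    """Monte Carlo estimate of E[lifetime - age | lifetime > age]."""
    rng = random.Random(seed)
    remaining = [life - age for life in (draw(rng) for _ in range(n)) if life > age]
    return sum(remaining) / len(remaining)


for age in (2.0, 4.0):
    # Pareto: theory says age / (alpha - 1) = age / 2; exponential: always 1.
    print(age, mean_remaining_life(age, pareto_life), mean_remaining_life(age, exponential_life))
```

The Pareto column should roughly double as age doubles (about 1 at age 2, about 2 at age 4), while the exponential column stays near 1.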

When playing a game (in the maximally general sense of the term) that you'd like to keep playing for a very long time, avoid naïvely calculating expected utilities without taking the expected cost of absorbing barriers into account. Aim for strategies that take you closer to the realm of Lindy longevity.
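Here's a minimal sketch of why naive expected-utility maths misleads near an absorbing barrier. The game is hypothetical: each round, with probability 0.95 your wealth grows by 20%, otherwise it drops to 0 and you're out for good. Expected wealth grows 14% per round, so a naive calculation says to keep playing forever, yet the probability of still being in the game after n rounds is only 0.95ⁿ.

```python
import random

P_WIN = 0.95  # hypothetical: chance that a round goes well
GAIN = 0.20   # hypothetical: a good round grows wealth by 20%
# A bad round (probability 1 - P_WIN) sends wealth to 0: the absorbing barrier.


def expected_wealth(rounds: int, start: float = 1.0) -> float:
    """Naive expectation: each round multiplies expected wealth by P_WIN * (1 + GAIN) = 1.14."""
    return start * (P_WIN * (1 + GAIN)) ** rounds


def survival_probability(rounds: int) -> float:
    """Probability of never hitting the barrier in `rounds` consecutive rounds."""
    return P_WIN ** rounds


def fraction_ruined(rounds: int, players: int = 10_000, seed: int = 0) -> float:
    """Monte Carlo share of players who have hit the barrier within `rounds`."""
    rng = random.Random(seed)
    ruined = sum(
        any(rng.random() >= P_WIN for _round in range(rounds))  # True once any round is lost
        for _player in range(players)
    )
    return ruined / players


for rounds in (10, 100):
    print(rounds, expected_wealth(rounds), survival_probability(rounds), fraction_ruined(rounds))
```

After 100 rounds, expected wealth is in the hundreds of thousands of starting units while only about 0.6% of players are still in the game: nearly all the expected value sits in a vanishingly small set of lucky paths.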

Emrik @ 2022-11-06T01:50 (+2)

This post is perhaps the most important thing I've read on the EA forum. (Update: Ok, I'm less optimistic now, but still seems very promising.)

The main argument that I updated on was this:

Multiplier effects: Delaying timelines by 1 year gives the entire alignment community an extra year to solve the problem.

In other words, if I am capable of doing an average amount of alignment work $W$ per unit time, and I have $T$ units of time available before the development of transformative AI, I will have contributed $W \cdot T$ work. But if I expect to delay transformative AI by $D$ units of time if I focus on it, everyone will have that additional time to do alignment work, which means my impact is $D \cdot N \cdot W$, where $N$ is the number of people doing work. Naively then, if $D \cdot N > T$, I should be focusing on buying time.[1]

This analysis further favours time-buying if the total amount of work per unit time accelerates, which is plausibly the case if e.g. the alignment community increases over time.
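A minimal sketch of the naive comparison, using W for work per person per unit time, T for the time left before transformative AI, D for the delay bought and N for the number of people doing alignment work (these symbol names are my own labels). Direct work yields W·T and time-buying yields D·N·W, so time-buying wins when D·N > T. The `growth` parameter is a hypothetical extension for the "community grows over time" point: the extra years arrive after year T, when headcount is larger. The numbers are made up for illustration.

```python
def direct_work(w: float, t: float) -> float:
    """Work I contribute myself: w units per unit time for t units of time."""
    return w * t


def time_buying_work(w: float, d: float, n: float, growth: float = 0.0, t: float = 0.0) -> float:
    """Extra work the whole community does in the d units of time my delay buys.

    Naive model: n people each doing w per unit time, so the gain is w * d * n.
    Hypothetical extension: if headcount grows at rate `growth` per unit time, the
    extra time is appended after time t, when there are n * (1 + growth) ** t people.
    """
    return w * d * n * (1 + growth) ** t


W, T, D, N = 1.0, 10.0, 0.5, 300  # hypothetical numbers, not from the post
print(direct_work(W, T), time_buying_work(W, D, N))  # → 10.0 150.0
```

Here D·N = 150 > T = 10, so the naive rule says to buy time; adding `growth > 0` only widens the gap, which matches the point that an accelerating community favours time-buying further.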

- ^

This assumes time-buying and direct alignment work are independent, whereas I expect doing either will help with the other to some extent.

Emrik @ 2022-10-20T06:29 (+2)

Introspective update: I seem to have such unusual rules for how I act and respond to things that I feel hopeless about my intentions ever actually being understood, so I kinda just give up optimising at all and accept the inevitable misunderstandings. This is bad, because I can probably optimise it more on the margin even if I can't be fully understood. I should probably tone it down and behave slightly closer to status quo.

Not expecting this to clear anything up, but the cheap-to-compute rules are downstream of three hard-to-compute principles:

A) Act as if you believed social norms were marginally more optimal than they already are.

Bad social norms are stuck in inadequate equilibria, and even if everyone sorta-knew the norms were suboptimal, it would take courage or coordination to break out of them. But the more of us are courageously willing to pretend that we already have the norms we want, the more we're pushing the frontier and preparing for a phase shift.

For example, the idea that you shouldn't brag or do things that make it seem like you think you're better than anyone is really pernicious, so I try to act as if no one agreed with this. This also means that I try to avoid constantly making self-protective disclaimers for when I act in ways that can be perceived as hubris, because the disclaimers usually just reinforce the norm I'm trying to defeat.

B) Act according to how you want other people to expect other people to expect you to act.

This goes to recursion level 2 or 3 depending on the situation. Norms are held in place by common knowledge and recursive expectation. If I'm considering pushing for some norm, but I can't even *imagine* a world in which people stably expect other people to expect me to behave that way, then that norm will never reach fixation in the community and I should look for something else.

C) Imagine an optimal and coherent model/aesthetic of your own character, and act according to how you expect that model would act.

I call it the "SVT-SPT loop". We infer our intrinsic character based on how we behave (self-perception theory), and we behave according to what we believe our intrinsic character is (self-verification theory).

This is about optimising how I act in social situations in order to shape my own personality into something that I can stably inhabit and approve of. It's kinda self-centered, because it often trades off against what it would be best for me to do for others, which means I'm at least partially optimising for my own interests instead of theirs.

Emrik @ 2022-09-01T04:54 (+2)

I notice (with unequivocal happiness) that EA engagement has grown a lot lately, and I wonder to what extent our new aspiring do-gooders are familiar with core concepts of rationality. On my model, rationality makes EA what it is, and we have almost no power to change the world without it. If the Sequences seem "too old" to be attractive to newcomers (is that the case?), maybe it could be extremely valuable to try to distil the essence and post it anew. Experimenting with distilling and tweaking the aesthetics in order to appeal to a different share of the market seems extremely valuable regardless, but the promising wave of newcomers makes a stronger case for it.

(Apparently there's this.)

Emrik @ 2022-08-21T10:51 (+2)

Hmm, I'm noticing that a surprisingly large portion of my recent creative progress can be traced back to a single "isthmus" (a key pattern that helps you connect many other patterns). It's the trigger-action-plan of

IF you see an interesting pattern that doesn't have a name

THEN invent a new word and make a flashcard for it

This may not sound like much, and it wouldn't to me either if I hadn't seen it make a profound difference.

Interesting patterns are powerups, and if you just go "huh, that's interesting" and then move on with your life, you're totally wasting their potential. Making a name for it makes it much more likely that you'll be able to spontaneously see the pattern elsewhere (isthmus-passing insights). And making a flashcard for it makes sure you access it when you have different distributions of activation levels over other ideas, making it more likely that you'll end up making synthetic (isthmus-centered) insights between them. (For this reason, I'm also strongly against the idea of dissuading people from using jargon as long as the jargon makes sense. I think people should use more jargon, even if it seems embarrassingly supercilious and perhaps intimidating to outsiders).

Emrik @ 2022-08-16T21:03 (+1)

I wonder to what extent (I know it's not the full extent) people struggle with concentration because they're trying to read things in a suboptimal manner and actually doing things that are pointless (e.g. having to do pointless things at school or your job, like writing theses or grant applications). And as for reading, people seem to think they have to read things word-by-word, and from start to finish. What a silly thing to do. Read the parts related to what you're looking for, and start skimming paragraphs when you notice the insight-density dropping. Don't Goodhart on the feeling of accomplishment associated with "having read" something.

My number one productivity tip: Quit your job, quit school, take drugs (preferably antidepressants and ADHD meds), and do whatever you want. If you want to do good things, this is probably the best you can do with your life, as long as you can convince people to give you sufficient money to live while you're doing it.

Dave Cortright @ 2022-08-19T16:25 (+3)

I'm a big proponent of Universal Basic Income. If people don't have to spend a significant amount of time worrying about satisfying their bottom two levels in the Maslow Hierarchy, it frees them up to do some pretty amazing things.

Emrik @ 2022-08-19T16:53 (+1)

Yes! Although I go further: I'm in favour of EA tenure. If people don't have to satisfy their superiors, it frees them up to do exactly what they think is optimal to do--and this is much more usefwl than people realise.