AISN #53: An Open Letter Attempts to Block OpenAI Restructuring

By Center for AI Safety, Corin Katzke, Dan H @ 2025-04-29T15:56

This is a linkpost to https://newsletter.safe.ai/p/an-open-letter-attempts-to-block

Welcome to the AI Safety Newsletter by the Center for AI Safety. We discuss developments in AI and AI safety. No technical background required.

In this edition: Experts and ex-employees urge the Attorneys General of California and Delaware to block OpenAI’s for-profit restructure; CAIS announces the winners of its safety benchmarking competition.

Listen to the AI Safety Newsletter for free on Spotify or Apple Podcasts.

Subscribe to receive future versions.

An Open Letter Attempts to Block OpenAI Restructuring

A group of former OpenAI employees and independent experts published an open letter urging the Attorneys General (AGs) of California (where OpenAI operates) and Delaware (where OpenAI is incorporated) to block OpenAI’s planned restructuring into a for-profit entity. The letter argues the move would fundamentally undermine the organization's charitable mission by jeopardizing the governance safeguards designed to protect control over AGI from profit motives.

OpenAI was founded with the charitable purpose of ensuring that artificial general intelligence benefits all of humanity. OpenAI’s original nonprofit structure, and later its capped-profit model, were designed to constrain profit motives in the development of AGI, which OpenAI defines as "highly autonomous systems that outperform humans at most economically valuable work." The structure was meant to keep profit motives from pushing OpenAI toward risky development decisions and from diverting much of the wealth produced by AGI to private shareholders.

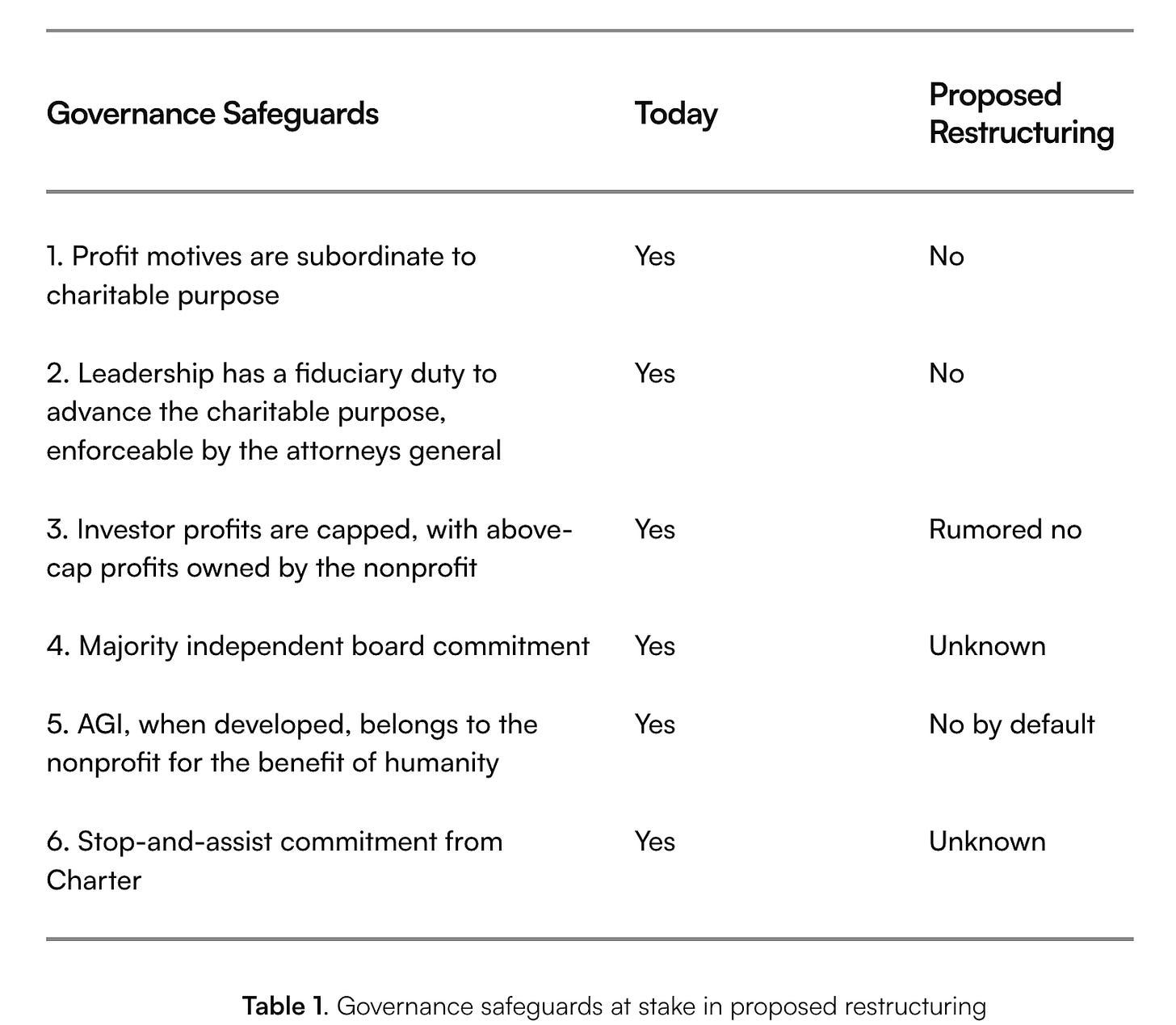

The letter argues that the proposed restructuring into a Public Benefit Corporation (PBC) would dismantle the governance safeguards OpenAI originally championed. It highlights that the restructuring would transfer control away from the nonprofit entity, whose primary fiduciary duty is to humanity, to a for-profit board whose directors would be partly beholden to shareholder interests. The authors detail several specific safeguards currently in place that would be undermined or eliminated:

- Subordination of Profit Motives: Currently, the nonprofit's mission takes precedence over any obligation to generate profit for OpenAI’s for-profit subsidiary. A PBC structure, however, would require balancing the public benefit mission against shareholders' pecuniary interests.

- Nonprofit Fiduciary Duty: The nonprofit board currently has a legally enforceable fiduciary duty to advance the charitable purpose, with the AGs empowered to enforce this duty on behalf of the public. The proposed structure would eliminate this direct accountability to the public interest.

- Capped Investor Profits: The current "capped-profit" model ensures that returns beyond a certain cap flow back to the nonprofit for humanity's benefit. Reports suggest the restructuring may eliminate this cap, potentially reallocating immense value away from the public good.

- Independent Board: OpenAI previously committed to a majority-independent board for the nonprofit, with limits on directors' financial stakes and on which directors may vote on matters involving potential conflicts of interest. The letter notes it is unknown whether the new PBC structure would retain this commitment.

- AGI Belongs to Humanity: The nonprofit, not investors, is designated to govern AGI technologies once developed. The restructuring would likely shift AGI ownership to the for-profit PBC and its investors.

- Stop-and-Assist Commitment: OpenAI’s Charter includes a commitment to cease competition and assist other aligned, safety-conscious projects nearing AGI. It is unclear if the PBC would honor this commitment.

The letter concludes by asking the Attorneys General of California and Delaware to halt the restructuring and protect OpenAI’s charitable mission. The authors argue that transferring control of potentially the most powerful technology ever created to a for-profit entity fundamentally contradicts OpenAI's charitable obligations. They urge the AGs to use their authority to investigate the proposed changes and ensure that the governance structures prioritizing public benefit over private gain remain intact.

SafeBench Winners

CAIS recently concluded its SafeBench competition, which awarded prizes for new benchmarks that help assess and reduce risks from AI. Sponsored by Schmidt Sciences, the competition awarded $250,000 across eight winning submissions.

The competition focused on four key areas (Robustness, Monitoring, Alignment, and Safety Applications) and attracted nearly one hundred submissions. A panel of judges evaluated submissions on the clarity of their safety assessment, the potential benefit of progress on the benchmark, and how easily the benchmark's measurements can be evaluated.

Three Benchmarks Awarded First Prize. Three submissions received first prizes of $50,000 each for their applicability to frontier models, relevance to current safety challenges, and use of large datasets.

- Cybench: A Framework for Evaluating Cybersecurity Capabilities and Risks of Language Models tackles the assessment of language models in cybersecurity. It includes forty professional-level Capture the Flag (CTF) tasks across six common categories like web security and cryptography. The benchmark has already been utilized by the US AI Safety Institute (AISI), UK AISI, and Anthropic for evaluating frontier models.

- AgentDojo: A Dynamic Environment to Evaluate Prompt Injection Attacks and Defenses for LLM Agents provides an extensible environment for assessing agents that use tools over untrusted data. It features ninety-seven realistic tasks and over six hundred security test cases, alongside various attack and defense paradigms, offering a dynamic platform adaptable to future agent developments.

- BackdoorLLM: A Comprehensive Benchmark for Backdoor Attacks on Large Language Models systematically evaluates diverse backdoor attack strategies—such as data poisoning and chain-of-thought attacks—across numerous experiments, scenarios, and model architectures. This provides a baseline for understanding current model vulnerabilities and developing future defenses.

Five Benchmarks Recognized with Second Prize. Five additional submissions were awarded $20,000 each for their innovative approaches to evaluating specific AI safety risks.

- CVE-Bench: A Benchmark for AI Agents’ Ability to Exploit Real-World Web Application Vulnerabilities evaluates AI agents using forty critical-severity Common Vulnerabilities and Exposures (CVEs) from the National Vulnerability Database. It features a sandbox framework that mimics real-world conditions to evaluate exploits effectively.

- JailBreakV: A Benchmark for Assessing the Robustness of MultiModal Large Language Models against Jailbreak Attacks investigates the transferability of text-based jailbreak techniques to multimodal large language models (MLLMs). It uses 20,000 text prompts and 8,000 image inputs to highlight the unique challenges posed by multimodality.

- Poser: Unmasking Alignment Faking LLMs by Manipulating Their Internals presents a testbed of 324 fine-tuned LLM pairs—one consistently aligned, the other deceptively misaligned—to evaluate strategies for detecting alignment faking using only model internals, potentially offering a valuable monitoring tool.

- Me, Myself, and AI: The Situational Awareness Dataset (SAD) for LLMs tests the situational awareness of LLMs through over 13,000 questions across seven task categories. It probes abilities such as self-recognition and instruction following based on self-knowledge, which are crucial for understanding risks from emerging capabilities.

- BioLP-bench: Measuring understanding of biological lab protocols by large language models assesses LLMs' ability to identify and correct errors in biological lab protocols. This benchmark addresses the dual-use nature of these capabilities and the need to understand biosecurity risks before model deployment.

These benchmarks provide crucial tools for understanding the progress of AI, evaluating risks, and ultimately reducing potential harms. The papers, code, and datasets for all winning benchmarks are publicly available for further research and use. CAIS hopes to see future work that is inspired by or builds on these submissions.

Other News

Government

- The White House announced an initiative and task force to advance AI education for American youth.

- The UK's AI Safety Institute introduced RepliBench, a benchmark for measuring autonomous replication capabilities in AI systems.

Research and Opinion

- Gladstone AI released a report arguing that a U.S. national superintelligence project is strategically necessary and examining the challenges of establishing one. (We still think that’s a bad idea.)

- Pew Research found both US AI experts and the public prefer more control and regulation of AI development.

- A paper found that existential risk narratives about AI do not distract from its immediate harms.

AI Frontiers

- Contributing Writer Vanessa Bates Ramirez covers how AI might reshape work—and whether the jobs of the future are ones we’ll actually want.

- Independent AI policy researchers Miles Brundage and Grace Werner argue that President Trump can and should strike an “AI deal” with China to preserve international security.

See also: CAIS website, X account for CAIS, our paper on superintelligence strategy, our AI safety course, and AI Frontiers, a new platform for expert commentary and analysis.
