Example of a personal ethics, values and causes review

By weeatquince🔸 @ 2022-12-16T21:54 (+31)

| This is a Draft Amnesty Day draft. That means it’s not polished, it’s probably not up to my standards, the ideas are not thought out, and I haven’t checked everything. I was explicitly encouraged to post something unfinished! |

| Commenting and feedback guidelines: I’m going with the default — please be nice. But constructive feedback is appreciated; please let me know what you think is wrong. Feedback on the structure of the argument is also appreciated. |

[In early 2020 I did a personal ethics review. Since then it has come up in conversation a few times and people have said they found it interesting to read and have suggested I write it up for the EA Forum. So here it is for Draft Amnesty Day. I have left a few comments in italics but it is otherwise unedited. Also please don’t read too much into my personal views from this; it was ultimately written entirely for myself, not to explain myself to others.]

[Also, if you want to do something similar some templates are here.]

Chapter 1: Ethics, values and causes

1.1 Ethics review

WHO MATTERS

In this section I consider who I care about morally.

I find it useful to distinguish:

- moral worth: how much a being matters

- moral responsibility: how responsible I am for that being.

- In practice: how much, given the state of the world, this actually affects my decisions.

For example I do not think my parents have greater intrinsic moral worth than other humans, but I do have a greater moral responsibility for them. In practice they do not currently need much support from me.

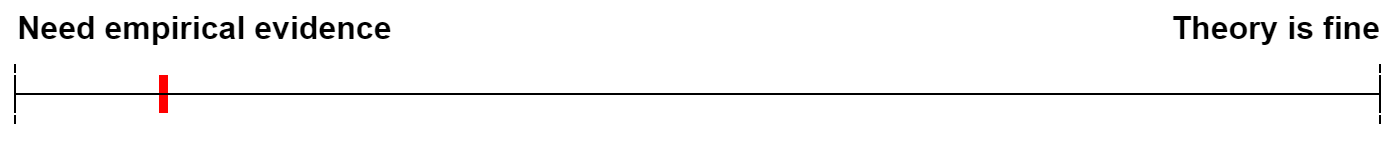

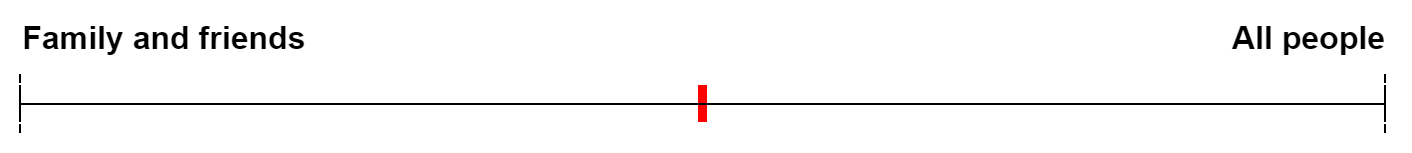

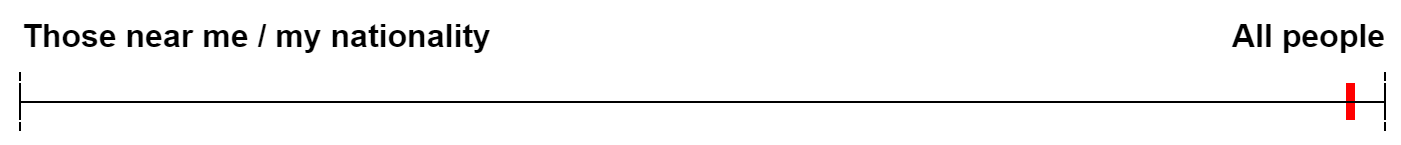

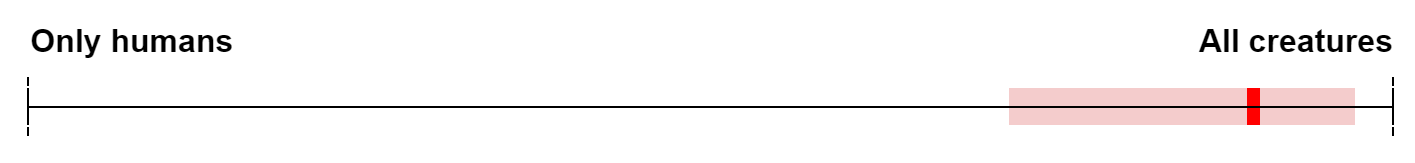

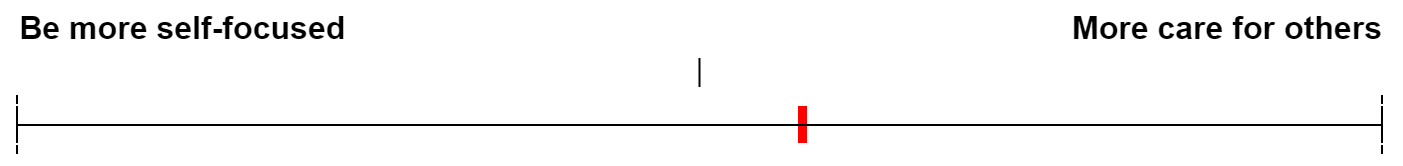

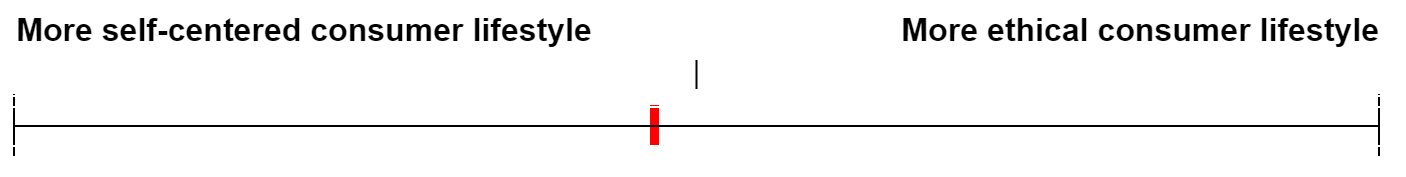

(The sliders visually sum up both worth and responsibility)

Worth: I do not believe my family and friends are intrinsically morally worth more.

Responsibility: I believe I have a responsibility to my family and friends above and beyond the responsibility I have to strangers. Especially to family. Eg: I should pay a significant amount for old age care for my parents. That said my family and friends are not my sole moral responsibility and I also have a responsibility to others.

In practice: In practice my family is sufficiently well-off that (although I should save in case this changes) the impact of my career and a significant chunk of my excess resources can currently be focused on others.

Worth: I believe that location or nationality are not tied to moral worth. So I do not believe I should, for example, donate to UK based charities above charities in other countries.

Responsibility: Similar to my responsibility to look after my family (but to a much smaller degree), I do believe that I have some amount of responsibility to prevent extreme suffering of my tribe (similarly I may also have a greater moral responsibility to call out injustices perpetrated by my tribe). I would for example call out extreme antisemitism.

In practice: I do not prioritise British people or Jewish people. Although there is antisemitism it is not at a dangerously high level where I might feel called to act. The UK is not being invaded by a hostile state. Etc

Worth: I think all creatures matter.

I have a number of instincts that suggest humans matter more (and that smarter animals matter more). This varies by domain. Comparing humans to, let's say sheep, in different domains:

| Domain | How much humans matter | Reason |
| --- | --- | --- |
| Welfare | ~ 10 x more | More self-aware/conscious. Also moral uncertainty reasons. |
| Freedom | ~ infinity x more | Greater ability to make plans for happiness and be happy |
| Lives | ~ 10^10 x more | Greater capacity to mourn loss, and to plan and be happy |
| Non-Extinction | ~ infinity x more | In proportion to ability to effect change in the world |

In most moral cases welfare is the predominant domain for judging my actions, and I do not think human welfare is significantly more important than animal welfare except insofar as humans may have more capacity for suffering.

Responsibility: I do not have a greater responsibility to humans.

In practice: It is cheaper to help animals so I should consider donating more to animal causes.

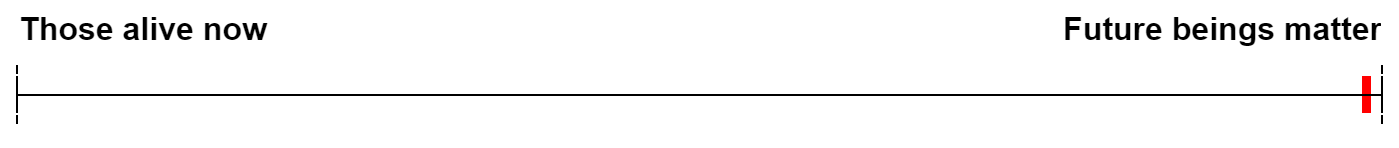

Worth: I do not believe that position in time is tied to moral worth. I believe it is important to address root causes of problems (and/or mitigate future risks), so that future beings do not suffer, rather than just address problems.

Responsibility: I do not believe I have a greater moral responsibility to people alive today, except in proportion to my ability to effect change for them.

In practice: Having a long-term impact is very difficult and it still may be worth me focusing on very immediate issues (see section below on How to decide).

WHAT MATTERS

In this section I consider what I care about morally when I am deciding how to act. (Different question to how I morally judge others, etc). As a useful starting point I compare my views to hedonistic utilitarianism.

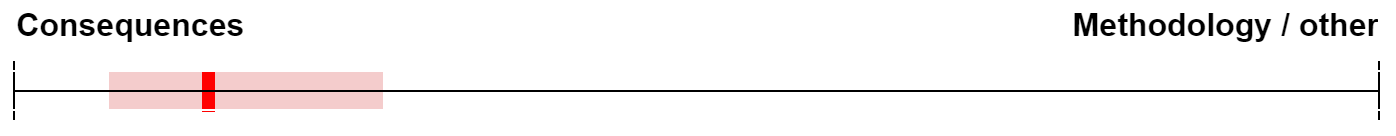

I believe I should decide what to do based on the consequences of those actions (ie consequentialism).

I believe that good intentions are not sufficient to make an action morally valuable.

I do believe there is an action / inaction distinction and that I should prioritise not causing harm. However I believe this from a consequentialist basis – I think a lot of bad can be done by people who have a twisted sense of morality and as a rule of thumb we should all endeavour to cause no harm and then work from there.

[Note: I tend to lean towards rule utilitarianism and being very high integrity.]

In practice: I try to avoid harm (eg I am vegan) and worry about consequences (eg poor meat eater problem.)

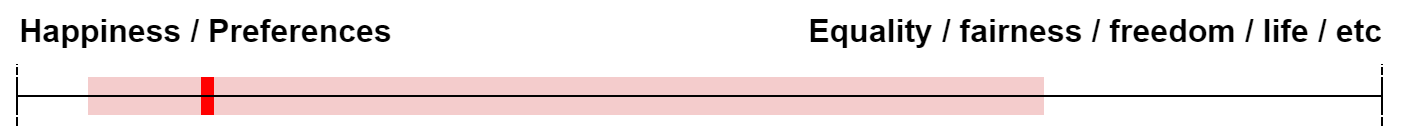

I have no strong view on preference utilitarianism (maximising the fulfilment of preferences) vs hedonistic utilitarianism (maximising happiness). So far this does not practically affect my decisions.

I am however somewhat skeptical of other value systems such as equality / fairness / self ownership / freedom, except insofar as they lead to happiness and/or preference fulfilment.

I don’t value saving lives except insofar as it leads to happiness (or preference fulfillment of others). This certainly should not be taken to mean that I would have a person die to make someone happy – I think most lives are happy so more life is good.

[Note: in practice I think most people want to live, see here, so I am in favour of saving lives.]

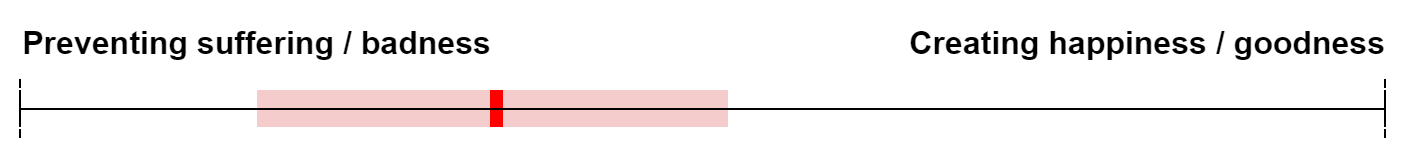

I think there is a greater moral imperative to address suffering than to create happiness. (This is not negative utilitarianism as defined here). This seems to be a fairly common moral view.

I think bad pain can be extremely bad. I think I might trade 1 day of extreme pain for maybe 100 extra days of normal life or 25 days of extremely happy life.
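As a rough illustration of the exchange rates implied by that trade, here is a minimal sketch. It assumes (purely for illustration, this is not part of the original review) that welfare adds up linearly and that one normal day counts as 1 unit. The variable names are made up.

```python
# Illustrative only: assumes welfare is linear and a normal day = 1 unit.
NORMAL_DAY = 1.0

# Figures from the text: 1 day of extreme pain is offset by roughly
# 100 normal days, or by roughly 25 extremely happy days.
NORMAL_DAYS_PER_PAIN_DAY = 100
HAPPY_DAYS_PER_PAIN_DAY = 25

# Value of one day of extreme pain, in normal-day units.
extreme_pain_day = -NORMAL_DAYS_PER_PAIN_DAY * NORMAL_DAY

# Value of one extremely happy day, in normal-day units.
extreme_happy_day = -extreme_pain_day / HAPPY_DAYS_PER_PAIN_DAY

print(extreme_pain_day)   # -100.0
print(extreme_happy_day)  # 4.0
```

Under these assumptions an extremely happy day is worth about 4 normal days, while a day of extreme pain is about 25 times larger in magnitude than an extremely happy day, which fits the view that suffering weighs more than happiness.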

I don’t think the worse off should be intrinsically prioritised or seen to have extra moral worth, except insofar as it is likely a lot can be done to raise them up.

HOW TO DECIDE

[Note: I have done a lot more research into how to make decisions in situations of deep empirical uncertainty since this 2020 ethics review and that would likely update how I think about ethics, a thing to think about going forward.]

I think most people trying to do good do very little. I worry about reasoning one's way to an answer or working on tasks with no good feedback loops.

For example I am not convinced by the arguments for working on AI safety (unless perhaps you are a top computer scientist, in which case it is a good use of your skills, so great).

I would trust the collective view of a broad body of thoughtful people on what is good over and above my own belief in what is good.

[Note: I think it is easy to misunderstand (or I am myself confused by) what I mean here. I think the collective efforts of people trying to make the world better across a range of moral views are better than a single person's views. I think I defer to others a lot less than other people I know and believe strongly in making up my own mind on how I should live, but I also believe in helping others achieve their moral goals through cooperation and mutual support.]

1.2 Causes

[Note: I think there is a lot of information in my head that is not written down in this document, e.g. details on the number of animals and humans suffering today, thoughts on the need to steer the world versus drive forward growth, views on what the biggest risks to future generations are. Also I think this is the weakest section of my career and ethics review and due for a re-review sometime soon.]

TOP CAUSES

I break down different ways I can affect the world into different areas. I don’t go into detail here but these are the causes that I think focusing on will have the biggest effect on the world, given my ethical views (alphabetical order):

- Animal welfare: Eg: improving farmed animal welfare.

- Animal welfare (long-term): Eg: clean meat development to end factory farming.

- Extreme suffering: Any form. Eg: preventing depression. Improving access to pain relief. Etc.

- Extreme suffering (& global poverty): Eg: improving access to pain relief for the poorest.

- Future: Trying to shape the future. Eg prevent disasters, climate change, WWIII, AI risk.

- Global poverty: Supporting the world’s poorest out of poverty

- Global poverty (health): Health improvements for the world’s poorest

- Institutions: Creating institutions to make good decisions. Eg: improving government.

- Institutions (& future): Shaping policy institutions so that they lead to a good future.

- Institutions (& global poverty): Making policy work well in the developing world.

- Meta: Improving how people do good. Eg: charity evaluation work.

- Meta (& future): Research on how to affect the future well.

TOP CAUSES – ORDERED LIST

I have broken this down further into specific areas of work. This is not exhaustive (eg I know nothing about improving academia so have not discussed it). This is in priority order, based on where I think I should donate the bulk of my annual donation, opportunities permitting:

- Meta: Cause prioritisation research.

- Meta: Effective altruism (EA) community building.

- Meta (& future): Research on how to affect the future well.

- Institutions (& future): Shaping policy institutions so that they lead to a good future.

- Institutions (& global poverty): Making policy work well in the developing world.

- Animals: Ending the meat industry

- Extreme suffering: Improving access to pain relief

- Extreme suffering (& global poverty): Mental health work in the developing world.

- Global poverty: Global health interventions, eg bednets

- Institutions (& global poverty): Making policy work well in the developing world.

- Future (& extreme suffering): S-risk research

It is not clear to me that this ordering makes sense.

WHERE TO DONATE

[Note: This is not about the places I want to give but how I think I should make donation decisions]

Splitting donations:

- Support: Open Philanthropy moral parliaments approach

- Support: Avoiding the meta trap

- Against: Expected value calculations

Beating Poverty: If I give ~£3k to AMF I can save a human life. I could save 2 lives a year. What could be better?
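The arithmetic behind that can be sketched as below. This is only a back-of-the-envelope illustration: the ~£3k figure is the rough estimate quoted above, and the function name `lives_saved` is hypothetical.

```python
# Back-of-the-envelope only; both figures are the rough ones quoted in the text.
COST_PER_LIFE_GBP = 3_000  # "~£3k" per life saved via AMF
LIVES_PER_YEAR = 2         # "I could save 2 lives a year"


def lives_saved(donation_gbp, cost_per_life_gbp=COST_PER_LIFE_GBP):
    """Estimated lives saved for a given donation (hypothetical helper)."""
    return donation_gbp / cost_per_life_gbp


# Annual donation implied by saving 2 lives a year at ~£3k each.
implied_annual_donation = LIVES_PER_YEAR * COST_PER_LIFE_GBP

print(implied_annual_donation)               # 6000
print(lives_saved(implied_annual_donation))  # 2.0
```

So saving 2 lives a year at this estimate implies giving roughly £6k a year.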

1.3 Commitment

I subscribe to the “personal best” approach to doing good. I am not a saint and I am not perfect but I can each year be a better person than I was last year. I can set challenging yet achievable goals to do more but also be careful to ensure I am living in a way that is sustainable for myself.

Rather than comparing where I want to be to anything in the world I focus on myself. I compare my current lifestyle to where I think I should be: given how I currently live, do I think I could do more for the world or that I should be giving more to myself?

Donating less: I possibly underspend on things like fast hardware or nice clothes that could help me do more good for the world – but I think this underspend is mostly driven by a dislike of change and of shopping rather than by altruistic frugalness (although I should be wary of this). I think I could travel a bit more (I leave the UK, for non-EA stuff, about once every 2 years).

Donating more: I lead quite a comfortable lifestyle. My savings are growing, so I can afford to donate more. I think a bigger priority is having a savings account and pension and setting money aside; then I can be more confident in my finances and donate more. Could aim for 30% p.a.

In the short run – work less: I have worked quite hard in lockdown – in part because of lack of other things to do. I feel less motivated and more tired of (EA) work than I have done in the past so I should probably ease off the accelerator for a bit and take things a bit more slowly.

In the long run – work less: I think I neglect other things that matter to me like family and relationships as these are long-run goals and I am a short run human, so I should put more attention into these.

Looking back on my career to date, the things I have been happiest working on match strongly with the things that are best for the world (as far as I can tell), so there is no conflict here.

I think I could care more for my friends and family. I think I already care a lot but doing nice things for my friends does make me happy and them happy so this should be a greater part of my current way of being.

Animal products: I am happy being a 99.5% vegan. No need to change.

Recycling: I could probably care less about recycling.

Ethically sourced products: Neutral, I assume buying cheap and donating is better but it could be worth looking into at some point.

Other: Neutral on other issues.

Chapter 2: Careers

[For now I don’t want to share my career plans publicly but you can see a template of the kinds of questions I asked myself here: