I'm interviewing Vitalik Buterin about 'my techno-optimism', E/acc and D/acc. What should I ask him?

By Robert_Wiblin @ 2024-01-12T19:58 (+26)

Next week for the 80,000 Hours Podcast I'm interviewing Ethereum creator Vitalik Buterin on his recent essay 'My techno-optimism', which, among other things, responded to disagreement about whether we should be speeding up or slowing down progress in AI.

I last interviewed Vitalik back in 2019: 'Vitalik Buterin on effective altruism, better ways to fund public goods, the blockchain’s problems so far, and how it could yet change the world'.

What should we talk about and what should I ask him?

Max Nadeau @ 2024-01-12T20:18 (+6)

I'd love to hear his thoughts on defensive measures for "fuzzier" threats from advanced AI, e.g. manipulation, persuasion, "distortion of epistemics", etc. Since it seems difficult to tell when these sorts of harms are occurring (as opposed to benign forms of advertising/rhetoric/expression), it seems hard to construct defenses.

This is related to mechanisms for collective epistemics like prediction markets or community notes, which Vitalik praises here. But the harms from manipulation are broader, and could route through "superstimuli", addictive platforms, etc., beyond just the spread of falsehoods. See the manipulation section here for related thoughts.

Roman Leventov @ 2024-01-14T03:39 (+1)

And also about the "AI race" risk, a.k.a. Moloch, a.k.a. https://www.lesswrong.com/posts/LpM3EAakwYdS6aRKf/what-multipolar-failure-looks-like-and-robust-agent-agnostic

Arepo @ 2024-01-13T20:04 (+4)

It's not on the topic you mentioned, but does he see a path to crypto usage ever overcoming its extremely high barriers to entry, such that it could become something almost everyone uses in some way? And does he have any specific vision for the future of DeFi, which so far seems to have led to a few low-liquidity prediction market and NFT apps and, as far as I can tell, not much else? Or at least, does he see a specific problem it is realistically trying to solve, or was it more like 'here's a platform people could end up using a lot anyway, and there doesn't seem to be any reason not to let it run code'?

Arepo @ 2024-01-13T20:00 (+4)

I'd be interested to hear what he thinks are both the most likely doom scenarios and the most likely non-doom scenarios; also whether he's changed his mind on either in the last few years and if so, why.

Wei Dai @ 2024-01-15T11:03 (+2)

How should we deal with the possibility/risk of AIs inherently disfavoring all the D's that Vitalik wants to accelerate? See my Twitter thread replying to his essay for more details.

Vasco Grilo @ 2024-01-15T10:52 (+2)

Hi Rob,

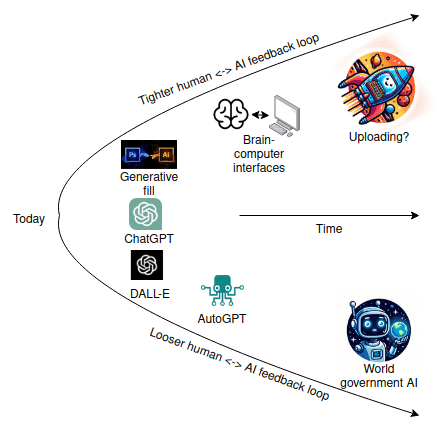

I would be curious to hear Vitalik expand on the interventions we can pursue in practice to go down the upper path. For readers' context, here is the passage from the essay that precedes the image above:

But if we want to extrapolate this idea of human-AI cooperation further, we get to more radical conclusions. Unless we create a world government powerful enough to detect and stop every small group of people hacking on individual GPUs with laptops, someone is going to create a superintelligent AI eventually - one that can think a thousand times faster than we can - and no combination of humans using tools with their hands is going to be able to hold its own against that. And so we need to take this idea of human-computer cooperation much deeper and further.

A first natural step is brain-computer interfaces. Brain-computer interfaces can give humans much more direct access to more-and-more powerful forms of computation and cognition, reducing the two-way communication loop between man and machine from seconds to milliseconds. This would also greatly reduce the "mental effort" cost to getting a computer to help you gather facts, give suggestions or execute on a plan.

Later stages of such a roadmap admittedly get weird. In addition to brain-computer interfaces, there are various paths to improving our brains directly through innovations in biology. An eventual further step, which merges both paths, may involve uploading our minds to run on computers directly. This would also be the ultimate d/acc for physical security: protecting ourselves from harm would no longer be a challenging problem of protecting inevitably-squishy human bodies, but rather a much simpler problem of making data backups.