July '25 EA Newsletter Poll

By Toby Tremlett🔹 @ 2025-07-15T09:12 (+39)

This poll was linked in the July edition of the Effective Altruism Newsletter.

I've chosen this question because Marcus A. Davis's Forum post, featured in this month's newsletter, raises this difficult question: if we are uncertain about our fundamental philosophical assumptions, how can we prioritise between causes?

There is no neutral position. If we want to do something, we have to first focus on a cause. And the difference between the importance and tractability of one cause and another is likely to be the largest determinant of the impact we have.

And yet, cause-prioritisation decisions often reach a philosophical quagmire. What should we do? How do you personally deal with this difficulty?

Mo Putera @ 2025-07-15T12:54 (+34)

How do you personally deal with this difficulty?

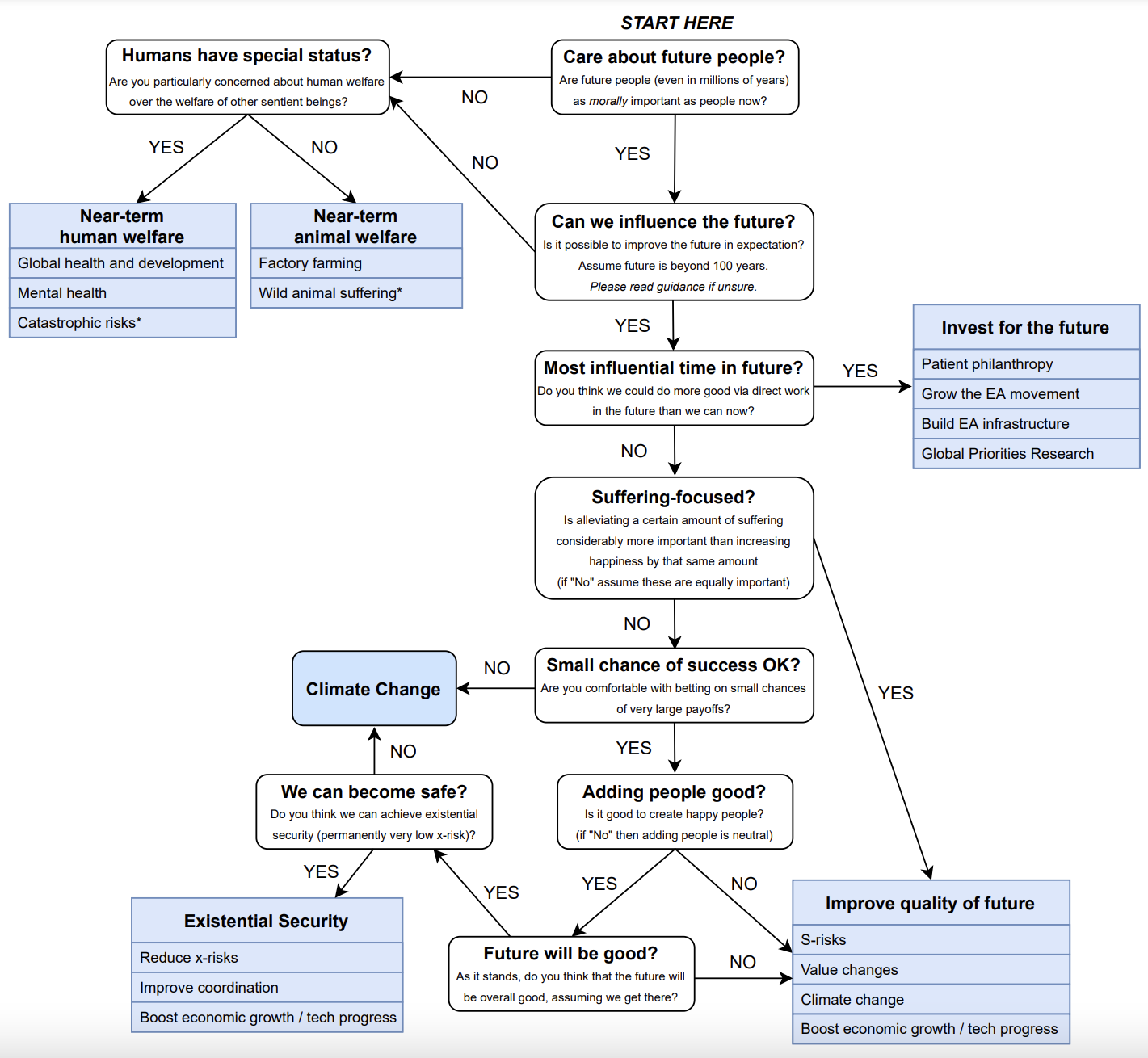

I personally dealt with this (in part) by referencing Jack Malde's excellent guided cause prio flowchart (this was a first draft to gauge forum receptivity). Sadly, when asked about updates, he replied that "Interest seemed to be somewhat limited."

JackM @ 2025-07-15T20:54 (+4)

I'm glad you found this useful Mo!

Vasco Grilo🔸 @ 2025-07-15T16:59 (+2)

Thanks for sharing, Mo! I do not think humans need to have special status for one to prioritise interventions targeting humans. I estimate GiveWell's top charities may well be more cost-effective than interventions targeting animals due to effects on soil nematodes, mites, and springtails.

JackM @ 2025-07-15T21:13 (+6)

Thanks Vasco, I really appreciate your work to incorporate the wellbeing of wild animals into cost-effectiveness analyses.

In your piece, you focus on evaluating existing interventions. But I wonder whether there might be more direct ways to reduce the living time of soil nematodes, mites, and springtails that could outperform any human life-saving intervention.

On priors it seems unlikely that optimizing for saving human lives would be the most effective strategy to reduce wild animal suffering.

Vasco Grilo🔸 @ 2025-07-15T21:41 (+2)

Thanks, Jack! I agree it is unlikely that the most cost-effective ways of increasing human-years are the most cost-effective ways of increasing agricultural-land-years. Brian Tomasik may have listed some of these. However, buying beef decreases arthropod-years the most cost-effectively among the interventions for which Brian estimated the cost-effectiveness, and I estimated GiveWell's top charities are 2.65 (= 1.69/0.638) times as cost-effective as buying beef.
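
For readers who want to check the arithmetic in the comment above, here is a minimal sketch. It assumes that 1.69 and 0.638 are the two cost-effectiveness estimates (GiveWell's top charities and buying beef, respectively) expressed in the same unit, which the comment does not spell out. The function and variable names are hypothetical and chosen only for illustration.

```python
def relative_cost_effectiveness(numerator: float, denominator: float) -> float:
    """Return how many times as cost-effective the first option is as the second.

    Both inputs are assumed to be in the same (unspecified) unit.
    """
    if denominator == 0:
        raise ValueError("denominator must be non-zero")
    return numerator / denominator


# Figures quoted in the comment; their units are an assumption here.
givewell_top_charities = 1.69
buying_beef = 0.638

ratio = relative_cost_effectiveness(givewell_top_charities, buying_beef)
print(f"{ratio:.2f}")  # prints 2.65
```

The ratio only says how the two estimates compare under the commenter's model; it says nothing about how robust either estimate is.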

Anthony DiGiovanni @ 2025-07-15T17:29 (+16)

(There’s a lot more I might want to say about this, and also don't take the precise 80% too seriously, but FWIW:)[1]

When we do cause prioritization, we’re judging whether one cause is better than another under our (extreme) uncertainty. To do that, we need to clarify what kind of uncertainty we have, and what it means to do “better” given that uncertainty. To do that, we need to reflect on questions like:

- “Should we endorse classical Bayesian epistemology (even as an ‘ideal’)?” or

- “How do we compare actions’ ‘expected’ consequences, when we can’t conceive of all the possible consequences?”

You might defer to others who’ve reflected a lot on these questions. But to me it seems there are surprisingly few people who’ve (legibly) done so. E.g., take the theorems that supposedly tell us to be (/“approximate”?) classical Bayesians. I’ve seen very little work carefully spelling out why & how these theorems tell either ideal or bounded agents what to believe, and how to make decisions. (See also this post.)

I’ve also often seen people who are highly deferred-to in EA/rationalism make claims in these domains that, AFAICT, are straightforwardly confused or question-begging. Like “precise credences lead to ‘better decisions’ than imprecise credences” — when the whole question of what makes decisions “better” depends on our credences.

Even if someone has legibly thought a lot about this stuff, their basic philosophical attitudes might be very different from yours-upon-reflection. So I think you should only defer to them as far as you have reason to think that’s not a problem.

- ^

Much of what I write here is inspired by discussions with Jesse Clifton.

Evan Fields @ 2025-07-15T11:28 (+9)

It is possible to rationally prioritise between causes without engaging deeply on philosophical issues

At least on the margin, sometimes. I don't think "children dying is bad" requires deeply engaging philosophical issues, and once you have the simple goal "fewer children die" you can do some cause prioritization.

(In contrast, I think a straightforward strategy of "figure out the nature of goodness and then pick the causes" requires ~solving philosophy.)

JackM @ 2025-07-15T21:22 (+8)

It is possible to rationally prioritise between causes without engaging deeply on philosophical issues

Underlying philosophical issues have clear implications for what you should prioritize, so I'm not really sure how you can rationally prioritize between causes without engaging with these issues.

I'm also not really sure how to defer on these issues when there are lots of highly intelligent, altruistically-minded people who disagree with each other. These disagreements often arise due to value judgements, and I don't think you can defer on your underlying values.

I have written about how I think EAs should understand certain considerations to aid in cause prioritization, and that I want the EA community to make it easier for people to do so: Important Between-Cause Considerations: things every EA should know about — EA Forum

I also produced a cause prioritization flowchart to make this easier, as linked to in another comment: A guided cause prioritisation flowchart — EA Forum

David Mathers🔸 @ 2025-07-15T10:29 (+8)

It is possible to rationally prioritise between causes without engaging deeply on philosophical issues

Deference to consensus of people more expert than you is always potentially rational, so this is clearly possible.

Davidmanheim @ 2025-07-15T11:33 (+2)

Deference to authority is itself a philosophical contention which has been discussed and debated (in that case, in comparison to voting as a method.)

David Mathers🔸 @ 2025-07-15T12:10 (+6)

Sure, but it's an extreme view that it's never ok to outsource epistemic work to other people.

Noah Birnbaum @ 2025-07-15T15:46 (+3)

There's a large difference (with many positions in between) between never outsourcing one's epistemic work and accepting something like an equal weight view. There is, at this point, almost no consensus on this issue. One must engage with the philosophy here directly to actually do a proper and rational cause prioritization -- at the very least, with the philosophy of conciliationism.

David Mathers🔸 @ 2025-07-15T18:38 (+10)

I think there's an underlying assumption you're making here that you can't act rationally unless you can fully respond to any objection to your action, or provide some sort of perfect rationale for it. Otherwise, it's at least possible to get things right just by actually giving the right weight to other people's views, whether or not you can also explain philosophically why that is the right weight to give them. I think if you assume the picture of rationality on which you need this kind of full justification, pretty much nothing anyone does or feasibly could do is ever rational, and so the question of whether you can do rational cause prioritization without engaging with philosophy becomes uninteresting (answer no, but in practice you can't do it even after engaging with philosophy, or really ever act rationally.)

On reflection, my actual view here is maybe that binary rational/not rational classification isn't very useful, rather things are more or less rational.

EDIT: I'd also say that something is going wrong if you think no one can ever update on testimony before deciding how much weight to give to other people's opinions. As far as I remember, the literature about conciliationism and the equal weight view is about how you should update on learning propositions that are actually about other people's opinions. But the following at least sometimes happens: someone tells you something that isn't about people's opinions at all, and then you get to add [edit: the proposition expressed by] that statement to your evidence, or do whatever it is you're meant to do with testimony. The updating here isn't on propositions about other people's opinions at all. I don't automatically see why expert testimony about philosophical issues couldn't work like this, at least sometimes, though I guess I can imagine views on which it doesn't (for example, maybe you only get to update on the proposition expressed and not on the fact that the expert expressed that proposition if the expert's belief in the proposition amounts to knowledge, and no one has philosophical knowledge.)

bluballoon @ 2025-07-15T13:58 (+3)

But if there's a single issue in the world we should engage with deeply instead of outsourcing, isn't it this one? Isn't this pretty much the most important question in the world from the perspective of an effective altruist?

David Mathers🔸 @ 2025-07-15T14:19 (+2)

It's definitely good if people engage with it deeply if they make the results of their engagement public (I don't think most people can outperform the community/the wider non-EA world of experts on their own in terms of optimizing their own decisions.) But the question just asked whether it is possible to rationally set priorities without doing a lot of philosophical work yourself, not whether that was the best thing to do.

Noah Birnbaum @ 2025-07-15T15:42 (+7)

It is possible to rationally prioritise between causes without engaging deeply on philosophical issues

I mean, any position you take has an implied moral philosophy and decision theory associated with it. These are often not very robust (i.e. reasonable other moral philosophies/decision theories disagree). Therefore, engaging with an issue on a rational level requires one to take these sorts of positions -- ignoring them entirely because they're hard seems completely unjustifiable.

Davidmanheim @ 2025-07-17T09:10 (+6)

As a meta-comment, it's really important that a huge proportion of the disagreement in the comments here is about what "engage deeply" means.

If that means it is a crux that must be decided upon, the claim is clearly true that we must engage with them - because they are certainly cruxes.

If it means people must individually spend time doing so, it is clearly false, because people can rationally choose not to engage and instead use some heuristic, or defer to experts, which is rational[1].

- ^

In worlds where computation and consideration are not free. Using certain technical assumptions for what rational means in game theory, we could claim it's irrational because rationality typically assumes zero cost of computation. But this is mostly a stupid nitpick.

Alex Weiss @ 2025-07-18T10:20 (+3)

It is possible to rationally prioritise between causes without engaging deeply on philosophical issues

Deep philosophical issues are inherent to any meaningful evaluation of trade offs between moral causes!

Tunder @ 2025-07-17T21:49 (+3)

It is possible to rationally prioritise between causes without engaging deeply on philosophical issues

I have tried to engage in this conversation many times, but it always ends up in the fundamentals, if one really digs.

But probably this is true for any topic of conversation 😅

Christina La Fleur @ 2025-07-17T05:12 (+3)

To cut straight, you need to line up the ends. You can't put causes' impact side-by-side and cut straight across. They don't line up.

For example, can you compare animal and human welfare one-to-one? No matter how you answer that, you're answering from a philosophical standpoint that you'd need to defend before comparing.

Jens Nordmark @ 2025-07-15T15:42 (+3)

It is possible to rationally prioritise between causes without engaging deeply on philosophical issues

That would depend on the similarity between the causes being compared. In the fully general case it seems it would be hard to compare without deep philosophical reasoning.

Jai 🔸 @ 2025-07-16T02:11 (+2)

Most empirical decisions have some basis in crucial value based judgements that can make a difference in what causes to prioritize. I don't think light philosophy would be enough to really make good decisions.

James Fodor @ 2025-07-16T00:31 (+2)

It is possible to rationally prioritise between causes without engaging deeply on philosophical issues

Comparing causes can be done by making simple assumptions and then performing a detailed empirical analysis on the basis of these assumptions. The results will be limited by being dependent on those assumptions, but that is true for any philosophical position. It is not necessary to engage deeply with the philosophy underpinning those assumptions to do rational comparison.

BarkingMad @ 2025-07-15T17:58 (+2)

If the goal of effective altruism is to have any merit, then being able to rationally determine what causes are "worthy" and which would be a waste of resources is a necessary skill. Taking a deep philosophical dive into every decision will only lead to INdecisiveness and perpetual delay of causes getting ANY help. For anyone who has seen the TV show "The Good Place", the character of Chidi is a prime (fictional) example of this. The character is so obsessed with the minutest ethical details of any decision that he becomes essentially incapable of making ANY choices without agonizing over the details, which eventually leads to his death and his being sent to "the bad place". Engaging with one's own moral or ethical philosophy is necessary, of course, but not if it will interfere with doing genuine good. Especially when the need being met requires a timely response.

Davidmanheim @ 2025-07-15T11:29 (+2)

It is possible to rationally prioritise between causes without engaging deeply on philosophical issues

As laid out at length in my 2023 post, no, it is not. For a single quote "all of axiology, which includes both aesthetics and ethics, and large parts of metaphysics... are central to any discussion of how to pursue cause-neutrality, but are often, in fact, nearly always, ignored by the community."

As to the idea that one can defer to others in place of engaging deeply, this is philosophically debated, and while rational in the decision theoretic sense, it is far harder to justify as a philosophical claim.

That said, my personal views and decisions are informed on a different basis.

NickLaing @ 2025-07-15T09:17 (+2)

We all have a moral framework, built largely on our cultural background as well as intuition and experience even if we haven't thought through it deeply. Many of us don't even know where it comes from or what it is exactly.

And yeah, we can rationally prioritise based on that. Thinking deeply can help us prioritise better, but I don't think it's necessary.

CTroyano @ 2025-07-18T21:39 (+1)

I believe when we find a cause we care about & work with like-minded others, there is no need to go deep; it is understood.

mmKALLL @ 2025-07-17T01:52 (+1)

It is possible to rationally prioritise between causes without engaging deeply on philosophical issues

I think it is important to build a diverse and comprehensive viewpoint on philosophical issues. However, once someone has selected the views that most align with their values, I think it is possible - even relatively easy - to rationally prioritize between causes. Therefore I disagree with the post's premise that the philosophical issues are unresolved; I think they're debatable but the set of cruxes is quite well defined already.

Kristen Comstock @ 2025-07-16T14:23 (+1)

It is possible to rationally prioritise between causes without engaging deeply on philosophical issues

I don’t see how one could guide prioritization without deep engagement. Really curious to hear from the opposing viewpoint!

Roberto Tinoco @ 2025-07-16T12:59 (+1)

It is possible to rationally prioritise between causes without engaging deeply on philosophical issues

Philosophical issues might provide a foundation to structure priorities and act more strategically in the long term. Without a philosophical grounding, prioritization tends to be superficial.

Peter Morris @ 2025-07-16T11:20 (+1)

It is possible to rationally prioritise between causes without engaging deeply on philosophical issues

I think that rationality is personal, so it does not require a philosophical approach.

Arkariaz @ 2025-07-16T08:45 (+1)

It is possible to rationally prioritise between causes without engaging deeply on philosophical issues

I don't see how trying to rationally prioritise between causes can avoid an assessment of the greater good in some form or another, and by then you are already in deep with the philosophy of morality, whether you know it or not.

Flo 🔸 @ 2025-07-16T07:17 (+1)

It is possible to rationally prioritise between causes without engaging deeply on philosophical issues

Even what we think of as our terminal values may change once we examine them closer.

Jonas Søvik 🔹 @ 2025-07-15T22:41 (+1)

It is possible to rationally prioritise between causes without engaging deeply on philosophical issues

It's really hard. But though intuition gets a bad rap, we at base have a sense of which things suck and how much. It can be inaccurate, but maybe wisdom of the crowds can help us pool together to estimate, e.g., the suffering weight to give to cluster headaches or losing a child.

I have no idea how much this breaks down when things get more abstract i.e. longtermism/GCR.

iestyn Bleasdale-Shepherd @ 2025-07-15T20:40 (+1)

My view is that forecasting in general is a weak concept. Our science and technology do not deal well with complex systems, and in some important ways they never can; we'll never be able to measure initial conditions accurately enough, we'll never be able to simulate accurately enough, we cannot escape chaos, and the more compute we throw at it the more complex the whole intertwined system becomes - because the compute is part of the system.

But we have an exemplar of what we can do instead: biological evolution. It is pure empirical science, no theory or prediction required. It is our existence proof that a complex context can be navigated successfully... depending on your definition of success. I suggest that we should crank up our humility to more realistic levels and adopt a more stochastic approach; i.e. try lots of randomized, low-risk experiments in parallel and leave the analysis until the solution emerges.

The most important avenue of scientific inquiry is that of understanding the necessary conditions for a functional memetic evolutionary process. Given how far we have drifted from the original basis of human culture (many small groups, with low mutual bandwidth and strong life/death feedback) it seems quite plausible that our current system (a globally integrated and highly centralized civilization with increasingly abstract and recursive feedback mechanisms) is selecting strongly for worse ideas as time progresses.

Ivan Muñoz @ 2025-07-15T20:16 (+1)

It is possible to rationally prioritise between causes without engaging deeply on philosophical issues

The best cause isn’t the one that wins the argument. It’s the one that survives contact with the world.

Josh T @ 2025-07-15T18:03 (+1)

It is possible to rationally prioritise between causes without engaging deeply on philosophical issues

Is it possible to prioritize without the luxury of deep philosophical correctness? Absolutely.

Should we prefer that type of debate when time permits? Most likely.

Jess.s.bcn @ 2025-07-15T17:39 (+1)

It is possible to rationally prioritise between causes without engaging deeply on philosophical issues

Logic can be defined with facts on why something should be prioritised over something else. There is always some reason why something is more pressing for resources.

jhoagie @ 2025-07-15T16:06 (+1)

It is possible to rationally prioritise between causes without engaging deeply on philosophical issues

I don't think basic cost/benefit analysis requires enormous engagement with philosophy. People do it off the cuff all the time - just need to introduce them to the idea in the charitable context.

I'm thinking of it similar to basic econ: people don't need to know a lot of price theory to be able to generally optimize their consumption.

Deb @ 2025-07-15T15:54 (+1)

It is necessary to establish globally endorsed guidelines and principles in order to prioritize causes. This requires a World Congress to be established. This foundation is urgent. Therefore, it is not possible to rationally prioritise between causes without engaging deeply on philosophical issues.

Pierre L. @ 2025-07-15T15:33 (+1)

It is possible to rationally prioritise between causes without engaging deeply on philosophical issues

Off the top of my head, I'd say that it depends on what counts as "philosophical issues". Typically, whether we consider animal welfare a priority raises philosophical issues, because the moral value of animal suffering is a matter of ethics—and, consequently, of philosophy. Same thing with environmental or population ethics, and so on.

However, to a certain extent, some causes can be compared without raising philosophical questions—if we can compare them with a single set of criteria (such as QALYs).

Freddy from Germany @ 2025-07-15T15:30 (+1)

It is possible to rationally prioritise between causes without engaging deeply on philosophical issues

If you admit that rationality has its limits and want to focus on action rather than being put off by overthinking, I think it is possible. Also pragmatically it is most important to help, not to go for highly ineffective NGOs, but it is not so critical if it goes to "best" or "fifth best" entity imho.

Dave Banerjee 🔸 @ 2025-07-15T12:41 (+1)

It is possible to rationally prioritise between causes without engaging deeply on philosophical issues

Population axiology is a major crux for cause prio. Additionally, different moral views on wild animals massively change cause prio.

Leroy Dixon @ 2025-07-15T12:35 (+1)

I think some framework is needed, insofar as it doesn't get in the way of the action. If one is devoting a lot of time to something, they should have some damn good reasoning behind it.

ManikSharma @ 2025-07-15T11:40 (+1)

It is possible to rationally prioritise between causes without engaging deeply on philosophical issues

Without philosophical engagement, if prioritization is to be guided by reason, logic or evidence only (to be rational), we hit a wall quickly. Moral and ethical frameworks are rooted in philosophy; as we ask deeper questions guided by rational enquiry, we end up asking philosophical questions. It is rational to derive prioritization from first principles.

Kestrel🔸 @ 2025-07-15T09:50 (+1)

It is possible to rationally prioritise between causes without engaging deeply on philosophical issues

If I donate via Giving What We Can to a charity they list, or to a fund, I am trusting someone else's deep engagement with philosophical issues. That's rational.