Quick polls on AGI doom

By Denkenberger🔸 @ 2025-12-30T05:28 (+32)

I've heard that the end of the year is a good time to talk about the end of the world. AGI doom means different things to different people, so I thought it would be useful to get some idea of what EAs think it means and what their probability of doom given AGI, P(doom), is.

A survey of thousands of AI experts found that respondents expect a wide range of good and bad AGI outcomes. However, some people think the outcome is essentially binary: extinction or bliss. In figure 10, I call these the MIRI flashlights (torches) because they are dark bars on the bottom with only bright above. That survey was not set up for longtermists, many of whom regard disempowerment by AGI, even with rapid increases in human welfare, as an existential catastrophe because it would be a drastic and permanent curtailment of humanity's potential. It also did not disaggregate disempowerment and extinction.

Please select the mildest scenario that you would still consider doom. If you make a comment, it would be appreciated if you indicate your choice so others don’t have to search in the keys. If there is gradual disempowerment and then extinction of humanity caused by AGI, count this as extinction.

Key for candidate doom scenarios:

- Suffering catastrophe (1st tick on left)

- Human extinction (3rd tick)

- AGI keeps around a thousand humans alive in a space station forever

- AGI saves around a thousand people in a bunker for when AGI overheats the earth due to its rapid expansion to the universe, but humans eventually repopulate the earth

- AGI freezes humans for when AGI overheats the earth due to its rapid expansion to the universe, and unfreezes humans to live on earth once it recovers

- AGI causes a global catastrophe (say 10% loss of human population) and AGI takes control (middle of poll, 11th tick)

- AGI causes a global catastrophe (say 10% loss of human population) and AGI does not take control

- AGI takes control bloodlessly and creates a surveillance state to prevent competing AGI creation and human expansion to the universe, but otherwise human welfare increases rapidly

- AGI takes control bloodlessly and prevents competing AGI and human space settlement in a light touch way, and human welfare increases rapidly

- No group of humans gets any worse off, but some humans get great wealth (edit: and humans retain control of expansion to the universe)

- The welfare of all human groups increases dramatically, but inequality gets much greater (edit: and humans retain control of expansion to the universe) (21st tick, right of poll)

Not everyone will agree on the ordering, so please clarify in the comments if you disagree.

One big crux between people who have high versus low P(doom) appears to be that the high P(doom) people tend to think multiple independent things have to go right to escape doom, whereas the low P(doom) people tend to think that multiple independent things have to go wrong to get doom. And as above, a big crux is whether nonviolent disempowerment qualifies as doom or not.
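To see how much this framing matters, here is a minimal sketch. The number of steps and the per-step probability are hypothetical values chosen only for illustration; they are not from the poll or from anyone's stated estimates. It assumes the steps are fully independent, which real-world factors may not be.

```python
def prob_all(p_each: float, n: int) -> float:
    """Probability that n independent events, each with probability p_each, all happen."""
    return p_each ** n

# Hypothetical inputs, chosen only for illustration (not from the poll).
N_STEPS = 5      # number of independent things that matter
P_RIGHT = 0.8    # chance that any one of them goes right

# Framing A: all N_STEPS must go right to escape doom.
p_doom_if_all_must_go_right = 1 - prob_all(P_RIGHT, N_STEPS)

# Framing B: all N_STEPS must go wrong to get doom.
p_doom_if_all_must_go_wrong = prob_all(1 - P_RIGHT, N_STEPS)

print(f"all must go right: P(doom) = {p_doom_if_all_must_go_right:.2%}")
print(f"all must go wrong: P(doom) = {p_doom_if_all_must_go_wrong:.2%}")
```

With the same per-step odds, the first framing gives a P(doom) of about 67% and the second about 0.03%, so the structure of the argument can matter more than the individual estimates.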

Probabilities key:

- infinitesimal (left)

- 0.001%

- 0.01%

- 0.03%

- 0.1%

- 0.3%

- 1%

- 3%

- 5%

- 7%

- 10% (middle poll value)

- 15%

- 20%

- 30%

- 50%

- 70%

- 90%

- 97%

- 99%

- 99.9%

- nearly guaranteed (right)
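If your estimate falls between two options, the sketch below shows one way to find the closest tick. The function name `nearest_tick` is hypothetical, the numeric stand-ins for the two end labels (0.0 and 1.0) are assumptions, and "closest" is taken to mean smallest absolute difference, which is only one reasonable choice.

```python
# Poll scale from the probabilities key, left to right (21 ticks).
# The two end labels are not numeric in the key; 0.0 and 1.0 are
# assumed stand-ins so they can be compared with other values.
SCALE = [
    ("infinitesimal", 0.0),
    ("0.001%", 0.00001), ("0.01%", 0.0001), ("0.03%", 0.0003),
    ("0.1%", 0.001), ("0.3%", 0.003), ("1%", 0.01), ("3%", 0.03),
    ("5%", 0.05), ("7%", 0.07), ("10%", 0.10), ("15%", 0.15),
    ("20%", 0.20), ("30%", 0.30), ("50%", 0.50), ("70%", 0.70),
    ("90%", 0.90), ("97%", 0.97), ("99%", 0.99), ("99.9%", 0.999),
    ("nearly guaranteed", 1.0),
]

def nearest_tick(p: float) -> tuple[int, str]:
    """Return (1-based tick number, label) of the option closest to p."""
    index = min(range(len(SCALE)), key=lambda i: abs(SCALE[i][1] - p))
    return index + 1, SCALE[index][0]

print(nearest_tick(0.10))  # the middle of the poll
print(nearest_tick(0.75))  # a value that is not on the scale
```

For example, an estimate of 75% is not on the scale and lands on the 70% option (16th tick).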

What is your probability of global catastrophe (mass loss of life) given AGI?

Some considerations here include:

Why would AGI want to kill us?

Would AGI spare a little sunlight for Earth?

Would there be an AI-AI war with humans suffering collateral damage?

Would the AGI more likely be rushed (e.g. it will be shut down and replaced in ~a year) or patient (e.g. exfiltrated and self-improving)?

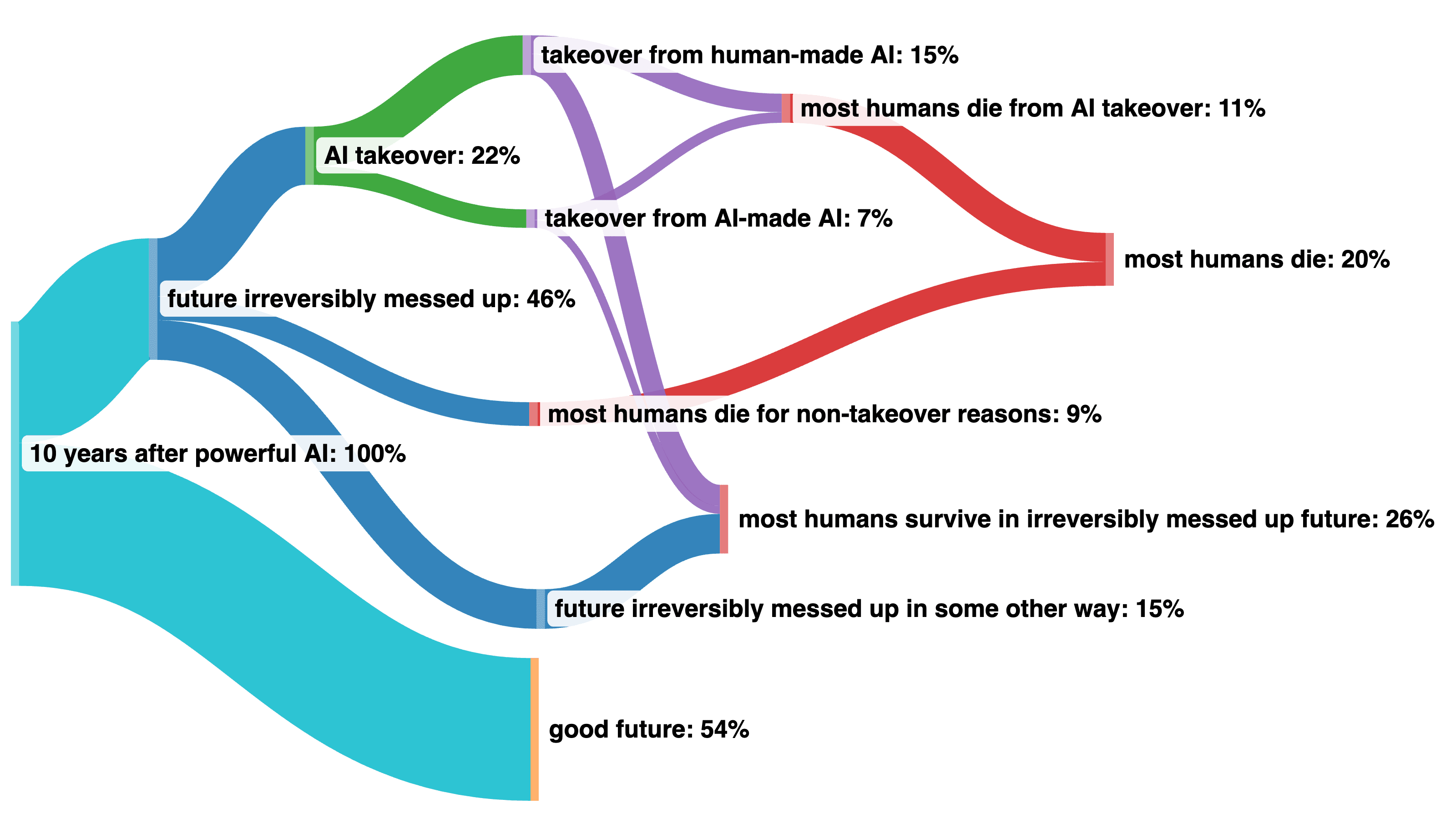

Here's a diagram from Paul Christiano that I find helpful to visualize different scenarios:

What is your probability of disempowerment without mass loss of life given AGI? (Same scale as previous question.)

Some considerations here include:

Would AGI quickly become more powerful than humans, meaning that it likely would not have to resort to violent methods to attain control?

Would there be gradual disempowerment?

Please pick the overall P(doom) from these choices that is closest to your opinion. Note that this may not be the simple sum of the previous two questions if you think much higher inequality is doom. (Same scale as previous question.)

At what P(doom) is it unacceptable to develop AGI? (Same scale as previous question.) Some considerations here include:

How good do you think the scenarios that are not doom will be?

If we have a good AGI outcome, would that mean an indefinite lifespan for the people who are alive at the time?

How positive or negative do you think it would be if people did not have to work?

Do you think factory farming or even wild animal suffering could be solved with a positive outcome of AGI?

If you want a temporary pause, what's the probability that the pause will become permanent? If we never develop AGI, will we settle the universe?

What do you think the background existential risk per year from sources other than AGI is?

How much safer do you think we could make AGI by pausing for years or decades?

How much value would be created if it were rogue AGI settling the universe and not humans or human emulations?

Edit: Since LessWrong does not have this poll functionality, I referred them to this survey several days after launching, and I saw a few responses come in soon after that, so likely a few of the responses are from LW.

Edit: Now that the polls are closed, I thought I would summarize the results.

There were 21 responses for the definition of doom, and ~80% of respondents did not consider disempowerment to be doom. There were 12 responses for the probability of catastrophe (mass loss of life), with a median of 15%; the median for disempowerment was also 15% (though the last 3 polls only had 5-7 responses each, so I’m not sure how meaningful they are). Since the median person did not consider disempowerment to be doom, the median probability of doom was also 15%. I do think different definitions of P(doom) cause significant confusion, so specifying whether disempowerment is included would be helpful. The median answer for the minimum P(doom) at which it is unacceptable to develop AGI was 1%.

Denkenberger🔸 @ 2025-12-30T05:31 (+7)

P(catastrophe|AGI)

15%: I think it would only take around a month's delay in AGI settling the universe to spare Earth from overheating, which would cost something like one part in 1 trillion of the total value (assuming no discounting) due to receding galaxies. The continuing loss of value from sparing enough sunlight for Earth (and directing the infrared radiation from the Dyson swarm away from Earth so it doesn't overheat) is completely negligible compared to all the energy/mass available in the galaxies that could be settled. I think it is relatively unlikely that the AGI would have so little kindness towards the species that birthed it, or feel so threatened by us, that it would cause the extinction of humanity. However, it's possible it has a significant discount rate, in which case the sacrifice of delay is greater. Also, I am concerned about AI-AI conflicts. Since AI models are typically shut down after not very long, I think they would have an incentive to try to take over even if the chance of success were not very high. That implies they would need to use violent means, though a model might just blackmail humanity with the threat of violence. A failed attempt could provide a warning shot for us to be more cautious. Alternatively, if the model exfiltrates, then it could be more patient and improve more, so the takeover is less likely to be violent. And I do give some probability mass to gradual disempowerment, which generally would not be violent.

I'm not counting nuclear war or engineered pandemic that happens before AGI, but I'm worried about those as well.

Denkenberger🔸 @ 2025-12-30T05:47 (+2)

Minimum P(doom) that is unacceptable to develop AGI

80%: I think even if we were disempowered, we would likely get help from the AGI to quickly solve problems like poverty, factory farming, aging, etc. and I do think that is valuable. If humanity were disempowered, I think there would still be some value in expectation of the AGI settling the universe. I am worried that a pause before AGI could become permanent (until there is population and economic collapse due to fertility collapse, after which it likely doesn’t matter), and that could prevent the settlement of the universe with sentient beings. However, I think if we can pause at AGI, even if that becomes permanent, we could either make human brain emulations or make AGI sentient so that we could still settle the universe with sentient beings even if it were not possible for biological humans (though the value might be much lower than with artificial superintelligence). I am worried about the background existential risk, but I think if we are at the point of AGI, the AI risk becomes large per year, so it's worth it to pause, despite the possibility of it being riskier when we unpause, depending on how we do it. I am somewhat optimistic that a pause would reduce the risk, but I am still compelled by the outside view that a more intelligent species would eventually take control. So overall, I think it is acceptable to create AGI at a relatively high P(doom) (mostly non-Draconian disempowerment) if we were to continue to superintelligence, but then we should pause at AGI to try to reduce P(doom) (and we should also be more cautious in the run up to AGI). So taking into account this pause, P(doom) would be lower, but I'm not sure how to take this into account in my answer.

Denkenberger🔸 @ 2025-12-30T05:34 (+2)

P(doom)

75%: Simple sum of catastrophe and disempowerment because I don't think inequality is that bad.

Denkenberger🔸 @ 2025-12-30T05:33 (+2)

P(disempowerment|AGI)

60%: If humans stay biological, it's very hard for me to imagine ASI, with its vastly superior intelligence and processing speed, still taking direction from feeble humans in the long run. I think if we could get human brain emulations going before AGI got too powerful, perhaps by banning ASI until it is safe, then we have some chance. You can see why, for someone like me whose P(catastrophe|AGI) is much lower than P(disempowerment|AGI), it’s very important to know whether disempowerment is considered doom!

Denkenberger🔸 @ 2025-12-30T05:29 (+2)

What mildest scenario do you consider doom?

“AGI takes control bloodlessly and prevents competing AGI and human space settlement in a light touch way, and human welfare increases rapidly”: I think this would result in a large reduction in long-term future expected value, so it qualifies as doom for me.

Søren Elverlin @ 2025-12-31T15:34 (+1)

Meta: I count 25 question marks in this "quick" poll, and a lot of the questions appear to be seriously confused. A proper response here would take many hours.

Take your scenario number 5, for instance. Is there any serious literature examining this? Are there any reasons why anyone would assign that scenario >epsilon probability? Do any decisions hinge on this?

Denkenberger🔸 @ 2025-12-31T20:22 (+5)

I'm not sure if you consider LessWrong serious literature, but cryonically preserving all humans was mentioned here. I think nearly everyone would consider this doom, but there are people defending extinction (which I think is even worse) as not doom, so I included all of them for completeness.

Yes, one could take many hours thinking through these questions (as I have), but even if one doesn't have that time, I think it's useful to get an idea of how people are defining doom, because a lot of people use the term, and I suspect that there is a wide variety of definitions (and indeed, preliminary results do show a large range).

I'm happy to respond to specific feedback about which questions are confused and why.

Søren Elverlin @ 2026-01-01T09:54 (+1)

=Confusion in What mildest scenario do you consider doom?=

My probability distribution looks like what you call the MIRI Torch, and what I call the MIRI Logo: Scenarios 3 to 9 aren't well described in the literature because they are not in a stable equilibrium. In the real world, once you are powerless, worthless and an obstacle to those in power, you just end up dead.

=Confusion in Minimum P(doom) that is unacceptable to develop AGI?=

For non-extreme values, the concrete estimate and most of the considerations you mention are irrelevant. The question is morally isomorphic to "What percentage of the world's population am I willing to kill in expectation?". Answers such as "10^6 humans" and "10^9 humans" are both monstrous, even though your poll would rate them very differently.

These possible answers don't become moral even if you think that it's really positive that humans don't have to work any longer. You aren't allowed to do something worse than the Holocaust in expectation, even if you really really like space travel or immortality, or ending factory farming, or whatever. You aren't allowed to unilaterally decide to roll the dice on omnicide even if you personally believe that global warming is an existential risk, or that it would be good to fill the universe with machines of your creation.

Denkenberger🔸 @ 2026-01-01T21:40 (+2)

> =Confusion in What mildest scenario do you consider doom?=
>
> My probability distribution looks like what you call the MIRI Torch, and what I call the MIRI Logo: Scenarios 3 to 9 aren't well described in the literature because they are not in a stable equilibrium. In the real world, once you are powerless, worthless and an obstacle to those in power, you just end up dead.

This question was not about probability, but about what one considers doom. But let's talk probability. I think Yudkowsky and Soares believe that one or more of 3-5 has decent likelihood because of acausal trade, though I'm not finding the source now. As someone else said, "Killing all humans is defecting. Preserving humans is a relatively cheap signal to any other ASI that you will cooperate." Christiano believes a stronger version, that most humans will survive (unfrozen) a takeover because AGI has pico-pseudo kindness. Though humans did cause the extinction of close competitors, they are exhibiting pico-pseudo kindness to many other species, despite those species being a (small) obstacle.

> =Confusion in Minimum P(doom) that is unacceptable to develop AGI?=
>
> For non-extreme values, the concrete estimate and most of the considerations you mention are irrelevant. The question is morally isomorphic to "What percentage of the world's population am I willing to kill in expectation?". Answers such as "10^6 humans" and "10^9 humans" are both monstrous, even though your poll would rate them very differently.

Since your doom equates to extinction, a probability of doom of 0.01% gives ~10^6 expected deaths, which you call monstrous. Solving factory farming does not sway you, but what about saving the billions of humans who would otherwise die in the coming decades without AGI (even without creating immortality, just solving poverty)? Or what about AGI preventing other existential risks like an engineered pandemic? Do you think that non-AI X risk is <0.01% in the next century? Ever? Or maybe you are just objecting to the unilateral part - so then is it ok if the UN votes to create AGI even if it has a 33% chance of doom, as one paper said could be justified by economic growth?
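The ~10^6 figure is simple expected-value arithmetic, sketched below. The world population of 8 billion is a round number assumed for illustration and is not stated in this thread; the function name is likewise hypothetical.

```python
WORLD_POPULATION = 8e9  # assumed round figure, not taken from the thread

def expected_deaths(p_extinction: float, population: float = WORLD_POPULATION) -> float:
    """Expected deaths if extinction (everyone dies) has probability p_extinction."""
    return p_extinction * population

# 0.01% chance of extinction
print(f"{expected_deaths(0.0001):,.0f}")
# 33% chance of extinction
print(f"{expected_deaths(0.33):,.0f}")
```

Under that assumption, 0.01% corresponds to roughly 800,000 expected deaths, which is on the order of 10^6, and 33% to roughly 2.6 billion.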

Benjamin M. @ 2025-12-30T07:17 (+1)

What mildest scenario do you consider doom?

I'm not sure if I buy 7 (10% of pop. killed, but no takeover) being doom, but I can see the case for it