Tom Barnes's Quick takes

By Tom Barnes🔸 @ 2023-06-27T21:34 (+4)

Tom Barnes @ 2023-11-27T19:29 (+156)

Mildly against the Longtermism --> GCR shift

Epistemic status: Pretty uncertain, somewhat rambly

TL;DR replacing longtermism with GCRs might get more resources to longtermist causes, but at the expense of non-GCR longtermist interventions and broader community epistemics

Over the last ~6 months I've noticed a general shift amongst EA orgs to focus less on reducing risks from AI, Bio, nukes, etc based on the logic of longtermism, and more based on Global Catastrophic Risks (GCRs) directly. Some data points on this:

- Open Phil renaming its EA Community Growth (Longtermism) Team to GCR Capacity Building

- This post from Claire Zabel (OP)

- Giving What We Can's new Cause Area Fund being named "Risk and Resilience," with the goal of "Reducing Global Catastrophic Risks"

- Longview-GWWC's Longtermism Fund being renamed the "Emerging Challenges Fund"

- Anecdotal data from conversations with people working on GCRs / X-risk / Longtermist causes

My guess is these changes are (almost entirely) driven by PR concerns about longtermism. I would also guess these changes increase the number of people donating to / working on GCRs, which is (by longtermist lights) a positive thing. After all, no-one wants a GCR, even if only thinking about people alive today.

Yet, I can't help but feel something is off about this framing. Some concerns (no particular ordering):

- From a longtermist (~totalist classical utilitarian) perspective, there's a huge difference between ~99% and 100% of the population dying, if humanity recovers in the former case, but not the latter. Just looking at GCRs on their own mostly misses this nuance.

- (See Parfit's Reasons and Persons for the full thought experiment)

- From a longtermist (~totalist classical utilitarian) perspective, preventing a GCR doesn't differentiate between "humanity prevents GCRs and realises 1% of its potential" and "humanity prevents GCRs and realises 99% of its potential"

- Preventing an extinction-level GCR might move us from 0% to 1% of future potential, but there's 99x more value in focusing on going from the "okay (1%)" to "great (100%)" future.

- See Aird 2020 for more nuances on this point

- From a longtermist (~suffering focused) perspective, reducing GCRs might be net-negative if the future is (in expectation) net-negative

- E.g. if factory farming continues indefinitely, or due to increasing the chance of an S-Risk

- See Melchin 2021 or DiGiovanni 2021 for more

- (Note this isn't just a concern for suffering-focused ethics people)

- From a longtermist perspective, a focus on GCRs neglects non-GCR longtermist interventions (e.g. trajectory changes, broad longtermism, patient altruism/philanthropy, global priorities research, institutional reform)

- From a "current generations" perspective, reducing GCRs is probably not more cost-effective than directly improving the welfare of people / animals alive today

- I'm pretty uncertain about this, but my guess is that alleviating farmed animal suffering is more welfare-increasing than e.g. working to prevent an AI catastrophe, given the latter is pretty intractable (But I haven't done the numbers)

- See discussion here

- If GCRs actually are more cost-effective under a "current generations" worldview, then I question why EAs would donate to global health / animal charities (since this is no longer a question of "worldview diversification", just raw cost-effectiveness)
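The "99x" figure in the list above is simple arithmetic. A minimal sketch, assuming value scales linearly with the fraction of humanity's potential that is realised (that linearity and the variable names are illustrative assumptions, not part of the original argument):

```python
# Illustrative only: assumes value is linear in the fraction of
# humanity's potential realised. All names here are hypothetical.
extinct, okay, great = 0.00, 0.01, 1.00

gain_from_avoiding_extinction = okay - extinct  # moving from 0% to 1%
gain_from_okay_to_great = great - okay          # moving from 1% to 100%

# How many times more value lies in the okay -> great step
ratio = gain_from_okay_to_great / gain_from_avoiding_extinction
print(f"okay -> great is worth about {ratio:.0f}x avoiding extinction")
```

Under a non-linear valuation of potential the ratio would differ, so this only restates the bullet's own numbers.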

More meta points

- From a community-building perspective, pushing people straight into GCR-oriented careers might work short-term to get resources to GCRs, but could lose the long-run benefits of EA / Longtermist ideas. I worry this might worsen community epistemics about the motivation behind working on GCRs:

- If GCRs only go through on longtermist grounds, but longtermism is false, then impartial altruists should rationally switch towards current-generations opportunities. Without a grounding in cause impartiality, however, people won't actually make that switch

- From a general virtue ethics / integrity perspective, making this change on PR / marketing reasons alone - without an underlying change in longtermist motivation - feels somewhat deceptive.

- As a general rule about integrity, I think it's probably bad to sell people on doing something for reason X, when actually you want them to do it for Y, and you're not transparent about that

- There's something fairly disorienting about the community switching so quickly from [quite aggressive] "yay longtermism!" (e.g. much hype around launch of WWOTF) to essentially disowning the word longtermism, with very little mention / admission that this happened or why

Vanessa @ 2023-11-28T11:34 (+39)

The framing "PR concerns" makes it sound like all the people doing the actual work are (and will always be) longtermists, whereas the focus on GCR is just for the benefit of the broader public. This is not the case. For example, I work on technical AI safety, and I am not a longtermist. I expect there to be more people like me either already in the GCR community, or within the pool of potential contributors we want to attract. Hence, the reason to focus on GCR is building a broader coalition in a very tangible sense, not just some vague "PR".

Tom Barnes @ 2023-11-28T15:47 (+10)

Is your claim "Impartial altruists with ~no credence on longtermism would have more impact donating to AI/GCRs over animals / global health"?

To my mind, this is the crux, because:

- If Yes, then I agree that it totally makes sense for non-longtermist EAs to donate to AI/GCRs

- If No, then I'm confused why one wouldn't donate to animals / global health instead?

[I use "donate" rather than "work on" because donations aren't sensitive to individual circumstances, e.g. personal fit. I'm also assuming impartiality because this seems core to EA to me, but of course one could donate / work on a topic for non-impartial/ non-EA reasons]

Vanessa @ 2023-11-28T17:08 (+5)

Yes. Moreover, GCR mitigation can appeal even to partial altruists: something that would kill most of everyone, would in particular kill most of whatever group you're partial towards. (With the caveat that "no credence on longtermism" is underspecified, since we haven't said what we assume instead of longtermism; but the case for e.g. AI risk is robust enough to be strong under a variety of guiding principles.)

IanDavidMoss @ 2023-11-29T01:39 (+9)

FWIW, in the (rough) BOTECs we use for opportunity prioritization at Effective Institutions Project, this has been our conclusion as well. GCR prevention is tough to beat for cost-effectiveness even only considering impacts on a 10-year time horizon, provided you are comfortable making judgments based on expected value with wide uncertainty bands.

I think people have a cached intuition that "global health is most cost-effective on near-term timescales" but what's really happened is that "a well-respected charity evaluator that researches donation opportunities with highly developed evidence bases has selected global health as the most cost-effective cause with a highly-developed evidence base." Remove the requirement for certainty about the floor of impact that your donation will have, and all of a sudden a lot of stuff looks competitive with bednets on expected-value terms.

(I should caveat that we haven't yet tried to incorporate animal welfare into our calculations and therefore have no comparison there.)

Michael Townsend @ 2023-11-28T10:09 (+26)

Speaking personally, I have also perceived a move away from longtermism, and as someone who finds longtermism very compelling, this has been disappointing to see. I agree it has substantive implications on what we prioritise.

Speaking more on behalf of GWWC, where I am a researcher: our motivation for changing our cause area from “creating a better future” to “reducing global catastrophic risks” really was not based on PR. As shared here:

We think of a “high-impact cause area” as a collection of causes that, for donors with a variety of values and starting assumptions (“worldviews”), provide the most promising philanthropic funding opportunities. Donors with different worldviews might choose to support the same cause area for different reasons. For example, some may donate to global catastrophic risk reduction because they believe this is the best way to reduce the risk of human extinction and thereby safeguard future generations, while others may do so because they believe the risk of catastrophes in the next few decades is sufficiently large and tractable that it is the best way to help people alive today.

Essentially, we’re aiming to use the term “reducing global catastrophic risks” as a kind of superset that includes reducing existential risk, and that is inclusive of all the potential motivations. For example, when looking for recommendations in this area, we would be happy to include recommendations that only make sense from a longtermist perspective. A large part of the motivation for this was based on finding some of the arguments made in several of the posts you linked (including “EA and Longtermism: not a crux for saving the world”) compelling.

Also, our decision to step down from managing the communications for the Longtermism Fund (now “Emerging Challenges Fund”) was based on wanting to be able to more independently evaluate Longview’s grantmaking, rather than brand association.

Stephen Clare @ 2023-11-28T17:21 (+18)

Great post, Tom, thanks for writing!

One thought is that a GCR framing isn't the only alternative to longtermism. We could also talk about caring for future generations.

This has fewer of the problems you point out (e.g. differentiates between recoverable global catastrophes and existential catastrophes). To me, it has warm, positive associations. And it's pluralistic, connected to indigenous worldviews and environmentalist rhetoric.

ClaireZabel @ 2023-11-29T21:47 (+17)

Thanks for sharing this, Tom! I think this is an important topic, and I agree with some of the downsides you mention, and think they’re worth weighing highly; many of them are the kinds of things I was thinking of in this post of mine when I listed these anti-claims:

Anti-claims

(I.e. claims I am not trying to make and actively disagree with)

- No one should be doing EA-qua-EA talent pipeline work

- I think we should try to keep this onramp strong. Even if all the above is pretty correct, I think the EA-first onramp will continue to appeal to lots of great people. However, my guess is that a medium-sized reallocation away from it would be good to try for a few years.

- The terms EA and longtermism aren’t useful and we should stop using them

- I think they are useful for the specific things they refer to and we should keep using them in situations where they are relevant and ~ the best terms to use (many such situations exist). I just think we are over-extending them to a moderate degree

- It’s implausible that existential risk reduction will come apart from EA/LT goals

- E.g. it might come to seem (I don’t know if it will, but it at least is imaginable) that attending to the wellbeing of digital minds is more important from an EA perspective than reducing misalignment risk, and that those things are indeed in tension with one another.

- This seems like a reason people who aren’t EA and just prioritize existential risk reduction are less helpful from an EA perspective than if they also shared EA values all else equal, and like something to watch out for, but I don’t think it outweighs the arguments in favor of more existential risk-centric outreach work.

This isn’t mostly a PR thing for me. Like I mentioned in the post, I actually drafted and shared an earlier version of that post in summer 2022 (though I didn’t decide to publish it for quite a while), which I think is evidence against it being mostly a PR thing. I think the post pretty accurately captures my reasoning at the time: people doing this outreach work on the ground were often actually focused on GCRs or AI risk and trying to get others to engage on that, and it felt like they were ending up using terms that pointed less well at what they were interested in, for path-dependent reasons. Further updates towards shorter AI timelines moved me substantially in terms of the amount I favor the term “GCR” over “longtermism”, since I think it increases the degree to which a lot of people mostly want to engage people about GCRs or AI risk in particular.

titotal @ 2023-11-28T12:53 (+13)

One point that hasn't been mentioned: GCRs may be many, many orders of magnitude more likely than extinctions. For example, it's not hard to imagine a super deadly virus that kills 50% of the world's population, but a virus that manages to kill literally everyone, including people hiding out in bunkers, remote villages, and in Antarctica, doesn't make too much sense: if it was that lethal, it would probably burn out before reaching everyone.

Pablo @ 2023-12-02T12:13 (+2)

The relevant comparison in this context is not with human extinction but with an existential catastrophe. A virus that killed everyone except humans in extremely remote locations might well destroy humanity’s long-term potential. It is not plausible, at least not for the reasons provided, that “GCRs may be many, many orders of magnitude more likely than” existential catastrophes, on reasonable interpretations of “many, many”.

(Separately, the catastrophe may involve a process that intelligently optimizes for human extinction, by either humans or non-human agents, so I also think that the claim as stated is false.)

titotal @ 2023-12-02T13:38 (+4)

A virus that killed everyone except humans in extremely remote locations might well destroy humanity’s long-term potential

How?

I see it delaying things while the numbers recover, but it's not like humans will suddenly become unable to learn to read. Why would humanity not simply pick itself up and recover?

Pablo @ 2023-12-02T13:44 (+3)

Two straightforward ways (more have been discussed in the relevant literature) are by making humanity more vulnerable to other threats and by pushing back humanity past the Great Filter (about whose location we should be pretty uncertain).

titotal @ 2023-12-02T16:44 (+2)

This is very vague. What other threats? It seems like a virus wiping out most of humanity would decrease the likelihood of other threats. It would put an end to climate change, reduce the motivation for nuclear attacks and ability to maintain a nuclear arsenal, reduce the likelihood of people developing AGI, etc.

Pablo @ 2023-12-02T21:06 (+2)

Humanity’s chances of realizing its potential are substantially lower when there are only a few thousand humans around, because the species will remain vulnerable for a considerable time before it fully recovers. The relevant question is not whether the most severe current risks will be as serious in this scenario, because (1) other risks will then be much more pressing and (2) what matters is not the risk survivors of such a catastrophe face at any given time, but the cumulative risk to which the species is exposed until it bounces back.
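The distinction between risk at a given time and cumulative risk can be sketched numerically. This is a minimal illustration, assuming a constant and independent per-period risk during the recovery phase; the function name and the 2% figure are hypothetical and do not come from the comment above:

```python
def cumulative_risk(per_period_risk: float, periods: int) -> float:
    """Probability of at least one catastrophe across `periods`
    independent periods, each with the same per-period risk."""
    return 1.0 - (1.0 - per_period_risk) ** periods


# Hypothetical numbers: a 2% risk per period, over recoveries of
# different lengths. The per-period risk stays fixed, but the
# cumulative exposure grows with the length of the recovery.
for periods in (1, 10, 50):
    print(periods, round(cumulative_risk(0.02, periods), 3))
```

Even a modest per-period risk compounds over a long recovery, which is the sense in which the length of the vulnerable period matters and not only the risk level at any one moment.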

David_Moss @ 2023-11-28T16:25 (+11)

Over the last ~6 months I've noticed a general shift amongst EA orgs to focus less on reducing risks from AI, Bio, nukes, etc based on the logic of longtermism, and more based on Global Catastrophic Risks (GCRs) directly... My guess is these changes are (almost entirely) driven by PR concerns about longtermism.

It seems worth flagging that whether these alternative approaches are better for PR (or outreach considered more broadly) seems very uncertain. I'm not aware of any empirical work directly assessing this even though it seems a clearly empirically tractable question. Rethink Priorities has conducted some work in this vein (referenced by Will MacAskill here), but this work, and other private work we've completed, wasn't designed to address this question directly. I don't think the answer is very clear a priori. There are lots of competing considerations and anecdotally, when we have tested things for different orgs, the results are often surprising. Things are even more complicated when you consider how different approaches might land with different groups, as you mention.

We are seeking funding to conduct work which would actually investigate this question (here), as well as to do broader work on EA/longtermist message testing, and broader work assessing public attitudes towards EA/longtermism (which I don't have linkable applications for).

I think this kind of research is also valuable even if one is very sceptical of optimising PR. Even if you don't want to maximise persuasiveness, it's still important to understand how different groups are understanding (or misunderstanding) your message.

Ryan Greenblatt @ 2023-11-28T03:43 (+5)

From a "current generations" perspective, reducing GCRs is probably not more cost-effective than directly improving the welfare of people / animals alive today

I think reducing GCRs seems pretty likely to wildly outcompete other traditional approaches[1] if we use a slightly broad notion of current generation (e.g. currently existing people) due to the potential for a techno utopian world which makes the lives of currently existing people >1,000x better (which heavily depends on diminishing returns and other considerations). E.g., immortality, making them wildly smarter, able to run many copies in parallel, experience insanely good experiences, etc. I don't think BOTECs will be a crux for this unless we start discounting things rather sharply.

- If GCRs actually are more cost-effective under a "current generations" worldview, then I question why EAs would donate to global health / animal charities (since this is no longer a question of "worldview diversification", just raw cost-effectiveness)

IMO, the main axis of variation for EA related cause prio is "how far down the crazy train do we go" not "person affecting (current generations) vs otherwise" (though views like person affecting ethics might be downstream of crazy train stops).

Mildly against the Longtermism --> GCR shift

Idk what I think about Longtermism --> GCR, but I do think that we shouldn't lose "the future might be totally insane" and "this might be the most important century in some longer view". And I could imagine focus on GCR killing a broader view of history.

[1] That said, if we literally just care about experiences which are somewhat continuous with current experiences, it's plausible that speeding up AI outcompetes reducing GCRs/AI risk. And it's plausible that there are more crazy sounding interventions which look even better (e.g. extremely low cost cryonics). Minimally the overall situation gets dominated by "have people survive until techno utopia and ensure that techno utopia happens". And the relative tradeoffs between having people survive until techno utopia and ensuring that techno utopia happens seem unclear and will depend on some more complicated moral view. Minimally, animal suffering looks relatively worse to focus on.

Vasco Grilo🔸 @ 2026-01-15T13:57 (+2)

Hi Tom.

From a longtermist (~totalist classical utilitarian) perspective, there's a huge difference between ~99% and 100% of the population dying, if humanity recovers in the former case, but not the latter. Just looking at GCRs on their own mostly misses this nuance.

I would be curious to know your thoughts on my post arguing that decreasing the risk of human extinction is not astronomically cost-effective.

From a longtermist (~totalist classical utilitarian) perspective, preventing a GCR doesn't differentiate between "humanity prevents GCRs and realises 1% of its potential" and "humanity prevents GCRs and realises 99% of its potential"

The same applies to preventing human extinction over a given period. Humans could go extinct just after the period, or go on to an astronomically valuable future, and I believe the former is much more likely.

From a longtermist (~suffering focused) perspective, reducing GCRs might be net-negative if the future is (in expectation) net-negative

This also applies to reducing the risk of human extinction.

Arepo @ 2023-11-29T18:42 (+2)

I've upvoted this comment, but weakly disagree that there's such a shift happening (EVF orgs still seem to be selecting pretty heavily for longtermist projects, the global health and development fund has been discontinued while the LTFF is still around etc), and quite strongly disagree that it would be bad if it is:

From a longtermist (~totalist classical utilitarian) perspective, there's a huge difference between ~99% and 100% of the population dying, if humanity recovers in the former case, but not the latter.

That 'if' clause is doing a huge amount of work here. In practice I think the EA community is far too sanguine about our prospects post-civilisational collapse of becoming interstellar (which, from a longtermist perspective, is what matters - not 'recovery'). I've written a sequence on this here, and have a calculator which allows you to easily explore the simple model's implications on your beliefs described in post 3 here, with an implementation of the more complex model available on the repo. As Titotal wrote in another reply, it's easy to believe 'lesser' catastrophes are many times more likely, so could very well be where the main expected loss of value lies.

From a longtermist (~totalist classical utilitarian) perspective, preventing a GCR doesn't differentiate between "humanity prevents GCRs and realises 1% of its potential" and "humanity prevents GCRs and realises 99% of its potential"

I think I agree with this, but draw a different conclusion. Longtermist work has focused heavily on existential risk, and in practice the risk of extinction, IMO seriously dropping the ball on trajectory changes with little more justification than that the latter are hard to think about. As a consequence they've ignored what seems to me the very real loss of expected unit-value from lesser catastrophes, and the to-me-plausible increase in it from interventions designed to make people's lives better (generally lumping those in as 'shorttermist'). If people are now starting to take other catastrophic risks more seriously, that might be remedied. (also relevant to your 3rd and 4th points)

From a "current generations" perspective, reducing GCRs is probably not more cost-effective than directly improving the welfare of people / animals alive today

This seems to be treating 'focus only on current generations' and 'focus on Pascalian arguments for astronomical value in the distant future' as the only two reasonable views. David Thorstad has written a lot, I think very reasonably, about reasons why expected value of longtermist scenarios might actually be quite low, but one might still have considerable concern for the next few generations.

From a general virtue ethics / integrity perspective, making this change on PR / marketing reasons alone - without an underlying change in longtermist motivation - feels somewhat deceptive.

Counterpoint: I think the discourse before the purported shift to GCRs was substantially more dishonest. Nanda and Alexander's posts argued that we should talk about x-risk rather than longtermism on the grounds that it might kill you and everyone you know - which is very misleading if you only seriously consider catastrophes that kill 100% of people, and ignore (or conceivably even promote) those that leave >0.01% behind (which, judging by Luisa Rodriguez's work is around the point beyond which EAs would typically consider something an existential catastrophe).

I basically read Zabel's post as doing the same, not as desiring a shift to GCR focus, but as desiring presenting the work that way, saying 'I’d guess that if most of us woke up without our memories here in 2022 [now 2023], and the arguments about potentially imminent existential risks were called to our attention, it’s unlikely that we’d re-derive EA and philosophical longtermism as the main and best onramp to getting other people to work on that problem' (emphasis mine).

Nanda, Alexander and Zabel's posts all left a very bad taste in my mouth for exactly that reason.

There's something fairly disorienting about the community switching so quickly from [quite aggressive] "yay longtermism!" (e.g. much hype around launch of WWOTF) to essentially disowning the word longtermism, with very little mention / admission that this happened or why

This is as much an argument that we made a mistake ever focusing on longtermism as that we shouldn't now shift away from it. Oliver Habryka (can't find link offhand) and Kelsey Piper are two EAs who've publicly expressed discomfort with the level of artificial support WWOTF received, and I'm much less notable, but happy to add myself to the list of people uncomfortable with the business, especially since at the time he was a trustee of the charity that was doing so much to promote his career.

harfe @ 2023-11-27T20:34 (+2)

Meta: this should not have been a quick take, but a post (references, structure, tldr, epistemic status, ...)

Elizabeth @ 2023-11-29T04:31 (+16)

This sounds like an accusation, when it could so easily have been a compliment. The net effect of comments like this is fewer posts and fewer quick takes.

harfe @ 2023-11-29T13:33 (+18)

I actually meant it as a compliment, thanks for pointing out that it can be received differently. I liked this "quick take" and believe it would have been a high-quality post.

I was not aware that my comment would reduce the number of quick takes and posts, but I feel deleting my comment now just because of the downvotes would also be weird. So, if anyone reads this and felt discouraged by the above, I hope you rather post your things somewhere rather than not at all.

Tom Barnes @ 2023-11-28T15:50 (+4)

Yeah that's fair. I wrote this somewhat off the cuff, but because it got more engagement than I expected, I'd make it a full post if I wrote it again

Tom Barnes @ 2023-10-31T19:28 (+68)

YouGov Poll on SBF and EA

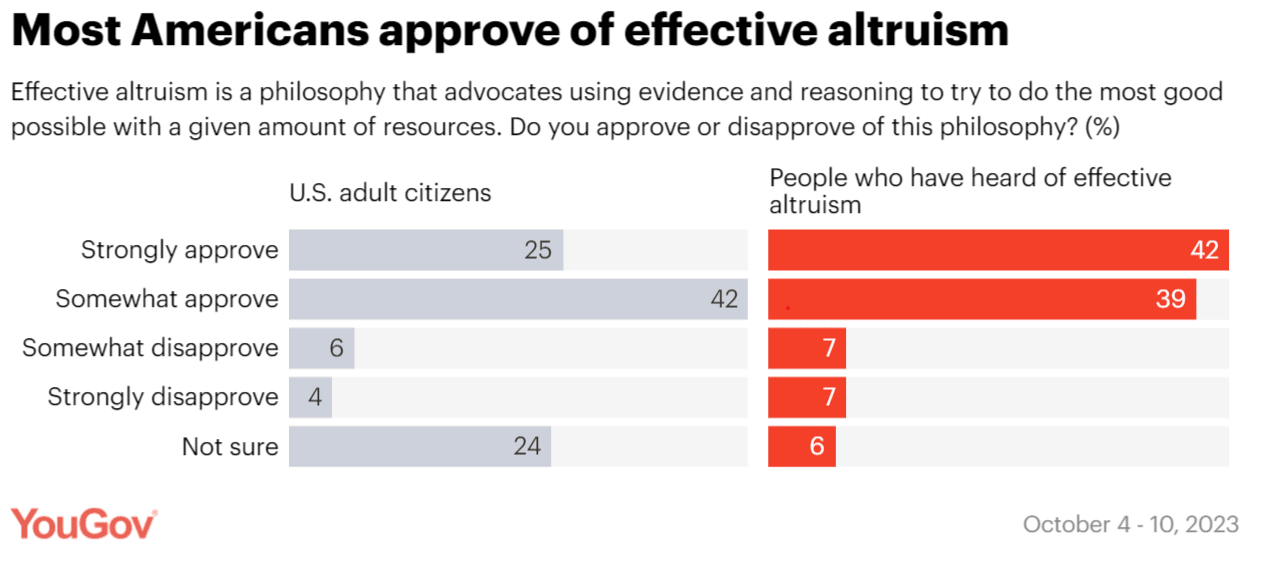

I recently came across this article from YouGov (published last week), summarizing a survey of US citizens for their opinions on Sam Bankman-Fried, Cryptocurrency and Effective Altruism.

I half-expected the survey responses to be pretty negative about EA, given press coverage and potential priming effects associating SBF with EA. So I was positively surprised that:

(it's worth noting that there were only ~1000 participants, and the survey was online only)

Sam Glover @ 2023-11-02T13:58 (+10)

I am very sceptical about the numbers presented in this article. 22% of US citizens have heard of Effective Altruism? That seems very high. RP did a survey in May 2022 and found that somewhere between 2.6% and 6.7% of the US population had heard of EA. Even then, my intuition was that this seemed high. Even with the FTX stuff it seems extremely unlikely that 22% of Americans have actually heard of EA.

Tom Barnes @ 2023-11-02T17:02 (+11)

Thanks - I just saw RP put out this post, which makes much the same point. Good to be cautious about interpreting these results!

Tom Barnes @ 2023-06-27T21:34 (+19)

Quick take: renaming shortforms to Quick takes is a mistake

Tom Barnes @ 2023-12-06T17:25 (+8)

A couple of weeks ago I blocked all mentions of "Effective Altruism", "AI Safety", "OpenAI", etc from my twitter feed. Since then I've noticed it become much less of a time sink, and much better for mental health. Would strongly recommend!

burner @ 2023-12-06T17:41 (+3)

throw e/acc on there too

Tom Barnes @ 2023-10-13T17:16 (+4)

Ten Project Ideas for AI X-Risk Prioritization

I made a list of 10 ideas I'd be excited for someone to tackle, within the broad problem of "how to prioritize resources within AI X-risk?" I won’t claim these projects are more / less valuable than other things people could be doing. However, I'd be excited if someone took a stab at them

10 Ideas:

- Threat Model Prioritization

- Country Prioritization

- An Inside-view timelines model

- Modelling AI Lab deployment decisions

- How are resources currently allocated between risks / interventions / countries

- How to allocate between “AI Safety” vs “AI Governance”

- Timing of resources to reduce risks

- Correlation between Safety and Capabilities

- How should we reason about prioritization?

- How should we model prioritization?

I wrote up a longer (but still scrappy) doc here