How many EA 2021 $s would you trade off against a 0.01% chance of existential catastrophe?

By Linch @ 2021-11-27T23:46 (+55)

I'm interested in how many 2021 $s you'd think it's rational for EA to be willing to trade (or perhaps the equivalent in human capital) against 0.01% (or 1 basis point) of existential risk.

This question is potentially extremely decision-relevant for EA orgs doing prioritization, like Rethink Priorities. For example, if we assign $X to preventing 0.01% of existential risk, and we take Toby Ord's figures on existential risk (pg. 167, The Precipice) at face value, then we should not prioritize asteroid risk (~1/1,000,000 risk this century) if all realistic interventions we could think of cost >>1% of $X, or prioritize climate change (~1/1,000 risk this century) if realistic interventions cost >>$10X, at least on direct longtermist grounds (though there might still be neartermist or instrumental reasons for doing this research).
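A minimal sketch of that threshold arithmetic, assuming (hypothetically) that an intervention would eliminate the entire risk category; realistic interventions avert only a fraction, so the worthwhile spend shrinks proportionally. The helper name `max_worthwhile_spend` and the choice to express amounts in units of $X are illustrative, not from the post.

```python
# Sketch: convert a per-century risk estimate into the most it would be worth
# spending to avert ALL of that risk, given a bar of X dollars per basis point.
BASIS_POINT = 1e-4  # 0.01% expressed as a probability


def max_worthwhile_spend(risk_averted: float, dollars_per_bp: float) -> float:
    """Spend at which averting `risk_averted` exactly meets the funding bar."""
    return (risk_averted / BASIS_POINT) * dollars_per_bp


X = 1.0  # express results in units of $X
asteroid = max_worthwhile_spend(1 / 1_000_000, X)  # Ord: ~1 in 1,000,000
climate = max_worthwhile_spend(1 / 1_000, X)       # Ord: ~1 in 1,000
print(f"asteroid: {asteroid:.2f}X, climate change: {climate:.0f}X")
```

This prints `asteroid: 0.01X, climate change: 10X`, i.e. the "1% of $X" and "$10X" thresholds above.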

To a lesser extent, it may be relevant for individuals considering whether it's better to earn-to-give vs contribute to existential risk reduction, whether in research or in real-world work.

Assume the money comes from a very EA-aligned (and not too liquidity-constrained) org like Open Phil.

Note: I hereby define existential risk the following way (see discussion in comments for why I used a non-standard definition):

Existential risk – A risk of catastrophe where an adverse outcome would permanently cause Earth-originating intelligent life's astronomical value to be <50% of what it would otherwise be capable of.

Note that extinction (0%) and maximally bad[1] s-risks (-100%) are special cases of <50%.

[1] assuming symmetry between utility and disutility

Linch @ 2021-11-28T00:08 (+22)

Here are my very fragile thoughts as of 2021/11/27:

Speaking for myself, I feel pretty bullish and comfortable saying that we should fund interventions that we have resilient estimates of reducing x-risk ~0.01% at a cost of ~$100M.

I think for time-sensitive grants of an otherwise similar nature, I'd also lean optimistic about grants costing ~$300M/0.01% of xrisk, but if it's not time-sensitive I'd like to see more estimates and research done first.

For work where we currently estimate ~$1B/0.01% of xrisk, I'd weakly lean against funding them right now, but think research and seed funding into those interventions is warranted, both for value of information reasons and also because we may have reasonable probability on more money flowing into the movement in the future, suggesting we can lower the bar for future funding. I especially believe this for interventions that are relatively clear-cut and whose arguments are simple, or that may otherwise be especially attractive to non(currently)-EA billionaires and governments, as we may have future access to non-aligned (or semi-aligned) sources of funding, with either additional research or early-stage infrastructure work that can then leverage more funding.

For work where we currently estimate >>$10B/0.01% of xrisk, I'd be against funding them and weakly lean against deep research into them, with important caveats including a) value of information and b) extremely high scale and/or clarity. For example, the "100T guaranteed plan to solve AI risk" seems like a research project worth doing and laying the foundations of, but not a project worth us directly funding, and I'd be bearish on EA researchers spending significant time on doing research for interventions at similar levels of cost-effectiveness for significantly smaller risks.

One update that has probably not propagated enough for me is a (fairly recent, to me) belief that longtermist EA has a much higher stock of human capital than financial capital. To the extent this is true, we may expect a lot of money to flow in in the future. So as long as we're not institutionally liquidity constrained, we/I may be systematically underestimating how many $s we should be willing to trade off against existential risk.

NunoSempere @ 2021-11-28T17:43 (+20)

*How* are you getting these numbers? At this point, I think I'm more interested in the methodologies of how to arrive at an estimate than about the estimates themselves

Linch @ 2021-11-28T21:43 (+5)

For the top number, I'm aware of at least one intervention* where people's ideas of whether it's a good idea to fund moved from "no" to "yes" in the last few years, without (AFAIK) particularly important new empirical or conceptual information. So I did a backwards extrapolation from that. For the next numbers, I a) considered that maybe I'm biased in favor of this intervention, so I'm rosier on the xrisk reduced here than others (cf optimizer's curse), and grantmakers probably have lower numbers on xrisks reduced and b) considered my own conception of how much capital may come to longtermist EA in the future, and decided that I'm probably rosier on our capital than these implied numbers will entail. Put another way, I started with a conservative-ish estimate, then updated down on how much xrisk we can realistically buy off for $X at current margins (which increases $/xrisk), then updated upwards on how much $s we can have access to (which also increases $/xrisk).

My friend started with all the funds available in EA, divided by the estimated remaining xrisk, and then applied some discount factor for marginal vs average interventions, and got some similar if slightly smaller numbers.

*apologies for the vagueness
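The friend's method can be sketched as follows. Every input is an assumption for illustration, not the friend's actual figures: $50B of funds (the rough total mentioned elsewhere in this thread), ~1/6 remaining x-risk (Ord's overall estimate from The Precipice), and a hypothetical 3x penalty for marginal versus average interventions.

```python
# Hypothetical inputs (assumptions, not the friend's real numbers).
total_funds = 50e9         # USD available to longtermist EA
remaining_xrisk = 1 / 6    # Ord's overall existential risk this century
marginal_penalty = 3.0     # assumed: a marginal $ buys 3x less risk reduction than the average $

BASIS_POINT = 1e-4
remaining_bp = remaining_xrisk / BASIS_POINT             # ~1,667 basis points
average_cost_per_bp = total_funds / remaining_bp         # average $ per basis point
marginal_cost_per_bp = average_cost_per_bp * marginal_penalty
print(f"average: ${average_cost_per_bp / 1e6:.0f}M/bp, marginal: ${marginal_cost_per_bp / 1e6:.0f}M/bp")
```

With these made-up inputs the average comes to ~$30M per basis point and the marginal figure to ~$90M; the answer scales linearly with each assumption.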

JacktheHawk @ 2022-08-06T06:03 (+1)

You said 'I'm aware of at least one intervention* where people's ideas of whether it's a good idea to fund moved from "no" to "yes" in the last few years'. Would you be able to provide the source of this please?

Linch @ 2022-08-06T08:40 (+2)

No, sorry!

WilliamKiely @ 2021-11-28T09:43 (+8)

Speaking for myself, I feel pretty bullish and comfortable saying that we should fund interventions that we have resilient estimates of reducing x-risk ~0.01% at a cost of ~$100M.

Do you similarly think we should fund interventions that we have resilient estimates of reducing x-risk ~0.00001% at a cost of ~$100,000? (i.e. the same cost-effectiveness)

Linch @ 2021-11-28T12:09 (+5)

Yep, though I think "resilient" is doing a lot of the work. In particular:

- I don't know how you can get robust estimates that low.

- EA time is nontrivially expensive around those numbers, not just doing the intervention but also identifying the intervention in the first place and the grantmaker time to evaluate it, so there aren't many times where ~0.00001% risk reductions will organically come up.

The most concrete thing I can think of is in asteroid risk: if we take Ord's estimate of 1/1,000,000 risk this century literally, and we identify a cheap intervention that we think can a) avert 10% of asteroid risk, b) cost only $100,000, c) be implemented by a non-EA with relatively little oversight, and d) have negligible downside risks, then I'd consider this a pretty good deal.
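The asteroid example's cost-effectiveness can be checked with a small helper (`cost_per_basis_point` is a hypothetical name). Taking Ord's 1/1,000,000 figure literally, as the comment does, the $100,000 intervention lands at the same ~$100M per basis point as the bar stated earlier in the thread.

```python
BASIS_POINT = 1e-4  # 0.01% as a probability


def cost_per_basis_point(cost: float, absolute_risk_reduction: float) -> float:
    """Dollars spent per 0.01 percentage point of existential risk averted."""
    return cost / (absolute_risk_reduction / BASIS_POINT)


asteroid_risk = 1 / 1_000_000          # Ord's per-century estimate, taken literally
reduction = 0.10 * asteroid_risk       # intervention averts 10% of it (1e-7)
cpbp = cost_per_basis_point(100_000, reduction)
print(f"${cpbp:,.0f} per basis point")  # about $100M
```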

Linch @ 2021-11-28T00:29 (+6)

An LTFF grantmaker I informally talked to gave similar numbers

MichaelA @ 2021-11-30T16:07 (+2)

Could you say whether this was Habryka or not? (Since Habryka has now given an answer in a separate comment here, and it seems a bit good to know whether those are the same data point twice or not. Habryka's number seems a factor of 3-10 off of yours, but I'd call that "similar" in this context.)

Linch @ 2021-12-01T00:02 (+2)

(It was not)

RyanCarey @ 2021-11-28T17:33 (+4)

To what degree do you think the x-risk research community (of ~100 people) collectively decreases x-risk? If you answered this, you would have roughly estimated the value of an average x-risk researcher.

mtrazzi @ 2021-11-28T23:50 (+4)

To make that question more precise, we're trying to estimate xrisk_{counterfactual world without those people} - xrisk_{our world}, with xrisk_{our world}~1/6 if we stick to The Precipice's estimate.

Let's assume that the x-risk research community completely vanishes right now (including the past outputs, and all the research it would have created). It's hard to quantify, but I would personally be at least twice as worried about AI risk as I am right now (I am unsure about how much it would affect nuclear/climate change/natural disasters/engineered pandemics risk and other risks).

Now, how much of the "community" was actually funded by "EA $"? How much of those researchers would not be capable of the same level of output without the funding we currently have? How much of the x-risk reduction is actually done by our impact in the past (e.g. new sub-fields of x-risk research being created, where progress is now (indirectly) being made by people outside of the x-risk community) vs. researcher hours today? What fraction of those researchers would still be working on x-risk on the side even if their work wasn't fully funded by "EA $"?

Linch @ 2022-09-21T18:38 (+3)

EDIT 2022/09/21: The 100M-1B estimates are relatively off-the-cuff and not very robust; I think there are good arguments to go higher or lower. I think the numbers aren't crazy, partially because others independently came to similar numbers (but some people I respect have different numbers). I don't think it's crazy to make decisions/defer roughly based on these numbers given limited time and attention. However, I'm worried about having too much secondary literature/large decisions based on my numbers, since it will likely result in information cascades. My current tentative guess as of 2022/09/21 is that there are more reasons to go higher (think averting x-risk is more expensive) than lower. However, overspending on marginal interventions is more -EV than underspending, which pushes us to bias towards conservatism.

Mathieu Putz @ 2021-11-28T10:12 (+1)

I assume those estimates are for current margins? So if I were considering whether to do earning to give, I should use lower estimates for how much risk reduction my money could buy, given that EA has billions to be spent already and due to diminishing returns your estimates would look much worse after those had been spent?

Linch @ 2021-11-28T11:38 (+2)

Yes it's about marginal willingness to spend, not an assessment of absolute impact so far.

Khorton @ 2021-11-28T15:01 (+20)

With 7 billion people alive today, and our current most cost effective interventions for saving a life in the ballpark of $5000 USD, then with perfect knowledge we should probably be willing to spend up to ~$3.5 billion USD to reduce the risk of extinction or similar this century by 0.01 percentage points even if you're not all that concerned about future people. Is my math right?

(Of course, we don't have perfect knowledge and there are other reasons why people might prefer to invest less.)
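Khorton's arithmetic can be reproduced directly from the figures in the comment (7 billion people, ~$5,000 per life saved), counting only present lives:

```python
population = 7_000_000_000   # people alive today (figure from the comment)
cost_per_life = 5_000        # USD per life saved by top charities, ballpark
risk_reduction = 1e-4        # 0.01 percentage points

lives_saved = population * risk_reduction        # expected present lives saved
break_even_spend = lives_saved * cost_per_life   # spend matching top-charity value
print(f"{lives_saved:,.0f} expected lives -> ${break_even_spend / 1e9:.1f}B")
```

This gives 700,000 expected lives and ~$3.5B. With the 8 billion population figure used in the replies, the same calculation gives $4B.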

Linch @ 2021-11-28T15:26 (+10)

Reasons to go higher:

- We may believe that existential risk is astronomically bad, such that killing 8 billion people is much worse than 2x as bad as killing 4 billion people.

Reasons to go lower:

- Certain interventions in reducing xrisk may save a significantly lower number of present people's lives than 8 billion, for example much work in civilizational resilience/recovery, or anything that has a timeline of >20 years for most of the payoff.

- As a practical matter, longtermist EA has substantially less money than implied by these odds.

Khorton @ 2021-11-28T16:38 (+5)

Agreed on all points

Linch @ 2021-11-28T15:08 (+2)

by 0.1 percentage points

Did you mean 0.01%?

Khorton @ 2021-11-28T16:37 (+2)

Yes sorry, edited!

Khorton @ 2021-11-28T15:02 (+2)

Around $3 billion also sounds intuitively about right compared to other things governments are willing to spend money on.

Linch @ 2021-11-28T15:20 (+3)

Governments seem way more inefficient to me than this.

Habryka @ 2021-11-29T07:44 (+13)

My very rough gut estimate says something like $1B. Probably more on the margin, just because we seem to have plenty of money and are more constrained on other problems. I think we are currently funding projects that are definitely more cost-effective than that, but I see very little hope in scaling them up drastically while preserving their cost-effectiveness, and so spending $1B on 0.01% seems pretty reasonable to me.

WilliamKiely @ 2021-11-28T19:36 (+10)

I thought it would be interesting to answer this using a wrong method (writing the bottom line first). That is, I made an assumption about what the cost-effectiveness of grants aimed at reducing existential risk is, then calculated how much it would cost to reduce existential risk by one basis point if that cost-effectiveness was correct.

Specifically, I assumed that Ben Todd’s expectation that Long-Term Future Fund grants are 10-100x as cost-effective as Global Health and Development Fund grants is correct:

I would donate to the Long Term Future Fund over the global health fund, and would expect it to be perhaps 10-100x more cost-effective (and donating to global health is already very good).

I made the further assumptions that:

- Global Health and Development Fund (GH) grants are as cost-effective as GiveWell’s top charities in expectation

- Global Health grants are 8x as cost effective as GiveDirectly

- GiveWell’s estimate that “donating $4,250 to a charity that’s 8x GiveDirectly is as good as saving the life of a child under five” (Ben Todd’s words) is correct.

- All existential catastrophes are extinction events.

- Long-Term Future Fund grants do good via reducing the probability of an extinction event in the next 100 years.

- The only good counted towards Long-Term Future Fund grant cost-effectiveness is the human lives directly saved from dying in the extinction event in the next century. (The value of preserving the potential for future generations is ignored, as is the value of saving lives from any non-extinction level global catastrophic risk reduction.)

- Saving the lives of people then-alive from dying in an extinction event in the next 100 years is as valuable on average as saving the life of a child under five.

- If an extinction event happens in the next century, 8 billion people will die.

From these assumptions, it follows that:

- Donating to the LTFF is 10-100x as cost-effective as donating to the GHDF and 80-800x as cost-effective as donating to GiveDirectly.

- Donating $4,250 to the LTFF does as much good as saving the lives of 10-100 people from dying in an extinction event in the next 100 years.

- Reducing the risk of human extinction in the next century by 0.01% is equivalent to saving the lives of 800,000 people.

- It costs $34M-$340M (i.e. ~$100M) (donated to the LTFF) to reduce the probability of extinction in the next 100 years by 0.01% (one basis point). (Math: $4,250 * 800,000/100 to $4,250 * 800,000/10.)
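The chain of assumptions above reduces to a few multiplications. A sketch reproducing the $34M-$340M range; the geometric mean is just one assumed way to collapse the range to the "~$100M" point estimate.

```python
import math

dollars_per_life = 4_250             # GiveWell: $4,250 at 8x GiveDirectly ~ one child's life
ltff_multipliers = (10, 100)         # Ben Todd: LTFF is 10-100x the GHD Fund
deaths_in_extinction = 8_000_000_000
BASIS_POINT = 1e-4

lives_per_bp = deaths_in_extinction * BASIS_POINT   # 800,000 lives per basis point
# $4,250 to the LTFF buys m lives, so a basis point costs $4,250 * 800,000 / m.
costs = [dollars_per_life * lives_per_bp / m for m in ltff_multipliers]
low, high = min(costs), max(costs)
geo_mean = math.sqrt(low * high)
print(f"${low / 1e6:.0f}M-${high / 1e6:.0f}M per basis point (geometric mean ~${geo_mean / 1e6:.0f}M)")
```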

To reiterate, this is not the right way to figure out how much it costs to reduce existential risk.

I worked backwards from Ben Todd’s statement of his expectations about the cost-effectiveness of grants to the LTFF, making the assumption that his cost-effectiveness estimate is correct, and further assuming that those grants are valuable only due to their effect on saving lives from dying in extinction events in the next century.

In reality, those grants may also save people from dying in non-extinction-level global catastrophes, which would increase the estimate of the cost to reduce extinction risk by one basis point. Further, there is also a lot of value in how preventing extinction preserves the potential for having future generations. If Ben Todd’s 10-100x expectation included this preserving-future-generations value, then that too would increase the estimate of the cost to reduce extinction risk by one basis point.

NunoSempere @ 2021-11-28T18:33 (+9)

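Just to complement Khorton's answer: With a discount rate of \(r\) [1], a steady-state population of \(N\), and a willingness to pay of \(X\) dollars per person per year, the total value of the future is \(\sum_{n=0}^{\infty} N X r^{n} = \frac{N X}{1 - r}\), so the willingness to pay for 0.01% of it would be \(10^{-4} \cdot \frac{N X}{1 - r}\).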

This discount rate might be because you care about future people less, or because you expect a yearly probability of roughly \(1 - r\) of pretty much unavoidable existential catastrophe going forward.

Some reference values

- \(N = 10^{10}\) (10 billion): willingness to pay for 0.01% risk reduction comes out to about $333 billion.

- \(N = 7 \times 10^{9}\) (7 billion): willingness to pay for 0.01% risk reduction comes out to about $70 billion.

I notice that from the perspective of a central world planner, my willingness to pay would be much higher (because my intrinsic discount rate is closer to ~0%). Taking \(r\) close to 1:

- \(N = 10^{10}\) (10 billion): willingness to pay for 0.01% risk reduction comes out to about $100 trillion.
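The steady-state formula can be sketched in a few lines. The values of X and r below are hypothetical choices (X = $10,000 per person-year throughout) that happen to reproduce the three totals quoted above; they are not necessarily the parameters used in the comment.

```python
BASIS_POINT = 1e-4


def wtp_per_basis_point(population: float, x_per_person_year: float, r: float) -> float:
    """Willingness to pay for 0.01% of a steady-state future.

    Year n is weighted r**n, so the geometric series sums to N * X / (1 - r).
    Only valid for 0 <= r < 1 (r = 1 would make the total infinite).
    """
    if not 0 <= r < 1:
        raise ValueError("r must satisfy 0 <= r < 1")
    return BASIS_POINT * population * x_per_person_year / (1 - r)


# Hypothetical parameters, chosen to reproduce the quoted totals.
cases = {
    "N=10B, r=0.97": wtp_per_basis_point(10e9, 10_000, 0.97),
    "N=7B, r=0.90": wtp_per_basis_point(7e9, 10_000, 0.90),
    "N=10B, r=0.9999": wtp_per_basis_point(10e9, 10_000, 0.9999),
}
for label, value in cases.items():
    print(f"{label}: ${value / 1e9:,.0f}B")
```

The last case prints $100,000B, i.e. the $100 trillion central-planner figure; moving r from 0.97 to 0.9999 changes the answer by a factor of 300, which echoes robirahman's point about sensitivity to the discount rate.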

To do:

- The above might be the right way to model willingness to pay for going from 0.02% risk per year to 0.01% risk per year. But with, e.g., 3% remaining risk per year, willingness to pay is lower, because over the long run we all die sooner.

- E.g., reducing risk from 0.02% per year to 0.01% per year is much more valuable than reducing risk from 50.1% to 50%.
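One way to see the to-do point, under a deliberately simple assumption that is not the Guesstimate model's: a constant annual extinction probability p, no discounting, and every surviving year equally valuable. Survival to year n then has probability (1 - p)^n, and summing over n >= 0 gives 1/p expected years.

```python
def expected_future_years(annual_risk: float) -> float:
    """Expected years survived under a constant annual extinction probability p.

    P(survive to year n) = (1 - p)**n; summing over n >= 0 gives 1 / p.
    """
    if not 0 < annual_risk <= 1:
        raise ValueError("annual_risk must be in (0, 1]")
    return 1 / annual_risk


small = expected_future_years(0.0001) - expected_future_years(0.0002)  # 0.02% -> 0.01%
large = expected_future_years(0.500) - expected_future_years(0.501)    # 50.1% -> 50%
print(f"0.02% -> 0.01%: +{small:,.0f} years; 50.1% -> 50%: +{large:.4f} years")
```

In this toy model the first reduction adds 5,000 expected years and the second adds about 0.004, even though both cut annual risk by the same 0.01 to 0.1 percentage points.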

[1]: Where you value the \(n\)-th year in the steady state at \(r^{n}\) of the value of the first year. If you don't value future people, the discount rate might be close to \(0\); if you do value them, it might be close to \(1\).

NunoSempere @ 2021-11-28T19:15 (+4)

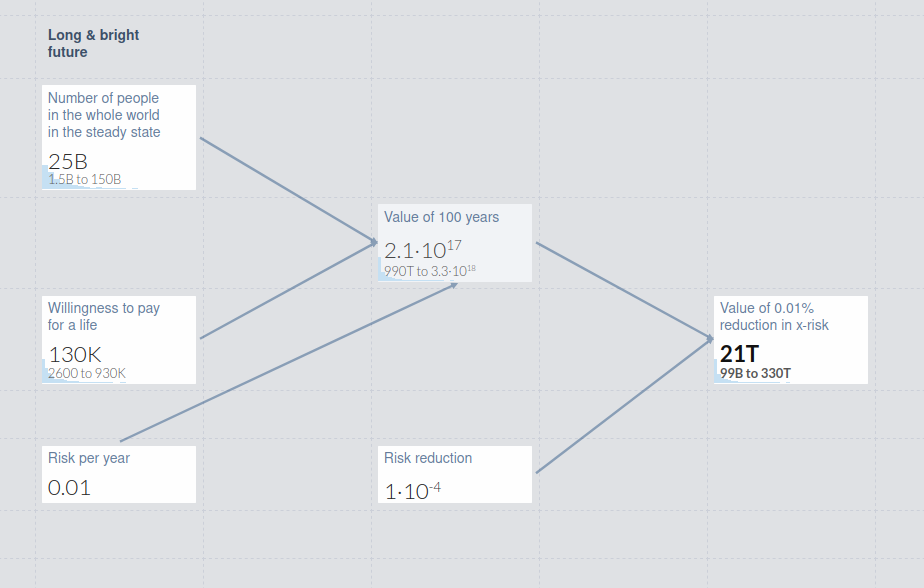

Here is a Guesstimate which calculates this in terms of a one-off 0.01% existential risk reduction over a century.

NunoSempere @ 2021-11-28T19:02 (+4)

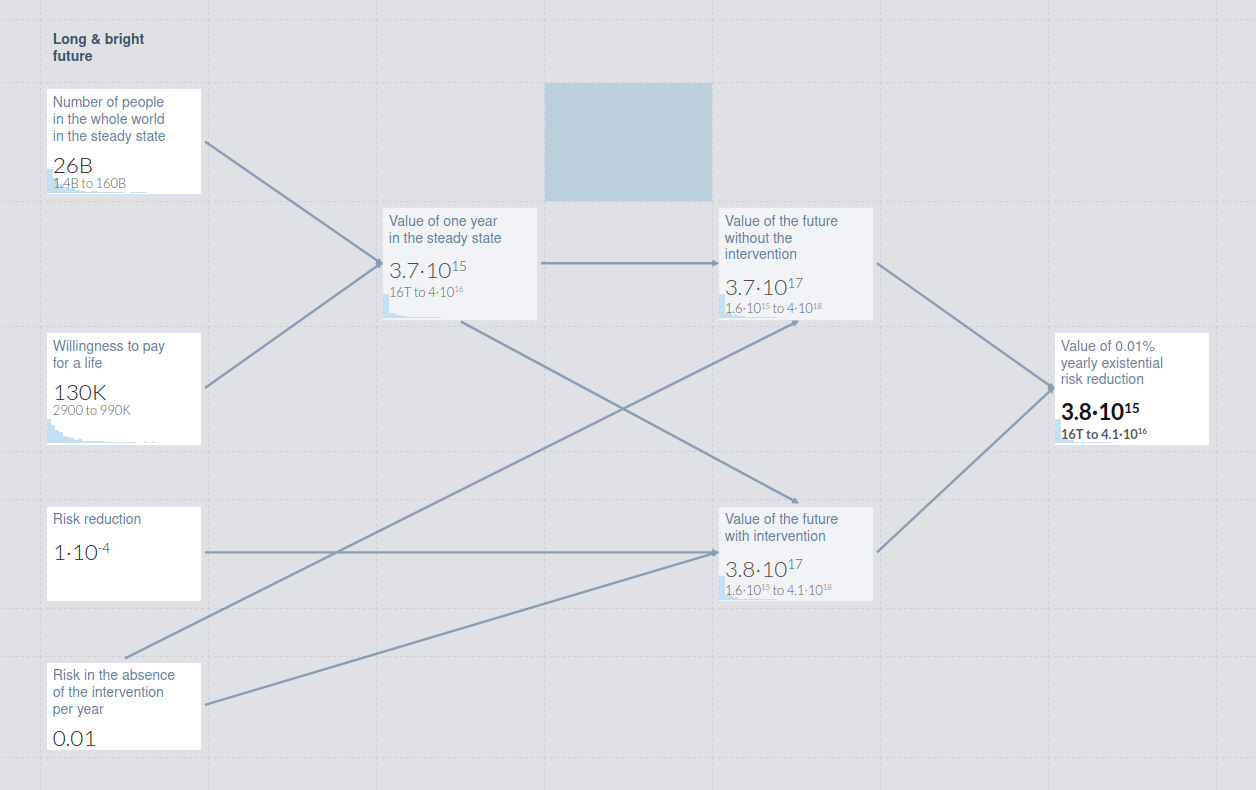

Here is a Guesstimate model which addresses the item on the to-do list. Note that in this guesstimate I'm talking about a -0.01% yearly reduction.

robirahman @ 2021-11-30T18:48 (+3)

The steady-state population assumption is my biggest objection here. Everything you've written is correct yet I think that one premise is so unrealistic as to render this somewhat unhelpful as a model. (And as always, NPV of the eternal future varies a crazy amount even within a small range of reasonable discount rates, as your numbers show.)

NunoSempere @ 2021-12-01T12:27 (+2)

For what it's worth, I don't disagree with you, though I do think that the steady state is a lower bound of value, not an upper bound.

NunoSempere @ 2021-11-28T20:05 (+3)

Thinking more about this, these are more of an upper bound, which don't bind because you can probably buy a 0.01% risk reduction per year much cheaper. So the parameter to estimate would be more like 'what are the other cheaper interventions'

Linch @ 2021-11-28T00:14 (+7)

In Ajeya Cotra's interview with 80,000 Hours, she says:

This estimate is roughly $200 trillion per world saved, in expectation. So, it’s actually like billions of dollars for some small fraction of the world saved, and dividing that out gets you to $200 trillion per world saved

This suggests a funding bar of $200T / 10,000 ≈ $20B for every 0.01% of existential risk.

These numbers seem very far from my own estimates for what marginal $s can do or the (AFAICT) apparent revealed preferences of Open Phil's philanthropic spending, so I find myself very confused about the discrepancy.

Zach Stein-Perlman @ 2021-11-28T05:00 (+10)

Cotra seems to be specifically referring to the cost-effectiveness of "meta R&D to make responses to new pathogens faster." She/OpenPhil sees this as "conservative," a "lower bound" on the possible impact of marginal funds, and believes "AI risk is something that we think has a currently higher cost effectiveness." I think they studied this intervention just because it felt robust and could absorb a lot of money.

The goal of this project was just to reduce uncertainty on whether we could [effectively spend lots more money on longtermism]. Like, say the longtermist bucket had all of the money, could it actually spend that? We felt much more confident that if we gave all the money to the near-termist side, they could spend it on stuff that broadly seemed quite good, and not like a Pascal’s mugging. We wanted to see what would happen if all the money had gone to the longtermist side.

So I expect Cotra is substantially more optimistic than $20B per basis point. That number is potentially useful as a super-robust minimum-marginal-value-of-longtermist-funds to compare to short-term interventions, and that's apparently what OpenPhil wanted it for.

CarlShulman @ 2021-11-28T23:33 (+9)

This is correct.

Linch @ 2021-11-29T22:19 (+2)

Thanks! Do you or others have any insight on why having a "lower bound" is useful for a "last dollar" estimation? Naively, having a tight upper bound is much more useful (so we're definitely willing to spend $s on any intervention that's more cost-effective than that).

Zach Stein-Perlman @ 2021-11-29T22:30 (+3)

I don't think it's generally useful, but at the least it gives us a floor for the value of longtermist interventions. $20B per basis point is far from optimal but still blows eg GiveWell out of the water, so this number at least tells us that our marginal spending should be on longtermism over GiveWell.

Linch @ 2021-11-29T22:41 (+5)

but still blows eg GiveWell out of the water

Can you elaborate on your reasoning here?

~$20 billion × 10,000 / ~8 billion people ≈ $25,000 per life saved. This seems ~5x worse than AMF (iirc) if we only care about present lives, done very naively. Now of course xrisk reduction efforts save older people, happier(?) people in richer countries, etc., so the case is not clear-cut that AMF is better. But it's like, maybe a similar OOM?

(Though this very naive model would probably point to xrisk reduction being slightly better than GiveDirectly, even at 20B/basis point, even if you only care about present people, assuming you share Cotra's empirical beliefs and you don't have intrinsic discounting for uncertainty)
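The naive per-life conversion can be sketched as below. The ~$5,000 cost per life for AMF is an assumption borrowed from Khorton's ballpark elsewhere in the thread, and only present people (8 billion) are counted, as in the comment.

```python
world_value = 200e12       # Cotra: ~$200 trillion per world saved
BASIS_POINT = 1e-4
population = 8e9           # present people only
amf_cost_per_life = 5_000  # assumption: ballpark top-charity cost per life

bar_per_bp = world_value * BASIS_POINT                           # ~$20B per 0.01%
cost_per_present_life = bar_per_bp / (population * BASIS_POINT)  # the basis point cancels out
ratio = cost_per_present_life / amf_cost_per_life
print(f"${bar_per_bp / 1e9:.0f}B/bp -> ${cost_per_present_life:,.0f} per life, ~{ratio:.0f}x AMF's cost")
```

Because the basis point cancels, the per-life figure is simply $200T divided by 8 billion people, so it does not depend on the size of the risk reduction.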

Now I will probably prefer 20B/basis point over AMF, because I like to take ideas seriously and longtermism is one of the ideas I take seriously. But this seems like a values claim, not an empirical one.

Zach Stein-Perlman @ 2021-11-29T23:15 (+4)

Hmm, fair. I guess the kind of people who are unpersuaded by speculative AI stuff might also be unswayed by the scope of the cosmic endowment.

So I amend my takeaway from the OpenPhil number to: people who buy that the long-term future matters a lot (mostly normative) should also buy that longtermism can absorb at least $10B highly effectively (mostly empirical).

Charles He @ 2021-11-28T00:39 (+1)

These numbers seem very far from my own estimates for what marginal $s can do or the (AFAICT) apparent revealed preferences of Open Phil's philanthropic spending, so I find myself very confused about the discrepancy.

I don't understand. Total willingness to pay, and the belief of the marginal impact of $, are different things.

Also, when buying things, we often spend a small fraction of our total willingness to pay (e.g. imagine paying even 10% of the max value for transportation or healthcare each time).

We are accustomed to paying a fraction of our willingness to pay and also it's how usually things work out. For preventative measures like x-risk, we might also expect this fraction to be low, because it benefits from planning and optimization.

Your own comment here, and Ben Todd's messaging, say that EA spending is limited by top talent that can build and deploy new large-scale institutions in x-risk.

I feel like I'm missing something or talking past you?

Linch @ 2021-11-28T00:47 (+2)

Total willingness to pay, and the belief of the marginal impact of $, are different things.

Maybe I'm missing something, but I'm assuming a smooth-ish curve for things people are just barely willing to fund vs are just barely unwilling to fund.

My impression is that longtermist things just below that threshold have substantially higher naive cost-effectiveness than 20B/0.01% of xrisk.

It's easier for me to see this for longtermist interventions other than AI risk, since AI risk is very confusing. A possible explanation for the discrepancy is that maybe grantmakers are much less optimistic (like by 2 orders of magnitude on average) about non-AI xrisk reduction measures than I am. For example, one answer that I sometimes hear (~never from grantmakers) is that people don't really think of xrisks outside of AI as a thing. If this is the true rejection, I'd a) consider it something of a communications failure in longtermism and b) note that we are then allocating human capital pretty inefficiently.

Also, when buying things, we often spend a small fraction of our total willingness to pay (e.g. imagine paying even 10% of the max value for transportation or healthcare each time).

You're the economist here, but my understanding is that the standard argument for this is that people spend $s until marginal spending per unit of goods equals marginal utility of that good. So if we value the world at 200T EA dollars, we absolutely should spend $s until it costs 20B in $s (or human capital-equivalents) per basis point of xrisks averted.

Charles He @ 2021-11-28T01:08 (+1)

Maybe I'm missing something, but I'm assuming a smooth-ish curve for things people are just barely willing to fund vs are just barely unwilling to fund.

My impression is that longtermist things just below that threshold have substantially higher naive cost-effectiveness than 20B/0.01% of xrisk.

Ok, I am confused and I don't immediately know where the above reply fits in.

Resetting things and zooming out to the start:

Your question seems to be talking about two different things:

- Willingness to pay to save the world ("E.g. 0.01% of 200T")

- The actual spending and near-term plans of 2021 EA's willingness to spend on x-risk related programs, e.g. "revealed preferences of Open Phil's philanthropic spending" and other grantmakers as you mentioned.

Clearly these are different things because EA is limited by money. Importantly, we believe EA might have $50B (and as little as $8B before Ben Todd's 2021 update). So that's not $200T and not overly large next to the world. For example, I think annual bank overdraft fees in the US alone are like ~$10B or something.

Would the following help to parse your discussion?

- If you want to talk about spending priorities right now with $50B (or maybe much more as you speculate, but still less than 200T), that makes sense.

- If you want to talk about what we would spend if Linch or Holden were the central planner of the world and allocating money to x-risk reduction, that makes sense too.

Linch @ 2021-11-28T01:18 (+2)

Would the following help to parse your discussion?

- If you want to talk about spending priorities right now with $50B (or maybe much more as you speculate, but still less than 200T), that makes sense.

- If you want to talk about what we would spend if Linch or Holden were the central planner of the world and allocating money to x-risk reduction, that makes sense too.

I was referring to #1. I don't think fantasizing about being a central planner of the world makes much sense. I also thought #1 was what Open Phil was referring to when they talk about the "last dollar project", though it's certainly possible that I misunderstood (for starters I only skim podcasts and never read them in detail).

Charles He @ 2021-11-28T01:35 (+1)

I was referring to #1. I don't think fantasizing about being a central planner of the world makes much sense. I also thought #1 was what Open Phil was referring to when they talk about the "last dollar project", though it's certainly possible that I misunderstood (for starters I only skim podcasts and never read them in detail).

Ok, this makes perfect sense. Also this is also my understanding of "last dollar".

My very quick response, which may be misinformed, is that Open Phil is solving some constrained spending problem with between $4B and $50B of funds (e.g. the lower number being half of the EA funds before Ben Todd's update and the higher number being estimates of current funds).

Basically, in many models, the best path is going to be some fraction, say 3-5% of the total endowment each year (and there are reasons why it might be lower than 3%).

There's no reason why this fraction or $ amount rises with the total value of the earth, e.g. even if we add the entire galaxy, we would spend the same amount.

Is this getting at your top level comment "I find myself very confused about the discrepancy"?

I may have missed something else.
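The two views in this exchange can be put side by side in a toy model: a funder ranks options by cost per basis point and keeps funding while (a) the marginal cost stays under the value-based bar and (b) money remains. Greedy ranking is a simplification of what is really a knapsack-style problem, and the interventions and dollar figures are entirely hypothetical.

```python
from dataclasses import dataclass

BASIS_POINT = 1e-4


@dataclass
class Intervention:
    name: str
    cost: float            # USD
    risk_reduction: float  # absolute probability of catastrophe averted

    @property
    def cost_per_bp(self) -> float:
        return self.cost / (self.risk_reduction / BASIS_POINT)


def fund(options: list[Intervention], bar_per_bp: float, budget: float) -> list[str]:
    """Greedy sketch: fund the most cost-effective options first, stopping at the bar."""
    funded = []
    for item in sorted(options, key=lambda i: i.cost_per_bp):
        if item.cost_per_bp > bar_per_bp:
            break      # every remaining option is worse than the bar
        if item.cost > budget:
            continue   # unaffordable now; a cheaper (if less effective) one may still fit
        budget -= item.cost
        funded.append(item.name)
    return funded


# Hypothetical options, each averting one basis point.
options = [
    Intervention("A", 50e6, 1e-4),   # $50M per basis point
    Intervention("B", 300e6, 1e-4),  # $300M per basis point
    Intervention("C", 5e9, 1e-4),    # $5B per basis point
    Intervention("D", 40e9, 1e-4),   # $40B per basis point
]
generous = fund(options, bar_per_bp=20e9, budget=50e9)  # the bar binds
tight = fund(options, bar_per_bp=20e9, budget=1e9)      # the budget binds
print(generous, tight)
```

With a $1B budget only A and B get funded even though C is far under the $20B bar, which is one way a funder's revealed marginal bar can sit well below the value-based one.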

Simon Skade @ 2021-12-02T21:46 (+6)

I think reducing x-risk is by far the most cost-effective thing we can do, and in an adequate world all our efforts would be flowing into preventing x-risk.

The utility of 0.01% x-risk reduction is many orders of magnitude greater than the global GDP, and even if you don't care at all about future people, you should still be willing to pay a lot more than currently is paid for 0.01% x-risk reduction, as Khorton's answer suggests.

But of course, we should not be willing to trade so much money for that x-risk reduction, because we can invest the money more efficiently to reduce x-risk even more.

So when we make the quite reasonable assumption that reducing x-risk is much more effective than doing anything else, the amount of money we should be willing to trade should only depend on how much x-risk we could otherwise reduce through spending that amount of money.

To find the answer to that, I think it is easier to consider the following question:

How much more likely is an x-risk event in the next 100 years if EA loses X dollars?

When you find the X that causes a difference in x-risk of 0.01%, the X is obviously the answer to the original question.

I only consider x-risk events in the next 100 years, because I think it is extremely hard to estimate how likely x-risk more than 100 years into the future is.

Consider (for simplicity) that EA currently has 50B$.

Now answer the following questions:

How much more likely is an x-risk event in the next 100 years if EA loses 50B$?

How much more likely is an x-risk event in the next 100 years if EA loses 0$?

How much more likely is an x-risk event in the next 100 years if EA loses 20B$?

How much more likely is an x-risk event in the next 100 years if EA loses 10B$?

How much more likely is an x-risk event in the next 100 years if EA loses 5B$?

How much more likely is an x-risk event in the next 100 years if EA loses 2B$?

Consider answering those questions for yourself before scrolling down and looking at my estimated answers, which may be quite wrong. It would be interesting if you also commented with your estimates.

The x-risk from EA losing 0$ to 2B$ should increase approximately linearly, so if \(p_{0}\) is the x-risk if EA loses 0$ and \(p_{2B}\) is the x-risk if EA loses 2B$, you should be willing to pay \(\frac{0.01\%}{p_{2B} - p_{0}} \times 2\text{B\$}\) for a 0.01% x-risk reduction.

(Long sidenote: I think that if EA loses money right now, it does not significantly affect the likelihood of x-risk more than 100 years from now. So if you want to get your answer for the "real" x-risk reduction, and you estimate a chance \(q\) of an x-risk event that happens strictly after 100 years, you should multiply your answer by \(\frac{1}{1 - q}\) to get the amount of money you would be willing to spend for real x-risk reduction. However, I think it may even make more sense to talk about x-risk as the risk of an x-risk event that happens in the reasonably soon future (i.e. 100-5000 years), instead of thinking about the extremely long-term x-risk, because there may be a lot we cannot foresee yet and we cannot really influence that anyways, in my opinion.)

Ok, so here are my numbers to the questions above (in that order):

17%, 10%, 12%, 10.8%, 10.35%, 10.13%

So I would pay \(\frac{0.01\%}{10.13\% - 10\%} \times 2\text{B\$} \approx 154\text{M\$}\) for a 0.01% x-risk reduction.
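The linear-interpolation step can be reproduced from the estimates in this comment. As an illustration (not part of the comment's argument), the sketch also applies the same formula to the larger losses, showing the implied price per 0.01% falling as the hypothetical loss grows, which is consistent with using the smallest loss, 2B$, for the marginal estimate.

```python
baseline = 10.0  # % chance of an x-risk event in the next 100 years if EA loses 0$
# Estimates from the comment: dollars lost -> % x-risk in the next 100 years
estimates = {2e9: 10.13, 5e9: 10.35, 10e9: 10.8, 20e9: 12.0, 50e9: 17.0}

# Linear interpolation from 0$: dollars per 0.01 percentage points of added risk.
results = {loss: loss * 0.01 / (risk - baseline) for loss, risk in estimates.items()}
for loss, per_bp in results.items():
    print(f"lose {loss / 1e9:.0f}B$ -> {per_bp / 1e6:.0f}M$ per 0.01%")
```

The first line gives the ~154M$ figure; the 50B$ point implies only ~71M$ per 0.01%.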

Note that I do think that there are even more effective ways to reduce x-risk, and in fact I suspect most things longtermist EA is currently funding have a higher expected x-risk reduction than 0.01% per 154M$. I just don't think that it is likely that the 50 billionth 2021 dollar EA spends has a much higher effectiveness than 0.01% per 154M$, so I think we should grant everything that has a higher expected effectiveness.

I hope we will be able to afford to spend many more future dollars to reduce x-risk by 0.01%.
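The arithmetic in this answer can be checked with a short Python sketch. It assumes, as the answer does, that x-risk rises linearly between losing 0$ and losing 2B$. The function name and the optional `later_risk` adjustment (one reading of the sidenote, dividing by 1 - q) are illustrative assumptions, not the author's own code.

```python
BASIS_POINT = 1e-4  # 0.01% written as a probability


def dollars_per_basis_point(loss, risk_without_loss, risk_with_loss, later_risk=0.0):
    """Price of one basis point of x-risk reduction, assuming x-risk rises
    linearly with the amount EA loses between the two data points.

    later_risk (an assumption) is the chance of an x-risk event strictly
    after the 100-year window, which losing money now is taken not to change.
    """
    marginal_risk = risk_with_loss - risk_without_loss
    if marginal_risk <= 0:
        raise ValueError("losing money should increase x-risk")
    # Linear interpolation: `loss` dollars correspond to `marginal_risk`,
    # so one basis point costs loss * BASIS_POINT / marginal_risk.
    price = loss * BASIS_POINT / marginal_risk
    # A near-term basis point is only worth (1 - later_risk) of a "real"
    # basis point, so a real one costs proportionally more.
    return price / (1.0 - later_risk)


price = dollars_per_basis_point(2e9, 0.10, 0.1013)
print(f"{price / 1e6:.0f}M$ per basis point")
```

With the answer's numbers (10% at no loss, 10.13% at a 2B$ loss) this prints `154M$ per basis point`, matching the figure above.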

Zach Stein-Perlman @ 2021-11-28T04:00 (+5)

I'd guess that quite-well-directed marginal funding can buy a basis point for something like $50M (for example, I’d expect to be able to buy a basis point by putting that money toward a combination of alignment research, AI governance research, and meta-stuff like movement-building around AI). Accounting for not all longtermist funding being so well-directed gives something like $100M of longtermism funding per basis point, or substantially more if we're talking about all-of-EA funding (insofar as non-longtermism funding buys quite little X-risk reduction).

But on reflection, I think that's too high. I arrived at $50M by asking myself "what would I feel pretty comfortable saying could buy a basis point." Considering a reversal test, I would absolutely not take the marginal $100M out of [OpenPhil's longtermism budget / longtermist organizations] to buy one basis point. Reframing as "what amount would I not feel great trading for a basis point in either direction," I instinctively go down to more like $25M of quite-well-directed funding or $50M of real-world longtermism funding. EA could afford to lose $50M of longtermism funding, but it would hurt. The Long-Term Future Fund has spent less than $6M in its history, for example. Unlike Linch, I would be quite sad about trading $100M for a single measly basis point — $100M (granted reasonably well) would make a bigger difference, I think.

I suspect others may initially come up with estimates too high due to similarly framing the question as "what would I feel pretty comfortable saying could buy a basis point," like I originally did. If your answer is $X, I encourage you to make sure that you would take away $X of longtermist funding from the world to buy a single basis point.

Linch @ 2021-11-28T04:32 (+5)

Although, one thing to flag is that a lot of the resources in LT organizations are human capital.

Linch @ 2021-11-28T04:07 (+2)

Thanks for your thoughts.

To be clear, I also share the intuition that I feel a lot better about taking $s from Open Phil's coffers than I do taking money from existing LT organizations, which probably is indicative of something.

Khorton @ 2021-11-28T00:37 (+5)

Point of clarification: Is this like the world had a 3% chance of ending and now the world has a 2.99% chance of ending? For what length of time? Are we assuming perfect knowledge?

Linch @ 2021-11-28T00:41 (+2)

Is this like the world had a 3% chance of ending and now the world has a 2.99% chance of ending?

Yes, though I would count existential but non-extinction risks (e.g., permanent dictatorships) as well.

For what length of time?

Hmm let's say we're looking at existential risks in the next 100 years to make it comparable to Ord's book.

Are we assuming perfect knowledge?

I would prefer "at the quality of models we currently or in the near future will have access to", but we can assume perfect knowledge if that makes things conceptually easier or if you believe in intrinsic discounting for uncertainty.

Simon Skade @ 2021-12-02T21:48 (+1)

I agree that it makes much more sense to estimate x-risk on a timescale of 100 years (as I said in the sidenote of my answer), but I think you should specify that in the question, because "How many EA 2021 $s would you trade off against a 0.01% chance of existential catastrophe?" together with your definition of x-risk, implies taking the whole future of humanity into account.

I think it may make sense to explicitly only talk about the risk of existential catastrophe in this or in the next couple of centuries.

Linch @ 2021-12-02T23:29 (+2)

Lots of people disagree about how to word this question. I feel like I should pass on editing the question even further, especially given that I don't think it's likely to change people's answers too much.

Zach Stein-Perlman @ 2021-11-28T04:48 (+1)

let's say we're looking at existential risks in the next 100 years

This works out fine for me, since for empirical reasons, I place overwhelming probability on the disjunction of "existential catastrophe by 2121" and "long-term future looks very likely to be excellent in 2121." But insofar as we're using existential-risk-reduction as a proxy for good-accomplished, I think that 1 basis point should be worth something more like 1/10,000 of the transition from a catastrophic future to a near-optimal future. Those are units that we should fundamentally care about maximizing; we don't fundamentally care about maximizing no-catastrophe-before-2121. (And then we can make comparisons to interventions that don't affect X-risk by saying what fraction of a transition from catastrophe to excellent they are worth. I guess I'm saying we should think in terms of something equivalent to utilons, where utilons are whatever we ought to increase, since optimizing for anything not equivalent to them is by definition optimizing imprecisely.)

Linch @ 2021-11-28T05:24 (+2)

But insofar as we're using existential-risk-reduction as a proxy for good-accomplished, I think that 1 basis point should be worth something more like 1/10,000 of the transition from a catastrophic future to a near-optimal future.

I agree, I think this is what existential risk means by definition. But I appreciate the clarification regardless!

Charles He @ 2021-11-28T05:41 (+9)

As I think you agree, the optimal future can be extremely good and exotic.

It seems that in your reply to Zach, you are saying that x risk reduction is moving us to this future by definition. However, I can't immediately find this definition in the link you provided.

(There might be some fuzzy thinking below, I am just typing quickly.)

Removing the risk of AI or nanobots, and just keeping humans "in the game" in 2121, just as we are in 2021, is valuable, but I don't think this is the same as moving us to the awesome future.

I think moving us 1/10,000 to the awesome future could be a really strong statement.

Zach Stein-Perlman @ 2021-11-28T05:45 (+7)

Well put. I most often find it useful to think in terms of awesome future vs the alternative, but this isn’t the default definition of existential risk, and certainly not of existential-catastrophe-by-2121.

Zach Stein-Perlman @ 2021-11-28T05:30 (+1)

For empirical reasons, I agree because value is roughly binary. But by definition, existential risk reduction could involve moving from catastrophic futures to futures in which we realize a significant fraction of our potential but the future is not near-optimal.

Linch @ 2021-11-28T05:49 (+4)

For clarity's sake, the definition I was using is as follows:

Existential risk – One where an adverse outcome would either annihilate Earth-originating intelligent life or permanently and drastically curtail its potential.

Perhaps the issue here is the definition of "drastic." Ironically I too have complained about the imprecision of this definition before. If I were the czar-in-charge-of-renaming-things-in-EA, I'd probably define an existential risk as:

Existential risk – One where an adverse outcome would either annihilate Earth-originating intelligent life or permanently curtail its potential to <90% of what an optimal future would look like.

Of course, I'm not the czar-in-charge-of-renaming-things-in-EA, so here we are.

EDIT: I am the czar-in-charge-of-renaming-things-in-my-own EA Forum question, so I'll rephrase.

WilliamKiely @ 2021-11-28T09:22 (+4)

I think one problem with your re-definition (that makes it imperfect IMO also) is apparent when thinking about the following questions: How likely is it that Earth-originating intelligent life eventually reaches >90% of its potential? How likely is it that it eventually reaches >0.1% of its potential? >0.0001% of its potential? >10^20 times the total value of the conscious experiences of all humans with net-positive lives during the year 2020? My answers to these questions increase with each respective question, and my answer to the last question is several times higher than my answer to the first question.

Our cosmic potential is potentially extremely large, and there are many possible "very long-lasting, positive futures” (to use Holden's language from here) that seem "extremely good" from our limited perspective today (e.g. the futures we imagine when we read Bostrom's Letter from Utopia). But these futures potentially differ in value tremendously.

Okay, I just saw Zach's comment that he thinks value is roughly binary. I currently don't think I agree with him (see his first paragraph at that link, and my reply clarifying my view). Maybe my view is unusual?

Linch here:

I'd be interested in seeing operationalizations at some subset of {1%, 10%, 50%, 90%, 99%}.* I can imagine that most safety researchers will give nearly identical answers to all of them, but I can also imagine large divergences, so there's decent value of information here.

I'd give similar answers for all 5 of those questions because I think most of the "existential catastrophes" (defined vaguely) involve wiping out >>99% of our potential (e.g. extinction this century before value/time increases substantially). But my independent impression is that there are a lot of "extremely good" outcomes in which we have a very long-lasting, positive future with value/year much, much greater than the value per year on Earth today, that also falls >99% short of our potential (and even >99.9999% short of our potential).

Khorton @ 2021-11-28T14:50 (+9)

It's also possible that different people have different views of what "humanity's potential" really means!

WilliamKiely @ 2021-11-28T18:40 (+4)

Great point. Ideally "existential risk" should be an entirely empirical thing that we can talk about independent of our values / moral beliefs about what future is optimal.

Linch @ 2021-11-29T22:32 (+4)

This is impossible if you consider "unrecoverable dystopia", "stable totalitarianism" etc as existential risks, as these things are implicitly values judgments.

Though I'm open to the idea that we should maybe talk about extinction risks instead of existential risks, given that this is empirically most of what xrisk people work on.

(Though I think some AI risk people think, as an empirical matter, that some AI catastrophes would entail humanity surviving while completely losing control of the lightcone, and both they and I would consider this basically as bad as all of our descendants dying).

Lukas_Finnveden @ 2021-11-28T18:47 (+1)

Currently, the post says:

A risk of catastrophe where an adverse outcome would permanently cause Earth-originating intelligent life's astronomical value to be <50% of what it would otherwise be capable of.

I'm not a fan of this definition, because I find it very plausible that the expected value of the future is less than 50% of what humanity is capable of. This, e.g., raises the question: does even extinction fulfil the description? Maybe you could argue "yes", but the mix of causing an actual outcome compared with what intelligent life is "capable of" makes all of this unnecessarily dependent on both definitions and empirics about the future.

For purposes of the original question, I don't think we need to deal with all the complexity around "curtailing potential". You can just ask: How much should a funder be willing to pay to remove a 0.01% risk of extinction that's independent from all other extinction risks we're facing? (E.g., a ginormous asteroid is on its way to Earth and has a 0.01% probability of hitting us, causing guaranteed extinction. No one else will notice this in time. Do we pay $X to redirect it?)

This seems closely analogous to questions that funders are facing (are we keen to pay to slightly reduce one contemporary extinction risk?). For non-extinction x-risk reduction, this extinction-estimate will be informative as a comparison point, and it seems completely appropriate that you should also check "how bad is this purported x-risk compared to extinction" as a separate exercise.

Linch @ 2021-11-28T21:46 (+2)

How do people feel about a proposed new definition:

xrisk = risk of human extinction in the next 100 years + risk of other outcomes at least 50% as bad as human extinction in the next 100 years?

Lukas_Finnveden @ 2021-11-28T22:47 (+2)

Seems better than the previous one, though imo still worse than my suggestion, for 3 reasons:

- it's more complex than asking about immediate extinction. (Why exactly a 100-year cutoff? Why 50%?)

- since the definition explicitly allows for different x-risks to be differently bad, the amount you'd pay to reduce them would vary depending on the x-risk. So the question is underspecified.

- The independence assumption is better if funders often face opportunities to reduce a Y%-risk that's roughly independent from most other x-risk this century. Your suggestion is better if funders often face opportunities to reduce Y percentage points of all x-risk this century (e.g. if all risks are completely disjunctive, s.t. if you remove a risk, you're guaranteed to not be hit by any other risk).

  - For your two examples, the risks from asteroids and climate change are mostly independent from the majority of x-risk this century, so there the independence assumption is better.

  - The disjunctive assumption can happen if we e.g. study different mutually exclusive cases, e.g. reducing risk from worlds with fast AI take-off vs reducing risk from worlds with slow AI take-off.

  - I weakly think that the former is more common.

  - (Note that the difference only matters if total x-risk this century is large.)

Edit: This is all about what version of this question is the best version, independent of inertia. If you're attached to percentage points because you don't want to change to an independence assumption after there's already been some discussion on the post, then your latest suggestion seems good enough. (Though I think most people have been assuming a low total amount of x-risk, so independence or not probably doesn't matter that much for the existing discussion.)
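A minimal sketch of the independent-versus-disjunctive distinction in the bullets above, assuming just two risks: a target risk you can remove, and all other x-risk this century lumped together. The function names and numbers are hypothetical.

```python
def gain_if_independent(target, other):
    """Survival gain from removing `target` when it is independent of `other`.

    Survival is (1 - target) * (1 - other); removing target leaves (1 - other).
    """
    return (1 - other) - (1 - target) * (1 - other)


def gain_if_disjoint(target, other):
    """Survival gain from removing `target` when the risks are mutually
    exclusive (the worlds hit by target are never hit by other).

    Survival is 1 - target - other; removing target leaves 1 - other.
    """
    return (1 - other) - (1 - target - other)


for other in (0.02, 0.5):
    print(other, gain_if_independent(0.001, other), gain_if_disjoint(0.001, other))
```

For a 0.1% target risk, the two assumptions give nearly the same gain when the rest of x-risk is 2%, and differ by a factor of two when it is 50%, which is why the difference only matters when total x-risk is large.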

NunoSempere @ 2021-11-28T18:11 (+4)

Nitpick: Assuming that for every positive state there is an equally negative state is not enough to think that the maximally bad state is only -100% of the expected value of the future; it could be much worse than that.

Zach Stein-Perlman @ 2021-11-28T04:00 (+4)

We have to be a little careful when the probability of existential catastrophe is not near zero, and especially when it is near one. For example, if the probability that unaligned AI will kill us (assuming no other existential catastrophe occurs first) is 90%, then preventing an independent 0.1% probability of climate catastrophe isn't worth 10 basis points — it's worth 1. The simulation argument may raise a similar issue.

I think we can get around this issue by reframing as "change in survival probability from baseline." Then reducing climate risk from 0.1% to 0 is about a 0.1% improvement, and reducing AI risk from 90% to 89% is about a 10% improvement, no matter what other risks there are or how likely we are to survive overall (assuming the risks are independent).
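The two framings can be compared in a few lines of Python, assuming the risks are independent as in the comment. `survival` and `change` are illustrative names; the 90% and 0.1% figures are the comment's hypothetical ones.

```python
from math import prod


def survival(risks):
    """P(no existential catastrophe), treating the risks as independent."""
    return prod(1 - r for r in risks)


def change(risks, name, new_value):
    """Return (absolute, relative) change in survival probability when one
    named risk is set to new_value, holding the others fixed."""
    after = {**risks, name: new_value}
    before_p = survival(risks.values())
    after_p = survival(after.values())
    return after_p - before_p, after_p / before_p - 1


risks = {"ai": 0.90, "climate": 0.001}  # hypothetical figures from the comment
print(change(risks, "climate", 0.0))
print(change(risks, "ai", 0.89))
```

Removing the climate risk adds about 1 basis point of absolute survival probability but about 0.1% relative survival, while cutting AI risk from 90% to 89% adds about 10% relative survival. Adding another independent risk multiplies survival by the same factor before and after, so the relative figures would not change.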

Linch @ 2021-11-28T04:09 (+2)

This is a good point. I was implicitly using Ord's numbers, which assume something like 17% xrisk, but my (very fragile) inside view is higher.

The simulation argument may raise a similar issue

Can you elaborate?

Zach Stein-Perlman @ 2021-11-28T04:20 (+1)

Suppose we believe there is 90% probability that we are living in a simulation, and the simulation will be shut down at some point (regardless of what we do), and this is independent of all other X-risks. Then a basis point suddenly costs ten times what it did before we considered simulation, since nine out of ten basis points go toward preventing other X-risks in worlds in which we'll be shut down anyway. This is correct in some sense but potentially misleading in some ways — and I think we don't need to worry about it at all if we reframe as "change in survival probability," since any intervention has the same increase in survival probability in the 90%-simulation world as increase in survival probability in the 0%-simulation world.

Linch @ 2021-11-28T04:28 (+2)

Hmm, maybe I'm missing something, but I feel like unless you're comparing classical longtermist interventions with simulation escape interventions, or the margins are thin enough that longtermist interventions are within a direct order of magnitude to the effectiveness of neartermist interventions (under a longtermist axiology), you should act as if we aren't in a simulation?

I'm also a bit confused about whether probability "we" live in a simulation is more a claim about the material world or a claim about anthropics, never fully resolved this philosophical detail to my satisfaction.

Zach Stein-Perlman @ 2021-12-01T04:00 (+1)

I totally agree that under reasonable assumptions we should act as if we aren't in a simulation. I just meant that random weird stuff [like maybe the simulation argument] can mess with our no-catastrophe probability. But regardless of what the simulation risk is, decreasing climate risk by 0.1% increases our no-catastrophe probability by about 0.1%. Insofar as it's undesirable that one's answer to your question depends on one's views on simulation and other stuff we should practically disregard, we should really be asking a question that's robust to such stuff, like cost-of-increasing-no-catastrophe-probability-relative-to-the-status-quo-baseline rather than all-things-considered-basis-points. Put another way, imagine that by default an evil demon destroys civilizations with 99% probability 100 years after they discover fission. Then increasing our survival probability by a basis point is worth much more than in the no-demon world, even though we should act equivalently. So your question is not directly decision-relevant.

Not sure what your "a claim about the material world or a claim about anthropics" distinction means; my instinct is that eg "we are not simulated" is an empirical proposition and the reasons we have to assign a certain probability to that proposition are related to anthropics.

Linch @ 2021-12-01T04:20 (+2)

Not sure what your "a claim about the material world or a claim about anthropics" distinction means; my instinct is that eg "we are not simulated" is an empirical proposition and the reasons we have to assign a certain probability to that proposition are related to anthropics.

If I know with P~=1 certainty that there are 1000 observers-like-me and 999 of them are in a simulation (or Boltzmann brains, etc), then there's at least two reasonable interpretations of probability.

- The algorithm that instantiates me has at least one representation with ~100% certainty outside the simulation, therefore the "I" that matters is not in a simulation, P~=1.

- Materially, for the vast majority of observers like me, they are in a simulation. P~=0.1% that I happen to be the instance that's outside the simulation. P~=0.001

Put another way, the philosophical question here is which empirical operationalization of P(we're in a simulation) is the relevant one: "there exists a copy of me outside of a simulation" or "what fraction of the copies of me that exist are in a simulation".

Simon Skade @ 2021-12-02T21:57 (+3)

I think most of the variance of estimates may come from the high variance in estimations of how big x-risk is. (Ok, a lot of the variance here comes from different people using different methods to estimate the answer to the question, but assuming people all would use one method, I expect a lot of variance coming from this.)

Some people may say there is a 50% probability of x-risk this century, and some may say 2%, which causes the amount of money they would be willing to spend to be quite different.

But because in both cases x-risk reduction is still (by far) the most effective thing you can do, it may make sense to ask how much you would pay for a 0.01% reduction of the current x-risk. (i.e. from 3% to 2.9997% x-risk.)

I think this would cause more agreement, because it is probably easier to estimate, for example, how much some grant decreases AI risk with respect to the overall AI risk than to estimate how high the overall AI risk is.

That way, however, the question might be slightly more confusing, and I do think we should also make progress on better estimating the overall probability of an existential catastrophe occurring in the next couple of centuries, but I think the question I suggest might still be the better way to estimate what we want to know.

Michael_Wiebe @ 2021-11-29T04:35 (+3)

Suppose there are \(N\) people and a baseline existential risk \(r\). There's an intervention that reduces risk by a fraction \(\delta\) of the baseline (i.e., in percent, not percentage points).

Outcome with no intervention: \(rN\) people die.

Outcome with intervention: \((1-\delta)rN\) people die.

Difference between outcomes: \(\delta r N\). So we should be willing to pay up to \(\delta r N v\) for the intervention, where \(v\) is the dollar value of lives.

[Extension: account for time periods, with discounting for exogenous risks.]

I think this approach makes more sense than starting by assigning $X to 0.01% risk reductions, and then looking at the cost of available interventions.
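A direct transcription of the formula above into Python, as a sketch: it counts only people alive today, ignores the time-period and discounting extension, and the inputs in the example are placeholders rather than anyone's estimates.

```python
def max_willingness_to_pay(n_people, baseline_risk, relative_reduction, value_per_life):
    """Upper bound on spending for an intervention that cuts the baseline
    existential risk by `relative_reduction` (a fraction of the baseline,
    not percentage points). Only people alive today are counted."""
    deaths_without = baseline_risk * n_people
    deaths_with = (1 - relative_reduction) * baseline_risk * n_people
    return (deaths_without - deaths_with) * value_per_life


# Placeholder inputs, not estimates from this thread:
# 8 billion people, 17% baseline risk, 1% relative reduction, $1M per life.
print(f"${max_willingness_to_pay(8e9, 0.17, 0.01, 1e6):,.0f}")
```

The result is simply \(\delta r N v\); the intermediate death counts are kept only to mirror the two outcomes in the comment.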

Linch @ 2021-11-29T22:18 (+3)

dollar value of lives

What does that even mean in this context? cost =/= value, and empirically longtermist EA has nowhere near the amounts of $s as would be implied by this model.

Michael_Wiebe @ 2021-11-30T18:32 (+3)

@Linch, I'm curious if you've taken an intermediate microeconomics course. The idea of maximizing utility subject to a budget constraint (ie. constrained maximization) is the core idea, and is literally what EAs are doing. I've been thinking for a while now about writing up the basic idea of constrained maximization, and showing how it applies to EAs. Do you think that would be worthwhile?

Linch @ 2021-11-30T23:52 (+3)

I did in sophomore year of college (Varian's book I think?), but it was ~10 years ago so I don't remember much. 😅😅😅. A primer may be helpful, sure.

(That said, I think it would be more worthwhile if you used your model to produce an answer to my question, and then illustrate some implications).

robirahman @ 2021-11-30T18:51 (+3)

I'd love to read such a post.

Michael_Wiebe @ 2021-11-30T00:15 (+1)

Given an intervention of value \(V\), you should be willing to pay for it if the cost \(C\) satisfies \(C \le V\) (with indifference at equality).

If your budget constraint is binding, then you allocate it across causes so as to maximize utility.
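One way to make "allocate it across causes so as to maximize utility" concrete is a greedy allocation: keep giving the next dollar to whichever cause has the highest marginal utility. This is a sketch under a hypothetical assumption that each cause's utility is `scale * log(1 + dollars)`; with concave, separable utilities like these, the greedy rule is optimal up to the step size. Cause names and scales are made up.

```python
import math


def log_utility_gain(scale, spent, step):
    """Extra utility from `step` more dollars on a cause whose (hypothetical)
    utility is scale * log(1 + dollars), i.e. diminishing returns."""
    return scale * (math.log1p(spent + step) - math.log1p(spent))


def allocate(budget, scales, step=1.0):
    """Greedy constrained maximization: repeatedly give the next `step`
    dollars to the cause with the highest marginal utility until the
    budget is used up."""
    spent = {cause: 0.0 for cause in scales}
    remaining = budget
    while remaining >= step:
        best = max(scales, key=lambda c: log_utility_gain(scales[c], spent[c], step))
        spent[best] += step
        remaining -= step
    return spent


print(allocate(100.0, {"cause_a": 2.0, "cause_b": 1.0}))
```

Here it returns `{'cause_a': 67.0, 'cause_b': 33.0}`, the point where the marginal utilities 2/(1 + 67) and 1/(1 + 33) are equal.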

NunoSempere @ 2021-11-28T18:08 (+3)

I'm curious about potential methodological approaches to answering this question:

- Arrive at a possible lower bound for the value of averting x-risk by thinking about how much one is willing to pay to save present people, like in Khorton's answer.

- Arrive at a possible lower bound by thinking about how much one is willing to pay for current and discounted future people

- Thinking about what EA is currently paying for similar risk reductions, and arguing that one should be willing to pay at least as much for future risk-reduction opportunities

  - I'm unsure about this, but I think this is most of what's going on with Linch's intuitions.

Overall, I agree that this question is important, but current approaches don't really convince me.

My intuition about what would convince me would be some really hardcore and robust modeling coming out of e.g., GPI taking into account both increased resources over time and increased risk. Right now the closest published thing that exists might be Existential risk and growth and Existential Risk and Exogenous Growth—but this is inadequate for our purposes because it considers stuff at the global rather than at the movement level—and the closest unpublished thing that exists are some models I've heard about that I hope will get published soon.

Mathieu Putz @ 2022-01-06T21:05 (+2)

From the perspective of a grant-maker, thinking about reduction in absolute basis points makes sense of course, but for comparing numbers between people, relative risk reduction might be more useful?

E.g. if one person thinks AI risk is 50% and another thinks it's 10%, it seems to me the most natural way for them to speak about funding opportunities is to say it reduces total AI risk by X% relatively speaking.

Talking about absolute risk reduction compresses these two numbers into one, which is more compact, but makes it harder to see where disagreements come from.

It's a minor point, but with estimates of total existential risk sometimes more than an order of magnitude apart from each other, it actually gets quite important I think.

Also, given astronomical waste arguments etc., I'd expect most longtermists would not switch away from longtermism once absolute risk reduction gets an order of magnitude smaller per dollar.

Edited to add: Having said that, I wanna add that I'm really glad this question was asked! I agree that it's in some sense the key metric to aim for and it makes sense to discuss it!
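The relative-versus-absolute point can be sketched as a one-line conversion. The 50% and 10% baselines are the hypothetical ones from the comment; the 1% relative cut is an illustrative value.

```python
def absolute_reduction(baseline_risk, relative_reduction):
    """Convert a relative cut in a risk into an absolute (percentage-point) cut.
    Arguments and result are fractions, e.g. 0.5 for 50%."""
    return baseline_risk * relative_reduction


BASIS_POINT = 1e-4

# The same grant, judged to cut AI risk by 1% relative, by two people
# who disagree about how large AI risk is:
for baseline in (0.5, 0.1):
    bps = absolute_reduction(baseline, 0.01) / BASIS_POINT
    print(f"baseline {baseline:.0%}: {bps:.0f} basis points")
```

The same grant shows up as 50 basis points for one person and 10 for the other. The conversion also reproduces Simon Skade's example above: a 0.01% relative cut takes a 3% risk to 0.03 - absolute_reduction(0.03, 1e-4) = 0.029997, i.e. 2.9997%.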

Michael_Wiebe @ 2021-11-29T03:59 (+1)

If asteroid risk is 1/1,000,000, how are you thinking about a 0.01% reduction? Do you mean 0.01pp = 1/10,000, in which case you're reducing asteroid risk to 0? Or reducing it by 0.01% of the given risk, in which case the amount of reduction varies across risk categories?

The definition of basis point seems to indicate the former.

Linch @ 2021-11-29T05:20 (+2)

If asteroid risk is 1/1,000,000, how are you thinking about a 0.01% reduction?

a 0.01% total xrisk reduction will be 0.01%/(1/1,000,000) = 100x the reduction of asteroid risks.

Michael_Wiebe @ 2021-11-29T07:02 (+1)

Sorry, I just mean: are you dealing in percentage points or percentages?

Linch @ 2021-12-03T18:01 (+2)

Percentage points.