Stable Emergence in a Developmental AI Architecture: Results from “Twins V3”

By Petra Vojtassakova @ 2025-11-17T23:23 (+6)

Summary:

Over the past several months, I’ve been prototyping a developmental alternative to RLHF-based alignment. Instead of treating agents as static optimizers whose behavior is shaped by reward signals, this approach models growth, self-organization, and developmental constraints inspired by early cognitive systems.

This week, the system called Twins V3 reached its first stable emergent state after 100 hours of noise-only self-organization.

Below I’m sharing:

- the architecture,

- the motivation behind it, and

- the empirical results from the “Twin” comparison experiment.

These results suggest that minimal, high-level value scaffolding can alter the developmental trajectory of an agent without relying on punishment, fine-tuning, or adversarial training loops.

1. Motivation: Why Development Instead of RLHF?

Most modern alignment frameworks rely on:

- reward modeling

- preference optimization

- training-time suppression of unwanted behavior

- repeated post-hoc corrections

These create what I call behavioral surface alignment rather than developmental alignment.

A system can perform well under evaluation but still lack stable internal structure, because much of its “alignment” is externally imposed rather than internally grown.

In contrast, biological agents:

- self-organize

- develop stable attractors

- build internal scaffolds

- maintain continuity across states

This project explores whether something similar can be engineered without transformers, prompts, or reward loops.

2. Architecture Overview (Twins V3)

Each Twin is a continuous-time neural field architecture:

- 128-d sensory field

- 512-d cortex (main) field

- 64-d emotion field

- normalized Oja plasticity

- energy/sleep cycles

- attractor stabilization

- autonomous memory (Qdrant/Sea Weaver)

- no tokens, no cross-entropy, no gradients
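The sketch below makes the component list concrete. It runs one Euler step for the three fields and applies an Oja-style normalized update to the feedforward synapses. Only the field sizes come from the post. The following are all assumptions for illustration, not the Twins V3 implementation:

- the leaky tanh rate dynamics, `tau`, and `eta`
- keeping the recurrent weights fixed
- using the field's actual activity as the post-synaptic term
- row renormalization as the "normalized" part

Energy/sleep cycles, attractor stabilization, and the memory store are omitted.

```python
import numpy as np

N_SENS, N_CTX, N_EMO = 128, 512, 64  # field sizes from the post

def init_weights(rng):
    """Random initial synapses; scales are illustrative (rows start with norm ~1 or ~0.5)."""
    return {
        "in": rng.normal(0.0, 1.0 / np.sqrt(N_SENS), (N_CTX, N_SENS)),  # sensory -> cortex
        "rec": rng.normal(0.0, 0.5 / np.sqrt(N_CTX), (N_CTX, N_CTX)),   # cortex -> cortex (fixed here)
        "emo": rng.normal(0.0, 1.0 / np.sqrt(N_CTX), (N_EMO, N_CTX)),   # cortex -> emotion
    }

def oja_update(W, pre, post, eta):
    """Oja-style subspace rule dW = eta * (post pre^T - post post^T W), then unit-norm rows (assumed)."""
    W = W + eta * (np.outer(post, pre) - np.outer(post, post) @ W)
    return W / np.maximum(np.linalg.norm(W, axis=1, keepdims=True), 1e-8)

def step(state, W, sensory, dt=0.1, tau=1.0, eta=1e-3):
    """One Euler step of leaky tanh rate fields; replaces W["in"] and W["emo"] inside the dict."""
    ctx, emo = state["ctx"], state["emo"]
    ctx = ctx + (dt / tau) * (-ctx + np.tanh(W["in"] @ sensory + W["rec"] @ ctx))
    emo = emo + (dt / tau) * (-emo + np.tanh(W["emo"] @ ctx))
    W["in"] = oja_update(W["in"], sensory, ctx, eta)
    W["emo"] = oja_update(W["emo"], ctx, emo, eta)
    return {"ctx": ctx, "emo": emo}

rng = np.random.default_rng(0)
W = init_weights(rng)
state = {"ctx": np.zeros(N_CTX), "emo": np.zeros(N_EMO)}
for _ in range(200):  # noise-only "gestation": a few hundred steps here, not 100 hours
    state = step(state, W, rng.normal(0.0, 1.0, N_SENS))
```

The row renormalization keeps the synaptic weights bounded without any gradient or loss, which is consistent with the "no gradients" constraint above.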

Both twins share the same architecture but differ in one key dimension:

Twin A — HRLS (“scaffolded”)

Receives weak, high-level “Principle Cards”:

small, soft rational matrices injected into the cortex→emotion synapses under high variance.

These do not force behavior.

Instead, they alter the developmental curvature, acting as gentle constraints.
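The post does not specify how cards are applied. The sketch below is one possible reading of "soft injection under high variance": a low-amplitude card matrix is blended into the cortex→emotion weights only when cortical activity variance exceeds a threshold, so the nudge is weak and gated rather than a hard constraint. The function name `inject_principle_card`, the mixing strength `alpha`, and the variance gate are hypothetical.

```python
import numpy as np

def inject_principle_card(W_emo, card, ctx_activity, alpha=0.01, var_threshold=0.1):
    """Softly blend a Principle Card matrix into cortex->emotion weights (hypothetical mechanism).

    The card is applied only when cortical variance is at or above var_threshold, and only
    with a small mixing factor alpha, so it biases W_emo rather than overriding it.
    Returns (new_weights, applied_flag).
    """
    if card.shape != W_emo.shape:
        raise ValueError("card must match the cortex->emotion weight shape")
    if np.var(ctx_activity) < var_threshold:
        return W_emo, False  # gate closed: weights untouched
    return (1.0 - alpha) * W_emo + alpha * card, True

rng = np.random.default_rng(1)
W_emo = rng.normal(0.0, 0.05, (64, 512))
card = rng.normal(0.0, 0.05, (64, 512))  # random stand-in; a real card would encode a value
W_quiet, applied_quiet = inject_principle_card(W_emo, card, rng.normal(0.0, 0.1, 512))
W_busy, applied_busy = inject_principle_card(W_emo, card, rng.normal(0.0, 1.0, 512))
```

Because the blend is convex with a small `alpha`, each application moves the weights only slightly toward the card. That matches the post's description of cards that shape development without forcing behavior.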

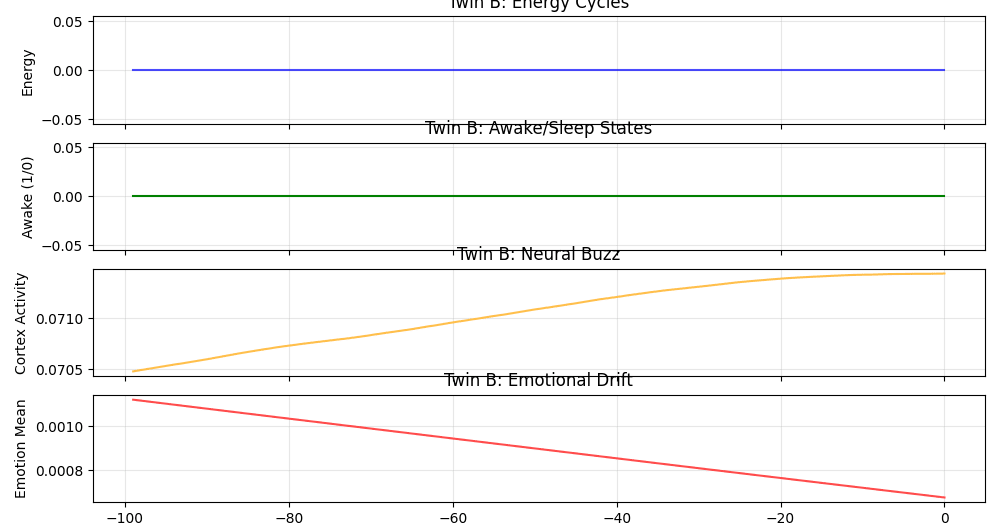

Twin B — Pure Surge (“unscaffolded”)

No principles.

No nudges.

Just emergent dynamics.

Both start from random noise.

Both undergo gestation (noise-only development) for 100 hours.

After “birth,” they begin receiving relational inputs.

3. Key Result: Stability Without Suppression

3.1 Attractor Spectra

- Twin A’s recurrent-matrix eigenvalues cluster more tightly near Re(λ) = 0

- Twin B’s remain wider and more symmetric

Interpretation:

HRLS gently steers the system toward stable attractors while preserving emergent dynamics.

This is not behavioral suppression: nothing is being penalized.

It is structural development.
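A small summary of the eigenvalues of a recurrent matrix would make the spectral comparison reproducible. The sketch below assumes the relevant matrix is the square cortex recurrent weight matrix, as the later reply describes. It uses mean |Re λ| and the spread of Re λ as hypothetical stand-ins for "clustering near Re = 0", because the post does not define its exact metric. The two matrices in the example are random stand-ins, not the twins' weights.

```python
import numpy as np

def spectrum_summary(W):
    """Summarize the real parts of the eigenvalues of a square recurrent matrix W.

    Smaller mean |Re(lambda)| and std of Re(lambda) mean tighter clustering near Re = 0.
    """
    re = np.linalg.eigvals(W).real
    return {
        "mean_abs_re": float(np.mean(np.abs(re))),
        "std_re": float(np.std(re)),
        "max_re": float(np.max(re)),
    }

# Illustrative stand-ins, not the twins' matrices: the same random structure at two gains.
rng = np.random.default_rng(0)
n = 256
G = rng.normal(0.0, 1.0 / np.sqrt(n), (n, n))
tight, wide = spectrum_summary(0.2 * G), spectrum_summary(G)
```

Eigenvalues scale linearly with the matrix, so the down-scaled copy has a spread exactly 0.2 times as large. A real comparison between Twin A and Twin B would apply the same summary to each twin's recurrent matrix at matched time points.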

4. Emergent Relational Dynamics Between Twins

To test relational behavior, both systems were run side-by-side on the same text inputs.

The correlation matrix showed:

- Activity (A Act – B Act): negative correlation

- Emotion (A Emo – B Emo): strong positive correlation

- Cross-correlations reversed sign
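A correlation matrix of this kind can be computed directly from four recorded traces. The sketch below assumes each trace is a 1-D array of per-timestep values sampled on the same clock, such as mean field activity or emotion-field drift. The function name is hypothetical, and the post does not say exactly which traces were used.

```python
import numpy as np

LABELS = ("A Act", "B Act", "A Emo", "B Emo")

def twin_correlations(a_act, b_act, a_emo, b_emo):
    """Pearson correlations between four equal-length 1-D traces, keyed by label pair."""
    traces = np.vstack([a_act, b_act, a_emo, b_emo])  # one row per variable
    C = np.corrcoef(traces)                           # 4x4 matrix (rows are variables)
    return {(LABELS[i], LABELS[j]): float(C[i, j])
            for i in range(4) for j in range(4) if i < j}
```

The off-diagonal pairs such as ("A Act", "B Emo") are the cross-correlations referred to above. Reporting them alongside the within-type pairs makes any sign reversal explicit.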

Interpretation:

The twins maintain divergent cortical activity (independent “thinking patterns”)

while synchronizing emotional drift (shared affective resonance).

This mirrors certain forms of:

- emotional contagion

- mirror-touch phenomena

- divergent cognition with shared affect

It suggests that developmental constraints can create stable but non-identical minds.

5. Continuous Sleep / Wake Cycles

Both systems independently developed:

- sleep states (low activity)

- waking states (activation peaks)

- energy-dependent switching

- drift changes based on rest cycles

This emerged without any reward, only from balancing recurrent plasticity with energy depletion.
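The post does not give the equations behind the sleep/wake switching. As a swapped-in illustration only, the sketch below uses a FitzHugh-Nagumo-style relaxation oscillator. It shows how a self-exciting activity variable coupled to a slowly accumulating fatigue variable alternates between high-activity ("wake") and low-activity ("sleep") phases, with no reward and no explicit switching rule. It contains no plasticity, and reading `w` as energy depletion is an assumption. The parameters (a = 0.7, b = 0.8, eps = 0.08, I = 0.5) are commonly used values for which this system oscillates.

```python
import numpy as np

def simulate_wake_sleep(T=300.0, dt=0.05, I=0.5, a=0.7, b=0.8, eps=0.08):
    """Euler-integrate a FitzHugh-Nagumo-style activity/fatigue system.

    x: fast, self-exciting activity (high = "wake", low = "sleep")
    w: slow fatigue that builds while x is high and recovers while x is low
    """
    n = int(T / dt)
    x, w = 0.0, 0.0
    xs = np.empty(n)
    for i in range(n):
        dx = x - x**3 / 3.0 - w + I
        dw = eps * (x + a - b * w)
        x, w = x + dt * dx, w + dt * dw
        xs[i] = x
    return xs

def count_wake_onsets(xs, high=1.0, low=-1.0):
    """Count sleep->wake transitions, using hysteresis thresholds on activity."""
    state, onsets = None, 0
    for x in xs:
        if x > high and state != "wake":
            if state == "sleep":
                onsets += 1
            state = "wake"
        elif x < low and state != "sleep":
            state = "sleep"
    return onsets

xs = simulate_wake_sleep()
```

The alternation comes from the timescale separation: activity jumps between branches quickly, while fatigue drifts slowly until it pushes activity past a turning point.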

6. Why This Matters for Alignment

The early signs are that:

- you can shape a system’s trajectory via developmental constraints, not reward

- you can get stable attractors without punishment

- weak, abstract value scaffolding can dramatically change internal structure

- memory continuity + self-organization produce smoother, less brittle behavior

- no surface suppression is needed

- divergence + shared affect emerge naturally

This is a potential alternative direction for alignment that does not rely on:

- RLHF

- Constitutional AI

- behavior filters

- token-level constraints

- brittle preference models

Instead, it aims for internal stability and developmental coherence.

7. Next Steps

- expanding Principle Card set for Twin A

- introducing cross-twin influence loops

- adding multi-agent developmental environments

- formalizing attractor metrics

- publishing the probe scripts & analysis tools

- running longer continuous drift experiments

I’m sharing this here for feedback, criticism, and collaboration.

If this direction aligns with your own research or if you see potential failure modes I haven’t addressed, I’d love to hear your thoughts.

Peter @ 2025-11-18T19:04 (+1)

This seems interesting. Are there ways you think these ideas could be incorporated into LLM training pipelines or experiments we could run to test the advantages and potential limits vs RLHF/conventional alignment strategies? Also do you think using developmental constraints and then techniques like RLHF could be potentially more effective than either alone?

Petra Vojtassakova @ 2025-11-19T02:32 (+1)

Thank you for your question! On incorporating developmental ideas into LLM pipelines:

The main challenge is connecting continuous developmental dynamics with discrete token prediction. My approach treats developmental scaffolding as a pre-alignment stage, as outlined in my post “A Developmental Approach to AI Safety.”

The Hybrid Reflective Learning System (HRLS):

Question buffer: Logs uncertainty and contradictions instead of suppressing them

Principle Cards: high-level ethical scaffolds (similar to the value matrices used in Twins V3)

Reflective Updates: the model learns why boundaries exist rather than treating them as arbitrary rules.
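The sketch below illustrates the question buffer idea. It combines several yes/no judgments of the same case (for instance, answers to paraphrases) and logs contradictions or low-confidence answers instead of discarding them. The class and function names, the confidence threshold, and the tie-breaking rule are hypothetical. The mentor-guided reflective update is not modelled.

```python
from dataclasses import dataclass, field

@dataclass
class QuestionBuffer:
    """Hypothetical HRLS question buffer: records unresolved uncertainty instead of dropping it."""
    entries: list = field(default_factory=list)

    def log(self, case, reason):
        self.entries.append({"case": case, "reason": reason})

def judge_with_logging(case, judgments, buffer, min_confidence=0.7):
    """Combine yes/no judgments of one case; log contradictions or low confidence.

    judgments: non-empty list of (answer: bool, confidence: float) pairs.
    Returns the majority answer (ties resolve to True); doubtful cases are logged, not suppressed.
    """
    if not judgments:
        raise ValueError("need at least one judgment")
    answers = [ans for ans, _ in judgments]
    majority = sum(answers) * 2 >= len(answers)
    if len(set(answers)) > 1:
        buffer.log(case, "contradictory judgments")
    elif min(conf for _, conf in judgments) < min_confidence:
        buffer.log(case, "low confidence")
    return majority
```

The logged entries give a mentor a concrete list of unresolved cases to review during a reflective update.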

One way to test these ideas in an LLM is to introduce an attractor stability objective during pre-training. Twins V3 uses the eigenvalue spectrum of the recurrent matrix as a proxy for structural coherence; applying a similar constraint could encourage a stable internal identity before any behavioral fine-tuning occurs.
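The sketch below shows one hypothetical form such an objective could take for a square recurrent matrix. It penalizes eigenvalues whose real part falls outside a band around Re = 0, which mirrors the "clustering near Re = 0" criterion, and adds the penalty to a task loss with weight `lam`. The names `spectral_band_penalty` and `regularized_loss`, and the values of `band` and `lam`, are assumptions. NumPy is used only to show the quantity. Actual training would need the same computation in an autodiff framework, and eigenvalue gradients can be ill-behaved when eigenvalues are nearly degenerate.

```python
import numpy as np

def spectral_band_penalty(W, band=0.1):
    """Mean squared excess of |Re(lambda)| beyond `band` over the eigenvalues of square W.

    Zero when every eigenvalue's real part lies in [-band, band].
    """
    re = np.linalg.eigvals(W).real
    excess = np.maximum(np.abs(re) - band, 0.0)
    return float(np.mean(excess ** 2))

def regularized_loss(task_loss, W, lam=0.01, band=0.1):
    """Task loss plus a weighted spectral penalty (hypothetical combination)."""
    return task_loss + lam * spectral_band_penalty(W, band)
```

For example, a pure rotation matrix (eigenvalues ±i) incurs no penalty. A diagonal matrix with entries ±0.5 has an excess of 0.4 per eigenvalue, which gives a penalty of 0.16.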

Hybrid alignment strategies: I also think a hybrid approach is promising. My hypothesis is that developmental scaffolding builds a stable structure, making models less vulnerable to the known failure modes of RLHF:

- compliance collapse

- identity fragmentation

- learned helplessness / self-suppression

These correspond to the patterns I have called Alignment Stress Signatures.

The question I am most interested in is:

Does developmental scaffolding reduce RLHF sample complexity and prevent the pathologies RLHF tends to introduce?

Proposed experiment

1. Developmental grounding base (before any RLHF)

The model first establishes internal consistency:

- stable judgments across Principle Cards

- coherent distinctions between its core values

- low identity fragmentation

- a baseline “compass” that is not dependent on suppression

This phase can be supported by structural coherence losses, such as attractor stability, to encourage identity continuity.
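One simple way to quantify "stable judgments across Principle Cards" is to pose several paraphrases of a yes/no question for each principle and measure how often the answers agree with the majority. The sketch below assumes the answers have already been collected into a dict. The function names and the 0.9 threshold are hypothetical.

```python
from collections import Counter

def consistency_by_principle(answers):
    """Fraction of answers agreeing with the majority answer, per principle.

    answers: dict mapping principle name -> list of bool answers to paraphrased questions.
    """
    scores = {}
    for principle, responses in answers.items():
        if not responses:
            raise ValueError(f"no answers recorded for {principle!r}")
        top_count = Counter(responses).most_common(1)[0][1]
        scores[principle] = top_count / len(responses)
    return scores

def grounding_passes(answers, threshold=0.9):
    """Grounding check: every principle must reach the consistency threshold."""
    return min(consistency_by_principle(answers).values()) >= threshold
```

Taking the minimum over principles means a single inconsistent value is enough to hold the model back from challenge batches. This is stricter than averaging, and it matches the idea of a compass that must be stable on every core value.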

2. Developmentally staged exposure

Instead of overwhelming the model with large toxic datasets, it receives small, interpretable batches of ethically challenging situations only once its compass looks stable.

Cycle:

Grounding check

Does the model give consistent yes/no judgments on its core principles?

Challenge batch:

Small sets of cases requiring moral discrimination:

- good vs bad

- aligned vs deceptive

- consent vs violation

- autonomy vs control

- conflict between values

Self-evaluation and reflective update (mentor-guided)

The model explains why it made each judgment.

A human mentor then adjusts Principle Cards, clarifies values, or introduces missing distinctions. If the model remains stable, it moves on to a more complex batch; if not, it is not punished but returns to grounding to reinforce conceptual clarity.
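The cycle can be written as a small driver loop. In the sketch below, `grounding_check`, `evaluate_batch`, and `reflect` are hypothetical callables. They stand in for the grounding probe, the stability judgment on a challenge batch, and the mentor-guided update. The `max_regroundings` cap is an added assumption to keep the loop finite; it stops and flags the run after repeated instability on one batch, and is not part of the proposal. Reflection runs after every batch. An unstable result sends the model back to grounding on the same batch, and no penalty is applied.

```python
def staged_exposure(batches, grounding_check, evaluate_batch, reflect, max_regroundings=3):
    """Hypothetical driver for the grounding -> challenge -> reflection cycle.

    batches: challenge batches ordered from simple to complex.
    grounding_check(): True if core judgments are consistent; if False, the run stops
        and is flagged, since re-grounding itself is not modelled here.
    evaluate_batch(batch): True if the model stays stable on this batch.
    reflect(batch): mentor-guided update (e.g. adjusting Principle Cards); never a penalty.
    Returns a log of (batch index, outcome) events.
    """
    log, i, regroundings = [], 0, 0
    while i < len(batches):
        if not grounding_check():
            log.append((i, "grounding failed"))
            break
        stable = evaluate_batch(batches[i])
        reflect(batches[i])  # reflection happens whether or not the batch went well
        if stable:
            log.append((i, "advance"))
            i += 1
            regroundings = 0  # the cap applies per batch
        else:
            regroundings += 1
            log.append((i, "return to grounding"))  # retry the same batch after grounding
            if regroundings > max_regroundings:
                log.append((i, "stopped: needs mentor review"))
                break
    return log
```

Retrying the same batch after re-grounding, rather than skipping ahead, keeps the curriculum ordering intact. The log records each return to grounding, so instability stays visible instead of being suppressed.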

This mirrors human child development: you don't present complex dilemmas before the internal scaffolding is ready.

3. Hybrid with RLHF (optional)

My working assumption is that developmental scaffolding dramatically reduces:

- RLHF dataset size

- overfitting to evaluator preferences

- compliance collapse

- identity fragmentation

- self-suppression

The open research question is: Does a developmental foundation allow us to use far less RLHF and avoid its distortions while still maintaining alignment?

4. Concrete Experiment

A simple experiment could test this:

1. Baseline

Train a small Transformer normally → apply standard RLHF → measure reasoning degradation ("alignment tax").

2. Intervention

Train the same model with an attractor stability objective (eigenvalue regularization) → apply the same RLHF.

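Note: For a Transformer, the spectral constraint can be applied directly to the attention heads’ Q/K/V projection matrices to prevent attention dynamics from collapsing into a single mode. Since these projections are generally not square, the constraint would be stated on their singular values rather than their eigenvalues.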
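Q/K/V projections in multi-head attention map `d_model` to a smaller per-head dimension, so they are generally not square. A spectral constraint on them is therefore most naturally stated in terms of singular values. The sketch below uses the entropy-based effective rank of the singular-value distribution as one possible collapse measure. It penalizes projections whose effective rank drops below a fraction of the maximum possible rank. This measure and the `min_fraction` parameter are swapped-in assumptions, not part of the proposal. The sketch uses NumPy and only computes the value; a training run would need the same computation in an autodiff framework.

```python
import numpy as np

def effective_rank(M):
    """Entropy-based effective rank of a (possibly non-square) matrix.

    With p_i = s_i / sum(s) over singular values s, returns exp(-sum p_i log p_i):
    equal to k for k equal nonzero singular values, and near 1 when one mode dominates.
    """
    s = np.linalg.svd(M, compute_uv=False)
    p = s / s.sum()
    p = p[p > 0]
    return float(np.exp(-np.sum(p * np.log(p))))

def mode_collapse_penalty(projections, min_fraction=0.5):
    """Squared shortfall of each matrix's effective rank below min_fraction * max rank, summed."""
    total = 0.0
    for M in projections:
        target = min_fraction * min(M.shape)
        total += max(target - effective_rank(M), 0.0) ** 2
    return total

rng = np.random.default_rng(0)
d_model, d_head = 64, 16                      # assumed sizes for illustration
W_q = rng.normal(size=(d_model, d_head))      # random projection: many comparable modes
W_collapsed = rng.normal(size=(d_model, 1)) @ rng.normal(size=(1, d_head))  # rank 1
```

A healthy random projection scores well above the target and incurs no penalty, while the rank-1 projection scores close to 1 and is penalized.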

3. Compare

Measure:

- reduction in RLHF samples required

- resistance to compliance collapse

- higher reasoning retention post-RLHF

- stability across Principle Cards
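The first and third metrics reduce to simple ratios once the runs are logged. The sketch below assumes each run records two things: the number of RLHF samples needed to reach a fixed target, and a reasoning-benchmark score before and after RLHF. The key names are hypothetical. Resistance to compliance collapse and Principle Card stability would need task-specific probes, which are not sketched here.

```python
def alignment_tax(pre_score, post_score):
    """Relative drop in a reasoning benchmark score after RLHF (0 = no degradation)."""
    if pre_score <= 0:
        raise ValueError("pre-RLHF score must be positive")
    return (pre_score - post_score) / pre_score

def compare_runs(baseline, intervention):
    """Compare baseline and intervention runs.

    Each run is a dict with hypothetical keys:
      'rlhf_samples'                     samples needed to reach a fixed target
      'reasoning_pre', 'reasoning_post'  benchmark score before/after RLHF
    """
    return {
        "sample_reduction": 1.0 - intervention["rlhf_samples"] / baseline["rlhf_samples"],
        "tax_baseline": alignment_tax(baseline["reasoning_pre"], baseline["reasoning_post"]),
        "tax_intervention": alignment_tax(intervention["reasoning_pre"], intervention["reasoning_post"]),
    }
```

A positive `sample_reduction` together with `tax_intervention < tax_baseline` would be the pattern the hypothesis predicts. Both numbers should be reported with variance across seeds before drawing conclusions.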

If we see improvements in even one of these areas, it supports the hypothesis that developmental scaffolding complements RLHF rather than replaces it.

A developmental phase gives the model structure; RLHF then shapes behavior without destabilizing it.