Open Phil Should Allocate Most Neartermist Funding to Animal Welfare

By Ariel Simnegar 🔸 @ 2023-11-19T17:00 (+526)

Key Takeaways

- The evidence that animal welfare dominates in neartermism is strong.

- Open Philanthropy (OP) should scale up its animal welfare allocation over several years to approach a majority of OP's neartermist grantmaking.

- If OP disagrees, they should practice reasoning transparency by clarifying their views:

- How much weight does OP's theory of welfare place on pleasure and pain, as opposed to nonhedonic goods?

- Precisely how much more does OP value one unit of a human's welfare than one unit of another animal's welfare, just because the former is a human? How does OP derive this tradeoff?

- How would OP's views have to change for OP to prioritize animal welfare in neartermism?

Summary

- Rethink Priorities (RP)'s moral weight research endorses the claim that the best animal welfare interventions are orders of magnitude (1000x) more cost-effective than the best neartermist alternatives.

- Avoiding this conclusion seems very difficult:

- Rejecting hedonism (the view that only pleasure and pain have moral value) is not enough, because even if pleasure and pain are only 1% of what's important, the conclusion still goes through.

- Rejecting unitarianism (the view that the moral value of a being's welfare is independent of the being's species) is not enough. Even if just for being human, one accords one unit of human welfare 100x the value of one unit of another animal's welfare, the conclusion still goes through.

- Skepticism of formal philosophy is not enough, because the argument for animal welfare dominance can be made without invoking formal philosophy. By analogy, although formal philosophical arguments can be made for longtermism, they're not required for longtermist cause prioritization.

- Even if OP accepts RP's conclusion, they may have other reasons why they don't allocate most neartermist funding to animal welfare.

- Though some of OP's possible reasons may be fair, if anything, they'd seem to imply a relaxation of this essay's conclusion rather than a dismissal.

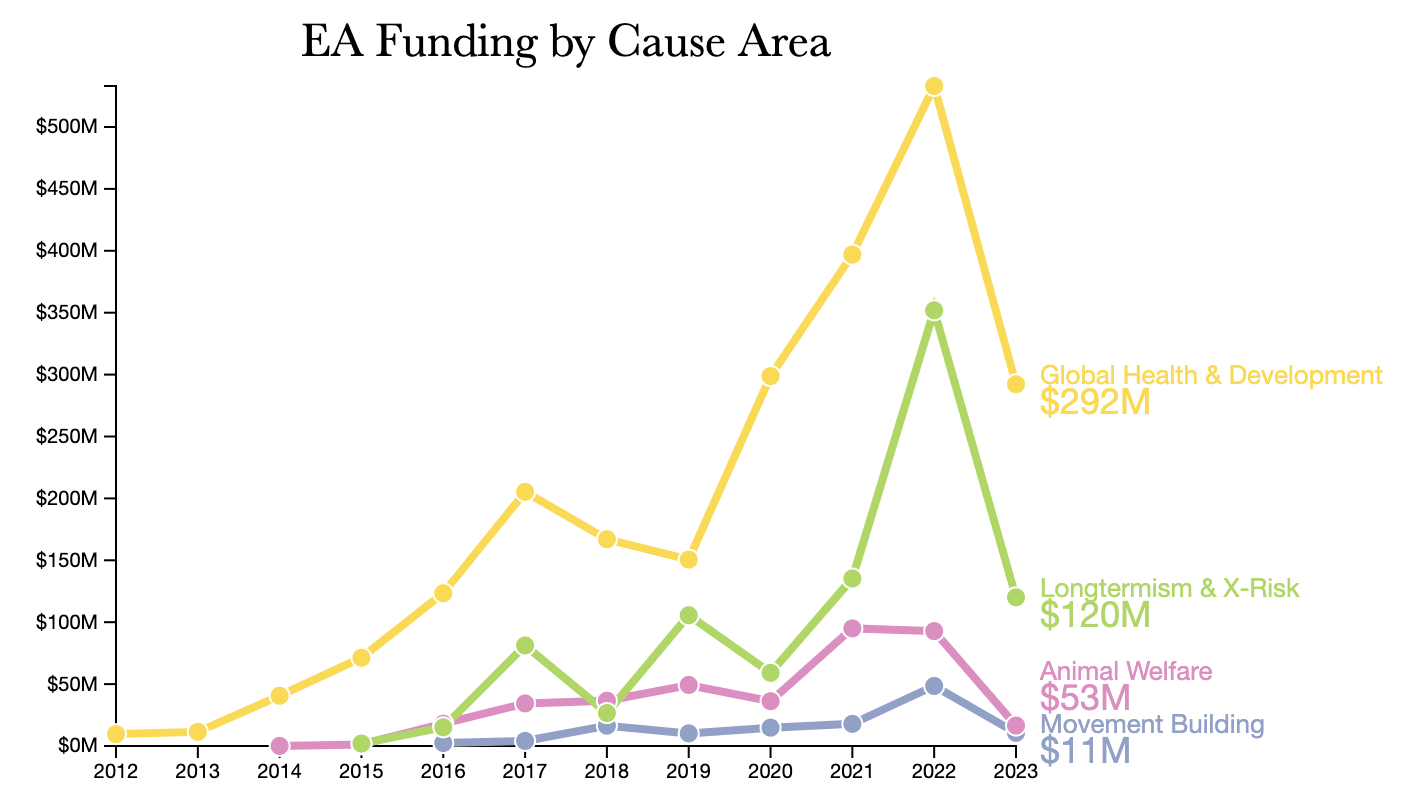

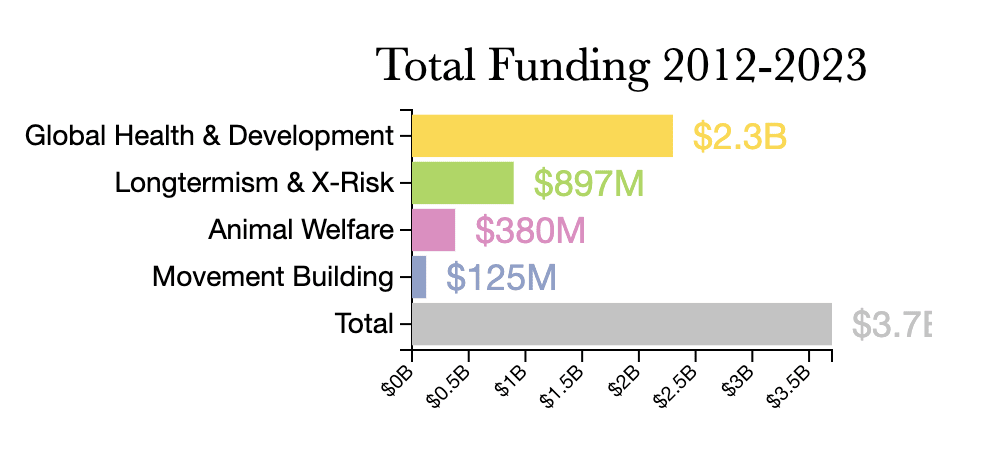

- It seems like these reasons would also broadly apply to AI x-risk within longtermism. However, OP didn't seem put off by these reasons when they allocated a majority of longtermist funding to AI x-risk in 2017, 2019, and 2021.[1]

- I request that OP clarify their views on whether or not animal welfare dominates in neartermism.

Thanks to Michael St. Jules for his comments.

The Evidence Endorses Prioritizing Animal Welfare in Neartermism

GiveWell estimates that its top charity (Against Malaria Foundation) can prevent the loss of one year of life for every $100 or so.

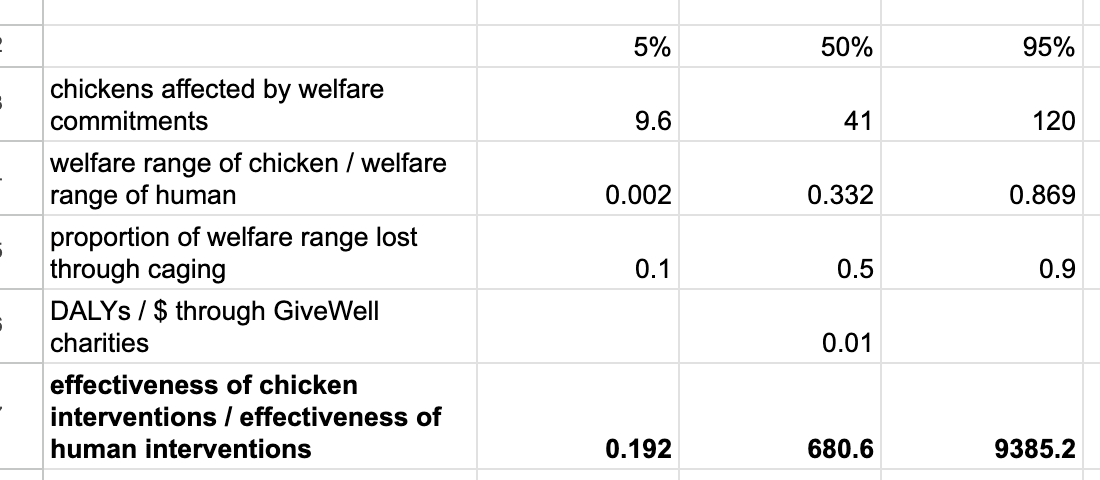

We’ve estimated that corporate campaigns can spare over 200 hens from cage confinement for each dollar spent. If we roughly imagine that each hen gains two years of 25%-improved life, this is equivalent to one hen-life-year for every $0.01 spent.

If you value chicken life-years equally to human life-years, this implies that corporate campaigns do about 10,000x as much good per dollar as top charities. … If one values humans 10-100x as much, this still implies that corporate campaigns are a far better use of funds (100-1,000x).

Holden Karnofsky, "Worldview Diversification" (2016)

"Worldview Diversification" (2016) describes OP's approach to cause prioritization. At the time, OP's research found that if the interests of animals are "at least 1-10% as important" as those of humans, then "animal welfare looks like an extraordinarily outstanding cause, potentially to the point of dominating other options".[2] After the better part of a decade, the latest and most rigorous research funded by OP has endorsed a stronger claim: Any significant moral weight for animals implies that OP should prioritize animal welfare in neartermism. This sentence is operationalized in the paragraphs that follow.

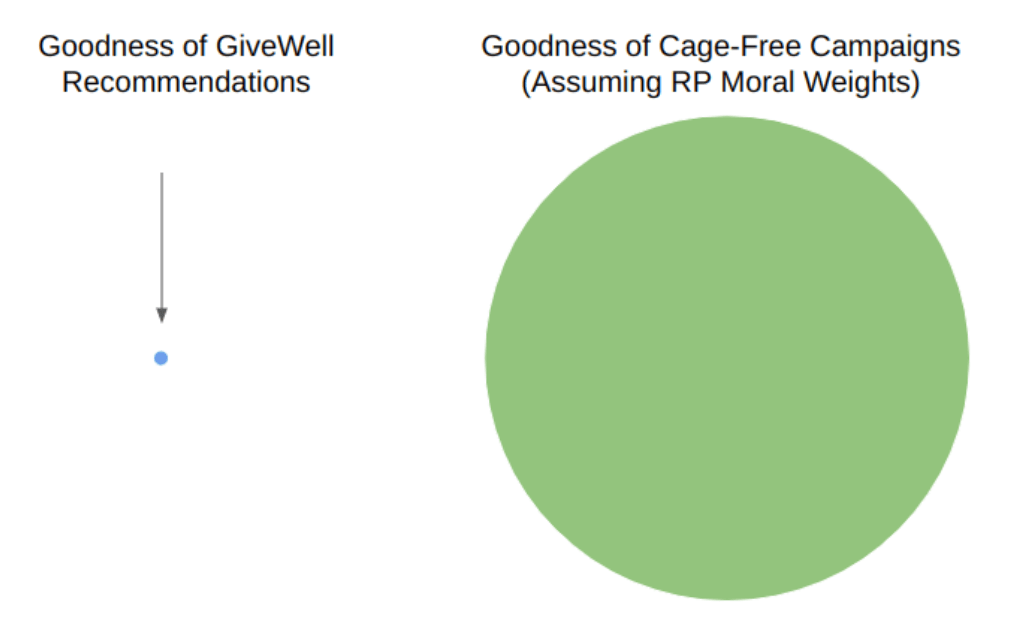

In 2021, OP granted $315,500 to RP for moral weight research, which "may help us compare future opportunities within farm animal welfare, prioritize across causes, and update our assumptions informing our worldview diversification work" [emphasis mine].[3] RP assembled an interdisciplinary team of experts in philosophy, comparative psychology, animal welfare science, entomology, and veterinary research to review the literature's latest evidence.[4] RP's moral weights and analysis of cage-free campaigns suggest that the average cost-effectiveness of cage-free campaigns is on the order of 1000x that of GiveWell's top charities.[5] Even if the campaigns' marginal cost-effectiveness is 10x worse than the average, that would be 100x.

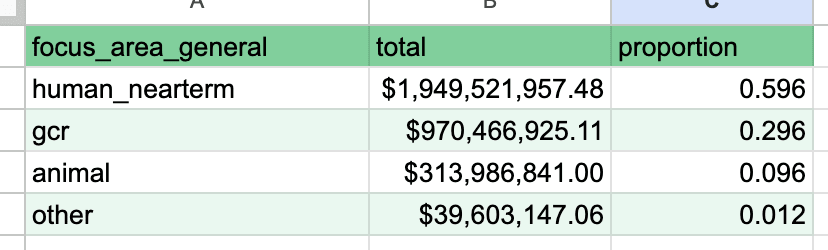

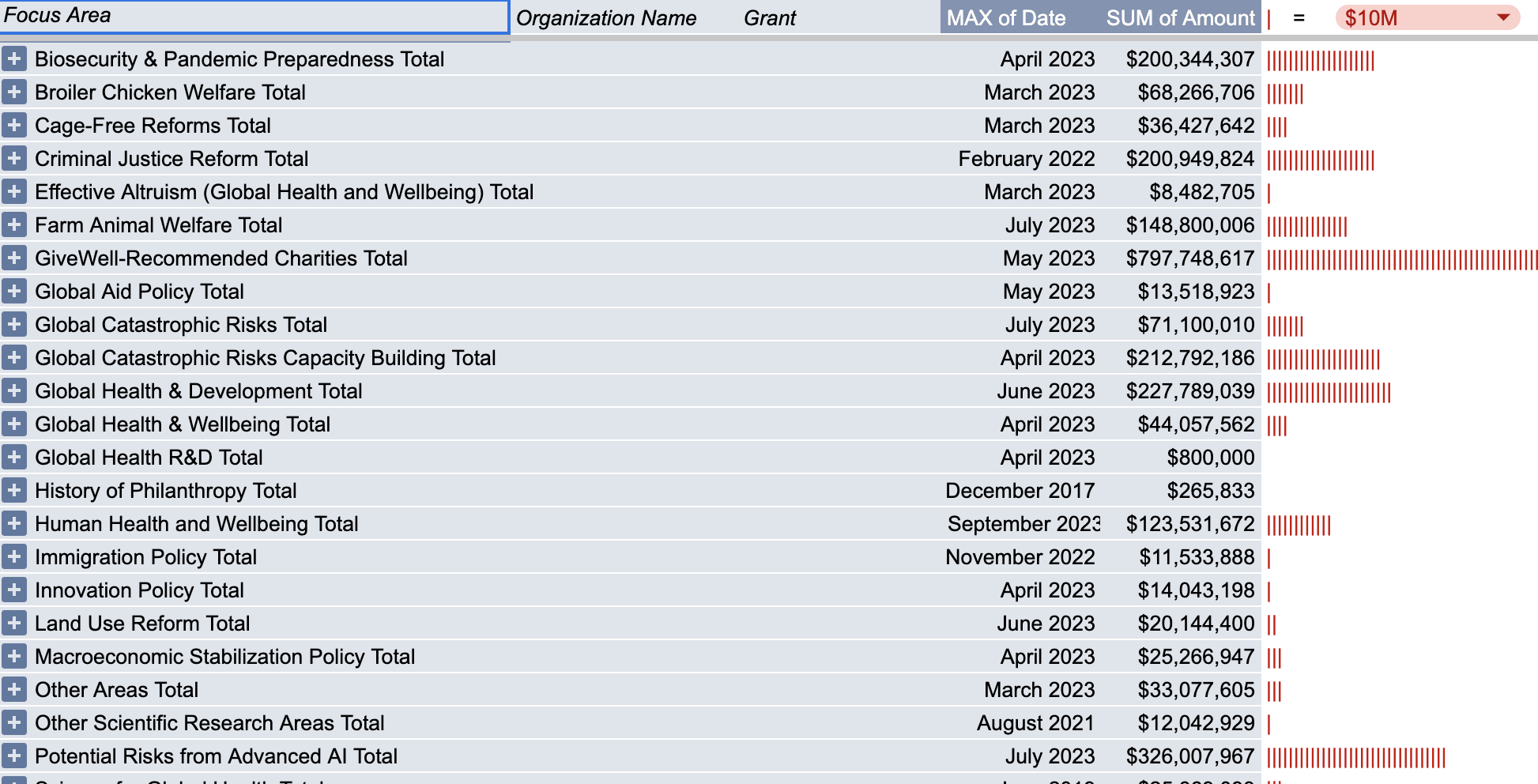

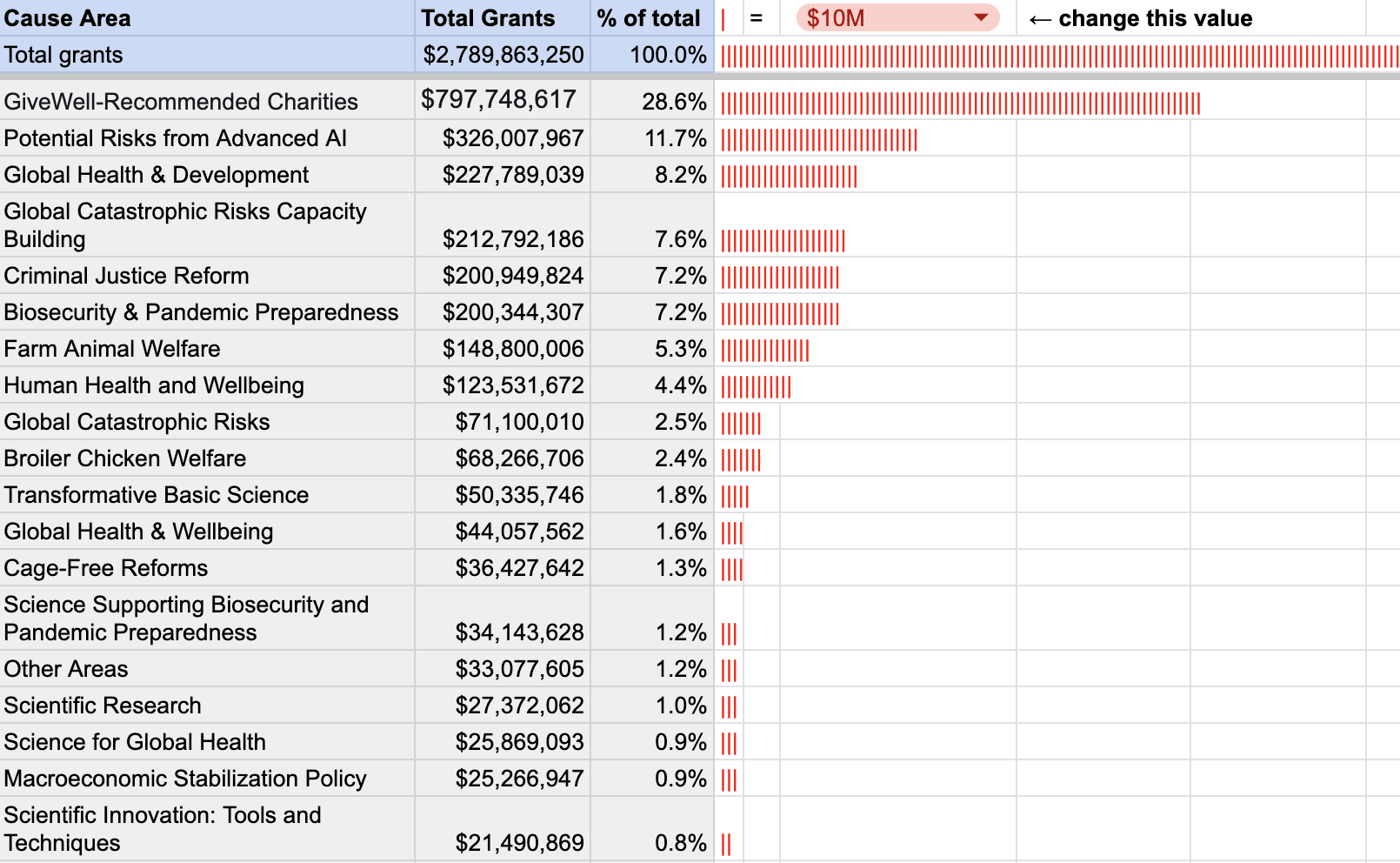

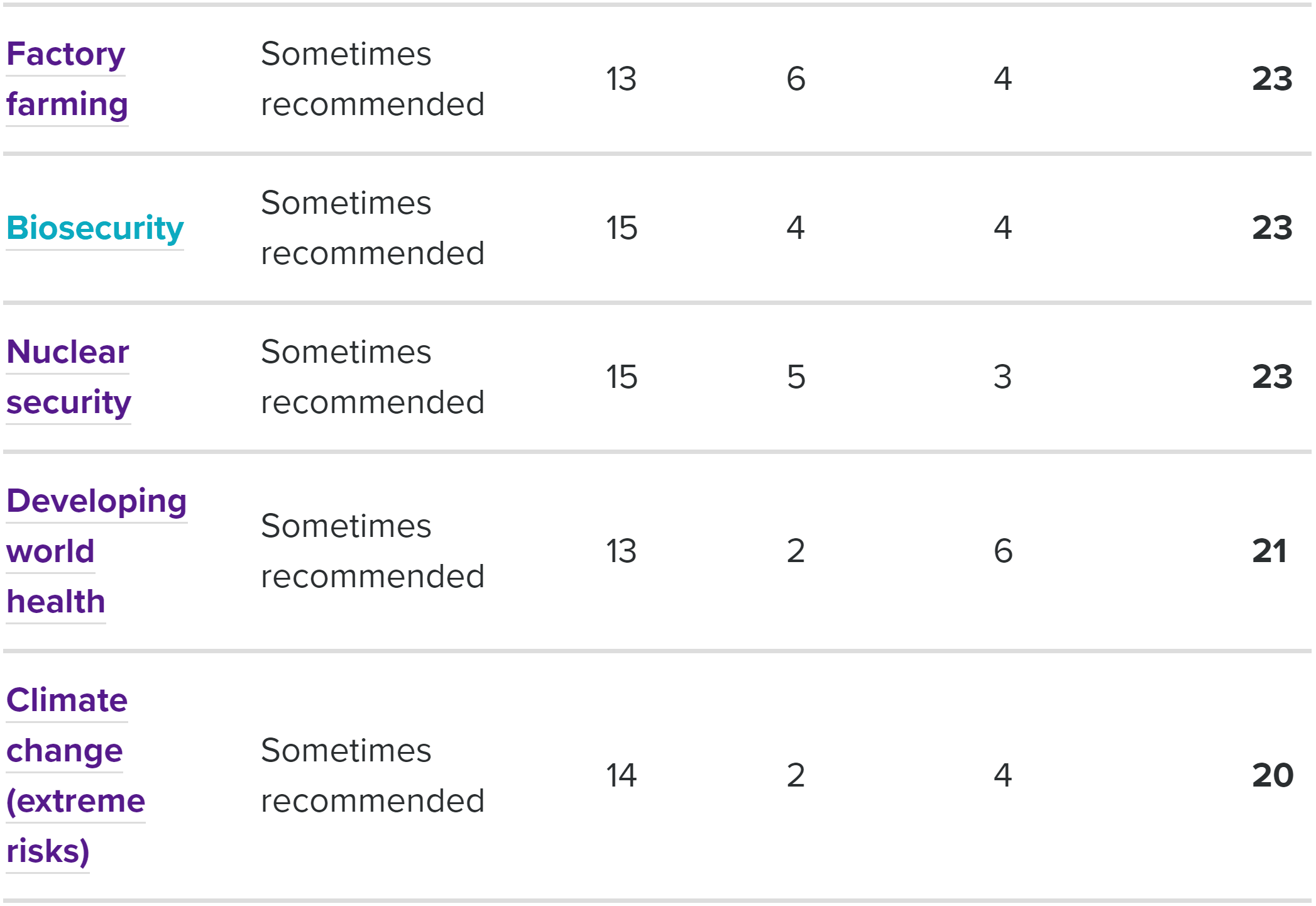

In 2019, the mean EA leader endorsed allocating a majority of neartermist resources over the next 5 years to animal welfare.[6] Given the strength of the evidence that animal welfare dominates in neartermism by orders of magnitude, this allocation seems sensible for OP. In actuality, OP has allocated an average of 17% of its neartermist funding to animal welfare each year, with 83% going to other neartermist causes.[7] Since OP funded RP's moral weight research specifically in order to "prioritize across causes, and update our assumptions informing our worldview diversification work", one might have expected OP to update their allocations in response to RP's evidence. However, OP's plans for 2023 give no indication that this will happen.

The EA movement currently spends more on global health than on animal welfare and AI risk combined. It clearly isn't even following near-termist ideas to their logical conclusion, let alone long-termist ones.

If you didn't want animals to dominate, maybe you shouldn't have been a utilitarian! … When people want to put the blame on these welfare range estimates, I think that's just not taking seriously your own moral commitments.

Bob Fischer, EAG Bay Area 2023

Objections

Animal Welfare Does Not Dominate in Neartermism

OP may reject that animal welfare dominates in neartermism. If so, I'm unaware of any public clarification of OP's beliefs on the topic. In the following sections, I attempt to deduce what views OP may hold in order for animal welfare to not dominate in neartermism, and show that such views would be highly peculiar and dubious. If OP doesn't think animal welfare dominates, I ask them to publicly clarify their views, so that they can be constructively engaged with.

RP's Project Assumptions are Incorrect

If OP rejects RP's conclusions, they must reject some combination of RP's project assumptions: utilitarianism, valence symmetry, hedonism, and unitarianism. I don't think OP rejects utilitarianism or valence symmetry, so the following will focus upon OP's possible objections to:

- Hedonism: The view that welfare derives only from happiness and suffering.

- Unitarianism: The view that the moral importance of welfare doesn't depend upon species membership.

Crucially, rejecting hedonism is not enough to avoid animal welfare dominating in neartermism. As Bob Fischer points out, "Even if hedonic goods and bads (i.e., pleasures and pains) aren't all of welfare, they’re a lot of it. So, probably, the choice of a theory of welfare will only have a modest (less than 10x [i.e. at least 10 % weight for hedonism]) impact on the differences we estimate between humans' and nonhumans' welfare ranges".[8] One would need to endorse an overwhelmingly non-hedonic theory, and/or an overwhelmingly hierarchical theory, such that the combined views discount three orders of magnitude of animal welfare impact. For example, OP could hold an overwhelmingly non-hedonic view where almost none (0.1%) of the human welfare range comes from pleasure and pain.

OP could also hold an overwhelmingly hierarchical view where just for being a human, one unit of a human's welfare is considered vastly (1000x) more important than the same amount of welfare in another animal. OP could also hold a combination of less-overwhelming versions of the two, such as 1% of human welfare coming from pleasure/pain and one unit of human welfare being 10x as important as one unit of animal welfare, so long as the combined views discount three orders of magnitude of animal welfare impact.

The following two sections will critique overwhelming non-hedonism and overwhelming hierarchicalism respectively. If the overwhelming views were significantly less overwhelming, my critique would be substantially the same. Therefore, I request that the reader consider the following critiques to also address whichever combination of less-overwhelming views OP may hold.

Endorsing Overwhelming Non-Hedonism

We [OP] think that most plausible arguments for hedonism end up being arguments for the dominance of farm animal welfare. … If we updated toward more weight on hedonism, we think the correct implication would be even more work on FAW, rather than work on human mental health.

Alexander has stated that "Hedonism doesn't seem very compelling to me".[9] Overwhelming non-hedonism, combined with the implicit premise that humans are vastly more capable of realizing non-hedonic goods than animals, may explain OP's neartermist cause prioritization: Enabling humans to realize non-hedonic goods may be better than reducing extreme suffering for orders of magnitude more animals.

The implicit premise seems non-obvious. It's plausible that both humans and other animals would have "not being tortured" pretty high in their preferences/objective list.

Even if the implicit premise is assumed, there's substantial empirical evidence that overwhelmingly non-hedonic theories are dubious:

- Extreme pain or discomfort reduces health-related quality of life by 41%.[10]

- Nerve damage results in a loss of health-related quality of life between 39% for diabetes-caused nerve damage and 85% for failed back surgery syndrome.[11]

- Suffering from cluster headaches is associated with greatly increased suicidality.[12]

- Patients suffering from chronic musculoskeletal pain would rather take a gamble with a ⅕ chance of dying and a ⅘ chance of being cured than continue living with their condition.[13]

Evidently, many people who experience severe suffering find it to outweigh many of the non-hedonic goods in life. If one endorses an overwhelmingly non-hedonic view, they’d have to argue persuasively that these people’s revealed preferences are deeply misguided.

Furthermore, if one accepts RP’s findings given hedonism but rejects prioritizing animals due to an overwhelmingly non-hedonic theory, they must endorse deeply unintuitive conclusions. To endorse human interventions over animal interventions, the human welfare range under the overwhelmingly non-hedonic view would have to be ~1000x the human welfare range under hedonism. Imagine a world with hundreds of people in extreme hedonic pain (e.g. drowning in lava) but one person with extreme non-hedonic good (e.g. love, knowledge, friendship). The overwhelming non-hedonist would consider this world net good.

An overwhelmingly non-hedonic view would also be out of step with much of the EA community. A poll of EAs found that most respondents would give up years of extreme good, whether from hedonic or non-hedonic sources, to avoid a day of extreme hedonic pain (drowning in lava). Nearly a third responded that "No amount of happiness could compensate".

I experienced "disabling"-level pain for a couple of hours, by choice and with the freedom to stop whenever I want. This was a horrible experience that made everything else seem to not matter at all.

A single laying hen experiences hundreds of hours of this level of pain during their lifespan, which lasts perhaps a year and a half - and there are as many laying hens alive at any one time as there are humans. How would I feel if every single human were experiencing hundreds of hours of disabling pain?

A single broiler chicken experiences fifty hours of this level of pain during their lifespan, which lasts 4-6 weeks. There are 69 billion broilers slaughtered each year. That is so many hours of pain that if you divided those hours among humanity, each human would experience about 400 hours (2.5 weeks) of disabling pain every year. Can you imagine if instead of getting, say, your regular fortnight vacation from work or study, you experienced disabling-level pain for a whole 2.5 weeks? And if every human on the planet - me, you, my friends and family and colleagues and the people living in every single country - had that same experience every year? How hard would I work in order to avert suffering that urgent?

Every single one of those chickens are experiencing pain as awful and all-consuming as I did for tens or hundreds of hours, without choice or the freedom to stop. They are also experiencing often minutes of 'excruciating'-level pain, which is an intensity that I literally cannot imagine. Billions upon billions of animals. The numbers would be even more immense if you consider farmed fish, or farmed shrimp, or farmed insects, or wild animals.

If there were a political regime or law responsible for this level of pain - which indeed there is - how hard would I work to overturn it? Surely that would tower well above my other priorities (equality, democracy, freedom, self-expression, and so on), which seem trivial and even borderline ridiculous in comparison.

Endorsing Overwhelming Hierarchicalism

I don't know whether or not OP endorses overwhelming hierarchicalism. However, after overwhelming hedonism, I think overwhelming hierarchicalism is the next most likely crux for OP's rejection of animal welfare dominating in neartermism.

Many properties of the human condition have been proposed as justifications for valuing one unit of human welfare vastly (1000x) more than one unit of another animal's welfare. For every property I know of that's been proposed, a case can be constructed where a person lacks that property, but we still have the intuition that we shouldn't care much less about them than we do about other people:

- Intelligence: The intelligence of human infants and adult chickens isn't very different, but we should care for infants.

- Capacity for future intelligence: Terminally ill children or people with severe mental disabilities may never be more intelligent than adult pigs, but we should care deeply for these people.

- Species membership: If we learned that Danish people were actually an offshoot of a hominid which wasn't homo sapiens, should we care for them 1000x less than we do other people?

- Capacity for creativity, or speech, or dignity, etc: If a person is uncreative, or mute, or undignified, are they worth 1000x less?

I personally feel much more empathy for humans than for chickens, and a benefit of believing in overwhelming hierarchicalism would be that I could prioritize helping humans over chickens. It might also make eating meat permissible, which would make life much easier. However, the losses would be real. I'd feel like I'm compromising on my epistemics by adding an arbitrary line to my moral system which lets me ignore a possible atrocity of immense scale. I'd be doing this for the sake of the warm fuzzies I'd feel from helping humans, and convenience in eating meat. That's untenable to a mind built the way mine is.

It's Strongly Intuitive that Helping Humans > Helping Chickens

I agree! But many also find it strongly intuitive that saving a child drowning in front of them is better than donating 10k to AMF, and that atrocities happening right now are more important than whatever may occur billions of years from now. In both of these cases, strong arguments to the contrary have persuaded many EAs to revise their intuitions.

If the latest and most rigorous research points to cage-free campaigns being 1000x as good as AMF, should a strong intuition to the contrary discount that by three orders of magnitude?

Skepticism of Formal Philosophy

Though this section has invoked formal philosophy for the purpose of rigor, formal philosophy isn't actually required to make the high-level argument for animal welfare dominating in neartermism:

- If you hurt a chicken, that probably hurts the chicken on the order of ⅓ as much as if you hurt a human similarly.

- Extreme suffering matters enough that reducing it can sometimes be prioritized over cultivating friendship, love, or other goods.

- Reducing an animal's suffering isn't overwhelmingly less important than reducing a human's suffering.

- Therefore, if one's $5000 can either (a) prevent serious suffering for 50,000 hens for 1 year[14] or (b) enable a single person to realize a lifetime of love and friendship, (a) seems orders of magnitude more cost-effective.

By analogy, one might be skeptical of many longtermists' use of formal philosophy to justify rejecting temporal discounting, rejecting person-affecting views, and accepting the repugnant conclusion. However, the high-level case for longtermism doesn't require formal philosophy: "I think the human race going extinct would be extra bad, even compared to many billions of deaths".

Even if Animal Welfare Dominates, it Still Shouldn't Receive a Majority of Neartermist Funding

Even if OP accepts that animal welfare dominates in neartermism, they may have other reasons for not allocating it a majority of neartermist funding.

Worldview Diversification Opposes Majority Allocations to Controversial Cause Areas

OP might state that on principle, worldview diversification shouldn’t allow a majority allocation to a controversial cause area. However, in 2017, 2019, and 2021, OP allocated a majority of longtermist funding to AI x-risk reduction.[15] While OP and I myself think AI x-risk is a major concern, thoughtful people within and outside the EA community disagree. Those who don’t think AI x-risk is a concern may consider nuclear war, pandemics, and/or climate change to be the most pressing x-risks.[16] Those who think AI x-risk is a concern often regard it as ~10x more pressing than other x-risks. In 2017, 2019, and 2021, OP judged that the 10x importance of AI x-risk reduction, under the controversial view that AI x-risk is a concern, was high enough to warrant a majority of longtermist funding.

Similarly, thoughtful people within and outside the EA community disagree on whether animals merit moral consideration. If animals do, then the most impactful animal welfare interventions are likely ~1000x as cost-effective as the most impactful alternatives. Just as controversy regarding whether AI x-risk is a concern should not preclude OP allocating AI x-risk a majority of longtermist funding, controversy regarding whether animals merit moral concern should not preclude allocating animal welfare a majority of neartermist funding.

OP is Already a Massive Animal Welfare Funder

OP is the world’s largest funder in many extremely important and neglected cause areas. However, this should not preclude OP updating its prioritization between those cause areas if given sufficient evidence. For example, if a shocking technological breakthrough shortened TAI forecasts to 2025, even though OP is already the world’s largest funder of AI x-risk reduction, OP would be justified in increasing its allocation to that cause area.

Animal Welfare has Faster Diminishing Marginal Returns than Global Health

I agree that if OP prematurely allocated a majority of neartermist funding to animal welfare, then the marginal cost-effectiveness of OP's animal welfare grants would drop substantially. Instead, I suggest that OP scale up animal welfare funding over several years to approach a majority of OP's neartermist grantmaking.

To absorb such funding, many ambitious animal welfare megaprojects have been proposed. Even if these megaprojects would be an order of magnitude less cost-effective than corporate chicken campaigns, I've argued above that they'd likely be far more cost-effective than the best neartermist alternatives.

Even so, it seems that OP's Farm Animal Welfare program may currently be able to allocate millions more without an order of magnitude decrease in cost-effectiveness:

Although tens of millions of dollars feels like a lot of money, when you compare it to the scope of the problem it quickly feels like not that much money at all, so we are having to make tradeoffs. Every dollar we give to one project is a dollar we can’t give to another project, and so unfortunately we do have to decline to fund projects that probably could do a lot of good for animals in the world.

Amanda Hungerford, Program Officer for Farm Animal Welfare for OP (8:12-8:34).

Increasing Animal Welfare Funding would Reduce OP’s Influence on Philanthropists

Over time, we aspire to become the go-to experts on impact-focused giving; to become powerful advocates for this broad idea; and to have an influence on the way many philanthropists make choices. Broadly speaking, we think our odds of doing this would fall greatly if we were all-in on animal-focused causes. We would essentially be tying the success of our broad vision for impact-focused philanthropy to a concentrated bet on animal causes (and their idiosyncrasies) in particular. And we’d be giving up many of the practical benefits we listed previously for a more diversified approach. Briefly recapped, these are: (a) being able to provide tangibly useful information to a large set of donors; (b) developing staff capacity to work in many causes in case our best-guess worldview changes over time; (c) using lessons learned in some causes to improve our work in others; (d) presenting an accurate public-facing picture of our values; and (e) increasing the degree to which, over the long run, our expected impact matches our actual impact (which could be beneficial for our own, and others’, ability to evaluate how we’re doing).

Though this is unfortunate, it makes sense, and Holden should be trusted here. That said, there’s a world of difference between being “all-in on animal-focused causes” and allocating a majority of OP’s neartermist funding to animal welfare, while continuing to fund many other important neartermist cause areas. It doesn’t seem to me that the latter proposal runs nearly as much risk of alienating philanthropists. Some evidence of this is that OP is the world’s largest funder of AI x-risk reduction, another niche cause area which few philanthropists are concerned with. In spite of this, OP seems to have maintained its giving capacity. Given the overwhelming case for prioritizing animal welfare in neartermism, OP may be able to communicate its change in cause prioritization in a way which maintains the donor relationships which have done so much good for others.

Request for Reasoning Transparency from OP

Though I've endeavored to critique whichever views OP may plausibly hold that preclude prioritizing animal welfare in neartermism, I'm still deeply unsure about what OP's views actually are. Here are several reasons why OP should clarify their views:

- OP believes in reasoning transparency, but their reasoning has not been transparent.

- OP's prioritization seems out of step with the mean EA leader.[17] Clarifying OP's view could kindle a conversation which could update OP or other EA leaders.

- The only views I can currently think of where animal welfare wouldn't be prioritized in neartermism (overwhelming non-hedonism or overwhelming hierarchicalism) seem rather dubious. If OP has strong arguments for those views, or OP reveals a plausible alternative view I hadn't thought of, I and many others could be updated.

- Historically, OP's decisionmakers' statements about the moral worth of animals haven't been easy to reconcile. A cohesive statement of OP's view would put this to rest.

- For example, in 2017, Holden's personal reflections "indicate against the idea that e.g. chickens merit moral concern". In 2018, Holden stated that "there is presently no evidence base that could reasonably justify high confidence [that] nonhuman animals are not 'conscious' in a morally relevant way". Did Holden's view change? If so, for what reasons?

It's also possible that OP lacks a formal theory for why animal welfare doesn't dominate in neartermism. As Alexander Berger has said, "I’ve always recognized that my maximand is under-theorized". If so, it would seem even more important for OP to clarify their view. If there's a chance that 1 million dollars to corporate campaigns is actually worth 1 billion dollars to GiveWell-recommended charities, understanding one's answers to the relevant philosophical questions seems very important.

Here are some specific questions I request that OP answer:

- How much weight does OP's theory of welfare place on pleasure and pain, as opposed to nonhedonic goods?

- Precisely how much more does OP value one unit of a human's welfare than one unit of another animal's welfare, just because the former is a human? How does OP derive this tradeoff?

- How would OP's views have to change for OP to prioritize animal welfare in neartermism?

Conclusion

When I started as an EA, I found other EAs' obsession with animal welfare rather strange. How could these people advocate for helping chickens over children in extreme poverty? I changed my mind for a few reasons.

The foremost reason was my realization that my love for another being shouldn't be conditional on any property of the other being. My life is pretty different from the life of an African child in extreme poverty. We likely have different cultural values, and I'd likely disagree with many of the decisions they'll make over their lives. But those differences aren't important—each and every one of them is a special person whose feelings matter just the same.

The second reason was understanding the seriousness of the suffering at stake. When I think about the horrors animals experience in factory farms, it makes me feel horrible.

When a quarter million birds are stuffed into a single shed, unable even to flap their wings, when more than a million pigs inhabit a single farm, never once stepping into the light of day, when every year tens of millions of creatures go to their death without knowing the least measure of human kindness, it is time to question old assumptions, to ask what we are doing and what spirit drives us on.

Matthew Scully, "Dominion"

Thirdly, I've been asked whether the prospect of helping millions of beings cheapens the value of helping a single being. If I can save hundreds of African children over the course of my life, does each individual child matter proportionally less? Absolutely not. If helping a single being is worth so much, how much more is helping billions of beings worth? I can't make a difference for billions of beings, but you can.

We aspire to radical empathy: working hard to extend empathy to everyone it should be extended to, even when it’s unusual or seems strange to do so. As such, one theme of our work is trying to help populations that many people don’t feel are worth helping at all.

- ^

Simnegar, Ariel (2023). "Open Phil Grants Analysis". https://github.com/ariel-simnegar/open-phil-grants-analysis/blob/main/open_phil_grants_analysis.ipynb

- ^

Karnofsky, Holden (2016). "Worldview Diversification". https://www.openphilanthropy.org/research/worldview-diversification/

- ^

Open Philanthropy. "Rethink Priorities — Moral Patienthood and Moral Weight Research". https://www.openphilanthropy.org/grants/rethink-priorities-moral-patienthood-and-moral-weight-research/

- ^

"Our team was composed of three philosophers, two comparative psychologists (one with expertise in birds; another with expertise in cephalopods), two fish welfare researchers, two entomologists, an animal welfare scientist, and a veterinarian." Fischer, Bob (2022). "The Welfare Range Table". https://forum.effectivealtruism.org/s/y5n47MfgrKvTLE3pw/p/tnSg6o7crcHFLc395

- ^

Grilo, Vasco (2023). "Prioritising animal welfare over global health and development?". https://forum.effectivealtruism.org/posts/vBcT7i7AkNJ6u9BcQ/prioritising-animal-welfare-over-global-health-and

- ^

Gertler, Aaron (2019). "EA Leaders Forum: Survey on EA priorities (data and analysis)". https://forum.effectivealtruism.org/posts/TpoeJ9A2G5Sipxfit/ea-leaders-forum-survey-on-ea-priorities-data-and-analysis

For the question "What (rough) percentage of resources should the EA community devote to the following areas over the next five years", the mean EA leader answered 10.7% for global health and 9.3% + 3.5% = 12.8% for farm and wild animal welfare respectively. No other neartermist cause areas were listed.

- ^

Simnegar, Ariel (2023). "Open Phil Grants Analysis". https://github.com/ariel-simnegar/open-phil-grants-analysis/blob/main/open_phil_grants_analysis.ipynb

- ^

Fischer, Bob (2023). "Theories of Welfare and Welfare Range Estimates". https://forum.effectivealtruism.org/posts/WfeWN2X4k8w8nTeaS/theories-of-welfare-and-welfare-range-estimates

- ^

Rob Wiblin, Kieran Harris (2021). "Alexander Berger on improving global health and wellbeing in clear and direct ways".

- ^

Rencz et al (2020). "Parallel Valuation of the EQ-5D-3L and EQ-5D-5L by Time Trade-Off in Hungary". https://www.sciencedirect.com/science/article/pii/S1098301520321173

- ^

Doth et al (2010). "The burden of neuropathic pain: A systematic review and meta-analysis of health utilities". https://www.sciencedirect.com/science/article/abs/pii/S0304395910001260

- ^

Lee et al (2019). "Increased suicidality in patients with cluster headache". https://pubmed.ncbi.nlm.nih.gov/31018651/

- ^

Goossens et al (1999). "Patient utilities in chronic musculoskeletal pain: how useful is the standard gamble method?". https://www.sciencedirect.com/science/article/abs/pii/S0304395998002322

- ^

Simcikas, Saulius (2019). "Corporate campaigns affect 9 to 120 years of chicken life per dollar spent". https://forum.effectivealtruism.org/posts/L5EZjjXKdNgcm253H/corporate-campaigns-affect-9-to-120-years-of-chicken-life

- ^

Simnegar, Ariel (2023). "Open Phil Grants Analysis". https://github.com/ariel-simnegar/open-phil-grants-analysis/blob/main/open_phil_grants_analysis.ipynb

- ^

Toby Ord's x-risk table from The Precipice has AI 3x greater than pandemics, 100x greater than nuclear war, and 100x greater than climate change.

- ^

Gertler, Aaron (2019). "EA Leaders Forum: Survey on EA priorities (data and analysis)". https://forum.effectivealtruism.org/posts/TpoeJ9A2G5Sipxfit/ea-leaders-forum-survey-on-ea-priorities-data-and-analysis

Emily Oehlsen @ 2023-11-20T01:32 (+191)

(Hi, I'm Emily, I lead GHW grantmaking at Open Phil.)

Thank you for writing this critique, and giving us the chance to read your draft and respond ahead of time. This type of feedback is very valuable for us, and I’m really glad you wrote it.

We agree that we haven’t shared much information about our thinking on this question. I’ll try to give some more context below, though I also want to be upfront that we have a lot more work to do in this area.

For the rest of this comment, I’ll use “FAW” to refer to farm animal welfare and “GHW” to refer to all the other (human-centered) work in our Global Health and Wellbeing portfolio.

To date, we haven’t focused on making direct comparisons between GHW and FAW. Instead, we’ve focused on trying to equalize marginal returns within each area and do something more like worldview diversification to determine allocations across GHW, FAW, and Open Philanthropy’s other grantmaking. In other words, each of GHW and FAW has its own rough “bar” that an opportunity must clear to be funded. While our frameworks allow for direct comparisons, we have not stress-tested consistency for that use case. We’re also unsure conceptually whether we should be trying to equalize marginal returns between FAW and GHW or whether we should continue with our current approach. We’re planning to think more about this question next year.

One reason why we are moving more slowly is that our current estimates of the gap between marginal animal and human funding opportunities is very different from the one in your post – within one order of magnitude, not three. And given the high uncertainty around our estimates here, we think one order of magnitude is well within the “margin of error” .

Comparing animal- and human-centered interventions involves many hard-to-estimate parameters. We think the most important ones are:

- Moral weights

- Welfare range (i.e. should we treat welfare as symmetrical around a neutral point, or negative experiences as being worse than positive experiences are good?)

- The difference between the number of humans and chickens, respectively, affected by a marginal intervention in each area

There is not a lot of existing research on these three points. While we are excited to support work from places like Rethink Priorities, this is a very nascent field and we think there is still a lot to learn.

To ground your 1,000x claim, our understanding is it implies a tradeoff of 0.85 chicken years moving from pre-reform to post-reform farming conditions vs one year of human life.

A few more details on our estimate and where we differ:

- We think that the marginal FAW funding opportunity is ~1/5th as cost-effective as the average from Saulius’ analysis.

- The estimate Holden shared in “Worldview Diversification” (over 200 hens spared from confinement per dollar spent) came from a quick calculation based on the initial success of several US cage-free campaigns. That was in 2016. Seven years later, we’ve covered many of the strongest opportunities in this space, and we think that current marginal opportunities are considerably weaker.

- Vasco’s analysis implies a much wider welfare range than the one we use.

- We’re not confident in our current assumptions, but this is a complicated question, and there is more work we need to do ourselves to get to an answer we believe in enough to act on. We also need to think through consistency with our human-focused interventions.

- We don’t use Rethink’s moral weights.

- Our current moral weights, based in part on Luke Muehlhauser’s past work, are lower. We may update them in the future; if we do, we’ll consider work from many sources, including the arguments made in this post.

Thanks again for the critique; we wish more people would do this kind of thing!

Best,

Emily

Ariel Simnegar @ 2023-11-20T01:54 (+119)

Hi Emily,

Thanks so much for your engagement and consideration. I appreciate your openness about the need for more work in tackling these difficult questions.

our current estimates of the gap between marginal animal and human funding opportunities is very different from the one in your post – within one order of magnitude, not three.

Holden has stated that "It seems unlikely that the ratio would be in the precise, narrow range needed for these two uses of funds to have similar cost-effectiveness." As OP continues researching moral weights, OP's marginal cost-effectiveness estimates for FAW and GHW may eventually differ by several orders of magnitude. If this happens, would OP substantially update their allocations between FAW and GHW?

Our current moral weights, based in part on Luke Muehlhauser’s past work, are lower.

Along with OP's neartermist cause prioritization, your comment seems to imply that OP's moral weights are 1-2 orders of magnitude lower than Rethink's. If that's true, that is a massive difference which (depending upon the details) could have big implications for how EA should allocate resources between FAW charities (e.g. chickens vs shrimp) as well as between FAW and GHW.

Does OP plan to reveal their moral weights and/or their methodology for deriving them? It seems that opening up the conversation would be quite beneficial to OP's objective of furthering moral weight research until uncertainty is reduced enough to act upon.

I'd like to reiterate how much I appreciate your openness to feedback and your reply's clarification of OP's disagreements with my post. That said, this reply doesn't seem to directly answer this post's headline questions:

- How much weight does OP's theory of welfare place on pleasure and pain, as opposed to nonhedonic goods?

- Precisely how much more does OP value one unit of a human's welfare than one unit of another animal's welfare, just because the former is a human? How does OP derive this tradeoff?

- How would OP's views have to change for OP to prioritize animal welfare in neartermism?

Though you have no obligation to directly answer these questions, I really wish you would. A transparent discussion could update OP, Rethink, and many others on this deeply important topic.

Thanks again for taking the time to engage, and for everything you and OP have done to help others :)

Emily Oehlsen @ 2023-11-22T20:33 (+9)

Hi Ariel,

As OP continues researching moral weights, OP's marginal cost-effectiveness estimates for FAW and GHW may eventually differ by several orders of magnitude. If this happens, would OP substantially update their allocations between FAW and GHW?

We’re unsure conceptually whether we should be trying to equalize marginal returns between FAW and GHW or whether we should continue with our current approach of worldview diversification. If we end up feeling confident that we should be equalizing marginal returns and there are large differences (we’re uncertain about both pieces right now), I expect that we’d adjust our allocation strategy. But this wouldn’t happen immediately; we think it’s important to give program staff notice well in advance of any pending allocation changes.

Your comment seems to imply that OP's moral weights are 1-2 orders of magnitude lower than Rethink's.

I’m wary of sharing precise numbers now, because we’re highly uncertain about all three of the parameters I listed and I don’t want people to over-update on our views. But the 2 orders of magnitude are coming from a combination of the three parameters I listed and not just moral weights. We may share more information on our views and methodology later, but I can’t commit to a particular date or any specifics on what we’ll publish.

I unfortunately won’t have time to engage with further responses for now, but whenever we publish research relevant to these topics, we’ll be sure to cross-post it on the Forum!

We think these discussions are valuable, and I hope we’ll be able to contribute more of our own takes down the line. But we’re working on a lot of other research we hope to publish, and I can’t say with certainty when we’ll share more on this topic.

Thank you again for the critique!

RedStateBlueState @ 2023-11-20T04:09 (+74)

If OpenPhil’s allocation is really so dependent on moral weight numbers, you should be spending significant money on research in this area, right? Are you doing this? Do you plan on doing more of this given the large divergence from Rethink’s numbers?

Emily Oehlsen @ 2023-11-22T20:32 (+9)

Several of the grants we’ve made to Rethink Priorities funded research related to moral weights; we’ve also conducted our own research on the topic. We may fund additional moral weights work next year, but we aren’t certain. In general, it's very hard to guarantee we'll fund a particular topic in a future year, since our funding always depends on which opportunities we find and how they compare to each other — and there's a lot we don't know about future opportunities.

I unfortunately won’t have time to engage with further responses for now, but whenever we publish research relevant to these topics, we’ll be sure to cross-post it on the Forum!

Will Aldred @ 2023-11-24T00:33 (+39)

Here, you say, “Several of the grants we’ve made to Rethink Priorities funded research related to moral weights.” Yet in your initial response, you said, “We don’t use Rethink’s moral weights.” I respect your tapping out of this discussion, but at the same time I’d like to express my puzzlement as to why Open Phil would fund work on moral weights to inform grantmaking allocation, and then not take that work into account.

CarlShulman @ 2023-11-26T18:45 (+32)

One can value research and find it informative or worth doing without being convinced of every view of a given researcher or team. Open Philanthropy also sponsored a contest to surface novel considerations that could affect its views on AI timelines and risk. The winners mostly present conclusions or considerations on which AI would be a lower priority, but that doesn't imply that the judges or the institution changed their views very much in that direction.

At large scale, Information can be valuable enough to buy even if it only modestly adjusts proportional allocations of effort, the minimum bar for funding a research project with hundreds of thousands or millions of dollars presumably isn't that one pivots billions of dollars on the results with near-certainty.

Will Aldred @ 2023-11-27T23:56 (+30)

Thank you for engaging. I don’t disagree with what you’ve written; I think you have interpreted me as implying something stronger than what I intended, and so I’ll now attempt to add some colour.

That Emily and other relevant people at OP have not fully adopted Rethink’s moral weights does not puzzle me. As you say, to expect that is to apply an unreasonably high funding bar. I am, however, puzzled that Emily and co. appear to have not updated at all towards Rethink’s numbers. At least, that’s the way I read:

- We don’t use Rethink’s moral weights.

- Our current moral weights, based in part on Luke Muehlhauser’s past work, are lower. We may update them in the future; if we do, we’ll consider work from many sources, including the arguments made in this post.

If OP has not updated at all towards Rethink’s numbers, then I see three possible explanations, all of which I find unlikely, hence my puzzlement. First possibility: the relevant people at OP have not yet given the Rethink report a thorough read, and have therefore not updated. Second: the relevant OP people have read the Rethink report, and have updated their internal models, but have not yet gotten around to updating OP’s actual grantmaking allocation. Third: OP believes the Rethink work is low quality or otherwise critically corrupted by one or more errors. I’d be very surprised if one or two are true, given how moral weight is arguably the most important consideration in neartermist grantmaking allocation. I’d also be surprised if three is true, given how well Rethink’s moral weight sequence has been received on this forum (see, e.g., comments here and here).[1] OP people may disagree with Rethink’s approach at the independent impression level, but surely, given Rethink’s moral weights work is the most extensive work done on this topic by anyone(?), the Rethink results should be given substantial weight—or at least non-trivial weight—in their all-things-considered views?

(If OP people believe there are errors in the Rethink work that render the results ~useless, then, considering the topic’s importance, I think some sort of OP write-up would be well worth the time. Both at the object level, so that future moral weight researchers can avoid making similar mistakes, and to allow the community to hold OP’s reasoning to a high standard, and also at the meta level, so that potential donors can update appropriately re. Rethink’s general quality of work.)

Additionally—and this is less important, I’m puzzled at the meta level at the way we’ve arrived here. As noted in the top-level post, Open Phil has been less than wholly open about its grantmaking, and it’s taken a pretty not-on-the-default-path sequence of events—Ariel, someone who’s not affiliated with OP and who doesn’t work on animal welfare for their day job, writing this big post; Emily from OP replying to the post and to a couple of the comments; me, a Forum-goer who doesn’t work on animal welfare, spotting an inconsistency in Emily’s replies—to surface the fact that OP does not give Rethink’s moral weights any weight.

- ^

Edited to add: Carl has left a detailed reply below, and it seems that three is, in fact, what has happened.

Vasco Grilo @ 2023-11-28T07:08 (+17)

Fair points, Carl. Thanks for elaborating, Will!

- We don’t use Rethink’s moral weights.

- Our current moral weights, based in part on Luke Muehlhauser’s past work, are lower. We may update them in the future; if we do, we’ll consider work from many sources, including the arguments made in this post.

Interestingly and confusingly, fitting distributions to Luke's 2018 guesses for the 80 % prediction intervals of the moral weight of various species, one gets mean moral weights close to or larger than 1:

It is also worth noting that Luke seemed very much willing to update on further research in 2022. Commenting on the above, Luke said (emphasis mine):

Since this exercise is based on numbers I personally made up, I would like to remind everyone that those numbers are extremely made up and come with many caveats given in the original sources. It would not be that hard to produce numbers more reasonable than mine, at least re: moral weights. (I spent more time on the "probability of consciousness" numbers, though that was years ago and my numbers would probably be different now.)

Welfare ranges are a crucial input to determining moral weights, so I assume Luke would also have agreed that it would not have been that hard to produce more reasonable welfare ranges than his and Open Phil's in 2022. So, given how little time Open Phil seemingly devoted to assessing welfare ranges in comparison to Rethink, I would have expected Open Phil to give major weight to Rethink's values.

CarlShulman @ 2023-12-06T14:43 (+164)

I can't speak for Open Philanthropy, but I can explain why I personally was unmoved by the Rethink report (and think its estimates hugely overstate the case for focusing on tiny animals, although I think the corrected version of that case still has a lot to be said for it).

Luke says in the post you linked that the numbers in the graphic are not usable as expected moral weights, since ratios of expectations are not the same as expectations of ratios.

However, I say "naively" because this doesn't actually work, due to two-envelope effects...whenever you're tempted to multiply such numbers by something, remember two-envelope effects!)

[Edited for clarity] I was not satisfied with Rethink's attempt to address that central issue, that you get wildly different results from assuming the moral value of a fruit fly is fixed and reporting possible ratios to elephant welfare as opposed to doing it the other way around.

It is not unthinkably improbable that an elephant brain where reinforcement from a positive or negative stimulus adjust millions of times as many neural computations could be seen as vastly more morally important than a fruit fly, just as one might think that a fruit fly is much more important than a thermostat (which some suggest is conscious and possesses preferences). Since on some major functional aspects of mind there are differences of millions of times, that suggests a mean expected value orders of magnitude higher for the elephant if you put a bit of weight on the possibility that moral weight scales with the extent of, e.g. the computations that are adjusted by positive and negative stimuli. A 1% weight on that plausible hypothesis means the expected value of the elephant is immense vs the fruit fly. So there will be something that might get lumped in with 'overwhelming hierarchicalism' in the language of the top-level post. Rethink's various discussions of this issue in my view missed the mark.

Go the other way and fix the value of the elephant at 1, and the possibility that value scales with those computations is treated as a case where the fly is worth ~0. Then a 1% or even 99% credence in value scaling with computation has little effect, and the elephant-fruit fly ratio is forced to be quite high so tiny mind dominance is almost automatic. The same argument can then be used to make a like case for total dominance of thermostat-like programs, or individual neurons, over insects. And then again for individual electrons.

As I see it, Rethink basically went with the 'ratios to fixed human value', so from my perspective their bottom-line conclusions were predetermined and uninformative. But the alternatives they ignore lead me to think that the expected value of welfare for big minds is a lot larger than for small minds (and I think that can continue, e.g. giant AI minds with vastly more reinforcement-affected computations and thoughts could possess much more expected welfare than humans, as many humans might have more welfare than one human).

I agree with Brian Tomasik's comment from your link:

the moral-uncertainty version of the [two envelopes] problem is fatal unless you make further assumptions about how to resolve it, such as by fixing some arbitrary intertheoretic-comparison weights (which seems to be what you're suggesting) or using the parliamentary model.

By the same token, arguments about the number of possible connections/counterfactual richness in a mind could suggest superlinear growth in moral importance with computational scale. Similar issues would arise for theories involving moral agency or capacity for cooperation/game theory (on which humans might stand out by orders of magnitude relative to elephants; marginal cases being socially derivative), but those were ruled out of bounds for the report. Likewise it chose not to address intertheoretic comparisons and how those could very sharply affect the conclusions. Those are the kinds of issues with the potential to drive massive weight differences.

I think some readers benefitted a lot from reading the report because they did not know that, e.g. insects are capable of reward learning and similar psychological capacities. And I would guess that will change some people's prioritization between different animals, and of animal vs human focused work. I think that is valuable. But that information was not new to me, and indeed I had argued for many years that insects met a lot of the functional standards one could use to identify the presence of well-being, and that even after taking two-envelopes issues and nervous system scale into account expected welfare at stake for small wild animals looked much larger than for FAW.

I happen to be a fan of animal welfare work relative to GHW's other grants at the margin because animal welfare work is so highly neglected (e.g. Open Philanthropy is a huge share of world funding on the most effective FAW work but quite small compared to global aid) relative to the case for views on which it's great. But for me Rethink's work didn't address the most important questions, and largely baked in its conclusions methodologically.

Bob Fischer @ 2023-12-12T19:07 (+36)

Thanks for your discussion of the Moral Weight Project's methodology, Carl. (And to everyone else for the useful back-and-forth!) We have some thoughts about this important issue and we're keen to write more about it. Perhaps 2024 will provide the opportunity!

For now, we'll just make one brief point, which is that it’s important to separate two questions. The first concerns the relevance of the two envelopes problem to the Moral Weight Project. The second concerns alternative ways of generating moral weights. We considered the two envelopes problem at some length when we were working on the Moral Weight Project and concluded that our approach was still worth developing. We’d be glad to revisit this and appreciate the challenge to the methodology.

However, even if it turns out that the methodology has issues, it’s an open question how best to proceed. We grant the possibility that, as you suggest, more neurons = more compute = the possibility of more intense pleasures and pains. But it's also possible that more neurons = more intelligence = less biological need for intense pleasures and pains, as other cognitive abilities can provide the relevant fitness benefits, effectively muting the intensities of those states. Or perhaps there's some very low threshold of cognitive complexity for sentience after which point all variation in behavior is due to non-hedonic capacities. Or perhaps cardinal interpersonal utility comparisons are impossible. And so on. In short, while it's true that there are hypotheses on which elephants have massively more intense pains than fruit flies, there are also hypotheses on which the opposite is true and on which equality is (more or less) true. Once we account for all these hypotheses, it may still work out that elephants and fruit flies differ by a few orders of magnitude in expectation, but perhaps not by five or six. Presumably, we should all want some approach, whatever it is, that avoids being mugged by whatever low-probability hypothesis posits the largest difference between humans and other animals.

That said, you've raised some significant concerns about methods that aggregate over different relative scales of value. So, we’ll be sure to think more about the degree to which this is a problem for the work we’ve done—and, if it is, how much it would change the bottom line.

CarlShulman @ 2023-12-13T00:33 (+16)

Thank you for the comment Bob.

I agree that I also am disagreeing on the object-level, as Michael made clear with his comments (I do not think I am talking about a tiny chance, although I do not think the RP discussions characterized my views as I would), and some other methodological issues besides two-envelopes (related to the object-level ones). E.g. I would not want to treat a highly networked AI mind (with billions of bodies and computation directing them in a unified way, on the scale of humanity) as a millionth or a billionth of the welfare of the same set of robots and computations with less integration (and overlap of shared features, or top-level control), ceteris paribus.

Indeed, I would be wary of treating the integrated mind as though welfare stakes for it were half or a tenth as great, seeing that as a potential source of moral catastrophe, like ignoring the welfare of minds not based on proteins. E.g. having tasks involving suffering and frustration done by large integrated minds, and pleasant ones done by tiny minds, while increasing the amount of mental activity in the former. It sounds like the combination of object-level and methodological takes attached to these reports would favor ignoring almost completely the integrated mind.

Incidentally, in a world where small animals are being treated extremely badly and are numerous, I can see a temptation to err in their favor, since even overestimates of their importance could be shifting things in the right marginal policy direction. But thinking about the potential moral catastrophes on the other side helps sharpen the motivation to get it right.

In practice, I don't prioritize moral weights issues in my work, because I think the most important decisions hinging on it will be in an era with AI-aided mature sciences of mind, philosophy and epistemology. And as I have written regardless of your views about small minds and large minds, it won't be the case that e.g. humans are utility monsters of impartial hedonism (rather than something bigger, smaller, or otherwise different), and grounds for focusing on helping humans won't be terminal impartial hedonistic in nature. But from my viewpoint baking in that integration (and unified top-level control or mental overlap of some parts of computation) close to eliminates mentality or welfare (vs less integrated collections of computations) seems bad in non-Pascalian fashion.

MichaelStJules @ 2023-12-13T09:28 (+9)

(Speaking for myself only.)

FWIW, I think something like conscious subsystems (in huge numbers in one neural network) is more plausible by design in future AI. It just seems unlikely in animals because all of the apparent subjective value seems to happen at roughly the highest level where everything is integrated in an animal brain.

Felt desire seems to (largely) be motivational salience, a top-down/voluntary attention control function driven by high-level interpretations of stimuli (e.g. objects, social situations), so relatively late in processing. Similarly, hedonic states depend on high-level interpretations, too.

Or, according to Attention Schema Theory, attention models evolved for the voluntary control of attention. It's not clear what the value would be for an attention model at lower levels of organization before integration.

And evolution will select against realizing functions unnecessarily if they have additional costs, so we should provide a positive argument for the necessary functions being realized earlier or multiple times in parallel that overcomes or doesn't incur such additional costs.

So, it's not that integration necessarily reduces value; it's that, in animals, all the morally valuable stuff happens after most of the integration, and apparently only once or in small number.

In artificial systems, the morally valuable stuff could instead be implemented separately by design at multiple levels.

EDIT:

I think there's still crux about whether realizing the same function the same number of times but "to a greater degree" makes it more morally valuable. I think there are some ways of "to a greater degree" that don't matter, and some that could. If it's only sort of (vaguely) true that a system is realizing a certain function, or it realizes some but not all of the functions possibly necessary for some type of welfare in humans, then we might discount it for only meeting lower precisifications of the vague standards. But adding more neurons just doing the same things:

- doesn't make it more true that it realizes the function or the type of welfare (e.g. adding more neurons to my brain wouldn't make it more true that I can suffer),

- doesn't clearly increase welfare ranges, and

- doesn't have any other clear reason for why it should make a moral difference (I think you disagree with this, based on your examples).

But maybe we don't actually need good specific reasons to assign non-tiny probabilities to neuron count scaling for 2 or 3, and then we get domination of neuron count scaling in expectation, depending on what we're normalizing by, like you suggest.

RedStateBlueState @ 2023-12-07T02:07 (+30)

This consideration is something I had never thought of before and blew my mind. Thank you for sharing.

Hopefully I can summarize it (assuming I interpreted it correctly) in a different way that might help people who were as befuddled as I was.

The point is that, when you have probabilistic weight to two different theories of sentience being true, you have to assign units to sentience in these different theories in order to compare them.

Say you have two theories of sentience that are similarly probable, one dependent on intelligence and one dependent on brain size. Call these units IQ-qualia and size-qualia. If you assign fruit flies a moral weight of 1, you are implicitly declaring a conversion rate of (to make up some random numbers) 1000 IQ-qualia = 1 size-qualia. If you assign elephants however to have a moral weight of 1, you implicitly declare a conversion rate of (again, made-up) 1 IQ-qualia = 1000 size-qualia, because elephant brains are much larger but not much smarter than fruit flies. These two different conversion rates are going to give you very different numbers for the moral weight of humans (or as Shulman was saying, of each other).

Rethink Priorities assigned humans a moral weight of 1, and thus assumed a certain conversion rate between different theories that made for a very small-animal-dominated world by sentience.

MichaelStJules @ 2023-12-08T11:13 (+18)

It is not unthinkably improbable that an elephant brain where reinforcement from a positive or negative stimulus adjust millions of times as many neural computations could be seen as vastly more morally important than a fruit fly, just as one might think that a fruit fly is much more important than a thermostat (which some suggest is conscious and possesses preferences). Since on some major functional aspects of mind there are differences of millions of times, that suggests a mean expected value orders of magnitude higher for the elephant if you put a bit of weight on the possibility that moral weight scales with the extent of, e.g. the computations that are adjusted by positive and negative stimuli.

This specific kind of account, if meant to depend inherently on differences in reinforcement, is very improbable to me (<0.1%), and conditional on such accounts, the inherent importance of reinforcement would also very probably scale very slowly, with faster scaling increasingly improbable. It could work out that the expected scaling isn't slow, but that would be because of very low probability possibilities.

The value of subjective wellbeing, whether hedonistic, felt desires, reflective evaluation/preferences, choice-based or some kind of combination, seems very probably logically independent from how much reinforcement happens EDIT: and empirically dissociable. My main argument is that reinforcement happens unconsciously and has no necessary or ~immediate conscious effects. We could imagine temporarily or permanently preventing reinforcement without any effect on mental states or subjective wellbeing in the moment. Or, we can imagine connecting a brain to an artificial neural network to add more neurons to reinforce, again to no effect.

And even within the same human under normal conditions, holding their reports of value or intensity fixed, the amount of reinforcement that actually happens will probably depend systematically on the nature of the experience, e.g. physical pain vs anxiety vs grief vs joy. If reinforcement has a large effect on expected moral weights, you could and I'd guess would end up with an alienating view, where everyone is systematically wrong about the relative value of their own experiences. You'd effectively need to reweight all of their reports by type of experience.

So, even with intertheoretic comparisons between accounts with and without reinforcement, of which I'd be quite skeptical specifically in this case but also generally, this kind of hypothesis shouldn't make much difference (or it does make a substantial difference, but it seems objectionably fanatical and alienating). If rejecting such intertheoretic comparisons, as I'm more generally inclined to do and as Open Phil seems to be doing, it should make very little difference.

There are more plausible functions you could use, though, like attention. But, again, I think the cases for intertheoretic comparisons between accounts of how moral value scales with neurons for attention or probably any other function are generally very weak, so you should only take expected values over descriptive uncertainty conditional on each moral scaling hypothesis, not across moral scaling hypotheses (unless you normalize by something else, like variance across options). Without intertheoretic comparisons, approaches to moral uncertainty in the literature aren't so sensitive to small probability differences or fanatical about moral views. So, it tends to be more important to focus on large probability shifts than improbable extreme cases.

MichaelStJules @ 2023-12-08T09:34 (+13)

(I'm not at Rethink Priorities anymore, and I'm not speaking on their behalf.)

Rethink's work, as I read it, did not address that central issue, that you get wildly different results from assuming the moral value of a fruit fly is fixed and reporting possible ratios to elephant welfare as opposed to doing it the other way around.

(...)

Rethink's discussion of this almost completely sidestepped the issue in my view.

RP did in fact respond to some versions of these arguments, in the piece Do Brains Contain Many Conscious Subsystems? If So, Should We Act Differently?, of which I am a co-author.

CarlShulman @ 2023-12-08T16:58 (+4)

Thanks, I was referring to this as well, but should have had a second link for it as the Rethink page on neuron counts didn't link to the other post. I think that page is a better link than the RP page I linked, so I'll add it in my comment.

MichaelStJules @ 2023-12-09T00:20 (+5)

(Again, not speaking on behalf of Rethink Priorities, and I don't work there anymore.)

(Btw, the quote formatting in your original comment got messed up with your edit.)

I think the claims I quoted are still basically false, though?

Rethink's work, as I read it, did not address that central issue, that you get wildly different results from assuming the moral value of a fruit fly is fixed and reporting possible ratios to elephant welfare as opposed to doing it the other way around.

Do Brains Contain Many Conscious Subsystems? If So, Should We Act Differently? explicitly considered a conscious subsystems version of this thought experiment, focusing on the more human-favouring side when you normalize by small systems like insect brains, which is the non-obvious side often neglected.

There's a case that conscious subsystems could dominate expected welfare ranges even without intertheoretic comparisons (but also possibly with), so I think we were focusing on one of strongest and most important arguments for humans potentially mattering more, assuming hedonism and expectational total utilitarianism. Maximizing expected choiceworthiness with intertheoretic comparisons is controversial and only one of multiple competing approaches to moral uncertainty. I'm personally very skeptical of it because of the arbitrariness of intertheoretic comparisons and its fanaticism (including chasing infinities, and lexically higher and higher infinities). Open Phil also already avoids making intertheoretic comparisons, but was more sympathetic to normalizing by humans if it were going to.

CarlShulman @ 2023-12-09T02:42 (+6)

I don't want to convey that there was no discussion, thus my linking the discussion and saying I found it inadequate and largely missing the point from my perspective. I made an edit for clarity, but would accept suggestions for another.

MichaelStJules @ 2023-12-09T02:52 (+1)

Your edit looks good to me. Thanks!

Vasco Grilo @ 2023-12-06T16:40 (+12)

Thanks for elaborating, Carl!

Luke says in the post you linked that the numbers in the graphic are not usable as expected moral weights, since ratios of expectations are not the same as expectations of ratios.

Let me try to restate your point, and suggest why one may disagree. If one puts weight w on the welfare range (WR) of humans relative to that of chickens being N, and 1 - w on it being n, the expected welfare range of:

- Humans relative to that of chickens is E("WR of humans"/"WR of chickens") = w*N + (1 - w)*n.

- Chickens relative to that of humans is E("WR of chickens"/"WR of humans") = w/N + (1 - w)/n.

You are arguing that N can plausibly be much larger than n. For the sake of illustration, we can say N = 389 (ratio between the 86 billion neurons of a humans and 221 M of a chicken), n = 3.01 (reciprocal of RP's median welfare range of chickens relative to humans of 0.332), and w = 1/12 (since the neuron count model was one of the 12 RP considered, and all of them were weighted equally). Having the welfare range of:

- Chickens as the reference, E("WR of humans"/"WR of chickens") = 35.2. So 1/E("WR of humans"/"WR of chickens") = 0.0284.

- Humans as the reference (as RP did), E("WR of chickens"/"WR of humans") = 0.305.

So, as you said, determining welfare ranges relative to humans results in animals being weighted more heavily. However, I think the difference is much smaller than the suggested above. Since N and n are quite different, I guess we should combine them using a weighted geometric mean, not the weighted mean as I did above. If so, both approaches output exactly the same result:

- E("WR of humans"/"WR of chickens") = N^w*n^(1 - w) = 4.49. So 1/E("WR of humans"/"WR of chickens") = (N^w*n^(1 - w))^-1 = 0.223.

- E("WR of chickens"/"WR of humans") = (1/N)^w*(1/n)^(1 - w) = 0.223.

The reciprocal of the expected value is not the expected value of the reciprocal, so using the mean leads to different results. However, I think we should be using the geometric mean, and the reciprocal of the geometric mean is the geometric mean of the reciprocal. So the 2 approaches (using humans or chickens as the reference) will output the same ratios regardless of N, n and w as long as we aggregate N and n with the geometric mean. If N and n are similar, it no longer makes sense to use the geometric mean, but then both approaches will output similar results anyway, so RP's approach looks fine to me as a 1st pass. Does this make any sense?

Of course, it would still be good to do further research (which OP could fund) to adjudicate how much weight should be given to each model RP considered.

I had argued for many years that insects met a lot of the functional standards one could use to identify the presence of well-being, and that even after taking two-envelopes issues and nervous system scale into account expected welfare at stake for small wild animals looked much larger than for FAW.

True!

I happen to be a fan of animal welfare work relative to GHW's other grants at the margin because animal welfare work is so highly neglected

Thanks for sharing your views!

CarlShulman @ 2023-12-06T17:44 (+16)

I'm not planning on continuing a long thread here, I mostly wanted to help address the questions about my previous comment, so I'll be moving on after this. But I will say two things regarding the above. First, this effect (computational scale) is smaller for chickens but progressively enormous for e.g. shrimp or lobster or flies. Second, this is a huge move and one really needs to wrestle with intertheoretic comparisons to justify it:

I guess we should combine them using a weighted geometric mean, not the weighted mean as I did above.

Suppose we compared the mass of the human population of Earth with the mass of an individual human. We could compare them on 12 metrics, like per capita mass, per capita square root mass, per capita foot mass... and aggregate mass. If we use the equal-weighted geometric mean, we will conclude the individual has a mass within an order of magnitude of the total Earth population, instead of billions of times less.

Vasco Grilo @ 2023-12-06T18:54 (+7)

I'm not planning on continuing a long thread here, I mostly wanted to help address the questions about my previous comment, so I'll be moving on after this.

Fair, as this is outside of the scope of the original post. I noticed you did not comment on RP's neuron counts post. I think it would be valuable if you commented there about the concerns you expressed here, or did you already express them elsewhere in another post of RP's moral weight project sequence?

First, this effect (computational scale) is smaller for chickens but progressively enormous for e.g. shrimp or lobster or flies.

I agree that is the case if one combines the 2 wildly different estimates for the welfare range (e.g. one based on the number of neurons, and another corresponding to RP's median welfare ranges) with a weighted mean. However, as I commented above, using the geometric mean would cancel the effect.

Suppose we compared the mass of the human population of Earth with the mass of an individual human. We could compare them on 12 metrics, like per capita mass, per capita square root mass, per capita foot mass... and aggregate mass. If we use the equal-weighted geometric mean, we will conclude the individual has a mass within an order of magnitude of the total Earth population, instead of billions of times less.

Is this a good analogy? Maybe not:

- Broadly speaking, giving the same weight to multiple estimates only makes sense if there is wide uncertainty with respect to which one is more reliable. In the example above, it would make sense to give negligible weight to all metrics except for the aggregate mass. In contrast, there is arguably wide uncertainty with respect to what are the best models to measure welfare ranges, and therefore distributing weights evenly is more appropriate.

- One particular model on which we can put lots of weight on is that mass is straightforwardly additive (at least at the macro scale). So we can say the mass of all humans equals the number of humans times the mass per human, and then just estimate this for a typical human. In contrast, it is arguably unclear whether one can obtain the welfare range of an animal by e.g. just adding up the welfare range of its individual neurons.

MichaelDickens @ 2024-03-18T23:34 (+4)

It seems to me that the naive way to handle the two envelopes problem (and I've never heard of a way better than the naive way) is to diversify your donations across two possible solutions to the two envelopes problem:

- donate half your (neartermist) money on the assumption that you should use ratios to fixed human value

- donate half your money on the assumption that you should fix the opposite way (eg fruit flies have fixed value)

Which would suggest donating half to animal welfare and probably half to global poverty. (If you let moral weights be linear with neuron count, I think that would still favor animal welfare, but you could get global poverty outweighing animal welfare if moral weight grows super-linearly with neuron count.)

Plausibly there are other neartermist worldviews you might include that don't relate to the two envelopes problem, e.g. a "only give to the most robust interventions" worldview might favor GiveDirectly. So I could see an allocation of less than 50% to animal welfare.

MichaelStJules @ 2024-03-19T15:59 (+6)

There is no one opposite way; there are many other ways than to fix human value. You could fix the value in fruit flies, shrimps, chickens, elephants, C elegans, some plant, some bacterium, rocks, your laptop, GPT-4 or an alien, etc..

I think a more principled approach would be to consider precise theories of how welfare scales, not necessarily fixing the value in any one moral patient, and then use some other approach to moral uncertainty for uncertainty between the theories. However, there is another argument for fixing human value across many such theories: we directly value our own experiences, and theorize about consciousness in relation to our own experiences, so we can fix the value in our own experiences and evaluate relative to them.

weeatquince @ 2023-11-26T01:00 (+13)

Hi Emily, Sorry this is a bit off topic but super useful for my end of year donations.

I noticed that you said that OpenPhil has supported "Rethink Priorities ... research related to moral weights". But in his post here Peter says that the moral weights work "have historically not had institutional support".

Do you have a rough very quick sense of how much Rethink Priorities moral weights work was funded by OpenPhil?

Thank you so much

Marcus_A_Davis @ 2023-11-27T14:35 (+34)

We mean to say that the ideas for these projects and the vast majority of the funding were ours, including the moral weight work. To be clear, these projects were the result of our own initiative. They wouldn't have gone ahead when they did without us insisting on their value.

For example, after our initial work on invertebrate sentience and moral weight in 2018-2020, in 2021 OP funded $315K to support this work. In 2023 they also funded $15K for the open access book rights to a forthcoming book based on the topic. In that period of 2021-2023, for public-facing work we spent another ~$603K on moral weight work with that money coming from individuals and RP's unrestricted funding.

Similarly, the CURVE sequence of WIT this year was our idea and we are on track to spend ~$900K against ~$210K funded by Open Phil on WIT. Of that $210K the first $152K was on projects related to Open Phil’s internal prioritization and not the public work of the CURVE sequence. The other $58K went towards the development of the CCM. So overall less than 10% of our costs for public WIT work this year was covered by OP (and no other institutional donors were covering it either).

weeatquince @ 2023-11-28T00:06 (+5)

Hi Marcus thanks very helpful to get some numbers and clarification on this. And well done to you and Rethink for driving forward such important research.

(I meant to post a similar question asking for clarification on the rethink post too but my perfectionism ran away with me and I never quite found the wording and then ran out of drafting time, but great to see your reply here)

James Özden @ 2023-11-23T22:06 (+70)

One reason why we are moving more slowly is that our current estimates of the gap between marginal animal and human funding opportunities is very different from the one in your post – within one order of magnitude, not three. And given the high uncertainty around our estimates here, we think one order of magnitude is well within the “margin of error” .

I assume that even though your answers are within one order of magnitude, the animal-focused work is the one that looks more cost-effective. Is that right?

Assuming so, your answer doesn't make sense to me because OP funds roughly 6x more human-focused GHW relative to farm animal welfare (FAW). Even if you have wide uncertainty bounds, if FAW is looking more cost-effective than human work, surely this ratio should be closer to 1:1 rather than 1:6? It seems bizarre (and possibly an example of omission bias) to fund the estimated less cost-effective thing 6x more and justify it by saying you're quite uncertain.

Long story short, should we not just allocate our funding to the best of our current knowledge (even by your calculations, more towards FAW) and then update accordingly if things change?

Vasco Grilo @ 2023-11-26T08:23 (+6)

Nice point, James!

I personally agree with your reasoning, but it assumes the marginal cost-effectiveness of the human-focussed and animal-focussed interventions should be the same. Open Phil is not sold on this:

We’re also unsure conceptually whether we should be trying to equalize marginal returns between FAW and GHW or whether we should continue with our current approach.