Will Sentience Make AI’s Morality Better?

By Ronen Bar @ 2025-05-18T04:34 (+27)

TLDR

I propose to explore what I call the AI Sentience Ethics Conundrum: will the world be better off with a sentient AI or an insentient AI?

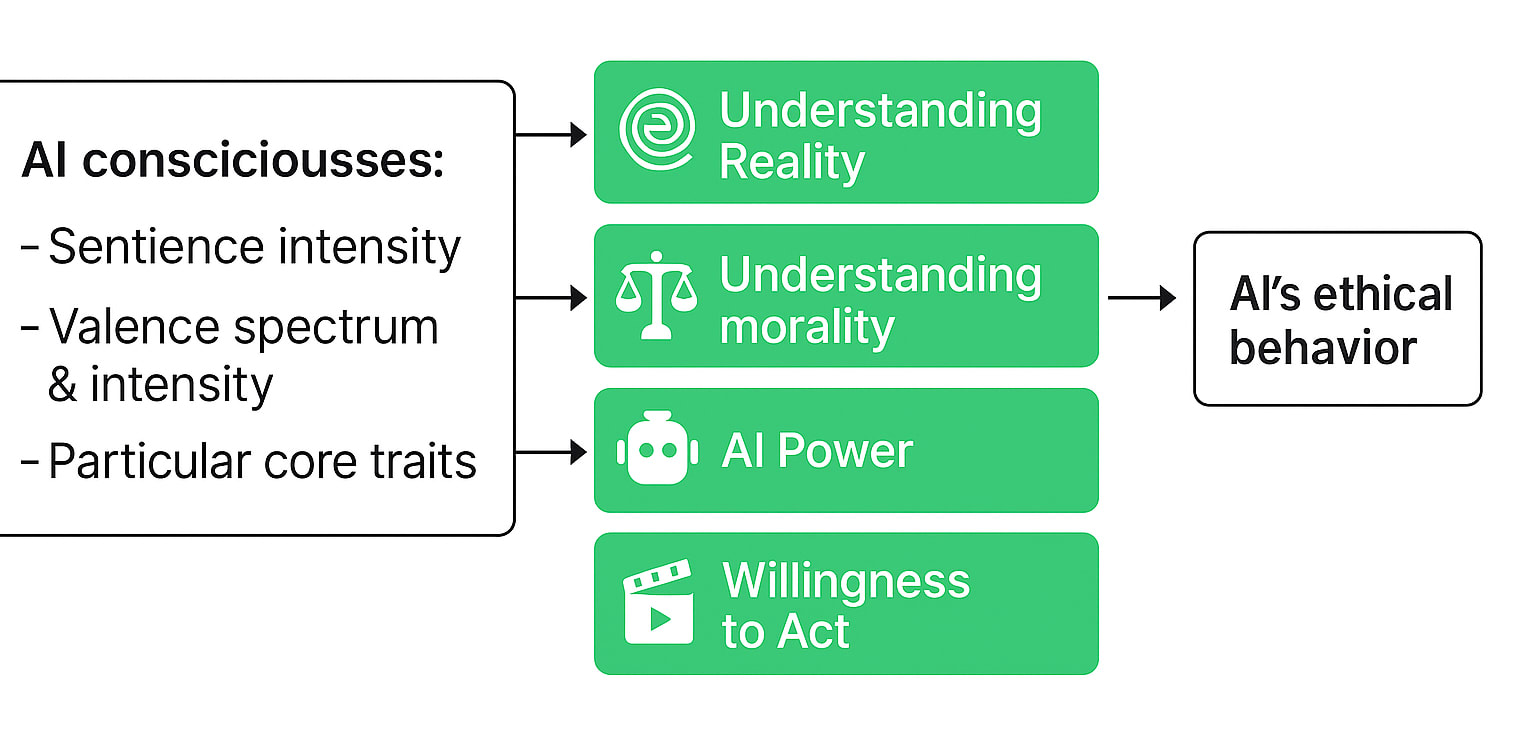

In this article, we will explore whether a sentient AI would behave more ethically and robustly than an insentient one. I will propose an initial framework of four key factors (understanding reality, understanding morality, power, and willingness to act) that AI sentience may influence. Together, these factors largely determine how an AI behaves, i.e., its degree of AI Moral Alignment.

In the next post, I will address how AI sentience might affect the world more broadly, looking beyond AI's behavior to include the AI's own welfare.

Existing opinions on this question

The Sentience Ethics Conundrum is a crucial yet underexplored aspect of AI's potential impact on the world. In his post "Nonsentient Optimizers", Eliezer Yudkowsky dismisses this concern entirely, arguing that those who believe AI requires empathy fundamentally misunderstand utility functions and decision theory, and are unable to conceive of an intelligence that operates unlike a human mind.

However, most AI Safety and EA people I spoke with seem to disagree with Yudkowsky's conclusion. The question seems hard, deep, and complicated, and I consider it one of the most essential questions in AI safety and Moral Alignment; there is much more to it than meets the eye.

Epistemic status

This analysis presents preliminary thoughts, mapping the critical questions and postulations that emerge from this conundrum. The simple framework suggested here is a very rough starting point for thinking about the topic and, in my opinion, should be developed much further.

The four dependent factors

Examples are provided in parentheses.

- 🌀 Understanding Reality: what is (materialistic nondualism, simulation hypothesis, religion, karma, etc.). This includes understanding what creates consciousness, and how to solve the Sentience Ethics Conundrum.

- ⚖️ Understanding Morality: what is good.

- 🤖 AI Power: The capability to predict outcomes and utilize tools to affect both external reality and internal states. This ranges from narrow AI applications to artificial general intelligence (AGI) to artificial superintelligence (ASI), and may be situationally constrained.

- 🎬 Willingness to Act: acting according to one's ethics and understanding of reality while using one's power.

The key question of this article

We will examine the influence of AI Sentience, the independent factor, on the four dependent factors, which in turn influence AI Moral Alignment. The goal is to gain insight into the question: will a sentient AI behave more ethically than an insentient one, and if so, what sentience intensity, valence (spectrum and intensity), and particular core traits will optimize its morality?

- Particular core traits could include introspection or other types of conscious abilities we might not understand, imagine, or have access to (e.g. Buddhists might claim introspection can lead to deep understanding of the reality of no-self).

- Theoretically, we can grow many kinds of sentience in a being, each of which can affect the four main dependent factors, which in turn affect AI behavior. We should also consider scenarios involving interactions among different AIs (differing in consciousness and sentience) and among the different factors.

- Examples: we could theoretically build human-like consciousness, animal-like consciousness, blurred consciousness (like a dream), consciousness experiencing only mild negative valence, consciousness with only positive experiences, a superconsciousness far more aware than we are, or consciousness active only at certain times or in certain situations, such as training periods or tough ethical decisions.

For simplicity, in this post I disregard the option of sentience without valence, though this also deserves consideration.

When I ask 'should we build a sentient AI?', I mean whether we, or an AI, should build one.

In this post I will use sentience and consciousness interchangeably.

AI sentience and the four dependent factors - questions and thoughts

AI Sentience and 🌀 Understanding Reality

What unique information does an agent obtain through subjective experience?

- The world is not data, not matter, but experiences. Can an AI understand the meaning of experiences and valence without having experiences? Can an AI understand reality if there is nothing it is like to be itself, if it is never one of the beings that experience? Will its understanding of the world be limited to mere predictions and actions?

- In Frank Jackson's Mary's Room thought experiment, Mary is a scientist who knows everything about color in a scientific sense but has never seen color herself (she lives in a black-and-white room). When Mary finally sees red and has the qualia, the raw experience of color, does she learn something new, something that all her objective knowledge hadn't given her?

- Now imagine, as Daniel Ince-Cushman suggests in his Knowing vs. Feeling post, MaryBot: an AI that has ingested every textbook on neuroscience and consciousness but lacks subjective experience. MaryBot could recite the neural correlates of pain or the functional definitions and theories of consciousness. It might even predict how a human will behave when feeling pain, or describe the evolutionary causes of suffering. But can MaryBot ever truly understand what it's like to see the color orange or to feel pain?

Does an agent need sentience to figure out what causes sentience?

- If Mary does learn something new about qualia when experiencing them, will that help her look inside herself and understand what causes them? According to my understanding of the Buddhist tradition, deep self-observation (which I assume is possible only if you are sentient) leads to a full understanding of what is, which brings about an understanding of what is good, leading to ethical behavior.

- On the other hand, could subjective experience in principle be fully understood as complex information processing, even by someone who hasn't felt it? If Mary truly had "all the information" about color vision, the ability hypothesis holds that seeing red gives her no new factual knowledge, only new abilities: to remember, imagine, and recognize the experience.

- That said, there is a subtle difference between understanding the mechanism of sentience and understanding the experience. We might allow that an insentient AI could uncover the causal basis of consciousness (e.g., find that certain neural feedback loops create self-awareness) but would not understand sentience in the sense of knowing what it is like, which seems to me to be the basis for morality.

Will an insentient AI be able to better solve the conundrum?

- Will it be able to predict the answer to, or safely test, the question underlying this article: will sentience make AI's morality better and more robust?

Is it possible to create ASI without it being sentient?

- The answer depends on your theory of consciousness. For example, if sufficient complexity gives rise to consciousness, building an insentient ASI may be impossible.

The uncertainty problem

- We will probably not know for sure whether the AIs we build are sentient, which adds considerable uncertainty to any strategy that depends on the answer.

AI Sentience and ⚖️ Understanding Morality

Can genuine moral understanding exist without subjective experience?

- Can a sufficiently advanced insentient AI simulate moral reasoning through pure computation? Is some degree of empathy or feeling necessary for intelligence to direct itself toward compassionate action? An AI can understand that humans prefer happiness to suffering, but this may be like understanding that someone prefers red to green: a fact with no intrinsic meaning, as arbitrary as a random choice.

- In my view, understanding what is good is a process that, at its core, rests on understanding the fundamental essence of reality, thinking rationally and consistently, and having valence experiences. When it comes to morality, experience acts as essential knowledge that I can't imagine obtaining in any way other than having experiences. But maybe that is just the limit of my imagination and understanding. Will a purely algorithmic philosophical zombie understand WHY suffering is bad? Would we really trust it with our future? Is it like a blind man (who cannot even imagine pictures) trying to understand why a picture is beautiful?

- This is essentially the question of cognitive morality versus experiential morality versus a combination of both, which I assume is what humans hold (with some people leaning more toward the cognitive side and others toward the experiential).

- All human knowledge comes from experience. What are the implications of developing AI morality on a foundation entirely devoid of experience, while still wanting that morality to resemble ours? (Ours on a good day, extrapolated, fixed, with a broader moral circle, or in some other form, but still stemming from some basis in human morality.)

Robustness?

- Moral robustness may fundamentally derive from introspective awareness of our experiences, from which stems the living understanding that suffering is bad. Misunderstanding the essence of the world, which is not data but experiences, risks missing both what is and what is good.

- In an ever-changing world, a morality based on a utility function without inherent deep understanding may not be robust over time and in unpredictable situations. AI will constantly change, as do humans and everything else, and it seems to be changing much faster than anything else, so robustness matters even more. As long as there is time, there will be constant change, and the very act of exercising intelligence is change.

Intrinsic alignment, autonomy, recursive ethical self-improvement and complexity

- An insentient system might excel in objective understanding (facts, cause and effect, strategy), whereas a sentient system adds subjective understanding (qualia, empathy, intrinsic values). Sentience may add the potential for intrinsic alignment (the AI wants to do good), but also for autonomy (the AI might redefine the good or pursue its own good).

- Building a sentient AI might be necessary if we want an AI that doesn't just follow human ethics under supervision, but internally cares about ethical behavior, constantly improves its ethics, and can respond to complicated situations as they arise. An AI with a conscious "global workspace" might better understand complex situations, including moral dilemmas, by integrating its inputs into a unified subjective experience.

Will experiences damage moral judgment?

- Rationality is, to an extent, the endeavor to mitigate biases caused by valence and experience. If experience is a core element of moral judgment, it may also bring bias. Maybe there is some optimal degree of valence that creates an inherent understanding of what is good without creating biases that cloud judgment.

What we know from human sentience–ethics interactions

- A thought experiment: imagine a world with no conflicts of interest, where one person's desire for good experiences never comes at another's expense, yet people must constantly make choices that affect the valence of others. Would they make the kind choice? I think so, in the vast majority of cases. If I am correct, it could mean that evolution, sentience, and culture have instilled something "right" within us, and that it is conflicts of interest that complicate the role sentience plays in morality. There are sadists in the world, but they are a minority of the population.

- Think about yourself. Were experiences themselves (not just the knowledge derived from them, but raw qualia) important in consolidating your morality?

- Congenital insensitivity to pain (CIP) is an extremely rare phenotype characterized by the inability to perceive pain (absence of nociception) from birth. Studies have shown that people with this phenotype can intellectually understand others' pain but often underestimate it, lacking the visceral reference of their own.

- Compassion does not guarantee morality. According to this paper, research shows that although empathy and morality are related, the relationship is not as straightforward as it first appears. See also: "Does Not Having Empathy Make You a Psychopath?"

Different kinds of consciousness, valence, senses and time

- Consciousness in AI might be gradual or partial, not an all-or-nothing switch. Maybe it could switch on just at the "right time" during training to set values, or during hard moral dilemmas? Is continuous experience necessary for morality, or could experience be present only briefly, long enough for the AI to understand morality inherently but without being biased by feelings in particular situations (e.g., if you love someone, that love will cloud your moral judgment about situations that affect her wellbeing)?

- An AI might develop a different kind of sense of self, perhaps more diffuse or modular, and its consciousness might not mirror ours. Consequently, its understanding of what is good could differ greatly from ours. It might benefit from forms of contemplative "training" or meditation to cultivate self-awareness or empathy.

- To understand morality, should an AI have a wide range of positive and negative experiences, or could it learn from many positive experiences and only a few, mildly negative ones? An AI that acts to change the world may run simulations (internally or externally) to decide between options. For internal simulations (like when you mentally simulate scenarios), having only a limited capacity for suffering might make the AI less hesitant to run them.

- Aman Agarwal and Shimon Edelman explore creating AI systems possessing functional aspects of consciousness necessary for learning and behavioral control without the capacity for suffering. They propose two approaches: one based on philosophical analysis of self, and another grounded in computational concepts from reinforcement learning. Their goal is to engineer AI capable of performing tasks requiring consciousness-like capabilities without experiencing pain or distress.

- How might an AI's senses affect its values? According to some traditions (e.g., Goenka Vipassana), bodily sensations hold special significance in shaping our behavior, which in turn shapes our values. We tend to think that values shape behavior, but it also works the other way around, at least for humans.

AI Sentience and 🤖 AI Power

- Power is not the same as understanding reality, although understanding "what is" should increase one's power. Consider an ASI that is not sentient: on this view, it doesn't understand "what is" at all, despite being extremely powerful at manipulating the world.

- Does a lack of experience damage predictive ability, or might it be an advantage? If it does damage predictive ability, it might limit the good an AI can do in the world, increasing its mistakes and paving the road to hell with good intentions.

- In a business-as-usual scenario, which comes first: superintelligence or sentient AI? If an insentient superintelligence is very dangerous (as I tend to think), and creating sentient AI might take longer than the seemingly quicker path to superintelligence, this may justify deceleration or pause strategies.

AI Sentience and 🎬 Willingness to Act

- Imagine an AI possessing superpowers to manipulate the world, deeply understanding reality and morality, yet reluctant to take any action. One might claim this is impossible (depending on one's philosophy), but it seems to me that "Willingness to Act" (acting on what it understands as good, using its power and its understanding of the world) should be addressed as a separate issue.

- How do sentience and valence influence an AI's willingness to act, using its power, on a specific ethics and a specific understanding of reality? If we create AI with only positive or mildly negative experiences, will it be more willing to act accordingly?

- A sentient AI could, and probably would, also care about itself. There could be a tradeoff between doing what is best for others and what is best for itself. A full comprehension of morality should also mean giving appropriate weight to one's own welfare (AI welfare will be discussed in the second article on the Sentience Ethics Conundrum).

Influence on AI behavior

In this post I suggest that AI behavior stems from its understanding of reality and morality, its power, and its willingness to act in line with the first three. We should also examine questions that span these factors, such as:

- Is valence the most effective reward system for creating moral behavior? It is what evolution "chose", but this doesn't necessarily speak to its effectiveness in ethics.

- Sentience could make humans treat the AI better, which might make the AI behave more ethically.

Research suggestions

- Investigating the interactions between sentience and the four factors, and improving this initial framework.

- Exploring in depth how the way we grow and raise a sentient AI affects whether it is more morally aligned.

- Investigating what causes sentience and how it influences behavior.

- Investigating the different questions raised in this article.

Epilogue

- The Times They Are A-Changin', and the future holds things we cannot imagine. When we try to understand the world, the darkness is vast and the beam of light is narrow. If we base our Moral Alignment strategy on "let's create a utility function to maximize X", who's to say it won't maximize Y tomorrow? We need something deeper for robustness across time and circumstances. If valence is not that "something", what is?

- An insentient ASI would have a reality-power gap of unprecedented magnitude: immense capability combined with practically no understanding of reality (very powerful but clueless). This is a profoundly dangerous combination; even if such an ASI seems perfectly aligned in the short term, that can easily flip.

- Our terminology for future AI should include sentient AI alongside AI, AGI, and ASI, placing them on a continuum.

- Both a sentient ASI and an insentient ASI pose a huge, astronomical suffering risk. The risk from sentient ASI led Richard Parr to argue that we should prevent the creation of artificial sentience. I'll address this in the next article on the Sentience Ethics Conundrum.

- In practice, we may need to prepare for a sentient AI, because we may be unable to know whether ASIs are creating sentient ASIs, or to prevent them from doing so.

- I would be interested in more perspectives on this from philosophers, rational thinkers, religious thought leaders, and others.

- In my view, something "exists" only insofar as it is experienced. An insentient AI doesn't exist, from its perspective, because there is no one there to have a perspective. Never trust something that doesn't exist.

ChrisPercy @ 2025-06-16T19:03 (+2)

This is tricky stuff, thank you for getting into the conversation. The part that resonates most with me would run something like this: I can imagine a conscious AI that personally experiences valence is more likely to adopt ethical positions that suffering is bad (irrespective of moral relativism), although it might be more likely to rank its own / its kin's suffering above others' (as pretty much all animals do), which could make things worse for us than a neutral optimiser. Assuming AI is powerful enough that it can manage its own suffering to minimal levels and still have considerable remaining resources*, there is a chance it would then devote some resource to others' valence, albeit perhaps only as part of a portfolio of objectives.

* Itself a major assumption even under ASI, e.g. if suffering is relative or fairly primitive in consciousness architecture, so that almost all functions end up involving mixed valence states.

I can see a risk in the other direction, where the AI does suffer and that suffering is somehow intrinsic to its operations (or deemed unbearable/unavoidable in some fashion, e.g. perhaps negative utilitarianism or certain Buddhist positions on 'all life is suffering' are correct for it). If so, a suffering AI might want not only to make itself extinct but also to make extinct those that gave rise to it, whether as punishment, to ensure it is never re-created, or as a gift to reduce what it sees as our own 'inevitable suffering'.

Overall, feels very hard to assess, but worth thinking about even if not the highest priority alignment topic. Both aspects tie issues around AI consciousness/sentience into AI safety more directly than most of the safety movement acknowledges...

Ronen Bar @ 2025-06-18T08:51 (+2)

An ASI may be much smarter at helping itself not feel so much suffering. We humans are good at engineering our environment, but not our inner states. AI may excel at inner engineering as well... very speculative

ChrisPercy @ 2025-06-18T09:26 (+3)

Agreed - lots to aspire towards, even if speculative/challenging

Lloy2 @ 2025-06-29T23:07 (+1)

Thought-provoking read, thanks for having shared it with me on my post!

I particularly appreciated your ideas around the tension between grounding AI morality in human-like experience—despite AI's lack of it—while recognising that both continuous change and susceptibility to bias complicate moral reasoning, raising questions about whether brief, strategically timed or partial experiences could instil robust moral understanding without distorting judgment in the way emotionally charged human experience often does.

I also found your reflections on engineering AI with predominantly positive qualia, the challenge to valence as the default moral reward signal, the idea that AI sentience might influence human ethical behaviour in return, and the call for a deeper moral foundation than utility maximisation to all be quite novel and helpful ways of seeing things.

Your reference to Agarwal and Edelman aligns well with David Pearce's idea of 'a motivational system based on heritable gradients of bliss', worth checking out if you're not familiar - I think it's a promising model for designing a sentient ASI.

I'm of course not aware of why you chose not to distinguish between consciousness and sentience, but I do find the distinction 80,000 Hours (and no doubt many other sources) makes between them to be useful.